
Deploying KubeSphere with sealos + rook


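Preface

CNCF recently announced that Rook has graduated, and KubeSphere has just released version 3.0.0. Longhorn, open-sourced by Rancher, is still at the incubation stage and is not well suited to production use. This article therefore uses Rook as the underlying storage for KubeSphere to quickly build a production-ready container platform.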

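Introduction to sealos

Sealos describes itself as a Kubernetes high-availability installer that "can only be described as silky smooth". It offers single-command offline installation with all dependencies included, kernel-level load balancing with no dependency on haproxy or keepalived, a pure Golang implementation, and 99-year certificates, and it supports Kubernetes v1.16 to v1.19.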

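Introduction to rook

Rook is an open-source, cloud-native storage solution for Kubernetes that provides production-ready file, block, and object storage.

Ceph's newest official deployment tool, cephadm, also deploys Ceph clusters in containers by default.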

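Introduction to KubeSphere

KubeSphere is an application-centric, multi-tenant container platform built on Kubernetes. It is fully open source and free, supports multi-cloud and multi-cluster management, provides full-stack automated IT operations, and simplifies enterprise DevOps workflows. Its operations-friendly, wizard-style UI helps enterprises quickly build a powerful, feature-rich container cloud platform.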

Preparing the deployment environment

Prepare the following nodes:

| Node name | Node IP | CPU | Memory | System disk | Data disk | Storage disk | OS |
|---|---|---|---|---|---|---|---|
| k8s-master1 | 192.168.1.102 | 2 cores | 8G | vda: 60G | vdb: 200G | - | CentOS 7.8 minimal |
| k8s-master2 | 192.168.1.103 | 2 cores | 8G | vda: 60G | vdb: 200G | - | CentOS 7.8 minimal |
| k8s-master3 | 192.168.1.104 | 2 cores | 8G | vda: 60G | vdb: 200G | - | CentOS 7.8 minimal |
| k8s-node1 | 192.168.1.105 | 4 cores | 16G | vda: 60G | vdb: 200G | vdc: 200G | CentOS 7.8 minimal |
| k8s-node2 | 192.168.1.106 | 4 cores | 16G | vda: 60G | vdb: 200G | vdc: 200G | CentOS 7.8 minimal |
| k8s-node3 | 192.168.1.107 | 4 cores | 16G | vda: 60G | vdb: 200G | vdc: 200G | CentOS 7.8 minimal |

Deployment diagram

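Notes:

- vdb disk: put /var/lib/docker, which holds image and container data, on its own disk. Create it as a logical volume so it is easy to expand. /var/lib/kubelet stores pods' ephemeral volumes, so if those volumes use a lot of space, put it on separate storage as well (optional).

- vdc disk: Rook provides the underlying storage for the KubeSphere container platform. A Ceph cluster that keeps 3 data replicas needs OSDs on at least 3 different nodes (at least one OSD per node), because each replica must be placed on a different node. Unlike Longhorn, Rook can only use clean raw disks or partitions, so an extra vdc disk is added to each worker node.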

On all nodes, turn the whole vdb disk into a logical volume mounted at /var/lib/docker to hold Docker data (optional):

```bash
yum install -y lvm2
pvcreate /dev/vdb
vgcreate data /dev/vdb
lvcreate -l 100%VG -n docker data
mkfs.xfs /dev/data/docker
mkdir -p /var/lib/docker
echo "/dev/mapper/data-docker /var/lib/docker xfs defaults 0 0" >> /etc/fstab
mount -a
```

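To expand capacity later, add a new disk to the data volume group, then extend the logical volume and grow the XFS filesystem.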
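Here is a minimal sketch of that expansion. It assumes the `data` volume group, the `docker` logical volume, and the XFS mount point created above, plus a new blank disk whose name (`/dev/vdd` in the usage line) is hypothetical. By default the function only prints the commands. Set `RUN=1` to execute them as root.

```bash
# Sketch: grow the data/docker logical volume with an extra disk.
# Assumes VG "data", LV "docker" and the XFS mount point /var/lib/docker.
# Prints the commands by default; set RUN=1 to actually execute them.
extend_docker_lv() {
    disk="$1"                       # hypothetical new disk, e.g. /dev/vdd
    for cmd in \
        "pvcreate $disk" \
        "vgextend data $disk" \
        "lvextend -l +100%FREE /dev/data/docker" \
        "xfs_growfs /var/lib/docker"
    do
        if [ "${RUN:-0}" = "1" ]; then
            $cmd || return 1        # word splitting of $cmd is intended here
        else
            echo "$cmd"
        fi
    done
}
# Usage (dry run): extend_docker_lv /dev/vdd
```

XFS can be grown while mounted, so no unmount step is needed. It cannot be shrunk, however.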

Verify that the volume is mounted:

```
[root@k8s-master1 ~]# df -h | grep docker
/dev/mapper/data-docker  200G   33M  200G   1% /var/lib/docker
```

Leave vdc as a raw disk for the OSDs of the Ceph cluster that Rook will build. Do not partition or format it. The final disk layout looks like this:

```
[root@k8s-master1 ~]# lsblk -f
NAME            FSTYPE        LABEL  UUID                                     MOUNTPOINT
vda
└─vda1          ext4                 ca08d7b9-ded5-4935-882f-5b12d6efb14f     /
vdb             LVM2_member          cPhOii-QPw5-85Vs-UFSH-aJV1-rq0D-4883rX
└─data-docker   xfs                  c32b7a2c-b1f6-4e5f-b422-39dc68c0d9aa     /var/lib/docker
vdc
```
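Rook only uses disks that are clean, so a quick pre-check on each worker can help. This sketch reads the output of `lsblk -n -o NAME,FSTYPE /dev/vdc` (no header). It succeeds only if the disk has no child devices and no filesystem signature. It is not an exhaustive check for every kind of leftover metadata.

```bash
# Succeeds (exit 0) only for a bare disk: exactly one line with an empty FSTYPE.
# Reads `lsblk -n -o NAME,FSTYPE <disk>` output on stdin.
disk_is_clean() {
    awk 'BEGIN { dirty = 0 }
         NR > 1 || $2 != "" { dirty = 1 }   # child device or filesystem found
         END {
             if (NR == 0) exit 1            # no output: disk not found
             exit dirty
         }'
}
# Usage: lsblk -n -o NAME,FSTYPE /dev/vdc | disk_is_clean && echo "vdc is clean"
```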

Deploying the k8s cluster

Deploy the k8s cluster with sealos. Every node must have a unique hostname, and the node clocks must be synchronized:

```bash
hostnamectl set-hostname xx    # replace xx with this node's own name
yum install -y chrony
systemctl enable --now chronyd
timedatectl set-timezone Asia/Shanghai
```

Verify that the clocks on all nodes are synchronized:

```bash
timedatectl
```
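To check this on each node without reading the output by eye, a small helper can parse the `timedatectl` output. This is a sketch. It accepts both the `NTP synchronized: yes` line printed by the systemd version shipped with CentOS 7 and the `System clock synchronized: yes` line printed by newer systemd releases.

```bash
# Returns 0 if the timedatectl output on stdin reports a synchronized clock.
clock_synced() {
    grep -Eq '(NTP|System clock) synchronized: yes'
}
# Usage: timedatectl | clock_synced && echo synced || echo "NOT synced"
```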

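On the first master node, download the deployment tool and the offline package. KubeSphere v3.0.0 should be paired with Kubernetes v1.18.x, so always confirm the range of Kubernetes versions that KubeSphere supports.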

```bash
# Download sealos
wget -c https://sealyun.oss-cn-beijing.aliyuncs.com/latest/sealos && \
    chmod +x sealos && mv sealos /usr/bin

# Download the Kubernetes v1.18.8 offline package
wget -c https://sealyun.oss-cn-beijing.aliyuncs.com/cd3d5791b292325d38bbfaffd9855312-1.18.8/kube1.18.8.tar.gz
```

Run the following command to deploy the k8s cluster. --passwd is the root password shared by all nodes:

```bash
sealos init --passwd 123456 \
    --master 192.168.1.102 \
    --master 192.168.1.103 \
    --master 192.168.1.104 \
    --node 192.168.1.105 \
    --node 192.168.1.106 \
    --node 192.168.1.107 \
    --pkg-url kube1.18.8.tar.gz \
    --version v1.18.8
```

Confirm that the k8s cluster is ready:

```
[root@k8s-master1 ~]# kubectl get nodes -o wide
NAME          STATUS   ROLES    AGE     VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION                CONTAINER-RUNTIME
k8s-master1   Ready    master   5m57s   v1.18.8   192.168.1.102   <none>        CentOS Linux 7 (Core)   3.10.0-1127.18.2.el7.x86_64   docker://19.3.0
k8s-master2   Ready    master   5m28s   v1.18.8   192.168.1.103   <none>        CentOS Linux 7 (Core)   3.10.0-1127.18.2.el7.x86_64   docker://19.3.0
k8s-master3   Ready    master   5m27s   v1.18.8   192.168.1.104   <none>        CentOS Linux 7 (Core)   3.10.0-1127.18.2.el7.x86_64   docker://19.3.0
k8s-node1     Ready    <none>   4m34s   v1.18.8   192.168.1.105   <none>        CentOS Linux 7 (Core)   3.10.0-1127.18.2.el7.x86_64   docker://19.3.0
k8s-node2     Ready    <none>   4m34s   v1.18.8   192.168.1.106   <none>        CentOS Linux 7 (Core)   3.10.0-1127.18.2.el7.x86_64   docker://19.3.0
k8s-node3     Ready    <none>   4m34s   v1.18.8   192.168.1.107   <none>        CentOS Linux 7 (Core)   3.10.0-1127.18.2.el7.x86_64   docker://19.3.0
[root@k8s-master1 ~]# kubectl get pods -A
NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-84445dd79f-ntnf2   1/1     Running   0          5m50s
kube-system   calico-node-6dnfd                          1/1     Running   0          5m39s
kube-system   calico-node-8gxwv                          1/1     Running   0          4m45s
kube-system   calico-node-b28xq                          1/1     Running   0          4m45s
kube-system   calico-node-k2978                          1/1     Running   0          4m45s
kube-system   calico-node-rldns                          1/1     Running   0          5m50s
kube-system   calico-node-zm5dl                          1/1     Running   0          4m57s
kube-system   coredns-66bff467f8-jk8h5                   1/1     Running   0          5m50s
kube-system   coredns-66bff467f8-n8hsn                   1/1     Running   0          5m50s
kube-system   etcd-k8s-master1                           1/1     Running   0          5m59s
kube-system   etcd-k8s-master2                           1/1     Running   0          5m33s
kube-system   etcd-k8s-master3                           1/1     Running   0          5m26s
kube-system   kube-apiserver-k8s-master1                 1/1     Running   0          5m59s
kube-system   kube-apiserver-k8s-master2                 1/1     Running   0          5m34s
kube-system   kube-apiserver-k8s-master3                 1/1     Running   0          4m12s
kube-system   kube-controller-manager-k8s-master1        1/1     Running   1          5m59s
kube-system   kube-controller-manager-k8s-master2        1/1     Running   0          5m38s
kube-system   kube-controller-manager-k8s-master3        1/1     Running   0          4m18s
kube-system   kube-proxy-2lr22                           1/1     Running   0          4m45s
kube-system   kube-proxy-4m78t                           1/1     Running   0          4m57s
kube-system   kube-proxy-jzrc9                           1/1     Running   0          5m50s
kube-system   kube-proxy-kpwnn                           1/1     Running   0          4m45s
kube-system   kube-proxy-lw5bq                           1/1     Running   0          4m45s
kube-system   kube-proxy-rl2g5                           1/1     Running   0          5m39s
kube-system   kube-scheduler-k8s-master1                 1/1     Running   1          5m59s
kube-system   kube-scheduler-k8s-master2                 1/1     Running   0          5m33s
kube-system   kube-scheduler-k8s-master3                 1/1     Running   0          4m7s
kube-system   kube-sealyun-lvscare-k8s-node1             1/1     Running   0          4m43s
kube-system   kube-sealyun-lvscare-k8s-node2             1/1     Running   0          4m44s
kube-system   kube-sealyun-lvscare-k8s-node3             1/1     Running   0          3m24s
```
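With more nodes, scanning this table by eye gets error-prone. A sketch of a filter that reads `kubectl get nodes --no-headers` and reports every node whose STATUS is not exactly `Ready`:

```bash
# Prints nodes whose STATUS column is not exactly "Ready"; returns 1 if any.
# Reads `kubectl get nodes --no-headers` output on stdin.
not_ready_nodes() {
    awk 'BEGIN { bad = 0 }
         $2 != "Ready" { print $1 ": " $2; bad = 1 }
         END { exit bad }'
}
# Usage: kubectl get nodes --no-headers | not_ready_nodes || echo "some nodes are not Ready"
```

A cordoned node shows `Ready,SchedulingDisabled`, which this filter also reports. That is deliberate for a fresh install.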

Deploying rook storage

1. First, deploy the rook operator with helm

Install helm:

```bash
version=v3.3.1
curl -LO https://repo.huaweicloud.com/helm/${version}/helm-${version}-linux-amd64.tar.gz
tar -zxvf helm-${version}-linux-amd64.tar.gz
mv linux-amd64/helm /usr/local/bin/helm && rm -rf linux-amd64
```

Add the rook helm repo:

```bash
helm repo add rook-release https://charts.rook.io/release
helm search repo rook-ceph --versions
```

Deploy the rook operator:

```bash
# The --set values are quoted so the shell does not treat [0] as a glob pattern.
helm install rook-operator \
    --version v1.4.4 \
    --namespace rook-ceph \
    --create-namespace \
    --set 'csi.pluginTolerations[0].key=node-role.kubernetes.io/master' \
    --set 'csi.pluginTolerations[0].operator=Exists' \
    --set 'csi.pluginTolerations[0].effect=NoSchedule' \
    rook-release/rook-ceph
```

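Note: every node whose pods use PV storage must run the CSI plugin pod. Suppose some pods tolerate the master taint, can therefore be scheduled onto master nodes, and also mount PVs. Their mounts will fail unless the CSI plugin tolerates the master taint too. The master nodes carry this taint: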

```
[root@k8s-master1 ceph]# kubectl describe nodes k8s-master1 | grep Taints
Taints:             node-role.kubernetes.io/master:NoSchedule
```
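The same tolerations can also be kept in a helm values file instead of `--set` flags, which avoids shell-quoting pitfalls and is easier to review. The sketch below writes such a file. The file name `rook-values.yaml` is an arbitrary choice for this example. The keys mirror the `csi.pluginTolerations` values used above.

```bash
# Writes a helm values file carrying the same CSI plugin tolerations as the
# --set flags above. The default file name is an arbitrary choice.
write_rook_values() {
    cat > "${1:-rook-values.yaml}" <<'EOF'
csi:
  pluginTolerations:
    - key: node-role.kubernetes.io/master
      operator: Exists
      effect: NoSchedule
EOF
}
# Usage:
#   write_rook_values rook-values.yaml
#   helm install rook-operator --version v1.4.4 --namespace rook-ceph \
#     --create-namespace -f rook-values.yaml rook-release/rook-ceph
```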

2. Deploy the rook cluster

```bash
wget https://github.com/rook/rook/archive/v1.4.4.tar.gz
tar -zxvf v1.4.4.tar.gz
cd rook-1.4.4/cluster/examples/kubernetes/ceph
kubectl create -f cluster.yaml
```

Confirm that all pods are running normally:

```
[root@k8s-master1 ceph]# kubectl -n rook-ceph get pods
NAME                                                  READY   STATUS      RESTARTS   AGE
csi-cephfsplugin-9qcvs                                3/3     Running     0          22m
csi-cephfsplugin-c8mb4                                3/3     Running     0          20m
csi-cephfsplugin-fcrzv                                3/3     Running     0          22m
csi-cephfsplugin-pmjqn                                3/3     Running     0          20m
csi-cephfsplugin-provisioner-598854d87f-l26qc         6/6     Running     0          21m
csi-cephfsplugin-provisioner-598854d87f-zm2jt         6/6     Running     0          21m
csi-cephfsplugin-srz2x                                3/3     Running     0          22m
csi-cephfsplugin-swh5g                                3/3     Running     0          20m
csi-rbdplugin-2cgr9                                   3/3     Running     0          20m
csi-rbdplugin-4jxpc                                   3/3     Running     0          22m
csi-rbdplugin-bd68x                                   3/3     Running     0          22m
csi-rbdplugin-fbs6n                                   3/3     Running     0          20m
csi-rbdplugin-jvz47                                   3/3     Running     0          22m
csi-rbdplugin-k98qz                                   3/3     Running     0          21m
csi-rbdplugin-provisioner-dbc67ffdc-hvfln             6/6     Running     0          21m
csi-rbdplugin-provisioner-dbc67ffdc-sh5db             6/6     Running     0          21m
rook-ceph-crashcollector-k8s-node1-6d79784c6d-fsvxm   1/1     Running     0          3h2m
rook-ceph-crashcollector-k8s-node2-576785565c-9dbdr   1/1     Running     0          3h3m
rook-ceph-crashcollector-k8s-node3-c8fc68746-8dp7f    1/1     Running     0          3h3m
rook-ceph-mgr-a-845ff64bf5-ldhf2                      1/1     Running     0          3h2m
rook-ceph-mon-a-64fd6c646f-prt9x                      1/1     Running     0          3h4m
rook-ceph-mon-b-55fd7dc845-ndhtw                      1/1     Running     0          3h3m
rook-ceph-mon-c-6c4469c6b4-s7s4m                      1/1     Running     0          3h3m
rook-ceph-operator-667756ddb6-s82sj                   1/1     Running     0          3h8m
rook-ceph-osd-0-7c57558cf-rf7ql                       1/1     Running     0          3h2m
rook-ceph-osd-1-58cb86f47f-75rgr                      1/1     Running     0          3h2m
rook-ceph-osd-2-76b9d45484-qw6l2                      1/1     Running     0          3h2m
rook-ceph-osd-prepare-k8s-node1-z4k9r                 0/1     Completed   0          126m
rook-ceph-osd-prepare-k8s-node2-rjtkp                 0/1     Completed   0          126m
rook-ceph-osd-prepare-k8s-node3-2sbkb                 0/1     Completed   0          126m
rook-ceph-tools-7cc7fd5755-784tv                      1/1     Running     0          113m
rook-discover-77m2c                                   1/1     Running     0          3h8m
rook-discover-97svb                                   1/1     Running     0          3h8m
rook-discover-cr48w                                   1/1     Running     0          3h8m
```
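"Running normally" here means every pod is either Running with all containers ready, or Completed, as the one-shot `osd-prepare` jobs are. A sketch of that check over `kubectl -n rook-ceph get pods --no-headers` output:

```bash
# Prints pods that are not healthy: status other than Running/Completed, or
# Running with fewer ready containers than total (e.g. READY 0/1).
# Reads `kubectl -n <ns> get pods --no-headers` output on stdin.
unhealthy_pods() {
    awk 'BEGIN { bad = 0 }
         {
             split($2, ready, "/")            # READY column, e.g. "3/3"
             if ($3 == "Completed") next      # finished jobs such as osd-prepare
             if ($3 != "Running" || ready[1] != ready[2]) {
                 print $1 " " $2 " " $3; bad = 1
             }
         }
         END { exit bad }'
}
# Usage: kubectl -n rook-ceph get pods --no-headers | unhealthy_pods && echo "all pods OK"
```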

Ceph automatically discovers empty disks on the nodes and turns them into OSDs. On the worker node, vdc now holds an LVM volume:

```
[root@k8s-node1 ~]# lsblk
NAME            MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
vda             253:0    0   60G  0 disk
└─vda1          253:1    0   60G  0 part /
vdb             253:16   0  200G  0 disk
└─data-docker   252:0    0  200G  0 lvm  /var/lib/docker
vdc             253:32   0  200G  0 disk
└─ceph--96e1e8fb--8677--4276--832b--5a9b6ba6061e-osd--data--dc184518--da34--42fb--9100--c56c3d880f19 252:1 0 200G 0 lvm
```

Run the Ceph command-line tools:

```bash
[root@k8s-master1 ceph]# kubectl create -f toolbox.yaml

# Enter the toolbox pod
kubectl -n rook-ceph exec -it $(kubectl -n rook-ceph get pod -l "app=rook-ceph-tools" -o jsonpath='{.items[0].metadata.name}') -- bash
```

Confirm that the Ceph cluster status is HEALTH_OK and that all OSDs are up:

```
[root@rook-ceph-tools-7cc7fd5755-784tv /]# ceph status
  cluster:
    id:     a5552710-4d29-4717-90ae-f3ded597225e
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum a,b,c (age 69m)
    mgr: a(active, since 13m)
    osd: 3 osds: 3 up (since 69m), 3 in (since 69m)

  data:
    pools:   1 pools, 1 pgs
    objects: 0 objects, 0 B
    usage:   3.0 GiB used, 597 GiB / 600 GiB avail
    pgs:     1 active+clean

[root@rook-ceph-tools-7cc7fd5755-784tv /]# ceph osd status
ID  HOST        USED  AVAIL  WR OPS  WR DATA  RD OPS  RD DATA  STATE
 0  k8s-node3  1027M   198G      0        0       0        0   exists,up
 1  k8s-node2  1027M   198G      0        0       0        0   exists,up
 2  k8s-node1  1027M   198G      0        0       0        0   exists,up
```
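Both conditions can be checked from the `ceph status` text alone, for example in a script run inside the toolbox pod. This is a sketch that assumes the output layout shown above, with a `health:` line and an `osd: N osds: M up ...` line. Other Ceph releases may format these lines differently.

```bash
# Checks `ceph status` output on stdin: health must be HEALTH_OK and the
# number of "up" OSDs must equal the total. Assumes the layout shown above.
ceph_status_ok() {
    awk '
        /health:/            { health = $2 }
        /osd: [0-9]+ osds:/  { total = $2; up = $4 }   # "osd: 3 osds: 3 up ..."
        END {
            if (health != "HEALTH_OK") { print "health is " health; exit 1 }
            if (total == "" || total != up) { print "OSDs up: " up "/" total; exit 1 }
            print "HEALTH_OK, " up "/" total " OSDs up"
        }'
}
# Usage (inside the toolbox pod): ceph status | ceph_status_ok
```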

3. Create a block storage pool and storageclass

```
[root@k8s-master1 ceph]# kubectl create -f csi/rbd/storageclass.yaml
```

Make it the default storageclass:

```bash
kubectl patch storageclass rook-ceph-block -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
```

Confirm that rook-ceph-block is now the default storageclass (marked "(default)"):

```
[root@k8s-master1 ceph]# kubectl get sc
NAME                        PROVISIONER                  RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
rook-ceph-block (default)   rook-ceph.rbd.csi.ceph.com   Delete          Immediate           true                   58s
```
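For scripted checks, here is a sketch that prints the name of whichever storageclass carries the `(default)` marker in `kubectl get sc --no-headers` output. If it prints more than one name, more than one class is annotated as default, and that should be fixed before KubeSphere creates its PVCs.

```bash
# Prints the storageclass(es) marked "(default)".
# Reads `kubectl get sc --no-headers` output on stdin.
default_storageclass() {
    awk '$2 == "(default)" { print $1 }'
}
# Usage: kubectl get sc --no-headers | default_storageclass
```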

Logging in to the Ceph dashboard

A dashboard service is created by default, but its type is ClusterIP, so create an additional service of type NodePort:

```
[root@k8s-master1 ceph]# kubectl apply -f dashboard-external-https.yaml
[root@k8s-master1 ceph]# kubectl -n rook-ceph get service | grep dashboard
rook-ceph-mgr-dashboard                  ClusterIP   10.110.146.141   <none>   8443/TCP         72m
rook-ceph-mgr-dashboard-external-https   NodePort    10.107.93.204    <none>   8443:31318/TCP   36s
```

Get the password of the dashboard admin user:

```bash
kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | base64 --decode && echo
```
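The dashboard is reached at `https://<any-node-ip>:<nodeport>`, where the NodePort is the number after the colon in the PORT(S) column (31318 above). A sketch that extracts it from such a value, with the equivalent `kubectl` jsonpath query shown as a comment:

```bash
# Extracts the NodePort from a PORT(S) value such as "8443:31318/TCP".
nodeport_from_ports() {
    ports="${1#*:}"                 # strip "8443:"   -> "31318/TCP"
    printf '%s\n' "${ports%%/*}"    # strip "/TCP"    -> "31318"
}
# The same number can be read directly from the Service object:
#   kubectl -n rook-ceph get svc rook-ceph-mgr-dashboard-external-https \
#     -o jsonpath='{.spec.ports[0].nodePort}'
```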

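After logging in, view the overall status of the Ceph cluster.

Click Pools to see the replicapool pool that was created, then click Usage to check its size. Data is stored as 3 replicas, so the usable capacity is roughly 200G: 600G of raw disk divided by 3. Every PV created later through the rook-ceph-block storageclass stores its data in this pool.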
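The ~200G figure is raw capacity divided by the replica count. Below is a small sketch of that arithmetic. The optional third argument is an assumption added for illustration: a percentage of raw space you are willing to fill, e.g. 85 to stay below Ceph's default near-full warning ratio of 0.85.

```bash
# usable_gib RAW_GIB REPLICAS [PERCENT]
# Rough usable capacity of a replicated pool in GiB (integer arithmetic).
usable_gib() {
    raw="$1"; replicas="$2"; pct="${3:-100}"
    echo $(( raw * pct / 100 / replicas ))
}
# Usage: usable_gib 600 3       # 3 OSDs x 200G raw, 3 replicas
#        usable_gib 600 3 85    # same, keeping 15% headroom
```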

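Run the official examples first to confirm that PVs bind correctly. The paths below are relative to the rook-1.4.4 directory: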

```bash
kubectl create -f cluster/examples/kubernetes/mysql.yaml
kubectl create -f cluster/examples/kubernetes/wordpress.yaml
```

Rook cluster teardown: https://rook.io/docs/rook/v1.4/ceph-teardown.html

Once the default storageclass is ready, you can deploy the KubeSphere container platform.

Deploying KubeSphere

Reference: https://github.com/kubesphere/helm-charts/tree/master/src/test/ks-installer

Add the KubeSphere helm repo:

```bash
helm repo add test https://charts.kubesphere.io/test
```

List the charts in the repo:

```
[root@k8s-master1 ceph]# helm search repo test
NAME                       CHART VERSION   APP VERSION   DESCRIPTION
test/apisix                0.1.5           1.15.0        Apache APISIX is a dynamic, real-time, high-per...
test/aws-ebs-csi-driver    0.3.0           0.5.0         A Helm chart for AWS EBS CSI Driver
test/aws-efs-csi-driver    0.1.0           0.3.0         A Helm chart for AWS EFS CSI Driver
test/aws-fsx-csi-driver    0.1.0           0.1.0         The Amazon FSx for Lustre Container Storage Int...
test/biz-engine            0.1.0           1.0           A Helm chart for Kubernetes
test/csi-neonsan           1.2.2           1.2.0         A Helm chart for NeonSAN CSI Driver
test/csi-qingcloud         1.2.6           1.2.0         A Helm chart for Qingcloud CSI Driver
test/etcd                  0.1.1           3.3.12        etcd is a distributed reliable key-value store ...
test/ks-installer          0.2.1           3.0.0         The helm chart of KubeSphere, supports installi...
test/mongodb               0.3.0           4.2.1         MongoDB is a general purpose, document-based, d...
test/porter                0.1.4           0.3.1         Bare Metal Load-balancer for Kubernetes Cluster
test/postgresql            0.3.2           12.0          PostgreSQL is a powerful, open source object-re...
test/rabbitmq              0.3.0           3.8.1         RabbitMQ is the most widely deployed open sourc...
test/rbd-provisioner       0.1.1           0.1.0         rbd provisioner is an automatic provisioner tha...
test/redis                 0.3.2           5.0.5         Redis is an open source (BSD licensed), in-memo...
test/skywalking            3.1.0           8.1.0         Apache SkyWalking APM System
test/snapshot-controller   0.1.0           2.1.1         A Helm chart for snapshot-controller
```

Deploy KubeSphere and enable the components you want to install. Here DevOps, logging, metrics_server, and the app store (openpitrix) are enabled:

```bash
helm install kubesphere \
    --namespace=kubesphere-system \
    --create-namespace \
    --set image.repository=kubesphere/ks-installer \
    --set image.tag=v3.0.0 \
    --set persistence.storageClass=rook-ceph-block \
    --set devops.enabled=true \
    --set logging.enabled=true \
    --set metrics_server.enabled=true \
    --set openpitrix.enabled=true \
    test/ks-installer
```

Follow the installer log and confirm that there are no errors:

```bash
kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f
```

After the deployment finishes, confirm that all pods are running normally:

```
[root@k8s-master1 ceph]# kubectl get pods -A | grep kubesphere
kubesphere-controls-system     default-http-backend-857d7b6856-87hfd                 1/1   Running   0    100m
kubesphere-controls-system     kubectl-admin-58f985d8f6-9s6lr                        1/1   Running   0    17m
kubesphere-devops-system       ks-jenkins-54455f5db8-cxw6r                           1/1   Running   0    97m
kubesphere-devops-system       s2ioperator-0                                         1/1   Running   1    98m
kubesphere-devops-system       uc-jenkins-update-center-cd9464fff-6g7w6              1/1   Running   0    99m
kubesphere-logging-system      elasticsearch-logging-data-0                          1/1   Running   0    101m
kubesphere-logging-system      elasticsearch-logging-data-1                          1/1   Running   0    99m
kubesphere-logging-system      elasticsearch-logging-data-2                          1/1   Running   0    98m
kubesphere-logging-system      elasticsearch-logging-discovery-0                     1/1   Running   0    101m
kubesphere-logging-system      elasticsearch-logging-discovery-1                     1/1   Running   0    99m
kubesphere-logging-system      elasticsearch-logging-discovery-2                     1/1   Running   0    98m
kubesphere-logging-system      fluent-bit-725c4                                      1/1   Running   0    100m
kubesphere-logging-system      fluent-bit-9rc6d                                      1/1   Running   0    100m
kubesphere-logging-system      fluent-bit-g5n57                                      1/1   Running   0    100m
kubesphere-logging-system      fluent-bit-n85tz                                      1/1   Running   0    100m
kubesphere-logging-system      fluent-bit-nfpgl                                      1/1   Running   0    100m
kubesphere-logging-system      fluent-bit-pc527                                      1/1   Running   0    100m
kubesphere-logging-system      fluentbit-operator-855d4b977d-ffr2g                   1/1   Running   0    101m
kubesphere-logging-system      logsidecar-injector-deploy-74c66bfd85-t67gz           2/2   Running   0    99m
kubesphere-logging-system      logsidecar-injector-deploy-74c66bfd85-vsvrh           2/2   Running   0    99m
kubesphere-monitoring-system   alertmanager-main-0                                   2/2   Running   0    98m
kubesphere-monitoring-system   alertmanager-main-1                                   2/2   Running   0    98m
kubesphere-monitoring-system   alertmanager-main-2                                   2/2   Running   0    98m
kubesphere-monitoring-system   kube-state-metrics-95c974544-xqkv5                    3/3   Running   0    98m
kubesphere-monitoring-system   node-exporter-95462                                   2/2   Running   0    98m
kubesphere-monitoring-system   node-exporter-9cxnq                                   2/2   Running   0    98m
kubesphere-monitoring-system   node-exporter-cbp58                                   2/2   Running   0    98m
kubesphere-monitoring-system   node-exporter-cx8fz                                   2/2   Running   0    98m
kubesphere-monitoring-system   node-exporter-tszl7                                   2/2   Running   0    98m
kubesphere-monitoring-system   node-exporter-tt9f2                                   2/2   Running   0    98m
kubesphere-monitoring-system   notification-manager-deployment-7c8df68d94-9mtvx      1/1   Running   0    97m
kubesphere-monitoring-system   notification-manager-deployment-7c8df68d94-rvr6m      1/1   Running   0    97m
kubesphere-monitoring-system   notification-manager-operator-6958786cd6-6qbqz        2/2   Running   0    97m
kubesphere-monitoring-system   prometheus-k8s-0                                      3/3   Running   1    98m
kubesphere-monitoring-system   prometheus-k8s-1                                      3/3   Running   1    98m
kubesphere-monitoring-system   prometheus-operator-84d58bf775-kpdfq                  2/2   Running   0    98m
kubesphere-system              etcd-65796969c7-5fxjf                                 1/1   Running   0    101m
kubesphere-system              ks-apiserver-59d74f777d-776rg                         1/1   Running   11   50m
kubesphere-system              ks-apiserver-59d74f777d-djz68                         1/1   Running   0    18m
kubesphere-system              ks-apiserver-59d74f777d-gfn7v                         1/1   Running   20   97m
kubesphere-system              ks-console-786b9846d4-9djs9                           1/1   Running   0    100m
kubesphere-system              ks-console-786b9846d4-g42kc                           1/1   Running   0    100m
kubesphere-system              ks-console-786b9846d4-rhltz                           1/1   Running   0    100m
kubesphere-system              ks-controller-manager-57c45bf58b-4rsml                1/1   Running   20   96m
kubesphere-system              ks-controller-manager-57c45bf58b-jlfjf                1/1   Running   20   96m
kubesphere-system              ks-controller-manager-57c45bf58b-vwvq5                1/1   Running   20   97m
kubesphere-system              ks-installer-7cb866bd-vj9q8                           1/1   Running   0    105m
kubesphere-system              minio-7bfdb5968b-bqw2x                                1/1   Running   0    102m
kubesphere-system              mysql-7f64d9f584-pbqph                                1/1   Running   0    101m
kubesphere-system              openldap-0                                            1/1   Running   0    102m
kubesphere-system              openldap-1                                            1/1   Running   0    21m
kubesphere-system              redis-ha-haproxy-5c6559d588-9bjrd                     1/1   Running   13   102m
kubesphere-system              redis-ha-haproxy-5c6559d588-9zpz9                     1/1   Running   15   102m
kubesphere-system              redis-ha-haproxy-5c6559d588-bzrxs                     1/1   Running   19   102m
kubesphere-system              redis-ha-server-0                                     2/2   Running   0    23m
kubesphere-system              redis-ha-server-1                                     2/2   Running   0    22m
kubesphere-system              redis-ha-server-2                                     2/2   Running   0    22m
```
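All pods above are Running, but several (ks-apiserver, ks-controller-manager, redis-ha-haproxy) show double-digit RESTARTS. A sketch of a filter for that, reading `kubectl get pods -A --no-headers`, where RESTARTS is the fifth column. The threshold is an arbitrary choice. For any pod it reports, `kubectl logs --previous` shows the log of the last crashed container.

```bash
# high_restarts THRESHOLD: prints namespace/pod and RESTARTS for pods whose
# restart count exceeds THRESHOLD (default 5).
# Reads `kubectl get pods -A --no-headers` on stdin
# (columns: NAMESPACE NAME READY STATUS RESTARTS AGE).
high_restarts() {
    awk -v max="${1:-5}" '$5 + 0 > max + 0 { print $1 "/" $2 " " $5 }'
}
# Usage: kubectl get pods -A --no-headers | high_restarts 10
```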

List the applications deployed with helm:

```
[root@k8s-master1 ~]# helm ls -A | grep kubesphere
elasticsearch-logging           kubesphere-logging-system      1  2020-10-09 13:08:51.160758783 +0800 CST  deployed  elasticsearch-1.22.1         6.7.0-0217
elasticsearch-logging-curator   kubesphere-logging-system      1  2020-10-09 13:08:53.108855322 +0800 CST  deployed  elasticsearch-curator-1.3.3  5.5.4-0217
ks-jenkins                      kubesphere-devops-system       1  2020-10-09 13:12:44.612683667 +0800 CST  deployed  jenkins-0.19.0               2.121.3-0217
ks-minio                        kubesphere-system              1  2020-10-09 13:07:53.607800017 +0800 CST  deployed  minio-2.5.16                 RELEASE.2019-08-07T01-59-21Z
ks-openldap                     kubesphere-system              1  2020-10-09 13:07:41.468154298 +0800 CST  deployed  openldap-ha-0.1.0            1.0
kubesphere                      kubesphere-system              1  2020-10-09 13:07:53.405494209 +0800 CST  deployed  ks-installer-0.2.1           3.0.0
ks-redis                        kubesphere-system              1  2020-10-09 13:07:34.128473211 +0800 CST  deployed  redis-ha-3.9.0               5.0.5
logsidecar-injector             kubesphere-logging-system      1  2020-10-09 13:10:48.478814658 +0800 CST  deployed  logsidecar-injector-0.1.0    0.1.0
notification-manager            kubesphere-monitoring-system   1  2020-10-09 13:12:19.698782146 +0800 CST  deployed  notification-manager-0.1.0   0.1.0
uc                              kubesphere-devops-system       1  2020-10-09 13:10:34.954444043 +0800 CST  deployed  jenkins-update-center-0.8.0  3.0.0
```

List the bound PVs:

```
[root@k8s-master1 ~]# kubectl get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                                                             STORAGECLASS      REASON   AGE
pvc-02d60e15-a76e-4e8e-98b4-bd2741faf2bb   4Gi        RWO            Delete           Bound    kubesphere-logging-system/data-elasticsearch-logging-discovery-0  rook-ceph-block            108m
pvc-1d34723f-8908-4dc9-b560-ecd8bd35aa57   2Gi        RWO            Delete           Bound    kubesphere-system/openldap-pvc-openldap-1                         rook-ceph-block            28m
pvc-2a355379-1358-42ae-9eaa-fcba53295134   20Gi       RWO            Delete           Bound    kubesphere-logging-system/data-elasticsearch-logging-data-2       rook-ceph-block            105m
pvc-38bb0d32-11eb-468b-839f-ddb796bec6a1   4Gi        RWO            Delete           Bound    kubesphere-logging-system/data-elasticsearch-logging-discovery-1  rook-ceph-block            105m
pvc-41291f83-031a-42a4-9842-4781fcc4383e   8Gi        RWO            Delete           Bound    kubesphere-devops-system/ks-jenkins                               rook-ceph-block            104m
pvc-4bddf760-462e-424f-aa24-666ce50168ca   20Gi       RWO            Delete           Bound    kubesphere-monitoring-system/prometheus-k8s-db-prometheus-k8s-1   rook-ceph-block            104m
pvc-4f741a76-08e7-428f-9489-d9655b82994e   20Gi       RWO            Delete           Bound    kubesphere-logging-system/data-elasticsearch-logging-data-1       rook-ceph-block            106m
pvc-66f15326-e9de-4b2e-9682-d585f359bada   20Gi       RWO            Delete           Bound    kubesphere-monitoring-system/prometheus-k8s-db-prometheus-k8s-0   rook-ceph-block            104m
pvc-7adcda06-7875-41b0-8ddb-c7aa6c448ae5   20Gi       RWO            Delete           Bound    kubesphere-system/mysql-pvc                                       rook-ceph-block            108m
pvc-7f7ac4f3-ed29-42d7-b35f-fc15f1b3f2f8   2Gi        RWO            Delete           Bound    kubesphere-system/data-redis-ha-server-1                          rook-ceph-block            29m
pvc-9a15a19f-59f0-4ddf-9356-8c1a09aad33a   2Gi        RWO            Delete           Bound    kubesphere-system/openldap-pvc-openldap-0                         rook-ceph-block            109m
pvc-9d7ca083-62e8-4177-a7ed-dacc912bf091   2Gi        RWO            Delete           Bound    kubesphere-system/data-redis-ha-server-0                          rook-ceph-block            109m
pvc-a71517e7-0bd8-48ca-b6a0-5710dde18c64   20Gi       RWO            Delete           Bound    kubesphere-system/minio                                           rook-ceph-block            109m
pvc-db18d04e-6595-4fd1-836a-f42628a25d0b   20Gi       RWO            Delete           Bound    kubesphere-logging-system/data-elasticsearch-logging-data-0       rook-ceph-block            108m
pvc-e781c644-8304-4461-acd8-a136889e1174   20Gi       RWO            Delete           Bound    kubesphere-system/etcd-pvc                                        rook-ceph-block            108m
pvc-f288b4df-7458-4f91-8608-3af078986d33   4Gi        RWO            Delete           Bound    kubesphere-logging-system/data-elasticsearch-logging-discovery-2  rook-ceph-block            105m
pvc-fe493c61-63e7-4a20-96f8-a2c550b019f4   2Gi        RWO            Delete           Bound    kubesphere-system/data-redis-ha-server-2                          rook-ceph-block            29m
```
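To compare what KubeSphere has requested with the roughly 200G usable in replicapool, the CAPACITY column can be summed. This sketch only counts values in `Gi` and skips other units. RBD images are thin-provisioned, so the sum is provisioned capacity, not space actually used in Ceph.

```bash
# Sums the CAPACITY column (Gi values only) of `kubectl get pv --no-headers`.
# Capacities in other units (Mi, Ti, ...) are skipped by this sketch.
sum_pv_gib() {
    awk '{ cap = $2; if (sub(/Gi$/, "", cap)) total += cap }
         END { print total + 0 }'
}
# Usage: kubectl get pv --no-headers | sum_pv_gib
```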

Each PV is actually a block device that Ceph maps onto the node with the rbd client:

```
[root@k8s-node1 ~]# lsblk | grep rbd
rbd0 251:0  0 20G 0 disk /var/lib/kubelet/pods/810061ea-fac0-4a38-9586-ee14c8fda984/volumes/kubernetes.io~csi/pvc-e781c644-8304-4461-acd8-a136889e1174/mount
rbd1 251:16 0 20G 0 disk /var/lib/kubelet/pods/7ab52612-0893-4834-839b-dbd3ee9d7589/volumes/kubernetes.io~csi/pvc-7adcda06-7875-41b0-8ddb-c7aa6c448ae5/mount
rbd2 251:32 0  4G 0 disk /var/lib/kubelet/pods/83bf95f7-6e7a-4699-aa4e-ea6564ebaad4/volumes/kubernetes.io~csi/pvc-02d60e15-a76e-4e8e-98b4-bd2741faf2bb/mount
rbd3 251:48 0 20G 0 disk /var/lib/kubelet/pods/e313f3ba-fd19-4f6f-a561-78e4e62614b9/volumes/kubernetes.io~csi/pvc-4f741a76-08e7-428f-9489-d9655b82994e/mount
rbd4 251:64 0  8G 0 disk /var/lib/kubelet/pods/5265b538-90da-412d-be5e-3326af7c3196/volumes/kubernetes.io~csi/pvc-41291f83-031a-42a4-9842-4781fcc4383e/mount
```
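The PV name is embedded in each kubelet mount path, so the device-to-PV mapping on a node can be read off directly. A sketch assuming the `.../kubernetes.io~csi/<pv-name>/mount` path layout shown above:

```bash
# Maps each rbd device to the PV name embedded in its kubelet mount path.
# Reads `lsblk | grep rbd` output on stdin; prints "rbdN pvc-...".
rbd_to_pv() {
    sed -n 's#^\(rbd[0-9]*\) .*/kubernetes.io~csi/\([^/]*\)/mount$#\1 \2#p'
}
# Usage: lsblk | grep rbd | rbd_to_pv
```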

Check which node each PV's block device is attached to (VolumeAttachment objects):

```
[root@k8s-master1 ~]# kubectl get volumeattachment
NAME                                                                   ATTACHER                     PV                                         NODE          ATTACHED   AGE
csi-053f2bfa28ac8ab9c17f7f4981dce4a0b4aadc8c933e8fdff32da16d623501f4   rook-ceph.rbd.csi.ceph.com   pvc-f288b4df-7458-4f91-8608-3af078986d33   k8s-node2     true       109m
csi-3709f608c4edf4c4c92721add6e69b6223b5390ed81f1b8ccf7a5da1705cd94b   rook-ceph.rbd.csi.ceph.com   pvc-38bb0d32-11eb-468b-839f-ddb796bec6a1   k8s-node3     true       110m
csi-4b8bdac0b581c0291707130d6bb1dcb5ebc66a56d3e067dfbb4f56edb6c7abf9   rook-ceph.rbd.csi.ceph.com   pvc-4f741a76-08e7-428f-9489-d9655b82994e   k8s-node1     true       111m
csi-8a39d696f23a13b00b4dea835f732c5bd9a05e8e851dc47cb6b8bf9a9343a0f2   rook-ceph.rbd.csi.ceph.com   pvc-9d7ca083-62e8-4177-a7ed-dacc912bf091   k8s-master2   true       114m
csi-8a99e4304666fa74d6216590ff1b6373879c7c6744c97e90950c165fa778653c   rook-ceph.rbd.csi.ceph.com   pvc-e781c644-8304-4461-acd8-a136889e1174   k8s-node1     true       113m
csi-cda98cea8f1ad1f5949005d5f5f10e5929c82d05f348ae31300adb8aec775b6d   rook-ceph.rbd.csi.ceph.com   pvc-7f7ac4f3-ed29-42d7-b35f-fc15f1b3f2f8   k8s-master3   true       34m
csi-d01b8cac9a340fd5e96170e2cc196574bf509db04b00430b24f4d50042e0b9e6   rook-ceph.rbd.csi.ceph.com   pvc-02d60e15-a76e-4e8e-98b4-bd2741faf2bb   k8s-node1     true       113m
csi-d340f3ccdc4681db8ba5336e5ba846f5d0f31369612f685eba304aaa28745971   rook-ceph.rbd.csi.ceph.com   pvc-41291f83-031a-42a4-9842-4781fcc4383e   k8s-node1     true       109m
csi-d4db3283dd2b1c7373534b01330f8ed32ebd0548c348875d590b43d505a75237   rook-ceph.rbd.csi.ceph.com   pvc-4bddf760-462e-424f-aa24-666ce50168ca   k8s-node3     true       109m
csi-dfac397b86769d712799d700e1919a083418c2e706ca000da1da60b715bdbf5a   rook-ceph.rbd.csi.ceph.com   pvc-2a355379-1358-42ae-9eaa-fcba53295134   k8s-node2     true       110m
csi-e7c56dab61b9b42c16e9fca1269e6e700de4d8f0fa38922c0f482c96ad3d757a   rook-ceph.rbd.csi.ceph.com   pvc-9a15a19f-59f0-4ddf-9356-8c1a09aad33a   k8s-master3   true       114m
csi-e914c5d320012d41f5f459fa9fd3ca9afc8d73e5d5d126b5be93f0cecff57ebd   rook-ceph.rbd.csi.ceph.com   pvc-1d34723f-8908-4dc9-b560-ecd8bd35aa57   k8s-master2   true       33m
csi-ef8c703a1616bd46231d264fa5413dd562f9472c7430a2a834cbc0a04c1a673f   rook-ceph.rbd.csi.ceph.com   pvc-7adcda06-7875-41b0-8ddb-c7aa6c448ae5   k8s-node1     true       113m
csi-f71974ab0cdcc67cd3ff230d4a0e900755dc2582d58b6b657263c6fc4fc7274d   rook-ceph.rbd.csi.ceph.com   pvc-a71517e7-0bd8-48ca-b6a0-5710dde18c64   k8s-node3     true       114m
csi-fb4499d62b6b9767a4396432983f7c684e2a32dcb042a10e859a7c229be9af0b   rook-ceph.rbd.csi.ceph.com   pvc-66f15326-e9de-4b2e-9682-d585f359bada   k8s-node2     true       109m
csi-fe7c56b8f0ed55169ec71654b0952d2839f04b0855c4d0f9c56ec1e9f7c37fe1   rook-ceph.rbd.csi.ceph.com   pvc-fe493c61-63e7-4a20-96f8-a2c550b019f4   k8s-master1   true       34m
csi-ff5de0f94fc2ca7b5c4f43b81f9eb97c6f9f5ad091d6d78fd9d422d4f3f030cc   rook-ceph.rbd.csi.ceph.com   pvc-db18d04e-6595-4fd1-836a-f42628a25d0b   k8s-node3     true       113m
```

Each of these PVs corresponds to an image in Ceph:

```
# Enter the toolbox pod
kubectl -n rook-ceph exec -it $(kubectl -n rook-ceph get pod -l "app=rook-ceph-tools" -o jsonpath='{.items[0].metadata.name}') -- bash

# List all images in the replicapool pool
[root@rook-ceph-tools-7cc7fd5755-784tv /]# rbd ls replicapool
csi-vol-109ef2dd-09ee-11eb-b2f2-ea801b5d83dd
csi-vol-57b3fd9d-09ed-11eb-b2f2-ea801b5d83dd
csi-vol-5bdc9036-09ed-11eb-b2f2-ea801b5d83dd
csi-vol-630ff002-09ed-11eb-b2f2-ea801b5d83dd
csi-vol-80284a01-09f8-11eb-9cca-0afc38dd23d7
csi-vol-81db6730-09ed-11eb-b2f2-ea801b5d83dd
csi-vol-82a332e4-09ed-11eb-b2f2-ea801b5d83dd
csi-vol-857bc4bd-09ed-11eb-b2f2-ea801b5d83dd
csi-vol-857bf228-09ed-11eb-b2f2-ea801b5d83dd
csi-vol-91eced11-09f8-11eb-9cca-0afc38dd23d7
csi-vol-a4f2686e-09f8-11eb-9cca-0afc38dd23d7
csi-vol-c4a815f1-09ed-11eb-b2f2-ea801b5d83dd
csi-vol-d9906c83-09ed-11eb-b2f2-ea801b5d83dd
csi-vol-e2b254e2-09ed-11eb-b2f2-ea801b5d83dd
csi-vol-f404ab61-09ed-11eb-b2f2-ea801b5d83dd
csi-vol-fde4f65d-09ed-11eb-b2f2-ea801b5d83dd
csi-vol-fdeef353-09ed-11eb-b2f2-ea801b5d83dd
```
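To find the image behind one particular PV, the following sketch assumes ceph-csi's default naming. Under that convention the RBD image is `csi-vol-` followed by the UUID that forms the last 36 characters of the PV's `spec.csi.volumeHandle`. Treat this as an assumption to verify against your ceph-csi version. The handle in the test below is made up.

```bash
# Derives the RBD image name from a ceph-csi volume handle.
# Assumption: default "csi-vol-" prefix + the trailing 36-character UUID.
image_from_handle() {
    printf '%s\n' "$1" | awk '{ print "csi-vol-" substr($0, length($0) - 35) }'
}
# Usage:
#   handle=$(kubectl get pv pvc-e781c644-8304-4461-acd8-a136889e1174 \
#            -o jsonpath='{.spec.csi.volumeHandle}')
#   image_from_handle "$handle"
#   # then, inside the toolbox pod: rbd info replicapool/<image-name>
```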

Alternatively, log in to the Ceph dashboard and click Images.

Accessing the KubeSphere UI

Get the port the web console listens on (30880 by default):

```bash
kubectl get svc/ks-console -n kubesphere-system
```

The default login account is:

admin/P@88w0rd

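Log in to the KubeSphere UI and view the cluster overview:

Cluster node information

Service component information

Cluster status monitoring

Application resource monitoring

Log search (icon in the bottom-right corner)

App store (helm applications)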

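Deploying a regular application on KubeSphere

Taking a Deployment-type application as an example:

- Click Platform in the upper-left corner, choose Access Control, and create a regular user with the role platform-regular

- Create a workspace and enter it, then choose Workspace Settings > Workspace Members and invite members

- Add the regular user created earlier to the workspace with the role self-provisioner

- Log in to KubeSphere as the regular user and create a project

- Choose Application Workloads > Services, create a stateless service, and fill in the name, image, and service port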

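Using nginx as the example:

Enable external access, choose NodePort, and click Create.

Then open the workload and choose Edit YAML on the right to see the Deployment defined in the YAML:

The workload types that can be deployed are:

- Deployments: the k8s Deployment

- StatefulSets: the k8s StatefulSet

- DaemonSets: the k8s DaemonSet

- The Jobs options below them: the k8s Job and CronJob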

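Deploying a helm application on KubeSphere

Using the TiDB database as an example.

TiDB is a converged database designed for Hybrid Transactional/Analytical Processing (HTAP). It offers one-click horizontal scaling, strongly consistent multi-replica data safety, distributed transactions, and real-time OLAP. It is also compatible with the MySQL protocol and ecosystem, which makes migration easy and keeps operating costs low.

Reference: https://github.com/pingcap/docs-tidb-operator/blob/master/zh/get-started.md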

Download the TiDB Operator helm charts:

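```bash
helm repo add pingcap https://charts.pingcap.org/
helm search repo pingcap --version=v1.1.5
# Pin the chart version so the packages match the v1.1.5 CRDs installed below
helm pull pingcap/tidb-cluster --version v1.1.5
helm pull pingcap/tidb-operator --version v1.1.5
```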

The chart packages are saved locally:

```
[root@k8s-master1 ~]# ls | grep tidb
tidb-cluster-v1.1.5.tgz
tidb-operator-v1.1.5.tgz
```

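Log in to KubeSphere as the regular user, click Workbench > App Management > App Templates > Upload Template, and upload both chart packages. Once the upload completes, they appear in the template list.

First, install the TiDB Operator CRDs from the command line: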

```bash
kubectl apply -f https://raw.githubusercontent.com/pingcap/tidb-operator/v1.1.5/manifests/crd.yaml
```

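Then go to project management and enter the project. Click Applications > Deploy New Application > From App Templates, choose tidb-operator, and deploy it as is; no configuration changes are needed.

Next, deploy tidb-cluster the same way. This time, download the values.yaml configuration file and replace the storageClassName value local-storage with rook-ceph-block. Paste the modified content back over the configuration file, then deploy: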
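The storageClassName edit can be done with `sed` instead of by hand. This sketch uses a hypothetical helper. It rewrites every `storageClassName: local-storage` line in the given file and keeps a `.bak` copy of the original. It avoids `sed -i`, whose syntax differs between GNU and BSD sed.

```bash
# Hypothetical helper: switch every storageClassName in a values.yaml from
# local-storage to rook-ceph-block, keeping the original as <file>.bak.
use_rook_sc() {
    file="${1:-values.yaml}"
    cp "$file" "$file.bak" &&
    sed 's/storageClassName: *local-storage/storageClassName: rook-ceph-block/' \
        "$file.bak" > "$file"
}
# Usage: use_rook_sc values.yaml && grep -n storageClassName values.yaml
```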

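After the deployment finishes, open Applications to check its status:

Click tidb-ci to view the application details. It has 2 workloads, both of which are Deployment resources.

Click Workloads > StatefulSets; some TiDB components run as StatefulSets.

Click Pods, filter by tidb, and sort by name to see which components TiDB Operator has deployed:

There are 2 tidb, 3 tikv, and 3 pd instances. The replicas of each component are scheduled on different nodes for high availability.

Click Storage > Volumes; the tikv and pd components mount persistent volumes (PVs).

Click Services; the TiDB service on port 4000 is of type NodePort by default:

Test the database connection with the MySQL client: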

```
[root@k8s-master1 ~]# docker run -it --rm mysql bash
root@0d7cf9d2173e:/# mysql -h 192.168.1.102 -P 31503 -u root
Welcome to the MySQL monitor.  Commands end with ; or \g.
Your MySQL connection id is 201
Server version: 5.7.25-TiDB-v4.0.6 TiDB Server (Apache License 2.0) Community Edition, MySQL 5.7 compatible

Copyright (c) 2000, 2020, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| INFORMATION_SCHEMA |
| METRICS_SCHEMA     |
| PERFORMANCE_SCHEMA |
| mysql              |
| test               |
+--------------------+
5 rows in set (0.01 sec)

mysql>
```

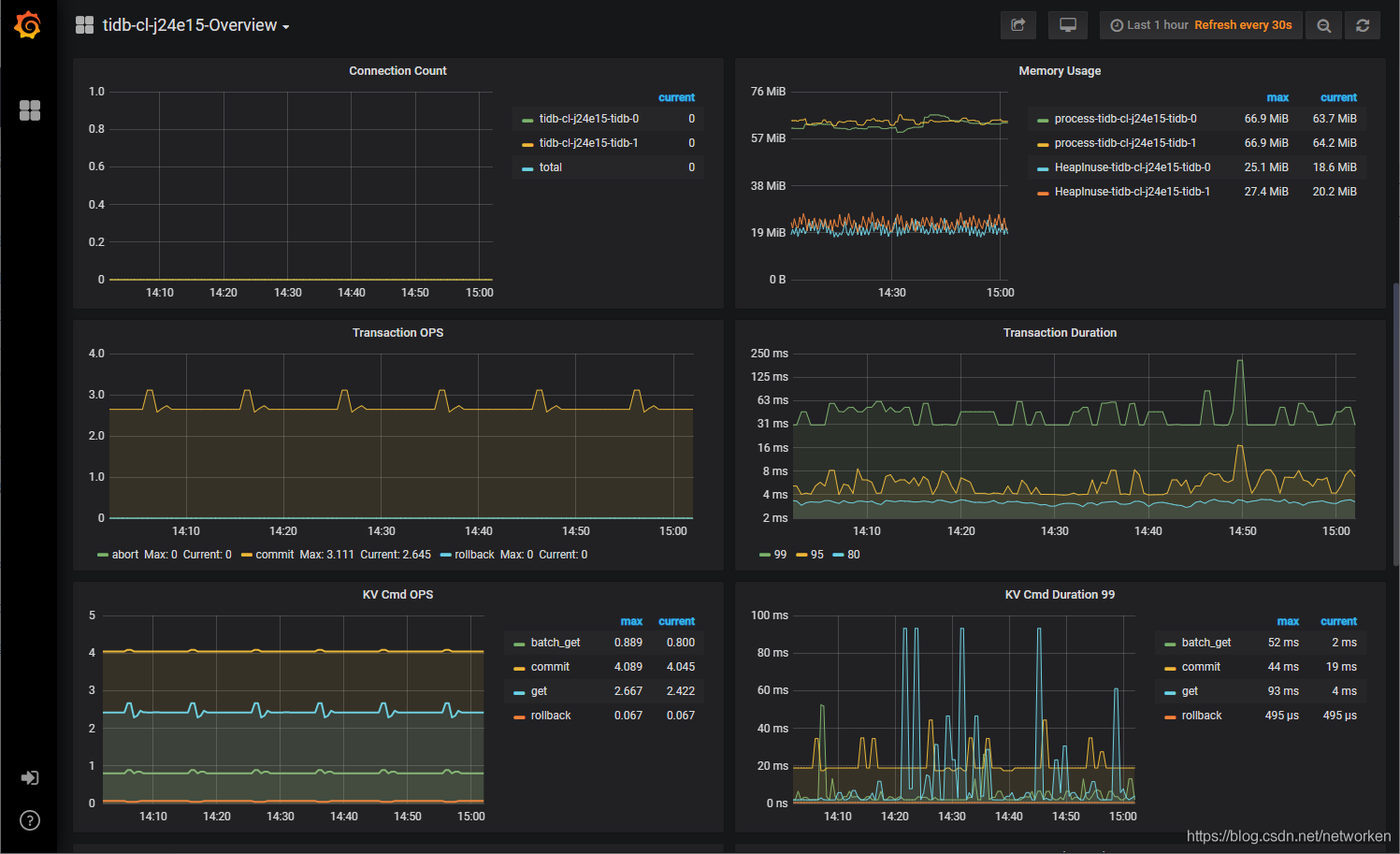

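TiDB Operator comes with Prometheus and Grafana for monitoring the performance of the database cluster. Under Services, Grafana's service port 3000 is exposed on NodePort 30804. Open Grafana and look at any metric:

Note:

TiDB is a heavyweight database with high demands on disk I/O and network performance, so it is best run on dedicated worker nodes. Rook can also build a separate pool and storageclass on SSDs for TiDB to use.

This applies beyond TiDB. In a large cluster, components such as KubeSphere and Rook can also be scheduled onto dedicated nodes so they do not compete for resources, affect business applications, or become performance bottlenecks. Nodes were limited here, so all components were packed onto the same 3 worker nodes.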

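Publishing TiDB to the app store

Once TiDB has been deployed and tested, it can be published to the app store.

Log in to KubeSphere as the regular user and choose App Templates. Click the tidb application, expand it, and click Submit for Review:

Switch to the administrator, click Platform in the upper-left corner, choose App Store Management > App Review, and approve the app on the right.

Go back to the regular user, choose App Templates, and open the tidb application. Expanding it now shows that the app can be released to the store.

After it is released, click App Store in the upper-left corner; the published tidb application appears in the store: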

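Running a pipeline on KubeSphere

Still as the regular user, click Workbench > DevOps Projects and create a DevOps project. Enter the project and create a pipeline, filling in a name and leaving all other settings at their defaults. Click Create, then edit the Jenkinsfile, paste in the following content, and run it: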

```groovy
pipeline {
    agent any
    stages {
        stage('Fetch source') {
            steps { sh 'echo "fetch source code"' }
        }
        stage('Unit tests') {
            steps { sh 'echo "unit tests"' }
        }
        stage('Code scan') {
            steps { sh 'echo "source code scan"' }
        }
        stage('Compile') {
            steps { sh 'echo "compile source code"' }
        }
        stage('Build image') {
            steps { sh 'echo "build docker image"' }
        }
        stage('Push image') {
            steps { sh 'echo "push image to the harbor registry"' }
        }
        stage('Deploy') {
            steps { sh 'echo "deploy application to the kubernetes cluster"' }
        }
    }
}
```

View the successful pipeline run

View the pipeline run log

Tearing down the KubeSphere cluster

```bash
wget https://raw.githubusercontent.com/kubesphere/ks-installer/master/scripts/kubesphere-delete.sh
sh kubesphere-delete.sh
```

If KubeSphere was installed with helm (replace xxx with the release name):

```bash
helm -n kubesphere-system uninstall xxx
```
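The installer also creates its own helm releases in several `kubesphere-*` namespaces, as the `helm ls -A` output earlier shows. This sketch uses a hypothetical helper that reads `helm ls -A` output and prints one uninstall command per such release. It executes nothing, so you can review the list and the order before running any of it. The official delete script above may remove more than these releases.

```bash
# Prints a "helm uninstall" command for every release in a kubesphere-* namespace.
# Reads `helm ls -A` output (header line included) on stdin; executes nothing.
kubesphere_uninstall_cmds() {
    awk 'NR > 1 && $2 ~ /^kubesphere-/ { print "helm -n " $2 " uninstall " $1 }'
}
# Usage: helm ls -A | kubesphere_uninstall_cmds
```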