Configuring Kerberos Authentication for Hadoop

赞

踩

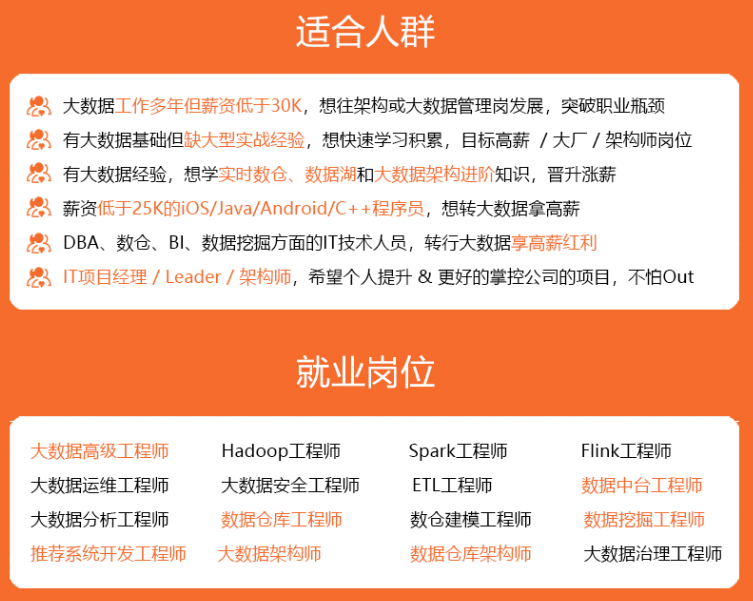

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!

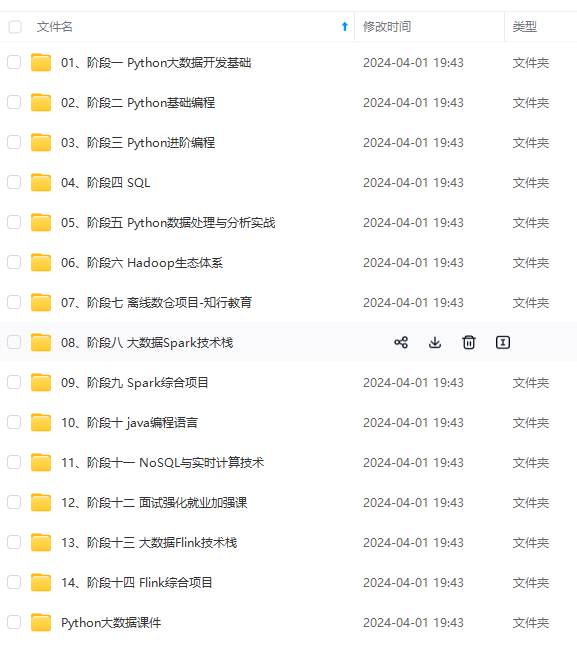

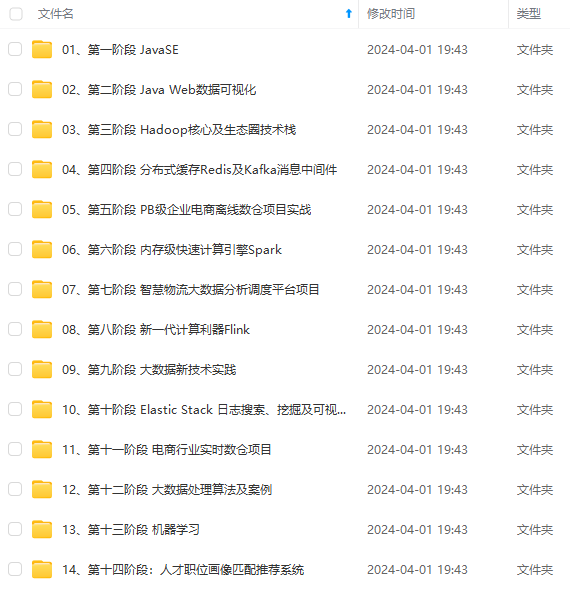

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

  <value>50470</value>
</property>
<property>
  <name>dfs.datanode.data.dir.perm</name>
  <value>700</value>
</property>
<!-- Kerberos-related configuration below -->
<!-- With Kerberos authentication enabled this must be true; otherwise the DataNode fails to start -->
<property>
  <name>dfs.block.access.token.enable</name>
  <value>true</value>
</property>
<!-- NameNode security config -->
<property>
  <name>dfs.namenode.kerberos.principal</name>
  <value>nn/_HOST@EXAMPLE.COM</value>
  <description>Kerberos principal for the NameNode: nn/HOSTNAME@EXAMPLE.COM. _HOST is replaced with the hostname automatically.</description>
</property>
<property>
  <name>dfs.namenode.keytab.file</name>
  <!-- path to the HDFS keytab -->
  <value>/etc/security/keytabs/nn.service.keytab</value>
  <description>Keytab file the NameNode uses to log in without a password</description>
</property>
<property>
  <name>dfs.namenode.kerberos.internal.spnego.principal</name>
  <value>HTTP/_HOST@EXAMPLE.COM</value>
  <description>Principal used for HTTPS endpoints (for example, the NameNode web UI)</description>
</property>
<!-- Secondary NameNode security config -->
<property>
  <name>dfs.secondary.namenode.kerberos.principal</name>
  <value>sn/_HOST@EXAMPLE.COM</value>
  <description>Principal used by the SecondaryNameNode</description>
</property>
<property>
  <name>dfs.secondary.namenode.keytab.file</name>
  <!-- path to the HDFS keytab -->
  <value>/etc/security/keytabs/sn.service.keytab</value>
  <description>Keytab file for the SecondaryNameNode</description>
</property>
<property>
  <name>dfs.secondary.namenode.kerberos.internal.spnego.principal</name>
  <value>HTTP/_HOST@EXAMPLE.COM</value>
  <description>Principal used by the SecondaryNameNode HTTP page</description>
</property>
<!-- DataNode security config -->
<property>
  <name>dfs.datanode.kerberos.principal</name>
  <value>dn/_HOST@EXAMPLE.COM</value>
  <description>Principal used by the DataNode</description>
</property>
<property>
  <name>dfs.datanode.keytab.file</name>
  <!-- path to the HDFS keytab -->
  <value>/etc/security/keytabs/dn.service.keytab</value>
  <description>Path to the keytab file used by the DataNode</description>
</property>
<property>
  <name>dfs.web.authentication.kerberos.principal</name>
  <value>HTTP/_HOST@EXAMPLE.COM</value>
  <description>Principal used by WebHDFS</description>
</property>
<property>
  <name>dfs.web.authentication.kerberos.keytab</name>
  <value>/etc/security/keytabs/spnego.service.keytab</value>
  <description>Corresponding keytab file</description>
</property>
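The `_HOST` placeholder in the principals above is resolved per node, so each host needs a principal (and keytab entry) carrying its own fully qualified name. The sketch below only mimics that substitution so you can see which principal a node is expected to use; `expand_principal` is a hypothetical helper, not a Hadoop command, and it assumes Hadoop lowercases the hostname before substituting.

```shell
#!/usr/bin/env bash
# Sketch: show the principal a pattern such as nn/_HOST@EXAMPLE.COM maps to on a node.
# expand_principal is a hypothetical helper name, not part of Hadoop.
expand_principal() {
  local pattern=$1
  local host=${2:-$(hostname -f)}   # default to this node's fully qualified name
  # Assumption: the hostname is lowercased before it replaces _HOST.
  host=$(printf '%s' "$host" | tr '[:upper:]' '[:lower:]')
  printf '%s\n' "${pattern//_HOST/$host}"
}

# Example: the NameNode principal expected on bigdata0.
expand_principal 'nn/_HOST@EXAMPLE.COM' 'bigdata0.example.com'
```

Comparing this output with the entries in the node's keytab is a quick way to spot a hostname mismatch before starting the daemons.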


###### yarn-site.xml


<property>
  <name>yarn.resourcemanager.scheduler.class</name>
  <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>
  <description></description>
</property>
<property>
  <name>yarn.nodemanager.local-dirs</name>
  <value>/data/nm-local</value>
  <description>Comma-separated list of paths on the local filesystem where intermediate data is written.</description>
</property>
<property>
  <name>yarn.nodemanager.log-dirs</name>
  <value>/data/nm-log</value>
  <description>Comma-separated list of paths on the local filesystem where logs are written.</description>
</property>
<property>
  <name>yarn.nodemanager.log.retain-seconds</name>
  <value>10800</value>
  <description>Default time (in seconds) to retain log files on the NodeManager. Only applicable if log-aggregation is disabled.</description>
</property>
<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
  <description>Shuffle service that needs to be set for Map Reduce applications.</description>
</property>
<!-- To enable SSL -->
<property>
  <name>yarn.http.policy</name>
  <value>HTTPS_ONLY</value>
</property>
<property>
  <name>yarn.nodemanager.linux-container-executor.group</name>
  <value>hadoop</value>
</property>
<!-- Enabling the settings below may require compiling container-executor on the local machine -->
<!-- Check the environment with the following commands: -->
<!-- hadoop checknative -a -->
<!-- ldd $HADOOP_HOME/bin/container-executor -->
<!--
<property>
  <name>yarn.nodemanager.container-executor.class</name>
  <value>org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor</value>
</property>
<property>
  <name>yarn.nodemanager.linux-container-executor.path</name>
  <value>/bigdata/hadoop-3.3.2/bin/container-executor</value>
</property>
-->
<!-- Kerberos-related configuration below -->
<!-- ResourceManager security configs -->
<property>
  <name>yarn.resourcemanager.principal</name>
  <value>rm/_HOST@EXAMPLE.COM</value>
</property>
<property>
  <name>yarn.resourcemanager.keytab</name>
  <value>/etc/security/keytabs/rm.service.keytab</value>
</property>
<property>
  <name>yarn.resourcemanager.webapp.delegation-token-auth-filter.enabled</name>
  <value>true</value>
</property>
<!-- NodeManager security configs -->
<property>
  <name>yarn.nodemanager.principal</name>
  <value>nm/_HOST@EXAMPLE.COM</value>
</property>
<property>
  <name>yarn.nodemanager.keytab</name>
  <value>/etc/security/keytabs/nm.service.keytab</value>
</property>
<!-- TimeLine security configs -->
<property>
  <name>yarn.timeline-service.principal</name>
  <value>tl/_HOST@EXAMPLE.COM</value>
</property>
<property>
  <name>yarn.timeline-service.keytab</name>
  <value>/etc/security/keytabs/tl.service.keytab</value>
</property>
<property>
  <name>yarn.timeline-service.http-authentication.type</name>
  <value>kerberos</value>
</property>
<property>
  <name>yarn.timeline-service.http-authentication.kerberos.principal</name>
  <value>HTTP/_HOST@EXAMPLE.COM</value>
</property>
<property>
  <name>yarn.timeline-service.http-authentication.kerberos.keytab</name>
  <value>/etc/security/keytabs/spnego.service.keytab</value>
</property>


###### mapred-site.xml


<property>
  <name>mapreduce.jobhistory.http.policy</name>
  <value>HTTPS_ONLY</value>
</property>
<!-- Kerberos-related configuration below -->
<property>
  <name>mapreduce.jobhistory.keytab</name>
  <value>/etc/security/keytabs/jhs.service.keytab</value>
</property>
<property>
  <name>mapreduce.jobhistory.principal</name>
  <value>jhs/_HOST@EXAMPLE.COM</value>
</property>
<property>
  <name>mapreduce.jobhistory.webapp.spnego-principal</name>
  <value>HTTP/_HOST@EXAMPLE.COM</value>
</property>
<property>
  <name>mapreduce.jobhistory.webapp.spnego-keytab-file</name>
  <value>/etc/security/keytabs/spnego.service.keytab</value>
</property>
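The site files above reference several keytab paths, and a daemon whose keytab is missing or unreadable on its node cannot log in. As a rough pre-flight check, the sketch below extracts every `.keytab` value from the given config files and reports whether each one is readable by the current user. `list_keytabs` and `check_keytabs` are hypothetical helper names, and the extraction is a plain text match that assumes each `<value>` sits on a single line.

```shell
#!/usr/bin/env bash
# Sketch: list keytab paths referenced by Hadoop site files and check they are readable.
# list_keytabs and check_keytabs are hypothetical helper names.

# Print every distinct <value>...keytab</value> path found in the given files.
list_keytabs() {
  grep -oh '<value>[^<]*\.keytab</value>' "$@" \
    | sed -e 's|<value>||' -e 's|</value>||' \
    | sort -u
}

# Report each keytab as ok or missing; return non-zero if any is missing.
# Assumes keytab paths contain no whitespace.
check_keytabs() {
  local rc=0 kt
  for kt in $(list_keytabs "$@"); do
    if [ -r "$kt" ]; then
      echo "ok      $kt"
    else
      echo "missing $kt"
      rc=1
    fi
  done
  return "$rc"
}

# Usage (paths assume the layout used in this article):
#   check_keytabs "$HADOOP_HOME"/etc/hadoop/hdfs-site.xml \
#                 "$HADOOP_HOME"/etc/hadoop/yarn-site.xml \
#                 "$HADOOP_HOME"/etc/hadoop/mapred-site.xml
```

Run it as the user that starts the daemon in question, since readability depends on file ownership and mode.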


##### Create HTTPS certificates

openssl req -new -x509 -keyout bd_ca_key -out bd_ca_cert -days 9999 -subj '/C=CN/ST=beijing/L=beijing/O=test/OU=test/CN=test'

scp -r /etc/security/cdh.https bigdata1:/etc/security/

scp -r /etc/security/cdh.https bigdata2:/etc/security/

[root@bigdata0 cdh.https]# openssl req -new -x509 -keyout bd_ca_key -out bd_ca_cert -days 9999 -subj '/C=CN/ST=beijing/L=beijing/O=test/OU=test/CN=test'

Generating a 2048 bit RSA private key

...+++

...+++

writing new private key to 'bd_ca_key'

Enter PEM pass phrase:

Verifying - Enter PEM pass phrase:

[root@bigdata0 cdh.https]#

[root@bigdata0 cdh.https]# ll

total 8

-rw-r--r--. 1 root root 1298 Oct 5 17:14 bd_ca_cert

-rw-r--r--. 1 root root 1834 Oct 5 17:14 bd_ca_key

[root@bigdata0 cdh.https]# scp -r /etc/security/cdh.https bigdata1:/etc/security/

bd_ca_key 100% 1834 913.9KB/s 00:00

bd_ca_cert 100% 1298 1.3MB/s 00:00

[root@bigdata0 cdh.https]# scp -r /etc/security/cdh.https bigdata2:/etc/security/

bd_ca_key 100% 1834 1.7MB/s 00:00

bd_ca_cert 100% 1298 1.3MB/s 00:00

[root@bigdata0 cdh.https]#

Run the following on each of the three nodes in turn.

cd /etc/security/cdh.https

Enter 123456 wherever a password is requested (chosen for convenience; use your own password if you have stricter requirements).

1 Enter and confirm the password 123456. On success this command creates the keystore file.

keytool -keystore keystore -alias localhost -validity 9999 -genkey -keyalg RSA -keysize 2048 -dname "CN=test, OU=test, O=test, L=beijing, ST=beijing, C=CN"

2 Enter and confirm the password 123456; when asked whether to trust the certificate, enter yes. On success this command creates the truststore file.

keytool -keystore truststore -alias CARoot -import -file bd_ca_cert

3 Enter the password 123456. On success this command creates the cert file.

keytool -certreq -alias localhost -keystore keystore -file cert

4 On success this command creates the cert_signed file.

openssl x509 -req -CA bd_ca_cert -CAkey bd_ca_key -in cert -out cert_signed -days 9999 -CAcreateserial -passin pass:123456

5 Enter the password 123456; when asked whether to trust the certificate, enter yes. On success this command updates the keystore file.

keytool -keystore keystore -alias CARoot -import -file bd_ca_cert

6 Enter the password 123456.

keytool -keystore keystore -alias localhost -import -file cert_signed

The directory finally contains:

-rw-r--r-- 1 root root 1294 Sep 26 11:31 bd_ca_cert

-rw-r--r-- 1 root root 17 Sep 26 11:36 bd_ca_cert.srl

-rw-r--r-- 1 root root 1834 Sep 26 11:31 bd_ca_key

-rw-r--r-- 1 root root 1081 Sep 26 11:36 cert

-rw-r--r-- 1 root root 1176 Sep 26 11:36 cert_signed

-rw-r--r-- 1 root root 4055 Sep 26 11:37 keystore

-rw-r--r-- 1 root root 978 Sep 26 11:35 truststore
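Because the six steps above have to be repeated on every node, it can help to run them without interactive prompts. The following is a minimal sketch, not the author's script: `make_node_keystore`, `run`, and the `DRY_RUN` switch are hypothetical names, and it relies on keytool's `-storepass`, `-keypass`, and `-noprompt` options to supply the answers that were typed by hand above. Note that passwords passed on the command line are visible in the process list, so this suits a test cluster like the one in this article.

```shell
#!/usr/bin/env bash
# Sketch: the six keystore steps without interactive prompts.
# make_node_keystore, run and DRY_RUN are hypothetical names used for illustration.

# Print the command when DRY_RUN is set, otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: cd /etc/security/cdh.https && make_node_keystore 123456
make_node_keystore() {
  local pw=$1
  local dname='CN=test, OU=test, O=test, L=beijing, ST=beijing, C=CN'
  # 1. Generate the node key pair (creates keystore).
  run keytool -keystore keystore -alias localhost -validity 9999 -genkey \
      -keyalg RSA -keysize 2048 -dname "$dname" \
      -storepass "$pw" -keypass "$pw" || return 1
  # 2. Trust the CA certificate (creates truststore).
  run keytool -keystore truststore -alias CARoot -import -file bd_ca_cert \
      -storepass "$pw" -noprompt || return 1
  # 3. Export a certificate signing request (creates cert).
  run keytool -certreq -alias localhost -keystore keystore -file cert \
      -storepass "$pw" || return 1
  # 4. Sign the request with the CA key (creates cert_signed).
  run openssl x509 -req -CA bd_ca_cert -CAkey bd_ca_key -in cert \
      -out cert_signed -days 9999 -CAcreateserial -passin "pass:$pw" || return 1
  # 5. Import the CA certificate into the keystore.
  run keytool -keystore keystore -alias CARoot -import -file bd_ca_cert \
      -storepass "$pw" -noprompt || return 1
  # 6. Import the signed node certificate into the keystore.
  run keytool -keystore keystore -alias localhost -import -file cert_signed \
      -storepass "$pw" || return 1
}
```

Running `DRY_RUN=1 make_node_keystore 123456` prints the six commands without touching any files, which is a cheap way to review them before executing on a node.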

Configure ssl-server.xml and ssl-client.xml

ssl-server.xml

<property>
  <name>ssl.server.truststore.password</name>
  <value>123456</value>
  <description>Optional. Default value is "".</description>
</property>
<property>
  <name>ssl.server.truststore.type</name>
  <value>jks</value>
  <description>Optional. The keystore file format, default value is "jks".</description>
</property>
<property>
  <name>ssl.server.truststore.reload.interval</name>
  <value>10000</value>
  <description>Truststore reload check interval, in milliseconds. Default value is 10000 (10 seconds).</description>
</property>
<property>
  <name>ssl.server.keystore.location</name>
  <value>/etc/security/cdh.https/keystore</value>
  <description>Keystore to be used by NN and DN. Must be specified.</description>
</property>
<property>
  <name>ssl.server.keystore.password</name>
  <value>123456</value>
  <description>Must be specified.</description>
</property>
<property>
  <name>ssl.server.keystore.keypassword</name>
  <value>123456</value>
  <description>Must be specified.</description>
</property>
<property>
  <name>ssl.server.keystore.type</name>
  <value>jks</value>
  <description>Optional. The keystore file format, default value is "jks".</description>
</property>
<property>
  <name>ssl.server.exclude.cipher.list</name>
  <value>TLS_ECDHE_RSA_WITH_RC4_128_SHA,SSL_DHE_RSA_EXPORT_WITH_DES40_CBC_SHA,
  SSL_RSA_WITH_DES_CBC_SHA,SSL_DHE_RSA_WITH_DES_CBC_SHA,
  SSL_RSA_EXPORT_WITH_RC4_40_MD5,SSL_RSA_EXPORT_WITH_DES40_CBC_SHA,
  SSL_RSA_WITH_RC4_128_MD5</value>
  <description>Optional. The weak security cipher suites that you want excluded from SSL communication.</description>
</property>


ssl-client.xml


<property>
  <name>ssl.client.truststore.password</name>
  <value>123456</value>
  <description>Optional. Default value is "".</description>
</property>
<property>
  <name>ssl.client.truststore.type</name>
  <value>jks</value>
  <description>Optional. The keystore file format, default value is "jks".</description>
</property>
<property>
  <name>ssl.client.truststore.reload.interval</name>
  <value>10000</value>
  <description>Truststore reload check interval, in milliseconds. Default value is 10000 (10 seconds).</description>
</property>
<property>
  <name>ssl.client.keystore.location</name>
  <value>/etc/security/cdh.https/keystore</value>
  <description>Keystore to be used by clients like distcp. Must be specified.</description>
</property>
<property>
  <name>ssl.client.keystore.password</name>
  <value>123456</value>
  <description>Optional. Default value is "".</description>
</property>
<property>
  <name>ssl.client.keystore.keypassword</name>
  <value>123456</value>
  <description>Optional. Default value is "".</description>
</property>
<property>
  <name>ssl.client.keystore.type</name>
  <value>jks</value>
  <description>Optional. The keystore file format, default value is "jks".</description>
</property>


Distribute the configuration

scp $HADOOP_HOME/etc/hadoop/* bigdata1:/bigdata/hadoop-3.3.2/etc/hadoop/

scp $HADOOP_HOME/etc/hadoop/* bigdata2:/bigdata/hadoop-3.3.2/etc/hadoop/

### 4. Start the services

Note: after initializing the NameNode you can simply run sbin/start-all.sh.

### 5. Common errors

#### 5.1 Kerberos-related errors

##### Cannot reach the realm

Cannot contact any KDC for realm

This usually means the network is unreachable.

1. The firewall may not have been turned off:

[root@bigdata0 bigdata]# kinit krbtest/admin@EXAMPLE.COM

kinit: Cannot contact any KDC for realm 'EXAMPLE.COM' while getting initial credentials

[root@bigdata0 bigdata]#

[root@bigdata0 bigdata]#

[root@bigdata0 bigdata]# systemctl stop firewalld.service

[root@bigdata0 bigdata]# systemctl disable firewalld.service

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@bigdata0 bigdata]# kinit krbtest/admin@EXAMPLE.COM

Password for krbtest/admin@EXAMPLE.COM:

[root@bigdata0 bigdata]#

[root@bigdata0 bigdata]#

[root@bigdata0 bigdata]#

[root@bigdata0 bigdata]#

[root@bigdata0 bigdata]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: krbtest/admin@EXAMPLE.COM

Valid starting Expires Service principal

2023-10-05T16:19:58 2023-10-06T16:19:58 krbtgt/EXAMPLE.COM@EXAMPLE.COM

[root@bigdata0 bigdata]#

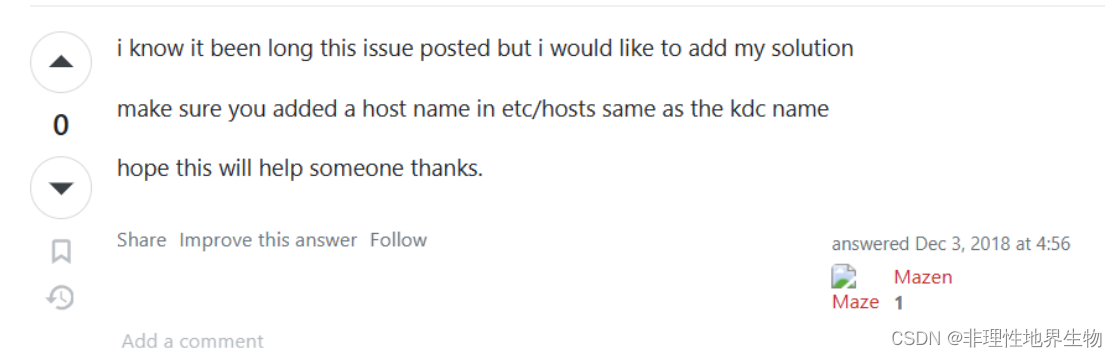

2. The KDC may be missing from the hosts file

Edit /etc/hosts and add a mapping between the KDC hostname and its IP address:

{kdc ip} {kdc}

192.168.50.10 bigdata3.example.com

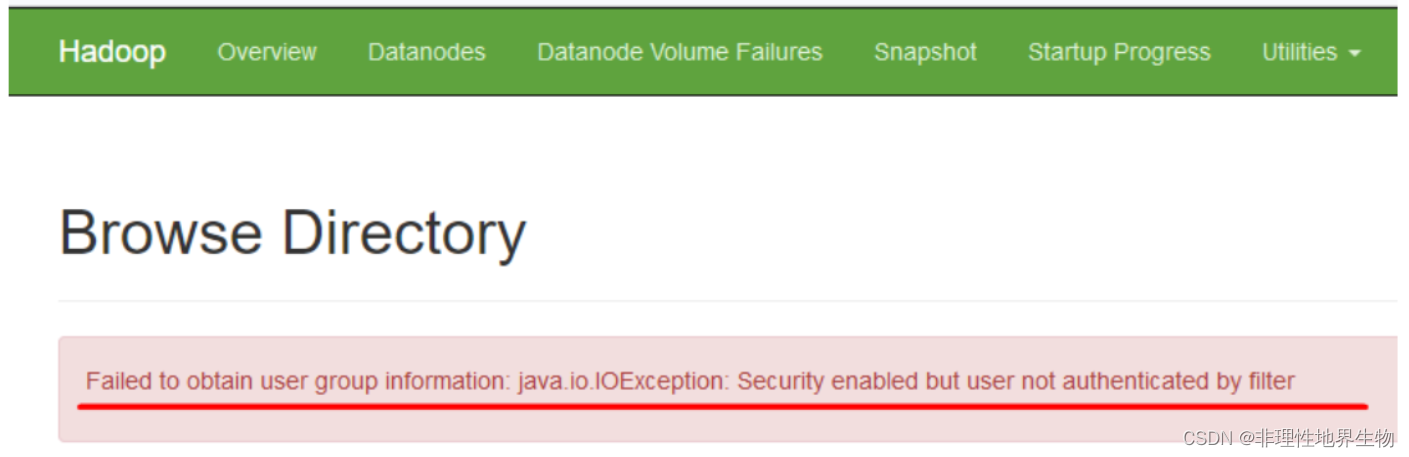
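Both causes above (a blocked port and an unresolvable KDC hostname) can be checked before retrying `kinit`. The sketch below reads the `kdc = ...` entries from a krb5.conf and tests each one for name resolution and a TCP connection. `list_kdcs` and `check_kdc` are hypothetical helper names; the check assumes the KDC listens on TCP port 88 when no port is given, that `getent` and `timeout` are available, and it does not probe UDP.

```shell
#!/usr/bin/env bash
# Sketch: find the KDCs named in krb5.conf and test that each resolves and accepts TCP.
# list_kdcs and check_kdc are hypothetical helper names.

# Print the value of every "kdc = host[:port]" line in the given krb5.conf.
list_kdcs() {
  awk -F= '/^[ \t]*kdc[ \t]*=/ { gsub(/[ \t]/, "", $2); print $2 }' "${1:-/etc/krb5.conf}"
}

# Check one "host[:port]" entry; port 88 is assumed when none is given.
check_kdc() {
  local host=${1%%:*} port=88
  case $1 in
    *:*) port=${1##*:} ;;
  esac
  if ! getent hosts "$host" >/dev/null; then
    echo "$host: does not resolve (check /etc/hosts or DNS)"
    return 1
  fi
  if ! timeout 3 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
    echo "$host:$port: TCP connect failed (check the firewall)"
    return 1
  fi
  echo "$host:$port: reachable"
}

# Usage:
#   for kdc in $(list_kdcs /etc/krb5.conf); do check_kdc "$kdc"; done
```

If only the connection test fails, opening the Kerberos port on the KDC host is a narrower fix than disabling the firewall entirely.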

<https://serverfault.com/questions/612869/kinit-cannot-contact-any-kdc-for-realm-ubuntu-while-getting-initial-credentia>

##### kinit picks up the wrong library

kinit: relocation error: kinit: symbol krb5_get_init_creds_opt_set_pac_request, version krb5_3_MIT not defined in file libkrb5.so.3 with link time reference

Running `ldd $(which kinit)` shows that kinit resolves libkrb5.so.3 from a directory other than /lib64. Running `export LD_LIBRARY_PATH=/lib64:${LD_LIBRARY_PATH}` fixes it.

<https://community.broadcom.com/communities/community-home/digestviewer/viewthread?MID=797304#:~:text=To%20solve%20this%20error%2C%20the%20only%20workaround%20found,to%20use%20system%20libraries%20%24%20export%20LD_LIBRARY_PATH%3D%2Flib64%3A%24%20%7BLD_LIBRARY_PATH%7D>

---

##### Invalid ccache ID

kinit: Invalid Uid in persistent keyring name while getting default ccache

Change the value of `default_ccache_name` in /etc/krb5.conf.

<https://unix.stackexchange.com/questions/712857/kinit-invalid-uid-in-persistent-keyring-name-while-getting-default-ccache-while#:~:text=If%20running%20unset%20KRB5CCNAME%20did%20not%20resolve%20it%2C,of%20%22default_ccache_name%22%20in%20%2Fetc%2Fkrb5.conf%20to%20a%20local%20file%3A>

##### KrbException: Message stream modified (41)

This is reportedly related to the JRE version; deleting the `renew_lifetime = xxx` line from krb5.conf resolves it.

#### 5.2 Hadoop-related errors

To find out which service on which node is failing, check the corresponding log (or the manager log) for exceptions. For example, if some .so files cannot be found, install them.

Error shown in the HDFS web UI:

Failed to obtain user group information: java.io.IOException: Security enabled but user not authenticated by filter

<https://issues.apache.org/jira/browse/HDFS-16441>

### 6. Submit Spark on YARN

1. Submit directly:

Command:


bin/spark-submit \
--master yarn \
--deploy-mode cluster \
--class org.apache.spark.examples.SparkPi \
examples/jars/spark-examples_2.12-3.3.0.jar


[root@bigdata3 spark-3.3.0-bin-hadoop3]# bin/spark-submit --master yarn --deploy-mode cluster --class org.apache.spark.examples.SparkPi examples/jars/spark-examples_2.12-3.3.0.jar
23/10/12 13:53:05 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
23/10/12 13:53:05 INFO DefaultNoHARMFailoverProxyProvider: Connecting to ResourceManager at bigdata0.example.com/192.168.50.7:8032
23/10/12 13:53:06 WARN Client: Exception encountered while connecting to the server
org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]
    at org.apache.hadoop.security.SaslRpcClient.selectSaslClient(SaslRpcClient.java:179)
    at org.apache.hadoop.security.SaslRpcClient.saslConnect(SaslRpcClient.java:392)
    at org.apache.hadoop.ipc.Client$Connection.setupSaslConnection(Client.java:623)
    at org.apache.hadoop.ipc.Client$Connection.access$2300(Client.java:414)
    at org.apache.hadoop.ipc.Client$Connection$2.run(Client.java:843)
    at org.apache.hadoop.ipc.Client$Connection$2.run(Client.java:839)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1878)
    at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:839)
    at org.apache.hadoop.ipc.Client$Connection.access$3800(Client.java:414)
    at org.apache.hadoop.ipc.Client.getConnection(Client.java:1677)
    at org.apache.hadoop.ipc.Client.call(Client.java:1502)
    at org.apache.hadoop.ipc.Client.call(Client.java:1455)
    at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:242)
    at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:129)
    at com.sun.proxy.$Proxy24.getNewApplication(Unknown Source)
    at org.apache.hadoop.yarn.api.impl.pb.client.ApplicationClientProtocolPBClientImpl.getNewApplication(ApplicationClientProtocolPBClientImpl.java:286)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)
    at com.sun.proxy.$Proxy25.getNewApplication(Unknown Source)
    at org.apache.hadoop.yarn.client.api.impl.YarnClientImpl.getNewApplication(YarnClientImpl.java:284)
    at org.apache.hadoop.yarn.client.api.impl.YarnClientImpl.createApplication(YarnClientImpl.java:292)
    at org.apache.spark.deploy.yarn.Client.submitApplication(Client.scala:200)
    at org.apache.spark.deploy.yarn.Client.run(Client.scala:1327)
    at org.apache.spark.deploy.yarn.YarnClusterApplication.start(Client.scala:1764)
    at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:958)
    at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:180)
    at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:203)
    at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:90)
    at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1046)
    at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1055)
    at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
Exception in thread "main" java.io.IOException: DestHost:destPort bigdata0.example.com:8032 , LocalHost:localPort bigdata3.example.com/192.168.50.10:0. Failed on local exception: java.io.IOException: org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
    at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:913)
    at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:888)
    at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1616)
    at org.apache.hadoop.ipc.Client.call(Client.java:1558)
    at org.apache.hadoop.ipc.Client.call(Client.java:1455)
    at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:242)
    at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:129)
    at com.sun.proxy.$Proxy24.getNewApplication(Unknown Source)
    at org.apache.hadoop.yarn.api.impl.pb.client.ApplicationClientProtocolPBClientImpl.getNewApplication(ApplicationClientProtocolPBClientImpl.java:286)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)
    at com.sun.proxy.$Proxy25.getNewApplication(Unknown Source)
    at org.apache.hadoop.yarn.client.api.impl.YarnClientImpl.getNewApplication(YarnClientImpl.java:284)
    at org.apache.hadoop.yarn.client.api.impl.YarnClientImpl.createApplication(YarnClientImpl.java:292)
    at org.apache.spark.deploy.yarn.Client.submitApplication(Client.scala:200)
    at org.apache.spark.deploy.yarn.Client.run(Client.scala:1327)
    at org.apache.spark.deploy.yarn.YarnClusterApplication.start(Client.scala:1764)
    at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:958)
    at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:180)
    at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:203)
    at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:90)
    at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1046)
    at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1055)
    at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
Caused by: java.io.IOException: org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]
    at org.apache.hadoop.ipc.Client$Connection$1.run(Client.java:798)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1878)
    at org.apache.hadoop.ipc.Client$Connection.handleSaslConnectionFailure(Client.java:752)
    at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:856)
    at org.apache.hadoop.ipc.Client$Connection.access$3800(Client.java:414)
    at org.apache.hadoop.ipc.Client.getConnection(Client.java:1677)
    at org.apache.hadoop.ipc.Client.call(Client.java:1502)
    ... 27 more
Caused by: org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]
    at org.apache.hadoop.security.SaslRpcClient.selectSaslClient(SaslRpcClient.java:179)
    at org.apache.hadoop.security.SaslRpcClient.saslConnect(SaslRpcClient.java:392)
    at org.apache.hadoop.ipc.Client$Connection.setupSaslConnection(Client.java:623)
    at org.apache.hadoop.ipc.Client$Connection.access$2300(Client.java:414)
    at org.apache.hadoop.ipc.Client$Connection$2.run(Client.java:843)
    at org.apache.hadoop.ipc.Client$Connection$2.run(Client.java:839)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1878)
    at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:839)
    ... 30 more
23/10/12 13:53:06 INFO ShutdownHookManager: Shutdown hook called
23/10/12 13:53:06 INFO ShutdownHookManager: Deleting directory /tmp/spark-b9b19f53-4839-4cf0-82d0-065f4956f830


2. Submit with the spark.kerberos.keytab and spark.kerberos.principal parameters.

On the submitting machine, create a Kerberos principal to submit with:

[root@bigdata3 spark-3.3.0-bin-hadoop3]# kadmin.local

Authenticating as principal root/admin@EXAMPLE.COM with password.

kadmin.local:

kadmin.local: addprinc -randkey nn/bigdata3.example.com@EXAMPLE.COM

WARNING: no policy specified for nn/bigdata3.example.com@EXAMPLE.COM; defaulting to no policy

Principal "nn/bigdata3.example.com@EXAMPLE.COM" created.

kadmin.local: ktadd -k /etc/security/keytabs/nn.service.keytab nn/bigdata3.example.com@EXAMPLE.COM
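Before submitting, it is worth confirming that the keytab really contains the principal that was just added. The sketch below filters the listing printed by `klist -kt` for an exact principal match. `has_principal` is a hypothetical helper name, and it assumes the principal is the last whitespace-separated field on each entry line, as in MIT Kerberos output.

```shell
#!/usr/bin/env bash
# Sketch: succeed if the `klist -kt` listing on stdin contains the given principal.
# has_principal is a hypothetical helper name; it assumes the principal is the
# last field of each keytab entry line.
has_principal() {
  awk -v p="$1" '$NF == p { found = 1 } END { exit !found }'
}

# Usage:
#   klist -kt /etc/security/keytabs/nn.service.keytab \
#     | has_principal nn/bigdata3.example.com@EXAMPLE.COM \
#     && echo "keytab contains the principal"
#
# To confirm the keytab can also authenticate:
#   kinit -kt /etc/security/keytabs/nn.service.keytab nn/bigdata3.example.com@EXAMPLE.COM
```

An exact match is used rather than a substring search so that a principal for a different host in the same keytab is not mistaken for the one being submitted with.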


Submit command:

bin/spark-submit \
--master yarn \
--deploy-mode cluster \
--conf spark.kerberos.keytab=/etc/security/keytabs/nn.service.keytab \
--conf spark.kerberos.principal=nn/bigdata3.example.com@EXAMPLE.COM \
--class org.apache.spark.examples.SparkPi \
examples/jars/spark-examples_2.12-3.3.0.jar

Result:

[root@bigdata3 spark-3.3.0-bin-hadoop3]# bin/spark-submit --master yarn --deploy-mode cluster --conf spark.kerberos.keytab=/etc/security/keytabs/nn.service.keytab --conf spark.kerberos.principal=nn/bigdata3.example.com@EXAMPLE.COM --class org.apache.spark.examples.SparkPi examples/jars/spark-examples_2.12-3.3.0.jar
23/10/12 13:53:30 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
23/10/12 13:53:31 INFO Client: Kerberos credentials: principal = nn/bigdata3.example.com@EXAMPLE.COM, keytab = /etc/security/keytabs/nn.service.keytab
23/10/12 13:53:31 INFO DefaultNoHARMFailoverProxyProvider: Connecting to ResourceManager at bigdata0.example.com/192.168.50.7:8032
23/10/12 13:53:32 INFO Configuration: resource-types.xml not found
23/10/12 13:53:32 INFO ResourceUtils: Unable to find 'resource-types.xml'.
23/10/12 13:53:32 INFO Client: Verifying our application has not requested more than the maximum memory capability of the cluster (8192 MB per container)
23/10/12 13:53:32 INFO Client: Will allocate AM container, with 1408 MB memory including 384 MB overhead
23/10/12 13:53:32 INFO Client: Setting up container launch context for our AM
23/10/12 13:53:32 INFO Client: Setting up the launch environment for our AM container
23/10/12 13:53:32 INFO Client: Preparing resources for our AM container
23/10/12 13:53:32 INFO Client: To enable the AM to login from keytab, credentials are being copied over to the AM via the YARN Secure Distributed Cache.
23/10/12 13:53:32 INFO Client: Uploading resource file:/etc/security/keytabs/nn.service.keytab -> hdfs://bigdata0.example.com:8020/user/hdfs/.sparkStaging/application_1697089814250_0001/nn.service.keytab
23/10/12 13:53:34 WARN Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.
23/10/12 13:53:44 INFO Client: Uploading resource file:/tmp/spark-83cea039-6e72-4958-b097-4537a008e792/__spark_libs__1911433509069709237.zip -> hdfs://bigdata0.example.com:8020/user/hdfs/.sparkStaging/application_1697089814250_0001/__spark_libs__1911433509069709237.zip
23/10/12 13:54:16 INFO Client: Uploading resource file:/bigdata/spark-3.3.0-bin-hadoop3/examples/jars/spark-examples_2.12-3.3.0.jar -> hdfs://bigdata0.example.com:8020/user/hdfs/.sparkStaging/application_1697089814250_0001/spark-examples_2.12-3.3.0.jar
23/10/12 13:54:16 INFO Client: Uploading resource file:/tmp/spark-83cea039-6e72-4958-b097-4537a008e792/__spark_conf__8266642042189366964.zip -> hdfs://bigdata0.example.com:8020/user/hdfs/.sparkStaging/application_1697089814250_0001/__spark_conf__.zip
23/10/12 13:54:17 INFO SecurityManager: Changing view acls to: root,hdfs
23/10/12 13:54:17 INFO SecurityManager: Changing modify acls to: root,hdfs
23/10/12 13:54:17 INFO SecurityManager: Changing view acls groups to:
23/10/12 13:54:17 INFO SecurityManager: Changing modify acls groups to:
23/10/12 13:54:17 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root, hdfs); groups with view permissions: Set(); users with modify permissions: Set(root, hdfs); groups with modify permissions: Set()
23/10/12 13:54:17 INFO HadoopDelegationTokenManager: Attempting to login to KDC using principal: nn/bigdata3.example.com@EXAMPLE.COM
23/10/12 13:54:17 INFO HadoopDelegationTokenManager: Successfully logged into KDC.
23/10/12 13:54:17 INFO HiveConf: Found configuration file null
23/10/12 13:54:17 INFO HadoopFSDelegationTokenProvider: getting token for: DFS[DFSClient[clientName=DFSClient_NONMAPREDUCE_1847188333_1, ugi=nn/bigdata3.example.com@EXAMPLE.COM (auth:KERBEROS)]] with renewer rm/bigdata0.example.com@EXAMPLE.COM
23/10/12 13:54:17 INFO DFSClient: Created token for hdfs: HDFS_DELEGATION_TOKEN owner=nn/bigdata3.example.com@EXAMPLE.COM, renewer=yarn, realUser=, issueDate=1697090057412, maxDate=1697694857412, sequenceNumber=7, masterKeyId=8 on 192.168.50.7:8020
23/10/12 13:54:17 INFO HadoopFSDelegationTokenProvider: getting token for: DFS[DFSClient[clientName=DFSClient_NONMAPREDUCE_1847188333_1, ugi=nn/bigdata3.example.com@EXAMPLE.COM (auth:KERBEROS)]] with renewer nn/bigdata3.example.com@EXAMPLE.COM
23/10/12 13:54:17 INFO DFSClient: Created token for hdfs: HDFS_DELEGATION_TOKEN owner=nn/bigdata3.example.com@EXAMPLE.COM, renewer=hdfs, realUser=, issueDate=1697090057438, maxDate=1697694857438, sequenceNumber=8, masterKeyId=8 on 192.168.50.7:8020
23/10/12 13:54:17 INFO HadoopFSDelegationTokenProvider: Renewal interval is 86400032 for token HDFS_DELEGATION_TOKEN
23/10/12 13:54:19 INFO Client: Submitting application application_1697089814250_0001 to ResourceManager
23/10/12 13:54:21 INFO YarnClientImpl: Submitted application application_1697089814250_0001
23/10/12 13:54:22 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:22 INFO Client:
    client token: Token { kind: YARN_CLIENT_TOKEN, service: }
    diagnostics: N/A
    ApplicationMaster host: N/A
    ApplicationMaster RPC port: -1
    queue: root.hdfs
    start time: 1697090060093
    final status: UNDEFINED
    tracking URL: https://bigdata0.example.com:8090/proxy/application_1697089814250_0001/
    user: hdfs
23/10/12 13:54:23 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:24 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:25 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
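The captured output stops while the application is still in the ACCEPTED state, so the final result has to be checked separately. The following is a minimal sketch, assuming the spark-submit output was redirected to a file; the file name `submit.log` and the helper names `extract_app_id` and `latest_state` are hypothetical and not part of the original setup. It only parses the log text shown above and does not contact the cluster.

```shell
#!/bin/sh
# Hypothetical helpers for a saved spark-submit log (not part of the original article).

# Print the first YARN application ID found in the log file given as $1.
extract_app_id() {
  grep -o 'application_[0-9]*_[0-9]*' "$1" | head -n 1
}

# Print the state from the last "Application report ... (state: X)" line in $1.
latest_state() {
  sed -n 's/.*Application report for application_[0-9_]* (state: \([A-Z_]*\)).*/\1/p' "$1" | tail -n 1
}
```

With a valid Kerberos ticket on the client, the extracted ID could then be passed to the standard YARN CLI, for example `yarn application -status "$(extract_app_id submit.log)"` to query the current state, or `yarn logs -applicationId "$(extract_app_id submit.log)"` to fetch the container logs once the job has finished (the latter requires log aggregation to be enabled on the cluster).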

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

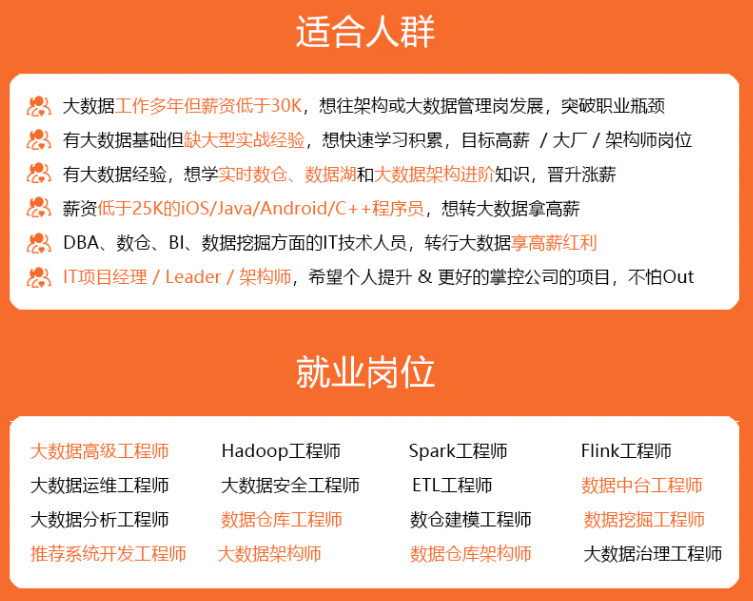

- 46

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!

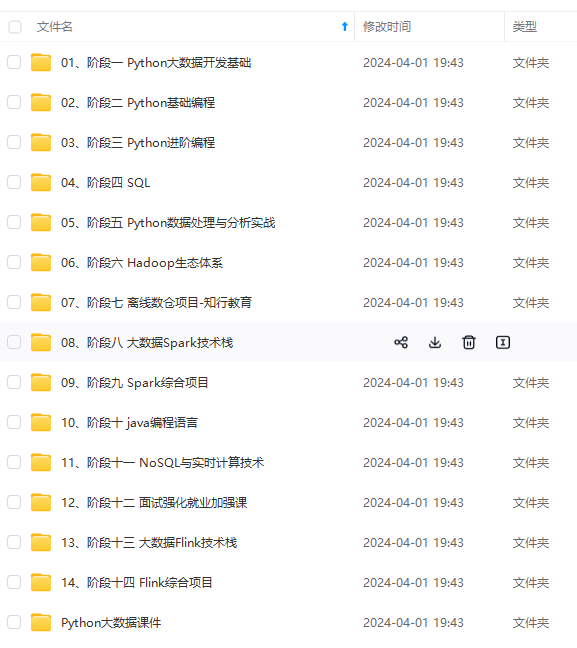

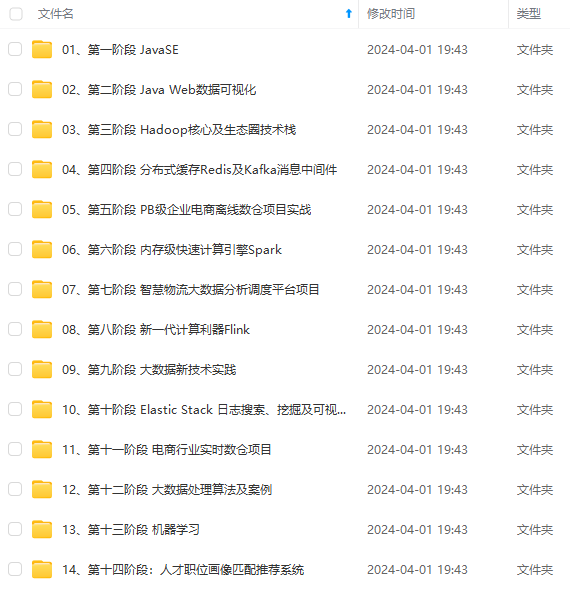

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

