
Flume Environment Setup: Shipping Hadoop Logs (NameNode or DataNode Logs)

Author: 菜鸟追梦旅行 | 2024-03-15 02:23:20

赞

踩

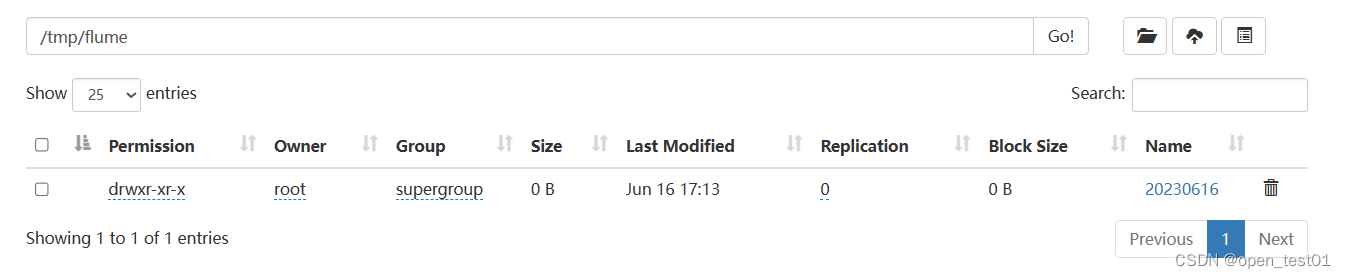

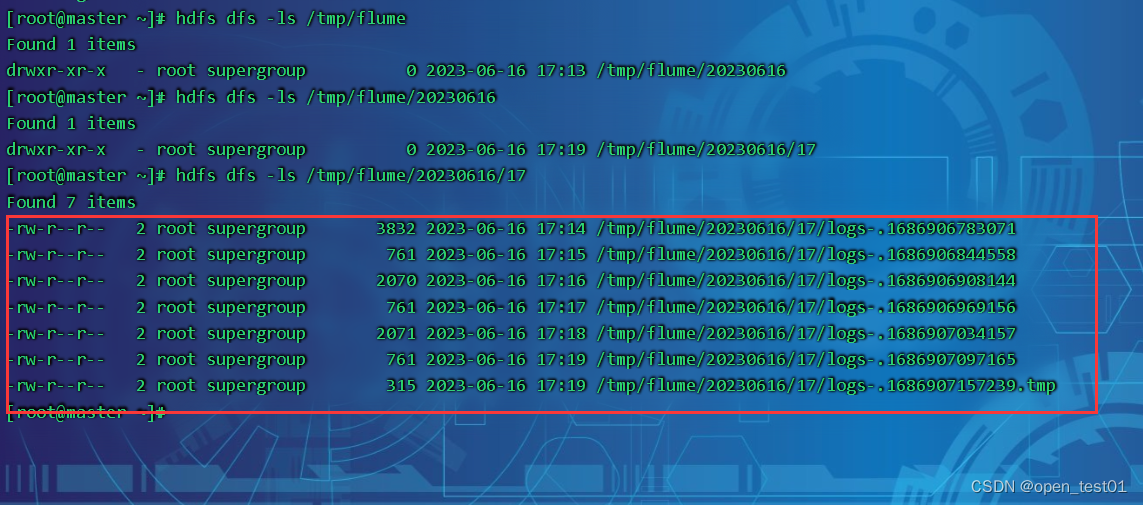

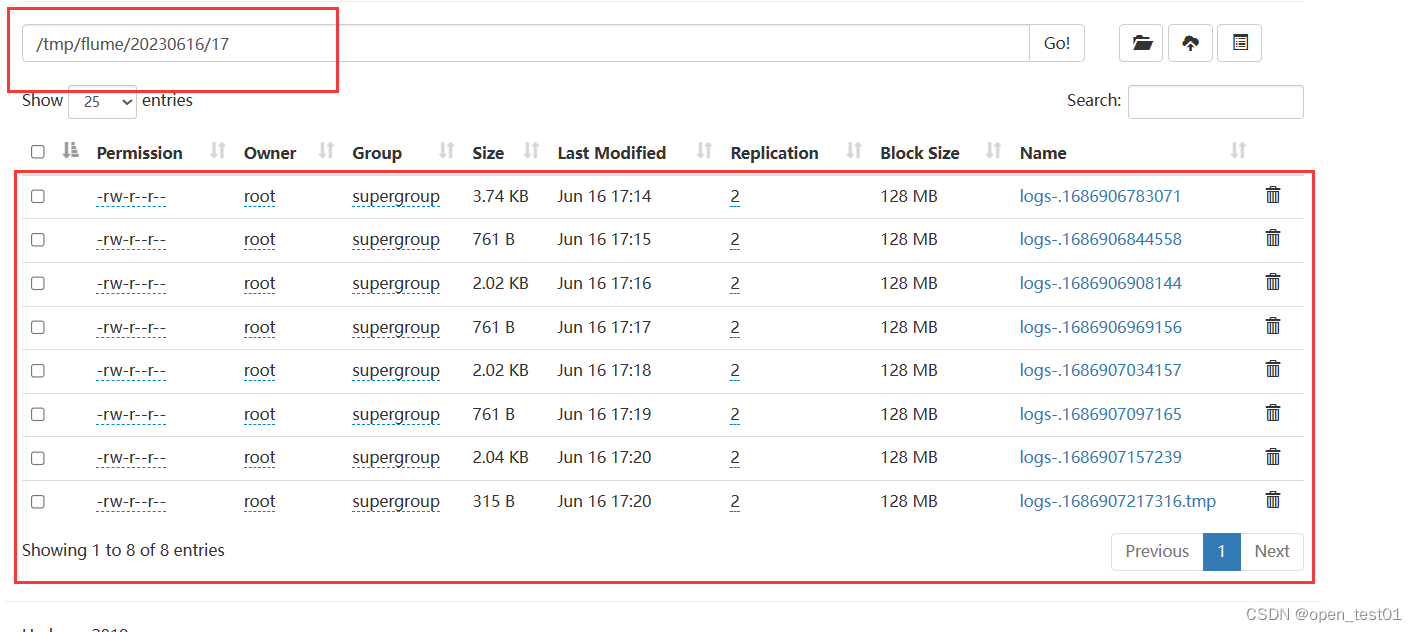

Start Flume to ship Hadoop logs (NameNode or DataNode logs), then check what is generated under the /tmp/flume directory in HDFS.


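Extract the archive

tar -zxvf apache-flume-1.9.0-bin.tar.gz -C /opt/module

Rename the directory (run this inside /opt/module)

mv apache-flume-1.9.0-bin flume

Configure the environment variables

vim /etc/profile

- export FLUME_HOME=/opt/module/flume

- export PATH=$PATH:$FLUME_HOME/bin

Reload the profile

source /etc/profile

Run flume-ng version to confirm that the installation works

flume-ng version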
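Because /etc/profile is read on every login and may be sourced repeatedly, it can help to guard the PATH update so the Flume directory is appended only once and the existing entries are preserved. A minimal sketch, assuming the /opt/module/flume install path used in this post; the helper name `path_append` is hypothetical:

```shell
# Hypothetical helper: append a directory to PATH only if it is not already there.
path_append() {
  case ":$PATH:" in
    *":$1:"*) ;;               # already present, nothing to do
    *) PATH="$PATH:$1" ;;      # append while keeping the existing entries
  esac
}

export FLUME_HOME=/opt/module/flume   # install location assumed from the steps above
path_append "$FLUME_HOME/bin"
export PATH
```

Appending to the existing value matters: assigning only the Flume directory to PATH would hide standard commands such as ls and vim for the rest of the session.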

Copy flume-env.sh.template to flume-env.sh and edit it

In the flume/conf directory:

- cp flume-env.sh.template flume-env.sh

- vi flume-env.sh

Set JAVA_HOME in the file:

export JAVA_HOME=/opt/jdk1.8

Configure Flume to monitor the NameNode log file

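In the flume/conf directory:

cp flume-conf.properties.template flume-conf.properties

With Hadoop's default configuration, the NameNode log file is $HADOOP_HOME/logs/hadoop-[user]-namenode-[hostname].log.

Here $HADOOP_HOME is the Hadoop installation directory, [user] is the account that runs the NameNode (root in this setup, often hdfs on packaged installs), and [hostname] is the name of the host running the NameNode. Safe-mode messages are written to this same file; a stock Hadoop install does not create a separate hadoop-hdfs-namenode-[hostname]-safemode.log.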
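The exec source configured below tails hadoop-root-namenode-master.log, which follows the pattern hadoop-[user]-namenode-[hostname].log. As a small illustration of how that path is assembled, here is a sketch; the function name `namenode_log_path` is hypothetical, and the arguments are the values used in this post:

```shell
# Hypothetical helper: build the default NameNode log path.
# Arguments: Hadoop install dir, user running the daemon, host name.
namenode_log_path() {
  printf '%s/logs/hadoop-%s-namenode-%s.log\n' "$1" "$2" "$3"
}

# Values taken from this post: Hadoop 3.1.3 under /opt/module, user root, host master.
namenode_log_path /opt/module/hadoop-3.1.3 root master
# prints /opt/module/hadoop-3.1.3/logs/hadoop-root-namenode-master.log
```

If the file Flume should tail is not found, listing $HADOOP_HOME/logs on the NameNode host is the quickest way to see the actual user and host name in the file name.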

vim flume-conf.properties

- a1.sources = r1

- a1.sinks = k1

- a1.channels = c1

-

- a1.sources.r1.type = exec

- a1.sources.r1.command = tail -F /opt/module/hadoop-3.1.3/logs/hadoop-root-namenode-master.log

-

- a1.sinks.k1.type = hdfs

- a1.sinks.k1.hdfs.path = hdfs://master:9000/tmp/flume/%Y%m%d

- a1.sinks.k1.hdfs.filePrefix = log-

- a1.sinks.k1.hdfs.fileType = DataStream

- a1.sinks.k1.hdfs.useLocalTimeStamp = true

-

- a1.channels.c1.type = memory

- a1.sources.r1.channels = c1

- a1.sinks.k1.channel = c1
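A Flume agent only runs if its sources, sinks and channels are declared and each source and sink is bound to a channel. The sketch below checks a properties file for those lines before the agent is started. It is a rough sanity check, not a full validator, and the function name `check_agent_conf` is hypothetical:

```shell
# Hypothetical helper: verify that an agent declares its sources, sinks and
# channels, and that some source and some sink are bound to a channel.
check_agent_conf() {
  conf_file=$1
  agent=$2
  status=0
  for pattern in \
    "${agent}\.sources" \
    "${agent}\.sinks" \
    "${agent}\.channels" \
    "${agent}\.sources\.[^.]+\.channels" \
    "${agent}\.sinks\.[^.]+\.channel"
  do
    # Each pattern must appear as a key at the start of a line, followed by "=".
    if ! grep -Eq "^[[:space:]]*${pattern}[[:space:]]*=" "$conf_file"; then
      echo "no line matching: ${pattern}" >&2
      status=1
    fi
  done
  return "$status"
}

# Run the check only if the file from this post exists on this machine.
conf="${FLUME_HOME:-/opt/module/flume}/conf/flume-conf.properties"
if [ -f "$conf" ]; then
  check_agent_conf "$conf" a1 && echo "agent a1 looks complete"
fi
```

Note the asymmetry the check relies on: a source uses the plural key channels, while a sink uses the singular channel, because a sink reads from exactly one channel.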

Start Flume to ship the Hadoop logs

Flume needs the Hadoop client jars on its classpath before it can write to HDFS. Copy the following jars into flume/lib:

- cp $HADOOP_HOME/share/hadoop/common/hadoop-common-3.1.3.jar $FLUME_HOME/lib

- cp $HADOOP_HOME/share/hadoop/common/lib/hadoop-auth-3.1.3.jar $FLUME_HOME/lib

- cp $HADOOP_HOME/share/hadoop/common/lib/commons-configuration2-2.1.1.jar $FLUME_HOME/lib

Copy Hadoop's hdfs-site.xml and core-site.xml into flume/conf:

- cp $HADOOP_HOME/etc/hadoop/core-site.xml $FLUME_HOME/conf

- cp $HADOOP_HOME/etc/hadoop/hdfs-site.xml $FLUME_HOME/conf
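The copy steps above can be wrapped in one function that stops at the first missing file, which makes a wrong HADOOP_HOME or a different Hadoop version obvious right away. A sketch only: the function name `copy_hadoop_deps` is hypothetical, and the jar names are the Hadoop 3.1.3 ones listed in this post.

```shell
# Hypothetical helper: copy the Hadoop client jars and site files into Flume,
# stopping at the first file that does not exist.
# Arguments: Hadoop install dir, Flume install dir.
copy_hadoop_deps() {
  hadoop_home=$1
  flume_home=$2
  for jar in \
    share/hadoop/common/hadoop-common-3.1.3.jar \
    share/hadoop/common/lib/hadoop-auth-3.1.3.jar \
    share/hadoop/common/lib/commons-configuration2-2.1.1.jar
  do
    if [ ! -f "$hadoop_home/$jar" ]; then
      echo "not found: $hadoop_home/$jar" >&2
      return 1
    fi
    cp "$hadoop_home/$jar" "$flume_home/lib/" || return 1
  done
  for xml in core-site.xml hdfs-site.xml; do
    if [ ! -f "$hadoop_home/etc/hadoop/$xml" ]; then
      echo "not found: $hadoop_home/etc/hadoop/$xml" >&2
      return 1
    fi
    cp "$hadoop_home/etc/hadoop/$xml" "$flume_home/conf/" || return 1
  done
}

# Usage, with both variables set as earlier in this post:
#   copy_hadoop_deps "$HADOOP_HOME" "$FLUME_HOME"
```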

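Remove the Guava jar bundled with Flume from flume/lib. It is older than the Guava version Hadoop 3.1.3 depends on, and having the old one on the classpath can make the HDFS sink fail at startup with a NoSuchMethodError:

rm $FLUME_HOME/lib/guava-11.0.2.jar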
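If deleting the jar feels too drastic, renaming it has the same effect and is easy to undo, since Java's classpath wildcard only picks up files ending in .jar. A cautious sketch; the function name `disable_old_guava` is hypothetical, and the jar name is the one from this post:

```shell
# Hypothetical helper: disable Flume's bundled Guava jar by renaming it,
# so the change can be reverted by renaming it back.
# Argument: Flume's lib directory.
disable_old_guava() {
  lib_dir=$1
  jar="$lib_dir/guava-11.0.2.jar"
  if [ -f "$jar" ]; then
    mv "$jar" "$jar.bak"
    echo "renamed $jar to $jar.bak"
  else
    echo "nothing to do: $jar not found"
  fi
}

# Usage, with FLUME_HOME set as earlier in this post:
#   disable_old_guava "$FLUME_HOME/lib"
```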

Start Flume

flume-ng agent --conf $FLUME_HOME/conf --conf-file $FLUME_HOME/conf/flume-conf.properties --name a1 -Dflume.root.logger=DEBUG,console

View the output on HDFS

hdfs dfs -ls /tmp/flume
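Since the sink path ends in %Y%m%d and useLocalTimeStamp is true, each day's events land in a subdirectory named after the agent host's local date. The sketch below computes today's directory and, if the hdfs client is available, lists it and prints the first lines of the collected files. The variable name `today_dir` is just for illustration.

```shell
# Directory the sink writes to today, following hdfs.path = .../tmp/flume/%Y%m%d
today_dir="/tmp/flume/$(date +%Y%m%d)"
echo "expecting output under: $today_dir"

# Only call the HDFS client when it is installed on this machine.
if command -v hdfs >/dev/null 2>&1; then
  hdfs dfs -ls "$today_dir"
  # File names start with "log-" because of hdfs.filePrefix; the glob is
  # quoted so that HDFS, not the local shell, expands it.
  hdfs dfs -cat "$today_dir/log-*" | head -n 20
fi
```

Files still being written carry a .tmp suffix until the sink rolls them, so an apparently unfinished file right after startup is expected.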
