Deploying an Elasticsearch Cluster on Kubernetes

Update (2018-12-13):

Many readers have recently asked about deploying Elasticsearch, and most of the trouble comes from kernel parameter limits in the container's operating system, so here is my latest deployment file, for reference only. It does not create the `ns-elastic` namespace, so create that first (for example with `kubectl create namespace ns-elastic`):

```yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app: elasticsearch
  name: elasticsearch-admin
  namespace: ns-elastic
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: elasticsearch-admin
  labels:
    app: elasticsearch
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: elasticsearch-admin
    namespace: ns-elastic
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: elasticsearch
    role: master
  name: elasticsearch-master
  namespace: ns-elastic
spec:
  replicas: 3
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: elasticsearch
      role: master
  template:
    metadata:
      labels:
        app: elasticsearch
        role: master
    spec:
      serviceAccountName: elasticsearch-admin
      containers:
        - name: elasticsearch-master
          image: 192.168.101.88:5000/elastic/elasticsearch:6.2.3
          # As root: lift the memlock limit and raise vm.max_map_count, then run the entrypoint as the elasticsearch user
          command: ["bash", "-c", "ulimit -l unlimited && sysctl -w vm.max_map_count=262144 && exec su elasticsearch docker-entrypoint.sh"]
          ports:
            - containerPort: 9200
              protocol: TCP
            - containerPort: 9300
              protocol: TCP
          env:
            - name: "cluster.name"
              value: "elasticsearch-cluster"
            - name: "bootstrap.memory_lock"
              value: "true"
            - name: "discovery.zen.ping.unicast.hosts"
              value: "elasticsearch-discovery"
            - name: "discovery.zen.minimum_master_nodes"
              value: "2"
            - name: "discovery.zen.ping_timeout"
              value: "5s"
            - name: "node.master"
              value: "true"
            - name: "node.data"
              value: "false"
            - name: "node.ingest"
              value: "false"
            - name: "ES_JAVA_OPTS"
              value: "-Xms512m -Xmx512m"
          securityContext:
            privileged: true
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: elasticsearch
  name: elasticsearch-discovery
  namespace: ns-elastic
spec:
  ports:
    - port: 9300
      targetPort: 9300
  selector:
    app: elasticsearch
    role: master
---
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-data-service
  namespace: ns-elastic
  labels:
    app: elasticsearch
    role: data
spec:
  ports:
    - port: 9200
      name: outer
    - port: 9300
      name: inner
  clusterIP: None
  selector:
    app: elasticsearch
    role: data
---
kind: StatefulSet
apiVersion: apps/v1
metadata:
  labels:
    app: elasticsearch
    role: data
  name: elasticsearch-data
  namespace: ns-elastic
spec:
  replicas: 2
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: elasticsearch
      role: data   # narrowed so this selector does not also match the master pods
  serviceName: elasticsearch-data-service
  template:
    metadata:
      labels:
        app: elasticsearch
        role: data
    spec:
      serviceAccountName: elasticsearch-admin
      containers:
        - name: elasticsearch-data
          image: 192.168.101.88:5000/elastic/elasticsearch:6.2.3
          # Same as the masters, plus handing the mounted data directory to the elasticsearch user
          command: ["bash", "-c", "ulimit -l unlimited && sysctl -w vm.max_map_count=262144 && chown -R elasticsearch:elasticsearch /usr/share/elasticsearch/data && exec su elasticsearch docker-entrypoint.sh"]
          ports:
            - containerPort: 9200
              protocol: TCP
            - containerPort: 9300
              protocol: TCP
          env:
            - name: "cluster.name"
              value: "elasticsearch-cluster"
            - name: "bootstrap.memory_lock"
              value: "true"
            - name: "discovery.zen.ping.unicast.hosts"
              value: "elasticsearch-discovery"
            - name: "node.master"
              value: "false"
            - name: "node.data"
              value: "true"
            - name: "ES_JAVA_OPTS"
              value: "-Xms1024m -Xmx1024m"
          volumeMounts:
            - name: elasticsearch-data-volume
              mountPath: /usr/share/elasticsearch/data
          securityContext:
            privileged: true
      securityContext:
        fsGroup: 1000
      # volumes:
      #   - name: elasticsearch-data-volume
      #     emptyDir: {}
  volumeClaimTemplates:
    - metadata:
        name: elasticsearch-data-volume
      spec:
        accessModes: ["ReadWriteOnce"]
        storageClassName: "vsphere-volume-sc"
        resources:
          requests:
            storage: 15Gi
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: elasticsearch
  name: elasticsearch-service
  namespace: ns-elastic
spec:
  ports:
    - port: 9200
      targetPort: 9200
  selector:
    app: elasticsearch
---
# extensions/v1beta1 Ingress was removed in Kubernetes 1.22; newer clusters use networking.k8s.io/v1 (different backend syntax)
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  labels:
    app: elasticsearch
  name: elasticsearch-ingress
  namespace: ns-elastic
spec:
  rules:
    - host: elasticsearch.kube.com
      http:
        paths:
          - backend:
              serviceName: elasticsearch-service
              servicePort: 9200
```
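
Both containers above run `sysctl -w vm.max_map_count=262144` because Elasticsearch's bootstrap checks refuse to start a production-mode node when `vm.max_map_count` is below 262144. This kernel setting is not namespaced, so the value that matters is the one on each K8S node. The Python below is a minimal sketch for checking it on a node, assuming Python 3 is available there; the function names are hypothetical.

```python
from pathlib import Path

# Minimum value demanded by the Elasticsearch bootstrap check
REQUIRED_MAX_MAP_COUNT = 262144


def read_max_map_count(path: str = "/proc/sys/vm/max_map_count") -> str:
    """Read the raw kernel setting; on Linux it is exposed under /proc/sys/vm."""
    return Path(path).read_text()


def max_map_count_ok(raw: str) -> bool:
    """True if the raw value meets the Elasticsearch minimum.

    65530, a common kernel default, does not.
    """
    return int(raw.strip()) >= REQUIRED_MAX_MAP_COUNT
```

On a node, `max_map_count_ok(read_max_map_count())` should return `True` once one of the privileged pods has run its `sysctl` command there.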

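If you need to delete old data on a schedule, the following CronJob can serve as a reference. Adjust `hostname` to your own Elasticsearch Service: `elasticsearch.ns-monitor` does not match the `elasticsearch-service` Service in `ns-elastic` defined above.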

```yaml
# batch/v1beta1 CronJob was removed in Kubernetes 1.25; newer clusters use batch/v1
apiVersion: batch/v1beta1
kind: CronJob
metadata:
  name: elasticsearch
  labels:
    app.kubernetes.io/name: elasticsearch
    helm.sh/chart: elasticsearch-0.1.0
    app.kubernetes.io/instance: elasticsearch
    app.kubernetes.io/managed-by: Tiller
spec:
  schedule: "0 1 * * *"        # every day at 01:00
  concurrencyPolicy: Forbid    # never start a run while the previous one is still going
  jobTemplate:
    spec:
      template:
        spec:
          containers:
            - name: hello
              image: "192.168.101.88:5000/busybox:1.29.3"
              imagePullPolicy: IfNotPresent
              # busybox date turns (now - 6 days) into YYYY.MM.DD; nc then sends a raw
              # HTTP DELETE for every index whose name contains that date
              command:
                - "sh"
                - "-c"
                - >
                  history=$(date -D '%s' +"%Y.%m.%d" -d "$(( `date +%s`-60*60*24*6 ))");
                  echo ${history};
                  hostname=elasticsearch.ns-monitor;
                  echo ${hostname};
                  echo -ne "DELETE /*${history}* HTTP/1.1\r\nHost: ${hostname}\r\n\r\n" | nc -v -i 1 ${hostname} 9200
          restartPolicy: OnFailure
```
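
Each run deletes only the indices carrying one specific date, the day six days earlier, so if a day's run never succeeds, that day's indices stay behind. To preview which pattern and request a given run would send, here is a minimal Python sketch of the same logic, assuming date-suffixed index names in `YYYY.MM.DD` form (the helper names are hypothetical):

```python
from datetime import date, timedelta


def expired_index_pattern(today: date, keep_days: int = 6) -> str:
    """Wildcard matching indices dated exactly keep_days before today."""
    cutoff = today - timedelta(days=keep_days)
    return "*" + cutoff.strftime("%Y.%m.%d") + "*"


def delete_request(pattern: str, host: str) -> str:
    """Raw HTTP/1.1 request text, equivalent to what the CronJob pipes into nc."""
    return f"DELETE /{pattern} HTTP/1.1\r\nHost: {host}\r\n\r\n"
```

For example, `expired_index_pattern(date(2018, 12, 13))` gives `*2018.12.07*`. Keeping more history only means changing `keep_days` here, or the `60*60*24*6` term in the shell command.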

------------------------------------------------Original post below------------------------------------------------

Deploying an Elasticsearch Cluster on Kubernetes

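In this post I deploy an ES cluster on the K8S cluster built in my previous article. Around the middle of last year I studied ES for a while in order to set up ELK, and built a simple cluster in virtual machines. Setting it up again on K8S, I found I had forgotten many of the concepts, so I dug out my old notes and reviewed them; writing things down beats relying on memory. Now, down to business.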

1. Environment

1.1 Systems

| IP | Hostname | Role | OS |
|---|---|---|---|
| 192.168.119.160 | k8s-master | Master | CentOS 7 |
| 192.168.119.161 | k8s-node-1 | Node | CentOS 7 |
| 192.168.119.162 | k8s-node-2 | Node | CentOS 7 |
| 192.168.119.163 | k8s-node-3 | Node | CentOS 7 |

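Compared with my previous setup I added one more node: with only two nodes there was not enough memory for the ES cluster, and the logs kept showing OpenJDK GC / kill messages.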

1.2 Images

Images can be downloaded from https://www.docker.elastic.co; a cloud-drive download link is also given at the end of this post.

2. Deployment

2.1 Import the image

Load the image into your private registry, or into Docker on every K8S node.

2.2 Write a Dockerfile to modify the image

```shell
[root@k8s-master dockerfile]# pwd
/root/dockerfile
[root@k8s-master dockerfile]# ll
-rw-r--r--. 1 root root 156 Mar  5 16:28 Dockerfile
-rw-r--r--. 1 root root  98 Mar  2 15:26 run.sh

### Dockerfile contents ###
[root@k8s-master dockerfile]# vi Dockerfile
FROM docker.elastic.co/elasticsearch/elasticsearch:6.2.2
MAINTAINER chenlei leichen.china@gmail.com
COPY run.sh /
RUN chmod 775 /run.sh
CMD ["/run.sh"]

### Startup script contents ###
[root@k8s-master dockerfile]# vi run.sh
#!/bin/bash
# Remove the memlock limit
ulimit -l unlimited
# Switch to the elasticsearch user and hand over to the image's original entrypoint
exec su elasticsearch /usr/local/bin/docker-entrypoint.sh

### Build the image ###
[root@k8s-master dockerfile]# docker build --tag docker.elastic.co/elasticsearch/elasticsearch:6.2.2-1 .
```

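The image has to be modified because K8S offers no direct way to change a container's resource limits (ulimit). The example on the official site deploys with plain Docker, which can raise user resource limits through its `ulimits` setting (`--ulimit` on the command line). On K8S, every approach I tried hit a wall, and the topic has been debated at length in the community.

With the approach above, the image's startup command becomes our own run.sh, which sets memlock inside the script and then calls the original startup script.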
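
Whether the memlock change actually reached the Elasticsearch process can be checked from outside: the Elasticsearch documentation suggests `GET _nodes?filter_path=**.mlockall`, where each node reports `process.mlockall` as `true` only if its memory was locked. Below is a minimal Python sketch, assuming the HTTP port 9200 is reachable from wherever you run it; the base URL and function names are placeholders, not part of the original setup.

```python
import json
from urllib.request import urlopen


def fetch_mlockall(base_url: str = "http://localhost:9200") -> dict:
    """Query the nodes info API, keeping only each node's mlockall flag."""
    with urlopen(f"{base_url}/_nodes?filter_path=**.mlockall", timeout=10) as resp:
        return json.load(resp)


def unlocked_nodes(nodes_response: dict) -> list:
    """Return the IDs of nodes whose process.mlockall is not true."""
    nodes = nodes_response.get("nodes", {})
    return sorted(
        node_id
        for node_id, info in nodes.items()
        if not info.get("process", {}).get("mlockall", False)
    )
```

An empty list from `unlocked_nodes(fetch_mlockall(...))` means every node locked its memory; pass whatever address exposes port 9200 in your cluster, such as a NodePort on one of the K8S nodes.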

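2.3 ES cluster layout

I chose Elasticsearch 6.2.2, the latest release at the time. Cluster nodes can play three roles, master, data and ingest, and in this layout every node takes at least one of them. Each role is switched on or off by its own setting, described below.

A node marked as master is a master-eligible (candidate) node: it qualifies to become master, but the actual master is elected by vote at runtime. In a healthy cluster there is exactly one master at any moment; having more than one is called split brain, and it leads to inconsistent data.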

| ES node (Pod) | Master-eligible | Data | Ingest |
|---|---|---|---|
| Node 1 | Y | N | N |
| Node 2 | Y | N | N |
| Node 3 | Y | N | N |
| Node 4 | N | Y | Y |
| Node 5 | N | Y | Y |

2.4 Write the YAML file and deploy the cluster

Everything is in a single file, elasticsearch-cluster.yml:

```yaml
---
apiVersion: v1
kind: Namespace
metadata:
  name: ns-elasticsearch
  labels:
    name: ns-elasticsearch
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    elastic-app: elasticsearch
  name: elasticsearch-admin
  namespace: ns-elasticsearch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: elasticsearch-admin
  labels:
    elastic-app: elasticsearch
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: elasticsearch-admin
    namespace: ns-elasticsearch
---
# apps/v1beta2 was removed in Kubernetes 1.16; use apps/v1 on newer clusters
kind: Deployment
apiVersion: apps/v1beta2
metadata:
  labels:
    elastic-app: elasticsearch
    role: master
  name: elasticsearch-master
  namespace: ns-elasticsearch
spec:
  replicas: 3
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      elastic-app: elasticsearch
      role: master
  template:
    metadata:
      labels:
        elastic-app: elasticsearch
        role: master
    spec:
      containers:
        - name: elasticsearch-master
          image: docker.elastic.co/elasticsearch/elasticsearch:6.2.2-1
          lifecycle:
            postStart:
              exec:
                # sysctl works here (privileged container); ulimit only affects this
                # short-lived shell, not the already running Elasticsearch process
                command: ["/bin/bash", "-c", "sysctl -w vm.max_map_count=262144; ulimit -l unlimited;"]
          ports:
            - containerPort: 9200
              protocol: TCP
            - containerPort: 9300
              protocol: TCP
          env:
            - name: "cluster.name"
              value: "elasticsearch-cluster"
            - name: "bootstrap.memory_lock"
              value: "true"
            - name: "discovery.zen.ping.unicast.hosts"
              value: "elasticsearch-discovery"
            - name: "discovery.zen.minimum_master_nodes"
              value: "2"
            - name: "discovery.zen.ping_timeout"
              value: "5s"
            - name: "node.master"
              value: "true"
            - name: "node.data"
              value: "false"
            - name: "node.ingest"
              value: "false"
            - name: "ES_JAVA_OPTS"
              value: "-Xms256m -Xmx256m"
          securityContext:
            privileged: true
      serviceAccountName: elasticsearch-admin
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    elastic-app: elasticsearch
  name: elasticsearch-discovery
  namespace: ns-elasticsearch
spec:
  ports:
    - port: 9300
      targetPort: 9300
  selector:
    elastic-app: elasticsearch
    role: master
---
kind: Deployment
apiVersion: apps/v1beta2
metadata:
  labels:
    elastic-app: elasticsearch
    role: data
  name: elasticsearch-data
  namespace: ns-elasticsearch
spec:
  replicas: 2
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      elastic-app: elasticsearch
      role: data   # narrowed so this selector does not also match the master pods
  template:
    metadata:
      labels:
        elastic-app: elasticsearch
        role: data
    spec:
      containers:
        - name: elasticsearch-data
          image: docker.elastic.co/elasticsearch/elasticsearch:6.2.2-1
          lifecycle:
            postStart:
              exec:
                command: ["/bin/bash", "-c", "sysctl -w vm.max_map_count=262144; ulimit -l unlimited;"]
          ports:
            - containerPort: 9200
              protocol: TCP
            - containerPort: 9300
              protocol: TCP
          volumeMounts:
            - name: esdata
              mountPath: /usr/share/elasticsearch/data
          env:
            - name: "cluster.name"
              value: "elasticsearch-cluster"
            - name: "bootstrap.memory_lock"
              value: "true"
            - name: "discovery.zen.ping.unicast.hosts"
              value: "elasticsearch-discovery"
            - name: "node.master"
              value: "false"
            - name: "node.data"
              value: "true"
            - name: "ES_JAVA_OPTS"
              value: "-Xms256m -Xmx256m"
          securityContext:
            privileged: true
      volumes:
        - name: esdata
          emptyDir: {}   # data is lost when the pod is removed from its node
      serviceAccountName: elasticsearch-admin
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    elastic-app: elasticsearch-service
  name: elasticsearch-service
  namespace: ns-elasticsearch
spec:
  ports:
    - port: 9200
      targetPort: 9200
  selector:
    elastic-app: elasticsearch
  type: NodePort
```

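- Roles are controlled by the environment variables `node.master`, `node.data` and `node.ingest`; all three default to true.

- Kernel parameters are set in postStart, but this does not work for memlock. Some people online use an init container instead; I have not tried that yet.

- `discovery.zen.minimum_master_nodes` guards against split brain: it is the number of master-eligible nodes that must be reachable before a master can be elected and the cluster is usable.

- `discovery.zen.ping_timeout` is raised (here to 5s) to reduce split brain caused by network latency.

- Nodes discover and join the cluster via unicast, with the elasticsearch-discovery Service as the discovery entry point.

- Whether the serviceAccount is actually needed is still to be verified!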
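
The value 2 for `discovery.zen.minimum_master_nodes` follows the majority-quorum rule from the Elasticsearch 6.x documentation: (master-eligible nodes / 2) + 1, with integer division. A one-function sketch (the name is just illustrative):

```python
def minimum_master_nodes(master_eligible: int) -> int:
    """Majority quorum for Zen discovery: floor(n / 2) + 1."""
    if master_eligible < 1:
        raise ValueError("need at least one master-eligible node")
    return master_eligible // 2 + 1
```

With the three master candidates planned above the result is 2, so the cluster keeps electing a master after losing one candidate. Two candidates would also need a quorum of 2 and therefore tolerate no failure at all.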

```shell
### Deploy the ES cluster on K8S ###
[root@k8s-master elasticsearch-cluster]# kubectl apply -f elasticsearch-cluster.yml
```

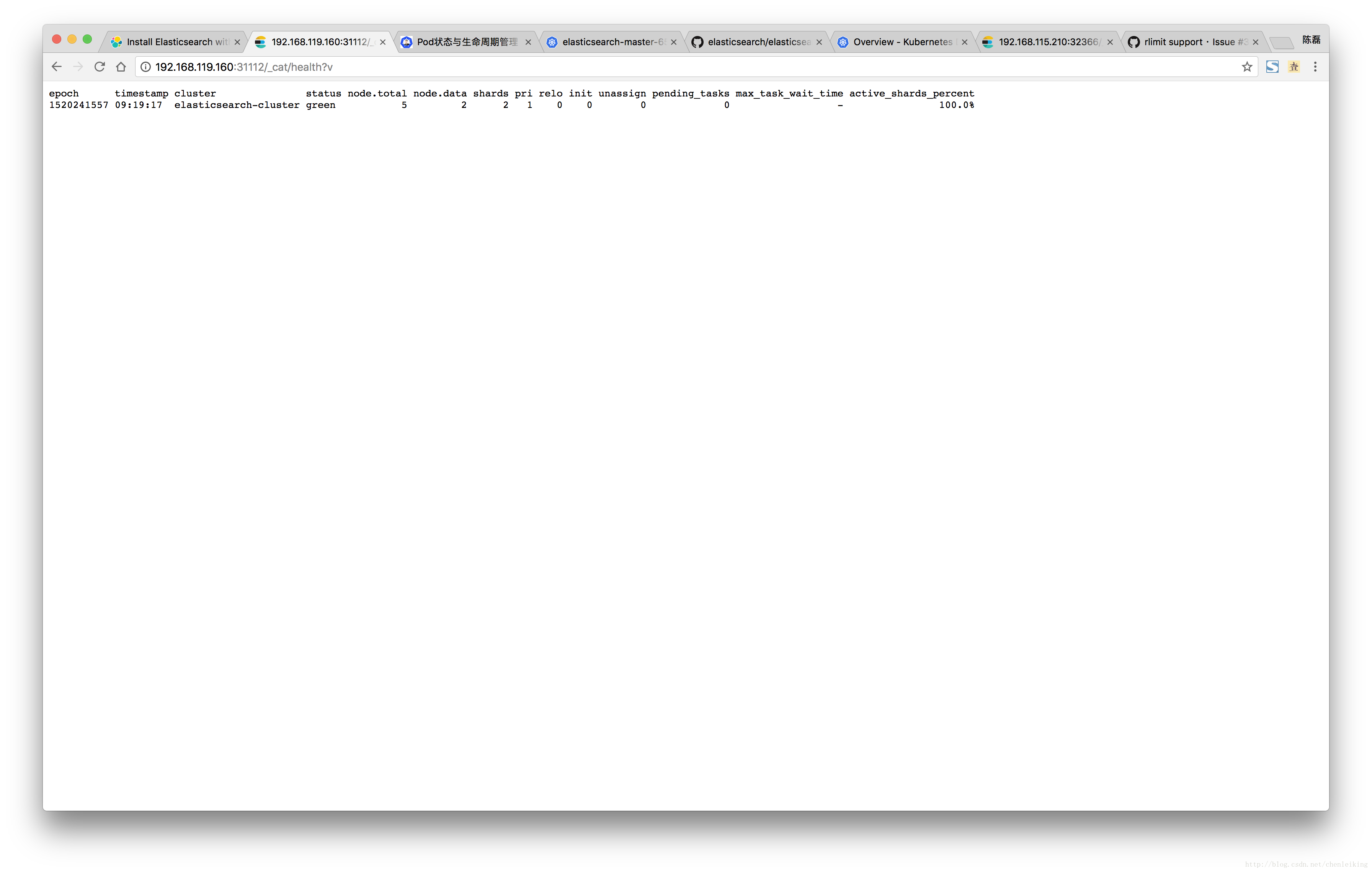

2.5 Check and test the cluster

```shell
[root@k8s-master elasticsearch-cluster]# kubectl get pods --all-namespaces
NAMESPACE          NAME                                    READY     STATUS    RESTARTS   AGE
kube-system        etcd-k8s-master                         1/1       Running   7          5d
kube-system        kube-apiserver-k8s-master               1/1       Running   7          5d
kube-system        kube-controller-manager-k8s-master      1/1       Running   4          5d
kube-system        kube-dns-6f4fd4bdf-mwddx                3/3       Running   10         5d
kube-system        kube-flannel-ds-nwxcl                   1/1       Running   5          5d
kube-system        kube-flannel-ds-pdxhs                   1/1       Running   5          5d
kube-system        kube-flannel-ds-qgbmf                   1/1       Running   3          5h
kube-system        kube-flannel-ds-qrkwd                   1/1       Running   3          5d
kube-system        kube-proxy-7lsqw                        1/1       Running   5          5d
kube-system        kube-proxy-bq6kg                        1/1       Running   0          5h
kube-system        kube-proxy-f9kps                        1/1       Running   5          5d
kube-system        kube-proxy-g47nx                        1/1       Running   3          5d
kube-system        kube-scheduler-k8s-master               1/1       Running   4          5d
kube-system        kubernetes-dashboard-845747bdd4-gcj47   1/1       Running   3          5d
ns-elasticsearch   elasticsearch-master-65c8cc584c-jcsq4   1/1       Running   0          4h
ns-elasticsearch   elasticsearch-master-65c8cc584c-kwg69   1/1       Running   0          4h
ns-elasticsearch   elasticsearch-master-65c8cc584c-rdcbz   1/1       Running   0          4h
ns-elasticsearch   elasticsearch-node-6f9d5fbd6c-6jktr     1/1       Running   0          4h
ns-elasticsearch   elasticsearch-node-6f9d5fbd6c-x7qk8     1/1       Running   0          4h
[root@k8s-master elasticsearch-cluster]# kubectl get svc --all-namespaces
NAMESPACE          NAME                      TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
default            kubernetes                ClusterIP   10.96.0.1        <none>        443/TCP          5d
kube-system        kube-dns                  ClusterIP   10.96.0.10       <none>        53/UDP,53/TCP    5d
kube-system        kubernetes-dashboard      NodePort    10.111.158.221   <none>        443:32286/TCP    5d
ns-elasticsearch   elasticsearch-discovery   ClusterIP   10.97.150.85     <none>        9300/TCP         1m
ns-elasticsearch   elasticsearch-service     NodePort    10.101.40.47     <none>        9200:31112/TCP   1m
```
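
Besides listing pods, you can ask Elasticsearch itself whether all five nodes have joined. A minimal sketch, assuming the NodePort shown above (31112) is reachable on a node IP such as `http://192.168.119.160:31112`; the URL and helper names are illustrative assumptions. It reads `GET _cluster/health` and checks `number_of_nodes` and `status`.

```python
import json
from urllib.request import urlopen


def fetch_health(base_url: str) -> dict:
    """Return the parsed body of GET _cluster/health."""
    with urlopen(f"{base_url}/_cluster/health", timeout=10) as resp:
        return json.load(resp)


def cluster_ready(health: dict, expected_nodes: int) -> bool:
    """True if all expected nodes joined and no primary shard is unassigned.

    'yellow' still means every primary is allocated (only replicas are missing);
    'red' means at least one primary is not.
    """
    return (
        health.get("number_of_nodes", 0) >= expected_nodes
        and health.get("status") in ("green", "yellow")
    )
```

For the layout in section 2.3 you would call `cluster_ready(fetch_health("http://192.168.119.160:31112"), expected_nodes=5)`.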

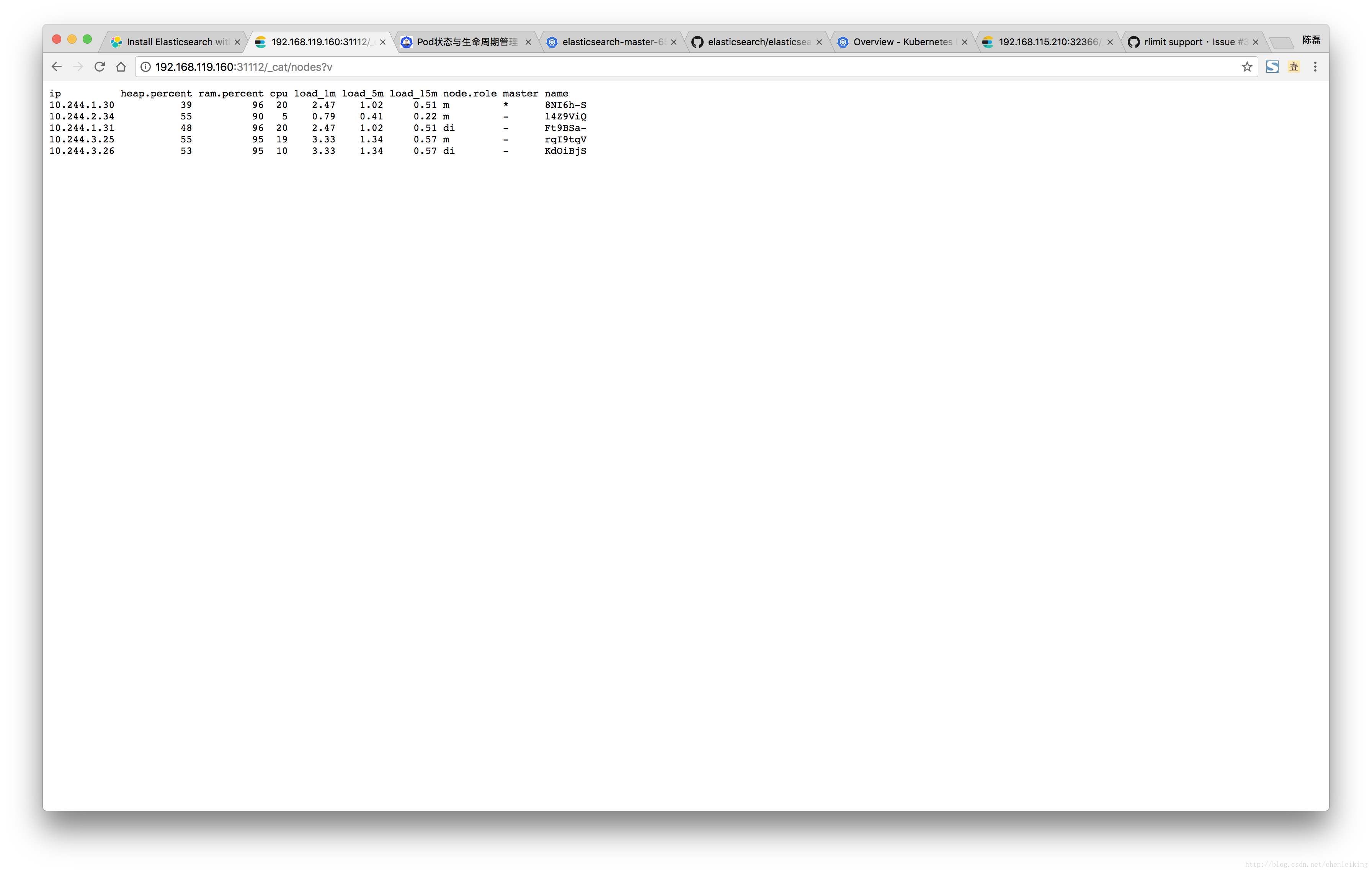

In the screenshot above, the node.role column uses the letters m, d and i, which stand for master, data and ingest respectively.
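
The letters are the node's enabled roles concatenated in the fixed order master, data, ingest, and a node with none of them (coordinating-only) shows `-`. The sketch below reproduces that abbreviation from the `node.master`, `node.data` and `node.ingest` environment values used in the YAML, treating an unset role as true like Elasticsearch 6.x does. It is an illustration of the rule, not Elasticsearch code, and `role_letters` is a hypothetical name.

```python
def role_letters(env: dict) -> str:
    """Abbreviate node roles the way _cat/nodes shows them (m, d, i; '-' if none)."""
    order = [("node.master", "m"), ("node.data", "d"), ("node.ingest", "i")]
    # Each role setting defaults to true when it is not set
    letters = "".join(
        letter for key, letter in order if env.get(key, "true").lower() == "true"
    )
    return letters or "-"
```

With the master pods' settings (`node.master=true`, `node.data=false`, `node.ingest=false`) this yields `m`, and with the data pods' settings (ingest left unset) it yields `di`, matching the plan in section 2.3.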

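3. Notes

- If the K8S nodes run short of memory, some ES nodes may fail with errors

- Cluster discovery goes through a K8S Service, and `discovery.zen.ping.unicast.hosts` does not need to list every node: a new node only has to reach any one live node in the cluster to find all the others

- `ulimit -l unlimited` has no effect in postStart, because the hook runs in its own shell and the limit does not carry over to the Elasticsearch process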

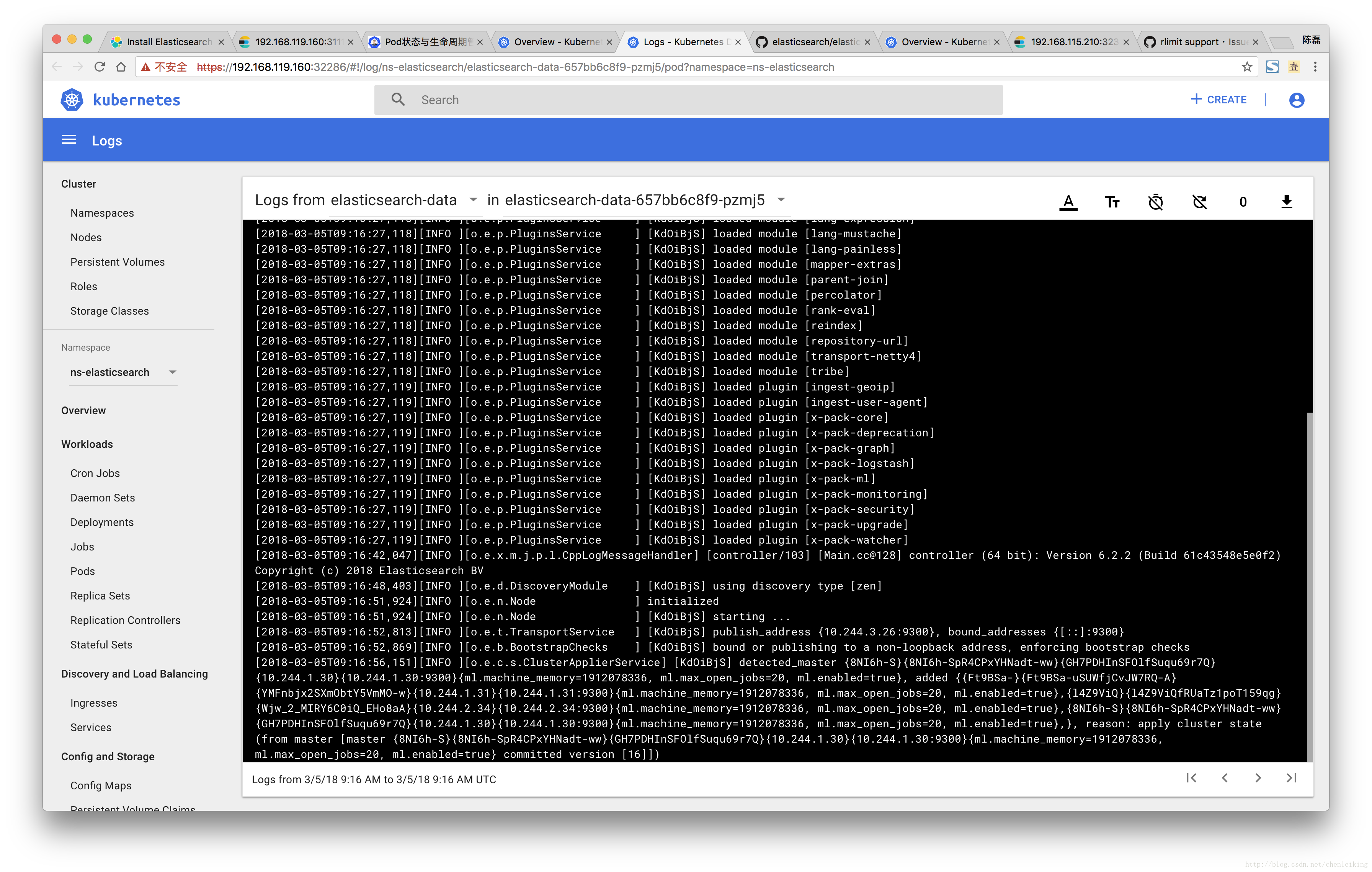

- The dashboard makes it easy to view logs, and `kubectl logs` works as well; reading the logs is the fastest way to pin down problems

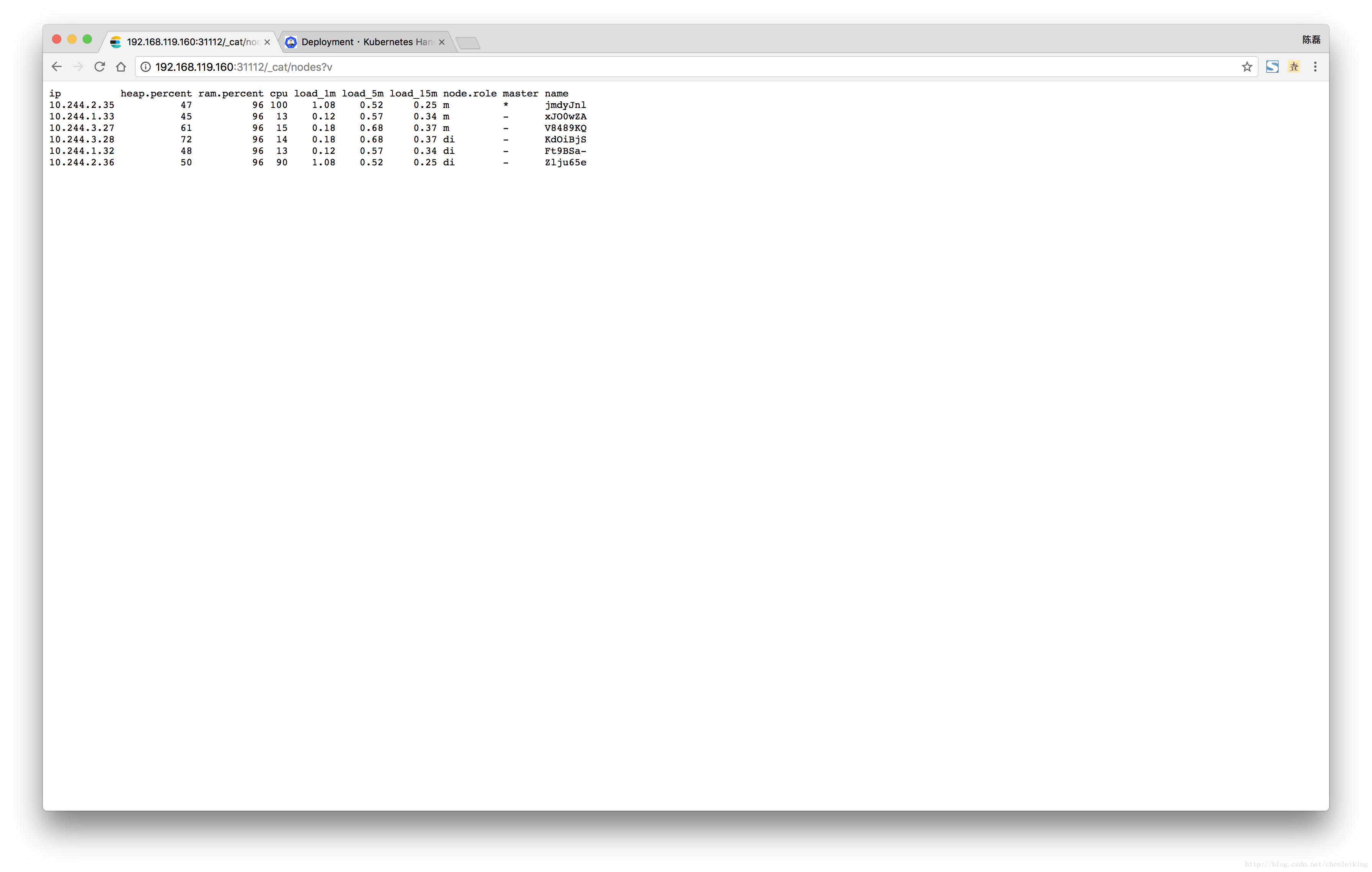

- Try adding a data node

```shell
[root@k8s-master ~]# kubectl scale deployment elasticsearch-data --replicas 3 -n ns-elasticsearch
[root@k8s-master ~]# kubectl get pods --all-namespaces
NAMESPACE          NAME                                    READY     STATUS    RESTARTS   AGE
kube-system        etcd-k8s-master                         1/1       Running   8          5d
kube-system        kube-apiserver-k8s-master               1/1       Running   8          5d
kube-system        kube-controller-manager-k8s-master      1/1       Running   5          5d
kube-system        kube-dns-6f4fd4bdf-mwddx                3/3       Running   13         5d
kube-system        kube-flannel-ds-nwxcl                   1/1       Running   6          5d
kube-system        kube-flannel-ds-pdxhs                   1/1       Running   6          5d
kube-system        kube-flannel-ds-qgbmf                   1/1       Running   4          21h
kube-system        kube-flannel-ds-qrkwd                   1/1       Running   4          5d
kube-system        kube-proxy-7lsqw                        1/1       Running   6          5d
kube-system        kube-proxy-bq6kg                        1/1       Running   1          21h
kube-system        kube-proxy-f9kps                        1/1       Running   6          5d
kube-system        kube-proxy-g47nx                        1/1       Running   4          5d
kube-system        kube-scheduler-k8s-master               1/1       Running   5          5d
kube-system        kubernetes-dashboard-845747bdd4-gcj47   1/1       Running   4          5d
ns-elasticsearch   elasticsearch-data-657bb6c8f9-gpv89     1/1       Running   0          9s
ns-elasticsearch   elasticsearch-data-657bb6c8f9-jbrkc     1/1       Running   1          15h
ns-elasticsearch   elasticsearch-data-657bb6c8f9-pzmj5     1/1       Running   1          15h
ns-elasticsearch   elasticsearch-master-976859f58-qltf5    1/1       Running   1          15h
ns-elasticsearch   elasticsearch-master-976859f58-ts65d    1/1       Running   1          15h
ns-elasticsearch   elasticsearch-master-976859f58-zwqgv    1/1       Running   1          15h
```

It may take about a minute before the new node shows up as above.
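
Rather than refreshing by hand while the new data node joins, you could poll `GET _cat/nodes?h=node.role`, which prints one role string per node, until the expected number of data nodes is reported. A sketch under the same access assumption as before (a reachable NodePort); the URL, timeout values and function names are illustrative assumptions.

```python
import time
from urllib.request import urlopen


def count_data_nodes(cat_output: str) -> int:
    """Count lines of `_cat/nodes?h=node.role` output whose roles include 'd'."""
    return sum(1 for line in cat_output.splitlines() if "d" in line.strip())


def wait_for_data_nodes(base_url: str, expected: int,
                        timeout_s: float = 120.0, interval_s: float = 5.0) -> bool:
    """Poll until at least `expected` data nodes are in the cluster, or give up."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urlopen(f"{base_url}/_cat/nodes?h=node.role", timeout=10) as resp:
                if count_data_nodes(resp.read().decode()) >= expected:
                    return True
        except OSError:
            pass  # Service or node not reachable yet; retry after the interval
        time.sleep(interval_s)
    return False
```

After scaling to three replicas, `wait_for_data_nodes("http://192.168.119.160:31112", expected=3)` would return `True` once the third data node has joined.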

4. Downloads

Baidu cloud drive: https://pan.baidu.com/s/1PN0ku0BNYWZUI_5moXS9cA

Extract:

```shell
[root@k8s-master elasticsearch-cluster]# tar -zxvf elasticsearch-6.2.2.tar.gz
elasticsearch-6.2.2.tar
```

Import:

```shell
[root@k8s-master elasticsearch-cluster]# docker load -i elasticsearch-6.2.2.tar
```

5. References

- http://blog.csdn.net/zwgdft/article/details/54585644

- http://blog.csdn.net/a19860903/article/details/72467996

- https://www.elastic.co/guide/en/elasticsearch/reference/6.2/docker.html

- https://github.com/cesargomezvela/elasticsearch

- https://github.com/kubernetes/kubernetes/issues/3595