Robot Skill Learning: Building Your Own Dataset and Training with robomimic

Author: 盐析白兔 | 2024-04-26 13:01:44

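Overview

If you want to train on your own scenario, the importance of the dataset needs no elaboration. This post walks through building your own dataset with robomimic and robosuite, and also covers the train and run workflow, so that the whole pipeline forms a closed loop.

Building Your Own Dataset

Collecting data

Data can be collected with the script collect_human_demonstrations.py. During collection you need to define the env information yourself. In practice, I ran into the following problems:

- The robot cannot be controlled precisely enough to complete the grasp

- In certain poses, the robot's motion drifts

Format conversion

The script for this step is convert_robosuite.py. Run it as follows: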

$ python conversion/convert_robosuite.py --dataset /path/to/demo.hdf5

Generating observations for training

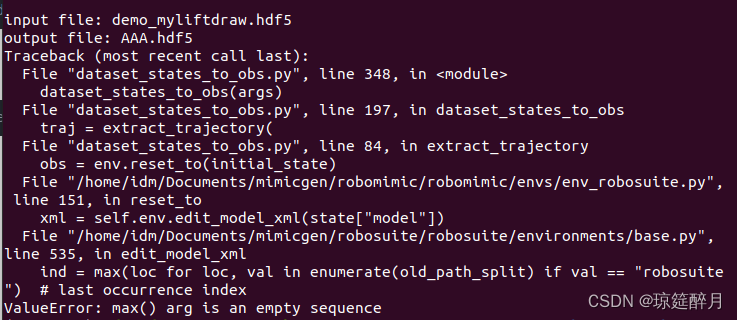

python dataset_states_to_obs.py --dataset demo_myliftdraw.hdf5 --output AAA.hdf5

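Bug notes

Bug1

convert_robosuite.py also has a small bug. When the dataset contains very few demos, for example just 1, the conversion fails because there is too little data to perform the train-validation split. The script is reproduced below.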

    """
    Helper script to convert a dataset collected using robosuite into an hdf5 compatible with
    this repository. Takes a dataset path corresponding to the demo.hdf5 file containing the
    demonstrations. It modifies the dataset in-place. By default, the script also creates a
    90-10 train-validation split.

    For more information on collecting datasets with robosuite, see the code link and
    documentation link below.

    Code: https://github.com/ARISE-Initiative/robosuite/blob/offline_study/robosuite/scripts/collect_human_demonstrations.py

    Documentation: https://robosuite.ai/docs/algorithms/demonstrations.html

    Example usage:

        python convert_robosuite.py --dataset /path/to/your/demo.hdf5
    """

    import h5py
    import json
    import argparse

    import robomimic.envs.env_base as EB
    from robomimic.scripts.split_train_val import split_train_val_from_hdf5


    if __name__ == "__main__":
        parser = argparse.ArgumentParser()
        parser.add_argument(
            "--dataset",
            type=str,
            help="path to input hdf5 dataset",
        )
        args = parser.parse_args()

        f = h5py.File(args.dataset, "a")  # edit mode

        # import ipdb; ipdb.set_trace()

        # store env meta
        env_name = f["data"].attrs["env"]
        env_info = json.loads(f["data"].attrs["env_info"])
        env_meta = dict(
            type=EB.EnvType.ROBOSUITE_TYPE,
            env_name=env_name,
            env_version=f["data"].attrs["repository_version"],
            env_kwargs=env_info,
        )
        if "env_args" in f["data"].attrs:
            del f["data"].attrs["env_args"]
        f["data"].attrs["env_args"] = json.dumps(env_meta, indent=4)

        print("====== Stored env meta ======")
        print(f["data"].attrs["env_args"])

        # store metadata about number of samples
        total_samples = 0
        for ep in f["data"]:

            # ensure model-xml is in per-episode metadata
            assert "model_file" in f["data/{}".format(ep)].attrs

            # add "num_samples" into per-episode metadata
            if "num_samples" in f["data/{}".format(ep)].attrs:
                del f["data/{}".format(ep)].attrs["num_samples"]
            n_sample = f["data/{}/actions".format(ep)].shape[0]
            f["data/{}".format(ep)].attrs["num_samples"] = n_sample
            total_samples += n_sample

        # add total samples to global metadata
        if "total" in f["data"].attrs:
            del f["data"].attrs["total"]
        f["data"].attrs["total"] = total_samples

        f.close()

        # create 90-10 train-validation split in the dataset
        split_train_val_from_hdf5(hdf5_path=args.dataset, val_ratio=0.1)

The fix is on the last line of the script: just set val_ratio=1.
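To see why a single demo trips the default 90-10 split, here is a minimal sketch. It assumes the number of validation demos is obtained by truncating val_ratio * num_demos to an integer. That is an assumption about the split logic rather than a copy of robomimic's code, and the helper name count_split is hypothetical.

```python
from typing import Tuple


def count_split(num_demos: int, val_ratio: float) -> Tuple[int, int]:
    """Return (num_train, num_val) for a truncating split.

    Assumption (not taken from robomimic): num_val = int(val_ratio * num_demos).
    """
    num_val = int(val_ratio * num_demos)
    return num_demos - num_val, num_val


if __name__ == "__main__":
    # Small datasets end up with zero validation demos at val_ratio=0.1.
    for n in (1, 5, 10):
        train, val = count_split(n, val_ratio=0.1)
        print(f"{n:>2} demos -> train={train}, val={val}")

    # With val_ratio=1.0 the single demo lands in the validation split.
    print(count_split(1, val_ratio=1.0))
```

Under this assumption, any dataset with fewer than 10 demos gets an empty validation split at val_ratio=0.1, which is consistent with the failure described above.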

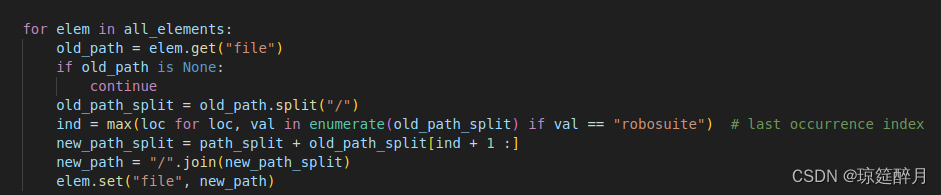

Bug2

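Description: following exactly the same procedure, data generated with my own newly created env class raises an error during obs generation, whereas the official env works without problems.

Solution: do not build your own model library in an arbitrary location, because assets are looked up along a fixed path. This was a real pitfall. After half a day of debugging, the cause turned out to be a path problem: the path handling relies on the fixed keyword robosuite, and this affects the states-to-obs step.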
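One way to catch this early is to scan a model XML for asset files stored outside a robosuite directory tree. The sketch below assumes, following the note above, that the failure comes from mesh or texture file paths lacking a path component named robosuite. The function name assets_missing_keyword and the example XML are hypothetical, and only the Python standard library is used.

```python
import xml.etree.ElementTree as ET
from typing import List


def assets_missing_keyword(model_xml: str, keyword: str = "robosuite") -> List[str]:
    """List mesh/texture file paths with no path component equal to `keyword`."""
    root = ET.fromstring(model_xml)
    asset = root.find("asset")
    if asset is None:
        return []
    missing = []
    for elem in asset.findall("mesh") + asset.findall("texture"):
        path = elem.get("file")
        # Elements without a file attribute (e.g. builtin textures) are skipped.
        if path is not None and keyword not in path.split("/"):
            missing.append(path)
    return missing


if __name__ == "__main__":
    # Made-up model XML: one asset inside a robosuite tree, one outside it.
    example = """
    <mujoco>
      <asset>
        <mesh name="ok" file="/home/user/robosuite/robosuite/models/assets/a.stl"/>
        <mesh name="bad" file="/home/user/my_models/assets/b.stl"/>
      </asset>
    </mujoco>
    """
    print(assets_missing_keyword(example))
```

Any path this reports is a candidate for moving under the robosuite model directory before generating observations.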

Train && Test

Training uses the following script:

$ python robomimic/robomimic/scripts/train.py --config bc_rnn.json

Testing uses the following script:

$ python robomimic/robomimic/scripts/run_trained_agent.py --agent /path/to/model.pth --n_rollouts 50 --horizon 400 --seed 0 --video_path /path/to/output.mp4 --camera_names agentview robot0_eye_in_hand
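When evaluating several checkpoints, it can help to assemble this command programmatically. The sketch below rebuilds the test command from its parameters, using only the script path and flags shown above. The helper name run_agent_command is hypothetical, and the subprocess call is left commented out so the example does not launch anything.

```python
import shlex
from typing import List, Sequence


def run_agent_command(
    agent: str,
    video_path: str,
    n_rollouts: int = 50,
    horizon: int = 400,
    seed: int = 0,
    camera_names: Sequence[str] = ("agentview", "robot0_eye_in_hand"),
) -> List[str]:
    """Build the argument list for run_trained_agent.py as used in this post."""
    return [
        "python", "robomimic/robomimic/scripts/run_trained_agent.py",
        "--agent", agent,
        "--n_rollouts", str(n_rollouts),
        "--horizon", str(horizon),
        "--seed", str(seed),
        "--video_path", video_path,
        "--camera_names", *camera_names,
    ]


if __name__ == "__main__":
    cmd = run_agent_command("/path/to/model.pth", "/path/to/output.mp4")
    print(shlex.join(cmd))
    # To actually run the rollouts:
    # import subprocess
    # subprocess.run(cmd, check=True)
```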

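Training requires configuring the algorithm through the JSON file passed to the script. Below is an example config, generated by mimicgen. It contains algorithm parameters such as "algo_name": "bc". If you are not yet deeply familiar with these settings, I recommend changing only the dataset path: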

    {
        "algo_name": "bc",
        "experiment": {
            "name": "source_draw_low_dim",
            "validate": false,
            "logging": {
                "terminal_output_to_txt": true,
                "log_tb": true,
                "log_wandb": false,
                "wandb_proj_name": "debug"
            },
            "save": {
                "enabled": true,
                "every_n_seconds": null,
                "every_n_epochs": 50,
                "epochs": [],
                "on_best_validation": false,
                "on_best_rollout_return": false,
                "on_best_rollout_success_rate": true
            },
            "epoch_every_n_steps": 100,
            "validation_epoch_every_n_steps": 10,
            "env": null,
            "additional_envs": null,
            "render": false,
            "render_video": true,
            "keep_all_videos": false,
            "video_skip": 5,
            "rollout": {
                "enabled": true,
                "n": 50,
                "horizon": 400,
                "rate": 50,
                "warmstart": 0,
                "terminate_on_success": true
            }
        },
        "train": {
            "data": "/home/idm/Documents/mimicgen/robosuite/robosuite/scripts/generate_dataset/tmp/draw_data/demo_obs.hdf5",
            "output_dir": "/home/idm/Documents/mimicgen/robosuite/robosuite/scripts/generate_dataset/tmp/draw_data/trained_models",
            "num_data_workers": 0,
            "hdf5_cache_mode": "all",
            "hdf5_use_swmr": true,
            "hdf5_load_next_obs": false,
            "hdf5_normalize_obs": false,
            "hdf5_filter_key": null,
            "hdf5_validation_filter_key": null,
            "seq_length": 10,
            "pad_seq_length": true,
            "frame_stack": 1,
            "pad_frame_stack": true,
            "dataset_keys": ["actions", "rewards", "dones"],
            "goal_mode": null,
            "cuda": true,
            "batch_size": 100,
            "num_epochs": 2000,
            "seed": 1
        },
        "algo": {
            "optim_params": {
                "policy": {
                    "optimizer_type": "adam",
                    "learning_rate": {
                        "initial": 0.001,
                        "decay_factor": 0.1,
                        "epoch_schedule": [],
                        "scheduler_type": "multistep"
                    },
                    "regularization": {
                        "L2": 0.0
                    }
                }
            },
            "loss": {
                "l2_weight": 1.0,
                "l1_weight": 0.0,
                "cos_weight": 0.0
            },
            "actor_layer_dims": [],
            "gaussian": {
                "enabled": false,
                "fixed_std": false,
                "init_std": 0.1,
                "min_std": 0.01,
                "std_activation": "softplus",
                "low_noise_eval": true
            },
            "gmm": {
                "enabled": true,
                "num_modes": 5,
                "min_std": 0.0001,
                "std_activation": "softplus",
                "low_noise_eval": true
            },
            "vae": {
                "enabled": false,
                "latent_dim": 14,
                "latent_clip": null,
                "kl_weight": 1.0,
                "decoder": {
                    "is_conditioned": true,
                    "reconstruction_sum_across_elements": false
                },
                "prior": {
                    "learn": false,
                    "is_conditioned": false,
                    "use_gmm": false,
                    "gmm_num_modes": 10,
                    "gmm_learn_weights": false,
                    "use_categorical": false,
                    "categorical_dim": 10,
                    "categorical_gumbel_softmax_hard": false,
                    "categorical_init_temp": 1.0,
                    "categorical_temp_anneal_step": 0.001,
                    "categorical_min_temp": 0.3
                },
                "encoder_layer_dims": [300, 400],
                "decoder_layer_dims": [300, 400],
                "prior_layer_dims": [300, 400]
            },
            "rnn": {
                "enabled": true,
                "horizon": 10,
                "hidden_dim": 400,
                "rnn_type": "LSTM",
                "num_layers": 2,
                "open_loop": false,
                "kwargs": {
                    "bidirectional": false
                }
            },
            "transformer": {
                "enabled": false,
                "context_length": 10,
                "embed_dim": 512,
                "num_layers": 6,
                "num_heads": 8,
                "emb_dropout": 0.1,
                "attn_dropout": 0.1,
                "block_output_dropout": 0.1,
                "sinusoidal_embedding": false,
                "activation": "gelu",
                "supervise_all_steps": false,
                "nn_parameter_for_timesteps": true
            }
        },
        "observation": {
            "modalities": {
                "obs": {
                    "low_dim": [
                        "robot0_eef_pos",
                        "robot0_eef_quat",
                        "robot0_gripper_qpos",
                        "object"
                    ],
                    "rgb": [],
                    "depth": [],
                    "scan": []
                },
                "goal": {
                    "low_dim": [],
                    "rgb": [],
                    "depth": [],
                    "scan": []
                }
            },
            "encoder": {
                "low_dim": {
                    "core_class": null,
                    "core_kwargs": {},
                    "obs_randomizer_class": null,
                    "obs_randomizer_kwargs": {}
                },
                "rgb": {
                    "core_class": "VisualCore",
                    "core_kwargs": {},
                    "obs_randomizer_class": null,
                    "obs_randomizer_kwargs": {}
                },
                "depth": {
                    "core_class": "VisualCore",
                    "core_kwargs": {},
                    "obs_randomizer_class": null,
                    "obs_randomizer_kwargs": {}
                },
                "scan": {
                    "core_class": "ScanCore",
                    "core_kwargs": {},
                    "obs_randomizer_class": null,
                    "obs_randomizer_kwargs": {}
                }
            }
        },
        "meta": {
            "hp_base_config_file": null,
            "hp_keys": [],
            "hp_values": []
        }
    }
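Since the advice here is to change only the dataset path, that edit can be scripted instead of done by hand. This is a minimal sketch using only the standard json module. It assumes the config keeps its paths under train.data and train.output_dir, as in the example config. The helper name set_dataset_paths and the placeholder paths are hypothetical.

```python
import json
import tempfile
from pathlib import Path


def set_dataset_paths(config_path: str, dataset: str, output_dir: str) -> dict:
    """Rewrite train.data and train.output_dir in a JSON config, in place."""
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    config["train"]["data"] = dataset
    config["train"]["output_dir"] = output_dir
    path.write_text(json.dumps(config, indent=4), encoding="utf-8")
    return config


if __name__ == "__main__":
    # Demo on a throwaway config file; the paths are placeholders.
    with tempfile.TemporaryDirectory() as tmp:
        cfg_file = Path(tmp) / "bc_rnn.json"
        stub = {"algo_name": "bc", "train": {"data": None, "output_dir": None, "seed": 1}}
        cfg_file.write_text(json.dumps(stub), encoding="utf-8")

        updated = set_dataset_paths(
            str(cfg_file), "/path/to/demo_obs.hdf5", "/path/to/trained_models"
        )
        print(updated["train"])
```

All other keys are written back unchanged, so the algorithm settings stay exactly as generated.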

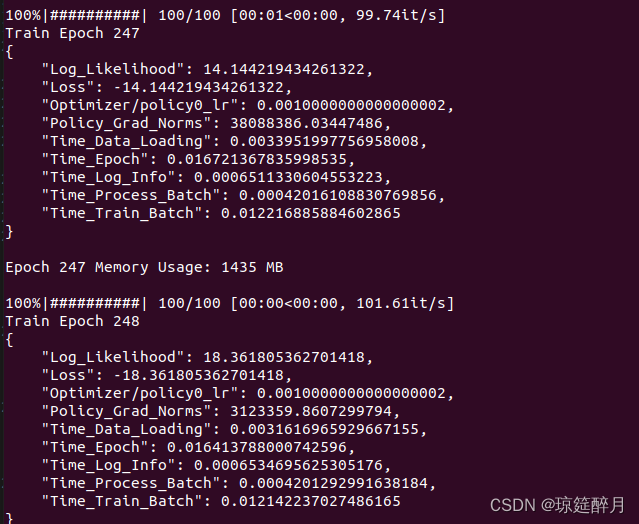

部分训练截图如下,可以看出,因数据太少,导致模型不收敛:
