
[k8s management: cluster log management with ELK]

Author: 2023面试高手 | 2024-03-06 09:31:31

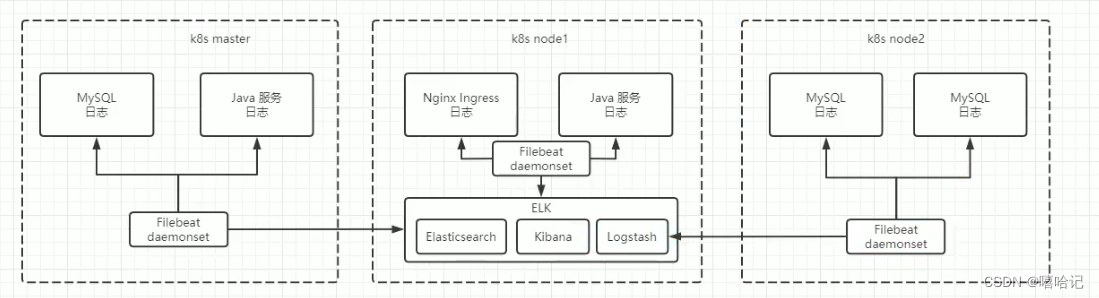

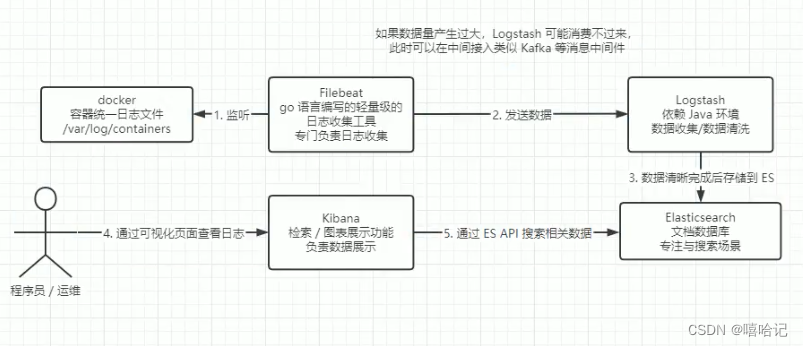

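1. ELKF logging architecture

- In a k8s cluster deployed with Docker, the logs of all containers are available under a single directory: /var/log/containers/

- 1. Filebeat is a lightweight log shipper; its main job is collecting logs.

- 2. Logstash can collect logs as well as clean data, but it is rarely used for collection: it depends on a Java runtime and consumes a lot of resources when the data volume is large, so Logstash is generally used for data cleaning.

- 3. Logstash hands the cleaned data to Elasticsearch for storage.

- 4. Users view logs through Kibana's web UI. Kibana is mainly responsible for presenting the data, similar to Grafana.

- 5. The data shown in Kibana is retrieved by searching through the Elasticsearch API.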
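To make the collection path a little more concrete: the kubelet names each file under /var/log/containers/ as `<pod>_<namespace>_<container>-<container id>.log`. The following is a minimal sketch that splits such a name with plain shell parameter expansion. The function name `parse_container_log_name` and the shortened container id in the usage line are illustrative assumptions, not part of the original setup.

```shell
# Split a /var/log/containers file name into namespace, pod and container.
# Assumed layout: <pod>_<namespace>_<container>-<container id>.log
parse_container_log_name() {
  base=${1##*/}            # strip the directory part
  base=${base%.log}        # strip the .log extension
  pod=${base%%_*}          # text before the first underscore
  rest=${base#*_}
  ns=${rest%%_*}           # text between the first and second underscore
  rest=${rest#*_}
  container=${rest%-*}     # drop the trailing -<container id>
  printf '%s %s %s\n' "$ns" "$pod" "$container"
}
```

For example, `parse_container_log_name /var/log/containers/kibana-86ddf46d47-9q79f_kube-logging_kibana-0123abcd.log` prints the namespace, pod name and container name separated by spaces.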

2. ELK deployment

2.1 Create the configuration files

2.1.1 Create the namespace configuration

apiVersion: v1
kind: Namespace
metadata:
  name: kube-logging


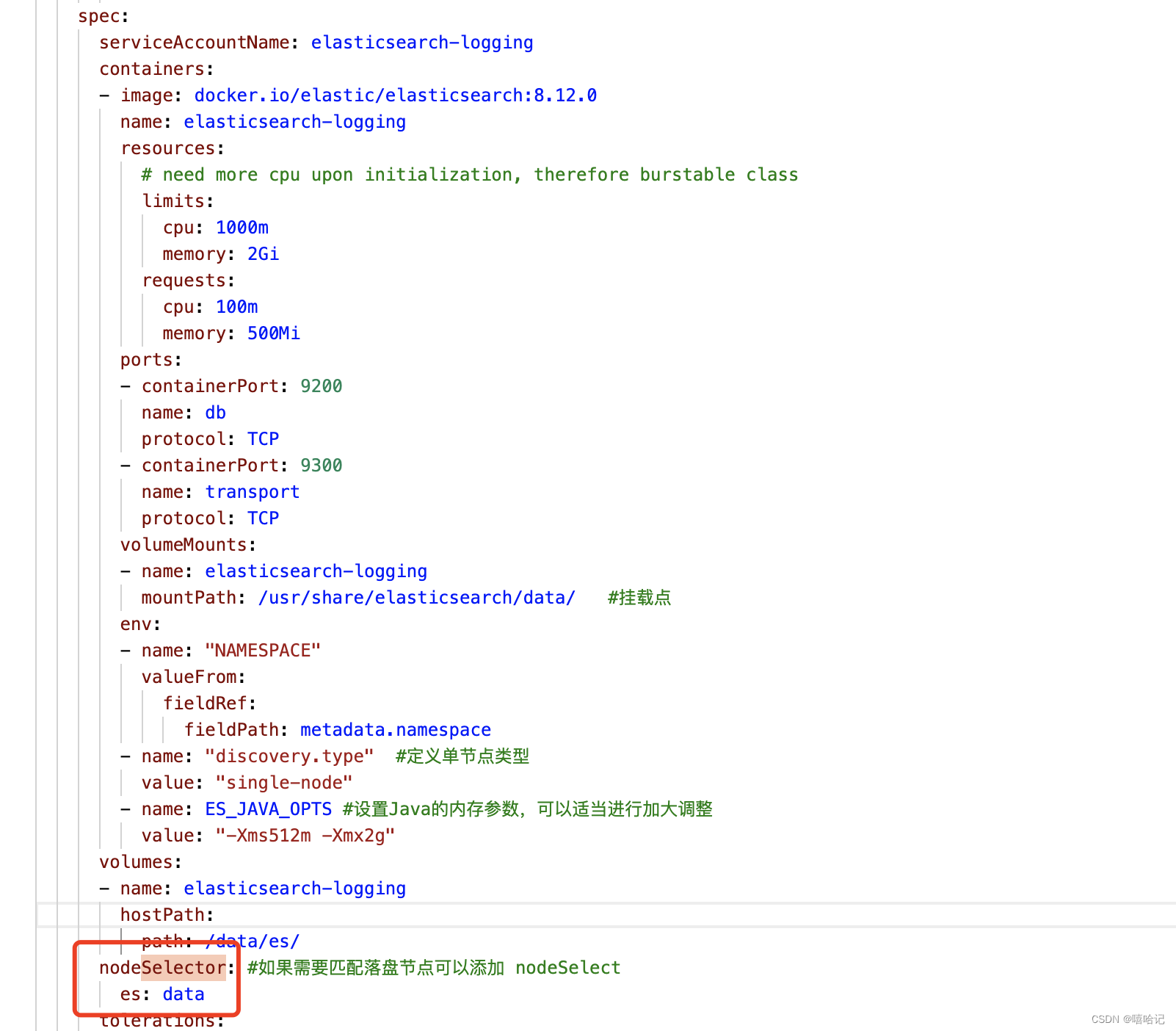

2.1.2 Create the es configuration

---
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-logging
  namespace: kube-logging
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "Elasticsearch"
spec:
  ports:
    - port: 9200
      protocol: TCP
      targetPort: db
  selector:
    k8s-app: elasticsearch-logging
---
# RBAC authn and authz
apiVersion: v1
kind: ServiceAccount
metadata:
  name: elasticsearch-logging
  namespace: kube-logging
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: elasticsearch-logging
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
  - apiGroups:
      - ""
    resources:
      - "services"
      - "namespaces"
      - "endpoints"
    verbs:
      - "get"
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: kube-logging
  name: elasticsearch-logging
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
subjects:
  - kind: ServiceAccount
    name: elasticsearch-logging
    namespace: kube-logging
    apiGroup: ""
roleRef:
  kind: ClusterRole
  name: elasticsearch-logging
  apiGroup: ""
---
# Elasticsearch deployment itself
apiVersion: apps/v1
kind: StatefulSet # create the Pod with a StatefulSet
metadata:
  name: elasticsearch-logging # Pods created by a StatefulSet get ordered, numbered names
  namespace: kube-logging # namespace
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    srv: srv-elasticsearch
spec:
  serviceName: elasticsearch-logging # governing Service; with a headless Service each Pod gets a stable DNS name of the form <pod>.<service>.<namespace>.svc.cluster.local
  replicas: 1 # replica count, single node
  selector:
    matchLabels:
      k8s-app: elasticsearch-logging # must match the labels in the pod template
  template:
    metadata:
      labels:
        k8s-app: elasticsearch-logging
        kubernetes.io/cluster-service: "true"
    spec:
      serviceAccountName: elasticsearch-logging
      containers:
        - image: docker.io/library/elasticsearch:7.9.3
          name: elasticsearch-logging
          resources:
            # need more cpu upon initialization, therefore burstable class
            limits:
              cpu: 1000m
              memory: 2Gi
            requests:
              cpu: 100m
              memory: 500Mi
          ports:
            - containerPort: 9200
              name: db
              protocol: TCP
            - containerPort: 9300
              name: transport
              protocol: TCP
          volumeMounts:
            - name: elasticsearch-logging
              mountPath: /usr/share/elasticsearch/data/ # mount point
          env:
            - name: "NAMESPACE"
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: "discovery.type" # single-node discovery
              value: "single-node"
            - name: ES_JAVA_OPTS # JVM heap settings; increase as appropriate
              value: "-Xms512m -Xmx2g"
      volumes:
        - name: elasticsearch-logging
          hostPath:
            path: /data/es/
      nodeSelector: # add a nodeSelector if the data must land on a specific node
        es: data
      tolerations:
        - effect: NoSchedule
          operator: Exists
      # Elasticsearch requires vm.max_map_count to be at least 262144.
      # If your OS already sets up this number to a higher value, feel free
      # to remove this init container.
      initContainers: # run before the main container starts
        - name: elasticsearch-logging-init
          image: alpine:3.6
          command: ["/sbin/sysctl", "-w", "vm.max_map_count=262144"] # raise the mmap count limit; too low a value can cause out-of-memory errors
          securityContext: # applies only to this container and does not affect volumes
            privileged: true # run as a privileged container
        - name: increase-fd-ulimit
          image: busybox
          imagePullPolicy: IfNotPresent
          command: ["sh", "-c", "ulimit -n 65536"] # raise the maximum number of file descriptors
          securityContext:
            privileged: true
        - name: elasticsearch-volume-init # initialise the es data directory with 777 permissions
          image: alpine:3.6
          command:
            - chmod
            - -R
            - "777"
            - /usr/share/elasticsearch/data/
          volumeMounts:
            - name: elasticsearch-logging
              mountPath: /usr/share/elasticsearch/data/
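The manifest comment notes that Elasticsearch needs vm.max_map_count to be at least 262144 and that the init container can be dropped if the node already meets it. A small sketch of that check follows. The helper names `map_count_ok` and `map_count_fix` are hypothetical, and reading the value from /proc assumes a Linux node.

```shell
# Minimum vm.max_map_count that Elasticsearch expects (from the manifest comment).
REQUIRED_MAP_COUNT=262144

# Succeeds when the given value meets the minimum.
map_count_ok() {
  [ "$1" -ge "$REQUIRED_MAP_COUNT" ]
}

# Prints the sysctl command to run when the value is too low, nothing otherwise.
map_count_fix() {
  if ! map_count_ok "$1"; then
    printf 'sysctl -w vm.max_map_count=%s\n' "$REQUIRED_MAP_COUNT"
  fi
}

# On a node, the current value can be read from /proc, for example:
#   map_count_fix "$(cat /proc/sys/vm/max_map_count)"
```

If the function prints nothing on every node that may host es, the `elasticsearch-logging-init` container is probably not needed there.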


2.1.3 Create the logstash configuration

---
apiVersion: v1
kind: Service
metadata:
  name: logstash
  namespace: kube-logging
spec:
  ports:
    - port: 5044
      targetPort: beats
  selector:
    type: logstash
  clusterIP: None
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: logstash
  namespace: kube-logging
spec:
  selector:
    matchLabels:
      type: logstash
  template:
    metadata:
      labels:
        type: logstash
        srv: srv-logstash
    spec:
      containers:
        - image: docker.io/kubeimages/logstash:7.9.3 # this image supports both arm64 and amd64
          name: logstash
          ports:
            - containerPort: 5044
              name: beats
          command:
            - logstash
            - '-f'
            - '/etc/logstash_c/logstash.conf'
          env:
            - name: "XPACK_MONITORING_ELASTICSEARCH_HOSTS"
              value: "http://elasticsearch-logging:9200"
          volumeMounts:
            - name: config-volume
              mountPath: /etc/logstash_c/
            - name: config-yml-volume
              mountPath: /usr/share/logstash/config/
            - name: timezone
              mountPath: /etc/localtime
          resources: # always set resource limits on logstash so it cannot starve other workloads
            limits:
              cpu: 1000m
              memory: 2048Mi
            requests:
              cpu: 512m
              memory: 512Mi
      volumes:
        - name: config-volume
          configMap:
            name: logstash-conf
            items:
              - key: logstash.conf
                path: logstash.conf
        - name: timezone
          hostPath:
            path: /etc/localtime
        - name: config-yml-volume
          configMap:
            name: logstash-yml
            items:
              - key: logstash.yml
                path: logstash.yml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: logstash-conf
  namespace: kube-logging
  labels:
    type: logstash
data:
  logstash.conf: |-
    input {
      beats {
        port => 5044
      }
    }
    filter {
      # Handle ingress logs
      if [kubernetes][container][name] == "nginx-ingress-controller" {
        json {
          source => "message"
          target => "ingress_log"
        }
        if [ingress_log][requesttime] {
          mutate {
            convert => ["[ingress_log][requesttime]", "float"]
          }
        }
        if [ingress_log][upstremtime] {
          mutate {
            convert => ["[ingress_log][upstremtime]", "float"]
          }
        }
        if [ingress_log][status] {
          mutate {
            convert => ["[ingress_log][status]", "float"]
          }
        }
        if [ingress_log][httphost] and [ingress_log][uri] {
          mutate {
            add_field => {"[ingress_log][entry]" => "%{[ingress_log][httphost]}%{[ingress_log][uri]}"}
          }
          mutate {
            split => ["[ingress_log][entry]","/"]
          }
          if [ingress_log][entry][1] {
            mutate {
              add_field => {"[ingress_log][entrypoint]" => "%{[ingress_log][entry][0]}/%{[ingress_log][entry][1]}"}
              remove_field => "[ingress_log][entry]"
            }
          } else {
            mutate {
              add_field => {"[ingress_log][entrypoint]" => "%{[ingress_log][entry][0]}/"}
              remove_field => "[ingress_log][entry]"
            }
          }
        }
      }
      # Handle business-service logs whose container name starts with srv
      if [kubernetes][container][name] =~ /^srv/ {
        json {
          source => "message"
          target => "tmp"
        }
        if [kubernetes][namespace] == "kube-logging" {
          drop{}
        }
        if [tmp][level] {
          mutate{
            add_field => {"[applog][level]" => "%{[tmp][level]}"}
          }
          if [applog][level] == "debug"{
            drop{}
          }
        }
        if [tmp][msg] {
          mutate {
            add_field => {"[applog][msg]" => "%{[tmp][msg]}"}
          }
        }
        if [tmp][func] {
          mutate {
            add_field => {"[applog][func]" => "%{[tmp][func]}"}
          }
        }
        if [tmp][cost]{
          if "ms" in [tmp][cost] {
            mutate {
              split => ["[tmp][cost]","m"]
              add_field => {"[applog][cost]" => "%{[tmp][cost][0]}"}
              convert => ["[applog][cost]", "float"]
            }
          } else {
            mutate {
              add_field => {"[applog][cost]" => "%{[tmp][cost]}"}
            }
          }
        }
        if [tmp][method] {
          mutate {
            add_field => {"[applog][method]" => "%{[tmp][method]}"}
          }
        }
        if [tmp][request_url] {
          mutate {
            add_field => {"[applog][request_url]" => "%{[tmp][request_url]}"}
          }
        }
        if [tmp][meta._id] {
          mutate {
            add_field => {"[applog][traceId]" => "%{[tmp][meta._id]}"}
          }
        }
        if [tmp][project] {
          mutate {
            add_field => {"[applog][project]" => "%{[tmp][project]}"}
          }
        }
        if [tmp][time] {
          mutate {
            add_field => {"[applog][time]" => "%{[tmp][time]}"}
          }
        }
        if [tmp][status] {
          mutate {
            add_field => {"[applog][status]" => "%{[tmp][status]}"}
            convert => ["[applog][status]", "float"]
          }
        }
      }
      mutate {
        rename => ["kubernetes", "k8s"]
        remove_field => "beat"
        remove_field => "tmp"
        remove_field => "[k8s][labels][app]"
      }
    }
    output {
      elasticsearch {
        hosts => ["http://elasticsearch-logging:9200"]
        codec => json
        index => "logstash-%{+YYYY.MM.dd}" # a new index named logstash-<date> is created every day
      }
    }
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: logstash-yml
  namespace: kube-logging
  labels:
    type: logstash
data:
  logstash.yml: |-
    http.host: "0.0.0.0"
    xpack.monitoring.elasticsearch.hosts: http://elasticsearch-logging:9200
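The ingress branch of the filter above concatenates httphost and uri, splits the result on "/", and keeps the host plus the first path segment as `entrypoint`. The sketch below imitates that derivation in plain shell so the intended result is easier to see. It is an approximation of the Logstash behaviour, not a replacement for it, and the function name `ingress_entrypoint` and the example host are assumptions.

```shell
# Approximate the entrypoint field built by the ingress filter:
# host + "/" + first path segment, or host + "/" when the path is empty.
ingress_entrypoint() {
  entry="$1$2"                 # httphost followed by uri
  host=${entry%%/*}            # everything before the first slash
  rest=${entry#*/}             # everything after the first slash
  if [ "$rest" = "$entry" ]; then
    rest=""                    # there was no slash at all
  fi
  first=${rest%%/*}            # first path segment, may be empty
  if [ -n "$first" ]; then
    printf '%s/%s\n' "$host" "$first"
  else
    printf '%s/\n' "$host"
  fi
}
```

Called as `ingress_entrypoint example.com /api/v1/users`, this groups all requests under the same first path segment, which is what makes the field useful for per-entrypoint dashboards in Kibana.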


2.1.4 Create the filebeat configuration

---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: kube-logging
  labels:
    k8s-app: filebeat
data:
  filebeat.yml: |-
    filebeat.inputs:
    - type: container
      enabled: true
      paths:
        - /var/log/containers/*.log # the log directory mounted into the filebeat pod
      processors:
        - add_kubernetes_metadata: # add k8s fields for the later data cleaning
            host: ${NODE_NAME}
            matchers:
              - logs_path:
                  logs_path: "/var/log/containers/"
    #output.kafka: # if the log volume is high and logs arrive late in es, kafka can be placed between filebeat and logstash
    #  hosts: ["kafka-log-01:9092", "kafka-log-02:9092", "kafka-log-03:9092"]
    #  topic: 'topic-test-log'
    #  version: 2.0.0
    output.logstash: # logstash is deployed for data cleaning, so filebeat pushes its data to logstash
      hosts: ["logstash:5044"]
      enabled: true
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: kube-logging
  labels:
    k8s-app: filebeat
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    k8s-app: filebeat
rules:
  - apiGroups: [""] # "" indicates the core API group
    resources:
      - namespaces
      - pods
    verbs: ["get", "watch", "list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
  - kind: ServiceAccount
    name: filebeat
    namespace: kube-logging
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: kube-logging
  labels:
    k8s-app: filebeat
spec:
  selector:
    matchLabels:
      k8s-app: filebeat
  template:
    metadata:
      labels:
        k8s-app: filebeat
    spec:
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      containers:
        - name: filebeat
          image: docker.io/kubeimages/filebeat:7.9.3 # this image supports both arm64 and amd64
          args: [
            "-c", "/etc/filebeat.yml",
            "-e","-httpprof","0.0.0.0:6060"
          ]
          #ports:
          #  - containerPort: 6060
          #    hostPort: 6068
          env:
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: ELASTICSEARCH_HOST
              value: elasticsearch-logging
            - name: ELASTICSEARCH_PORT
              value: "9200"
          securityContext:
            runAsUser: 0
            # If using Red Hat OpenShift uncomment this:
            #privileged: true
          resources:
            limits:
              memory: 1000Mi
              cpu: 1000m
            requests:
              memory: 100Mi
              cpu: 100m
          volumeMounts:
            - name: config # the filebeat configuration file
              mountPath: /etc/filebeat.yml
              readOnly: true
              subPath: filebeat.yml
            - name: data # persist filebeat data on the host
              mountPath: /usr/share/filebeat/data
            - name: varlibdockercontainers # mounts the host's source log directory into the filebeat container; if docker or containerd still writes logs to the standard location, mountPath can be /var/lib
              mountPath: /var/lib
              readOnly: true
            - name: varlog # mounts the /var/log/pods and /var/log/containers symlinks from the host into the filebeat container
              mountPath: /var/log/
              readOnly: true
            - name: timezone
              mountPath: /etc/localtime
      volumes:
        - name: config
          configMap:
            defaultMode: 0600
            name: filebeat-config
        - name: varlibdockercontainers
          hostPath: # if docker or containerd still writes logs to the standard location, path can be /var/lib
            path: /var/lib
        - name: varlog
          hostPath:
            path: /var/log/
        # data folder stores a registry of read status for all files, so we don't send everything again on a Filebeat pod restart
        - name: inputs
          configMap:
            defaultMode: 0600
            name: filebeat-inputs
        - name: data
          hostPath:
            path: /data/filebeat-data
            type: DirectoryOrCreate
        - name: timezone
          hostPath:
            path: /etc/localtime
      tolerations: # tolerations so the pod can be scheduled onto every node
        - effect: NoExecute
          key: dedicated
          operator: Equal
          value: gpu
        - effect: NoSchedule
          operator: Exists


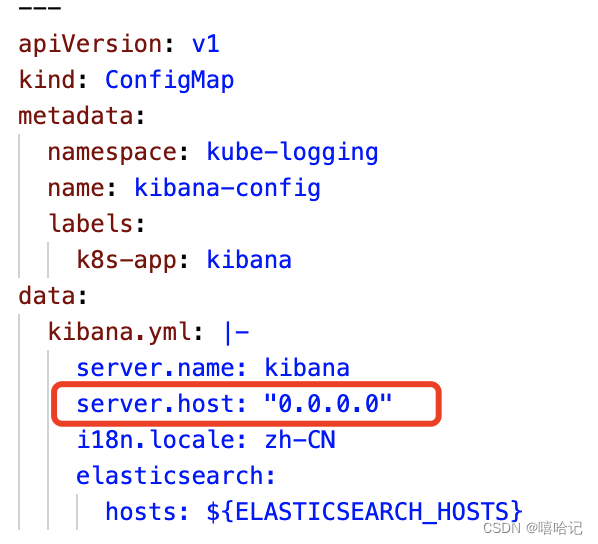

2.1.5 Create the kibana configuration

---
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: kube-logging
  name: kibana-config
  labels:
    k8s-app: kibana
data:
  kibana.yml: |-
    server.name: kibana
    server.host: "0.0.0.0"
    i18n.locale: zh-CN # set the default UI language to Chinese
    elasticsearch:
      hosts: ${ELASTICSEARCH_HOSTS} # es connection address; everything here runs in k8s in the same namespace, so the service name can be used directly
---
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: kube-logging
  labels:
    k8s-app: kibana
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "Kibana"
    srv: srv-kibana
spec:
  type: NodePort
  ports:
    - port: 5601
      protocol: TCP
      targetPort: ui
  selector:
    k8s-app: kibana
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: kube-logging
  labels:
    k8s-app: kibana
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    srv: srv-kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: kibana
  template:
    metadata:
      labels:
        k8s-app: kibana
    spec:
      containers:
        - name: kibana
          image: docker.io/kubeimages/kibana:7.9.3 # this image supports both arm64 and amd64
          resources:
            # need more cpu upon initialization, therefore burstable class
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          env:
            - name: ELASTICSEARCH_HOSTS
              value: http://elasticsearch-logging:9200
          ports:
            - containerPort: 5601
              name: ui
              protocol: TCP
          volumeMounts:
            - name: config
              mountPath: /usr/share/kibana/config/kibana.yml
              readOnly: true
              subPath: kibana.yml
      volumes:
        - name: config
          configMap:
            name: kibana-config
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kibana
  namespace: kube-logging
spec:
  ingressClassName: nginx
  rules:
    - host: kibana.lan-he.com.cn
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: kibana
                port:
                  number: 5601


2.2 Deploy the elk services

2.2.1 Deploy the elk services and check their status

[root@k8s-master elk]# kubectl apply -f namespace.yaml
namespace/kube-logging created
[root@k8s-master elk]# kubectl apply -f es.yaml
service/elasticsearch-logging created
serviceaccount/elasticsearch-logging created
clusterrole.rbac.authorization.k8s.io/elasticsearch-logging created
clusterrolebinding.rbac.authorization.k8s.io/elasticsearch-logging created
statefulset.apps/elasticsearch-logging created
[root@k8s-master elk]# kubectl apply -f logstash.yaml
service/logstash created
deployment.apps/logstash created
configmap/logstash-conf created
configmap/logstash-yml created
[root@k8s-master elk]# kubectl apply -f kibana.yaml
configmap/kibana-config created
service/kibana created
deployment.apps/kibana created
ingress.networking.k8s.io/kibana created
[root@k8s-master elk]# kubectl apply -f filebeat.yaml
configmap/filebeat-config created
serviceaccount/filebeat created
clusterrole.rbac.authorization.k8s.io/filebeat created
clusterrolebinding.rbac.authorization.k8s.io/filebeat created
daemonset.apps/filebeat created
[root@k8s-master elk]# kubectl get all -n kube-logging
NAME                            READY   STATUS              RESTARTS   AGE
pod/elasticsearch-logging-0     0/1     Pending             0          27s
pod/filebeat-2fz26              1/1     Running             0          2m17s
pod/filebeat-j4qf2              1/1     Running             0          2m17s
pod/filebeat-r97lm              1/1     Running             0          2m17s
pod/kibana-86ddf46d47-9q79f     0/1     ContainerCreating   0          8s
pod/logstash-5f774b55bb-992qj   0/1     Pending             0          20s

NAME                            TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)          AGE
service/elasticsearch-logging   ClusterIP   10.1.16.54    <none>        9200/TCP         28s
service/kibana                  NodePort    10.1.181.71   <none>        5601:32274/TCP   8s
service/logstash                ClusterIP   None          <none>        5044/TCP         21s

NAME                      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/filebeat   3         3         2       3            2           <none>          3m26s

NAME                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/kibana     0/1     1            0           8s
deployment.apps/logstash   0/1     1            0           21s

NAME                                  DESIRED   CURRENT   READY   AGE
replicaset.apps/kibana-86ddf46d47     1         1         0       8s
replicaset.apps/logstash-5f774b55bb   1         1         0       20s

NAME                                     READY   AGE
statefulset.apps/elasticsearch-logging   0/1     27s
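With several pods in different states, it can help to filter the listing down to the ones that need attention. The following is a minimal sketch that reads `kubectl get pods` style output on stdin and prints every pod whose STATUS is neither Running nor Completed. The function name `not_running` is an assumption made for this example.

```shell
# Print "<pod> <status>" for every pod that is not Running or Completed.
# Expects `kubectl get pods` style rows on stdin; header rows are skipped
# and an optional "pod/" prefix is removed from the name.
not_running() {
  awk '$1 != "NAME" && NF >= 3 && $3 != "Running" && $3 != "Completed" {
    sub(/^pod\//, "", $1)
    print $1, $3
  }'
}

# Against a live cluster this would be used as:
#   kubectl get pods -n kube-logging | not_running
```

Applied to the listing above, this would leave only the es, kibana and logstash pods, which are the three cases examined in the following sections.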


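2.2.2 Q1: the es pod is stuck in Pending

2.2.2.1 Inspect the pod description

The scheduler's FailedScheduling events seen during this deployment contain the following messages:

- Message 1: node(s) had untolerated taint {node-role.kubernetes.io/control-plane: }

  - One node (the control-plane node) carries the taint node-role.kubernetes.io/control-plane and the pod has no toleration for it. Control-plane nodes (the master nodes of a Kubernetes cluster) are normally not meant to run workloads.

- Message 2: 1 node(s) had untolerated taint {node.kubernetes.io/unreachable: }

  - Another node is considered unreachable, so the pod cannot be scheduled onto it.

- Message 3: 2 Insufficient memory

  - Two nodes do not have enough free memory to satisfy the pod's resource requests.

- Message 4: 0/3 nodes are available

  - No node is available for the pod, even when pod preemption is taken into account.

- Message 5: 1 No preemption victims found for incoming pod, 2 Preemption is not helpful for scheduling.

  - For the incoming pod, either no lower-priority pod could be found to preempt (terminate and reschedule), or preempting one would not make scheduling possible.

For the es pod itself, the event also reports "3 node(s) didn't match Pod's node affinity/selector": the StatefulSet sets nodeSelector es: data, and no node carries that label yet.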

[root@k8s-master elk]# kubectl describe -n kube-logging po elasticsearch-logging-0
Name:             elasticsearch-logging-0
Namespace:        kube-logging
Priority:         0
Service Account:  elasticsearch-logging
Node:             <none>
Labels:           controller-revision-hash=elasticsearch-logging-bcd67584
                  k8s-app=elasticsearch-logging
                  kubernetes.io/cluster-service=true
                  statefulset.kubernetes.io/pod-name=elasticsearch-logging-0
Annotations:      <none>
Status:           Pending
IP:
IPs:              <none>
Controlled By:    StatefulSet/elasticsearch-logging
Init Containers:
  elasticsearch-logging-init:
    Image:      alpine:3.6
    Port:       <none>
    Host Port:  <none>
    Command:
      /sbin/sysctl
      -w
      vm.max_map_count=262144
    Environment:  <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-fwbvr (ro)
  increase-fd-ulimit:
    Image:      busybox
    Port:       <none>
    Host Port:  <none>
    Command:
      sh
      -c
      ulimit -n 65536
    Environment:  <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-fwbvr (ro)
  elasticsearch-volume-init:
    Image:      alpine:3.6
    Port:       <none>
    Host Port:  <none>
    Command:
      chmod
      -R
      777
      /usr/share/elasticsearch/data/
    Environment:  <none>
    Mounts:
      /usr/share/elasticsearch/data/ from elasticsearch-logging (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-fwbvr (ro)
Containers:
  elasticsearch-logging:
    Image:       docker.io/elastic/elasticsearch:8.12.0
    Ports:       9200/TCP, 9300/TCP
    Host Ports:  0/TCP, 0/TCP
    Limits:
      cpu:     1
      memory:  2Gi
    Requests:
      cpu:     100m
      memory:  500Mi
    Environment:
      NAMESPACE:       kube-logging (v1:metadata.namespace)
      discovery.type:  single-node
      ES_JAVA_OPTS:    -Xms512m -Xmx2g
    Mounts:
      /usr/share/elasticsearch/data/ from elasticsearch-logging (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-fwbvr (ro)
Conditions:
  Type           Status
  PodScheduled   False
Volumes:
  elasticsearch-logging:
    Type:          HostPath (bare host directory volume)
    Path:          /data/es/
    HostPathType:
  kube-api-access-fwbvr:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   Burstable
Node-Selectors:              es=data
Tolerations:                 :NoSchedule op=Exists
                             node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason            Age    From               Message
  ----     ------            ----   ----               -------
  Warning  FailedScheduling  2m37s  default-scheduler  0/3 nodes are available: 2 Insufficient memory, 3 node(s) didn't match Pod's node affinity/selector. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling.
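The FailedScheduling message packs several reasons into one line, which makes it easy to overlook one of them. As a rough sketch, the helper below drops the preemption part and the "0/3 nodes are available:" prefix and prints one reason per line. The name `scheduling_reasons` is hypothetical, and the parsing assumes the message layout shown in the event above.

```shell
# Split a FailedScheduling message (read from stdin) into one reason per line.
# Assumes the form "<n>/<m> nodes are available: r1, r2. preemption: ...".
scheduling_reasons() {
  sed -e 's/ preemption:.*$//' \
      -e 's|^[0-9]*/[0-9]* nodes are available: ||' \
      -e 's/\.$//' |
    tr ',' '\n' |
    sed 's/^ *//'
}

# The events themselves can be listed with, for example:
#   kubectl get events -n kube-logging --field-selector reason=FailedScheduling
```

For the es event this yields two separate lines, the memory shortage and the nodeSelector mismatch, and both have to be resolved before the pod can be scheduled.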


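2.2.2.2 Label the master node

- The nodeSelector in the es YAML file determines which node the pod may be scheduled to; it requires the label es=data, so add that label to the master.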

[root@k8s-master elk]# kubectl label nodes k8s-master es='data'
node/k8s-master labeled
[root@k8s-master elk]# kubectl get all -n kube-logging
NAME                            READY   STATUS              RESTARTS   AGE
pod/elasticsearch-logging-0     0/1     Init:0/3            0          16m
pod/filebeat-2fz26              1/1     Running             0          2m17s
pod/filebeat-j4qf2              1/1     Running             0          2m17s
pod/filebeat-r97lm              1/1     Running             0          2m17s
pod/kibana-86ddf46d47-6vmrb     0/1     ContainerCreating   0          59s
pod/kibana-86ddf46d47-9q79f     1/1     Terminating         0          16m
pod/logstash-5f774b55bb-992qj   0/1     Pending             0          16m

NAME                            TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)          AGE
service/elasticsearch-logging   ClusterIP   10.1.16.54    <none>        9200/TCP         16m
service/kibana                  NodePort    10.1.181.71   <none>        5601:32274/TCP   16m
service/logstash                ClusterIP   None          <none>        5044/TCP         16m

NAME                      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/filebeat   3         3         2       3            2           <none>          3m26s

NAME                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/kibana     0/1     1            0           16m
deployment.apps/logstash   0/1     1            0           16m

NAME                                  DESIRED   CURRENT   READY   AGE
replicaset.apps/kibana-86ddf46d47     1         1         0       16m
replicaset.apps/logstash-5f774b55bb   1         1         0       16m

NAME                                     READY   AGE
statefulset.apps/elasticsearch-logging   0/1     16m
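Before and after labelling, it is worth confirming that a node actually carries the label the nodeSelector asks for. The sketch below checks a comma-separated label list, such as the LABELS column of `kubectl get nodes --show-labels`, for an exact key=value pair. The function name `has_label` and the sample label strings are assumptions for illustration.

```shell
# Succeeds when the comma-separated label list ($1) contains the exact
# key=value pair ($2); "es=data2" does not count as "es=data".
has_label() {
  case ",$1," in
    *",$2,"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Against a live cluster, matching nodes can also be listed directly:
#   kubectl get nodes -l es=data
```

A quick usage example: `has_label "kubernetes.io/hostname=k8s-master,es=data" "es=data" && echo schedulable`.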


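2.2.3 Q2: the logstash pod is also Pending

2.2.3.1 Inspect the pod description

- The problem is much the same as the one es hit at startup: no node can accept the pod, because the control-plane taint is not tolerated and the remaining nodes lack memory.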

[root@k8s-master elk]# kubectl describe -n kube-logging po logstash-5f774b55bb-992qj
Name:             logstash-5f774b55bb-992qj
Namespace:        kube-logging
Priority:         0
Service Account:  default
Node:             <none>
Labels:           pod-template-hash=5f774b55bb
                  srv=srv-logstash
                  type=logstash
Annotations:      <none>
Status:           Pending
IP:
IPs:              <none>
Controlled By:    ReplicaSet/logstash-5f774b55bb
Containers:
  logstash:
    Image:      docker.io/elastic/logstash:8.12.0
    Port:       5044/TCP
    Host Port:  0/TCP
    Command:
      logstash
      -f
      /etc/logstash_c/logstash.conf
    Limits:
      cpu:     1
      memory:  2Gi
    Requests:
      cpu:     512m
      memory:  512Mi
    Environment:
      XPACK_MONITORING_ELASTICSEARCH_HOSTS:  http://elasticsearch-logging:9200
    Mounts:
      /etc/localtime from timezone (rw)
      /etc/logstash_c/ from config-volume (rw)
      /usr/share/logstash/config/ from config-yml-volume (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-2x2t5 (ro)
Conditions:
  Type           Status
  PodScheduled   False
Volumes:
  config-volume:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      logstash-conf
    Optional:  false
  timezone:
    Type:          HostPath (bare host directory volume)
    Path:          /etc/localtime
    HostPathType:
  config-yml-volume:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      logstash-yml
    Optional:  false
  kube-api-access-2x2t5:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   Burstable
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason            Age                  From               Message
  ----     ------            ----                 ----               -------
  Warning  FailedScheduling  5m59s (x2 over 10m)  default-scheduler  0/3 nodes are available: 1 node(s) had untolerated taint {node-role.kubernetes.io/control-plane: }, 1 node(s) had untolerated taint {node.kubernetes.io/unreachable: }, 2 Insufficient memory. preemption: 0/3 nodes are available: 1 No preemption victims found for incoming pod, 2 Preemption is not helpful for scheduling.
  Warning  FailedScheduling  3m23s (x5 over 21m)  default-scheduler  0/3 nodes are available: 1 node(s) had untolerated taint {node-role.kubernetes.io/control-plane: }, 2 Insufficient memory. preemption: 0/3 nodes are available: 1 Preemption is not helpful for scheduling, 2 No preemption victims found for incoming pod.


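2.2.3.2 Free up resources and add a label

- The logstash Deployment defines no nodeSelector (Node-Selectors: <none> in the description above), so the label by itself does not change scheduling; it is the memory freed by removing the prometheus stack that lets the pod be scheduled.

[root@k8s-master ~]# kubectl label nodes k8s-node-01 type="logstash"
node/k8s-node-01 labeled
# Delete the previously created prometheus resources
[root@k8s-master ~]# kubectl delete -f kube-prometheus-0.12.0/manifests/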
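Since the blocker here is memory, a quick estimate of what the logging stack requests can show whether a node has enough headroom. The sketch below adds up Kubernetes memory quantities with Mi or Gi suffixes; the values in the usage note come from the manifests above (es 500Mi, logstash 512Mi, filebeat 100Mi per node). The helper names `to_mib` and `sum_mib` are assumptions, and other quantity suffixes are deliberately not handled.

```shell
# Convert a Kubernetes memory quantity with a Mi or Gi suffix to MiB.
to_mib() {
  case "$1" in
    *Gi) echo $(( ${1%Gi} * 1024 )) ;;
    *Mi) echo "${1%Mi}" ;;
    *) echo "unsupported quantity: $1" >&2; return 1 ;;
  esac
}

# Sum the memory quantities given as arguments and print the total in MiB.
sum_mib() {
  total=0
  for q in "$@"; do
    n=$(to_mib "$q") || return 1
    total=$(( total + n ))
  done
  echo "$total"
}
```

`sum_mib 500Mi 512Mi 100Mi` gives the combined memory request of es, logstash and one filebeat pod. That figure can be compared with the "Allocated resources" section of `kubectl describe node <node-name>` to judge whether a node can take the pods.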


2.2.4 Q3: the kibana pod is in CrashLoopBackOff

[root@k8s-master ~]# kubectl get po -n kube-logging -o wide
NAME                        READY   STATUS             RESTARTS        AGE    IP          NODE          NOMINATED NODE   READINESS GATES
elasticsearch-logging-0     1/1     Running            3 (4m54s ago)   71m    10.2.0.4    k8s-master    <none>           <none>
filebeat-2fz26              1/1     Running            0               6m7s   10.2.0.5    k8s-master    <none>           <none>
filebeat-j4qf2              1/1     Running            0               6m7s   10.2.1.20   k8s-node-01   <none>           <none>
filebeat-r97lm              1/1     Running            0               6m7s   10.2.2.17   k8s-node-02   <none>           <none>
kibana-86ddf46d47-6vmrb     0/1     CrashLoopBackOff   20 (43s ago)    93m    10.2.2.12   k8s-node-02   <none>           <none>
logstash-5f774b55bb-992qj   1/1     Running            0               108m   10.2.1.19   k8s-node-01   <none>           <none>
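A pod in CrashLoopBackOff keeps restarting, so the restart count is a quick indicator of how long it has been failing. As a small sketch, the helper below picks the crash-looping pods out of `kubectl get po` output and prints their names with the restart count. The function name `crashlooping` is an assumption for this example.

```shell
# Print "<pod> <restart count>" for every pod in CrashLoopBackOff.
# Expects `kubectl get po` style rows on stdin (STATUS in column 3,
# RESTARTS starting in column 4).
crashlooping() {
  awk '$3 == "CrashLoopBackOff" { print $1, $4 }'
}

# Against a live cluster:
#   kubectl get po -n kube-logging | crashlooping
# The output of the previous, crashed container instance can then be read with:
#   kubectl logs -n kube-logging <pod-name> --previous
```

The `--previous` flag is useful here because the current container instance may not have produced any output yet when it is inspected.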


2.2.4.1 Inspect the pod description

[root@k8s-master ~]# kubectl describe -n kube-logging po kibana-86ddf46d47-d97cj
Name:             kibana-86ddf46d47-d97cj
Namespace:        kube-logging
Priority:         0
Service Account:  default
Node:             k8s-node-02/192.168.0.191
Start Time:       Sat, 02 Mar 2024 15:23:10 +0800
Labels:           k8s-app=kibana
                  pod-template-hash=86ddf46d47
Annotations:      <none>
Status:           Running
IP:               10.2.2.15
IPs:
  IP:           10.2.2.15
Controlled By:  ReplicaSet/kibana-86ddf46d47
Containers:
  kibana:
    Container ID:   docker://c5a6e6ee69e7766dd38374be22bad6d44c51deae528f36f00624e075e4ffe1d2
    Image:          docker.io/elastic/kibana:8.12.0
    Image ID:       docker-pullable://elastic/kibana@sha256:596700740fdb449c5726e6b6a6dd910c2140c8a37e68a301a5186e86b0834c9f
    Port:           5601/TCP
    Host Port:      0/TCP
    State:          Waiting
      Reason:       CrashLoopBackOff
    Last State:     Terminated
      Reason:       Error
      Exit Code:    1
      Started:      Sat, 02 Mar 2024 15:24:50 +0800
      Finished:     Sat, 02 Mar 2024 15:24:54 +0800
    Ready:          False
    Restart Count:  4
    Limits:
      cpu:  1
    Requests:
      cpu:  100m
    Environment:
      ELASTICSEARCH_HOSTS:  http://elasticsearch-logging:9200
    Mounts:
      /usr/share/kibana/config/kibana.yml from config (ro,path="kibana.yml")
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-fpcqx (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             False
  ContainersReady   False
  PodScheduled      True
Volumes:
  config:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      kibana-config
    Optional:  false
  kube-api-access-fpcqx:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   Burstable
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason     Age                  From               Message
  ----     ------     ----                 ----               -------
  Normal   Scheduled  2m12s                default-scheduler  Successfully assigned kube-logging/kibana-86ddf46d47-d97cj to k8s-node-02
  Normal   Pulled     32s (x5 over 2m10s)  kubelet            Container image "docker.io/elastic/kibana:8.12.0" already present on machine
  Normal   Created    32s (x5 over 2m10s)  kubelet            Created container kibana
  Normal   Started    32s (x5 over 2m10s)  kubelet            Started container kibana
  Warning  BackOff    1s (x9 over 2m)      kubelet            Back-off restarting failed container


2.2.4.2 View the container logs

# 1. View the pod's logs
[root@k8s-master ~]# kubectl logs -n kube-logging kibana-86ddf46d47-d97cj
Kibana is currently running with legacy OpenSSL providers enabled! For details and instructions on how to disable see https://www.elastic.co/guide/en/kibana/8.12/production.html#openssl-legacy-provider
{"log.level":"info","@timestamp":"2024-03-02T07:24:52.627Z","log.logger":"elastic-apm-node","ecs.version":"8.10.0","agentVersion":"4.2.0","env":{"pid":7,"proctitle":"/usr/share/kibana/bin/../node/bin/node","os":"linux 3.10.0-1160.105.1.el7.x86_64","arch":"x64","host":"kibana-86ddf46d47-d97cj","timezone":"UTC+00","runtime":"Node.js v18.18.2"},"config":{"active":{"source":"start","value":true},"breakdownMetrics":{"source":"start","value":false},"captureBody":{"source":"start","value":"off","commonName":"capture_body"},"captureHeaders":{"source":"start","value":false},"centralConfig":{"source":"start","value":false},"contextPropagationOnly":{"source":"start","value":true},"environment":{"source":"start","value":"production"},"globalLabels":{"source":"start","value":[["git_rev","e9092c0a17923f4ed984456b8a5db619b0a794b3"]],"sourceValue":{"git_rev":"e9092c0a17923f4ed984456b8a5db619b0a794b3"}},"logLevel":{"source":"default","value":"info","commonName":"log_level"},"metricsInterval":{"source":"start","value":120,"sourceValue":"120s"},"serverUrl":{"source":"start","value":"https://kibana-cloud-apm.apm.us-east-1.aws.found.io/","commonName":"server_url"},"transactionSampleRate":{"source":"start","value":0.1,"commonName":"transaction_sample_rate"},"captureSpanStackTraces":{"source":"start","sourceValue":false},"secretToken":{"source":"start","value":"[REDACTED]","commonName":"secret_token"},"serviceName":{"source":"start","value":"kibana","commonName":"service_name"},"serviceVersion":{"source":"start","value":"8.12.0","commonName":"service_version"}},"activationMethod":"require","message":"Elastic APM Node.js Agent v4.2.0"}
[2024-03-02T07:24:54.467+00:00][INFO ][root] Kibana is starting
[2024-03-02T07:24:54.550+00:00][INFO ][root] Kibana is shutting down
[2024-03-02T07:24:54.554+00:00][FATAL][root] Reason: [config validation of [server].host]: value must be a valid hostname (see RFC 1123).
Error: [config validation of [server].host]: value must be a valid hostname (see RFC 1123).
    at ObjectType.validate (/usr/share/kibana/node_modules/@kbn/config-schema/src/types/type.js:94:13)
    at ConfigService.validateAtPath (/usr/share/kibana/node_modules/@kbn/config/src/config_service.js:253:19)
    at /usr/share/kibana/node_modules/@kbn/config/src/config_service.js:263:204
    at /usr/share/kibana/node_modules/rxjs/dist/cjs/internal/operators/map.js:10:37
    at OperatorSubscriber._this._next (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/operators/OperatorSubscriber.js:33:21)
    at OperatorSubscriber.Subscriber.next (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/Subscriber.js:51:18)
    at /usr/share/kibana/node_modules/rxjs/dist/cjs/internal/operators/distinctUntilChanged.js:18:28
    at OperatorSubscriber._this._next (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/operators/OperatorSubscriber.js:33:21)
    at OperatorSubscriber.Subscriber.next (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/Subscriber.js:51:18)
    at /usr/share/kibana/node_modules/rxjs/dist/cjs/internal/operators/map.js:10:24
    at OperatorSubscriber._this._next (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/operators/OperatorSubscriber.js:33:21)
    at OperatorSubscriber.Subscriber.next (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/Subscriber.js:51:18)
    at ReplaySubject._subscribe (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/ReplaySubject.js:54:24)
    at ReplaySubject.Observable._trySubscribe (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/Observable.js:41:25)
    at ReplaySubject.Subject._trySubscribe (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/Subject.js:123:47)
    at /usr/share/kibana/node_modules/rxjs/dist/cjs/internal/Observable.js:35:31
    at Object.errorContext (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/util/errorContext.js:22:9)
    at ReplaySubject.Observable.subscribe (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/Observable.js:26:24)
    at /usr/share/kibana/node_modules/rxjs/dist/cjs/internal/operators/share.js:66:18
    at OperatorSubscriber.<anonymous> (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/util/lift.js:14:28)
    at /usr/share/kibana/node_modules/rxjs/dist/cjs/internal/Observable.js:30:30
    at Object.errorContext (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/util/errorContext.js:22:9)
    at Observable.subscribe (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/Observable.js:26:24)
    at /usr/share/kibana/node_modules/rxjs/dist/cjs/internal/operators/map.js:9:16
    at OperatorSubscriber.<anonymous> (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/util/lift.js:14:28)
    at /usr/share/kibana/node_modules/rxjs/dist/cjs/internal/Observable.js:30:30
    at Object.errorContext (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/util/errorContext.js:22:9)
    at Observable.subscribe (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/Observable.js:26:24)
    at /usr/share/kibana/node_modules/rxjs/dist/cjs/internal/operators/distinctUntilChanged.js:13:16
    at OperatorSubscriber.<anonymous> (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/util/lift.js:14:28)
    at /usr/share/kibana/node_modules/rxjs/dist/cjs/internal/Observable.js:30:30
    at Object.errorContext (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/util/errorContext.js:22:9)
    at Observable.subscribe (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/Observable.js:26:24)
    at /usr/share/kibana/node_modules/rxjs/dist/cjs/internal/operators/map.js:9:16
    at SafeSubscriber.<anonymous> (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/util/lift.js:14:28)
    at /usr/share/kibana/node_modules/rxjs/dist/cjs/internal/Observable.js:30:30
    at Object.errorContext (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/util/errorContext.js:22:9)
    at Observable.subscribe (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/Observable.js:26:24)
    at /usr/share/kibana/node_modules/rxjs/dist/cjs/internal/firstValueFrom.js:24:16
    at new Promise (<anonymous>)
    at firstValueFrom (/usr/share/kibana/node_modules/rxjs/dist/cjs/internal/firstValueFrom.js:8:12)
    at EnvironmentService.preboot (/usr/share/kibana/node_modules/@kbn/core-environment-server-internal/src/environment_service.js:50:169)
    at Server.preboot (/usr/share/kibana/node_modules/@kbn/core-root-server-internal/src/server.js:146:55)
    at Root.preboot (/usr/share/kibana/node_modules/@kbn/core-root-server-internal/src/root/index.js:47:32)
    at processTicksAndRejections (node:internal/process/task_queues:95:5)
    at bootstrap (/usr/share/kibana/node_modules/@kbn/core-root-server-internal/src/bootstrap.js:97:9)
    at Command.<anonymous> (/usr/share/kibana/src/cli/serve/serve.js:241:5)

 FATAL  Error: [config validation of [server].host]: value must be a valid hostname (see RFC 1123).
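The FATAL line says the `server.host` value failed hostname validation. As a rough way to pre-check a candidate value before applying the ConfigMap, the sketch below approximates the RFC 1123 hostname syntax (dot-separated labels of letters, digits, and hyphens, each 1 to 63 characters, not starting or ending with a hyphen, at most 253 characters overall). The function name `is_rfc1123_hostname` is hypothetical, and this is only an approximation: Kibana's own validator may differ in details.

```shell
# is_rfc1123_hostname: hypothetical helper that approximates RFC 1123 hostname syntax.
# Returns 0 if "$1" looks like a valid hostname, non-zero otherwise.
is_rfc1123_hostname() {
  # One label: alphanumeric at both ends, hyphens allowed inside, 1 to 63 chars.
  label='[A-Za-z0-9]([A-Za-z0-9-]{0,61}[A-Za-z0-9])?'
  # Overall length limit.
  [ "${#1}" -le 253 ] || return 1
  # One or more labels separated by dots, nothing else.
  printf '%s\n' "$1" | LC_ALL=C grep -Eq "^${label}(\.${label})*\$"
}

# Usage with illustrative values (not taken from the article's kibana.yml):
#   is_rfc1123_hostname "kibana.example.com" && echo ok
#   is_rfc1123_hostname "http://0.0.0.0"     || echo "rejected: contains a URL scheme"
```

A value that includes a scheme, a port, or a path is a URL rather than a hostname, so it fails this check.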


2.2.4.3 A host value in the Kibana configuration file was filled in incorrectly

# 1. Update the configuration
[root@k8s-master ~]# kubectl replace -f elk/kibana.yaml
configmap/kibana-config replaced
service/kibana replaced
deployment.apps/kibana replaced
ingress.networking.k8s.io/kibana replaced

# 2. Delete the old pod
[root@k8s-master ~]# kubectl delete po kibana-86ddf46d47-d97cj -n kube-logging
pod "kibana-86ddf46d47-d97cj" deleted

# 3. All services are now running
[root@k8s-master ~]# kubectl get all -n kube-logging
NAME                           READY   STATUS    RESTARTS      AGE
pod/elasticsearch-logging-0    1/1     Running   3 (14m ago)   80m
pod/filebeat-2fz26             1/1     Running   0             4m20s
pod/filebeat-j4qf2             1/1     Running   0             4m20s
pod/filebeat-r97lm             1/1     Running   0             4m20s
pod/kibana-86ddf46d47-d2ddz    1/1     Running   0             3s
pod/logstash-5f774b55bb-992qj  1/1     Running   0             117m

NAME                            TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)          AGE
service/elasticsearch-logging   ClusterIP   10.1.16.54    <none>        9200/TCP         117m
service/kibana                  NodePort    10.1.181.71   <none>        5601:32274/TCP   117m
service/logstash                ClusterIP   None          <none>        5044/TCP         117m

NAME                      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/filebeat   3         3         2       3            2           <none>          3m26s

NAME                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/kibana     1/1     1            1           117m
deployment.apps/logstash   1/1     1            1           117m

NAME                                  DESIRED   CURRENT   READY   AGE
replicaset.apps/kibana-86ddf46d47     1         1         1       117m
replicaset.apps/logstash-5f774b55bb   1         1         1       117m

NAME                                     READY   AGE
statefulset.apps/elasticsearch-logging   1/1     117m
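Rather than reading the whole listing by eye, the pod table can be filtered for anything that is not fully ready. This is a minimal sketch, assuming the default column order of `kubectl get pods --no-headers` (NAME, READY, STATUS, ...). The helper name `not_ready_pods` is hypothetical.

```shell
# not_ready_pods: hypothetical helper, not a kubectl feature.
# Reads `kubectl get pods --no-headers` output on stdin and prints the name of
# every pod whose READY count is incomplete or whose STATUS is not "Running".
not_ready_pods() {
  awk '{
    split($2, ready, "/")                       # READY column, e.g. "0/1"
    if (ready[1] != ready[2] || $3 != "Running") print $1
  }'
}

# Usage:
#   kubectl get pods -n kube-logging --no-headers | not_ready_pods
```

No output means every pod in the namespace reports all of its containers ready. Pods from finished Jobs (status `Completed`) would also be listed, which is fine for this namespace because it contains none.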


2.2.5 Open the ELK web UI: it shows "Kibana server is not ready yet."

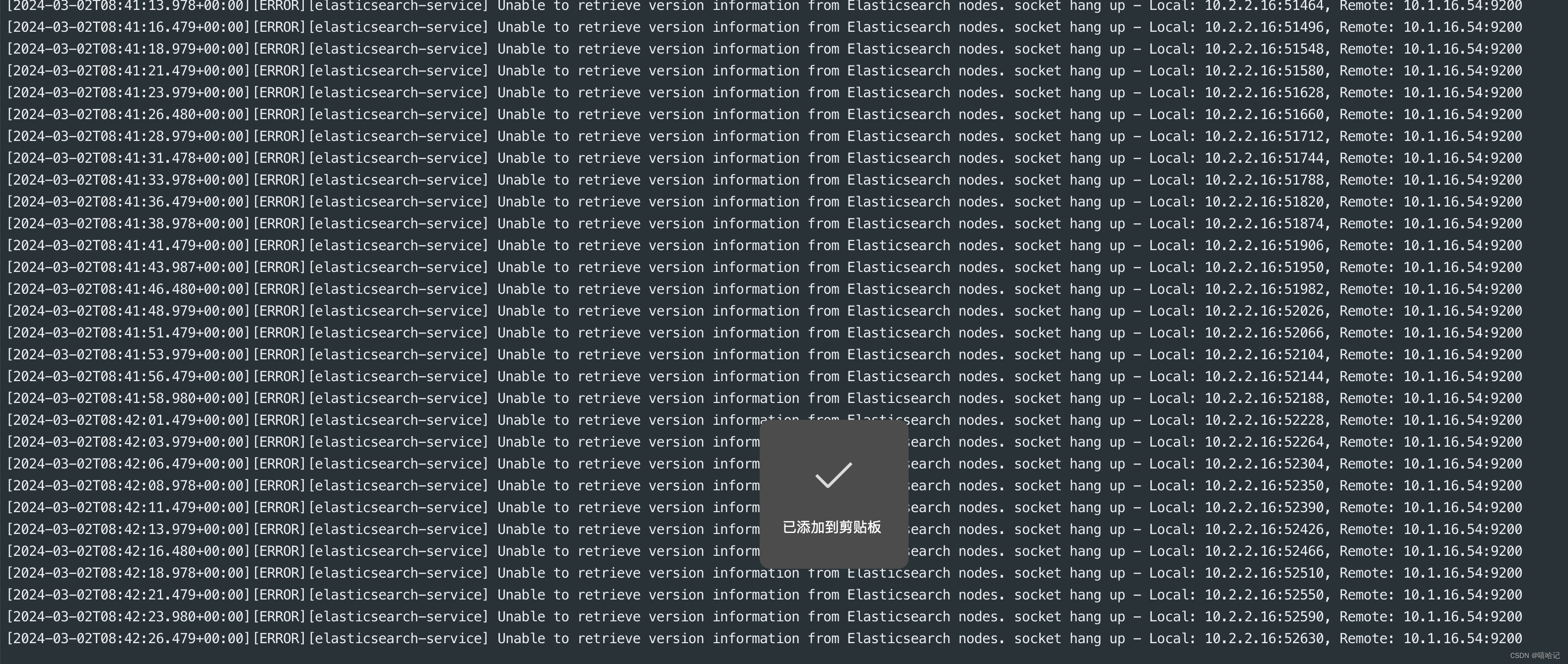

# 1. Check the ingress controller (IngressClass)
[root@k8s-master ~]# kubectl get ingressclasses.networking.k8s.io
NAME    CONTROLLER             PARAMETERS   AGE
nginx   k8s.io/ingress-nginx   <none>       12h

# 2. Check the ingress
[root@k8s-master ~]# kubectl get ingress -n kube-logging
NAME     CLASS   HOSTS                  ADDRESS       PORTS   AGE
kibana   nginx   kibana.lan-he.com.cn   10.1.86.240   80      131m

# 3. Check which node the ingress controller pod runs on
[root@k8s-master ~]# kubectl get po -n monitoring -o wide
NAME                             READY   STATUS    RESTARTS       AGE   IP             NODE          NOMINATED NODE   READINESS GATES
ingress-nginx-controller-6gz5k   1/1     Running   2 (110m ago)   11h   10.10.10.177   k8s-node-01   <none>           <none>

# 4. View the Kibana logs, as shown in the figure below
[root@k8s-master elk]# kubectl logs -f kibana-86ddf46d47-d2ddz -n kube-logging
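The same check can be made from the command line without a browser or a DNS entry by sending the ingress host name in the `Host` header. This is only a sketch: it assumes the ingress-nginx controller accepts plain HTTP on port 80 of the address you pass in, and that Kibana's `/api/status` endpoint is reachable without authentication. The function name `kibana_http_code` is hypothetical.

```shell
# kibana_http_code: hypothetical helper.
# $1 = base URL of the ingress entry point (assumption: HTTP on the node running the controller)
# $2 = host name configured in the Ingress rule
# Prints only the HTTP status code returned for Kibana's /api/status endpoint.
kibana_http_code() {
  curl -s -o /dev/null -w '%{http_code}' -H "Host: $2" "$1/api/status"
}

# Usage with the values shown above (the port is an assumption):
#   kibana_http_code http://10.10.10.177 kibana.lan-he.com.cn
```

While Kibana is still starting or cannot reach Elasticsearch, it typically answers with 503, which matches the "Kibana server is not ready yet" page. A 200 indicates that the request reached a running Kibana through the ingress.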


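Note: this article does not cover installing the ingress-nginx controller, so install it before setting up ELK. Also pay attention to the shared data volume used by the ES pod: if the pod lacks permission on that volume, the installation fails as well, as in the figure below, where Kibana cannot reach the ES service.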

[root@k8s-master elk]# kubectl apply -f kibana.yaml

Warning: resource configmaps/kibana-config is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

configmap/kibana-config configured

Warning: resource services/kibana is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

service/kibana configured

Warning: resource deployments/kibana is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

deployment.apps/kibana configured

Warning: resource ingresses/kibana is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

ingress.networking.k8s.io/kibana configured


