
Training your own yolo3 model to recognize motor vehicles and some road information

Author: 2023面试高手 | 2024-03-17 04:00:08


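This post records the whole process of training my own yolo3 model. Most of it follows the article "Keras/Tensorflow+python+yolo3训练自己的数据集" (training on your own dataset with Keras/TensorFlow, Python, and yolo3); thanks to the original author.

My environment is as follows: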

python 3.7.3

tensorflow 1.14.0

opencv 3.4.1


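1. Data collection and processing

- Data collection

I used Python's OpenCV library to save every frame of the training video as an image, which gave 1782 images of training data in total, as shown in the figure.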
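The frame extraction step can be sketched roughly as follows. This is a minimal sketch, not the script actually used for this post: the function names, the output folder, and the `frame_000000.jpg` naming scheme are all assumptions made for illustration. Only `cv2.VideoCapture`, `read`, `release`, and `cv2.imwrite` are OpenCV calls.

```python
import os


def frame_filename(index, ext=".jpg"):
    """Zero-padded name (hypothetical scheme) so frames sort in capture order."""
    return "frame_{:06d}{}".format(index, ext)


def extract_frames(video_path, out_dir):
    """Save every frame of video_path into out_dir and return the frame count."""
    import cv2  # imported here so frame_filename works without OpenCV installed

    os.makedirs(out_dir, exist_ok=True)
    capture = cv2.VideoCapture(video_path)
    count = 0
    while True:
        ok, frame = capture.read()  # ok is False once the video is exhausted
        if not ok:
            break
        cv2.imwrite(os.path.join(out_dir, frame_filename(count)), frame)
        count += 1
    capture.release()
    return count
```

Calling `extract_frames("video_1.avi", "frames")` would write one image per frame into a `frames` folder. Saving every frame of a video yields many near-duplicate images, so keeping only every Nth frame is a common variation.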

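- Data processing

I used labelImg to annotate the motor vehicles, traffic lights, crosswalks, lane direction arrows, and license plates in every image, as shown in the figure.

For details on using labelImg, see the labelImg tutorial.

Note: objects of the same class must always be given the same label, for example car for motor vehicles and line for road stop lines.

This produces 1782 xml files. As the figure shows, each xml file records the class names and the box coordinates.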
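To make the xml contents concrete, here is a small sketch that reads the class names and box coordinates out of a Pascal VOC style annotation, the format labelImg writes by default. The sample XML below is made up for illustration (file name, image size, and coordinates are not taken from the real dataset), and `read_objects` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Hand-written sample in Pascal VOC layout; all values are invented.
SAMPLE_XML = """<annotation>
  <filename>frame_000000.jpg</filename>
  <size><width>1280</width><height>720</height><depth>3</depth></size>
  <object>
    <name>car</name>
    <bndbox><xmin>100</xmin><ymin>200</ymin><xmax>300</xmax><ymax>400</ymax></bndbox>
  </object>
  <object>
    <name>line</name>
    <bndbox><xmin>50</xmin><ymin>600</ymin><xmax>1200</xmax><ymax>640</ymax></bndbox>
  </object>
</annotation>"""


def read_objects(xml_text):
    """Return a list of (class_name, (xmin, ymin, xmax, ymax)) tuples."""
    root = ET.fromstring(xml_text)
    objects = []
    for obj in root.iter("object"):
        name = obj.find("name").text
        box = obj.find("bndbox")
        coords = tuple(int(box.find(tag).text)
                       for tag in ("xmin", "ymin", "xmax", "ymax"))
        objects.append((name, coords))
    return objects


print(read_objects(SAMPLE_XML))
```

Running a check like this over all annotation files is a quick way to spot misspelled class names before training.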

At this point the data is ready.

2. Model training

- Download the code and prepare

The yolo3 code, by an expert author

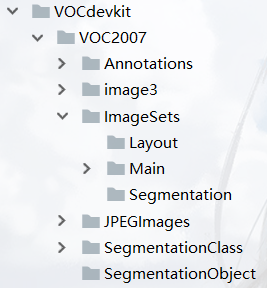

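Download the yolo3 code and create a VOCdevkit folder inside it, then create the folders shown in the figure inside VOCdevkit one by one (a clumsy way to do it, I know).

Put the 1782 training images into JPEGImages, as shown in the figure.

Put the xml files generated by labelImg into Annotations, as shown in the figure.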
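Creating the folders by hand works, but the same layout can be built in one call. The sketch below assumes the folder names that appear elsewhere in this post (VOC2007, Annotations, ImageSets/Main, JPEGImages, and the SegmentationClass folder that yolo_video.py later writes to); the original figure may have contained more folders, and `make_voc_dirs` is a hypothetical helper name.

```python
import os


def make_voc_dirs(root="."):
    """Create the VOCdevkit/VOC2007 folder tree under root and return its path."""
    base = os.path.join(root, "VOCdevkit", "VOC2007")
    subdirs = [
        "Annotations",                     # labelImg xml files
        os.path.join("ImageSets", "Main"),  # train/val/test split lists
        "JPEGImages",                      # training images
        "SegmentationClass",               # used later as the detection output folder
    ]
    for sub in subdirs:
        os.makedirs(os.path.join(base, sub), exist_ok=True)
    return base


if __name__ == "__main__":
    print(make_voc_dirs("."))
```

Run it from inside the downloaded yolo3 code folder so that VOCdevkit ends up next to train.py.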

- Create a test.py script in the VOC2007 folder and run it. It generates four txt files in Main: trainval.txt, test.txt, train.txt, and val.txt. The code is as follows.

    import os
    import random

    # With these values 10% of the images go to trainval.txt and the
    # remaining 90% go to train.txt (see the loop below).
    trainval_percent = 0.1
    train_percent = 0.9
    xmlfilepath = 'Annotations'
    txtsavepath = os.path.join('ImageSets', 'Main')

    total_xml = os.listdir(xmlfilepath)
    num = len(total_xml)
    indices = range(num)  # renamed from `list` to avoid shadowing the built-in
    tv = int(num * trainval_percent)
    tr = int(tv * train_percent)
    trainval = random.sample(indices, tv)
    train = random.sample(trainval, tr)

    ftrainval = open(os.path.join(txtsavepath, 'trainval.txt'), 'w')
    ftest = open(os.path.join(txtsavepath, 'test.txt'), 'w')
    ftrain = open(os.path.join(txtsavepath, 'train.txt'), 'w')
    fval = open(os.path.join(txtsavepath, 'val.txt'), 'w')

    for i in indices:
        name = total_xml[i][:-4] + '\n'  # strip the ".xml" extension
        if i in trainval:
            ftrainval.write(name)
            # Note the naming: the subset called `train` is written to test.txt.
            if i in train:
                ftest.write(name)
            else:
                fval.write(name)
        else:
            ftrain.write(name)

    ftrainval.close()
    ftrain.close()
    fval.close()
    ftest.close()
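The variable names in the script make it easy to misread which file gets how many images. The following sketch repeats only the arithmetic of the script, with the 1782 annotation files mentioned earlier, to show the resulting file sizes. `split_counts` is a hypothetical helper, not part of the original code.

```python
def split_counts(num_images, trainval_percent=0.1, train_percent=0.9):
    """Number of names each txt file receives, following the script's logic."""
    tv = int(num_images * trainval_percent)  # size of trainval.txt
    tr = int(tv * train_percent)             # part of trainval written to test.txt
    return {
        "trainval.txt": tv,
        "test.txt": tr,
        "val.txt": tv - tr,
        "train.txt": num_images - tv,
    }


print(split_counts(1782))
# {'trainval.txt': 178, 'test.txt': 160, 'val.txt': 18, 'train.txt': 1604}
```

So about 90% of the images (1604) end up in train.txt, which is the list the later training step works from.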


- Find the voc_annotation.py script, change the elements of the classes list in the code to the labels you used for each class in labelImg, then run it.
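For orientation, the annotation files used by this yolo3 code hold one image per line: the image path followed by one `xmin,ymin,xmax,ymax,class_id` group per box, where class_id is the index of the label in the classes list. The sketch below builds such a line. It is an illustration of the format as I understand it, not the code of voc_annotation.py itself, and the path, boxes, and helper name are made up.

```python
def annotation_line(image_path, objects, classes):
    """Build one 'path x1,y1,x2,y2,id ...' line for a single image.

    objects is a list of (class_name, (xmin, ymin, xmax, ymax)) tuples.
    Boxes whose class name is not in classes are skipped.
    """
    parts = [image_path]
    for name, (xmin, ymin, xmax, ymax) in objects:
        if name not in classes:
            continue
        class_id = classes.index(name)
        parts.append("{},{},{},{},{}".format(xmin, ymin, xmax, ymax, class_id))
    return " ".join(parts)


line = annotation_line(
    "VOCdevkit/VOC2007/JPEGImages/frame_000000.jpg",  # hypothetical image
    [("car", (100, 200, 300, 400)), ("line", (50, 600, 1200, 640))],
    ["car", "line"],
)
print(line)
```

Because the class id is just a list index, a label that is misspelled in labelImg will not match the classes list and that box is silently dropped.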

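This generates three files in keras-yolo3-master: 2007_train.txt, 2007_val.txt, and 2007_test.txt. Rename them to train.txt, val.txt, and test.txt respectively.

- Find yolo3.cfg in keras-yolo3-master and open it. Press Ctrl+F and search for yolo; there are 3 matches. At each one, change classes to your own number of classes (the number of classes you labeled with labelImg) and change filters as shown in the figure. Three places need changing in total.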
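In the standard Darknet YOLOv3 configuration, the filters value of the convolutional layer directly before each yolo block follows the rule filters = 3 × (classes + 5): three anchors per scale, each predicting four box coordinates, one objectness score, and one score per class. The sketch below applies that rule. The count of 5 classes is an assumption based on the five kinds of objects listed in the data processing step.

```python
def yolo_filters(num_classes, anchors_per_scale=3):
    """filters value for the conv layer right before a [yolo] block."""
    return anchors_per_scale * (num_classes + 5)


print(yolo_filters(5))   # 30, assuming 5 labeled classes
print(yolo_filters(80))  # 255, the value for the 80 COCO classes
```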

- In the model_data folder, find coco_classes.txt and voc_classes.txt and replace their contents with your own class names, as shown in the figure. Both txt files have the same content.
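Editing the two files by hand is fine; the sketch below writes them from a single list so they cannot drift apart. Only car and line are label names mentioned in this post; the other names are placeholders, and `write_class_files` is a hypothetical helper. Keep the order identical to the classes list in voc_annotation.py, since the class id stored in the annotation lines is the position in that list.

```python
import os


def write_class_files(class_names, model_dir="model_data"):
    """Write one class name per line to both class files; return their paths."""
    os.makedirs(model_dir, exist_ok=True)
    text = "\n".join(class_names) + "\n"
    paths = [os.path.join(model_dir, name)
             for name in ("coco_classes.txt", "voc_classes.txt")]
    for path in paths:
        with open(path, "w") as f:
            f.write(text)
    return paths


# Placeholder names except for "car" and "line".
EXAMPLE_CLASSES = ["car", "traffic_light", "crosswalk", "arrow", "plate"]
```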

- Find train.py and replace its contents with the following code.

    import os

    import numpy as np
    import keras.backend as K
    from keras.layers import Input, Lambda
    from keras.models import Model
    from keras.callbacks import TensorBoard, ModelCheckpoint, EarlyStopping

    from yolo3.model import preprocess_true_boxes, yolo_body, tiny_yolo_body, yolo_loss
    from yolo3.utils import get_random_data


    def _main():
        annotation_path = 'train.txt'
        log_dir = 'logs/000/'
        classes_path = 'model_data/voc_classes.txt'
        anchors_path = 'model_data/yolo_anchors.txt'
        class_names = get_classes(classes_path)
        anchors = get_anchors(anchors_path)
        input_shape = (416, 416)  # multiple of 32, hw
        model = create_model(input_shape, anchors, len(class_names))
        train(model, annotation_path, input_shape, anchors, len(class_names), log_dir=log_dir)


    def train(model, annotation_path, input_shape, anchors, num_classes, log_dir='logs/'):
        # The loss is computed inside the model (yolo_loss layer), so the
        # Keras loss function simply passes the model output through.
        model.compile(optimizer='adam', loss={
            'yolo_loss': lambda y_true, y_pred: y_pred})
        os.makedirs(log_dir, exist_ok=True)  # make sure the output folder exists
        logging = TensorBoard(log_dir=log_dir)
        checkpoint = ModelCheckpoint(log_dir + "ep{epoch:03d}-loss{loss:.3f}-val_loss{val_loss:.3f}.h5",
            monitor='val_loss', save_weights_only=True, save_best_only=True, period=1)
        batch_size = 10
        val_split = 0.1
        with open(annotation_path) as f:
            lines = f.readlines()
        np.random.shuffle(lines)
        num_val = int(len(lines)*val_split)
        num_train = len(lines) - num_val
        print('Train on {} samples, val on {} samples, with batch size {}.'.format(num_train, num_val, batch_size))

        # The callbacks were created but never passed in the code as posted;
        # they are passed here so that logging and checkpointing take effect.
        model.fit_generator(data_generator_wrap(lines[:num_train], batch_size, input_shape, anchors, num_classes),
                steps_per_epoch=max(1, num_train//batch_size),
                validation_data=data_generator_wrap(lines[num_train:], batch_size, input_shape, anchors, num_classes),
                validation_steps=max(1, num_val//batch_size),
                epochs=500,
                initial_epoch=0,
                callbacks=[logging, checkpoint])
        model.save_weights(log_dir + 'trained_weights.h5')


    def get_classes(classes_path):
        # One class name per line.
        with open(classes_path) as f:
            class_names = f.readlines()
        class_names = [c.strip() for c in class_names]
        return class_names


    def get_anchors(anchors_path):
        # Anchors are stored on a single line as "w1,h1, w2,h2, ...".
        with open(anchors_path) as f:
            anchors = f.readline()
        anchors = [float(x) for x in anchors.split(',')]
        return np.array(anchors).reshape(-1, 2)


    def create_model(input_shape, anchors, num_classes, load_pretrained=False, freeze_body=False,
                weights_path='model_data/yolo_weights.h5'):
        K.clear_session()  # get a new session
        image_input = Input(shape=(None, None, 3))
        h, w = input_shape
        num_anchors = len(anchors)
        # One ground-truth tensor per output scale (strides 32, 16, 8).
        y_true = [Input(shape=(h//{0:32, 1:16, 2:8}[l], w//{0:32, 1:16, 2:8}[l],
            num_anchors//3, num_classes+5)) for l in range(3)]

        model_body = yolo_body(image_input, num_anchors//3, num_classes)
        print('Create YOLOv3 model with {} anchors and {} classes.'.format(num_anchors, num_classes))

        if load_pretrained:
            model_body.load_weights(weights_path, by_name=True, skip_mismatch=True)
            print('Load weights {}.'.format(weights_path))
            if freeze_body:
                # Do not freeze 3 output layers.
                num = len(model_body.layers)-7
                for i in range(num):
                    model_body.layers[i].trainable = False
                print('Freeze the first {} layers of total {} layers.'.format(num, len(model_body.layers)))

        model_loss = Lambda(yolo_loss, output_shape=(1,), name='yolo_loss',
            arguments={'anchors': anchors, 'num_classes': num_classes, 'ignore_thresh': 0.5})(
            [*model_body.output, *y_true])
        model = Model([model_body.input, *y_true], model_loss)
        return model


    def data_generator(annotation_lines, batch_size, input_shape, anchors, num_classes):
        # Endless generator of ([images, *y_true], dummy_targets) batches.
        n = len(annotation_lines)
        np.random.shuffle(annotation_lines)
        i = 0
        while True:
            image_data = []
            box_data = []
            for b in range(batch_size):
                i %= n
                image, box = get_random_data(annotation_lines[i], input_shape, random=True)
                image_data.append(image)
                box_data.append(box)
                i += 1
            image_data = np.array(image_data)
            box_data = np.array(box_data)
            y_true = preprocess_true_boxes(box_data, input_shape, anchors, num_classes)
            yield [image_data, *y_true], np.zeros(batch_size)


    def data_generator_wrap(annotation_lines, batch_size, input_shape, anchors, num_classes):
        n = len(annotation_lines)
        if n == 0 or batch_size <= 0:
            return None
        return data_generator(annotation_lines, batch_size, input_shape, anchors, num_classes)


    if __name__ == '__main__':
        _main()
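Two pieces of arithmetic in train.py are worth checking by hand: the shapes of the three y_true inputs, and the number of steps per epoch. The sketch below reproduces both without Keras. It assumes 9 anchors in yolo_anchors.txt, 5 classes, and 1604 lines in train.txt (the count the split script would give for 1782 images); the helper names are hypothetical.

```python
def y_true_shapes(input_shape, num_anchors, num_classes):
    """Shapes of the three ground-truth tensors (strides 32, 16, 8)."""
    h, w = input_shape
    return [(h // stride, w // stride, num_anchors // 3, num_classes + 5)
            for stride in (32, 16, 8)]


def epoch_steps(num_lines, batch_size=10, val_split=0.1):
    """Return (num_train, num_val, steps_per_epoch, validation_steps)."""
    num_val = int(num_lines * val_split)
    num_train = num_lines - num_val
    return (num_train, num_val,
            max(1, num_train // batch_size),
            max(1, num_val // batch_size))


print(y_true_shapes((416, 416), 9, 5))
# [(13, 13, 3, 10), (26, 26, 3, 10), (52, 52, 3, 10)]
print(epoch_steps(1604))
# (1444, 160, 144, 16)
```

The input size must be a multiple of 32 so that all three grid sizes come out as whole numbers.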


- Find yolo_video.py and replace its contents with the following code.

    import sys
    import argparse
    import glob
    import os

    from yolo import YOLO, detect_video
    from PIL import Image


    # Original interactive version, kept for reference:
    # def detect_img(yolo):
    #     while True:
    #         img = input('Input image filename:')
    #         try:
    #             image = Image.open(img)
    #         except:
    #             print('Open Error! Try again!')
    #             continue
    #         else:
    #             r_image = yolo.detect_image(image)
    #             r_image.show()
    #     yolo.close_session()


    def detect_img(yolo):
        # Run detection on every .jpg in JPEGImages and save the annotated copies.
        path = "VOCdevkit/VOC2007/JPEGImages/*.jpg"
        outdir = "VOCdevkit/VOC2007/SegmentationClass"
        os.makedirs(outdir, exist_ok=True)  # make sure the output folder exists
        for jpgfile in glob.glob(path):
            img = Image.open(jpgfile)
            img = yolo.detect_image(img)
            img.save(os.path.join(outdir, os.path.basename(jpgfile)))
        yolo.close_session()


    FLAGS = None

    if __name__ == '__main__':
        # class YOLO defines the default value, so suppress any default here
        parser = argparse.ArgumentParser(argument_default=argparse.SUPPRESS)
        '''
        Command line options
        '''
        parser.add_argument(
            '--model', type=str,
            help='path to model weight file, default ' + YOLO.get_defaults("model_path")
        )

        parser.add_argument(
            '--anchors', type=str,
            help='path to anchor definitions, default ' + YOLO.get_defaults("anchors_path")
        )

        parser.add_argument(
            '--classes', type=str,
            help='path to class definitions, default ' + YOLO.get_defaults("classes_path")
        )

        parser.add_argument(
            '--gpu_num', type=int,
            help='Number of GPU to use, default ' + str(YOLO.get_defaults("gpu_num"))
        )

        parser.add_argument(
            '--image', default=False, action="store_true",
            help='Image detection mode, will ignore all positional arguments'
        )
        '''
        Command line positional arguments -- for video detection mode
        '''
        parser.add_argument(
            "--input", nargs='?', type=str, required=False, default='./path2your_video',
            help="Video input path"
        )

        parser.add_argument(
            "--output", nargs='?', type=str, default="",
            help="[Optional] Video output path"
        )

        FLAGS = parser.parse_args()

        if FLAGS.image:
            """
            Image detection mode, disregard any remaining command line arguments
            """
            print("Image detection mode")
            if "input" in FLAGS:
                print(" Ignoring remaining command line arguments: " + FLAGS.input + "," + FLAGS.output)
            detect_img(YOLO(**vars(FLAGS)))
        elif "input" in FLAGS:
            detect_video(YOLO(**vars(FLAGS)), FLAGS.input, FLAGS.output)
        else:
            print("Must specify at least video_input_path. See usage with --help.")
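One detail of the script is easy to miss: the parser is created with argument_default=argparse.SUPPRESS, so options without an explicit default are absent from FLAGS unless given on the command line, while --input has its own default and is therefore always present. The standalone sketch below shows that behaviour using only argparse; it copies two option definitions from the script and nothing else.

```python
import argparse

parser = argparse.ArgumentParser(argument_default=argparse.SUPPRESS)
parser.add_argument("--model", type=str)  # no default: suppressed when omitted
parser.add_argument("--input", nargs="?", type=str, default="./path2your_video")

flags = parser.parse_args([])  # simulate running with no arguments
print("model" in flags)  # False
print("input" in flags)  # True
print(flags.input)       # ./path2your_video
```

A consequence is that the `elif "input" in FLAGS` branch always matches when --image is not given, so the final else branch with the usage message is never reached.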


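- Run train.py to train the model. Once the loss falls into the teens, the results are good enough. When training finishes, the weight file trained_weights.h5 is written to the logs/000 folder.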
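If the ModelCheckpoint callback defined in train.py is passed to fit_generator, intermediate weight files named after the pattern ep{epoch:03d}-loss{loss:.3f}-val_loss{val_loss:.3f}.h5 appear alongside trained_weights.h5. The sketch below picks the file with the lowest validation loss from such names. The file names in the example are invented, and `best_checkpoint` is a hypothetical helper.

```python
import re

# Matches names produced by the ModelCheckpoint pattern in train.py.
CHECKPOINT_RE = re.compile(r"ep(\d{3,})-loss(\d+\.\d{3})-val_loss(\d+\.\d{3})\.h5$")


def best_checkpoint(filenames):
    """Return the checkpoint name with the lowest val_loss, or None."""
    best = None
    for name in filenames:
        match = CHECKPOINT_RE.search(name)
        if not match:
            continue  # e.g. trained_weights.h5
        val_loss = float(match.group(3))
        if best is None or val_loss < best[0]:
            best = (val_loss, name)
    return None if best is None else best[1]


example_files = [  # invented names for illustration
    "ep001-loss95.123-val_loss90.456.h5",
    "ep120-loss14.210-val_loss15.870.h5",
    "ep200-loss12.034-val_loss16.101.h5",
    "trained_weights.h5",
]
print(best_checkpoint(example_files))
```

Comparing validation loss rather than training loss helps avoid picking weights that have started to overfit.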

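3. Testing the model

In a terminal, cd into the keras-yolo3-master folder. To run detection on images, enter the command: python yolo_video.py --image. To run detection on a video, enter the command: python yolo_video.py --input <video path>\video_1.avi --output <output path>\res_1.avi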
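The commands above rely on whatever default weight and class paths the YOLO class defines, which this post does not show. The --model and --classes options defined in yolo_video.py allow the trained files to be named explicitly. The sketch below only assembles such a command line; the default paths are taken from the training step in this post, and `detection_command` is a hypothetical helper.

```python
import sys


def detection_command(weights="logs/000/trained_weights.h5",
                      classes="model_data/voc_classes.txt",
                      video_in=None, video_out=None):
    """Build the argument list for yolo_video.py (image mode if no video)."""
    cmd = [sys.executable, "yolo_video.py", "--model", weights, "--classes", classes]
    if video_in is None:
        cmd.append("--image")
    else:
        cmd += ["--input", video_in]
        if video_out:
            cmd += ["--output", video_out]
    return cmd


print(" ".join(detection_command()[1:]))
```

The resulting list can be handed to `subprocess.run(cmd, check=True)` from inside the keras-yolo3-master folder, or the printed line can be typed into the terminal after `python`.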
