
Training a Four-Class Weather Classifier with ResNet

Author: 2023面试高手 | 2024-05-17 04:23:02


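Task: train an image classifier that sorts weather images into four classes: rain, snow, fog, and sunny.

Reference article: Classic CNN Models (5): ResNet Introduction and Code Implementation (PyTorch, with detailed comments)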


1. Dataset Preparation

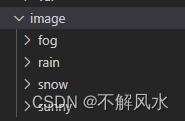

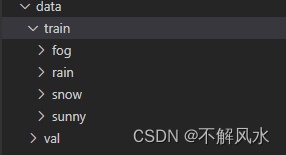

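The dataset contains four weather classes with 10,000 images each. The images are sorted into one sub-folder per class inside an `image` folder, as shown in the screenshots above.

- split_data.py: splits the dataset into a training set and a validation set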

```python
import os
import random
from shutil import copy, rmtree


def mk_file(file_path: str):
    # If the folder already exists, delete it first, then recreate it empty
    if os.path.exists(file_path):
        rmtree(file_path)
    os.makedirs(file_path)


def main():
    # Fix the random seed so the split is reproducible
    random.seed(0)

    # Put 10% of each class into the validation set
    split_rate = 0.1

    # Source images live in ./image/<class_name>/ relative to the working directory
    data_root = os.getcwd()
    origin_path = os.path.join(data_root, "image")
    # Fail early if the source folder is missing
    assert os.path.exists(origin_path), "path '{}' does not exist.".format(origin_path)
    # Every sub-folder of ./image is one weather class
    weather_classes = [cla for cla in os.listdir(origin_path)
                       if os.path.isdir(os.path.join(origin_path, cla))]

    # Create ./data/train/<class>. No leading "/": an absolute component makes
    # os.path.join discard data_root and write to the filesystem root instead
    train_root = os.path.join(data_root, "data", "train")
    mk_file(train_root)
    for cla in weather_classes:
        mk_file(os.path.join(train_root, cla))

    # Create ./data/val/<class>
    val_root = os.path.join(data_root, "data", "val")
    mk_file(val_root)
    for cla in weather_classes:
        mk_file(os.path.join(val_root, cla))

    # Split every class by split_rate and copy the files
    for cla in weather_classes:
        cla_path = os.path.join(origin_path, cla)
        # Sort so the seeded sample does not depend on os.listdir() order
        images = sorted(os.listdir(cla_path))
        num = len(images)
        # random.sample draws k distinct file names for validation;
        # a set makes the membership test below O(1)
        eval_index = set(random.sample(images, k=int(num * split_rate)))
        for index, image in enumerate(images):
            image_path = os.path.join(cla_path, image)
            if image in eval_index:
                # Copy validation images to ./data/val/<class>
                copy(image_path, os.path.join(val_root, cla))
            else:
                # Copy training images to ./data/train/<class>
                copy(image_path, os.path.join(train_root, cla))
            # '\r' returns to the start of the line, so the counter overwrites itself;
            # end="" replaces print's default newline
            print("\r[{}] processing [{}/{}]".format(cla, index + 1, num), end="")
        print()

    print("processing done!")


if __name__ == '__main__':
    main()
```

Running the script produces the split training and validation sets.
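The per-class split can be separated from the file copying into a pure function, which makes the 90/10 arithmetic easy to check. This is a minimal sketch; `split_names` and the `img_00000.jpg` file names are hypothetical, and it uses a local `random.Random` instead of the global seed so repeated calls give the same split.

```python
import random


def split_names(names, split_rate=0.1, seed=0):
    """Hypothetical helper: split file names into (train, val) like split_data.py does per class."""
    rng = random.Random(seed)                      # local RNG, leaves the global seed alone
    ordered = sorted(names)                        # independent of os.listdir() order
    val = set(rng.sample(ordered, k=int(len(ordered) * split_rate)))
    train = [n for n in ordered if n not in val]   # everything not sampled goes to training
    return train, sorted(val)


# One class with 10,000 images -> 9,000 for training, 1,000 for validation
names = ["img_{:05d}.jpg".format(i) for i in range(10000)]
train, val = split_names(names)
print(len(train), len(val))  # 9000 1000
```

Because `int()` truncates, a class whose size is not a multiple of 10 gets slightly fewer than 10% validation images.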

2. Model

- model.py: defines the ResNet network

```python
import torch.nn as nn
import torch


# Residual block used by ResNet18/34: two 3x3 convolutions
class BasicBlock(nn.Module):
    # Output channels = out_channel * expansion; this block keeps its width, so 1
    expansion = 1

    # __init__(): declare the layers of the block
    # downsample=None: identity shortcut (solid line); otherwise a projection
    # shortcut (dashed line) that changes the shape of x.
    # **kwargs absorbs groups/width_per_group, which only Bottleneck uses
    def __init__(self, in_channel, out_channel, stride=1, downsample=None, **kwargs):
        super(BasicBlock, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                               kernel_size=3, stride=stride, padding=1, bias=False)
        # Batch normalization
        self.bn1 = nn.BatchNorm2d(out_channel)
        # ReLU activation
        self.relu = nn.ReLU()
        self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,
                               kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channel)
        self.downsample = downsample

    # forward(): defines how the layers are connected
    def forward(self, x):
        # Keep the input for the shortcut branch
        identity = x
        # Projection shortcut: reshape x to match the main branch
        if self.downsample is not None:
            identity = self.downsample(x)

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        # Add the main branch and the shortcut branch, then activate
        out += identity
        out = self.relu(out)

        return out


# Residual block used by ResNet50/101/152: 1x1 -> 3x3 -> 1x1 convolutions
class Bottleneck(nn.Module):
    # The last 1x1 conv expands the channels 4x, e.g. 64 * 4 = 256
    expansion = 4

    # downsample=None: identity shortcut; otherwise a projection that changes
    # the channel count (and possibly the spatial size) of x
    def __init__(self, in_channel, out_channel, stride=1, downsample=None,
                 groups=1, width_per_group=64):
        super(Bottleneck, self).__init__()

        # Width of the 3x3 conv (equals out_channel for the standard ResNet)
        width = int(out_channel * (width_per_group / 64.)) * groups

        # 1x1 conv: reduce the channels
        self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=width,
                               kernel_size=1, stride=1, bias=False)
        self.bn1 = nn.BatchNorm2d(width)
        # 3x3 conv: carries the stride, so downsampling happens here
        self.conv2 = nn.Conv2d(in_channels=width, out_channels=width, groups=groups,
                               kernel_size=3, stride=stride, bias=False, padding=1)
        self.bn2 = nn.BatchNorm2d(width)
        # 1x1 conv: expand the channels by self.expansion
        self.conv3 = nn.Conv2d(in_channels=width, out_channels=out_channel * self.expansion,
                               kernel_size=1, stride=1, bias=False)
        self.bn3 = nn.BatchNorm2d(out_channel * self.expansion)
        # ReLU activation
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample

    def forward(self, x):
        # Keep the input for the shortcut branch
        identity = x
        if self.downsample is not None:
            identity = self.downsample(x)

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)
        # Add the main branch and the shortcut branch, then activate
        out += identity
        out = self.relu(out)

        return out


class ResNet(nn.Module):
    def __init__(self,
                 block,
                 blocks_num,
                 num_classes=4,
                 include_top=True,
                 groups=1,
                 width_per_group=64):
        super(ResNet, self).__init__()
        self.include_top = include_top
        # The stem (conv1 + maxpool) outputs 64 channels, so layer1 receives 64
        self.in_channel = 64

        self.groups = groups
        self.width_per_group = width_per_group

        self.conv1 = nn.Conv2d(3, self.in_channel, kernel_size=7, stride=2,
                               padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(self.in_channel)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        # block: BasicBlock or Bottleneck; blocks_num[i]: number of blocks in stage i.
        # layer1 uses stride 1 (maxpool already downsampled); layer2-4 halve H and W
        self.layer1 = self._make_layer(block, 64, blocks_num[0])
        self.layer2 = self._make_layer(block, 128, blocks_num[1], stride=2)
        self.layer3 = self._make_layer(block, 256, blocks_num[2], stride=2)
        self.layer4 = self._make_layer(block, 512, blocks_num[3], stride=2)
        if self.include_top:
            # Adaptive average pooling to output size (1, 1); channel count unchanged
            self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
            # Fully connected classifier
            self.fc = nn.Linear(512 * block.expansion, num_classes)
        # self.modules() (inherited from nn.Module) iterates over every submodule
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                # Kaiming (He) normal init for ReLU networks. mode='fan_out' preserves the
                # variance of gradients in the backward pass ('fan_in' would preserve the
                # variance of activations in the forward pass)
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

    # Build one stage made of block_num residual blocks.
    # channel: base channel count of the stage; the stage outputs channel * block.expansion
    def _make_layer(self, block, channel, block_num, stride=1):
        downsample = None
        # A projection shortcut is needed when the spatial size or channel count changes
        if stride != 1 or self.in_channel != channel * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.in_channel, channel * block.expansion, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(channel * block.expansion))

        layers = []
        # Only the first block of the stage downsamples
        layers.append(block(self.in_channel,
                            channel,
                            downsample=downsample,
                            stride=stride,
                            groups=self.groups,
                            width_per_group=self.width_per_group))
        self.in_channel = channel * block.expansion

        for _ in range(1, block_num):
            layers.append(block(self.in_channel,
                                channel,
                                groups=self.groups,
                                width_per_group=self.width_per_group))
        # nn.Sequential chains the blocks in order
        return nn.Sequential(*layers)

    def forward(self, x):
        # Stem shared by every ResNet variant
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)
        # Residual stages (their depth depends on the variant)
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        if self.include_top:
            x = self.avgpool(x)
            x = torch.flatten(x, 1)
            x = self.fc(x)

        return x


# block: BasicBlock or Bottleneck; blocks_num: residual blocks per stage, e.g. [3, 4, 6, 3].
# For reference, ResNet18 = BasicBlock [2, 2, 2, 2] and ResNet152 = Bottleneck [3, 8, 36, 3]
# 34-layer ResNet
def resnet34(num_classes=1000, include_top=True):
    # https://download.pytorch.org/models/resnet34-333f7ec4.pth
    return ResNet(BasicBlock, [3, 4, 6, 3], num_classes=num_classes, include_top=include_top)


# 50-layer ResNet
def resnet50(num_classes=1000, include_top=True):
    # https://download.pytorch.org/models/resnet50-19c8e357.pth
    return ResNet(Bottleneck, [3, 4, 6, 3], num_classes=num_classes, include_top=include_top)


# 101-layer ResNet
def resnet101(num_classes=1000, include_top=True):
    # https://download.pytorch.org/models/resnet101-5d3b4d8f.pth
    return ResNet(Bottleneck, [3, 4, 23, 3], num_classes=num_classes, include_top=include_top)
```
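Where the names "34", "50", "101" come from, and how a 224x224 input shrinks to the 7x7 map that `avgpool` reduces to 1x1, can both be checked with plain arithmetic. The sketch below assumes the usual counting convention (conv1, every conv inside the residual blocks, and the final fc; shortcut projections not counted); the helper names are hypothetical.

```python
def conv_out(size, kernel, stride, padding):
    # Output size of a conv or pooling layer: floor((H + 2p - k) / s) + 1
    return (size + 2 * padding - kernel) // stride + 1


def resnet_depth(blocks_num, convs_per_block):
    # conv1 + convs inside all residual blocks + final fc (shortcuts not counted)
    return 1 + convs_per_block * sum(blocks_num) + 1


def feature_map_sizes(input_size=224):
    sizes = {}
    s = conv_out(input_size, kernel=7, stride=2, padding=3)   # conv1
    sizes["conv1"] = s
    s = conv_out(s, kernel=3, stride=2, padding=1)            # maxpool
    sizes["maxpool"] = s
    sizes["layer1"] = s                                       # stride 1: unchanged
    for name in ("layer2", "layer3", "layer4"):
        # the first block of each stage downsamples with a 3x3, stride-2, padding-1 conv
        s = conv_out(s, kernel=3, stride=2, padding=1)
        sizes[name] = s
    return sizes


print(resnet_depth([3, 4, 6, 3], 2))   # BasicBlock -> 34
print(resnet_depth([3, 4, 6, 3], 3))   # Bottleneck -> 50
print(resnet_depth([3, 4, 23, 3], 3))  # Bottleneck -> 101
print(feature_map_sizes())
# {'conv1': 112, 'maxpool': 56, 'layer1': 56, 'layer2': 28, 'layer3': 14, 'layer4': 7}
```

The same formula with kernel 1, stride 2, padding 0 also gives 28, 14, and 7, which is why the 1x1 projection shortcut lines up with the main branch before `out += identity`.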

3. Training

- train.py: loads the dataset, trains the network, reports loss and accuracy, and saves the best weights

```python
import os
import sys
import json

import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import transforms, datasets
from tqdm import tqdm
# Train ResNet34
from model import resnet34


def main():
    # Use the first NVIDIA GPU if available, otherwise the CPU
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    print("using {} device.".format(device))

    data_transform = {
        # Training: Compose() chains several transforms together
        "train": transforms.Compose([
            # Crop a random region with random size and aspect ratio, then resize it to 224x224
            transforms.RandomResizedCrop(224),
            # Flip the image horizontally with probability 0.5
            transforms.RandomHorizontalFlip(),
            # Convert the PIL image to a float tensor with values in [0, 1]
            transforms.ToTensor(),
            # Standardize each channel with the ImageNet mean and std: (x - mean) / std
            transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]),
        # Validation: deterministic resize + center crop
        "val": transforms.Compose([transforms.Resize(256),
                                   transforms.CenterCrop(224),
                                   transforms.ToTensor(),
                                   transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])}

    # The dataset is ./data, the folder produced by split_data.py
    data_root = os.path.abspath(os.getcwd())
    image_path = os.path.join(data_root, "data")
    # Raise AssertionError with this message if the folder is missing
    assert os.path.exists(image_path), "{} path does not exist.".format(image_path)
    train_dataset = datasets.ImageFolder(root=os.path.join(image_path, "train"),
                                         transform=data_transform["train"])
    # Number of training images
    train_num = len(train_dataset)

    # class_to_idx maps folder name -> index, e.g. {'<class_a>': 0, '<class_b>': 1, ...};
    # ImageFolder numbers the classes in sorted order of the folder names
    class_to_idx = train_dataset.class_to_idx
    # Invert it to index -> class name so predicted indices can be turned back into names
    cla_dict = dict((val, key) for key, val in class_to_idx.items())
    # Save the mapping as JSON for the prediction script
    json_str = json.dumps(cla_dict, indent=4)
    with open('class_indices.json', 'w') as json_file:
        json_file.write(json_str)

    # Load 16 images per batch
    batch_size = 16
    # Number of loader worker processes: no more than the CPU count, the batch size, or 8
    nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8])
    print('Using {} dataloader workers every process'.format(nw))
    # DataLoader wraps the dataset into batches; shuffle=True reshuffles every epoch,
    # num_workers=0 would load everything in the main process
    train_loader = torch.utils.data.DataLoader(train_dataset,
                                               batch_size=batch_size, shuffle=True,
                                               num_workers=nw)
    # Validation set
    validate_dataset = datasets.ImageFolder(root=os.path.join(image_path, "val"),
                                            transform=data_transform["val"])
    val_num = len(validate_dataset)
    validate_loader = torch.utils.data.DataLoader(validate_dataset,
                                                  batch_size=batch_size, shuffle=False,
                                                  num_workers=nw)

    print("using {} images for training, {} images for validation.".format(train_num,
                                                                           val_num))

    # One output per class (4 weather classes) instead of the default 1000
    net = resnet34(num_classes=len(class_to_idx))
    net.to(device)
    # Optional transfer learning from ImageNet weights: build the default 1000-class
    # model, load the weights, then replace the last layer with a 4-class one
    # net = resnet34()
    # model_weight_path = "./resnet34-pre.pth"
    # assert os.path.exists(model_weight_path), "file {} does not exist.".format(model_weight_path)
    # net.load_state_dict(torch.load(model_weight_path, map_location='cpu'))
    # net.fc = nn.Linear(net.fc.in_features, len(class_to_idx))
    # net.to(device)

    # Cross-entropy loss for multi-class classification
    loss_function = nn.CrossEntropyLoss()

    # Only optimize parameters that require gradients
    params = [p for p in net.parameters() if p.requires_grad]
    # Adam optimizer; lr is the step size (PyTorch's default is 1e-3)
    optimizer = optim.Adam(params, lr=0.0001)

    # Number of epochs (the ResNet34 run in section 5 used 140)
    epochs = 3
    # Best validation accuracy so far
    best_acc = 0.0
    # Where the best weights are saved
    save_path = './resNet34.pth'
    train_steps = len(train_loader)
    for epoch in range(epochs):
        # Training phase
        net.train()
        running_loss = 0.0
        # tqdm shows a progress bar over the batches
        train_bar = tqdm(train_loader, file=sys.stdout)
        # enumerate() yields (step, (images, labels)) for each batch
        for step, data in enumerate(train_bar):
            images, labels = data
            # Forward pass
            logits = net(images.to(device))
            loss = loss_function(logits, labels.to(device))
            # Backward pass: clear old gradients, compute new ones, update the weights
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            # item() turns the 0-dim loss tensor into a Python float
            running_loss += loss.item()

            # Progress bar prefix; .3f keeps three decimal places
            train_bar.desc = "train epoch[{}/{}] loss:{:.3f}".format(epoch + 1,
                                                                     epochs,
                                                                     loss.item())

        # Validation phase
        # eval(): BatchNorm uses its running statistics and Dropout is disabled
        net.eval()
        acc = 0.0
        # no_grad(): no gradient tracking during validation, saving memory and time
        with torch.no_grad():
            val_bar = tqdm(validate_loader, file=sys.stdout)
            for val_data in val_bar:
                val_images, val_labels = val_data
                outputs = net(val_images.to(device))
                # torch.max(outputs, dim=1) returns (row maxima, row argmax indices);
                # the indices are the predicted classes
                predict_y = torch.max(outputs, dim=1)[1]
                # Element-wise comparison with the labels, then count the matches
                acc += torch.eq(predict_y, val_labels.to(device)).sum().item()

                val_bar.desc = "valid epoch[{}/{}]".format(epoch + 1,
                                                           epochs)

        val_accurate = acc / val_num
        print('[epoch %d] train_loss: %.3f val_accuracy: %.3f' %
              (epoch + 1, running_loss / train_steps, val_accurate))

        # Keep the weights with the best validation accuracy;
        # state_dict() is an OrderedDict mapping parameter names to tensors
        if val_accurate > best_acc:
            best_acc = val_accurate
            torch.save(net.state_dict(), save_path)

    print('Finished Training')


if __name__ == '__main__':
    main()
```

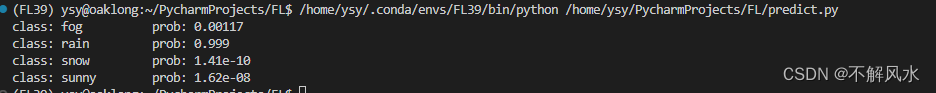
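`transforms.Normalize` with the ImageNet mean and standard deviation standardizes each channel; it does not squeeze pixel values into [-1, 1]. Since `ToTensor()` yields values in [0, 1], the extremes of each channel after `(x - mean) / std` come from x = 0 and x = 1. A minimal sketch of that arithmetic (the helper name is hypothetical):

```python
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]


def normalized_range(mean, std):
    # ToTensor() maps pixels to [0, 1]; Normalize computes (x - mean) / std per channel,
    # so the smallest value comes from x = 0 and the largest from x = 1
    return [((0.0 - m) / s, (1.0 - m) / s) for m, s in zip(mean, std)]


for channel, (lo, hi) in zip("RGB", normalized_range(MEAN, STD)):
    print("{}: [{:.3f}, {:.3f}]".format(channel, lo, hi))
# R: [-2.118, 2.249]
# G: [-2.036, 2.429]
# B: [-1.804, 2.640]
```

Mapping to [-1, 1] would instead require `Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])`; the ImageNet statistics are the usual choice when the network may later be initialized from ImageNet-pretrained weights.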

4. Testing

- predict.py: runs the trained classifier on your own data


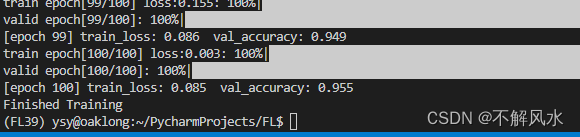
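A prediction script typically loads the saved `resNet34.pth` weights, applies the validation transform to one image, and maps the highest-scoring output back to a class name through `class_indices.json`. The post-processing half can be sketched in plain Python. Two details matter: `ImageFolder` numbers classes in sorted (alphabetical) order of the folder names, and JSON turns the integer keys into strings, so the lookup must use `str(idx)`. The folder names `fog`, `rain`, `snow`, `sunny` and all helper names here are hypothetical, and the logits stand in for real network output.

```python
import json
import math


def build_class_to_idx(folder_names):
    # ImageFolder sorts the class folder names and numbers them 0, 1, 2, ...
    return {name: i for i, name in enumerate(sorted(folder_names))}


def softmax(logits):
    # Subtract the max logit for numerical stability
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decode_prediction(logits, class_indict):
    # class_indict is the dict loaded from class_indices.json; its keys are strings
    probs = softmax(logits)
    idx = max(range(len(probs)), key=probs.__getitem__)
    return class_indict[str(idx)], probs[idx]


# Hypothetical folder names for the four weather classes
class_to_idx = build_class_to_idx(["sunny", "rain", "snow", "fog"])
cla_dict = dict((val, key) for key, val in class_to_idx.items())
# Round-trip through JSON, as train.py writes it and a predict script reads it
class_indict = json.loads(json.dumps(cla_dict, indent=4))
print(class_indict)  # {'0': 'fog', '1': 'rain', '2': 'snow', '3': 'sunny'}

name, prob = decode_prediction([0.2, 2.5, -1.0, 0.3], class_indict)
print(name)  # rain
```

Looking up `class_indict[idx]` with an integer would raise `KeyError`, which is an easy mistake after the JSON round trip.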

5. Model Comparison

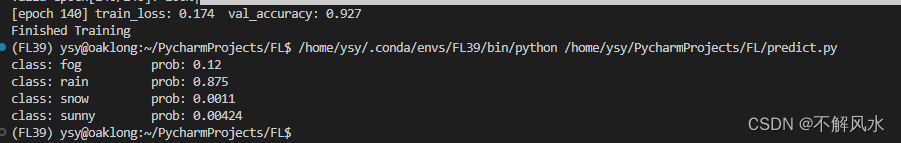

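ResNet34

Trained for 140 epochs, although validation accuracy had already reached 0.9 by around epoch 50; after that it converged very slowly, so I decided to try a different model. Each epoch took about one minute.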

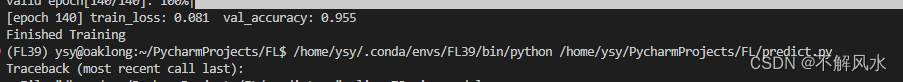

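ResNet50

About two minutes per epoch.

ResNet50 did perform better than ResNet34, but it had the same problem: it quickly converged to about 0.955 validation accuracy and then oscillated there without improving further.

At this point I still wanted to try a different model, so I switched to ResNet152.

ResNet152

Now I don't think the model is the problem; something is probably wrong with the dataset, so I am looking for a new one. As for the model, ResNet50 is enough: ResNet152 takes too long to train, about 5 minutes per epoch.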
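One way to test the dataset hypothesis before collecting new data is a per-class breakdown of the validation errors: if accuracy stalls near the same value for every depth and most mistakes fall between one pair of classes (for example fog versus rain, a hypothetical case), the labels or the images are the more likely culprit. A minimal sketch in plain Python, assuming the true labels and predicted indices have been collected into integer lists during validation (e.g. from `val_labels` and `predict_y`); the helper names and toy data are hypothetical.

```python
def confusion_matrix(labels, preds, num_classes):
    # matrix[t][p] counts samples whose true class is t and predicted class is p
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(labels, preds):
        matrix[t][p] += 1
    return matrix


def per_class_recall(matrix):
    # Fraction of each true class that was predicted correctly
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(matrix)]


# Toy data: 4 classes, 2 validation samples each
labels = [0, 0, 1, 1, 2, 2, 3, 3]
preds = [0, 1, 1, 1, 2, 0, 3, 3]
matrix = confusion_matrix(labels, preds, num_classes=4)
recall = per_class_recall(matrix)
for row in matrix:
    print(row)
print(recall)  # [0.5, 1.0, 0.5, 1.0]
```

Large off-diagonal entries for a single pair of classes suggest inspecting those images by hand for mislabeled or ambiguous samples.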

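6. Training on a New Dataset

To be continued.

Disclaimer: This article was contributed by a user and does not represent the position of the wpsshop blog. Copyright belongs to the original author, and the site assumes no legal liability. If you find infringing content, please contact the site. When reposting, please cite the source: https://www.wpsshop.cn/w/2023面试高手/article/detail/581958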
