
HBase Cluster Deployment (Fully Distributed) and Analysis of Problems Encountered During Deployment


Environment

- OS: Ubuntu 16.04 LTS

- Java: open-jdk1.8.0_111 (1.7+ required)

- Hadoop: hadoop-2.7.3

- Zookeeper: zookeeper-3.4.9

- Hbase: hbase-1.2.4

Cluster plan

| IP | Hostname | Master | RegionServer |
|---|---|---|---|
| 10.100.3.88 | Master | yes | no |
| 10.100.3.89 | Slave1 | no | yes |
| 10.100.3.90 | Slave2 | no | yes |

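Download and extract

Download the latest stable HBase release from the official website (at the time of writing, hbase-1.2.4-bin.tar.gz, which supports Hadoop 2.7.1+).

Extract it:

tar zxf hbase-1.2.4-bin.tar.gz -C /your/hbase/path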

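Configure HBase

1) Set the HBase environment variables (for example in ~/.bashrc or /etc/profile). The scripts such as start-hbase.sh live in $HBASE_HOME/bin, so that is the directory to add to PATH:

export HBASE_HOME=/xxx/hbase

export PATH=$PATH:$HBASE_HOME/bin

Apply the changes by sourcing whichever file you edited:

source ~/.bashrc

source /etc/profile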
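Every time one of these files is sourced again, a plain `export PATH=$PATH:...` line appends the same directory once more. The following is a minimal POSIX sh sketch of an idempotent alternative. `path_append` is a hypothetical helper, not part of HBase, and /opt/hbase (the path that appears in the logs later in this article) is assumed as HBASE_HOME.

```shell
#!/bin/sh
# Hypothetical helper: append a directory to PATH only if it is not already
# listed, so re-sourcing ~/.bashrc or /etc/profile does not keep growing PATH.
path_append() {
    case ":$PATH:" in
        *":$1:"*) ;;                        # already present: leave PATH as is
        *) PATH="${PATH:+$PATH:}$1" ;;      # append (no leading ':' if PATH was empty)
    esac
    export PATH
}

# Example, assuming HBase was extracted to /opt/hbase
HBASE_HOME=/opt/hbase
export HBASE_HOME
path_append "$HBASE_HOME/bin"
```

Calling `path_append` twice with the same directory leaves PATH unchanged the second time.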

2) Edit hbase-env.sh (in the hbase/conf directory) and set JAVA_HOME:

#set jdk environment

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64
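A wrong JAVA_HOME here only shows up once the daemons fail to start, so it can help to check the path first. This is a minimal POSIX sh sketch; `is_java_home` is a hypothetical helper, and treating "contains an executable bin/java" as the validity test is an assumption, not an HBase rule.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the given directory looks like a JDK/JRE
# home, i.e. it contains an executable bin/java.
is_java_home() {
    [ -n "$1" ] && [ -x "$1/bin/java" ]
}

# Example check against the path used in this article
if is_java_home /usr/lib/jvm/java-8-openjdk-amd64; then
    echo "JAVA_HOME looks valid"
else
    echo "no executable bin/java under that path" >&2
fi
```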

3) Edit conf/hbase-site.xml:

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://Master:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>Master,Slave1,Slave2</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/opt/zookeeper/data</value>

</property>

</configuration>


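Notes: the first property sets the HBase root directory on HDFS; its host and port must match the Hadoop cluster's core-site.xml (fs.defaultFS). The second property runs HBase in fully distributed mode. The third lists the hosts that make up the ZooKeeper quorum. The fourth is the ZooKeeper data directory; if you use a standalone ZooKeeper (rather than the one bundled with HBase), it must match dataDir in ZooKeeper's zoo.cfg.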
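The requirement that hbase.rootdir agree with core-site.xml can be checked before starting anything. Below is a minimal POSIX sh sketch. `get_prop`, `uri_authority` and `check_rootdir` are hypothetical helpers, and the parser assumes each `<name>` and `<value>` element sits on a single line, as in the file above; it is not a general XML parser.

```shell
#!/bin/sh
# Print the <value> of property $2 from Hadoop-style XML file $1.
# Assumes <name>...</name> and <value>...</value> are each on one line.
get_prop() {
    awk -v want="$2" '
        /<name>/ {
            n = $0
            sub(/.*<name>[ \t]*/, "", n)
            sub(/[ \t]*<\/name>.*/, "", n)
            found = (n == want)          # remember whether this property matches
        }
        found && /<value>/ {
            v = $0
            sub(/.*<value>[ \t]*/, "", v)
            sub(/[ \t]*<\/value>.*/, "", v)
            print v
            exit
        }
    ' "$1"
}

# Reduce a URI such as hdfs://Master:9000/hbase to its authority (Master:9000).
uri_authority() {
    printf '%s\n' "$1" | sed -e 's#^[A-Za-z][A-Za-z0-9+.-]*://##' -e 's#/.*$##'
}

# Succeed if hbase.rootdir ($1 = hbase-site.xml) points at the same NameNode
# host:port as fs.defaultFS ($2 = core-site.xml).
check_rootdir() {
    rootdir=$(get_prop "$1" hbase.rootdir)
    defaultfs=$(get_prop "$2" fs.defaultFS)
    if [ -n "$rootdir" ] && [ "$(uri_authority "$rootdir")" = "$(uri_authority "$defaultfs")" ]; then
        return 0
    fi
    echo "hbase.rootdir=$rootdir does not match fs.defaultFS=$defaultfs" >&2
    return 1
}
```

On a Hadoop 2 installation the call would look like `check_rootdir "$HBASE_HOME/conf/hbase-site.xml" "$HADOOP_HOME/etc/hadoop/core-site.xml"`.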

4) Configure conf/regionservers:

Slave1

Slave2

The regionservers file lists the hosts that run HRegionServer. Only the two slave nodes are listed here; the Master node runs HMaster.

5) Once all files are configured, copy the hbase directory to the slave nodes Slave1 and Slave2:

scp -r hbase xxx@Slave1:~

scp -r hbase xxx@Slave2:~
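Since conf/regionservers already lists the slave hosts, the copy step can loop over that file instead of naming each host by hand. A minimal POSIX sh sketch: `distribute_hbase` and the `DRY_RUN` switch are hypothetical, and `xxx` is the article's placeholder user name.

```shell
#!/bin/sh
# Hypothetical helper: copy directory $1 to every host listed in the
# regionservers-style file $2 (one hostname per line; '#' comments and blank
# lines are skipped), logging in as user $3.
# With DRY_RUN=1 it only prints the scp commands instead of running them.
distribute_hbase() {
    src=$1 hosts_file=$2 remote_user=$3
    for host in $(sed -e 's/#.*$//' -e '/^[[:space:]]*$/d' "$hosts_file"); do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "scp -r $src $remote_user@$host:~"
        else
            scp -r "$src" "$remote_user@$host:~" || return 1
        fi
    done
}
```

For example, `DRY_RUN=1 distribute_hbase hbase hbase/conf/regionservers xxx` prints the two scp commands above; dropping `DRY_RUN=1` runs them.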

Run HBase

On Master, run:

start-all.sh #start the Hadoop HDFS and YARN clusters; HBase depends on HDFS, so HDFS must be started first
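start-all.sh returns once the daemons have been launched, not once they are ready. A minimal POSIX sh sketch that waits for a daemon to appear in jps before moving on to the next step; `wait_for_daemon` and the `JPS_CMD` override (used only to substitute a fake jps when testing) are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: poll jps once per second until the daemon named $1
# appears in its second column, giving up after $2 attempts.
wait_for_daemon() {
    daemon=$1 timeout=$2 cmd=${JPS_CMD:-jps}
    i=0
    while [ "$i" -lt "$timeout" ]; do
        if $cmd 2>/dev/null | awk -v d="$daemon" '$2 == d { f = 1 } END { exit !f }'; then
            return 0
        fi
        sleep 1
        i=$((i + 1))
    done
    echo "$daemon did not appear within $timeout attempts" >&2
    return 1
}
```

On Master, `start-all.sh && wait_for_daemon NameNode 60` only succeeds after the NameNode process is visible; the same helper with `QuorumPeerMain` can follow `zkServer.sh start` on each node.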

On every node, run:

$ZOOKEEPER_HOME/bin/zkServer.sh start #start ZooKeeper

On Master, run:

$HBASE_HOME/bin/start-hbase.sh #start the HBase cluster

At this point the startup reported errors:

Master: mkdir: cannot create directory ‘/opt/hbase/logs’: Permission denied

Slave2: starting zookeeper, logging to /opt/hbase/logs/hbase-jhcomn-zookeeper-Slave2.out

Master: starting zookeeper, logging to /opt/hbase/logs/hbase-jhcomn-zookeeper-Master.out

Master: /opt/hbase/bin/hbase-daemon.sh: line 189: /opt/hbase/logs/hbase-jhcomn-zookeeper-Master.out: No such file or directory

Master: head: cannot open '/opt/hbase/logs/hbase-jhcomn-zookeeper-Master.out' for reading: No such file or directory

Slave1: starting zookeeper, logging to /opt/hbase/logs/hbase-jhcomn-zookeeper-Slave1.out

mkdir: cannot create directory ‘/opt/hbase/logs’: Permission denied

starting master, logging to /opt/hbase/logs/hbase-jhcomn-master-Master.out

/opt/hbase/bin/hbase-daemon.sh: line 189: /opt/hbase/logs/hbase-jhcomn-master-Master.out: No such file or directory

head: cannot open '/opt/hbase/logs/hbase-jhcomn-master-Master.out' for reading: No such file or directory

Slave2: starting regionserver, logging to /opt/hbase/logs/hbase-jhcomn-regionserver-Slave2.out

Slave2: OpenJDK 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Slave2: OpenJDK 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

Slave1: starting regionserver, logging to /opt/hbase/logs/hbase-jhcomn-regionserver-Slave1.out

Slave1: OpenJDK 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Slave1: OpenJDK 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0


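The output reveals two problems:

- 1) Insufficient permissions on /opt/hbase: the directory and everything under it were owned by root:root, so the logs directory could not be created. Fix: change the owner and group of the directory with "sudo chown -R xxx:xxx /opt/hbase".

- 2) The ZooKeeper log on the Slave node (hbase-jhcomn-zookeeper-Slave1.out) shows: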

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/hbase/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

java.net.BindException: Address already in use

at sun.nio.ch.Net.bind0(Native Method)

at sun.nio.ch.Net.bind(Net.java:433)

at sun.nio.ch.Net.bind(Net.java:425)

at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:223)

at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:74)

at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:67)

at org.apache.zookeeper.server.NIOServerCnxnFactory.configure(NIOServerCnxnFactory.java:95)

at org.apache.zookeeper.server.quorum.QuorumPeerMain.runFromConfig(QuorumPeerMain.java:130)

at org.apache.hadoop.hbase.zookeeper.HQuorumPeer.runZKServer(HQuorumPeer.java:89)

at org.apache.hadoop.hbase.zookeeper.HQuorumPeer.main(HQuorumPeer.java:79)


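The second error is caused by the ZooKeeper port already being in use. With HBASE_MANAGES_ZK at its default, start-hbase.sh also launches HBase's bundled ZooKeeper (HQuorumPeer in the stack trace), but our own standalone ZooKeeper ensemble was already running, so the bundled instance could not bind the port and failed to start.

Fix: let HBase use the standalone ZooKeeper ensemble instead of the bundled one. On every node, edit $HBASE_HOME/conf/hbase-env.sh: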

# Tell HBase whether it should manage it's own instance of Zookeeper or not.

# export HBASE_MANAGES_ZK=true

export HBASE_MANAGES_ZK=false

Setting HBASE_MANAGES_ZK to false stops HBase from starting its bundled ZooKeeper.
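Both failures can be caught on each node before running start-hbase.sh. This is a minimal POSIX sh sketch; `check_writable`, `manages_zk` and `check_zk_conflict` are hypothetical helpers, not HBase tools. It assumes the value is set with an unquoted or double-quoted `export HBASE_MANAGES_ZK=...` line and relies on HBase's start scripts treating an unset value as true.

```shell
#!/bin/sh
# 1) start-hbase.sh must be able to create $HBASE_HOME/logs, so the HBase
#    directory has to be writable by the user launching it.
check_writable() {
    if [ -w "$1" ]; then
        return 0
    fi
    echo "$1 is not writable by $(id -un); try: sudo chown -R $(id -un):$(id -gn) $1" >&2
    return 1
}

# 2) Effective HBASE_MANAGES_ZK in an hbase-env.sh file: the last uncommented
#    export wins; an unset value counts as true.
manages_zk() {
    v=$(sed -n 's/^[[:space:]]*export[[:space:]]\{1,\}HBASE_MANAGES_ZK="\{0,1\}\([A-Za-z]*\).*/\1/p' "$1" | tail -n 1)
    echo "${v:-true}"
}

# Fail if a standalone ZooKeeper (QuorumPeerMain in the jps output read from
# stdin) is already running while HBase would still start its bundled one.
check_zk_conflict() {
    if [ "$(manages_zk "$1")" = true ] &&
        awk '$2 == "QuorumPeerMain" { f = 1 } END { exit !f }'; then
        echo "QuorumPeerMain is running but HBASE_MANAGES_ZK is not false in $1" >&2
        return 1
    fi
    return 0
}
```

On each node this would be run as `check_writable "$HBASE_HOME" && jps | check_zk_conflict "$HBASE_HOME/conf/hbase-env.sh"`.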

Finally, start HBase again:

xxx@Master:/opt/hbase$ ./bin/start-hbase.sh

starting master, logging to /opt/hbase/logs/hbase-jhcomn-master-Master.out

OpenJDK 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

OpenJDK 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

Slave2: starting regionserver, logging to /opt/hbase/logs/hbase-jhcomn-regionserver-Slave2.out

Slave2: OpenJDK 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Slave2: OpenJDK 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

Slave1: starting regionserver, logging to /opt/hbase/logs/hbase-jhcomn-regionserver-Slave1.out

Slave1: OpenJDK 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Slave1: OpenJDK 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

Check the running processes with jps:

Master node:

2545 QuorumPeerMain

1746 NameNode

2164 ResourceManager

2486 JobHistoryServer

3512 HMaster

3753 Jps

1978 SecondaryNameNode

Slave nodes:

2688 Jps

1857 QuorumPeerMain

1699 NodeManager

1557 DataNode
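The jps listings can also be checked mechanically on each node. A minimal POSIX sh sketch: `expected_daemons` and `check_role` are hypothetical helpers, and the per-role daemon lists are assumptions based on the cluster plan and the start-up output in this article (JobHistoryServer is treated as optional).

```shell
#!/bin/sh
# Daemons assumed to be expected on each role in this cluster plan.
expected_daemons() {
    case $1 in
        master) echo "NameNode SecondaryNameNode ResourceManager QuorumPeerMain HMaster" ;;
        slave)  echo "DataNode NodeManager QuorumPeerMain HRegionServer" ;;
        *)      return 1 ;;
    esac
}

# Read jps output on stdin, print every expected daemon that is missing,
# and succeed only if none are missing.
check_role() {
    expected=$(expected_daemons "$1") || return 2
    running=$(awk '{ print $2 }')          # second jps column = main class name
    status=0
    for d in $expected; do
        if ! printf '%s\n' "$running" | grep -qx "$d"; then
            echo "missing: $d"
            status=1
        fi
    done
    return $status
}
```

Run `jps | check_role master` on Master and `jps | check_role slave` on each Slave. Because start-hbase.sh launches a regionserver on Slave1 and Slave2, HRegionServer is expected on the slaves once HBase is up.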

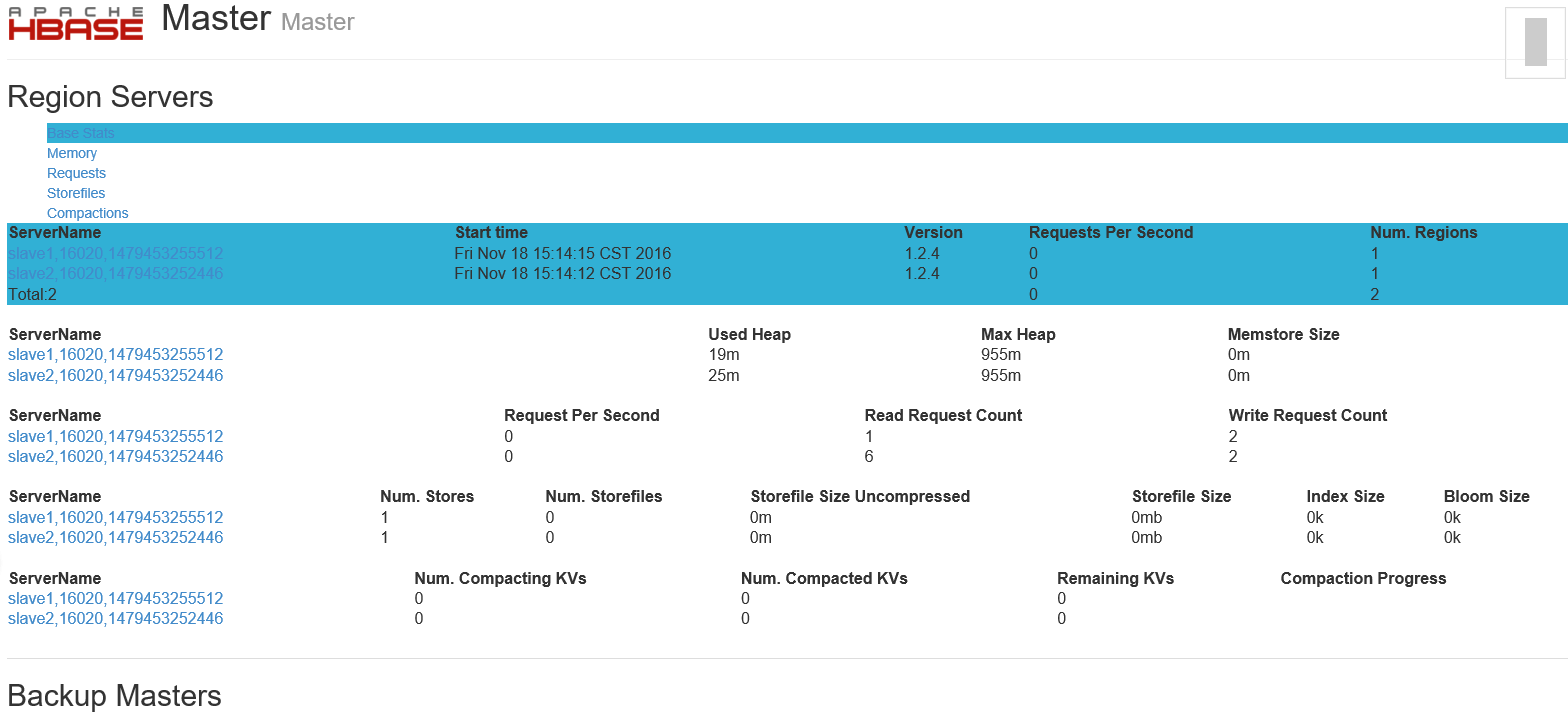

Verify the HBase installation

Open port 16010 on the master node in a browser (http://10.100.3.88:16010) to view the HBase Web UI.