- 1语音算法论文中frame-level,segment-level,utterance-level fearure

- 2阿里云产品介绍_阿里云产品 其他云

- 3情感分析的未来趋势:AI与人工智能的融合

- 4应用程序开发(ArkTS)_arkts单例模式

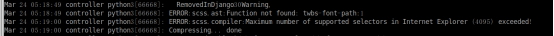

- 5Python之Django 基本使用_django python

- 6GPT-4:模型架构、训练方法与 Fine-tuning 详解_gpt4 finetune

- 7python 之jieba分词

- 8数据增强技术在智能客服中的应用:了解如何将数据集用于训练和评估智能客服模型_电商客服机器人训练数据集

- 9SAP 将smartforms的报表转成PDF_abap编程 smartforms 自动打印为pdf

- 10百度 文心一言 sdk 试用_wenxin-sdk-java

Summary of problems encountered when deploying and using OpenStack


Error 01

Applying 10.130.0.148_controller.pp

10.130.0.148_controller.pp: [ ERROR ]

Applying Puppet manifests [ ERROR ]

ERROR : Error appeared during Puppet run: 10.130.0.148_controller.pp

Error: /Stage[main]/Gnocchi::Db::Sync/Exec[gnocchi-db-sync]: Failed to call refresh: gnocchi-upgrade --config-file /etc/gnocchi/gnocchi.conf returned 1 instead of one of [0]

You will find full trace in log /var/tmp/packstack/20210308-151630-LdDNev/manifests/10.130.0.148_controller.pp.log

Please check log file /var/tmp/packstack/20210308-151630-LdDNev/openstack-setup.log for more information

Additional information:

- A new answerfile was created in: /root/packstack-answers-20210308-151632.txt

- Time synchronization installation was skipped. Please note that unsynchronized time on server instances might be problem for some OpenStack components.

- Warning: NetworkManager is active on 10.130.0.148. OpenStack networking currently does not work on systems that have the Network Manager service enabled.

- File /root/keystonerc_admin has been created on OpenStack client host 10.130.0.148. To use the command line tools you need to source the file.

- To access the OpenStack Dashboard browse to http://10.130.0.148/dashboard .

Please, find your login credentials stored in the keystonerc_admin in your home directory.

Solution

Checking /var/tmp/packstack/20210308-151630-LdDNev/openstack-setup.log revealed the following messages:

Notice: /Stage[main]/Gnocchi::Db::Sync/Exec[gnocchi-db-sync]/returns: pkg_resources.DistributionNotFound: setuptools>=30.3

Error: /Stage[main]/Gnocchi::Db::Sync/Exec[gnocchi-db-sync]: Failed to call refresh: gnocchi-upgrade --config-file /etc/gnocchi/gnocchi.conf returned 1 instead of one of [0]

The setuptools package actually installed in the environment is python-setuptools-0.9.8, which does not satisfy setuptools>=30.3:

[root@controller ~]# rpm -qa | grep setuptools

python-setuptools-0.9.8-7.lns7.noarch

Install it manually:

[root@controller ~]# yum install -y python2-setuptools

Then re-run packstack --allinone.
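The failure comes down to a version requirement: gnocchi needs setuptools>=30.3, while 0.9.8 is installed. A minimal sketch of that comparison, assuming plain dotted numeric versions (real tools such as pkg_resources also handle suffixes like rc1 or post1); the function names are hypothetical:

```python
def parse_version(text):
    """Turn a dotted numeric version such as '0.9.8' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def satisfies_minimum(installed, minimum):
    """Return True if `installed` >= `minimum` (numeric components only)."""
    a, b = parse_version(installed), parse_version(minimum)
    # Pad the shorter tuple with zeros so '30.3' compares like '30.3.0'.
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


if __name__ == "__main__":
    # Versions from the error message and the rpm query above.
    print(satisfies_minimum("0.9.8", "30.3"))  # False
```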

Error 02

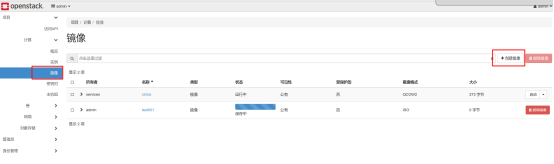

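Creating an image via Images -> Create Image fails

Cause:

Creating an image uploads a local ISO (or an image in another format) to the OpenStack server. Because this involves copying the file, passwordless SSH login from the local environment has to be set up.

Solution: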

ssh-copy-id root@10.130.0.148

Error 03

After an instance was created, it failed to start with the error:

No valid host was found. There are not enough hosts available.

Solution

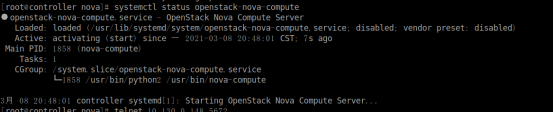

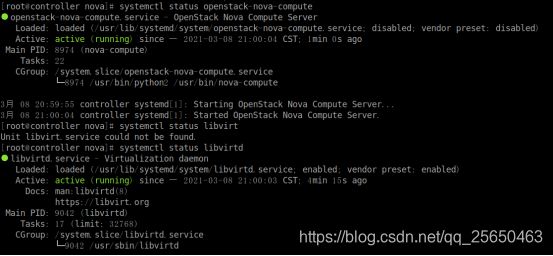

Searching online suggested that the problem might be related to openstack-nova-compute.

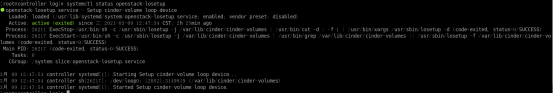

Its service status was abnormal and stayed abnormal after several restarts.

The log /var/log/nova/nova-compute.log showed: Connection to libvirt failed: error from service: CheckAuthorization: Connection is closed: libvirtError: error from service: CheckAuthorization: Connection is closed

Suspecting libvirt, I restarted libvirtd and then ran systemctl restart openstack-nova-compute.

After that, the openstack-nova-compute service started normally.
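The fix above relies on ordering: nova-compute connects to libvirt, so libvirtd is restarted first. A minimal sketch of the same two restarts driven from Python with subprocess; the unit names are the ones used in this section, and `dry_run` is a hypothetical convenience flag that only returns the commands without running them:

```python
import subprocess

# Restart order used in this section: libvirtd first, then nova-compute.
SERVICES_IN_ORDER = ("libvirtd", "openstack-nova-compute")


def restart_in_order(services=SERVICES_IN_ORDER, dry_run=False):
    """Restart systemd units in the given order, stopping at the first failure."""
    commands = [["systemctl", "restart", name] for name in services]
    if dry_run:
        return commands  # only report what would be run
    for cmd in commands:
        # check=True raises CalledProcessError if systemctl exits non-zero.
        subprocess.run(cmd, check=True)
    return commands
```

Run it as root with `restart_in_order()`; afterwards, check the service status again as described above.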

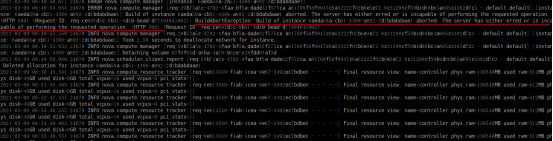

Error 04

Build of instance e0d58ee2-5738-4aa3-87da-f1a448650c7b aborted: Volume 3d6505f3-53c6-48ce-b54c-3b98f080e8e2 did not finish being created even after we waited 193 seconds or 61 attempts. And its status is downloading.

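Judging from the error, OpenStack gave up creating the instance after 61 attempts while the volume was still being created. The instance build therefore most likely failed because creating the volume took a long time and the Nova component timed out before the volume was ready.

Solution

/etc/nova/nova.conf has a parameter, block_device_allocate_retries (in the [DEFAULT] section), that controls how many times Nova retries while waiting for a block device. Increasing it extends the wait.

Its default value is 60, which corresponds to the 61 attempts in the failure message above. Setting it to a larger value, for example 300, keeps Nova from timing out while the volume is being created, which solves the problem.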
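The retry count translates into a wait time of roughly attempts × interval. The sketch below assumes that the companion option block_device_allocate_retries_interval is left at its default of 3 seconds. This is an assumption, so check your nova.conf. With the default of 60 retries this gives 61 attempts and about 183 s, which is close to the 193 seconds reported in the error above:

```python
def approx_volume_wait_seconds(retries, interval_seconds=3):
    """Estimate (attempts, seconds) nova spends waiting for a volume.

    attempts = retries + 1, matching "61 attempts" for the default of 60.
    interval_seconds=3 is an assumed default for
    block_device_allocate_retries_interval; time spent in API calls is ignored.
    """
    attempts = retries + 1
    return attempts, attempts * interval_seconds


if __name__ == "__main__":
    for retries in (60, 300):
        attempts, seconds = approx_volume_wait_seconds(retries)
        print(f"block_device_allocate_retries={retries}: {attempts} attempts, about {seconds} s")
```

With 300 retries, the estimate rises to 301 attempts and about 903 s (roughly 15 minutes).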

Error 05

Build of instance 6aeda58a-cb51-4388-a892-2d1bdabdaae1 aborted: The server has either erred or is incapable of performing the requested operation. (HTTP 500) (Request-ID: req-ce697d3c-8b41-4d4b-bead-d77494641962)

Error 06

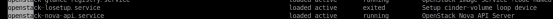

The status of openstack-losetup.service often shows exited.

https://bugs.launchpad.net/tripleo/+bug/1821309

https://opendev.org/openstack/kolla/commit/04c5cfb59f2295bd730712bbe12b515498ac2cda

Error 07


2021-03-16 02:52:55::ERROR::run_setup::1062::root:: Traceback (most recent call last):

File "/usr/lib/python3.6/site-packages/packstack/installer/run_setup.py", line 1057, in main

_main(options, confFile, logFile)

File "/usr/lib/python3.6/site-packages/packstack/installer/run_setup.py", line 681, in _main

runSequences()

File "/usr/lib/python3.6/site-packages/packstack/installer/run_setup.py", line 648, in runSequences

controller.runAllSequences()

File "/usr/lib/python3.6/site-packages/packstack/installer/setup_controller.py", line 81, in runAllSequences

sequence.run(config=self.CONF, messages=self.MESSAGES)

File "/usr/lib/python3.6/site-packages/packstack/installer/core/sequences.py", line 109, in run

step.run(config=config, messages=messages)

File "/usr/lib/python3.6/site-packages/packstack/installer/core/sequences.py", line 50, in run

self.function(config, messages)

File "/usr/lib/python3.6/site-packages/packstack/plugins/puppet_950.py", line 214, in apply_puppet_manifest

wait_for_puppet(currently_running, messages)

File "/usr/lib/python3.6/site-packages/packstack/plugins/puppet_950.py", line 128, in wait_for_puppet

validate_logfile(log)

File "/usr/lib/python3.6/site-packages/packstack/modules/puppet.py", line 107, in validate_logfile

raise PuppetError(message)

packstack.installer.exceptions.PuppetError: Error appeared during Puppet run: 10.2.5.156_controller.pp

Notice: /Stage[main]/Nova::Db::Sync/Exec[nova-db-sync]/returns: Error: (pymysql.err.OperationalError) (1045, "Access denied for user 'nova'@'openstack' (using password: YES)")

You will find full trace in log /var/tmp/packstack/20210316-023224-_zcrtdam/manifests/10.2.5.156_controller.pp.log

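Change:

#Email address for the Identity service 'admin' user. Defaults to

CONFIG_KEYSTONE_ADMIN_EMAIL=root@admin

When initializing with packstack -d --answer-file=openstack-victoria-20210101.txt, it is advisable to change the default passwords. Otherwise, logging in to MySQL as the service users becomes cumbersome.

Run mysql -u nova -p and log in with the corresponding password from openstack-victoria-20210101.txt to verify that the password is correct.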
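Instead of searching the answer file by hand for the password to use with mysql -u nova -p, the *_PW entries can be extracted programmatically. A minimal sketch, assuming the answer file uses a KEY=VALUE layout with # comments and [section] headers; the key CONFIG_NOVA_DB_PW and the sample values are illustrative:

```python
def read_answer_file(text):
    """Parse packstack answer-file text (KEY=VALUE lines) into a dict."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments and [section] headers
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def password_entries(values):
    """Return only the *_PW entries, which hold service passwords."""
    return {k: v for k, v in values.items() if k.endswith("_PW")}


if __name__ == "__main__":
    # Hypothetical excerpt; in practice read openstack-victoria-20210101.txt.
    sample = """
[general]
# Password for the nova database user (illustrative value)
CONFIG_NOVA_DB_PW=example-nova-pw
CONFIG_KEYSTONE_ADMIN_EMAIL=root@admin
"""
    print(password_entries(read_answer_file(sample)))
```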

Error 08


2021-03-16 04:36:48::ERROR::run_setup::1062::root:: Traceback (most recent call last):

File "/usr/lib/python3.6/site-packages/packstack/installer/run_setup.py", line 1057, in main

_main(options, confFile, logFile)

File "/usr/lib/python3.6/site-packages/packstack/installer/run_setup.py", line 681, in _main

runSequences()

File "/usr/lib/python3.6/site-packages/packstack/installer/run_setup.py", line 648, in runSequences

controller.runAllSequences()

File "/usr/lib/python3.6/site-packages/packstack/installer/setup_controller.py", line 81, in runAllSequences

sequence.run(config=self.CONF, messages=self.MESSAGES)

File "/usr/lib/python3.6/site-packages/packstack/installer/core/sequences.py", line 109, in run

step.run(config=config, messages=messages)

File "/usr/lib/python3.6/site-packages/packstack/installer/core/sequences.py", line 50, in run

self.function(config, messages)

File "/usr/lib/python3.6/site-packages/packstack/plugins/puppet_950.py", line 214, in apply_puppet_manifest

wait_for_puppet(currently_running, messages)

File "/usr/lib/python3.6/site-packages/packstack/plugins/puppet_950.py", line 128, in wait_for_puppet

validate_logfile(log)

File "/usr/lib/python3.6/site-packages/packstack/modules/puppet.py", line 107, in validate_logfile

raise PuppetError(message)

packstack.installer.exceptions.PuppetError: Error appeared during Puppet run: 10.2.5.156_controller.pp

Error: Failed to apply catalog: Execution of '/usr/bin/openstack flavor list --quiet --format csv --long --all' returned 1: Unknown Error (HTTP 500) (tried 29, for a total of 170 seconds)

You will find full trace in log /var/tmp/packstack/20210316-043006-vp2u2jc8/manifests/10.2.5.156_controller.pp.log

According to the log, running /usr/bin/openstack flavor list --quiet --format csv --long --all returned 1. Running the same command manually, followed by echo $?, confirmed that the exit status is 1.

Solution

This error is mainly caused by missing environment variables. Create admin-openrc.sh:

[root@openstack zwl]# cat admin-openrc.sh

export OS_USERNAME=admin

export OS_PASSWORD=admin

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

Then run:

[root@openstack zwl]# source admin-openrc.sh
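The openstack CLI reads its credentials from these OS_* variables. For example, an unset OS_AUTH_URL produces the "Missing value auth-url required for auth plugin password" message that appears later in this article. A minimal sketch that reports which of the variables from admin-openrc.sh are missing from the current environment:

```python
import os

# Variables exported by admin-openrc.sh above.
REQUIRED_OS_VARS = (
    "OS_USERNAME",
    "OS_PASSWORD",
    "OS_PROJECT_NAME",
    "OS_USER_DOMAIN_NAME",
    "OS_PROJECT_DOMAIN_NAME",
    "OS_AUTH_URL",
    "OS_IDENTITY_API_VERSION",
)


def missing_os_vars(env=None):
    """Return the required OS_* variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_OS_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_os_vars()
    print("missing:", ", ".join(missing) if missing else "none")
```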

References

https://www.cnblogs.com/cz-boy/articles/11454937.html

https://blog.csdn.net/qq_42533216/article/details/107730926

[root@openstack system]# openstack flavor list --quiet --format csv --long --all

Unable to establish connection to http://10.2.5.156:35357/v3/auth/tokens: HTTPConnectionPool(host='10.2.5.156', port=35357): Max retries exceeded with url: /v3/auth/tokens (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f9ce98869e8>: Failed to establish a new connection: [Errno 111] Connection refused',))

Use netstat -anp | grep 35357 to check whether anything is listening on this port.

If port 35357 is not present, edit /etc/keystone/keystone.conf (vim /etc/keystone/keystone.conf) and uncomment:

admin_port = 35357
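The Connection refused error (Errno 111) above means that nothing was listening on 10.2.5.156:35357. Besides netstat, you can test this from Python by attempting a TCP connection. The following is a minimal sketch that uses only the standard library. It checks port 35357 and, for comparison, port 5000, the Keystone port used in later sections of this article. Run it on the controller itself, since it connects to 127.0.0.1:

```python
import socket


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers "Connection refused" (Errno 111), timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    for port in (35357, 5000):
        print(port, port_is_open("127.0.0.1", port))
```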

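Error 09

ERROR : Error appeared during Puppet run: 10.2.5.142_controller.pp

Error: Execution of '/usr/bin/dnf -d 0 -e 1 -y install ntpdate' returned 1: Error: Unable to find a match: ntpdate

Solution

On CentOS 8, the old ntp/ntpdate time-synchronization service is no longer available, and trying to install it with yum reports that the package does not exist. Leave the NTP-related options in the answer file empty so that no time synchronization is configured.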
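Blanking the option can also be scripted. The following is a minimal sketch. It assumes the relevant key is CONFIG_NTP_SERVERS, the NTP option in packstack answer files. It rewrites only the active KEY=... line and leaves commented-out lines untouched. Read the answer file, pass its text in, and write the result back:

```python
import re


def blank_option(text, key="CONFIG_NTP_SERVERS"):
    """Return answer-file text with the active `key=...` line set to `key=`."""
    pattern = re.compile(rf"^{re.escape(key)}=.*$", re.MULTILINE)
    return pattern.sub(f"{key}=", text)


if __name__ == "__main__":
    sample = "CONFIG_NTP_SERVERS=0.pool.ntp.org\nCONFIG_DEBUG_MODE=n\n"
    print(blank_option(sample), end="")
```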

Error 10

ERROR : Error appeared during Puppet run: 10.2.5.156_controller.pp

Error: Could not prefetch mysql_user provider 'mysql': Execution of '/usr/bin/mysql --defaults-extra-file=/root/.my.cnf -NBe SELECT CONCAT(User, '@',Host) AS User FROM mysql.user' returned 1: ERROR 1045 (28000): Access denied for user 'root'@'localhost' (using password: YES)

Solution

Changing the corresponding password in the answer file to a simple password solved the problem.
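The original does not say why a simpler password helped. One plausible explanation, which is an assumption, is that special characters in the password were mishandled when it was written to /root/.my.cnf or passed through a shell. If that was the cause, a long random password made only of letters and digits avoids the quoting problem without making the password weak:

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits


def alnum_password(length=20):
    """Generate a random password using only ASCII letters and digits."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


if __name__ == "__main__":
    print(alnum_password())
```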

Error 11

Applying 10.2.5.142_controller.pp

10.2.5.142_controller.pp: [ ERROR ]

Applying Puppet manifests [ ERROR ]

ERROR : Error appeared during Puppet run: 10.2.5.142_controller.pp

Error: Function lookup() did not find a value for the name 'DEFAULT_EXEC_TIMEOUT'

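Solution: when the yum repositories were configured, a Red Hat repository was included, so the installed puppet version no longer matched the one shipped with CentOS 8.

The installed puppet package is puppet-6.17.0-2.el8.noarch, while CentOS 8 provides puppet-6.14.0-2.el8.noarch. Correcting the yum repositories fixed the problem.

Reference: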

https://bugzilla.redhat.com/show_bug.cgi?id=1881226
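You can spot a version mismatch like this by comparing the rpm names directly. The following minimal sketch splits a name-version-release.arch string, as printed by rpm -qa, and compares the versions numerically. It assumes the versions are purely numeric and dotted. The two package strings come from this section:

```python
def split_rpm(nvra):
    """Split 'name-version-release.arch' into (name, version, release_arch)."""
    name, version, release_arch = nvra.rsplit("-", 2)
    return name, version, release_arch


def version_tuple(version):
    """Numeric comparison key for a dotted version such as '6.17.0'."""
    return tuple(int(part) for part in version.split("."))


if __name__ == "__main__":
    installed = split_rpm("puppet-6.17.0-2.el8.noarch")
    expected = split_rpm("puppet-6.14.0-2.el8.noarch")
    if version_tuple(installed[1]) != version_tuple(expected[1]):
        print(f"{installed[0]}: installed {installed[1]}, distro ships {expected[1]}")
```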

Error 12

Error message:

ERROR : Error appeared during Puppet run: 10.2.5.157_controller.pp

Error: Could not prefetch keystone_domain provider 'openstack': Execution of '/usr/bin/openstack domain list --quiet --format csv' returned 1: module 'urllib3.packages.six' has no attribute 'ensure_text' (tried 38, for a total of 170 seconds)

Solution

pip3 uninstall six

pip3 install six==1.12.0

pip3 uninstall urllib3

Successfully uninstalled urllib3-1.25.7

pip3 install urllib3==1.25.11

Successfully installed urllib3-1.26.4
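After uninstalling and reinstalling packages with pip3 like this, check which versions and which copies Python actually imports. The following is a minimal sketch. It imports each module and reports its `__version__` and file location. Both six and urllib3 define `__version__`. The function name is hypothetical:

```python
import importlib


def module_version(name):
    """Return (version, file) for an importable module, or None if missing."""
    try:
        module = importlib.import_module(name)
    except ImportError:
        return None
    return getattr(module, "__version__", "unknown"), getattr(module, "__file__", None)


if __name__ == "__main__":
    for name in ("six", "urllib3"):
        print(name, module_version(name))
```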

Error 13

Error: /Stage[main]/Packstack::Swift::Ringbuilder/Ring_object_device[dev_10.2.5.157_swiftloopback]: Could not evaluate: Execution of '/usr/bin/swift-ring-builder /etc/swift/object.builder' returned 1: Traceback (most recent call last):

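Solution

Running /usr/bin/swift-ring-builder /etc/swift/object.builder on its own reported that the six module could not be found.

However, starting python3 by hand and running import six raised no error. The logs showed that six was missing only when /usr/bin/swift-ring-builder ran. The first line of that script is #!/usr/bin/python3 -s, and the -s option tells Python not to add the user site-packages directory to sys.path. Suspecting -s, I removed it and re-ran the command, which fixed the problem.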
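The -s flag has the same effect as setting PYTHONNOUSERSITE: site.ENABLE_USER_SITE becomes False, and the per-user site-packages directory is left off sys.path. A module installed into that directory therefore imports in an interactive python3 session but not in a script started with #!/usr/bin/python3 -s. The following minimal sketch checks whether a module resolves from the user site directory. The function name is hypothetical:

```python
import importlib.util
import os
import site


def loaded_from_user_site(module_name):
    """True/False for whether `module_name` resolves from the user site, None if absent."""
    spec = importlib.util.find_spec(module_name)
    if spec is None or spec.origin is None:
        return None
    user_site = os.path.realpath(site.getusersitepackages())
    origin = os.path.realpath(spec.origin)
    return origin.startswith(user_site + os.sep)


if __name__ == "__main__":
    print("user site enabled:", site.ENABLE_USER_SITE)
    print("six from user site:", loaded_from_user_site("six"))
```

If six turns out to live in the user site, installing it system-wide is an alternative to editing the packaged script. The next package update may restore that script's shebang.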

Error 14

Error message:

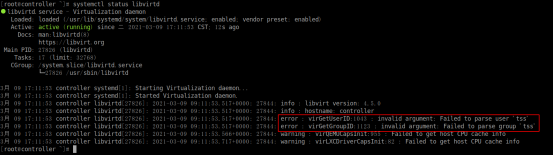

Error: Could not prefetch keystone_domain provider 'openstack': Execution of '/usr/bin/openstack domain list --quiet --format csv' returned 1: /usr/lib/python3.6/site-packages/requests/__init__.py:91: RequestsDependencyWarning: urllib3 (1.26.4) or chardet (3.0.4) doesn't match a supported version!

You will find full trace in log /var/tmp/packstack/20210324-040056-pt3pvlq5/manifests/10.2.5.157_controller.pp.log

Solution

[root@controller ~]# /usr/bin/openstack domain list --quiet --format csv

/usr/lib/python3.6/site-packages/requests/__init__.py:91: RequestsDependencyWarning: urllib3 (1.26.4) or chardet (3.0.4) doesn't match a supported version!

RequestsDependencyWarning)

Missing value auth-url required for auth plugin password

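Checking the dependency version requirements in /usr/lib/python3.6/site-packages/requests/__init__.py showed that the installed urllib3 1.26.4 does not satisfy them.

Install a compatible version: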

pip3 install urllib3==1.25.11
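requests checks its dependency versions when it is imported and emits RequestsDependencyWarning if the check fails. The sketch below is a simplified model of the urllib3 part of that check, not the requests code itself. The upper bound is an assumption taken from this environment, where urllib3 1.25.11 was accepted and 1.26.4 was not. Other requests releases use different bounds, so read the actual values from requests/__init__.py:

```python
def urllib3_supported(version, min_minor=21, max_minor=25):
    """Simplified model of requests' urllib3 compatibility check.

    The bounds are assumptions: max_minor=25 matches this environment, where
    urllib3 1.25.11 was accepted and 1.26.4 triggered the warning.
    """
    parts = version.split(".")
    major, minor = int(parts[0]), int(parts[1])
    return major == 1 and min_minor <= minor <= max_minor


if __name__ == "__main__":
    for v in ("1.26.4", "1.25.11"):
        print(v, "ok" if urllib3_supported(v) else "RequestsDependencyWarning")
```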

Error 15

Error message:

Error: Could not prefetch keystone_domain provider 'openstack': Execution of '/usr/bin/openstack domain list --quiet --format csv' returned 1: Could not find versioned identity endpoints when attempting to authenticate. Please check that your auth_url is correct. Internal Server Error (HTTP 500) (tried 38, for a total of 170 seconds)

[root@controller ~]# /usr/bin/openstack domain list --quiet --format csv

Missing value auth-url required for auth plugin password

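Because of the HTTP 500, I suspected a problem with the httpd service and checked its status.

The log did contain errors.

After uninstalling and reinstalling httpd, starting it failed with: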

Mar 24 05:42:06 controller httpd[73380]: AH00526: Syntax error on line 27 of /etc/httpd/conf.d/10-aodh_wsgi.conf:

Mar 24 05:42:06 controller httpd[73380]: Invalid command 'WSGIApplicationGroup', perhaps misspelled or defined by a module not included in the server co>

Mar 24 05:42:06 controller systemd[1]: httpd.service: Main process exited, code=exited, status=1/FAILURE

Mar 24 05:42:06 controller systemd[1]: httpd.service: Failed with result 'exit-code'.

Solution

Install:

yum install -y python38-mod_wsgi

Checking the httpd status again, it still showed thawing.

Log:

Mar 24 05:43:02 controller httpd[73794]: [Wed Mar 24 05:43:02.136492 2021] [alias:warn] [pid 73794:tid 140560675776832] AH00671: The Alias directive in >

Mar 24 05:43:02 controller httpd[73794]: AH00558: httpd: Could not reliably determine the server's fully qualified domain name, using 10.2.5.157. Set th

Solution

Edit

vim /etc/httpd/conf/httpd.conf

and change #ServerName www.example.com:80 to ServerName localhost:80,

then restart httpd.
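The same edit can be made with a small script. The following minimal sketch replaces the commented example line with ServerName localhost:80, as described above. If an active ServerName already exists, it leaves the text alone. If no example line is found, it appends the directive. The script works on text, so back up /etc/httpd/conf/httpd.conf before writing the result back:

```python
import re

# Commented example line such as "#ServerName www.example.com:80".
_COMMENTED = re.compile(r"^#[ \t]*ServerName[ \t]+\S+[ \t]*$", re.MULTILINE)
# An already active ServerName directive.
_ACTIVE = re.compile(r"^ServerName[ \t]", re.MULTILINE)


def set_server_name(conf_text, value="localhost:80"):
    """Return httpd.conf text with an active 'ServerName <value>' directive."""
    if _ACTIVE.search(conf_text):
        return conf_text  # an active ServerName already exists; leave it alone
    new_text, count = _COMMENTED.subn(f"ServerName {value}", conf_text, count=1)
    if count == 0:
        # No commented example found: append the directive at the end.
        new_text = conf_text.rstrip("\n") + f"\nServerName {value}\n"
    return new_text
```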

Error 16

Error message:

Build of instance 5454688a-672f-4b43-9dc4-9d8196b42346 aborted: Image f692a2c8-c1c5-4ec2-9826-9b75b5857ffd is unacceptable: Unable to convert image to raw: Image /var/lib/nova/instances/_base/f0ec1a4b23743d7b7488821509255812e35d698e.part is unacceptable:

Solution

The image upload was incomplete. Uploading the image again fixed it.
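Before re-uploading, you can confirm an incomplete upload by comparing checksums. Glance stores an MD5 checksum for each image, shown in the checksum field of openstack image show. The following minimal sketch computes the MD5 of the local image file in chunks, so large ISO files are not read into memory all at once:

```python
import hashlib


def file_md5(path, chunk_size=1024 * 1024):
    """Return the hex MD5 digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Compare the result with the output of openstack image show IMAGE_ID -c checksum -f value, where IMAGE_ID is a placeholder. A mismatch points to an incomplete or corrupted upload.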

Error 17

Error message:

Message

Exceeded maximum number of retries. Exhausted all hosts available for retrying build failures for instance a3604f66-9fae-49ad-8d9a-f399f618c8cb.

Code

500

Details

Traceback (most recent call last): File "/usr/lib/python3.6/site-packages/nova/conductor/manager.py", line 666, in build_instances raise exception.MaxRetriesExceeded(reason=msg) nova.exception.MaxRetriesExceeded: Exceeded maximum number of retries. Exhausted all hosts available for retrying build failures for instance a3604f66-9fae-49ad-8d9a-f399f618c8cb.

Analysis

The message points to an error in a nova component. The nova logs, in turn, say to check the neutron logs:

2021-03-25 05:39:29.986 50398 ERROR nova.compute.manager nova.exception.PortBindingFailed: Binding failed for port d5b2d94a-b79a-4d6b-93ec-9c5a19b5221e, please check neutron logs for more information.

/var/log/neutron/linuxbridge-agent.log kept growing, and checking the neutron services showed that neutron-linuxbridge-agent was restarting continuously:

2021-03-25 07:07:06.327 469548 WARNING oslo.privsep.daemon [-] privsep log: PermissionError: [Errno 13] Permission denied: '/var/log/neutron/privsep-helper.log'

2021-03-25 07:07:06.432 469548 CRITICAL oslo.privsep.daemon [-] privsep helper command exited non-zero (1)

2021-03-25 07:07:06.433 469548 CRITICAL neutron [-] Unhandled error: oslo_privsep.daemon.FailedToDropPrivileges: privsep helper command exited non-zero (1)

Solution

Add the following line to /etc/sudoers.d/neutron:

neutron ALL = (root) NOPASSWD: /usr/bin/privsep-helper *

Error 18

Error message:

ERROR neutron.agent.linux.ip_lib [req-1e55f24e-15df-4306-a8fc-f6f697830dbf - - - - -] Device brq63790fac-ca cannot be used as it has no MAC address

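This affected previously created VMs. After the fix for problem 14, deleting the VMs whose creation had failed earlier and creating new ones resolved the problem.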

19. keystone installation error

Error message:

root@loongson-pc:/home/zwl# systemctl enable mysql.service

Synchronizing state of mysql.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable mysql

Failed to enable unit: Too many levels of symbolic links

Root cause

/etc/systemd/system/mysql.service is a symbolic link:

lrwxrwxrwx 1 root root 35 May 27 17:39 /etc/systemd/system/mysql.service -> /lib/systemd/system/mariadb.service

Running systemctl enable mariadb.service instead solved the problem.
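Here, mysql.service is only an alias symlink to mariadb.service, and enabling the real unit worked. The following minimal sketch follows such a symlink and returns the real unit file name to pass to systemctl enable. The path comes from this section:

```python
import os


def real_unit_name(unit_path):
    """Follow symlinks and return the file name of the real unit file."""
    return os.path.basename(os.path.realpath(unit_path))


if __name__ == "__main__":
    path = "/etc/systemd/system/mysql.service"
    if os.path.islink(path):
        print("enable this unit instead:", real_unit_name(path))
```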


21.

Error: root@controller:~# openstack domain create --description "An Example Domain" example

Failed to discover available identity versions when contacting http://controller:5000/v3. Attempting to parse version from URL.

Internal Server Error (HTTP 500)

The log shows the following error:

cat /var/log/keystone/keystone.log

Traceback (most recent call last):

File "/usr/bin/keystone-wsgi-public", line 6, in

from keystone.server.wsgi import initialize_public_application

File "/usr/lib/python3/dist-packages/keystone/server/wsgi.py", line 14, in

from keystone.server.flask import core as flask_core

File "/usr/lib/python3/dist-packages/keystone/server/flask/__init__.py", line 17, in

from keystone.server.flask.common import APIBase # noqa

File "/usr/lib/python3/dist-packages/keystone/server/flask/common.py", line 23, in

import flask_restful

File "/usr/lib/python3/dist-packages/flask_restful/__init__.py", line 14, in

from flask.helpers import _endpoint_from_view_func

ImportError: cannot import name '_endpoint_from_view_func' from 'flask.helpers' (/usr/local/lib/python3.7/dist-packages/flask/helpers.py)

It turned out that the system had two flask modules, and one of them lacks the function _endpoint_from_view_func. Move that copy out of the way:

mv /usr/local/lib/python3.7/dist-packages/flask /usr/local/lib/python3.7/dist-packages/flask.bac
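When the same package is installed twice, Python imports whichever copy comes first on sys.path. Here, the copy under /usr/local/lib/python3.7/dist-packages shadowed the one keystone expected. The following minimal sketch lists every sys.path entry that provides a given top-level module, using importlib.machinery.PathFinder. Run it with the same interpreter the service uses:

```python
import importlib.machinery
import sys


def module_locations(name, search_path=None):
    """Return the origin of `name` in every path entry that provides it."""
    entries = sys.path if search_path is None else search_path
    locations = []
    for entry in entries:
        # An empty sys.path entry means the current directory.
        spec = importlib.machinery.PathFinder.find_spec(name, [entry or "."])
        if spec is not None and spec.has_location:
            locations.append(spec.origin)
    return locations


if __name__ == "__main__":
    for origin in module_locations("flask"):
        print(origin)
```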

22. Running openstack domain create --description "An Example Domain" example fails

[root@controller 0629-rpm-kvm]# openstack domain create --description "An Example Domain" example

Failed to discover available identity versions when contacting http://controller:5000/v3. Attempting to parse version from URL.

Unable to establish connection to http://controller:5000/v3/auth/tokens: HTTPConnectionPool(host='controller', port=5000): Max retries exceeded with url: /v3/auth/tokens (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0xffe45a4358>: Failed to establish a new connection: [Errno 113] No route to host',))

[root@controller 0629-rpm-kvm]# vim /etc/hosts

[root@controller 0629-rpm-kvm]# openstack domain create --description "An Example Domain" example

Internal Server Error (HTTP 500)

/etc/hosts turned out to be misconfigured. After fixing it, the command still returned HTTP 500. Re-running the following commands solved it:

#Populate the Identity service database

su -s /bin/sh -c "keystone-manage db_sync" keystone

#Initialize the Fernet key repositories

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

#Bootstrap the Identity service

keystone-manage bootstrap --bootstrap-password loongson --bootstrap-admin-url http://controller:5000/v3/ --bootstrap-internal-url http://controller:5000/v3/ --bootstrap-public-url http://controller:5000/v3/ --bootstrap-region-id RegionOne
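The first failure here (No route to host for controller) came from a wrong /etc/hosts entry. The following minimal sketch lists every address that a hostname maps to in hosts-file text, which makes stale or duplicate entries easy to spot. It only reads the file and does not consult DNS or nsswitch:

```python
def hosts_lookup(hosts_text, hostname):
    """Return all addresses mapped to `hostname` in /etc/hosts-style text."""
    addresses = []
    for raw in hosts_text.splitlines():
        fields = raw.split("#", 1)[0].split()  # drop comments, split fields
        if len(fields) >= 2 and hostname in fields[1:]:
            addresses.append(fields[0])
    return addresses


if __name__ == "__main__":
    with open("/etc/hosts") as handle:
        print(hosts_lookup(handle.read(), "controller"))
```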

23. glance component

Installation succeeds, but the log contains the following errors:

tail -f /var/log/glance/glance-api.log

2021-06-01 10:54:31.413 15144 ERROR stevedore.extension [-] Could not load 'glance.store.s3.Store': No module named 'boto3': ModuleNotFoundError: No module named 'boto3'

…

2021-06-01 10:54:31.878 15143 ERROR stevedore.extension [-] Could not load 's3': No module named 'boto3': ModuleNotFoundError: No module named 'boto3'

To reproduce: service glance-api restart

Analysis: the error says that 'glance.store.s3.Store' cannot be loaded, so the initial conclusion is that a module in the glance_store component fails to load. Looking at the source:

_drivers/s3.py

from boto3 import session as boto_session

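imports boto3, but the environment only has the boto package, not boto3, so the package dependencies are incomplete.

Installing boto3 manually fixes it:

apt install python3-boto3

Also update the packaging rules file of the glance_store component so that this dependency is declared.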
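Missing optional dependencies such as boto3 show up in the glance-api log only after a restart. The following minimal sketch checks in advance whether top-level modules can be found on sys.path, using importlib.util.find_spec. The list contains only boto3 from this section and is not the full set of glance_store dependencies:

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names that cannot be found on sys.path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print(missing_modules(["boto3"]))
```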

24. nova installation error

dbconfig-common: flushing administrative password

===> opensatck-pkg-tools: writing db credentials: mysql+pymysql://nova-common:XXXXXX@localhost:3306/novadb …

===> nova-common: Creating novadb_cell0 database:

dbc_dbname=novadb mysql -h "localhost" -u root -p$"{dbc_dbadmpass}"

===> nova-common: Granting permissions on novadb_cell0.* to 'nova-common'@'localhost'

===> nova-common: "nova-manage db sync" needs api_database.connection set. Sorry.

Setting up nova-conductor (2:21.1.2-1.1) …

Job for nova-conductor.service failed because the control process exited with error code.

See "systemctl status nova-conductor.service" and "journalctl -xe" for details.

invoke-rc.d: initscript nova-conductor, action "start" failed.

● nova-conductor.service - OpenStack Nova Conductor (nova-conductor)

Loaded: loaded (/lib/systemd/system/nova-conductor.service; disabled; vendor preset: enabled)

Active: activating (auto-restart) (Result: exit-code) since Tue 2021-06-01 17:08:39 CST; 6ms ago

Docs: man:nova-conductor(1)

Process: 14548 ExecStart=/etc/init.d/nova-conductor systemd-start (code=exited, status=1/FAILURE)

Main PID: 14548 (code=exited, status=1/FAILURE)

Job for nova-scheduler.service failed because the control process exited with error code.

See "systemctl status nova-scheduler.service" and "journalctl -xe" for details.

invoke-rc.d: initscript nova-scheduler, action "start" failed.

● nova-scheduler.service - OpenStack Nova Scheduler (nova-scheduler)

Loaded: loaded (/lib/systemd/system/nova-scheduler.service; disabled; vendor preset: enabled)

Active: activating (auto-restart) (Result: exit-code) since Tue 2021-06-01 17:09:03 CST; 7ms ago

Docs: man:nova-scheduler(1)

Process: 15357 ExecStart=/etc/init.d/nova-scheduler systemd-start (code=exited, status=1/FAILURE)

Main PID: 15357 (code=exited, status=1/FAILURE)

The logs of these two services show that both fail for the same reason:

keystoneauth1.exceptions.catalog.EndpointNotFound: ['internal', 'public'] endpoint for placement service in regionOne region not found

Analysis:

root@loongson-pc:/etc/apache2# openstack endpoint list

| ID | Region | Service Name | Service Type | Enabled | Interface | URL |
| --- | --- | --- | --- | --- | --- | --- |
| 41ce6c6e0a6c42378f93603d749c3211 | regionOne | keystone | identity | True | admin | http://controller:5000/v3/ |
| 582e1bc434764663ba28fc09474c6ddb | RegionOne | placement | placement | True | public | http://controller:8778 |
| 63b17d4ef12249d7b4c545d861bc47d2 | RegionOne | placement | placement | True | internal | http://controller:8778 |
| 6b21908373804f2d882d7e89b40b2903 | RegionOne | glance | image | True | public | http://controller:9292 |
| 6cf386acc6d14f2caa0d2832f12f8f05 | regionOne | keystone | identity | True | public | http://controller:5000/v3/ |
| 8a5347c2b4c24eb0a3a96824f3056d82 | RegionOne | glance | image | True | admin | http://controller:9292 |
| 8dd119d29d7646918a8cc99e94c64337 | RegionOne | nova | compute | True | admin | http://controller:8774/v2.1 |
| c56a8d530a234b9b86d927b1ed8e26d4 | RegionOne | nova | compute | True | internal | http://controller:8774/v2.1 |
| c72c01259dd247068409f4cf6a0fcc64 | regionOne | keystone | identity | True | internal | http://controller:5000/v3/ |
| d7242a4e298a4dfbb7fe68ae405e2bcb | RegionOne | glance | image | True | internal | http://controller:9292 |
| f8037c49a4ff4cc3bfd93da400c5f768 | RegionOne | placement | placement | True | admin | http://controller:8778 |
| fffe71683e8b40ac802cbafa490bc3d7 | RegionOne | nova | compute | True | public | http://controller:8774/v2.1 |

Locating the error in the code:

2021-06-02 13:06:06.910 25244 ERROR nova File "/usr/lib/python3/dist-packages/keystoneauth1/access/service_catalog.py", line 473, in endpoint_data_for

2021-06-02 13:06:06.910 25244 ERROR nova raise exceptions.EndpointNotFound(msg)

Printing endpoint_data_list inside endpoint_data_for showed that it is empty.

Code:

endpoint_data_list = self.get_endpoint_data_list(

service_type=service_type,

interface=interface,

region_name=region_name,

service_name=service_name,

service_id=service_id,

endpoint_id=endpoint_id)

logging.warning("+++++++00000++++++++++")

logging.warning(len(endpoint_data_list))

logging.warning(type(endpoint_data_list))

logging.warning(f'endpoint_data_list={endpoint_data_list}')

logging.warning("++++++++++++++++++++")

Log:

2021-06-02 15:34:01.139 16615 WARNING root [-] +++++++00000++++++++++

2021-06-02 15:34:01.139 16615 WARNING root [-] 0

2021-06-02 15:34:01.139 16615 WARNING root [-] <class 'list'>

2021-06-02 15:34:01.140 16615 WARNING root [-] endpoint_data_list=[]

2021-06-02 15:34:01.140 16615 WARNING root [-] ++++++++++++++++++++

Tracing further:

2021-06-02 15:33:57.647 16607 ERROR nova File "/usr/lib/python3/dist-packages/keystoneauth1/identity/base.py", line 292, in get_endpoint_data

2021-06-02 15:33:57.647 16607 ERROR nova service_name=service_name)

The exception propagates out of get_endpoint_data (defined at line 159 of that file).

Code:

service_catalog = self.get_access(session).service_catalog

project_id = self.get_project_id(session)

# NOTE(mordred): service_catalog.url_data_for raises if it can't

# find a match, so this will always be a valid object.

logging.warning("++++++222222222222++++++")

logging.warning(f'service_typr={service_type}')

logging.warning(f'interface={interface}')

logging.warning(f'region_name={region_name}')

logging.warning(f'service_name={service_name}')

Log:

2021-06-02 15:34:01.138 16615 WARNING root [-] service_typr=placement

2021-06-02 15:34:01.138 16615 WARNING root [-] interface=['internal', 'public']

2021-06-02 15:34:01.138 16615 WARNING root [-] region_name=regionOne

2021-06-02 15:34:01.138 16615 WARNING root [-] service_name=None

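Analysis:

According to openstack endpoint list, the placement endpoints for interface=['internal', 'public'] are registered in region RegionOne (capital R), whereas the region_name the code receives is regionOne (lowercase r). Because the two do not match, the problem was attributed to the configuration.

Reviewing all earlier configuration steps showed that the official guide does use the capitalized form when creating the Placement API service endpoints: https://docs.openstack.org/placement/ussuri/install/from-pypi.html

Therefore, the Placement API service endpoints were recreated with the following commands: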

openstack endpoint create --region regionOne placement public http://controller:8778

openstack endpoint create --region regionOne placement internal http://controller:8778

openstack endpoint create --region RegionOne placement admin http://controller:8778

After that, restarting nova-conductor.service and nova-scheduler.service let both services start normally.
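You can reproduce the mismatch using the endpoint list above. The following minimal sketch models the behavior observed here, in which region names must match exactly. Given the interfaces and region that nova requested (from the debug log), it reports which interfaces have no placement endpoint in that region. The rows are the placement entries from the table, and the function name is hypothetical:

```python
# (service_type, interface, region) rows for placement, from the endpoint list above.
ENDPOINTS = [
    ("placement", "public", "RegionOne"),
    ("placement", "internal", "RegionOne"),
    ("placement", "admin", "RegionOne"),
]


def missing_interfaces(endpoints, service_type, interfaces, region):
    """Interfaces with no endpoint for service_type in exactly `region`."""
    found = {
        iface
        for stype, iface, reg in endpoints
        if stype == service_type and reg == region  # case-sensitive comparison
    }
    return [iface for iface in interfaces if iface not in found]


if __name__ == "__main__":
    # nova asked for ['internal', 'public'] in regionOne (see the debug log).
    print(missing_interfaces(ENDPOINTS, "placement", ["internal", "public"], "regionOne"))
```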

After rebooting the host, the following error appeared:

root@loongson-pc:~# openstack endpoint list

Failed to discover available identity versions when contacting http://controller:5000/v3. Attempting to parse version from URL.

Internal Server Error (HTTP 500)

Setting the hostname in /etc/hostname as shown below and rebooting the machine fixed it:

root@controller:~# cat /etc/hostname

controller

25. nova-compute installation error

invoke-rc.d: initscript nova-compute, action "start" failed.

● nova-compute.service - OpenStack Nova Compute (nova-compute)

Loaded: loaded (/lib/systemd/system/nova-compute.service; disabled; vendor preset: enabled)

Active: activating (auto-restart) (Result: timeout) since Wed 2021-06-02 16:37:44 CST; 6ms ago

Docs: man:nova-compute(1)

Process: 25515 ExecStart=/etc/init.d/nova-compute systemd-start (code=killed, signal=TERM)

Main PID: 25515 (code=killed, signal=TERM)

Jun 02 16:37:44 controller systemd[1]: nova-compute.service: Main process exited, code=killed, status=15/TERM

Jun 02 16:37:44 controller systemd[1]: nova-compute.service: Failed with result 'timeout'.

Jun 02 16:37:44 controller systemd[1]: Failed to start OpenStack Nova Compute (nova-compute).

Created symlink /etc/systemd/system/multi-user.target.wants/nova-compute.service → /lib/systemd/system/nova-compute.service.

The log shows:

2021-06-02 16:38:07.841 25758 ERROR oslo.messaging._drivers.impl_rabbit [req-371ab1cb-0835-4696-a5be-222fbd73a851 - - - - -] Connection failed: [Errno 111] ECONNREFUSED (retrying in 10.0 seconds): ConnectionRefusedError: [Errno 111] ECONNREFUSED

Checking the nova services showed that nova-compute had not started properly:

nova-compute.service loaded activating start start OpenStack Nova Compute (nova-compute)

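This suggests that the connection to RabbitMQ is failing.

Searching for nova and rabbit pointed to a problem with the RabbitMQ service. No RabbitMQ service was running, or even installed, on the system, and no RabbitMQ port was open.

The nova configuration file does contain the setting #transport_url = rabbit://guest:loongson@localhost:5672, so the missing RabbitMQ service was judged to be the cause.

On an x86 machine, pull the image with docker pull rabbitmq:management and start it:

docker run -dit --name Myrabbitmq -e RABBITMQ_DEFAULT_USER=admin -e RABBITMQ_DEFAULT_PASS=admin -p 15672:15672 -p 5672:5672 rabbitmq:management

Then change the nova configuration to transport_url = rabbit://admin:admin@10.2.5.156:5672 and run systemctl restart nova-compute. After that, the error no longer appeared in the log.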
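When editing transport_url, it is easy to get the user, host or port wrong. The following minimal sketch splits the URL with urllib.parse.urlsplit so that each part can be checked, for example against the docker run parameters above. The URL is the one configured in this section:

```python
from urllib.parse import urlsplit


def transport_parts(url):
    """Split a rabbit:// transport_url into scheme, user, host and port."""
    parts = urlsplit(url)
    return {
        "scheme": parts.scheme,
        "user": parts.username,
        "host": parts.hostname,
        "port": parts.port,
    }


if __name__ == "__main__":
    print(transport_parts("rabbit://admin:admin@10.2.5.156:5672"))
```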

26. nova-compute.service fails to start, with the following error in its log:

2021-06-03 08:53:54.045 26812 WARNING nova.conductor.api [req-45c09180-a3af-4366-8d81-b4fc37938aa3 - - - - -] Timed out waiting for nova-conductor. Is it running? Or did this service start before nova-conductor? Reattempting establishment of nova-conductor connection…: oslo_messaging.exceptions.MessagingTimeout: Timed out waiting for a reply to message ID 306e927b3ba14fb2b3bc55675d559ad9

The nova-conductor log shows:

2021-06-03 09:08:55.677 31922 ERROR oslo_service.service pymysql.err.OperationalError: (1044, "Access denied for user 'nova-common'@'localhost' to database 'nova'")

This looks like a permissions problem, so I checked the nova configuration.

The database in MySQL is actually named novadb, so change the nova-common connection in /etc/nova/nova.conf to:

connection = mysql+pymysql://nova-common:loongson@localhost:3306/novadb

Restart nova-conductor:

systemctl restart nova-conductor

The error disappeared from the nova-conductor log.

The error also disappeared from the nova-compute log.
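The fix was to point the connection string at a database that actually exists. The following minimal sketch uses urllib.parse.urlsplit to extract the user, host, port and database name from such a SQLAlchemy-style URL, then checks the database name against known names. The set below comes from the dbconfig messages in section 24 (novadb and novadb_cell0) and stands in for real SHOW DATABASES output:

```python
from urllib.parse import urlsplit


def db_target(connection):
    """Return (user, host, port, database) from a mysql+pymysql:// URL."""
    parts = urlsplit(connection)
    return parts.username, parts.hostname, parts.port, parts.path.lstrip("/")


if __name__ == "__main__":
    existing = {"novadb", "novadb_cell0"}  # stand-in for SHOW DATABASES output
    user, host, port, database = db_target(
        "mysql+pymysql://nova-common:loongson@localhost:3306/novadb"
    )
    print(database, "exists" if database in existing else "is missing")
```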

27. Kernel does not support AMD SEV

/var/log/nova/nova-compute.log contains the following message:

2021-06-03 10:46:49.443 22894 INFO nova.virt.libvirt.host [req-60e3782b-000c-4ae7-9adb-f71357c37c42 - - - - -] kernel doesn't support AMD SEV

Analysis

AMD SEV provides enhanced security for guest operating systems. Enabling it has prerequisites: an AMD EPYC 7xx2 (codename "Rome") or later CPU and a BIOS that supports it.

https://docs.vmware.com/cn/VMware-vSphere/7.0/com.vmware.vsphere.security.doc/GUID-757E2B37-C9D0-416A-AA38-088009C75C56.html
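To see whether this message matters on a given host, you can inspect the kernel side directly. The following minimal sketch assumes that nova decides SEV support from the kvm_amd module parameter /sys/module/kvm_amd/parameters/sev. Both that path and the accepted values "1"/"Y" are assumptions. On hosts without the kvm_amd module, the file is absent and the function returns False. In that case the INFO message is expected and can normally be ignored unless SEV is actually required:

```python
def sev_enabled(param_path="/sys/module/kvm_amd/parameters/sev"):
    """Return True if the kvm_amd 'sev' parameter reads as enabled.

    A missing file (no kvm_amd module, e.g. non-AMD CPUs) counts as disabled.
    The path and the accepted values are assumptions for illustration.
    """
    try:
        with open(param_path) as handle:
            value = handle.read().strip()
    except OSError:
        return False
    return value in ("1", "Y", "y")


if __name__ == "__main__":
    print("SEV enabled:", sev_enabled())
```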

28. Running openstack compute service list fails with:

The server has either erred or is incapable of performing the requested operation. (HTTP 500) (Request-ID: req-24271b2b-0151-4af2-9b04-935f39f8a626)

The compute logs show no errors, and the service status is normal.

nova list fails with:

ERROR (ClientException): The server has either erred or is incapable of performing the requested operation. (HTTP 500) (Request-ID: req-699fa5d7-bbaf-4ec3-b610-fed7cdee9e58)

Note: not yet resolved.

29. After running root@controller:~# systemctl restart libvirtd.service nova-compute.service

/var/log/nova/nova-compute.log contains the following error:

2021-06-03 14:56:18.060 8783 WARNING nova.virt.libvirt.driver [req-9a6384e5-a542-4d37-b5a8-df8cec5c1a33 - - - - -] An error occurred while updating compute node resource provider status to "enabled" for provider: 4b5e3ebb-d252-4546-8d87-1013bf6bdb7e: ValueError: No such provider 4b5e3ebb-d252-4546-8d87-1013bf6bdb7e

2021-06-03 14:56:18.060 8783 ERROR nova.virt.libvirt.driver Traceback (most recent call last):

2021-06-03 14:56:18.060 8783 ERROR nova.virt.libvirt.driver File "/usr/lib/python3/dist-packages/nova/virt/libvirt/driver.py", line 4390, in _update_compute_provider_status

2021-06-03 14:56:18.060 8783 ERROR nova.virt.libvirt.driver context, rp_uuid, enabled=not service.disabled)

2021-06-03 14:56:18.060 8783 ERROR nova.virt.libvirt.driver File "/usr/lib/python3/dist-packages/nova/compute/manager.py", line 556, in update_compute_provider_status

2021-06-03 14:56:18.060 8783 ERROR nova.virt.libvirt.driver context, rp_uuid, new_traits)

2021-06-03 14:56:18.060 8783 ERROR nova.virt.libvirt.driver File "/usr/lib/python3/dist-packages/nova/scheduler/client/report.py", line 72, in wrapper

2021-06-03 14:56:18.060 8783 ERROR nova.virt.libvirt.driver return f(self, *a, **k)

2021-06-03 14:56:18.060 8783 ERROR nova.virt.libvirt.driver File "/usr/lib/python3/dist-packages/nova/scheduler/client/report.py", line 1021, in set_traits_for_provider

2021-06-03 14:56:18.060 8783 ERROR nova.virt.libvirt.driver if not self._provider_tree.have_traits_changed(rp_uuid, traits):

2021-06-03 14:56:18.060 8783 ERROR nova.virt.libvirt.driver File "/usr/lib/python3/dist-packages/nova/compute/provider_tree.py", line 584, in have_traits_changed

2021-06-03 14:56:18.060 8783 ERROR nova.virt.libvirt.driver provider = self._find_with_lock(name_or_uuid)

2021-06-03 14:56:18.060 8783 ERROR nova.virt.libvirt.driver File "/usr/lib/python3/dist-packages/nova/compute/provider_tree.py", line 440, in _find_with_lock

2021-06-03 14:56:18.060 8783 ERROR nova.virt.libvirt.driver raise ValueError(_("No such provider %s") % name_or_uuid)

2021-06-03 14:56:18.060 8783 ERROR nova.virt.libvirt.driver ValueError: No such provider 4b5e3ebb-d252-4546-8d87-1013bf6bdb7e

2021-06-03 14:56:18.060 8783 ERROR nova.virt.libvirt.driver

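Restarting the two services separately produced no errors. My guess is that nova-compute came up quickly while libvirtd had not finished loading. After changing the order in which the two services are started, the error no longer appeared.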
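One way to enforce that ordering in a script is to wait until libvirtd looks ready before starting nova-compute. This is a minimal sketch. It approximates readiness by checking whether the libvirt socket exists at /var/run/libvirt/libvirt-sock. That path is an assumed common default, so verify it on your host. With systemd socket activation, the socket can also exist before libvirtd is fully up. wait_until and libvirt_socket_present are hypothetical helpers.

```python
import os
import time
from typing import Callable

# Assumed libvirtd socket path; check your distribution's default.
LIBVIRT_SOCK = "/var/run/libvirt/libvirt-sock"


def wait_until(check: Callable[[], bool], timeout: float = 30.0,
               interval: float = 0.5) -> bool:
    """Poll check() until it returns True or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        if check():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


def libvirt_socket_present() -> bool:
    """Rough readiness signal: the libvirtd socket file exists."""
    return os.path.exists(LIBVIRT_SOCK)
```

For example, restart libvirtd first and call wait_until(libvirt_socket_present). Restart nova-compute only after it returns True.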

30. /var/log/neutron/neutron-linuxbridge-agent.log contains the following error:

2021-06-03 17:18:06.123 21760 ERROR neutron.plugins.ml2.drivers.linuxbridge.agent.linuxbridge_neutron_agent [-] Tunneling cannot be enabled without the local_ip bound to an interface on the host. Please configure local_ip None on the host interface to be used for tunneling and restart the agent.

The neutron-linuxbridge-agent service status shows it running normally, so I suspected a configuration problem. I added debug logging to /usr/lib/python3/dist-packages/neutron/plugins/ml2/drivers/linuxbridge/agent/linuxbridge_neutron_agent.py.

Code:

def get_local_ip_device(self):

    """Return the device with local_ip on the host."""

    device = self.ip.get_device_by_ip(self.local_ip)

    logger.warning("+++++++00000++++++++++")

    logger.warning(f' self.local_ip={self.local_ip}')

Log:

2021-06-03 17:47:11.417 28066 INFO neutron.plugins.ml2.drivers.linuxbridge.agent.linuxbridge_neutron_agent [-] Interface mappings: {}

2021-06-03 17:47:11.418 28066 INFO neutron.plugins.ml2.drivers.linuxbridge.agent.linuxbridge_neutron_agent [-] Bridge mappings: {}

2021-06-03 17:47:11.418 28066 WARNING root [-] +++++++00000++++++++++

2021-06-03 17:47:11.418 28066 WARNING root [-] self.local_ip=None

2021-06-03 17:47:11.418 28066 ERROR neutron.plugins.ml2.drivers.linuxbridge.agent.linuxbridge_neutron_agent [-] Tunneling cannot be enabled without the local_ip bound to an interface on the host. Please configure local_ip None on the host interface to be used for tunneling and restart the agent.

The local_ip value read by the agent is empty. The neutron configuration files do contain local_ip, but it is commented out and has no value:

root@controller:/etc/neutron# grep -rn local_ip

plugins/ml2/openvswitch_agent.ini:232:#local_ip =

plugins/ml2/linuxbridge_agent.ini:242:#local_ip =

In plugins/ml2/linuxbridge_agent.ini (under the [vxlan] section), set:

local_ip = 10.130.0.137
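The agent's error has two conditions: local_ip must be set, and it must be bound to an interface on the host. Both can be checked before restarting. This is a minimal sketch. It assumes local_ip lives in the [vxlan] section of linuxbridge_agent.ini, and it tests "bound locally" by binding a UDP socket to the address. That bind normally fails unless the address is configured on this host, assuming net.ipv4.ip_nonlocal_bind is left at 0. The helper names are hypothetical.

```python
import configparser
import socket
from typing import Optional


def configured_local_ip(ini_path: str) -> Optional[str]:
    """Return [vxlan] local_ip from linuxbridge_agent.ini, or None if unset."""
    # interpolation=None and strict=False tolerate oslo.config-style files
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read(ini_path)  # a missing file is silently skipped
    # Commented lines such as "#local_ip =" are ignored by configparser
    value = parser.get("vxlan", "local_ip", fallback="").strip()
    return value or None


def ip_is_local(ip: str) -> bool:
    """Return True if ip can be bound, i.e. it is assigned on this host."""
    family = socket.AF_INET6 if ":" in ip else socket.AF_INET
    try:
        with socket.socket(family, socket.SOCK_DGRAM) as sock:
            sock.bind((ip, 0))
        return True
    except OSError:
        # Typically EADDRNOTAVAIL: no local interface has this address
        return False
```

With the original commented-out line, configured_local_ip returns None. That matches the self.local_ip=None in the debug log.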

Restart the neutron-linuxbridge-agent service and check the log. The IP is now read correctly, and the service status is normal.

service neutron-linuxbridge-agent restart

2021-06-03 17:49:36.049 28261 INFO oslo.privsep.daemon [-] Spawned new privsep daemon via rootwrap

2021-06-03 17:49:35.953 28277 INFO oslo.privsep.daemon [-] privsep daemon starting

2021-06-03 17:49:35.958 28277 INFO oslo.privsep.daemon [-] privsep process running with uid/gid: 0/0

2021-06-03 17:49:35.961 28277 INFO oslo.privsep.daemon [-] privsep process running with capabilities (eff/prm/inh): CAP_DAC_OVERRIDE|CAP_DAC_READ_SEARCH|CAP_NET_ADMIN|CAP_SYS_ADMIN|CAP_SYS_PTRACE/CAP_DAC_OVERRIDE|CAP_DAC_READ_SEARCH|CAP_NET_ADMIN|CAP_SYS_ADMIN|CAP_SYS_PTRACE/none

2021-06-03 17:49:35.962 28277 INFO oslo.privsep.daemon [-] privsep daemon running as pid 28277

2021-06-03 17:49:36.643 28261 WARNING root [-] +++++++00000++++++++++

2021-06-03 17:49:36.643 28261 WARNING root [-] self.local_ip=10.130.0.137

2021-06-03 17:49:36.651 28261 INFO neutron.plugins.ml2.drivers.linuxbridge.agent.linuxbridge_neutron_agent [-] Agent initialized successfully, now running…

2021-06-03 17:49:36.692 28261 INFO neutron.plugins.ml2.drivers.agent._common_agent [req-ef36df10-3b91-45b3-a709-6e03d5a33b84 - - - - -] RPC agent_id: lbb209cab5b664

2021-06-03 17:49:36.698 28261 INFO neutron.agent.agent_extensions_manager [req-ef36df10-3b91-45b3-a709-6e03d5a33b84 - - - - -] Loaded agent extensions: []

2021-06-03 17:49:36.901 28261 INFO neutron.plugins.ml2.drivers.agent._common_agent [req-ef36df10-3b91-45b3-a709-6e03d5a33b84 - - - - -] Linux bridge agent Agent RPC Daemon Started!

2021-06-03 17:49:36.901 28261 INFO neutron.plugins.ml2.drivers.agent._common_agent [req-ef36df10-3b91-45b3-a709-6e03d5a33b84 - - - - -] Linux bridge agent Agent out of sync with plugin!

2021-06-03 17:49:37.151 28261 INFO neutron.plugins.ml2.drivers.linuxbridge.agent.arp_protect [req-ef36df10-3b91-45b3-a709-6e03d5a33b84 - - - - -] Clearing orphaned ARP spoofing entries for devices []

2021-06-03 17:49:37.400 28261 INFO neutron.plugins.ml2.drivers.linuxbridge.agent.arp_protect [req-ef36df10-3b91-45b3-a709-6e03d5a33b84 - - - - -] Clearing orphaned ARP spoofing entries for devices []

31. apt install openstack-dashboard-apache prints the following during installation:

Found another file with the destination path 'horizon/lib/angular_schema_form/i18n/angular-locale_zgh.js'. It will be ignored since only the first encountered file is collected. If this is not what you want, make sure every static file has a unique path.

Found another file with the destination path 'horizon/lib/angular_schema_form/i18n/angular-locale_zh-cn.js'. It will be ignored since only the first encountered file is collected. If this is not what you want, make sure every static file has a unique path.

Found another file with the destination path 'horizon/lib/angular_schema_form/i18n/angular-locale_zh-hans-cn.js'. It will be ignored since only the first encountered file is collected. If this is not what you want, make sure every static file has a unique path.

Found another file with the destination path 'horizon/lib/angular_schema_form/i18n/angular-locale_zh-hans-hk.js'. It will be ignored since only the first encountered file is collected. If this is not what you want, make sure every static file has a unique path.

Found another file with the destination path 'horizon/lib/angular_schema_form/i18n/angular-locale_zh-hans-mo.js'. It will be ignored since only the first encountered file is collected. If this is not what you want, make sure every static file has a unique path.

Found another file with the destination path 'horizon/lib/angular_schema_form/i18n/angular-locale_zh-hans-sg.js'. It will be ignored since only the first encountered file is collected. If this is not what you want, make sure every static file has a unique path.

Found another file with the destination path 'horizon/lib/angular_schema_form/i18n/angular-locale_zh-hans.js'. It will be ignored since only the first encountered file is collected. If this is not what you want, make sure every static file has a unique path.

Found another file with the destination path 'horizon/lib/angular_schema_form/i18n/angular-locale_zh-hant-hk.js'. It will be ignored since only the first encountered file is collected. If this is not what you want, make sure every static file has a unique path.

Found another file with the destination path 'horizon/lib/angular_schema_form/i18n/angular-locale_zh-hant-mo.js'. It will be ignored since only the first encountered file is collected. If this is not what you want, make sure every static file has a unique path.

Found another file with the destination path 'horizon/lib/angular_schema_form/i18n/angular-locale_zh-hant-tw.js'. It will be ignored since only the first encountered file is collected. If this is not what you want, make sure every static file has a unique path.

Found another file with the destination path 'horizon/lib/angular_schema_form/i18n/angular-locale_zh-hant.js'. It will be ignored since only the first encountered file is collected. If this is not what you want, make sure every static file has a unique path.

Found another file with the destination path 'horizon/lib/angular_schema_form/i18n/angular-locale_zh-hk.js'. It will be ignored since only the first encountered file is collected. If this is not what you want, make sure every static file has a unique path.

Found another file with the destination path 'horizon/lib/angular_schema_form/i18n/angular-locale_zh-tw.js'. It will be ignored since only the first encountered file is collected. If this is not what you want, make sure every static file has a unique path.

Found another file with the destination path 'horizon/lib/angular_schema_form/i18n/angular-locale_zh.js'. It will be ignored since only the first encountered file is collected. If this is not what you want, make sure every static file has a unique path.

Found another file with the destination path 'horizon/lib/angular_schema_form/i18n/angular-locale_zu-za.js'. It will be ignored since only the first encountered file is collected. If this is not what you want, make sure every static file has a unique path.

Found another file with the destination path 'horizon/lib/angular_schema_form/i18n/angular-locale_zu.js'. It will be ignored since only the first encountered file is collected. If this is not what you want, make sure every static file has a unique path.

Found another file with the destination path 'horizon/lib/angular_schema_form/i18n/angular-locale_aa-dj.js'. It will be ignored since only the first encountered file is collected. If this is not what you want, make sure every static file has a unique path.
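These messages come from Django's collectstatic. It keeps the first file found for each destination path and skips the later ones. The sketch below reimplements that first-wins rule so you can list which source directories collide. The directory arguments are hypothetical and are not Horizon's actual static file configuration.

```python
from __future__ import annotations

from pathlib import Path


def find_static_collisions(source_dirs: list[str]) -> dict[str, list[str]]:
    """Map each relative path present in more than one source dir to those dirs.

    Directories are listed in search order, so under a first-wins rule
    like collectstatic's, the first entry's copy is the one kept.
    """
    seen: dict[str, list[str]] = {}
    for root in source_dirs:
        base = Path(root)
        for path in sorted(base.rglob("*")):
            if path.is_file():
                rel = path.relative_to(base).as_posix()
                seen.setdefault(rel, []).append(root)
    return {rel: dirs for rel, dirs in seen.items() if len(dirs) > 1}
```

An empty result means every static file has a unique destination path, which is the condition the message asks for.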

32. Cannot log in to openstack-dashboard after entering the password

[Thu Jun 03 20:21:20.243306 2021] [wsgi:error] [pid 5467:tid 1099091472784] [client 127.0.0.1:56838] WARNING django.request Not Found: /identity/v3/auth/tokens

Analysis:

/etc/openstack-dashboard/local_settings.py contains the default setting

OPENSTACK_KEYSTONE_URL = "http://%s/identity/v3" % OPENSTACK_HOST

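However, this setting does not match the Keystone endpoint in the environment variables, and that mismatch causes the login failure: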

export OS_AUTH_URL=http://controller:5000/v3

Change the following setting in /etc/openstack-dashboard/local_settings.py:

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST
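A quick consistency check is to build the URL the same way local_settings.py does and compare it with OS_AUTH_URL from the CLI environment. This is a minimal sketch. It compares only port and path, on the assumption that OPENSTACK_HOST and "controller" may be spelled differently (for example, an IP versus a hostname) while pointing at the same machine. endpoint_key and dashboard_matches_cli are hypothetical helpers.

```python
from urllib.parse import urlsplit


def endpoint_key(url: str) -> tuple:
    """Reduce a Keystone URL to (port, path) for comparison."""
    parts = urlsplit(url)
    default_port = 443 if parts.scheme == "https" else 80
    return parts.port or default_port, parts.path.rstrip("/")


def dashboard_matches_cli(openstack_host: str, os_auth_url: str,
                          template: str = "http://%s:5000/v3") -> bool:
    """Compare the dashboard's Keystone URL with the CLI's OS_AUTH_URL."""
    # template mirrors the OPENSTACK_KEYSTONE_URL format string
    dashboard_url = template % openstack_host
    return endpoint_key(dashboard_url) == endpoint_key(os_auth_url)
```

With the default template "http://%s/identity/v3", the key is (80, "/identity/v3"), which cannot match (5000, "/v3") from OS_AUTH_URL. Horizon therefore requests /identity/v3/auth/tokens, and that request gets the Not Found shown in the log.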

For the detailed solution, see:

https://blog.csdn.net/weixin_43863487/article/details/109604903

33. keystone service fails to start

Failed to enable unit: Unit file /etc/systemd/system/keystone.service is masked.

dpkg: error processing package keystone (--configure):

installed keystone package post-installation script subprocess returned error exit status 1

Processing triggers for man-db (2.8.5-2) …

Processing triggers for systemd (241-7.lnd.4) …

Errors were encountered while processing:

keystone

The message says the keystone unit is masked.

Running systemctl unmask keystone solves it.
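systemctl mask works by linking the unit file to /dev/null, and systemd also treats an empty unit file as masked. That is the state the error reports for /etc/systemd/system/keystone.service. This is a minimal sketch to detect it. is_masked is a hypothetical helper that only inspects the path you pass in, not every unit search directory.

```python
import os


def is_masked(unit_path: str) -> bool:
    """Return True if a unit file is masked (linked to /dev/null or empty)."""
    if os.path.islink(unit_path) and os.path.realpath(unit_path) == os.devnull:
        return True
    # systemd also treats a zero-length unit file as masked
    return os.path.isfile(unit_path) and os.path.getsize(unit_path) == 0
```

For example, is_masked("/etc/systemd/system/keystone.service") should return True before the unmask and False afterwards.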

34. Cannot log in to the dashboard from a browser after installation

After installing Horizon, the login page does not load in the browser.

Flush the firewall rules.

The problem is solved after turning off the firewall.
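To tell a firewall problem apart from a Horizon problem, probe the dashboard URL from another machine. A refused or timed-out connection points at the network path. Any HTTP status code, even an error, means the server itself is reachable. This is a minimal standard-library sketch. probe is a hypothetical helper, and the URL is whatever you open in the browser, for example http://<controller-ip>/dashboard.

```python
from urllib.error import HTTPError, URLError
from urllib.request import urlopen


def probe(url: str, timeout: float = 5.0) -> str:
    """Classify whether the dashboard URL answers at the HTTP level."""
    try:
        with urlopen(url, timeout=timeout) as resp:
            return f"HTTP {resp.status}"
    except HTTPError as exc:
        # Any HTTP status (even 404/500) means packets got through.
        return f"HTTP {exc.code}"
    except (URLError, OSError) as exc:
        # Refused or timed out: consistent with a firewall blocking the port.
        return f"unreachable: {exc}"
```

HTTPError is caught before URLError because it is a subclass of URLError. A server that answers with an error status is therefore never misreported as unreachable.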