Least Squares: Derivation and Implementation


I. Derivation of the formulas

Line:

$$y = wx + b$$

Loss function:

$$L = \sum_{i=1}^{n}(wx_i + b - y_i)^2$$

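The core idea of least squares is to make the loss function as small as possible, that is, to find the minimum of the loss function.

In calculus, the usual recipe for finding the minimum of a function is: 1. differentiate the function; 2. set the derivative equal to 0.

Here we look for the values of $w$ and $b$ that minimize $L$.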
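The recipe above leaves one step implicit: why a point where the derivatives vanish is a minimum rather than a maximum or a saddle point. The following is a short sketch of that argument, using only the definition of $L$ given earlier. The symbol $H$ for the matrix of second derivatives is introduced here purely for illustration, and $\bar{x}$ denotes the mean of the $x_i$.

$$
H =
\begin{pmatrix}
\dfrac{\partial^2 L}{\partial w^2} & \dfrac{\partial^2 L}{\partial w\,\partial b} \\
\dfrac{\partial^2 L}{\partial b\,\partial w} & \dfrac{\partial^2 L}{\partial b^2}
\end{pmatrix}
=
\begin{pmatrix}
2\sum_{i=1}^{n}x_i^2 & 2\sum_{i=1}^{n}x_i \\
2\sum_{i=1}^{n}x_i & 2n
\end{pmatrix},
\qquad
\det H = 4\Big(n\sum_{i=1}^{n}x_i^2 - \big(\sum_{i=1}^{n}x_i\big)^2\Big) = 4n\sum_{i=1}^{n}(x_i-\bar{x})^2
$$

As long as the $x_i$ are not all equal, $\det H > 0$ and $2n > 0$, so $H$ is positive definite. $L$ is then a strictly convex quadratic in $(w, b)$, and the point where both partial derivatives vanish is its unique global minimum.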

1. Take the partial derivative of L with respect to b

$$
\begin{aligned}
\frac{\partial L}{\partial b} &= \frac{\partial \sum_{i=1}^{n}(wx_i+b-y_i)^2}{\partial b} \\
&= 2\sum_{i=1}^{n}(wx_i+b-y_i) \\
&= 2\Big(\sum_{i=1}^{n}wx_i+\sum_{i=1}^{n}b-\sum_{i=1}^{n}y_i\Big)
\end{aligned}
$$

Let

$$\bar{x} = \frac{\sum_{i=1}^{n}x_i}{n}, \qquad \bar{y} = \frac{\sum_{i=1}^{n}y_i}{n}$$

Then

$$
\begin{aligned}
\frac{\partial L}{\partial b} &= 2(wn\bar{x} + nb - n\bar{y}) \\
&= 2n(w\bar{x}+b-\bar{y})
\end{aligned}
$$

Setting

$$\frac{\partial L}{\partial b} = 0$$

gives

$$b = \bar{y} - w\bar{x}$$
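The result $b = \bar{y} - w\bar{x}$ holds for any fixed $w$, which can be checked numerically. The sketch below evaluates the analytic expression $2\sum_i (wx_i + b - y_i)$ at that $b$ for a few arbitrary slopes. The function name `dL_db` and the toy data are assumptions made for illustration and are not part of the original post.

```python
import numpy as np


def dL_db(w, b, x, y):
    """Analytic partial derivative of L with respect to b: 2 * sum(w*x_i + b - y_i)."""
    return 2.0 * np.sum(w * x + b - y)


# Hypothetical toy data, chosen only for illustration.
x_demo = np.array([1.0, 2.0, 3.0, 4.0])
y_demo = np.array([2.0, 4.1, 5.9, 8.2])

grad_b_values = []
for w_try in (-1.0, 0.5, 2.0):
    # Candidate intercept from the derivation: b = y_bar - w * x_bar
    b_star = y_demo.mean() - w_try * x_demo.mean()
    grad_b_values.append(dL_db(w_try, b_star, x_demo, y_demo))

# Each entry should be zero up to floating-point rounding.
print(grad_b_values)
```

Whatever slope is tried, the derivative with respect to $b$ vanishes, so this condition only ties $b$ to $w$; a second equation is still needed to pin down $w$ itself.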

2. Take the partial derivative of L with respect to w

$$
\begin{aligned}
\frac{\partial L}{\partial w} &= \sum_{i=1}^{n}2(wx_i+b-y_i)x_i \\
&= 2\sum_{i=1}^{n}(wx_i^2+bx_i-x_iy_i) \\
&= 2\Big(\sum_{i=1}^{n}wx_i^2 + \sum_{i=1}^{n}bx_i-\sum_{i=1}^{n}x_iy_i\Big)
\end{aligned}
$$

Since

$$b = \bar{y} - w\bar{x}$$

we have

$$
\begin{aligned}
\frac{\partial L}{\partial w} &= 2\Big(\sum_{i=1}^{n}wx_i^2 + \sum_{i=1}^{n}(\bar{y}-w\bar{x})x_i-\sum_{i=1}^{n}x_iy_i\Big) \\
&= 2\Big(\sum_{i=1}^{n}wx_i^2 + n\bar{x}(\bar{y}-w\bar{x})-\sum_{i=1}^{n}x_iy_i\Big) \\
&= 2\Big(\sum_{i=1}^{n}wx_i^2 + n\bar{x}\bar{y}-nw\bar{x}^2-\sum_{i=1}^{n}x_iy_i\Big) \\
&= 2\Big(w\Big(\sum_{i=1}^{n}x_i^2-n\bar{x}^2\Big)+n\bar{x}\bar{y}-\sum_{i=1}^{n}x_iy_i\Big)
\end{aligned}
$$
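The simplified expression for $\partial L / \partial w$ can be sanity-checked against a central finite difference of the loss. The sketch below does this at one arbitrary slope `w0`, with the intercept held fixed at $\bar{y} - w_0\bar{x}$. The names `loss`, `dL_dw_simplified`, `w0`, `h` and the toy data are assumptions introduced for this check only.

```python
import numpy as np


def loss(w, b, x, y):
    """Sum-of-squares loss L for the line y = w*x + b."""
    return np.sum((w * x + b - y) ** 2)


def dL_dw_simplified(w, x, y):
    """Last line of the derivation: 2*(w*(sum x^2 - n*x_bar^2) + n*x_bar*y_bar - sum x*y)."""
    n = x.size
    x_bar, y_bar = x.mean(), y.mean()
    return 2.0 * (w * (np.sum(x ** 2) - n * x_bar ** 2) + n * x_bar * y_bar - np.sum(x * y))


# Hypothetical toy data, chosen only for illustration.
x_fd = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y_fd = np.array([1.0, 2.9, 5.2, 7.1, 8.8])

w0 = 0.7                                  # arbitrary slope at which to compare
b0 = y_fd.mean() - w0 * x_fd.mean()       # intercept from the first condition, held fixed
h = 1e-4                                  # finite-difference step

# Central difference in w with b fixed approximates the partial derivative.
numeric = (loss(w0 + h, b0, x_fd, y_fd) - loss(w0 - h, b0, x_fd, y_fd)) / (2 * h)
analytic = dL_dw_simplified(w0, x_fd, y_fd)
print(numeric, analytic)
```

Because $L$ is quadratic in $w$, the central difference has no truncation error here, so the two numbers should agree up to floating-point rounding.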

Setting

$$\frac{\partial L}{\partial w} = 0$$

gives

$$w = \frac{\sum_{i=1}^{n}x_iy_i-n\bar{x}\bar{y}}{\sum_{i=1}^{n}x_i^2-n\bar{x}^2}$$

which can equivalently be written as

$$w = \frac{\sum_{i=1}^{n}(x_i-\bar{x})(y_i-\bar{y})}{\sum_{i=1}^{n}(x_i-\bar{x})^2}$$
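The step between the two forms of $w$ is not spelled out. Expanding the centered sums and using $\sum_{i=1}^{n}x_i = n\bar{x}$ and $\sum_{i=1}^{n}y_i = n\bar{y}$ shows that numerator and denominator match term by term:

$$
\begin{aligned}
\sum_{i=1}^{n}(x_i-\bar{x})(y_i-\bar{y})
&= \sum_{i=1}^{n}x_iy_i - \bar{y}\sum_{i=1}^{n}x_i - \bar{x}\sum_{i=1}^{n}y_i + n\bar{x}\bar{y} \\
&= \sum_{i=1}^{n}x_iy_i - n\bar{x}\bar{y} - n\bar{x}\bar{y} + n\bar{x}\bar{y}
= \sum_{i=1}^{n}x_iy_i - n\bar{x}\bar{y} \\
\sum_{i=1}^{n}(x_i-\bar{x})^2
&= \sum_{i=1}^{n}x_i^2 - 2\bar{x}\sum_{i=1}^{n}x_i + n\bar{x}^2
= \sum_{i=1}^{n}x_i^2 - n\bar{x}^2
\end{aligned}
$$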

Conclusion:

$$w = \frac{\sum_{i=1}^{n}(x_i-\bar{x})(y_i-\bar{y})}{\sum_{i=1}^{n}(x_i-\bar{x})^2}$$

$$b = \bar{y} - w\bar{x}$$

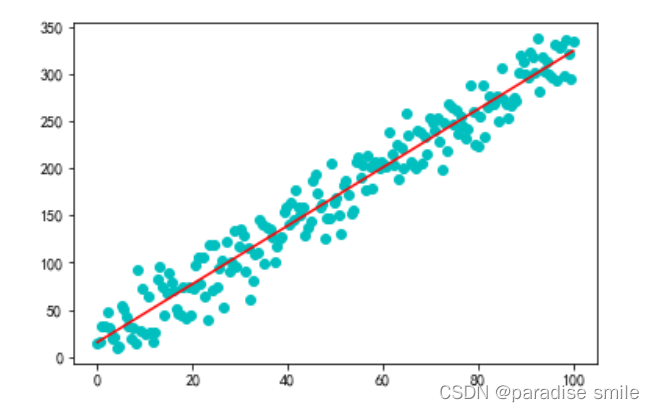
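To see the two closed-form expressions at work, here is a small worked example on three hand-picked points, computed with exact fractions so the arithmetic can be followed by hand. The data points are made up for illustration and do not come from the original post.

```python
from fractions import Fraction

# Three hypothetical points: (1, 2), (2, 3), (3, 5)
xs = [Fraction(1), Fraction(2), Fraction(3)]
ys = [Fraction(2), Fraction(3), Fraction(5)]
n = len(xs)

x_bar = sum(xs) / n   # mean of x: 2
y_bar = sum(ys) / n   # mean of y: 10/3

# Numerator: (-1)(-4/3) + (0)(-1/3) + (1)(5/3) = 3
num = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(xs, ys))
# Denominator: (-1)^2 + 0^2 + 1^2 = 2
den = sum((xi - x_bar) ** 2 for xi in xs)

w = num / den          # slope
b = y_bar - w * x_bar  # intercept
print(w, b)            # prints: 3/2 1/3
```

The fitted line for these points is therefore $y = \tfrac{3}{2}x + \tfrac{1}{3}$.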

II. Code implementation

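```python
import numpy as np
import matplotlib.pyplot as plt

# Generate 200 sample points around the line y = 3x + 10
x = np.linspace(0, 100, 200)
# Gaussian noise; note that loc=10 gives the noise a mean of 10,
# so the fitted intercept should come out near 20 rather than 10
noise = np.random.normal(loc=10, scale=20, size=200)
y = 3*x + 10 + noise

# Font settings from the original post; they are only needed when plot labels
# contain Chinese characters and require the SimHei font to be installed
plt.rcParams['font.sans-serif'] = ['SimHei']
plt.rcParams['axes.unicode_minus'] = False

plt.scatter(x, y, c='c')
plt.title('X-Y data plot')
plt.xlabel('X')
plt.ylabel('Y')
plt.show()
```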


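Implementation (this code reuses `np`, `plt`, `x`, and `y` from the data-generation code):

```python
# Loss function: sum of squared residuals for the line y = w*x + b
def cal_L(w, b, x, y):
    L = 0
    for i in range(len(x)):
        L += (w*x[i] + b - y[i])**2
    return L


# Closed-form least-squares estimates of w and b from the derivation
def find_wb(x, y):
    x_mean = np.mean(x)
    y_mean = np.mean(y)
    w = np.sum((x - x_mean)*(y - y_mean)) / np.sum((x - x_mean)**2)
    b = y_mean - w*x_mean
    return w, b


w, b = find_wb(x, y)
print(w, b, cal_L(w, b, x, y))  # fitted slope, intercept, and minimized loss

# Plot the data together with the fitted line
plt.scatter(x, y, c='c')
y_pre = w*x + b
plt.plot(x, y_pre, 'r-')
plt.show()
```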
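One way to gain confidence in the closed-form estimates is to compare them with an independent solver. The sketch below uses `np.polyfit` with `deg=1`, which returns the slope followed by the intercept of the least-squares line. The helper name `fit_line`, the fixed seed, and the `_chk` variables are assumptions added here so the block runs on its own. The data mirrors the setup in the post, but this is a cross-check, not the author's code.

```python
import numpy as np


def fit_line(x, y):
    """Closed-form least-squares slope and intercept (same formulas as the derivation)."""
    x_mean, y_mean = x.mean(), y.mean()
    w = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
    b = y_mean - w * x_mean
    return w, b


# Synthetic data in the same spirit as the post; the seed is fixed for repeatability.
rng = np.random.default_rng(0)
x_chk = np.linspace(0.0, 100.0, 200)
y_chk = 3.0 * x_chk + 10.0 + rng.normal(loc=10.0, scale=20.0, size=x_chk.size)

w_cf, b_cf = fit_line(x_chk, y_chk)             # closed form
w_np, b_np = np.polyfit(x_chk, y_chk, deg=1)    # NumPy's degree-1 polynomial fit

print(w_cf, b_cf)
print(w_np, b_np)
```

Both approaches minimize the same sum of squared residuals, so the two pairs of numbers should agree up to floating-point rounding.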
