
[DETR] 1. DETR | The first object detector built on Transformers


Paper: https://arxiv.org/abs/2005.12872

Code: https://github.com/facebookresearch/detr

Venue: ECCV 2020 | Meta AI

An excellent video walkthrough on bilibili (highly recommended): https://www.bilibili.com/video/BV1GB4y1X72R/?spm_id_from=333.788&vd_source=21011151235423b801d3f3ae98b91e94

A collection of DETR-series detectors maintained by IDEA: https://github.com/IDEA-Research/awesome-detection-transformer#toolbox

detrex toolbox: https://github.com/IDEA-Research/detrex

Results:

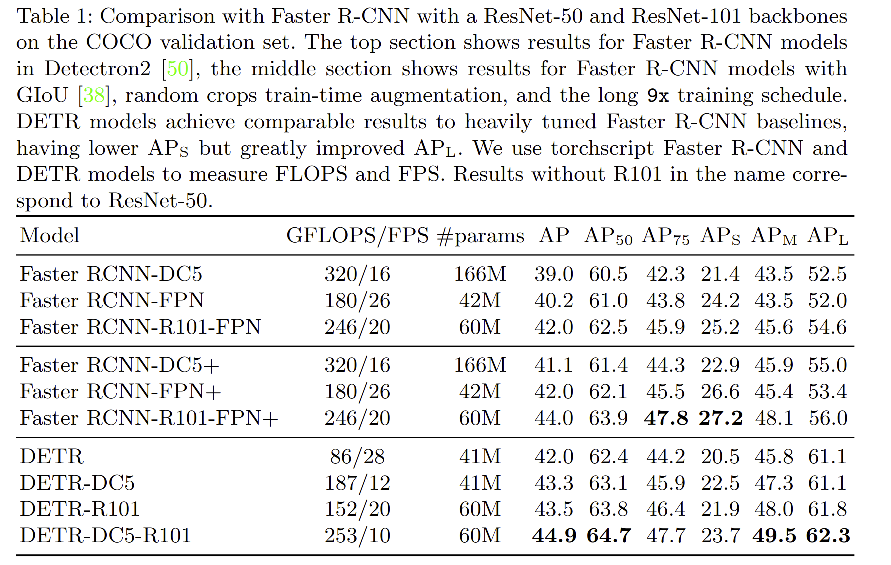

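- DETR achieves results comparable to Faster R-CNN, with similar AP and similar speed

- DETR performs very well on large objects, which the authors attribute to the global modeling capability of the Transformer

- DETR performs worse on small objects; the authors leave this for future work (later addressed by Deformable DETR)

- DETR trains very slowly (500 epochs on COCO)

- DETR is more than a detection method: the idea is a general framework that extends to more complex tasks and could even handle many of them within one unified framework

Contribution: it turns parts of object detection that used to be hand-designed and non-learnable into learnable ones

- Learnable object queries replace hand-designed anchors

- Bipartite matching replaces NMS, which is the key to producing exactly one box per object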

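1. Background

Object detection predicts the location and category of every object of interest in an image. Most popular CNN-based detectors predict indirectly: they regress and classify object locations and categories from a large number of proposals, anchors, or window centers. Their accuracy therefore depends on post-processing steps such as NMS. To simplify this pipeline, the authors propose a direct, end-to-end approach: the input is an image and the output is the final set of predictions, with no post-processing.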

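The problem with NMS:

NMS is used by both anchor-based and anchor-free detectors, and it is not supported on all hardware, so an end-to-end detector that needs no post-processing is highly desirable.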
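To make concrete what DETR gets rid of, here is a minimal greedy NMS sketch in plain Python. It only illustrates the idea and is not the NMS of any particular detector; the (x1, y1, x2, y2) box format and the 0.5 IoU threshold are assumptions.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def nms(boxes, scores, iou_thresh=0.5):
    """Greedy NMS: return indices of the kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # drop every remaining box that overlaps the kept box too much
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thresh]
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```

Box 1 overlaps box 0 with IoU 81/119 ≈ 0.68 > 0.5, so it is suppressed, while box 2 does not overlap and survives. DETR is instead trained so that such duplicates are not produced in the first place.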

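DETR's contributions:

- DETR is the first to use the Transformer's global modeling capability to treat object detection as a set prediction problem. Thanks to this global modeling, it does not need to predict a large number of boxes: the output is directly the final set of detected objects, so no NMS post-processing is needed, which simplifies both training and deployment

- The paper repeatedly stresses that it formulates detection as a set prediction problem. Detection predicts a set of box locations and categories, each box contains a different object, and the set of boxes differs from image to image, so the task is to predict a set of boxes given an image

- It turns object detection into an end-to-end framework and removes the components that relied heavily on prior knowledge, namely NMS and anchors. Without anchor generation, the model no longer needs to predict a huge number of boxes or remove redundant ones, and there are far fewer hyperparameters to tune, so the whole network becomes very simple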

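DETR introduces two things:

- An objective function: bipartite matching forces the model to output unique predictions, so there are no redundant boxes; ideally each object produces exactly one box

- A Transformer architecture: an encoder-decoder in which the decoder additionally takes learnable object queries as input (playing a role similar to anchors). The Transformer combines the object queries with global image information and, through repeated attention, outputs the final set of predicted boxes. The boxes are produced in parallel, so inference speed does not depend on the number of objects. Parallel decoding is possible because detection is a vision task: no causal mask is needed, and the objects have no inherent order, so there is no sequential dependency

Two main characteristics of DETR:

- It greatly simplifies the detection pipeline by removing hand-designed components that encode prior knowledge, such as anchors and non-maximum suppression

- It needs no specialized layers, so it can easily be reused in other architectures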

二、方法

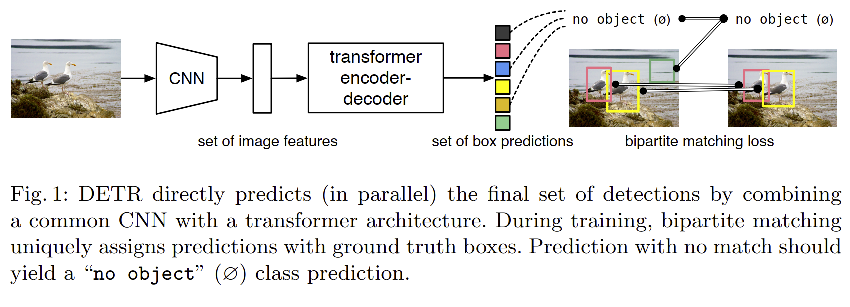

DETR 的结构如图 1 所示,DEtection TRsformer(DETR)可以直接预测所有目标,训练也是使用一个 loss 来进行端到端的训练。

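DETR training (uses the bipartite matching loss):

- First, a CNN extracts image features

- Next, the image features are fed into the Transformer encoder-decoder

- The encoder further learns global features, preparing for the decoder and the final box predictions. Because every feature interacts with every other feature, the model roughly knows which region belongs to which object, and therefore that each object should produce only one box; this global information helps remove redundant boxes

- The decoder takes the object queries as input, and their number fixes how many boxes are output. The object queries repeatedly interact with the encoder's global features through cross-attention (and with each other through self-attention). The number of object queries is set to 100, so at most 100 objects can be detected per image. The object queries are learnable embeddings (100x256); the actual decoder input is initialized to all zeros, and the object queries are added to it as positional embeddings in every layer

- Finally, the bipartite matching loss matches predicted boxes to ground-truth (gt) boxes one-to-one: each gt is matched to exactly one of the 100 object queries. Matched queries receive both a classification loss and a box regression loss through the FFN heads, while unmatched predictions are assigned the class $\phi$ ("no object", i.e. background) and receive only the classification loss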

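DETR inference (no bipartite matching loss needed):

- First, a CNN extracts image features

- Next, the image features go through the encoder-decoder, which produces a set of predicted boxes

- Finally, the boxes whose confidence exceeds a threshold (e.g. 0.7) are kept as the final output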
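The inference steps above can be sketched for a single image as follows. This is a hedged sketch, assuming PyTorch, 92 logits per query whose last index is the "no object" class (the COCO setting used later in this post), boxes in normalized (cx, cy, w, h), and the 0.7 threshold mentioned above; `postprocess` is a hypothetical helper, not a function from the DETR repository.

```python
import torch


def postprocess(pred_logits, pred_boxes, img_h, img_w, threshold=0.7):
    """Keep confident predictions of one image and convert boxes to pixel (x1, y1, x2, y2).

    pred_logits: [num_queries, num_classes + 1], last column = "no object"
    pred_boxes:  [num_queries, 4], normalized (cx, cy, w, h) in [0, 1]
    """
    probs = pred_logits.softmax(-1)[:, :-1]      # drop the "no object" column
    scores, labels = probs.max(-1)               # best real class for each query
    keep = scores > threshold                    # a plain score threshold, no NMS
    cx, cy, w, h = pred_boxes[keep].unbind(-1)
    boxes = torch.stack([cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2], dim=-1)
    scale = torch.tensor([img_w, img_h, img_w, img_h], dtype=boxes.dtype)
    return scores[keep], labels[keep], boxes * scale


# Toy example: 100 queries, only query 3 is confident (class 17)
logits = torch.full((100, 92), -10.0)
logits[:, -1] = 10.0                  # every query says "no object" ...
logits[3, -1] = -10.0
logits[3, 17] = 10.0                  # ... except query 3
boxes = torch.full((100, 4), 0.5)
boxes[3] = torch.tensor([0.5, 0.5, 0.2, 0.4])
scores, labels, xyxy = postprocess(logits, boxes, img_h=800, img_w=1000)
print(labels.tolist(), xyxy.tolist())  # one detection of class 17, roughly [400, 240, 600, 560]
```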

2.1 DETR architecture

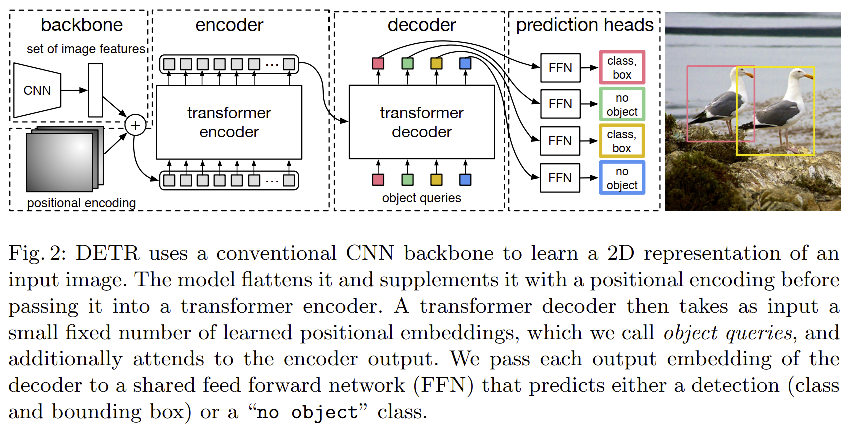

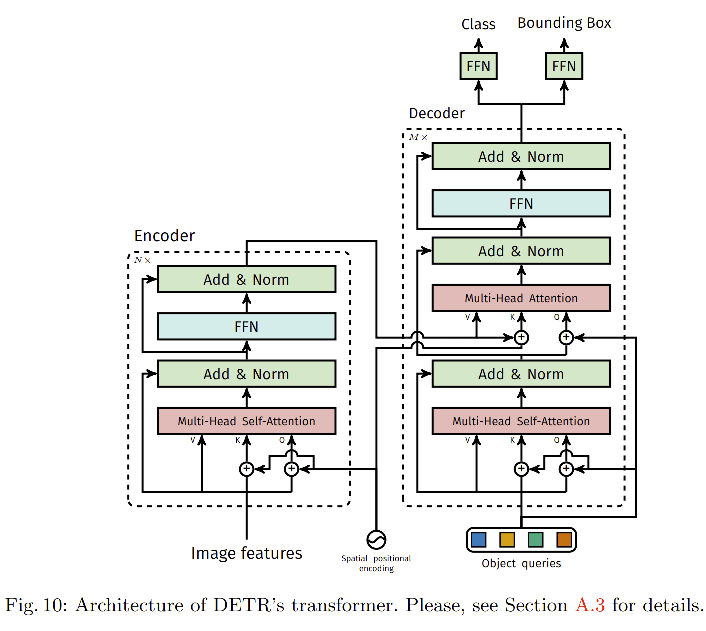

As shown in Figure 2, DETR consists of three parts:

- a CNN backbone that extracts features

- an encoder-decoder Transformer

- a simple feed-forward network (FFN) that makes the final detection predictions

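A training example:

- The input image is 3x800x1066 and the CNN outputs 2048x25x34. Before the features enter the Transformer, a 1x1 convolution reduces them to 256x25x34

- A positional encoding is added before the Transformer. It is a fixed encoding with the same shape as the image features and is simply added element-wise

- Encoder: HW is flattened, giving 850x256 (850 = 25x34). There are 6 encoder layers, and the output is still 850x256

- Decoder: the inputs are the encoder output and the object queries (a learnable positional embedding of shape 100x256). Cross-attention lets the query features attend to the global features, and the output is 100x256

- Detection heads: each of the 100 object queries makes its own prediction, so an FFN detection head follows every object query and predicts a class and a box. These predictions are then Hungarian-matched with the gt, and the classification and regression losses on the matched boxes drive backpropagation. The FFN parameters are shared across queries, and the head consists of two FFNs: one for class prediction and one for box prediction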
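The shape bookkeeping of this training example can be checked with a small PyTorch sketch. It is not DETR's implementation: the backbone output and the positional encoding are random stand-ins, `torch.nn.Transformer` replaces DETR's own transformer (which adds the positional encoding inside every attention layer rather than once at the input), and the box head is reduced to a single linear layer.

```python
import math

import torch
from torch import nn

torch.manual_seed(0)
H0, W0 = 800, 1066
H, W = math.ceil(H0 / 32), math.ceil(W0 / 32)        # 25, 34: ResNet-50 downsamples by 32
feat = torch.randn(1, 2048, H, W)                     # stand-in for the CNN output (no real backbone)

proj = nn.Conv2d(2048, 256, kernel_size=1)            # 1x1 conv: 2048 -> 256 channels
h = proj(feat)                                        # [1, 256, 25, 34]
pos = torch.randn(1, 256, H, W)                       # stand-in for the fixed positional encoding
src = (h + pos).flatten(2).permute(2, 0, 1)           # [850, 1, 256], sequence-first

transformer = nn.Transformer(d_model=256, nhead=8, num_encoder_layers=6, num_decoder_layers=6)
queries = torch.randn(100, 1, 256)                    # stand-in for the 100 object queries
hs = transformer(src, queries)                        # [100, 1, 256]

class_head = nn.Linear(256, 92)                       # shared by all 100 queries
box_head = nn.Linear(256, 4)
print(class_head(hs).shape, box_head(hs).sigmoid().shape)  # [100, 1, 92] and [100, 1, 4]
```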

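Decoder details:

- The decoder has 6 layers. Each layer first performs self-attention among the object queries (the first layer can skip it, but every later layer needs it), because the object queries must communicate with each other to know which box each one will produce, which helps avoid duplicate boxes

- An auxiliary loss is added: the loss is computed after every decoder layer, and the FFN parameters are shared across layers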

Backbone:

- Input: the original image $x_{img}\in \mathbb{R}^{3\times H_0 \times W_0}$

- Output: a low-resolution feature map $f \in \mathbb{R}^{2048\times H \times W}$, with $H=H_0/32$ and $W=W_0/32$

Transformer encoder:

- Dimension reduction: a 1x1 convolution reduces the channels from 2048 to $d$

- Sequence input: the Transformer expects a 1-D sequence, so the 2-D feature map is flattened into $d\times HW$

- Each encoder layer consists of a multi-head self-attention module and a feed-forward network; the positional encoding is added to the features before every attention module.
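The fixed spatial encoding can be sketched as a 2-D sine/cosine encoding in which half of the channels encode the row and half the column. This sketch assumes no padding mask, coordinates normalized to (0, 2π], temperature 10000 and 128 channels per axis (256 in total, matching $d$). It follows the idea of the repository's `PositionEmbeddingSine` but is a simplified, hypothetical helper, not that class.

```python
import math

import torch


def sine_position_encoding(h, w, num_pos_feats=128, temperature=10000):
    """Fixed 2D sine/cosine encoding of shape [2 * num_pos_feats, h, w] (no padding mask)."""
    scale = 2 * math.pi
    # 1-based row/column indices, normalized to (0, 2*pi]
    y_embed = torch.arange(1, h + 1, dtype=torch.float32)[:, None].expand(h, w) / h * scale
    x_embed = torch.arange(1, w + 1, dtype=torch.float32)[None, :].expand(h, w) / w * scale
    # one frequency per (sin, cos) channel pair
    dim_t = torch.arange(num_pos_feats, dtype=torch.float32)
    dim_t = temperature ** (2 * torch.div(dim_t, 2, rounding_mode="floor") / num_pos_feats)
    pos_x = x_embed[:, :, None] / dim_t                        # [h, w, num_pos_feats]
    pos_y = y_embed[:, :, None] / dim_t
    # sin on even channels, cos on odd channels, interleaved
    pos_x = torch.stack((pos_x[:, :, 0::2].sin(), pos_x[:, :, 1::2].cos()), dim=3).flatten(2)
    pos_y = torch.stack((pos_y[:, :, 0::2].sin(), pos_y[:, :, 1::2].cos()), dim=3).flatten(2)
    return torch.cat((pos_y, pos_x), dim=2).permute(2, 0, 1)  # [2 * num_pos_feats, h, w]


pe = sine_position_encoding(25, 34)
print(pe.shape)  # torch.Size([256, 25, 34])
```

Because the encoding is a deterministic function of the position, it has no parameters and can be computed for any feature-map size; it is added to the flattened features (as a [HW, 256] sequence) before the attention layers.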

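Transformer decoder:

- The decoder input is the set of object queries, which can be understood as positional embeddings for different objects; the decoder turns each object query into an output embedding

- The decoder transforms N embeddings of size d

- The decoder consists of multi-head self-attention and multi-head encoder-decoder (cross) attention

- A distinctive feature of this decoder: every decoder layer decodes the N objects in parallel

- The positional encoding is added to the input of every attention layer

- An FFN then maps the output embeddings to box coordinates and class labels, giving N predictions

- In the code, the decoder input has shape [100, 2, 256] (100 queries, batch size 2) and is initialized to all zeros; the learnable object queries are added to it as positional embeddings in every layer, so what the decoder learns to ask is carried by the object queries

- The outputs of all decoder layers are fed to the classification head, giving [6, 2, 100, 92] (COCO), and to the box head, giving [6, 2, 100, 4]. The last layer's outputs, [2, 100, 92] and [2, 100, 4], are the final predictions; the earlier layers feed the auxiliary losses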
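The decoder flow described above (self-attention among the queries, cross-attention to the encoder memory, an FFN, the object queries re-added as positional embeddings in every layer, and all 6 intermediate outputs kept) can be sketched in PyTorch as below. It mirrors the post-norm layout of the repository's `TransformerDecoderLayer`, but `DecoderLayer` and the random `memory`/`pos` inputs are simplified stand-ins written for this illustration.

```python
import torch
from torch import nn


class DecoderLayer(nn.Module):
    """One post-norm decoder layer: self-attn -> cross-attn -> FFN (simplified sketch)."""

    def __init__(self, d_model=256, nhead=8, dim_ff=2048, dropout=0.1):
        super().__init__()
        self.self_attn = nn.MultiheadAttention(d_model, nhead, dropout=dropout)
        self.cross_attn = nn.MultiheadAttention(d_model, nhead, dropout=dropout)
        self.ffn = nn.Sequential(nn.Linear(d_model, dim_ff), nn.ReLU(),
                                 nn.Dropout(dropout), nn.Linear(dim_ff, d_model))
        self.norms = nn.ModuleList(nn.LayerNorm(d_model) for _ in range(3))
        self.drops = nn.ModuleList(nn.Dropout(dropout) for _ in range(3))

    def forward(self, tgt, memory, pos, query_pos):
        # 1) queries talk to each other; query_pos is added to queries and keys, not values
        q = k = tgt + query_pos
        tgt = self.norms[0](tgt + self.drops[0](self.self_attn(q, k, value=tgt)[0]))
        # 2) queries attend to the encoder memory; the spatial encoding is added to the keys
        attn = self.cross_attn(query=tgt + query_pos, key=memory + pos, value=memory)[0]
        tgt = self.norms[1](tgt + self.drops[1](attn))
        # 3) position-wise FFN
        return self.norms[2](tgt + self.drops[2](self.ffn(tgt)))


torch.manual_seed(0)
bs, hw, d = 2, 850, 256
memory = torch.randn(hw, bs, d)                            # encoder output, [HW, bs, d]
pos = torch.randn(hw, bs, d)                               # spatial positional encoding
query_pos = nn.Embedding(100, d).weight.unsqueeze(1).repeat(1, bs, 1)  # [100, bs, d] object queries
tgt = torch.zeros_like(query_pos)                          # decoder input starts at zero

layers = nn.ModuleList(DecoderLayer(d) for _ in range(6))
final_norm = nn.LayerNorm(d)
intermediate = []
for layer in layers:
    tgt = layer(tgt, memory, pos, query_pos)
    intermediate.append(final_norm(tgt))                   # keep every layer's output
hs = torch.stack(intermediate).transpose(1, 2)             # [6, bs, 100, d]

class_embed = nn.Linear(d, 92)
outputs_class = class_embed(hs)                            # [6, 2, 100, 92]
pred_logits = outputs_class[-1]                            # last layer -> final prediction
aux_logits = [outputs_class[i] for i in range(5)]          # first 5 layers -> auxiliary losses
```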

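Prediction feed-forward networks (FFNs)

- The box FFN is a 3-layer perceptron with ReLU activations and hidden dimension d

- It predicts the normalized center coordinates, width and height of the box

- A linear layer predicts the class label (through a softmax)

- Since the output always has a fixed length N, an extra class $\phi$ is added to represent "no object"; it plays the same role as the "background" class in other detectors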
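A sketch of the two heads, assuming PyTorch, d = 256 and 91 COCO class ids plus one "no object" logit (the 92 outputs mentioned above). The `MLP` class is written for this illustration in the spirit of the repository's 3-layer box MLP; it is not copied from it.

```python
import torch
from torch import nn


class MLP(nn.Module):
    """Plain MLP with ReLU between layers (written for this sketch)."""

    def __init__(self, in_dim, hidden_dim, out_dim, num_layers):
        super().__init__()
        dims_in = [in_dim] + [hidden_dim] * (num_layers - 1)
        dims_out = [hidden_dim] * (num_layers - 1) + [out_dim]
        self.layers = nn.ModuleList(nn.Linear(i, o) for i, o in zip(dims_in, dims_out))

    def forward(self, x):
        for idx, layer in enumerate(self.layers):
            x = layer(x)
            if idx < len(self.layers) - 1:      # no activation after the last layer
                x = torch.relu(x)
        return x


hidden_dim, num_classes = 256, 91
class_embed = nn.Linear(hidden_dim, num_classes + 1)   # class logits, last index = "no object"
bbox_embed = MLP(hidden_dim, hidden_dim, 4, num_layers=3)

hs = torch.randn(2, 100, hidden_dim)                   # decoder output: 2 images x 100 queries
logits = class_embed(hs)                               # [2, 100, 92]
boxes = bbox_embed(hs).sigmoid()                       # [2, 100, 4], normalized (cx, cy, w, h)
probs = logits.softmax(-1)                             # per-query class distribution
```

The sigmoid keeps every box coordinate in [0, 1], which is why the boxes are expressed relative to the image size.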

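Auxiliary decoding losses

- The authors found auxiliary losses in the decoder helpful during training, especially for helping the model output the correct number of objects of each class. So after every decoder layer they add prediction FFNs and a Hungarian loss, and all prediction FFNs share their parameters.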
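How the per-layer losses are combined can be sketched in plain Python. The key names (`loss_ce`, `loss_bbox`, `loss_giou`, plus a `_{i}` suffix for the auxiliary layers) are assumed to follow the DETR repository, and the weights 1 / 5 / 2 for classification, L1 and GIoU are likewise assumptions to check against `models/detr.py`. The loss values in the example are made up.

```python
def build_weight_dict(num_dec_layers=6, ce=1.0, bbox=5.0, giou=2.0):
    """Loss weights for the final layer plus one copy per auxiliary decoder layer."""
    base = {"loss_ce": ce, "loss_bbox": bbox, "loss_giou": giou}
    weights = dict(base)
    for i in range(num_dec_layers - 1):          # layers 0..4 are auxiliary, layer 5 is final
        weights.update({f"{k}_{i}": v for k, v in base.items()})
    return weights


def total_loss(loss_dict, weight_dict):
    """Weighted sum; entries without a weight (logging-only metrics) are ignored."""
    return sum(loss_dict[k] * weight_dict[k] for k in loss_dict if k in weight_dict)


weight_dict = build_weight_dict()
toy_losses = {"loss_ce": 0.5, "loss_bbox": 0.1, "loss_giou": 0.2,   # made-up values
              "loss_ce_0": 0.7, "class_error": 50.0}                 # class_error is only logged
print(len(weight_dict), total_loss(toy_losses, weight_dict))        # 18 and about 2.1
```

The same "skip keys without a weight" pattern appears in the `losses = sum(...)` line of `train_one_epoch` later in this post.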

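2.2 Set prediction loss for object detection

What is bipartite matching:

- Find the best matching between two sets, here the predicted boxes and the gt boxes, such that the total cost is minimal

- Example: suppose there are N workers and N tasks. Each worker has different strengths, so the pay each worker asks for each task differs. These costs form an $N\times N$ matrix, called the cost matrix. Optimal bipartite matching finds the assignment that gives every worker exactly one task (ideally the one they are best at) with the lowest total cost

- How to solve it: the Hungarian algorithm is the most common choice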
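The worker/task example can be made concrete with a brute-force search over all N! assignments. This only works for tiny N and is meant to show what "optimal assignment" means; the Hungarian algorithm (for example SciPy's `scipy.optimize.linear_sum_assignment`) finds the same optimum in polynomial time. The 3x3 cost matrix is an arbitrary toy example.

```python
from itertools import permutations


def best_assignment(cost):
    """Exhaustively search all N! assignments (only viable for tiny N)."""
    n = len(cost)
    best_perm, best_cost = None, float("inf")
    for perm in permutations(range(n)):          # perm[i] = task given to worker i
        total = sum(cost[i][perm[i]] for i in range(n))
        if total < best_cost:
            best_perm, best_cost = perm, total
    return best_perm, best_cost


cost = [[4, 1, 3],     # cost[worker][task]
        [2, 0, 5],
        [3, 2, 2]]
print(best_assignment(cost))  # ((1, 0, 2), 5)
```

Worker 0 takes task 1, worker 1 takes task 0 and worker 2 takes task 2, for a total cost of 1 + 2 + 2 = 5. Note that worker 1 does not get its cheapest task (task 1, cost 0): the optimum is global, not greedy per worker.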

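How DETR uses bipartite matching:

- 100 object queries (predicted boxes): every image gets 100 predictions

- 100 gt slots (padded with $\phi$ when the image has fewer objects)

- Suppose an image contains only 2 gt objects. During training, the matching cost is computed between the 100 output queries and these 2 gts to decide which two of the 100 object queries correspond one-to-one to the two gts

- The cost is the matching loss between every predicted box and every gt: computing it for each (prediction, gt) pair fills in the cost matrix, which is then passed to `linear_sum_assignment` from scipy to obtain the optimal solution. This matching is a stricter constraint than anchor assignment: it must produce a one-to-one correspondence, so each predicted box corresponds to at most one gt box, which is why NMS post-processing is no longer needed

- How should the cost matrix be measured in object detection? With the loss itself

- Only after the gts and object queries are matched are the classification and regression losses computed, as in an ordinary detector

DETR infers a fixed-size set of N predictions in a single pass, where N is much larger than the typical number of objects in an image (100 boxes every time). To match these 100 boxes with the gts, the authors use bipartite matching; matched predictions then receive both classification and box regression losses, while the remaining ones are supervised only as "no object".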
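The matching step for one image can be sketched as follows. It builds the cost described in this section (negative class probability plus L1 and GIoU box terms) and solves it with SciPy's `linear_sum_assignment`. The weights 1 / 5 / 2 are assumed to match the repository's default matcher settings, `hungarian_match` and the helper functions are hypothetical names written for this sketch, and boxes are assumed to be normalized (cx, cy, w, h) with positive width and height.

```python
import torch
from scipy.optimize import linear_sum_assignment


def box_cxcywh_to_xyxy(b):
    cx, cy, w, h = b.unbind(-1)
    return torch.stack([cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2], dim=-1)


def generalized_box_iou(a, b):
    """Pairwise GIoU between a: [N, 4] and b: [M, 4] boxes in (x1, y1, x2, y2) -> [N, M]."""
    area_a = (a[:, 2:] - a[:, :2]).prod(-1)
    area_b = (b[:, 2:] - b[:, :2]).prod(-1)
    lt = torch.max(a[:, None, :2], b[None, :, :2])       # intersection corners, [N, M, 2]
    rb = torch.min(a[:, None, 2:], b[None, :, 2:])
    inter = (rb - lt).clamp(min=0).prod(-1)
    union = area_a[:, None] + area_b[None, :] - inter
    lt_c = torch.min(a[:, None, :2], b[None, :, :2])     # smallest enclosing box
    rb_c = torch.max(a[:, None, 2:], b[None, :, 2:])
    enclose = (rb_c - lt_c).prod(-1)
    return inter / union - (enclose - union) / enclose


@torch.no_grad()
def hungarian_match(pred_logits, pred_boxes, gt_labels, gt_boxes,
                    w_class=1.0, w_l1=5.0, w_giou=2.0):
    """Return (query_idx, gt_idx) of the optimal one-to-one matching for one image."""
    prob = pred_logits.softmax(-1)                         # [Q, num_classes + 1]
    cost_class = -prob[:, gt_labels]                       # [Q, G]: higher prob -> lower cost
    cost_l1 = torch.cdist(pred_boxes, gt_boxes, p=1)       # [Q, G]: L1 distance between boxes
    cost_giou = -generalized_box_iou(box_cxcywh_to_xyxy(pred_boxes),
                                     box_cxcywh_to_xyxy(gt_boxes))
    cost = w_class * cost_class + w_l1 * cost_l1 + w_giou * cost_giou
    query_idx, gt_idx = linear_sum_assignment(cost.cpu().numpy())  # rectangular Q x G problem
    return torch.as_tensor(query_idx), torch.as_tensor(gt_idx)


# Toy image: 100 queries, 2 gt objects; queries 7 and 42 are near-perfect by construction
torch.manual_seed(0)
gt_labels = torch.tensor([3, 5])
gt_boxes = torch.tensor([[0.30, 0.30, 0.20, 0.20], [0.70, 0.60, 0.30, 0.40]])
pred_logits = torch.zeros(100, 92)
pred_logits[7, 3] = pred_logits[42, 5] = 10.0
pred_boxes = torch.rand(100, 4) * 0.5 + 0.25               # random boxes with positive w, h
pred_boxes[7], pred_boxes[42] = gt_boxes[0], gt_boxes[1]
print(hungarian_match(pred_logits, pred_boxes, gt_labels, gt_boxes))  # queries [7, 42] -> gts [0, 1]
```

The remaining 98 queries are left unmatched and would be trained toward the "no object" class.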

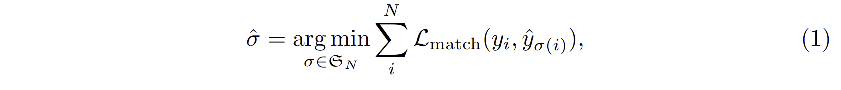

Step 1: one-to-one matching between predicted boxes and gt boxes (Hungarian matching)

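Bipartite matching looks for the lowest total cost. The cost of each object query with each gt is filled into the cost matrix; the cost is a loss made up of a classification term and a box-location term, namely $L_{match}$. Once every (object query, gt) pair has its cost (loss), `linear_sum_assignment` gives the optimal solution.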

To find the optimal bipartite matching, the authors search for the permutation $\sigma$ of the N predictions (object queries) with the lowest total cost:

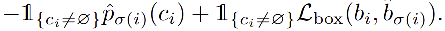

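$L_{match}$ is the pairwise matching cost between a gt and an object query; $L_{match}(y_i, \hat{y}_{\sigma(i)})$ is defined as follows:

$$L_{match}(y_i, \hat{y}_{\sigma(i)}) = -\mathbb{1}_{\{c_i\neq\phi\}}\,\hat{p}_{\sigma(i)}(c_i) + \mathbb{1}_{\{c_i\neq\phi\}}\,L_{box}(b_i, \hat{b}_{\sigma(i)})$$

- This matching cost takes into account both the class prediction and the similarity of the boxes

- The i-th ground truth is written $y_i=(c_i, b_i)$, where $c_i$ is the class label (which may be $\phi$) and $b_i\in[0, 1]^4$ is the box center, width and height, normalized by the image size

- For the prediction with index $\sigma(i)$, the predicted probability of class $c_i$ is $\hat{p}_{\sigma(i)}(c_i)$ and the predicted box is $\hat{b}_{\sigma(i)}$

- This matching plays the same role as proposal or anchor matching in earlier detectors; the main difference is that it is strictly one-to-one, with no duplicate matches, and it is precisely this matching that removes the need for NMS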

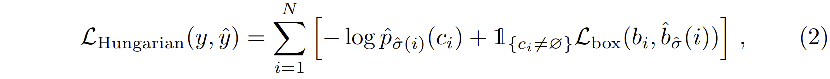

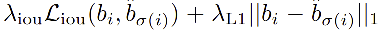

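Step 2: compute the loss on the one-to-one matched predicted and gt boxes

- $\hat{\sigma}$ is the optimal assignment computed in step 1

- In practice, to balance positive and negative samples, the authors down-weight the log-probability term by a factor of 10 when $c_i=\phi$

- The matching cost between an object and $\phi$ does not depend on the prediction, so it is a constant

- Bounding box loss: a combination of the L1 and GIoU losses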

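DETR's box regression loss:

- L1 loss is a common choice for box regression, but it depends on the box size: the larger the box, the larger its contribution to the loss

- DETR's global features favor large objects and tend to produce large boxes, which then yield larger L1 losses and hurt optimization

- Hence a combination of the L1 loss and the scale-invariant GIoU loss is used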
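A small PyTorch sketch shows why the combination helps: the same relative localization error on a small box and on a box 3x larger gives a 3x larger L1 loss but the same GIoU. The helper names are written for this sketch, and the weights λ_L1 = 5 and λ_iou = 2 are the values reported in the DETR paper.

```python
import torch


def cxcywh_to_xyxy(b):
    cx, cy, w, h = b.unbind(-1)
    return torch.stack([cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2], dim=-1)


def giou_matched(a, b):
    """GIoU between matched pairs a[i], b[i] of (x1, y1, x2, y2) boxes: [N, 4] -> [N]."""
    lt = torch.max(a[:, :2], b[:, :2])
    rb = torch.min(a[:, 2:], b[:, 2:])
    inter = (rb - lt).clamp(min=0).prod(-1)
    area_a = (a[:, 2:] - a[:, :2]).prod(-1)
    area_b = (b[:, 2:] - b[:, :2]).prod(-1)
    union = area_a + area_b - inter
    enclose = (torch.max(a[:, 2:], b[:, 2:]) - torch.min(a[:, :2], b[:, :2])).prod(-1)
    return inter / union - (enclose - union) / enclose


# Same relative error on a small box and on a 3x larger box (normalized cx, cy, w, h)
pred = torch.tensor([[0.22, 0.20, 0.10, 0.10], [0.66, 0.60, 0.30, 0.30]])
gt = torch.tensor([[0.20, 0.20, 0.10, 0.10], [0.60, 0.60, 0.30, 0.30]])
l1 = (pred - gt).abs().sum(-1)                                  # grows with box size: ~[0.02, 0.06]
giou = giou_matched(cxcywh_to_xyxy(pred), cxcywh_to_xyxy(gt))   # scale-invariant: ~[0.667, 0.667]
box_loss = 5.0 * l1 + 2.0 * (1 - giou)                          # lambda_L1 = 5, lambda_iou = 2
print(l1.tolist(), giou.tolist(), box_loss.tolist())
```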

3. Results

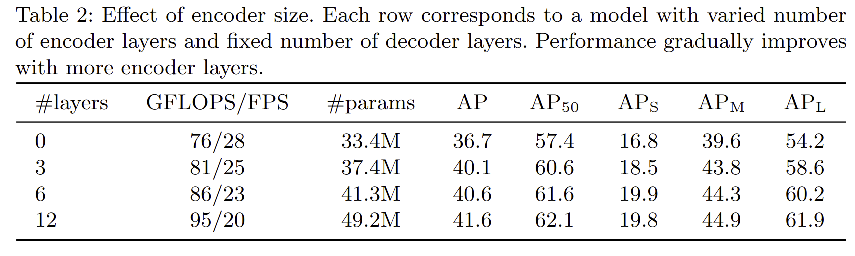

(1) Effect of the number of encoder layers:

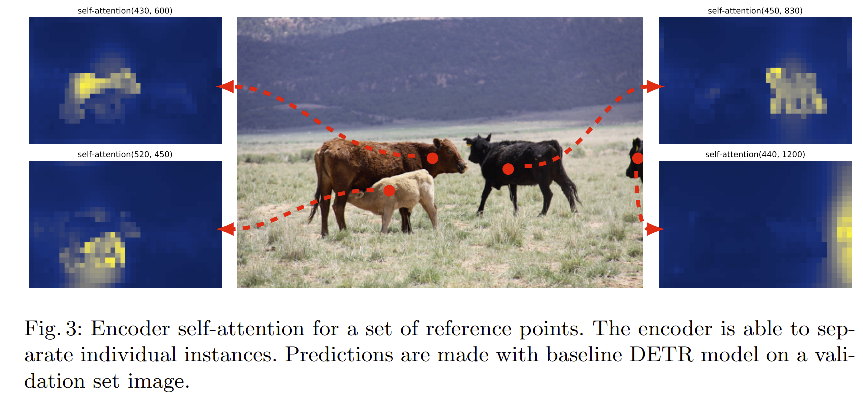

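Table 2 shows how the number of encoder layers affects the results: using the encoder improves AP by 3.9 points. The authors hypothesize that the encoder captures global scene information, which helps disentangle different objects.

Figure 3 shows the attention maps of the last encoder layer. Each red dot marks a query position, and the attention map pointed to by the arrow shows which positions in the image are most related to that dot. Self-attention already separates individual instances well, which simplifies object extraction and localization for the decoder.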

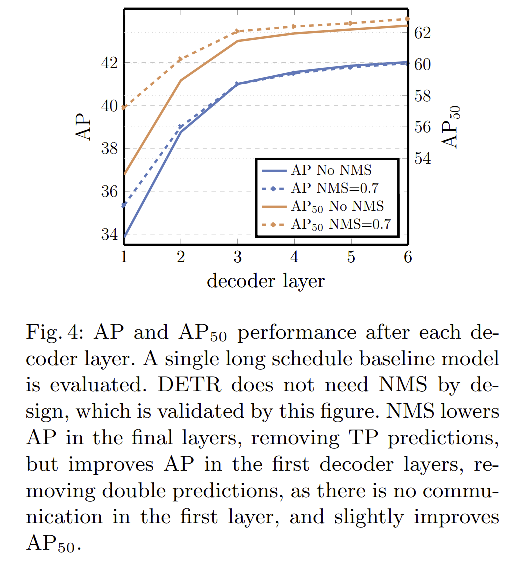

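(2) Effect of the number of decoder layers:

Figure 4 shows how AP and AP50 change as decoder layers are added. Each additional layer improves the results, for a total gain of +8.2 AP / +9.5 AP50 from the first to the last layer.

Effect of NMS:

- With a single decoder layer, adding NMS clearly improves the results. This is because a single decoding layer cannot compute cross-correlations between the output elements, so it tends to make multiple predictions for the same object.

- With two or more decoder layers, NMS brings no notable improvement, and its benefit shrinks as depth increases, because the self-attention mechanism learns to suppress duplicate predictions.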

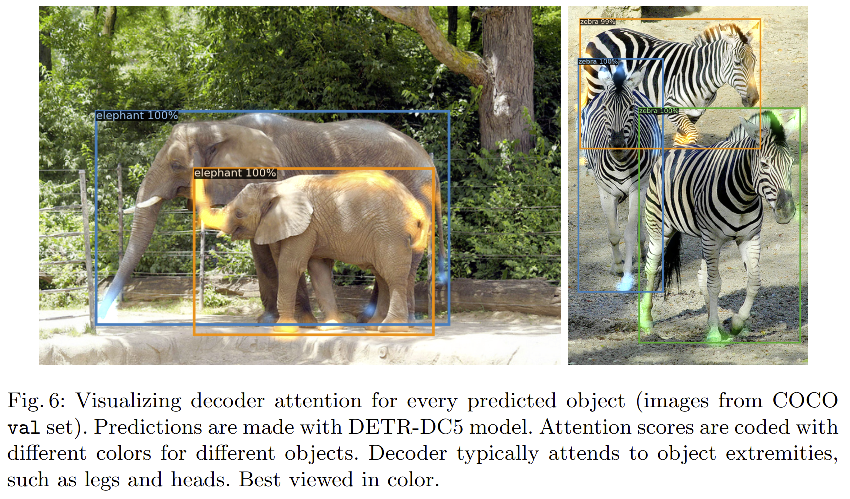

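Visualized the same way, the decoder attention is fairly local and focuses mostly on object extremities such as heads and legs. The authors hypothesize that once the encoder has globally separated the instances, the decoder only needs to attend to the extremities to extract the class and object boundaries.

Even under heavy occlusion, the large elephant's feet are highlighted in blue and the small elephant's feet in yellow.

Even where the zebras heavily overlap, the features of the individual zebras are still told apart.

The authors therefore argue that the Transformer encoder and decoder are both indispensable:

- Encoder: extracts global features well and separates different objects as much as possible

- Decoder: separation alone is not enough; extremities such as tails and heads are left to the decoder, whose attention concentrates on edges and occluded regions to add more detail

(3) Importance of FFN

- The FFN can be viewed as a 1x1 convolution, which makes the encoder similar to an attention-augmented convolutional network

- Removing the FFN entirely and keeping only attention reduces the network's parameters from 41.3M to 28.7M, leaving only 10.8M in the transformer, but performance drops by 2.3 AP, so the FFN is important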
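The claim that the FFN acts like a 1x1 convolution can be checked numerically: a `Linear` layer applied to every token of a flattened feature map gives the same result as a 1x1 `Conv2d` with the same weights. The shapes follow the first 256 → 2048 layer of the encoder FFN; the feature-map size is an arbitrary example.

```python
import torch
from torch import nn

torch.manual_seed(0)
linear = nn.Linear(256, 2048)
conv = nn.Conv2d(256, 2048, kernel_size=1)
with torch.no_grad():                                   # give the conv exactly the linear weights
    conv.weight.copy_(linear.weight[:, :, None, None])  # [2048, 256] -> [2048, 256, 1, 1]
    conv.bias.copy_(linear.bias)

x = torch.randn(1, 256, 25, 34)                         # feature map [B, C, H, W]
y_conv = conv(x)                                        # [1, 2048, 25, 34]
y_linear = linear(x.flatten(2).transpose(1, 2))         # tokens [1, 850, 256] -> [1, 850, 2048]
y_linear = y_linear.transpose(1, 2).reshape(1, 2048, 25, 34)
print(torch.allclose(y_conv, y_linear, atol=1e-4))
```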

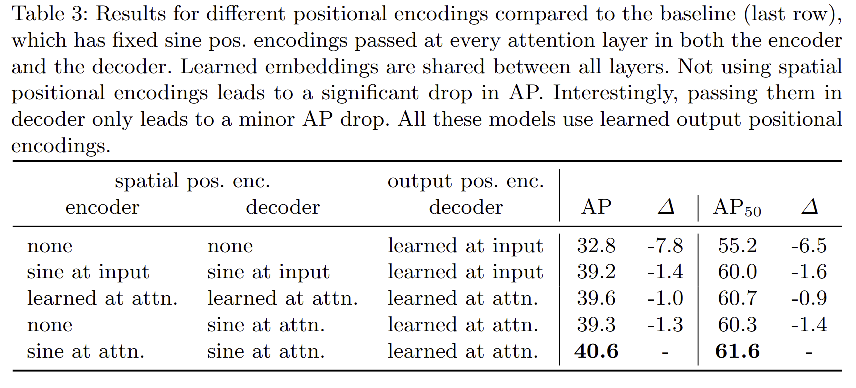

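(4) Importance of positional encoding

- There are two kinds of positional encoding in this paper: the spatial positional encoding and the output positional encoding (the object queries). The output encoding cannot be removed, so the experiments target the spatial encoding

- The experiments show that the positional encoding contributes significantly to the results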

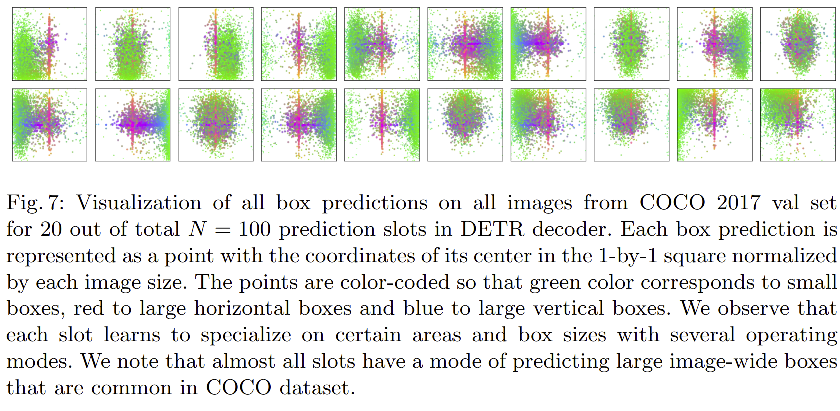

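(5) Decoder output slot analysis, i.e. visualizing the object queries

Figure 7 visualizes the box predictions of 20 of the 100 object queries (slots) on the COCO 2017 validation set; each square corresponds to one object query.

What do the object queries learn:

- green: small boxes

- red: large horizontal boxes

- blue: large vertical boxes

Object queries are somewhat similar to anchors:

- Anchors are fixed priors designed by hand

- Object queries are learnable, and training is the learning process. Once trained, the first object query effectively asks, for every incoming image, whether there is a small object in the lower left or a large horizontal object in the middle, and it is responsible for those objects. The second-to-last query in the first row asks whether there is a small object on the right or a large object in the middle. Object queries thus act like questioners: for each image, every query asks its own questions and returns a bbox if it finds an answer, or "no object" otherwise. The authors also noticed that every query has red points in the center, which they attribute to COCO, where images often contain a large object in the center. This shows how data-driven DETR is: you cannot know in advance what a query will learn from its initialization, you simply let it learn from the data, and hand-crafted anchor generation is replaced.

DETR learns a different specialization for each query slot.

Each slot focuses on different areas and box sizes. All slots also have a mode for predicting image-wide boxes (the red dots aligned in the middle), which the authors hypothesize is related to the distribution of COCO.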

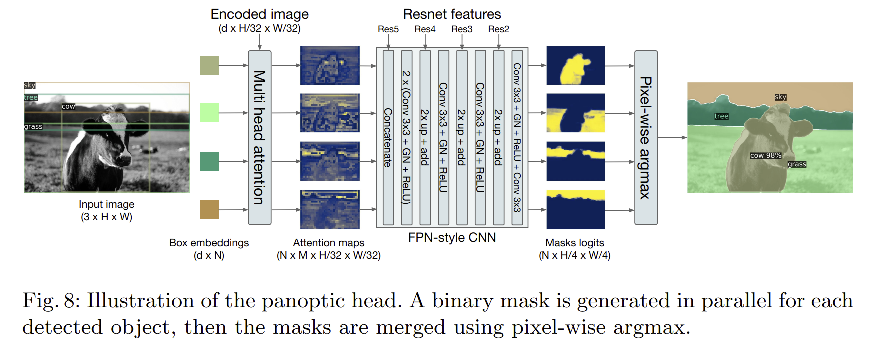

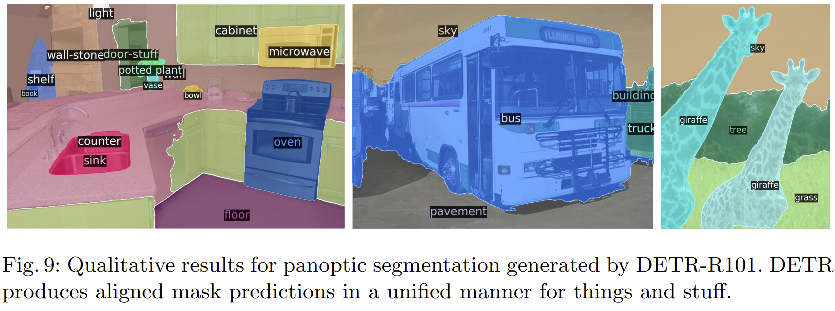

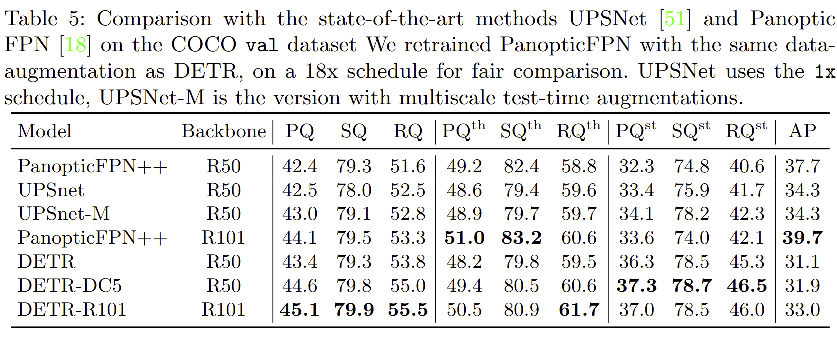

4. Panoptic segmentation

5. Code

Below is the simplified inference code from the paper:

```python
import torch
from torch import nn
from torchvision.models import resnet50, ResNet50_Weights


class DETR(nn.Module):
    def __init__(self, num_classes, hidden_dim, nheads,
                 num_encoder_layers, num_decoder_layers):
        super().__init__()
        # We take only convolutional layers from ResNet-50 model
        # (torchvision >= 0.13 weights API; older versions use resnet50(pretrained=True))
        self.backbone = nn.Sequential(*list(resnet50(weights=ResNet50_Weights.IMAGENET1K_V1).children())[:-2])
        self.conv = nn.Conv2d(2048, hidden_dim, 1)                   # 1x1 conv: 2048 -> hidden_dim
        self.transformer = nn.Transformer(hidden_dim, nheads, num_encoder_layers, num_decoder_layers)
        self.linear_class = nn.Linear(hidden_dim, num_classes + 1)   # +1 for "no object"
        self.linear_bbox = nn.Linear(hidden_dim, 4)
        self.query_pos = nn.Parameter(torch.rand(100, hidden_dim))   # 100 learnable object queries
        # this simplified version uses learned row/column embeddings (feature maps up to 50x50)
        self.row_embed = nn.Parameter(torch.rand(50, hidden_dim // 2))
        self.col_embed = nn.Parameter(torch.rand(50, hidden_dim // 2))

    def forward(self, inputs):
        x = self.backbone(inputs)  # inputs=[1, 3, 800, 1200], x=[1, 2048, 25, 38]
        h = self.conv(x)           # h=[1, 256, 25, 38]
        H, W = h.shape[-2:]        # H=25, W=38
        pos = torch.cat([
            self.col_embed[:W].unsqueeze(0).repeat(H, 1, 1),
            self.row_embed[:H].unsqueeze(1).repeat(1, W, 1),
        ], dim=-1).flatten(0, 1).unsqueeze(1)  # pos=[950, 1, 256]
        # nn.Transformer is sequence-first by default: src=[950, 1, 256], tgt=[100, 1, 256]
        h = self.transformer(pos + h.flatten(2).permute(2, 0, 1), self.query_pos.unsqueeze(1))  # h=[100, 1, 256]
        return self.linear_class(h), self.linear_bbox(h).sigmoid()  # [100, 1, 92], [100, 1, 4]


detr = DETR(num_classes=91, hidden_dim=256, nheads=8, num_encoder_layers=6, num_decoder_layers=6)
detr.eval()
inputs = torch.randn(1, 3, 800, 1200)
with torch.no_grad():
    logits, bboxes = detr(inputs)
```


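Training: clone the detr repository, place the COCO dataset (annotations/, train2017/, val2017/) in one directory, and point `--coco_path` at it:

```shell
python main.py --coco_path /path/to/coco
```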


DETR model definition: detr.py

- Input: the original image

- build backbone:

```python
def build_backbone(args):
    position_embedding = build_position_encoding(args)  # PositionEmbeddingSine()
    train_backbone = args.lr_backbone > 0  # True
    return_interm_layers = args.masks  # False
    backbone = Backbone(args.backbone, train_backbone, return_interm_layers, args.dilation)
    model = Joiner(backbone, position_embedding)  # (0) backbone() (1) PositionEmbeddingSine()
    model.num_channels = backbone.num_channels  # 2048
    return model
```


- build transformer:

```python
def build_transformer(args):
    return Transformer(
        d_model=args.hidden_dim,
        dropout=args.dropout,
        nhead=args.nheads,
        dim_feedforward=args.dim_feedforward,
        num_encoder_layers=args.enc_layers,
        num_decoder_layers=args.dec_layers,
        normalize_before=args.pre_norm,
        return_intermediate_dec=True,
    )
```


```python
import torch
from torch import nn

# TransformerEncoderLayer, TransformerEncoder, TransformerDecoderLayer and TransformerDecoder
# are defined in the same file (models/transformer.py) of the DETR repository.


class Transformer(nn.Module):

    def __init__(self, d_model=512, nhead=8, num_encoder_layers=6,
                 num_decoder_layers=6, dim_feedforward=2048, dropout=0.1,
                 activation="relu", normalize_before=False,
                 return_intermediate_dec=False):
        super().__init__()

        encoder_layer = TransformerEncoderLayer(d_model, nhead, dim_feedforward,
                                                dropout, activation, normalize_before)
        encoder_norm = nn.LayerNorm(d_model) if normalize_before else None
        self.encoder = TransformerEncoder(encoder_layer, num_encoder_layers, encoder_norm)

        decoder_layer = TransformerDecoderLayer(d_model, nhead, dim_feedforward,
                                                dropout, activation, normalize_before)
        decoder_norm = nn.LayerNorm(d_model)
        self.decoder = TransformerDecoder(decoder_layer, num_decoder_layers, decoder_norm,
                                          return_intermediate=return_intermediate_dec)

        self._reset_parameters()

        self.d_model = d_model
        self.nhead = nhead

    def _reset_parameters(self):
        # Xavier init for every weight matrix; 1-D parameters (biases, norms) keep their defaults
        for p in self.parameters():
            if p.dim() > 1:
                nn.init.xavier_uniform_(p)

    def forward(self, src, mask, query_embed, pos_embed):
        # flatten NxCxHxW to HWxNxC
        bs, c, h, w = src.shape
        src = src.flatten(2).permute(2, 0, 1)                    # [HW, bs, c]
        pos_embed = pos_embed.flatten(2).permute(2, 0, 1)        # [HW, bs, c]
        query_embed = query_embed.unsqueeze(1).repeat(1, bs, 1)  # [100, bs, c]
        mask = mask.flatten(1)                                   # [bs, HW], True on padded pixels

        tgt = torch.zeros_like(query_embed)                      # decoder input starts at zero
        memory = self.encoder(src, src_key_padding_mask=mask, pos=pos_embed)
        hs = self.decoder(tgt, memory, memory_key_padding_mask=mask,
                          pos=pos_embed, query_pos=query_embed)  # [num_layers, 100, bs, c]
        return hs.transpose(1, 2), memory.permute(1, 2, 0).view(bs, c, h, w)
```


The printed submodules:

encoder_layer:

```text
TransformerEncoderLayer(
  (self_attn): MultiheadAttention(
    (out_proj): _LinearWithBias(in_features=256, out_features=256, bias=True)
  )
  (linear1): Linear(in_features=256, out_features=2048, bias=True)
  (dropout): Dropout(p=0.1, inplace=False)
  (linear2): Linear(in_features=2048, out_features=256, bias=True)
  (norm1): LayerNorm((256,), eps=1e-05, elementwise_affine=True)
  (norm2): LayerNorm((256,), eps=1e-05, elementwise_affine=True)
  (dropout1): Dropout(p=0.1, inplace=False)
  (dropout2): Dropout(p=0.1, inplace=False)
)
```


self.encoder

```text
TransformerEncoder(
  (layers): ModuleList(
    (0): TransformerEncoderLayer(
      (self_attn): MultiheadAttention(
        (out_proj): _LinearWithBias(in_features=256, out_features=256, bias=True)
      )
      (linear1): Linear(in_features=256, out_features=2048, bias=True)
      (dropout): Dropout(p=0.1, inplace=False)
      (linear2): Linear(in_features=2048, out_features=256, bias=True)
      (norm1): LayerNorm((256,), eps=1e-05, elementwise_affine=True)
      (norm2): LayerNorm((256,), eps=1e-05, elementwise_affine=True)
      (dropout1): Dropout(p=0.1, inplace=False)
      (dropout2): Dropout(p=0.1, inplace=False)
    )
    (1)-(5): five more TransformerEncoderLayer blocks, identical to (0)
  )
)
```


decoder layer:

```text
TransformerDecoderLayer(
  (self_attn): MultiheadAttention(
    (out_proj): _LinearWithBias(in_features=256, out_features=256, bias=True)
  )
  (multihead_attn): MultiheadAttention(
    (out_proj): _LinearWithBias(in_features=256, out_features=256, bias=True)
  )
  (linear1): Linear(in_features=256, out_features=2048, bias=True)
  (dropout): Dropout(p=0.1, inplace=False)
  (linear2): Linear(in_features=2048, out_features=256, bias=True)
  (norm1): LayerNorm((256,), eps=1e-05, elementwise_affine=True)
  (norm2): LayerNorm((256,), eps=1e-05, elementwise_affine=True)
  (norm3): LayerNorm((256,), eps=1e-05, elementwise_affine=True)
  (dropout1): Dropout(p=0.1, inplace=False)
  (dropout2): Dropout(p=0.1, inplace=False)
  (dropout3): Dropout(p=0.1, inplace=False)
)
```


self.decoder

```text
TransformerDecoder(
  (layers): ModuleList(
    (0): TransformerDecoderLayer(
      (self_attn): MultiheadAttention(
        (out_proj): _LinearWithBias(in_features=256, out_features=256, bias=True)
      )
      (multihead_attn): MultiheadAttention(
        (out_proj): _LinearWithBias(in_features=256, out_features=256, bias=True)
      )
      (linear1): Linear(in_features=256, out_features=2048, bias=True)
      (dropout): Dropout(p=0.1, inplace=False)
      (linear2): Linear(in_features=2048, out_features=256, bias=True)
      (norm1): LayerNorm((256,), eps=1e-05, elementwise_affine=True)
      (norm2): LayerNorm((256,), eps=1e-05, elementwise_affine=True)
      (norm3): LayerNorm((256,), eps=1e-05, elementwise_affine=True)
      (dropout1): Dropout(p=0.1, inplace=False)
      (dropout2): Dropout(p=0.1, inplace=False)
      (dropout3): Dropout(p=0.1, inplace=False)
    )
    (1)-(5): five more TransformerDecoderLayer blocks, identical to (0)
  )
  (norm): LayerNorm((256,), eps=1e-05, elementwise_affine=True)
)
```


Training: engine.py

```python
import math
import sys
from typing import Iterable

import torch

import util.misc as utils  # logging / distributed helpers from the DETR repository


def train_one_epoch(model: torch.nn.Module, criterion: torch.nn.Module,
                    data_loader: Iterable, optimizer: torch.optim.Optimizer,
                    device: torch.device, epoch: int, max_norm: float = 0):
    model.train()
    criterion.train()
    metric_logger = utils.MetricLogger(delimiter=" ")
    metric_logger.add_meter('lr', utils.SmoothedValue(window_size=1, fmt='{value:.6f}'))
    metric_logger.add_meter('class_error', utils.SmoothedValue(window_size=1, fmt='{value:.2f}'))
    header = 'Epoch: [{}]'.format(epoch)
    print_freq = 10

    for samples, targets in metric_logger.log_every(data_loader, print_freq, header):
        samples = samples.to(device)  # samples.tensors.shape=[2, 3, 736, 920]
        targets = [{k: v.to(device) for k, v in t.items()} for t in targets]

        outputs = model(samples)  # outputs.keys(): ['pred_logits', 'pred_boxes', 'aux_outputs']
        # outputs['pred_logits'].shape=[2, 100, 92], outputs['pred_boxes'].shape=[2, 100, 4]
        # outputs['aux_outputs'][0]['pred_logits'].shape = [2, 100, 92]
        # outputs['aux_outputs'][0]['pred_boxes'].shape = [2, 100, 4]
        loss_dict = criterion(outputs, targets)  # Hungarian matching + per-layer losses
        weight_dict = criterion.weight_dict
        losses = sum(loss_dict[k] * weight_dict[k] for k in loss_dict.keys() if k in weight_dict)

        # reduce losses over all GPUs for logging purposes
        loss_dict_reduced = utils.reduce_dict(loss_dict)
        loss_dict_reduced_unscaled = {f'{k}_unscaled': v
                                      for k, v in loss_dict_reduced.items()}
        loss_dict_reduced_scaled = {k: v * weight_dict[k]
                                    for k, v in loss_dict_reduced.items() if k in weight_dict}
        losses_reduced_scaled = sum(loss_dict_reduced_scaled.values())

        loss_value = losses_reduced_scaled.item()

        if not math.isfinite(loss_value):
            print("Loss is {}, stopping training".format(loss_value))
            print(loss_dict_reduced)
            sys.exit(1)

        optimizer.zero_grad()
        losses.backward()
        if max_norm > 0:
            torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm)  # gradient clipping
        optimizer.step()

        metric_logger.update(loss=loss_value, **loss_dict_reduced_scaled, **loss_dict_reduced_unscaled)
        metric_logger.update(class_error=loss_dict_reduced['class_error'])
        metric_logger.update(lr=optimizer.param_groups[0]["lr"])

    # gather the stats from all processes
    metric_logger.synchronize_between_processes()
    print("Averaged stats:", metric_logger)
    return {k: meter.global_avg for k, meter in metric_logger.meters.items()}
```


In the code above, `targets` looks like this (len(targets) = 2, one dict per image; the boxes are normalized (cx, cy, w, h)):

```text
({'boxes': tensor([[0.4567, 0.4356, 0.3446, 0.2930],
                   [0.8345, 0.4459, 0.3310, 0.3111],
                   [0.4484, 0.0582, 0.3947, 0.1164],
                   [0.8436, 0.0502, 0.3128, 0.1005],
                   [0.7735, 0.5084, 0.0982, 0.0816],
                   [0.1184, 0.4742, 0.2369, 0.2107],
                   [0.4505, 0.4412, 0.3054, 0.2844],
                   [0.8727, 0.4563, 0.1025, 0.0892],
                   [0.1160, 0.0405, 0.2319, 0.0810],
                   [0.8345, 0.4266, 0.3310, 0.2843]]),
  'labels': tensor([2, 2, 2, 2, 2, 2, 2, 2, 2, 2]),
  'image_id': tensor([382006]),
  'area': tensor([45937.5547, 46857.6406, 20902.2129, 14303.7432, 3646.2932, 22717.0332, 39523.7812, 4161.9199, 8552.1719, 42826.0391]),
  'iscrowd': tensor([0, 0, 0, 0, 0, 0, 0, 0, 0, 0]),
  'orig_size': tensor([429, 640]),
  'size': tensor([711, 640])},
 {'boxes': tensor([[0.7152, 0.3759, 0.0934, 0.0804],
                   [0.8129, 0.3777, 0.0576, 0.0562],
                   [0.7702, 0.3866, 0.0350, 0.0511],
                   [0.7828, 0.6463, 0.0743, 0.2580],
                   [0.8836, 0.5753, 0.1511, 0.2977],
                   [0.9162, 0.6202, 0.0880, 0.3273],
                   [0.8424, 0.3788, 0.0254, 0.0398],
                   [0.9716, 0.3712, 0.0569, 0.0707],
                   [0.0615, 0.4210, 0.0242, 0.0645],
                   [0.8655, 0.3775, 0.0398, 0.0368],
                   [0.8884, 0.3701, 0.0349, 0.0329],
                   [0.9365, 0.3673, 0.0144, 0.0221],
                   [0.5147, 0.1537, 0.0220, 0.0541],
                   [0.9175, 0.1185, 0.0294, 0.0438],
                   [0.0675, 0.0934, 0.0223, 0.0596],
                   [0.9125, 0.3683, 0.0185, 0.0347],
                   [0.9905, 0.3934, 0.0191, 0.0373],
                   [0.5370, 0.1577, 0.0211, 0.0553]]),
  'labels': tensor([2, 2, 2, 2, 1, 1, 2, 2, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2]),
  'image_id': tensor([565286]),
  'area': tensor([3843.2710, 1264.0637, 753.2280, 8374.9131, 11725.9863, 11407.3760, 373.8589, 1965.6881, 649.5052, 625.6856, 483.2297, 117.1264, 733.8372, 727.9525, 758.3719, 307.3998, 365.8919, 712.7703]),
  'iscrowd': tensor([0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]),
  'orig_size': tensor([512, 640]),
  'size': tensor([736, 920])})
```

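To turn these targets back into pixel boxes (for example to draw them), the normalized (cx, cy, w, h) values can be scaled by the size of the transformed image. This sketch assumes `size` is stored as [h, w], as in the repository's data transforms; `to_abs_xyxy` is a hypothetical helper, not part of the repository.

```python
import torch


def to_abs_xyxy(boxes, size):
    """boxes: [N, 4] normalized (cx, cy, w, h); size: tensor([h, w]) -> [N, 4] pixel (x1, y1, x2, y2)."""
    h, w = size.tolist()
    cx, cy, bw, bh = boxes.unbind(-1)
    xyxy = torch.stack([cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2], dim=-1)
    return xyxy * torch.tensor([w, h, w, h], dtype=boxes.dtype)


# First box of the first target above, with 'size': tensor([711, 640])
print(to_abs_xyxy(torch.tensor([[0.4567, 0.4356, 0.3446, 0.2930]]), torch.tensor([711, 640])))
```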