YOLOv7 Quick Start: Training on a Custom Dataset

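The YOLOv4 authors, together with several other well-known researchers, have released YOLOv7. This post records how to train a model on your own dataset. It is a quick start: the goal is to get you to a trained model and some results as fast as possible. For the finer details, and for tuning, you will need to dig in yourself.

Preparation

Downloading YOLOv7

First, get the code from the YOLOv7 GitHub repository (github-yolov7); download it and unzip it.

Image annotation software

There are many image annotation tools; labelme and labelImg both work. Annotate your images to produce label files, then assemble them into a dataset. I use the traditional YOLO format here, where each label file ends in .txt. Label files ending in .xml work too; in that case build a VOC dataset instead.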

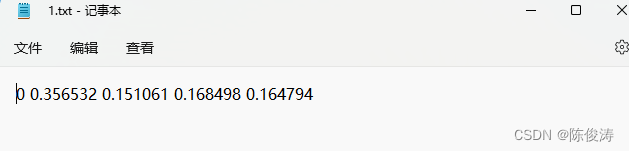

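This is an annotated .txt label file. The leading 0 on each line is the class index, which starts from 0. Each label file has the same name as its image.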
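To make the label format concrete, here is a minimal sketch that reads one label line and converts it to a pixel-space box. It assumes the usual YOLO convention of `class x_center y_center width height`, with the four box values normalized to the image size. The function name `parse_yolo_line` and the sample line are made up for illustration.

```python
def parse_yolo_line(line, img_w, img_h):
    """Convert one YOLO label line to (class_id, x_min, y_min, x_max, y_max) in pixels."""
    parts = line.split()
    class_id = int(parts[0])                         # first field: class index, starting at 0
    xc, yc, w, h = (float(v) for v in parts[1:5])    # normalized center and size
    x_min = (xc - w / 2) * img_w
    y_min = (yc - h / 2) * img_h
    x_max = (xc + w / 2) * img_w
    y_max = (yc + h / 2) * img_h
    return class_id, x_min, y_min, x_max, y_max


# Hypothetical label line for a 640x480 image
print(parse_yolo_line("0 0.5 0.5 0.25 0.5", 640, 480))
# (0, 240.0, 120.0, 400.0, 360.0)
```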

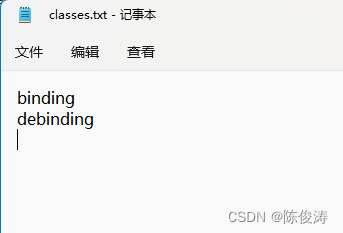

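The annotation tool usually generates a classes.txt file as well; if it does not, create one yourself and put it in the dataset. Its entries correspond to the first number on each label line: in my case 0 is binding and 1 is debinding.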
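A small sketch of how classes.txt lines up with the label files, assuming one class name per line in index order. The helper names `load_class_names` and `label_to_name` are my own, not part of YOLOv7.

```python
from pathlib import Path


def load_class_names(classes_file):
    """Return {class_index: class_name} from a classes.txt with one name per line."""
    lines = Path(classes_file).read_text(encoding="utf-8").splitlines()
    names = [line.strip() for line in lines if line.strip()]  # skip blank lines
    return dict(enumerate(names))


def label_to_name(label_line, class_names):
    """Look up the class name for the first number on a YOLO label line."""
    return class_names[int(label_line.split()[0])]
```

With my two classes, `load_class_names` on a file containing `binding` and `debinding` gives `{0: 'binding', 1: 'debinding'}`.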

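Preparing the dataset

The traditional dataset layout is shown below. Once it is ready, put the dataset in the YOLOv7 folder.

dataset

-images

--train (training images)

--val (validation images)

-labels

--train (training label files ending in .txt, plus a classes file that maps class names to label indices)

--val (validation label files ending in .txt, plus a classes file that maps class names to label indices)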
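If your images and labels currently sit in two flat folders, a script along these lines can build the layout above. This is only a sketch: the 10% validation ratio, the fixed seed, the image extensions, and the function name `split_dataset` are all assumptions of mine, so adjust them to your data.

```python
import random
import shutil
from pathlib import Path


def split_dataset(src_images, src_labels, dst_root, val_ratio=0.1, seed=0):
    """Copy images and matching .txt labels into images/{train,val} and labels/{train,val}."""
    src_images, src_labels, dst_root = Path(src_images), Path(src_labels), Path(dst_root)
    images = sorted(p for p in src_images.iterdir()
                    if p.suffix.lower() in {".jpg", ".jpeg", ".png"})
    random.Random(seed).shuffle(images)          # reproducible shuffle
    n_val = int(len(images) * val_ratio)
    splits = {"val": images[:n_val], "train": images[n_val:]}
    for split, files in splits.items():
        img_dir = dst_root / "images" / split
        lbl_dir = dst_root / "labels" / split
        img_dir.mkdir(parents=True, exist_ok=True)
        lbl_dir.mkdir(parents=True, exist_ok=True)
        for img in files:
            shutil.copy2(img, img_dir / img.name)
            label = src_labels / (img.stem + ".txt")   # label shares the image's file name
            if label.exists():                          # skip images that have no label file
                shutil.copy2(label, lbl_dir / label.name)
    return {split: len(files) for split, files in splits.items()}
```

For example, `split_dataset("raw/images", "raw/labels", "custom_dataset")` (hypothetical paths) returns the number of images copied into each split.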

The VOC format is as follows

Annotations (annotation files with the .xml extension)

ImageSets

--Main

test.txt (paths or names of the test-set images)

train.txt

trainval.txt

val.txt

JPEGImages (all images)
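If you annotated in VOC format but want YOLO-style .txt labels, the conversion is mostly arithmetic on the box corners. The sketch below assumes the standard VOC fields (`size/width`, `size/height`, and `object/bndbox` with `xmin`, `ymin`, `xmax`, `ymax`); the function name `voc_to_yolo_lines` is my own.

```python
import xml.etree.ElementTree as ET


def voc_to_yolo_lines(xml_text, class_names):
    """Convert one VOC annotation (as an XML string) to a list of YOLO label lines."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = float(size.find("width").text)
    img_h = float(size.find("height").text)
    lines = []
    for obj in root.iter("object"):
        name = obj.find("name").text
        if name not in class_names:       # ignore classes we are not training on
            continue
        box = obj.find("bndbox")
        x_min, y_min, x_max, y_max = (
            float(box.find(tag).text) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        # YOLO stores the normalized box center and size
        xc = (x_min + x_max) / 2 / img_w
        yc = (y_min + y_max) / 2 / img_h
        w = (x_max - x_min) / img_w
        h = (y_max - y_min) / img_h
        lines.append(f"{class_names.index(name)} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}")
    return lines
```

Call it with the contents of one file from Annotations and the class list in index order, e.g. `['binding', 'debinding']`, then write the returned lines to a .txt file named after the image.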

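Installing the required packages

Switch the terminal to the YOLOv7 directory and run pip install -r requirements.txt to install the required packages automatically. Note that for GPU training you may need to install the matching PyTorch build yourself from the PyTorch website; otherwise training may not run, and a mismatched version also causes problems. I recommend creating a fresh virtual environment for this to reduce issues.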
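Before starting a long run, it can help to confirm that the environment actually sees the GPU. The check below is a sketch of my own (the function name `describe_torch_env` is made up); it only uses `torch.__version__`, `torch.version.cuda`, and `torch.cuda.is_available()`, and it does not fail if PyTorch is missing.

```python
import importlib.util


def describe_torch_env():
    """Return a one-line summary of the PyTorch install in the current environment."""
    if importlib.util.find_spec("torch") is None:
        return "torch is not installed in this environment"
    import torch  # imported lazily so the check also works without PyTorch
    return (f"torch {torch.__version__}, built for CUDA {torch.version.cuda}, "
            f"GPU available: {torch.cuda.is_available()}")


print(describe_torch_env())
```

If it reports that no GPU is available on a machine that has one, the installed PyTorch build is probably CPU-only or does not match your CUDA setup.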

Quick setup

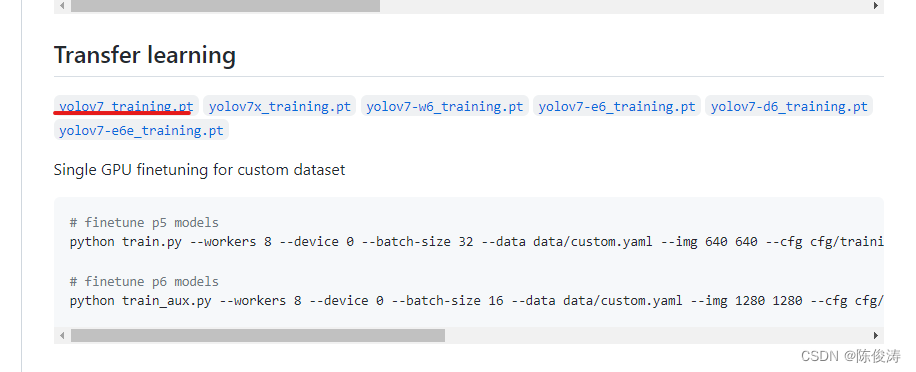

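Download the pretrained weights for transfer learning from the GitHub page and put them in the project folder. You can create a dedicated folder for them, for example weights.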

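Editing the yaml config file

In the YOLOv7 folder, find the data folder and create a new .yaml config file in it. The name is up to you; I called mine mytrain.yaml.

Write the following configuration into the file:

# my dataset is named custom_dataset

train: ./custom_dataset/images/train

val: ./custom_dataset/images/val

test: # test images (optional)

nc: 2 # number of classes

names: ['binding','debinding'] # class names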
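The one thing that is easy to get wrong here is letting nc drift out of sync with names. As a sketch, the file can be generated from the class list so the two always agree. The function name `build_data_yaml` is hypothetical, and it only writes the four keys used in this post.

```python
def build_data_yaml(dataset_root, names):
    """Build the text of a data yaml; nc is derived from names so they cannot disagree."""
    names_repr = "[" + ", ".join(f"'{n}'" for n in names) + "]"
    return (
        f"train: {dataset_root}/images/train\n"
        f"val: {dataset_root}/images/val\n"
        f"nc: {len(names)}\n"
        f"names: {names_repr}\n"
    )


text = build_data_yaml("./custom_dataset", ["binding", "debinding"])
print(text)
# To save it: open("data/mytrain.yaml", "w", encoding="utf-8").write(text)
```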

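Editing the cfg file

Under cfg, find yolov7.yaml (the file passed as --cfg when training is cfg/training/yolov7.yaml), open it, and change the number of classes (nc) to 2.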
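If you would rather script this edit than do it by hand, a regex replacement on the `nc:` line is enough. This sketch assumes the cfg file has a single top-level line of the form `nc: 80`; the function name `set_nc` and the sample text are made up for illustration.

```python
import re


def set_nc(cfg_text, nc):
    """Replace the value on the first top-level 'nc:' line, keeping the rest untouched."""
    new_text, count = re.subn(r"^nc:\s*\d+", f"nc: {nc}", cfg_text,
                              count=1, flags=re.MULTILINE)
    if count == 0:
        raise ValueError("no top-level 'nc:' line found")
    return new_text


# Hypothetical excerpt of a model cfg file
sample = "# parameters\nnc: 80  # number of classes\ndepth_multiple: 1.0\n"
print(set_nc(sample, 2))
```

Read the cfg file into a string, pass it through `set_nc`, and write the result back (ideally to a copy, so the original stays available).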

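Editing the training script

In the training script (train.py), the argument definitions start at around line 522. You can edit the default values directly and run the script, or pass the arguments on the command line.

Changing the first few parameters shown below is enough; adjust the rest as needed.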

parser = argparse.ArgumentParser()

parser.add_argument('--weights', type=str, default='weights/yolov7_training.pt', help='initial weights path')

parser.add_argument('--cfg', type=str, default='cfg/training/yolov7.yaml', help='model.yaml path')

parser.add_argument('--data', type=str, default='data/mytrain.yaml', help='data.yaml path')

parser.add_argument('--hyp', type=str, default='data/hyp.scratch.custom.yaml', help='hyperparameters path')

parser.add_argument('--epochs', type=int, default=300)

parser.add_argument('--batch-size', type=int, default=8, help='total batch size for all GPUs')

parser.add_argument('--img-size', nargs='+', type=int, default=[640, 640], help='[train, test] image sizes')
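To see how the two approaches relate, here is a cut-down parser that mirrors a few of the arguments above (not the full train.py parser). With no arguments, argparse returns the defaults; anything passed on the command line overrides them. Note that `--batch-size` is read back as `batch_size`.

```python
import argparse


def build_parser():
    """A reduced copy of the parser above, kept to four arguments for illustration."""
    parser = argparse.ArgumentParser()
    parser.add_argument('--data', type=str, default='data/mytrain.yaml', help='data.yaml path')
    parser.add_argument('--epochs', type=int, default=300)
    parser.add_argument('--batch-size', type=int, default=8, help='total batch size for all GPUs')
    parser.add_argument('--img-size', nargs='+', type=int, default=[640, 640], help='[train, test] image sizes')
    return parser


# No command-line arguments: the edited defaults are used
defaults = build_parser().parse_args([])
# Same as running: python train.py --batch-size 16 --img-size 512 512
override = build_parser().parse_args(['--batch-size', '16', '--img-size', '512', '512'])

print(defaults.batch_size, override.batch_size, override.img_size)
# 8 16 [512, 512]
```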

Running from the terminal

python train.py --workers 8 --device 0 --batch-size 8 --data data/mytrain.yaml --cfg cfg/training/yolov7.yaml --weights weights/yolov7_training.pt --hyp data/hyp.scratch.custom.yaml
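If you want to launch the same run from a Python script (for example to queue several experiments), the command can be assembled as a list and handed to `subprocess.run`. This is a sketch: `build_train_command` and `run_training` are my own helper names, and only flags that appear in the command above are used.

```python
import subprocess
import sys


def build_train_command(data, cfg, weights, hyp, batch_size=8, workers=8, device="0"):
    """Assemble the train.py command line as an argument list."""
    return [
        sys.executable, "train.py",      # use the interpreter of the current environment
        "--workers", str(workers),
        "--device", str(device),
        "--batch-size", str(batch_size),
        "--data", data,
        "--cfg", cfg,
        "--weights", weights,
        "--hyp", hyp,
    ]


def run_training(cmd, yolov7_dir):
    """Run the command from the YOLOv7 folder; raises CalledProcessError on failure."""
    return subprocess.run(cmd, cwd=yolov7_dir, check=True)


cmd = build_train_command(
    data="data/mytrain.yaml",
    cfg="cfg/training/yolov7.yaml",
    weights="weights/yolov7_training.pt",
    hyp="data/hyp.scratch.custom.yaml",
)
print(" ".join(cmd[1:]))
```

Passing the arguments as a list avoids shell quoting problems, such as curly quotes pasted around the weights path.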

Training then starts:

tensorboard: Start with 'tensorboard --logdir runs/train', view at http://localhost:6006/
hyperparameters: lr0=0.01, lrf=0.1, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=0.05, cls=0.3, cls_pw=1.0, obj=0.7, obj_pw=1.0, iou_t=0.2, anchor_t=4.0, fl_gamma=0.0, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.2, scale=0.9, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, mosaic=1.0, mixup=0.15, copy_paste=0.0, paste_in=0.15
wandb: Install Weights & Biases for YOLOR logging with 'pip install wandb' (recommended)

from n params module arguments
0 -1 1 928 models.common.Conv [3, 32, 3, 1]
1 -1 1 18560 models.common.Conv [32, 64, 3, 2]
2 -1 1 36992 models.common.Conv [64, 64, 3, 1]
3 -1 1 73984 models.common.Conv [64, 128, 3, 2]
4 -1 1 8320 models.common.Conv [128, 64, 1, 1]
5 -2 1 8320 models.common.Conv [128, 64, 1, 1]
6 -1 1 36992 models.common.Conv [64, 64, 3, 1]
7 -1 1 36992 models.common.Conv [64, 64, 3, 1]
8 -1 1 36992 models.common.Conv [64, 64, 3, 1]
9 -1 1 36992 models.common.Conv [64, 64, 3, 1]
10 [-1, -3, -5, -6] 1 0 models.common.Concat [1]
11 -1 1 66048 models.common.Conv [256, 256, 1, 1]
12 -1 1 0 models.common.MP []
13 -1 1 33024 models.common.Conv [256, 128, 1, 1]
14 -3 1 33024 models.common.Conv [256, 128, 1, 1]
15 -1 1 147712 models.common.Conv [128, 128, 3, 2]
16 [-1, -3] 1 0 models.common.Concat [1]
17 -1 1 33024 models.common.Conv [256, 128, 1, 1]
18 -2 1 33024 models.common.Conv [256, 128, 1, 1]
19 -1 1 147712 models.common.Conv [128, 128, 3, 1]
20 -1 1 147712 models.common.Conv [128, 128, 3, 1]
21 -1 1 147712 models.common.Conv [128, 128, 3, 1]
22 -1 1 147712 models.common.Conv [128, 128, 3, 1]
23 [-1, -3, -5, -6] 1 0 models.common.Concat [1]
24 -1 1 263168 models.common.Conv [512, 512, 1, 1]
25 -1 1 0 models.common.MP []
26 -1 1 131584 models.common.Conv [512, 256, 1, 1]
27 -3 1 131584 models.common.Conv [512, 256, 1, 1]
28 -1 1 590336 models.common.Conv [256, 256, 3, 2]
29 [-1, -3] 1 0 models.common.Concat [1]
30 -1 1 131584 models.common.Conv [512, 256, 1, 1]
31 -2 1 131584 models.common.Conv [512, 256, 1, 1]
32 -1 1 590336 models.common.Conv [256, 256, 3, 1]
33 -1 1 590336 models.common.Conv [256, 256, 3, 1]
34 -1 1 590336 models.common.Conv [256, 256, 3, 1]
35 -1 1 590336 models.common.Conv [256, 256, 3, 1]
36 [-1, -3, -5, -6] 1 0 models.common.Concat [1]
37 -1 1 1050624 models.common.Conv [1024, 1024, 1, 1]
38 -1 1 0 models.common.MP []
39 -1 1 525312 models.common.Conv [1024, 512, 1, 1]
40 -3 1 525312 models.common.Conv [1024, 512, 1, 1]
41 -1 1 2360320 models.common.Conv [512, 512, 3, 2]
42 [-1, -3] 1 0 models.common.Concat [1]
43 -1 1 262656 models.common.Conv [1024, 256, 1, 1]
44 -2 1 262656 models.common.Conv [1024, 256, 1, 1]
45 -1 1 590336 models.common.Conv [256, 256, 3, 1]
46 -1 1 590336 models.common.Conv [256, 256, 3, 1]
47 -1 1 590336 models.common.Conv [256, 256, 3, 1]
48 -1 1 590336 models.common.Conv [256, 256, 3, 1]
49 [-1, -3, -5, -6] 1 0 models.common.Concat [1]
50 -1 1 1050624 models.common.Conv [1024, 1024, 1, 1]
51 -1 1 7609344 models.common.SPPCSPC [1024, 512, 1]
52 -1 1 131584 models.common.Conv [512, 256, 1, 1]
53 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']
54 37 1 262656 models.common.Conv [1024, 256, 1, 1]
55 [-1, -2] 1 0 models.common.Concat [1]
56 -1 1 131584 models.common.Conv [512, 256, 1, 1]
57 -2 1 131584 models.common.Conv [512, 256, 1, 1]
58 -1 1 295168 models.common.Conv [256, 128, 3, 1]
59 -1 1 147712 models.common.Conv [128, 128, 3, 1]
60 -1 1 147712 models.common.Conv [128, 128, 3, 1]
61 -1 1 147712 models.common.Conv [128, 128, 3, 1]
62 [-1, -2, -3, -4, -5, -6] 1 0 models.common.Concat [1]
63 -1 1 262656 models.common.Conv [1024, 256, 1, 1]
64 -1 1 33024 models.common.Conv [256, 128, 1, 1]
65 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']
66 24 1 65792 models.common.Conv [512, 128, 1, 1]
67 [-1, -2] 1 0 models.common.Concat [1]
68 -1 1 33024 models.common.Conv [256, 128, 1, 1]
69 -2 1 33024 models.common.Conv [256, 128, 1, 1]
70 -1 1 73856 models.common.Conv [128, 64, 3, 1]
71 -1 1 36992 models.common.Conv [64, 64, 3, 1]
72 -1 1 36992 models.common.Conv [64, 64, 3, 1]
73 -1 1 36992 models.common.Conv [64, 64, 3, 1]
74 [-1, -2, -3, -4, -5, -6] 1 0 models.common.Concat [1]
75 -1 1 65792 models.common.Conv [512, 128, 1, 1]
76 -1 1 0 models.common.MP []
77 -1 1 16640 models.common.Conv [128, 128, 1, 1]
78 -3 1 16640 models.common.Conv [128, 128, 1, 1]
79 -1 1 147712 models.common.Conv [128, 128, 3, 2]
80 [-1, -3, 63] 1 0 models.common.Concat [1]
81 -1 1 131584 models.common.Conv [512, 256, 1, 1]
82 -2 1 131584 models.common.Conv [512, 256, 1, 1]
83 -1 1 295168 models.common.Conv [256, 128, 3, 1]
84 -1 1 147712 models.common.Conv [128, 128, 3, 1]
85 -1 1 147712 models.common.Conv [128, 128, 3, 1]
86 -1 1 147712 models.common.Conv [128, 128, 3, 1]
87 [-1, -2, -3, -4, -5, -6] 1 0 models.common.Concat [1]
88 -1 1 262656 models.common.Conv [1024, 256, 1, 1]
89 -1 1 0 models.common.MP []
90 -1 1 66048 models.common.Conv [256, 256, 1, 1]
91 -3 1 66048 models.common.Conv [256, 256, 1, 1]
92 -1 1 590336 models.common.Conv [256, 256, 3, 2]
93 [-1, -3, 51] 1 0 models.common.Concat [1]
94 -1 1 525312 models.common.Conv [1024, 512, 1, 1]
95 -2 1 525312 models.common.Conv [1024, 512, 1, 1]
96 -1 1 1180160 models.common.Conv [512, 256, 3, 1]
97 -1 1 590336 models.common.Conv [256, 256, 3, 1]
98 -1 1 590336 models.common.Conv [256, 256, 3, 1]
99 -1 1 590336 models.common.Conv [256, 256, 3, 1]
100 [-1, -2, -3, -4, -5, -6] 1 0 models.common.Concat [1]
101 -1 1 1049600 models.common.Conv [2048, 512, 1, 1]
102 75 1 328704 models.common.RepConv [128, 256, 3, 1]
103 88 1 1312768 models.common.RepConv [256, 512, 3, 1]
104 101 1 5246976 models.common.RepConv [512, 1024, 3, 1]
105 [102, 103, 104] 1 39550 models.yolo.IDetect [2, [[12, 16, 19, 36, 40, 28], [36, 75, 76, 55, 72, 146], [142, 110, 192, 243, 459, 401]], [256, 512, 1024]]

E:\Anaconda\envs\yolov7\lib\site-packages\torch\functional.py:568: UserWarning: torch.meshgrid: in an upcoming release, it will be required to pass the indexing argument. (Triggered internally at C:\actions-runner\_work\pytorch\pytorch\builder\windows\pytorch\aten\src\ATen\native\TensorShape.cpp:2228.)
return _VF.meshgrid(tensors, **kwargs) # type: ignore[attr-defined]
Model Summary: 415 layers, 37201950 parameters, 37201950 gradients, 105.1 GFLOPS

Transferred 555/566 items from weights/yolov7_training.pt
Scaled weight_decay = 0.0005
Optimizer groups: 95 .bias, 95 conv.weight, 98 other
train: Scanning 'custom_dataset\labels\train.cache' images and labels... 4000 found, 0 missing, 188 empty, 0 corrupted: 100%|██████████| 4000/4000 [00:00<?, ?it/s]
val: Scanning 'custom_dataset\labels\val.cache' images and labels... 500 found, 0 missing, 20 empty, 0 corrupted: 100%|██████████| 500/500 [00:00<?, ?it/s]

autoanchor: Analyzing anchors... anchors/target = 6.46, Best Possible Recall (BPR) = 1.0000
Image sizes 640 train, 640 test
Using 8 dataloader workers
Logging results to runs\train\exp3
Starting training for 300 epochs...
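A quick way to check that the class count took effect is to reproduce a few numbers from the params column. The sketch below rests on my own reading of the layers, not on anything stated in the log: I assume models.common.Conv is a bias-free convolution followed by batch norm (one weight and one bias per output channel), and that IDetect has, per scale, a 1x1 convolution with bias plus two small implicit vectors. Under those assumptions the counts match the log, including 39550 for the detection head with 2 classes.

```python
def conv_params(c_in, c_out, k):
    """Assumed: bias-free k x k convolution plus batch-norm weight and bias."""
    return c_in * c_out * k * k + 2 * c_out


def idetect_params(nc, channels, n_anchors=3):
    """Assumed: per scale, a 1x1 conv with bias plus implicit add and multiply vectors."""
    out = n_anchors * (nc + 5)      # per anchor: 4 box values + 1 objectness + nc class scores
    total = 0
    for c in channels:
        total += c * out + out      # 1x1 convolution weights and bias
        total += c                  # implicit vector added to the input channels
        total += out                # implicit vector multiplied into the outputs
    return total


print(conv_params(3, 32, 3))                  # layer 0 in the log: 928
print(conv_params(32, 64, 3))                 # layer 1 in the log: 18560
print(idetect_params(2, [256, 512, 1024]))    # layer 105 in the log: 39550
```

If the last row of your own log shows a different class count in the IDetect arguments, the cfg edit did not take effect.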
