
Deploying Large Models with Xinference: A Detailed Tutorial Using gemma-it as an Example

Author: Gausst松鼠会 | 2024-04-04 01:38:00


Speed up downloads (optional)

```shell
source /etc/network_turbo  # AutoDL platform only
pip config set global.index-url https://mirrors.pku.edu.cn/pypi/web/simple
```

Step 1

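Install xinference and vLLM:

- vLLM is a high-performance LLM inference engine built for high concurrency. Xinference automatically selects vLLM as the engine, for higher throughput, when all of the following conditions are met:
  - The model format is PyTorch or GPTQ
  - The quantization is GPTQ 4-bit or none
  - The operating system is Linux, with at least one CUDA-capable GPU
  - The model is in vLLM's list of supported models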

```shell
pip install "xinference[vllm]"
```
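The four conditions above combine into a single yes/no decision. The sketch below is only an illustration of that rule as this article states it, and it is not Xinference's actual selection code. `ModelSpec`, `can_use_vllm`, the `"4-bit"` label for GPTQ models, and the hand-supplied set of supported names are all hypothetical. The real supported-model list comes from Xinference and vLLM.

```python
from dataclasses import dataclass


@dataclass
class ModelSpec:
    """Hypothetical description of a model to launch (illustration only)."""
    name: str
    model_format: str   # e.g. "pytorch", "gptq", "ggmlv3"
    quantization: str   # e.g. "none", "4-bit", "8-bit"


def can_use_vllm(spec: ModelSpec, os_name: str, cuda_gpu_count: int,
                 vllm_supported: set[str]) -> bool:
    """Return True only if all four conditions listed in the article hold."""
    # 1. Format must be PyTorch or GPTQ
    format_ok = spec.model_format in {"pytorch", "gptq"}
    # 2. Quantization must be none, or 4-bit for a GPTQ model
    quant_ok = spec.quantization == "none" or (
        spec.model_format == "gptq" and spec.quantization == "4-bit")
    # 3. Linux with at least one CUDA-capable GPU
    platform_ok = os_name == "Linux" and cuda_gpu_count >= 1
    # 4. Model must be on vLLM's supported list (supplied by the caller here)
    listed = spec.name in vllm_supported
    return format_ok and quant_ok and platform_ok and listed


supported = {"gemma-it"}  # hypothetical list, for illustration only
print(can_use_vllm(ModelSpec("gemma-it", "pytorch", "none"), "Linux", 1, supported))   # True
print(can_use_vllm(ModelSpec("gemma-it", "pytorch", "8-bit"), "Linux", 1, supported))  # False
```

Under these rules, the 8-bit PyTorch launch used later in this article would not qualify for vLLM. It would presumably run on the default PyTorch (transformers) engine.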

The PyTorch (transformers) engine supports almost all recent models and is the default engine for PyTorch models:

```shell
pip install "xinference[transformers]"
```

When using the GGML engine, install the dependencies manually for your hardware to get the best acceleration.

```shell
pip install xinference ctransformers
```

Nvidia

```shell
CMAKE_ARGS="-DLLAMA_CUBLAS=on" pip install llama-cpp-python
```

AMD

```shell
CMAKE_ARGS="-DLLAMA_HIPBLAS=on" pip install llama-cpp-python
```

Mac

```shell
CMAKE_ARGS="-DLLAMA_METAL=on" pip install llama-cpp-python
```
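The three commands above differ only in the `CMAKE_ARGS` value passed to the llama-cpp-python build. If you automate setup, you can keep that mapping in one place. This is a minimal sketch, and `llama_cpp_install` is a hypothetical helper. The flag names are copied from this article and may differ in other llama-cpp-python versions. The helper only builds the environment and command, and it does not run pip.

```python
import os
import shlex

# CMake backend flags from the commands above, keyed by hardware
# (names as given in this article; check llama-cpp-python's README for your version)
CMAKE_FLAGS = {
    "nvidia": "-DLLAMA_CUBLAS=on",
    "amd": "-DLLAMA_HIPBLAS=on",
    "mac": "-DLLAMA_METAL=on",
}


def llama_cpp_install(hardware: str) -> tuple[dict[str, str], list[str]]:
    """Build (env, argv) for installing llama-cpp-python with a GPU backend."""
    key = hardware.lower()
    if key not in CMAKE_FLAGS:
        raise ValueError(f"unknown hardware {hardware!r}; expected one of {sorted(CMAKE_FLAGS)}")
    # Copy the current environment and add CMAKE_ARGS for the build
    env = dict(os.environ, CMAKE_ARGS=CMAKE_FLAGS[key])
    argv = ["pip", "install", "llama-cpp-python"]
    return env, argv


env, argv = llama_cpp_install("Nvidia")
print(f"CMAKE_ARGS={shlex.quote(env['CMAKE_ARGS'])} {shlex.join(argv)}")
# To actually install: subprocess.run(argv, env=env, check=True)
```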

If you use quantization:

```shell
pip install accelerate
pip install bitsandbytes
```
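Quantization mainly reduces the memory that the model weights take up. A rough estimate is parameter count × bytes per parameter. The sketch below makes these assumptions:

- 2 bytes per parameter for unquantized (fp16/bf16) weights, 1 for 8-bit, and 0.5 for 4-bit.
- Activations, KV cache and framework overhead are ignored.
- The nominal size of 2 billion matches `--size-in-billions 2` used later in this article. A model's real parameter count may differ.

```python
# Approximate bytes per parameter for each quantization option
# ("none" assumes fp16/bf16 weights; this is an assumption, not a measured value)
BYTES_PER_PARAM = {"none": 2.0, "8-bit": 1.0, "4-bit": 0.5}


def weight_memory_gib(params_billions: float, quantization: str) -> float:
    """Approximate weight memory in GiB (weights only, no KV cache or overhead)."""
    total_bytes = params_billions * 1e9 * BYTES_PER_PARAM[quantization]
    return total_bytes / 2**30


for q in ("none", "8-bit", "4-bit"):
    print(f"{q:>6}: {weight_memory_gib(2, q):.2f} GiB")
```

For a nominal 2B model this prints about 3.73 GiB, 1.86 GiB and 0.93 GiB. Each halving of bits per weight roughly halves the weight memory.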

Step 2

Install Node.js

```shell
conda install nodejs
```

- If this fails, try running:

```shell
conda clean -i
# or delete the conda config file ($HOME is usually /root, i.e. /root/.condarc)
rm $HOME/.condarc
```

Download the source code

```shell
git clone https://github.com/xorbitsai/inference.git
cd inference
```

```shell
pip install -e .
xinference-local
```

Build the web UI (not needed if you only use the API):

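```shell
# run the npm commands in the front-end directory of the repository
cd xinference/web/ui
npm cache clean --force
npm install
npm run build
# return to the directory containing setup.cfg and setup.py (the repository root)
cd ../../..
pip install -e .
```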

Getting started

First, start the local service:

```shell
# XINFERENCE_HOME sets where Xinference stores downloaded models and other data
XINFERENCE_HOME=./models/ xinference-local --host 0.0.0.0 --port 9997
```
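The service binds to `0.0.0.0`, so clients on the same machine can reach it at `127.0.0.1:9997`. Before launching a model from a script, you may want to wait until the service answers. This is a minimal sketch with some assumptions:

- The service exposes the OpenAI-compatible `GET /v1/models` route and returns HTTP 200 once it is ready.
- `wait_for_xinference` is a hypothetical helper.
- The retry count and delay are arbitrary.

```python
import time
import urllib.request


def wait_for_xinference(base_url: str = "http://127.0.0.1:9997",
                        retries: int = 30, delay: float = 1.0) -> bool:
    """Poll GET {base_url}/v1/models until it returns HTTP 200; False if it never does."""
    url = base_url.rstrip("/") + "/v1/models"
    for attempt in range(retries):
        try:
            with urllib.request.urlopen(url, timeout=2) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # URLError, HTTPError, connection refused and timeouts are all OSError subclasses
            pass
        if attempt < retries - 1:
            time.sleep(delay)
    return False
```

In a setup script you could call `if not wait_for_xinference(): raise SystemExit("xinference-local is not reachable")` before the launch step.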

Then launch the model:

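- quantization (`--quantization`): none, 4-bit, or 8-bit

- Faster downloads from mainland China: prefix the command with HF_ENDPOINT=https://hf-mirror.com (Hugging Face mirror) and XINFERENCE_MODEL_SRC=modelscope (download from ModelScope)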

```shell
HF_ENDPOINT=https://hf-mirror.com XINFERENCE_MODEL_SRC=modelscope \
  xinference launch --model-name gemma-it --size-in-billions 2 \
  --model-format pytorch --quantization 8-bit
```
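If you script the launch step, it helps to build the command and its environment in one place. This is a minimal sketch with some assumptions:

- `build_launch` is a hypothetical helper.
- The allowed quantization values are just the three listed above.
- The command is only printed. Running it with `subprocess.run` assumes `xinference-local` is already up.

```python
import os
import shlex

QUANTIZATIONS = {"none", "4-bit", "8-bit"}  # options listed in this article


def build_launch(model_name: str, size_in_billions: int, model_format: str,
                 quantization: str, use_mirror: bool = True) -> tuple[dict[str, str], list[str]]:
    """Build (env, argv) for `xinference launch` using only the flags shown above."""
    if quantization not in QUANTIZATIONS:
        raise ValueError(f"quantization must be one of {sorted(QUANTIZATIONS)}, got {quantization!r}")
    argv = [
        "xinference", "launch",
        "--model-name", model_name,
        "--size-in-billions", str(size_in_billions),
        "--model-format", model_format,
        "--quantization", quantization,
    ]
    env = dict(os.environ)
    if use_mirror:
        # Download via the Hugging Face mirror / ModelScope, as in the command above
        env.update(HF_ENDPOINT="https://hf-mirror.com", XINFERENCE_MODEL_SRC="modelscope")
    return env, argv


env, argv = build_launch("gemma-it", 2, "pytorch", "8-bit")
print(shlex.join(argv))
# subprocess.run(argv, env=env, check=True)  # requires xinference-local to be running
```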

Test

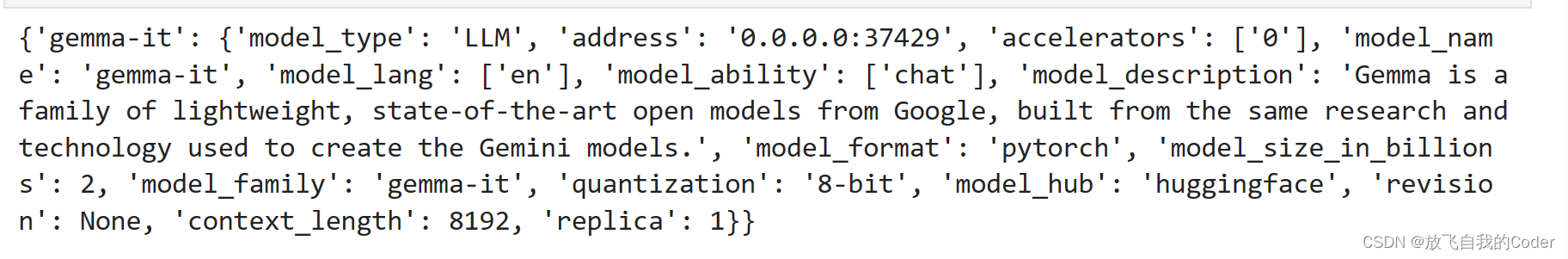

List the models

```python
from xinference.client import Client

# The server listens on 0.0.0.0; connect to it through a local address
client = Client("http://127.0.0.1:9997")
print(client.list_models())
```

Calling the model through the OpenAI-compatible API

```python
import openai

# Assume that the model is already launched.
# The api_key can't be empty; any string is OK.
model_uid = "gemma-it"  # UID of the launched model; check client.list_models() if unsure
client = openai.Client(api_key="not empty", base_url="http://localhost:9997/v1")

response = client.chat.completions.create(
    model=model_uid,
    messages=[
        {
            "content": "What is the largest animal?",
            "role": "user",
        }
    ],
    max_tokens=1024,
)
print(response.choices[0].message.content)
```
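The `openai` client above wraps an HTTP POST to `/v1/chat/completions` with an OpenAI-style JSON body. The stdlib-only sketch below builds the same request so that the protocol is visible. It has some assumptions:

- The model UID is `gemma-it`.
- The server accepts any non-empty API key, as the comment in the example above says.
- `build_chat_request` and `send_chat` are hypothetical helpers.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model_uid: str, prompt: str,
                       max_tokens: int = 1024) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model_uid,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": "Bearer not empty"},  # any non-empty key
        method="POST",
    )


def send_chat(req: urllib.request.Request) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(req, timeout=120) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]


req = build_chat_request("http://127.0.0.1:9997/v1", "gemma-it", "What is the largest animal?")
# print(send_chat(req))  # needs the server and the launched model from the steps above
```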
