
PaddleOCRv3, Part 2: Building a Training Set with TextRecognitionDataGenerator

Author: Gausst松鼠会 | 2024-04-04 18:56:20


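For the recognition part of OCR, fonts and backgrounds are among the most important properties of the dataset. In real-world projects you usually cannot collect enough real samples, so it is well worth constructing a batch of synthetic training data yourself before training starts. You can pre-train on this synthetic batch and then fine-tune on real data, or simply mix the synthetic and real data together for training.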

For building such a dataset, TextRecognitionDataGenerator works quite well. It generates text line by line and can also output bounding boxes and masks.
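Since the generator can emit masks as well as bounding boxes, it may help to see how per-character boxes can be read off a mask. The following is a minimal sketch of that idea, not trdg's implementation: it assumes a toy mask given as a 2D list of integers, where 0 is background and a value k ≥ 1 marks the pixels of the k-th character (trdg's real masks are images with their own color encoding).

```python
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max)


def mask_to_boxes(mask: List[List[int]]) -> List[Box]:
    """Return one axis-aligned box per character label, ordered by label.

    Toy mask format (an assumption): 0 is background, k >= 1 marks character k.
    """
    boxes: Dict[int, List[int]] = {}
    for y, row in enumerate(mask):
        for x, label in enumerate(row):
            if label == 0:
                continue  # background pixel, belongs to no character
            if label not in boxes:
                boxes[label] = [x, y, x, y]
            else:
                b = boxes[label]
                b[0] = min(b[0], x)  # grow the box to include this pixel
                b[1] = min(b[1], y)
                b[2] = max(b[2], x)
                b[3] = max(b[3], y)
    return [tuple(boxes[k]) for k in sorted(boxes)]


toy_mask = [
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 2, 0],
    [0, 0, 0, 0, 2, 2],
]
print(mask_to_boxes(toy_mask))  # [(1, 0, 2, 1), (4, 1, 5, 2)]
```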

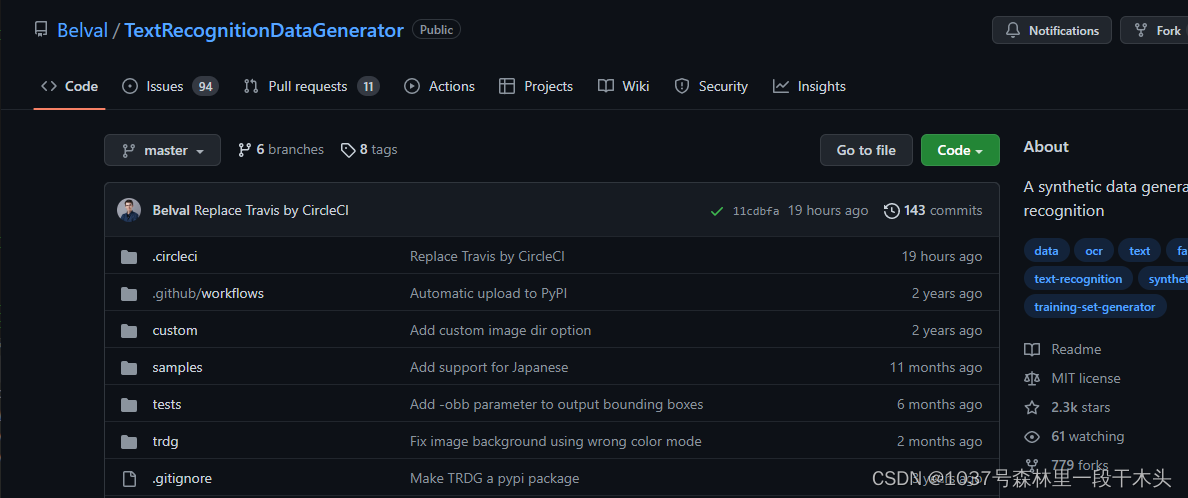

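1. The open-source project TextRecognitionDataGenerator

Link: TextRecognitionDataGenerator

Some useful operations it supports:

- Blur

- Writing text onto background images (very useful: pick some background images from real scenes and render the text on them, so the samples look more realistic)

- Rotation (skew)

- Generating bounding box and mask labels

- Adjusting character spacing

- Setting the font color

- More than 100 fonts provided for Latin-script languages

- …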

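There are two ways to install it:

- Install with pip

```
pip install trdg
```

- Install from source

Download the source code, `cd` into the directory that contains setup.py, and install the dependencies:

```
pip install -r requirements.txt
```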

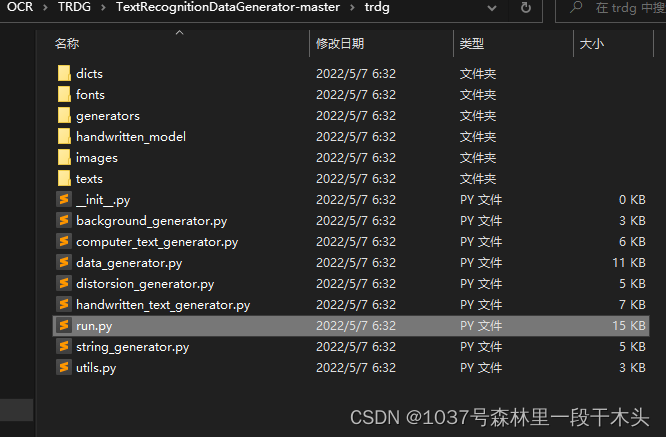

To generate data, use run.py in the trdg folder; by default it is located at TextRecognitionDataGenerator-master\trdg.

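2. Usage

Common options (an annotated listing; remove the comments and put everything on one line before running it):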

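```
python run.py
  --font_dir fonts\latin   # fonts: either a folder or a single font file
  --dict dicts\myDict.txt  # path to the dictionary
  -c 50                    # total number of images to generate
  --output_dir outputs     # output path
  -k 5 -rk                 # -k 5 sets the skew angle to 5°; adding -rk randomizes it within [-5, +5]
  -bl 3 -rbl               # -bl applies Gaussian blur with the given radius; -rbl randomizes the radius within 0-3
  --case upper             # upper: use uppercase characters only; lower: lowercase only
  -b 3 -id images          # -b sets the background type, 3 means images; -id (image_dir) sets the background image folder
  -f 64                    # --format: height of the generated images
  -tc "#22211f"            # --text_color: 6-digit hex color in RGB order, e.g. rgb=[10,15,255] ==> #0a0fff (quoted so the shell does not treat # as a comment)
  -obb 1                   # output bounding boxes; the format is nested:
                           # line 1: 4 numbers, the x, y of the first character's top-left corner and the x, y of the last character's bottom-right corner
                           # line 2: 4 numbers, the x, y of the second character's top-left corner and the x, y of the last character's bottom-right corner
                           # line n: 4 numbers, the x, y of the n-th character's top-left corner and the x, y of the last character's bottom-right corner
```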

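The `-tc` value is just the three RGB channels written as two-digit hexadecimal numbers. A small helper (hypothetical, not part of trdg) makes the conversion explicit; note that 15 is `0f` in hex, so `[10, 15, 255]` becomes `#0a0fff`.

```python
from typing import Sequence, Tuple


def rgb_to_hex(rgb: Sequence[int]) -> str:
    """Convert [r, g, b] (each 0-255) to a '#rrggbb' string for -tc/--text_color."""
    if len(rgb) != 3 or any(not 0 <= c <= 255 for c in rgb):
        raise ValueError("expected three channel values in 0..255")
    return "#" + "".join(f"{c:02x}" for c in rgb)  # two lowercase hex digits per channel


def hex_to_rgb(color: str) -> Tuple[int, int, int]:
    """Inverse conversion, e.g. '#22211f' -> (34, 33, 31)."""
    color = color.lstrip("#")
    r, g, b = (int(color[i:i + 2], 16) for i in (0, 2, 4))
    return r, g, b


print(rgb_to_hex([10, 15, 255]))  # #0a0fff
print(hex_to_rgb("#22211f"))      # (34, 33, 31)
```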

For example:

```
python run.py --font_dir fonts\latin --dict dicts\OCRDict.txt -c 52 --output_dir K:\imageData\OCR\ocr_dataset\test -k 6 -rk -bl 1 -rbl -b 3 -id K:\imageData\OCR\ocr_background\yellow -f 80
```
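When the generation is driven from a script, passing the options as an argument list sidesteps shell quoting entirely, including the `#` in a `-tc` value, which a POSIX shell would otherwise read as the start of a comment. The sketch below reuses the paths from the example above and adds `-tc "#22211f"` from the option list purely for illustration; `build_trdg_command` is a hypothetical helper, and it assumes run.py sits in the working directory. It only builds and prints the command.

```python
import shlex
import sys
from typing import List


def build_trdg_command(run_py: str = "run.py") -> List[str]:
    """Assemble the example's run.py options as an argument list.

    The paths are the author's Windows paths; -tc is added for illustration.
    """
    return [
        sys.executable, run_py,
        "--font_dir", r"fonts\latin",
        "--dict", r"dicts\OCRDict.txt",
        "-c", "52",
        "--output_dir", r"K:\imageData\OCR\ocr_dataset\test",
        "-k", "6", "-rk",    # skew angle randomized within [-6, 6]
        "-bl", "1", "-rbl",  # blur radius randomized within [0, 1]
        "-b", "3",           # 3 = image backgrounds
        "-id", r"K:\imageData\OCR\ocr_background\yellow",
        "-f", "80",          # image height in pixels
        "-tc", "#22211f",    # passed as-is: no shell ever sees the '#'
    ]


cmd = build_trdg_command()
# shlex.join shows how the same command would have to be quoted for a POSIX shell
print(shlex.join(cmd))
# To actually run it (requires trdg's run.py in the working directory):
# import subprocess; subprocess.run(cmd, check=True)
```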

The help output shows the complete usage:

```
python run.py --help
```

```
usage: run.py [-h] [--output_dir [OUTPUT_DIR]] [-i [INPUT_FILE]]
              [-l [LANGUAGE]] -c [COUNT] [-rs] [-let] [-num] [-sym]
              [-w [LENGTH]] [-r] [-f [FORMAT]] [-t [THREAD_COUNT]]
              [-e [EXTENSION]] [-k [SKEW_ANGLE]] [-rk] [-wk] [-bl [BLUR]]
              [-rbl] [-b [BACKGROUND]] [-hw] [-na NAME_FORMAT]
              [-om OUTPUT_MASK] [-obb OUTPUT_BBOXES] [-d [DISTORSION]]
              [-do [DISTORSION_ORIENTATION]] [-wd [WIDTH]] [-al [ALIGNMENT]]
              [-or [ORIENTATION]] [-tc [TEXT_COLOR]] [-sw [SPACE_WIDTH]]
              [-cs [CHARACTER_SPACING]] [-m [MARGINS]] [-fi] [-ft [FONT]]
              [-fd [FONT_DIR]] [-id [IMAGE_DIR]] [-ca [CASE]] [-dt [DICT]]
              [-ws] [-stw [STROKE_WIDTH]] [-stf [STROKE_FILL]]
              [-im [IMAGE_MODE]]

Generate synthetic text data for text recognition.

optional arguments:
  -h, --help            show this help message and exit
  --output_dir [OUTPUT_DIR]
                        The output directory
  -i [INPUT_FILE], --input_file [INPUT_FILE]
                        When set, this argument uses a specified text file as
                        source for the text
  -l [LANGUAGE], --language [LANGUAGE]
                        The language to use, should be fr (French), en
                        (English), es (Spanish), de (German), ar (Arabic), cn
                        (Chinese), ja (Japanese) or hi (Hindi)
  -c [COUNT], --count [COUNT]
                        The number of images to be created.
  -rs, --random_sequences
                        Use random sequences as the source text for the
                        generation. Set '-let','-num','-sym' to use
                        letters/numbers/symbols. If none specified, using all
                        three.
  -let, --include_letters
                        Define if random sequences should contain letters.
                        Only works with -rs
  -num, --include_numbers
                        Define if random sequences should contain numbers.
                        Only works with -rs
  -sym, --include_symbols
                        Define if random sequences should contain symbols.
                        Only works with -rs
  -w [LENGTH], --length [LENGTH]
                        Define how many words should be included in each
                        generated sample. If the text source is Wikipedia,
                        this is the MINIMUM length
  -r, --random          Define if the produced string will have variable word
                        count (with --length being the maximum)
  -f [FORMAT], --format [FORMAT]
                        Define the height of the produced images if
                        horizontal, else the width
  -t [THREAD_COUNT], --thread_count [THREAD_COUNT]
                        Define the number of thread to use for image
                        generation
  -e [EXTENSION], --extension [EXTENSION]
                        Define the extension to save the image with
  -k [SKEW_ANGLE], --skew_angle [SKEW_ANGLE]
                        Define skewing angle of the generated text. In
                        positive degrees
  -rk, --random_skew    When set, the skew angle will be randomized between
                        the value set with -k and it's opposite
  -wk, --use_wikipedia  Use Wikipedia as the source text for the generation,
                        using this paremeter ignores -r, -n, -s
  -bl [BLUR], --blur [BLUR]
                        Apply gaussian blur to the resulting sample. Should be
                        an integer defining the blur radius
  -rbl, --random_blur   When set, the blur radius will be randomized between 0
                        and -bl.
  -b [BACKGROUND], --background [BACKGROUND]
                        Define what kind of background to use. 0: Gaussian
                        Noise, 1: Plain white, 2: Quasicrystal, 3: Image
  -hw, --handwritten    Define if the data will be "handwritten" by an RNN
  -na NAME_FORMAT, --name_format NAME_FORMAT
                        Define how the produced files will be named. 0:
                        [TEXT]_[ID].[EXT], 1: [ID]_[TEXT].[EXT] 2: [ID].[EXT]
                        + one file labels.txt containing id-to-label mappings
  -om OUTPUT_MASK, --output_mask OUTPUT_MASK
                        Define if the generator will return masks for the text
  -obb OUTPUT_BBOXES, --output_bboxes OUTPUT_BBOXES
                        Define if the generator will return bounding boxes for
                        the text, 1: Bounding box file, 2: Tesseract format
  -d [DISTORSION], --distorsion [DISTORSION]
                        Define a distorsion applied to the resulting image. 0:
                        None (Default), 1: Sine wave, 2: Cosine wave, 3:
                        Random
  -do [DISTORSION_ORIENTATION], --distorsion_orientation [DISTORSION_ORIENTATION]
                        Define the distorsion's orientation. Only used if -d
                        is specified. 0: Vertical (Up and down), 1: Horizontal
                        (Left and Right), 2: Both
  -wd [WIDTH], --width [WIDTH]
                        Define the width of the resulting image. If not set it
                        will be the width of the text + 10. If the width of
                        the generated text is bigger that number will be used
  -al [ALIGNMENT], --alignment [ALIGNMENT]
                        Define the alignment of the text in the image. Only
                        used if the width parameter is set. 0: left, 1:
                        center, 2: right
  -or [ORIENTATION], --orientation [ORIENTATION]
                        Define the orientation of the text. 0: Horizontal, 1:
                        Vertical
  -tc [TEXT_COLOR], --text_color [TEXT_COLOR]
                        Define the text's color, should be either a single hex
                        color or a range in the ?,? format.
  -sw [SPACE_WIDTH], --space_width [SPACE_WIDTH]
                        Define the width of the spaces between words. 2.0
                        means twice the normal space width
  -cs [CHARACTER_SPACING], --character_spacing [CHARACTER_SPACING]
                        Define the width of the spaces between characters. 2
                        means two pixels
  -m [MARGINS], --margins [MARGINS]
                        Define the margins around the text when rendered. In
                        pixels
  -fi, --fit            Apply a tight crop around the rendered text
  -ft [FONT], --font [FONT]
                        Define font to be used
  -fd [FONT_DIR], --font_dir [FONT_DIR]
                        Define a font directory to be used
  -id [IMAGE_DIR], --image_dir [IMAGE_DIR]
                        Define an image directory to use when background is
                        set to image
  -ca [CASE], --case [CASE]
                        Generate upper or lowercase only. arguments: upper or
                        lower. Example: --case upper
  -dt [DICT], --dict [DICT]
                        Define the dictionary to be used
  -ws, --word_split     Split on words instead of on characters (preserves
                        ligatures, no character spacing)
  -stw [STROKE_WIDTH], --stroke_width [STROKE_WIDTH]
                        Define the width of the strokes
  -stf [STROKE_FILL], --stroke_fill [STROKE_FILL]
                        Define the color of the contour of the strokes, if
                        stroke_width is bigger than 0
  -im [IMAGE_MODE], --image_mode [IMAGE_MODE]
                        Define the image mode to be used. RGB is default, L
                        means 8-bit grayscale images, 1 means 1-bit binary
                        images stored with one pixel per byte, etc.
```
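The help text describes `-rk` as drawing the skew angle between the `-k` value and its opposite, and `-rbl` as drawing the blur radius between 0 and `-bl`. Restated as a small Python sketch (written from the help text, not taken from trdg's source, and assuming integer values as in the examples above), the two ranges look like this:

```python
import random


def pick_skew(skew_angle: int, random_skew: bool, rng: random.Random) -> int:
    """Angle for -k/--skew_angle; with -rk it is drawn from [-k, +k]."""
    if random_skew:
        return rng.randint(-skew_angle, skew_angle)  # randint is inclusive at both ends
    return skew_angle


def pick_blur(blur: int, random_blur: bool, rng: random.Random) -> int:
    """Radius for -bl/--blur; with -rbl it is drawn from [0, bl]."""
    if random_blur:
        return rng.randint(0, blur)
    return blur


rng = random.Random(0)  # fixed seed so the draws are reproducible
angles = [pick_skew(5, True, rng) for _ in range(1000)]  # like -k 5 -rk
radii = [pick_blur(3, True, rng) for _ in range(1000)]   # like -bl 3 -rbl
print(min(angles), max(angles), min(radii), max(radii))
```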

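3. Examples

- The images below are sample images from the original project

- The images below use background images from real scenes, with characters from the dictionary written on top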
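Because the goal is a PaddleOCR recognition training set, the generated images still need a label file. PaddleOCR's recognition label files list one sample per line: the image path, a tab, then the text. Assuming the `-na 0` naming (`[TEXT]_[ID].[EXT]`, per the help output above), the text can be recovered from each file name by splitting at the last underscore. The helper names and the `test/` prefix below are hypothetical.

```python
import os
from typing import List, Tuple


def trdg_name_to_label(filename: str) -> Tuple[str, str]:
    """Split a '-na 0' file name '[TEXT]_[ID].[EXT]' into (text, id)."""
    stem, _ext = os.path.splitext(filename)
    text, sep, idx = stem.rpartition("_")  # last '_' so text may itself contain '_'
    if not sep or not idx.isdigit():
        raise ValueError(f"not a [TEXT]_[ID].[EXT] name: {filename}")
    return text, idx


def build_rec_label_lines(filenames: List[str], image_prefix: str = "") -> List[str]:
    """Return 'path<TAB>text' lines, skipping files that do not match the pattern."""
    lines = []
    for name in sorted(filenames):
        try:
            text, _ = trdg_name_to_label(name)
        except ValueError:
            continue  # side files (masks, box files, labels.txt, ...) are ignored
        lines.append(f"{image_prefix}{name}\t{text}")
    return lines


files = ["HELLO_0.jpg", "A_B_1.jpg", "HELLO_0_mask.png", "labels.txt"]
for line in build_rec_label_lines(files, image_prefix="test/"):
    print(line)
```

Writing the returned lines to a UTF-8 text file gives a label file in that format. If the dictionary contains characters that are unsafe in file names, `-na 2` with its labels.txt id-to-label mapping is probably the more robust starting point.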

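Disclaimer: This article was contributed by a user and does not represent the position of the wpsshop blog. Copyright belongs to the original author, and this site assumes no legal liability for it. If you find infringing content, please contact us. When reposting, please credit the source: https://www.wpsshop.cn/w/Gausst松鼠会/article/detail/360492?site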
