
Hadoop 2.7.5: Setting Up a 3-Node Cluster


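Note:

The cluster was up and running on the 25th; this write-up was finished in the early hours of today and published right afterwards.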


(I) Preparation

JDK 8 package download address:

JDK download via the Huawei Cloud mirror site (post on the 闭关苦炼内功 blog, CSDN)

Hadoop 2.7.5 package download address:

https://archive.apache.org/dist/hadoop/common/hadoop-2.7.5/hadoop-2.7.5.tar.gz

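First,

the single most important requirement, stated three times for emphasis!!!

The host machine needs at least 8 GB of physical memory

The host machine needs at least 8 GB of physical memory

The host machine needs at least 8 GB of physical memory

----

The host machine is the actual local physical computer, as opposed to the guest, which is a virtual machine running on it.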

----

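Enable CPU virtualization on the local host (desktop, laptop, or server); both AMD and Intel CPUs work.

Install VMware on the host, along with the Xshell and Xftp tools.

Install one clean CentOS 7 (64-bit) virtual machine with 1 GB of memory and a 20 GB disk.

Installation steps for the version without a GUI:

CentOS 7 installation without a GUI (fully illustrated with screenshots), on the 闭关苦炼内功 blog, CSDN. A GUI installation also works if you prefer, although the version without a GUI boots noticeably faster.

Keep a backup copy of this virtual machine for later use, so that you can recover if something goes wrong.

Next,

clone three complete CentOS 7 virtual machines from the one you just installed.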

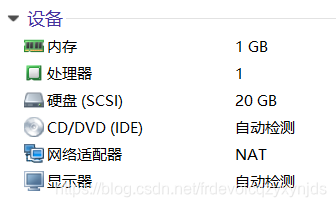

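(1) Configuration of each virtual machine: 1 GB of memory (can be increased) and 1 CPU core; see the figure for the detailed settings.

(2) Use NAT networking: configure the virtual network vmnet8 and the host's IP address, subnet mask, default gateway, and DNS;

then generate a different MAC address for each of the three virtual machines.

(3) Boot the three virtual machines, then check and configure the network.

① Configure the gateway and permanently change each machine's hostname.

A hostname should consist of letters, or letters plus digits; it may contain a hyphen (-) but must never contain an underscore (_).

Configure the IP address and DNS address.

② Set the hostnames and map the internal IP addresses to them: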

192.168.40.130 master namenode

192.168.40.131 slave1 datanode

192.168.40.132 slave2 datanode

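③ Restart the network service

systemctl restart network # restart the network service

systemctl status network # check the network status

④ Turn off the firewall

systemctl stop firewalld # stop the firewall service

systemctl disable firewalld # do not start the firewall at boot

systemctl status firewalld # check the firewall status

⑤ Reboot the machine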

reboot
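The hostname rule from step ① can be checked mechanically before a name is applied. The following is only a minimal sketch: `is_valid_hostname` is a hypothetical helper, not part of CentOS, and the requirement that a name start with a letter is an added assumption on top of the rule stated above.

```shell
# Hypothetical helper: succeeds when the name consists only of letters,
# digits and hyphens. Requiring a leading letter is an extra assumption.
is_valid_hostname() {
  printf '%s\n' "$1" | grep -Eq '^[A-Za-z][A-Za-z0-9-]*$'
}

# Try a few candidate names; the last one contains an underscore.
for candidate in master slave1 slave-2 slave_2; do
  if is_valid_hostname "$candidate"; then
    echo "$candidate: ok"
  else
    echo "$candidate: rejected"
  fi
done
```

The loop accepts master, slave1, and slave-2 and rejects slave_2 because of the underscore.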

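(II) Getting started

This experiment uses three machines running CentOS 7.2, with the firewall and SELinux both turned off.

Taking the slave2 node as an example (note that setenforce 0 only switches SELinux to permissive mode until the next reboot; a permanent change is made in /etc/selinux/config):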

- [root@slave2 ~]# systemctl status firewalld

- [root@slave2 ~]# getenforce

- Enforcing

- [root@slave2 ~]# setenforce 0

- [root@slave2 ~]#

- [root@slave2 ~]# getenforce

- Permissive

------------------------------------------------------------

1. Configure passwordless login on master

After this step, master can SSH into master, slave1, and slave2 without a password.

- [root@master ~]# ssh-keygen # press Enter at every prompt

- [root@master ~]# ssh-copy-id root@192.168.40.130

- [root@master ~]# ssh-copy-id root@192.168.40.131

- [root@master ~]# ssh-copy-id root@192.168.40.132

- [root@master ~]# ssh master

- [root@master ~]# exit

- logout

- Connection to master closed.

- [root@master ~]#

- [root@master ~]# ssh slave1

- [root@slave1 ~]# exit

- logout

- Connection to slave1 closed.

- [root@master ~]#

- [root@master ~]# ssh slave2

- [root@slave2 ~]# exit

- logout

- Connection to slave2 closed.

- [root@master ~]#

------------------------------------------------------------

2. Configure the hosts file on all three machines

First edit /etc/hosts on the master node, then copy it to the other two machines with scp:

- [root@master ~]# cat /etc/hosts

- #127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

- #::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

- 127.0.0.1 localhost

- 192.168.40.130 master

- 192.168.40.131 slave1

- 192.168.40.132 slave2

- [root@master ~]# scp /etc/hosts root@slave1:/etc

- [root@master ~]# scp /etc/hosts root@slave2:/etc
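After the file has been copied, it is worth confirming on each node that all three mappings are really present. The sketch below is one way to do that with awk; `hosts_has_entry` and `check_cluster_hosts` are hypothetical helper names, and the IP/hostname pairs are the ones used in this article.

```shell
# Hypothetical helper: succeeds when FILE maps IP to NAME on an uncommented line.
# $1 = hosts file, $2 = IP address, $3 = hostname
hosts_has_entry() {
  awk -v ip="$2" -v name="$3" '
    /^[[:space:]]*#/ { next }   # skip comment lines
    $1 == ip {
      for (i = 2; i <= NF; i++) if ($i == name) found = 1
    }
    END { exit(found ? 0 : 1) }
  ' "$1"
}

# Hypothetical helper: checks the three mappings used in this article.
# $1 = hosts file to inspect (defaults to /etc/hosts)
check_cluster_hosts() {
  file="${1:-/etc/hosts}"
  status=0
  for pair in 192.168.40.130:master 192.168.40.131:slave1 192.168.40.132:slave2; do
    ip="${pair%%:*}"
    name="${pair##*:}"
    if hosts_has_entry "$file" "$ip" "$name"; then
      echo "ok: $name -> $ip"
    else
      echo "missing: $name -> $ip"
      status=1
    fi
  done
  return "$status"
}
```

Calling `check_cluster_hosts` with no argument inspects /etc/hosts on the current node and returns a non-zero status if any mapping is missing.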

------------------------------------------------------------

3. Create the user that runs Hadoop on all three nodes

useradd -u 8000 hadoop

echo hadoop | passwd --stdin hadoop

The hadoop user must be created on all three nodes with the same UID.

- [root@master ~]# useradd -u 8000 hadoop

- [root@master ~]# echo hadoop | passwd --stdin hadoop

- [root@master ~]# ssh slave1

- Last login: Wed Mar 25 01:05:48 2020 from master

- [root@slave1 ~]# useradd -u 8000 hadoop

- [root@slave1 ~]# echo hadoop | passwd --stdin hadoop

- [root@slave1 ~]# exit

- logout

- Connection to slave1 closed.

- [root@master ~]# ssh slave2

- Last login: Wed Mar 25 01:04:29 2020 from master

- [root@slave2 ~]# useradd -u 8000 hadoop

- [root@slave2 ~]# echo hadoop | passwd --stdin hadoop

- [root@slave2 ~]# exit

- logout

- Connection to slave2 closed.

- [root@master ~]#

4. Install the Java environment (JDK) on all three nodes

Upload the JDK archive to the /home directory.

1) Extract the JDK into /usr/local

- [root@master home]# ls

- centos hadoop jdk-8u112-linux-x64.tar.gz

- [root@master home]# tar -zxvf jdk-8u112-linux-x64.tar.gz -C /usr/local/

- [root@master home]# ls /usr/local/

- bin etc games include jdk1.8.0_112 lib lib64 libexec sbin share src

- [root@master home]#

2) Configure the JDK environment variables

Edit /etc/profile and append the following at the end of the file:

- [root@master home]# vim /etc/profile

- export JAVA_HOME=/usr/local/jdk1.8.0_112

- export JAVA_BIN=/usr/local/jdk1.8.0_112/bin

- export PATH=${JAVA_HOME}/bin:$PATH

- export CLASSPATH=.:${JAVA_HOME}/lib/dt.jar:${JAVA_HOME}/lib/tools.jar

- [root@master home]# cat /etc/profile

3) Apply the configuration:

source /etc/profile # make the configuration take effect

java -version # verify that the Java runtime is installed correctly

- [root@master home]# source /etc/profile

- [root@master home]# java -version

- java version "1.8.0_112"

- Java(TM) SE Runtime Environment (build 1.8.0_112-b15)

- Java HotSpot(TM) 64-Bit Server VM (build 25.112-b15, mixed mode)

- [root@master home]#

4) Deploy the JDK to the other two machines

- [root@master home]# scp -r /usr/local/jdk1.8.0_112/ slave1:/usr/local/

- [root@master home]# scp -r /usr/local/jdk1.8.0_112/ slave2:/usr/local/

- [root@master home]# scp /etc/profile slave1:/etc/

- profile 100% 1945 1.9KB/s 00:00

- [root@master home]# scp /etc/profile slave2:/etc/

- profile 100% 1945 1.9KB/s 00:00

- [root@master home]#

- # Apply the new environment on each slave right away

- [root@slave1 ~]# source /etc/profile

- [root@slave1 ~]# java -version

- java version "1.8.0_112"

- Java(TM) SE Runtime Environment (build 1.8.0_112-b15)

- Java HotSpot(TM) 64-Bit Server VM (build 25.112-b15, mixed mode)

- [root@slave2 ~]# source /etc/profile

- [root@slave2 ~]# java -version

- java version "1.8.0_112"

- Java(TM) SE Runtime Environment (build 1.8.0_112-b15)

- Java HotSpot(TM) 64-Bit Server VM (build 25.112-b15, mixed mode)
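Comparing the version banners by eye works for three nodes, but the check is easy to script. The sketch below extracts the quoted version string from the banner; `parse_java_version` is a hypothetical helper name, and the sample banner lines are the ones shown in the transcript above.

```shell
# Hypothetical helper: reads a `java -version` banner on stdin and prints
# the quoted version string from it, for example 1.8.0_112.
parse_java_version() {
  sed -n 's/^.* version "\([^"]*\)".*$/\1/p' | head -n 1
}

# `java -version` writes its banner to stderr, so real use needs 2>&1:
#   java -version 2>&1 | parse_java_version
# Here the banner from the transcript is fed in directly.
printf '%s\n' 'java version "1.8.0_112"' \
  'Java(TM) SE Runtime Environment (build 1.8.0_112-b15)' | parse_java_version
```

Since passwordless SSH from master is already in place, the same pipeline could be run on each node, for instance `ssh slave1 'source /etc/profile; java -version' 2>&1 | parse_java_version`, and the three results compared.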


5. Install Hadoop on master and create the working directories

- # First upload the Hadoop archive to /home

- # then extract it

- [root@master home]# ls

- centos hadoop hadoop-2.7.5.tar.gz jdk-8u112-linux-x64.tar.gz

- [root@master home]# tar -zxf hadoop-2.7.5.tar.gz -C /home/hadoop/

- [root@master home]# cd /home/hadoop/hadoop-2.7.5/

- # Create the Hadoop working directories

- [root@master ~]# mkdir -p /home/hadoop/tmp

- [root@master ~]# mkdir -p /home/hadoop/dfs/{name,data}

- [root@master ~]# cd /home/hadoop/

- [root@master hadoop]# ls

- dfs hadoop-2.7.5 tmp

6. Configure Hadoop on the master node

Location of the configuration files:

/home/hadoop/hadoop-2.7.5/etc/hadoop/

- [root@master ~]# cd /home/hadoop/hadoop-2.7.5/etc/hadoop/

- [root@master hadoop]# ls

- capacity-scheduler.xml hadoop-policy.xml kms-log4j.properties ssl-client.xml.example

- configuration.xsl hdfs-site.xml kms-site.xml ssl-server.xml.example

- container-executor.cfg httpfs-env.sh log4j.properties yarn-env.cmd

- core-site.xml httpfs-log4j.properties mapred-env.cmd yarn-env.sh

- hadoop-env.cmd httpfs-signature.secret mapred-env.sh yarn-site.xml

- hadoop-env.sh httpfs-site.xml mapred-queues.xml.template

- hadoop-metrics2.properties kms-acls.xml mapred-site.xml.template

- hadoop-metrics.properties kms-env.sh slaves

- [root@master hadoop]#

Seven configuration files need to be modified in total:

1) hadoop-env.sh: set the Java runtime used by Hadoop to /usr/local/jdk1.8.0_112/

- [root@master hadoop]# vim hadoop-env.sh
- # The java implementation to use.
- export JAVA_HOME=/usr/local/jdk1.8.0_112/

2) yarn-env.sh: set the Java runtime used by the YARN framework to /usr/local/jdk1.8.0_112/

- [root@master hadoop]# vim yarn-env.sh
- #echo "run java in $JAVA_HOME"
- JAVA_HOME=/usr/local/jdk1.8.0_112/

3) slaves: lists the DataNode storage servers

Write the hostname of every DataNode into this file, one per line, as follows:

- [root@master hadoop]# vim slaves

- [root@master hadoop]# cat slaves

- #localhost

- slave1

- slave2

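4) core-site.xml: sets the default file system URI and common I/O settings

This is Hadoop's core configuration file. Two properties matter most here:

fs.defaultFS names the HDFS file system, served by the master host on port 9000;

hadoop.tmp.dir sets the base location for Hadoop's temporary directories.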

- [root@master hadoop]# vim core-site.xml

- [root@master hadoop]# cat core-site.xml

- <configuration>

- <property>

- <name>fs.defaultFS</name>

- <value>hdfs://master:9000</value>

- </property>

- <property>

- <name>io.file.buffer.size</name>

- <value>131072</value>

- </property>

- <property>

- <name>hadoop.tmp.dir</name>

- <value>file:/home/hadoop/tmp</value>

- <description>Abase for other temporary directories.</description>

- </property>

- </configuration>

- [root@master hadoop]#
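To double-check what actually ended up in the file, a property value can be pulled out with a few lines of awk. This is only a sketch: `get_hadoop_property` is a hypothetical helper that assumes one XML element per line, as in the listing above, and it is not a general XML parser. On a working installation, `hdfs getconf -confKey fs.defaultFS` reports the effective value instead.

```shell
# Hypothetical helper: prints the <value> that follows <name>KEY</name>.
# Assumes one XML element per line; this is not a general XML parser.
# $1 = config file, $2 = property name
get_hadoop_property() {
  awk -v key="$2" '
    index($0, "<name>" key "</name>") { want = 1; next }
    want && /<value>/ {
      sub(/^.*<value>/, "")      # drop everything up to the opening tag
      sub(/<\/value>.*$/, "")    # drop the closing tag and anything after it
      print
      exit
    }
  ' "$1"
}
```

For example, `get_hadoop_property /home/hadoop/hadoop-2.7.5/etc/hadoop/core-site.xml fs.defaultFS` should print the URI configured above; a property that is absent from the file yields no output.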

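5) hdfs-site.xml

This is the HDFS configuration file.

dfs.namenode.secondary.http-address sets the HTTP address of the SecondaryNameNode;

dfs.replication sets the number of replicas kept for each block, which generally should not exceed the number of slave (DataNode) machines.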

- [root@master hadoop]# more hdfs-site.xml

- <configuration>

- </configuration>

- [root@master hadoop]# vim hdfs-site.xml
- [root@master hadoop]# cat hdfs-site.xml
- <configuration>
- <property>
- <name>dfs.namenode.secondary.http-address</name>
- <value>master:9001</value>
- </property>
- <property>
- <name>dfs.namenode.name.dir</name>
- <value>file:/home/hadoop/dfs/name</value>
- </property>
- <property>
- <name>dfs.datanode.data.dir</name>
- <value>file:/home/hadoop/dfs/data</value>
- </property>
- <property>
- <name>dfs.replication</name>
- <value>2</value>
- </property>
- <property>
- <name>dfs.webhdfs.enabled</name>
- <value>true</value>
- </property>
- </configuration>
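The guideline that dfs.replication should not exceed the number of DataNodes can be checked against the slaves file. A minimal sketch follows, with `count_datanodes` and `replication_fits` as hypothetical helper names.

```shell
# Hypothetical helper: counts the hostnames listed in a slaves file,
# ignoring blank lines and lines that start with '#'.
# $1 = slaves file
count_datanodes() {
  awk '!/^[[:space:]]*(#|$)/ { n++ } END { print n + 0 }' "$1"
}

# Hypothetical helper: succeeds when the replication factor does not
# exceed the number of DataNodes.
# $1 = dfs.replication value, $2 = slaves file
replication_fits() {
  [ "$1" -le "$(count_datanodes "$2")" ]
}
```

With the slaves file from step 3) (slave1 and slave2, plus the commented-out localhost line), `count_datanodes` prints 2, so `replication_fits 2 slaves` succeeds while `replication_fits 3 slaves` fails.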

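6) mapred-site.xml

This file configures MapReduce jobs. Because Hadoop 2.x uses the YARN framework, a distributed deployment requires mapreduce.framework.name to be set to yarn.

mapred.map.tasks and mapred.reduce.tasks set the number of map and reduce tasks respectively; the configuration below does not set them.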

- # Create mapred-site.xml from the template
- [root@master hadoop]# cp mapred-site.xml.template mapred-site.xml
- [root@master hadoop]# vim mapred-site.xml
- # After editing, the file reads:
- <configuration>
- <property>
- <name>mapreduce.framework.name</name>
- <value>yarn</value>
- </property>
- <property>
- <name>mapreduce.jobhistory.address</name>
- <value>master:10020</value>
- </property>
- <property>
- <name>mapreduce.jobhistory.webapp.address</name>
- <value>master:19888</value>
- </property>
- </configuration>

7) yarn-site.xml

This file configures the YARN framework, mainly the addresses on which its services listen.

- [root@master hadoop]# vim yarn-site.xml
- # After editing, the file reads:
- <configuration>
- <!-- Site specific YARN configuration properties -->
- <property>
- <name>yarn.nodemanager.aux-services</name>
- <value>mapreduce_shuffle</value>
- </property>
- <property>
- <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
- <value>org.apache.hadoop.mapred.ShuffleHandler</value>
- </property>
- <property>
- <name>yarn.resourcemanager.address</name>
- <value>master:8032</value>
- </property>
- <property>
- <name>yarn.resourcemanager.scheduler.address</name>
- <value>master:8030</value>
- </property>
- <property>
- <name>yarn.resourcemanager.resource-tracker.address</name>
- <value>master:8031</value>
- </property>
- <property>
- <name>yarn.resourcemanager.admin.address</name>
- <value>master:8033</value>
- </property>
- <property>
- <name>yarn.resourcemanager.webapp.address</name>
- <value>master:8088</value>
- </property>
- </configuration>

7. Change the owner and group of the Hadoop installation files

- chown -R hadoop.hadoop /home/hadoop
- [root@master ~]# cd /home/hadoop/
- [root@master hadoop]# ll
- total 4
- drwxr-xr-x. 4 root root 28 Mar 25 01:48 dfs
- drwxr-xr-x. 9 20415 101 4096 Dec 16 2017 hadoop-2.7.5
- drwxr-xr-x. 2 root root 6 Mar 25 01:48 tmp
- [root@master hadoop]# chown -R hadoop.hadoop /home/hadoop
- [root@master hadoop]# ll
- total 4
- drwxr-xr-x. 4 hadoop hadoop 28 Mar 25 01:48 dfs
- drwxr-xr-x. 9 hadoop hadoop 4096 Dec 16 2017 hadoop-2.7.5
- drwxr-xr-x. 2 hadoop hadoop 6 Mar 25 01:48 tmp
- [root@master hadoop]#

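8. Set up passwordless login for the regular hadoop user on master

Generate a key for the hadoop user so that it can log in without typing a password.

This is needed because the hadoop user later starts the DataNode services by logging in directly to each of the corresponding servers.

- # step 1: switch to the hadoop user
- [root@master ~]# su - hadoop
- Last login: Wed Mar 25 02:32:57 CST 2020 on pts/0
- [hadoop@master ~]$
- # step 2: create the key files
- [hadoop@master ~]$ ssh-keygen # press Enter at every prompt
- [hadoop@master ~]$
- # step 3: copy the public key to master, slave1, and slave2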

- [hadoop@master ~]$ ssh-copy-id hadoop@master

- [hadoop@master ~]$ ssh-copy-id hadoop@slave1

- [hadoop@master ~]$ ssh-copy-id hadoop@slave2

- [hadoop@master ~]$

9. Copy the Hadoop installation files to the other DataNode nodes

- [hadoop@master ~]$ scp -r /home/hadoop/hadoop-2.7.5/ hadoop@slave1:~/
- [hadoop@master ~]$ scp -r /home/hadoop/hadoop-2.7.5/ hadoop@slave2:~/

10. Start Hadoop on master

1) Format the NameNode

First switch to the hadoop user and initialize the Hadoop NameNode. This is only needed the first time; it is not required again afterwards.

- [hadoop@master ~]$ cd /home/hadoop/hadoop-2.7.5/bin/

- [hadoop@master bin]$

- [hadoop@master bin]$ ./hdfs namenode -format

- # Formatting succeeded; the end of the output:
- ---------------------------------------------------------
- 20/03/25 02:48:24 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1015689718-192.168.40.130-1585075704098
- 20/03/25 02:48:24 INFO common.Storage: Storage directory /home/hadoop/dfs/name has been successfully formatted.
- 20/03/25 02:48:24 INFO namenode.FSImageFormatProtobuf: Saving image file /home/hadoop/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression
- 20/03/25 02:48:24 INFO namenode.FSImageFormatProtobuf: Image file /home/hadoop/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 323 bytes saved in 0 seconds.

- 20/03/25 02:48:24 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

- 20/03/25 02:48:24 INFO util.ExitUtil: Exiting with status 0

- 20/03/25 02:48:24 INFO namenode.NameNode: SHUTDOWN_MSG:

- /************************************************************

- SHUTDOWN_MSG: Shutting down NameNode at master/192.168.40.130

- ************************************************************/

- [hadoop@master bin]$

- ---------------------------------------------------------

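2) Look at the files generated by formatting

- [hadoop@master ~]$ tree /home/hadoop/dfs/
- bash: tree: command not found...
- [hadoop@master ~]$

-----------------------------------------------------------

The tree command is not installed yet, so install it first (as root):

- yum provides tree
- yum -y install tree

Then run it again. The listing below was evidently captured after the cluster had already been running for a while, which is why it contains many edits_* segments: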

- [hadoop@master ~]$ tree /home/hadoop/dfs/

- /home/hadoop/dfs/

- ├── data

- └── name

- └── current

- ├── edits_0000000000000000001-0000000000000000002

- ├── edits_0000000000000000003-0000000000000000011

- ├── edits_0000000000000000012-0000000000000000013

- ├── edits_0000000000000000014-0000000000000000015

- ├── edits_0000000000000000016-0000000000000000017

- ├── edits_0000000000000000018-0000000000000000019

- ├── edits_0000000000000000020-0000000000000000021

- ├── edits_0000000000000000022-0000000000000000023

- ├── edits_0000000000000000024-0000000000000000025

- ├── edits_0000000000000000026-0000000000000000027

- ├── edits_0000000000000000028-0000000000000000029

- ├── edits_0000000000000000030-0000000000000000031

- ├── edits_0000000000000000032-0000000000000000032

- ├── edits_0000000000000000033-0000000000000000034

- ├── edits_0000000000000000035-0000000000000000036

- ├── edits_0000000000000000037-0000000000000000037

- ├── edits_0000000000000000038-0000000000000000039

- ├── edits_inprogress_0000000000000000040

- ├── fsimage_0000000000000000037

- ├── fsimage_0000000000000000037.md5

- ├── fsimage_0000000000000000039

- ├── fsimage_0000000000000000039.md5

- ├── seen_txid

- └── VERSION

-

- 3 directories, 24 files

- [hadoop@master ~]$

-----------------------------------------------------------

3) Start HDFS, the distributed storage layer: ./sbin/start-dfs.sh

- [root@master ~]# su - hadoop
- Last login: Wed Mar 25 02:33:32 CST 2020 on pts/0
- [hadoop@master ~]$ cd /home/hadoop/hadoop-2.7.5/sbin/
- [hadoop@master sbin]$
- [hadoop@master sbin]$ ./start-dfs.sh
- Starting namenodes on [master]
- master: starting namenode, logging to /home/hadoop/hadoop-2.7.5/logs/hadoop-hadoop-namenode-master.out
- slave1: starting datanode, logging to /home/hadoop/hadoop-2.7.5/logs/hadoop-hadoop-datanode-slave1.out
- slave2: starting datanode, logging to /home/hadoop/hadoop-2.7.5/logs/hadoop-hadoop-datanode-slave2.out
- Starting secondary namenodes [master]
- master: starting secondarynamenode, logging to /home/hadoop/hadoop-2.7.5/logs/hadoop-hadoop-secondarynamenode-master.out

- [hadoop@master sbin]$

4) Start YARN, the distributed computing layer: ./sbin/start-yarn.sh

- [hadoop@master sbin]$ ./start-yarn.sh
- starting yarn daemons
- starting resourcemanager, logging to /home/hadoop/hadoop-2.7.5/logs/yarn-hadoop-resourcemanager-master.out
- slave2: starting nodemanager, logging to /home/hadoop/hadoop-2.7.5/logs/yarn-hadoop-nodemanager-slave2.out
- slave1: starting nodemanager, logging to /home/hadoop/hadoop-2.7.5/logs/yarn-hadoop-nodemanager-slave1.out

- [hadoop@master sbin]$

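Note:

The start-all.sh script can also be used to start HDFS and YARN one after the other, although Hadoop 2.x marks it as deprecated in favor of start-dfs.sh and start-yarn.sh.

/home/hadoop/hadoop-2.7.5/sbin/start-all.sh # start script

/home/hadoop/hadoop-2.7.5/sbin/stop-all.sh # stop script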

5) Start the history server

Hadoop ships with a history server, which lets you inspect the records of completed MapReduce jobs,

such as how many map and reduce tasks were used and when the job was submitted, started, and finished.

The history server is not started by default; the following command starts it.

- [hadoop@master sbin]$ ./mr-jobhistory-daemon.sh start historyserver
- starting historyserver, logging to /home/hadoop/hadoop-2.7.5/logs/mapred-hadoop-historyserver-master.out

- [hadoop@master sbin]$ cd /home/hadoop/hadoop-2.7.5/bin/

6) Check the HDFS status

- [hadoop@master bin]$ ./hdfs dfsadmin -report

- Configured Capacity: 37492883456 (34.92 GB)

- Present Capacity: 20249128960 (18.86 GB)

- DFS Remaining: 20249120768 (18.86 GB)

- DFS Used: 8192 (8 KB)

- DFS Used%: 0.00%

- Under replicated blocks: 0

- Blocks with corrupt replicas: 0

- Missing blocks: 0

- Missing blocks (with replication factor 1): 0

- -------------------------------------------------

- Live datanodes (2):

- Name: 192.168.40.132:50010 (slave2)

- Hostname: slave2

- Decommission Status : Normal

- Configured Capacity: 18746441728 (17.46 GB)

- DFS Used: 4096 (4 KB)

- Non DFS Used: 7873466368 (7.33 GB)

- DFS Remaining: 10872971264 (10.13 GB)

- DFS Used%: 0.00%

- DFS Remaining%: 58.00%

- Configured Cache Capacity: 0 (0 B)

- Cache Used: 0 (0 B)

- Cache Remaining: 0 (0 B)

- Cache Used%: 100.00%

- Cache Remaining%: 0.00%

- Xceivers: 1

- Last contact: Wed Mar 25 05:45:40 CST 2020

- Name: 192.168.40.131:50010 (slave1)

- Hostname: slave1

- Decommission Status : Normal

- Configured Capacity: 18746441728 (17.46 GB)

- DFS Used: 4096 (4 KB)

- Non DFS Used: 9370288128 (8.73 GB)

- DFS Remaining: 9376149504 (8.73 GB)

- DFS Used%: 0.00%

- DFS Remaining%: 50.02%

- Configured Cache Capacity: 0 (0 B)

- Cache Used: 0 (0 B)

- Cache Remaining: 0 (0 B)

- Cache Used%: 100.00%

- Cache Remaining%: 0.00%

- Xceivers: 1

- Last contact: Wed Mar 25 05:45:42 CST 2020

- [hadoop@master bin]$
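The key line in this report is the count of live DataNodes, which should equal the number of hosts in the slaves file (two here). As a rough sketch, the count can be extracted with sed; `live_datanodes` is a hypothetical helper name.

```shell
# Hypothetical helper: reads `hdfs dfsadmin -report` output on stdin and
# prints the number from the "Live datanodes (N):" line.
live_datanodes() {
  sed -n 's/^.*Live datanodes (\([0-9][0-9]*\)):.*$/\1/p' | head -n 1
}

# Fed with an excerpt of the report shown above:
printf '%s\n' 'Missing blocks: 0' 'Live datanodes (2):' \
  'Name: 192.168.40.132:50010 (slave2)' | live_datanodes
```

In real use the report would be piped in directly, for example `./hdfs dfsadmin -report | live_datanodes`.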

7) Check the running processes

- [hadoop@master ~]$ jps

- 57920 NameNode

- 58821 Jps

- 58087 SecondaryNameNode

- 58602 JobHistoryServer

- 58287 ResourceManager

- [hadoop@slave1 ~]$ jps

- 54626 NodeManager

- 54486 DataNode

- 54888 Jps

- [hadoop@slave2 ~]$ jps

- 54340 DataNode

- 54473 NodeManager

- 54735 Jps
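The jps listings above show which daemons belong on which node: NameNode, SecondaryNameNode, ResourceManager, and JobHistoryServer on master, and DataNode plus NodeManager on each slave. A small sketch for checking this automatically follows; `missing_daemons` is a hypothetical helper name.

```shell
# Hypothetical helper: reads `jps` output on stdin and prints the expected
# daemon names (given as arguments) that are not running. It succeeds only
# when none are missing.
missing_daemons() {
  running="$(awk '{ print $2 }')"   # second column of jps output is the class name
  missing=""
  for daemon in "$@"; do
    # -x matches whole lines, so NameNode does not match SecondaryNameNode
    if ! printf '%s\n' "$running" | grep -qx "$daemon"; then
      missing="$missing $daemon"
    fi
  done
  printf '%s\n' "${missing# }"
  [ -z "$missing" ]
}
```

On master this could be used as `jps | missing_daemons NameNode SecondaryNameNode ResourceManager JobHistoryServer`, and on each slave as `jps | missing_daemons DataNode NodeManager`.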

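To view the Hadoop (HDFS) dashboard, open in a browser:

http://192.168.40.130:50070/

To view the cluster nodes, open in a browser:

http://192.168.40.130:8088/

To view the history server, open in a browser:

http://192.168.40.130:19888/

To use hostnames instead of IP addresses, add the following entries to the hosts file on the local Windows 7/10 machine

(change the permissions of the hosts file first so that it can be edited):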

- 192.168.40.130 master

- 192.168.40.131 slave1

- 192.168.40.132 slave2

Then open:

http://master:50070/

http://master:8088/

http://master:19888/

--

To be continued…