- 1xss-labs靶场通关_xsslabs通关挑战

- 2php编写网站页面_用php写一个网页页面(1),2024年最新Linux运维 MVP模式详解_php页面

- 3计算机网络(6) ICMP协议

- 4远程桌面连接出现身份验证错误解决办法_远程桌面出现身份验证错误

- 5XSS平台(四) Pikachu_pikachu平台

- 6【Java数据结构】ArrayList详解_java中的 arraylist 是什么结构

- 7一文深度剖析 ColBERT_colbert算法

- 8【华为OD机考 统一考试机试C卷】结队编程(Java题解)_某部门计划通过结队编程来进行项目开发,已知该部门有n名员工,每个员工有独一

- 9Microsoft 数据仓库架构 !_microsoft 数仓bids

- 10全网最全面最深入 剖析华为“五看三定”战略神器中的“五看”(即市场洞察)(长文干货,建议收藏)_华为的五看三定是什么

超简单【推特文本情感13分类练习赛】高分baseline_文本情感标注测试题

赞

踩

一、推特文本情感分类赛题简介

练习赛地址:https://www.heywhale.com/home/activity/detail/611cbe90ba12a0001753d1e9/content

notebook地址:https://www.heywhale.com/mw/project/6151ca6107bcea0017fd0ea4

此练习赛情感分类位13类,故得分较低。。。。。。

Twitter 的推文有许多特点,首先,与 Facebook 不同的是,推文是基于文本的,可以通过 Twitter 接口注册下载,便于作为自然语言处理所需的语料库。其次,Twitter 规定了每一个推文不超过 140 个字,实际推文中的文本长短不一、长度一般较短,有些只有一个句子甚至一个短语,这对其开展情感分类标注带来许多困难。再者,推文常常是随性所作,内容中包含情感的元素较多,口语化内容居多,缩写随处都在,并且使用了许多网络用语,情绪符号、新词和俚语随处可见。因此,与正式文本非常不同。如果采用那些适合处理正式文本的情感分类方法来对 Twitter 推文进行情感分类,效果将不尽人意。

公众情感在包括电影评论、消费者信心、政治选举、股票走势预测等众多领域发挥着越来越大的影响力。面向公共媒体内容开展情感分析是分析公众情感的一项基础工作。

二、数据基本情况

数据集基于推特用户发表的推文数据集,并且针对部分字段做出了一定的调整,所有的字段信息请以本练习赛提供的字段信息为准

字段信息内容参考如下:

- tweet_id string 推文数据的唯一ID,比如test_0,train_1024

- content string 推特内容

- label int 推特情感的类别,共13种情感

其中训练集train.csv包含3w条数据,字段包括tweet_id,content,label;测试集test.csv包含1w条数据,字段包括tweet_id,content。

tweet_id,content,label

tweet_1,Layin n bed with a headache ughhhh...waitin on your call...,1

tweet_2,Funeral ceremony...gloomy friday...,1

tweet_3,wants to hang out with friends SOON!,2

tweet_4,"@dannycastillo We want to trade with someone who has Houston tickets, but no one will.",3

tweet_5,"I should be sleep, but im not! thinking about an old friend who I want. but he's married now. damn, & he wants me 2! scandalous!",1

tweet_6,Hmmm.

http://www.djhero.com/ is down,4

tweet_7,@charviray Charlene my love. I miss you,1

tweet_8,cant fall asleep,3

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

!head /home/mw/input/Twitter4903/train.csv

- 1

tweet_id,content,label

tweet_0,@tiffanylue i know i was listenin to bad habit earlier and i started freakin at his part =[,0

tweet_1,Layin n bed with a headache ughhhh...waitin on your call...,1

tweet_2,Funeral ceremony...gloomy friday...,1

tweet_3,wants to hang out with friends SOON!,2

tweet_4,"@dannycastillo We want to trade with someone who has Houston tickets, but no one will.",3

tweet_5,"I should be sleep, but im not! thinking about an old friend who I want. but he's married now. damn, & he wants me 2! scandalous!",1

tweet_6,Hmmm. http://www.djhero.com/ is down,4

tweet_7,@charviray Charlene my love. I miss you,1

tweet_8,cant fall asleep,3

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

!head /home/mw/input/Twitter4903/test.csv

- 1

tweet_id,content

tweet_0,Re-pinging @ghostridah14: why didn't you go to prom? BC my bf didn't like my friends

tweet_1,@kelcouch I'm sorry at least it's Friday?

tweet_2,The storm is here and the electricity is gone

tweet_3,So sleepy again and it's not even that late. I fail once again.

tweet_4,"Wondering why I'm awake at 7am,writing a new song,plotting my evil secret plots muahahaha...oh damn it,not secret anymore"

tweet_5,I ate Something I don't know what it is... Why do I keep Telling things about food

tweet_6,so tired and i think i'm definitely going to get an ear infection. going to bed "early" for once.

tweet_7,It is so annoying when she starts typing on her computer in the middle of the night!

tweet_8,Screw you @davidbrussee! I only have 3 weeks...

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

!head /home/mw/input/Twitter4903/submission.csv

- 1

tweet_id,label

tweet_0,0

tweet_1,0

tweet_2,0

tweet_3,0

tweet_4,0

tweet_5,0

tweet_6,0

tweet_7,0

tweet_8,0

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

三、数据集定义

1.环境准备

# 环境准备 (建议gpu环境,速度好。pip install paddlepaddle-gpu)

!pip install paddlepaddle

!pip install -U paddlenlp

- 1

- 2

- 3

2.获取句子最大长度

# 自定义PaddleNLP dataset的read方法

import pandas as pd

train = pd.read_csv('/home/mw/input/Twitter4903/train.csv')

test = pd.read_csv('/home/mw/input/Twitter4903/test.csv')

sub = pd.read_csv('/home/mw/input/Twitter4903/submission.csv')

print('最大内容长度 %d'%(max(train['content'].str.len())))

- 1

- 2

- 3

- 4

- 5

- 6

最大内容长度 166

- 1

3.定义数据集

# 定义读取函数

def read(pd_data):

for index, item in pd_data.iterrows():

yield {

'text': item['content'], 'label': item['label'], 'qid': item['tweet_id'].strip('tweet_')}

- 1

- 2

- 3

- 4

- 5

# 分割训练集、测试机

from paddle.io import Dataset, Subset

from paddlenlp.datasets import MapDataset

from paddlenlp.datasets import load_dataset

dataset = load_dataset(read, pd_data=train,lazy=False)

dev_ds = Subset(dataset=dataset, indices=[i for i in range(len(dataset)) if i % 5== 1])

train_ds = Subset(dataset=dataset, indices=[i for i in range(len(dataset)) if i % 5 != 1])

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

# 查看训练集

for i in range(5):

print(train_ds[i])

- 1

- 2

- 3

{'text': '@tiffanylue i know i was listenin to bad habit earlier and i started freakin at his part =[', 'label': 0, 'qid': '0'}

{'text': 'Funeral ceremony...gloomy friday...', 'label': 1, 'qid': '2'}

{'text': 'wants to hang out with friends SOON!', 'label': 2, 'qid': '3'}

{'text': '@dannycastillo We want to trade with someone who has Houston tickets, but no one will.', 'label': 3, 'qid': '4'}

{'text': "I should be sleep, but im not! thinking about an old friend who I want. but he's married now. damn, & he wants me 2! scandalous!", 'label': 1, 'qid': '5'}

- 1

- 2

- 3

- 4

- 5

# 在转换为MapDataset类型

train_ds = MapDataset(train_ds)

dev_ds = MapDataset(dev_ds)

print(len(train_ds))

print(len(dev_ds))

- 1

- 2

- 3

- 4

- 5

24000

6000

- 1

- 2

四、模型选择

近年来,大量的研究表明基于大型语料库的预训练模型(Pretrained Models, PTM)可以学习通用的语言表示,有利于下游NLP任务,同时能够避免从零开始训练模型。随着计算能力的发展,深度模型的出现(即 Transformer)和训练技巧的增强使得 PTM 不断发展,由浅变深。

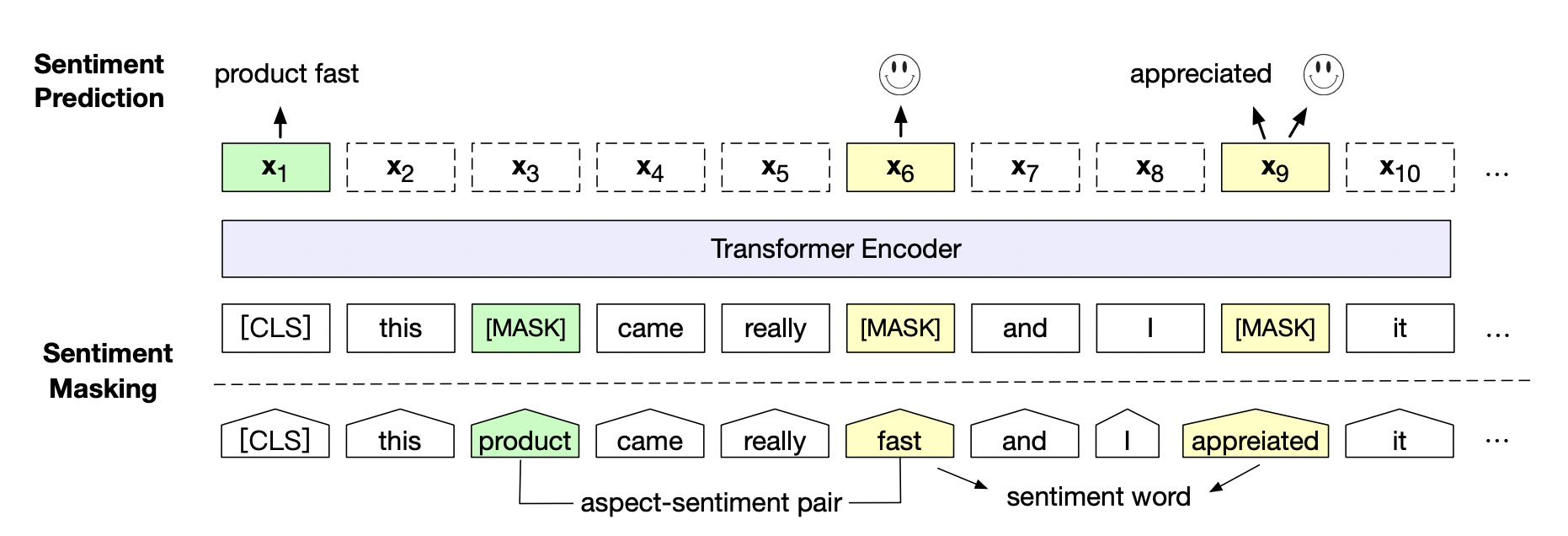

情感预训练模型SKEP(Sentiment Knowledge Enhanced Pre-training for Sentiment Analysis)。SKEP利用情感知识增强预训练模型, 在14项中英情感分析典型任务上全面超越SOTA,此工作已经被ACL 2020录用。SKEP是百度研究团队提出的基于情感知识增强的情感预训练算法,此算法采用无监督方法自动挖掘情感知识,然后利用情感知识构建预训练目标,从而让机器学会理解情感语义。SKEP为各类情感分析任务提供统一且强大的情感语义表示。

论文地址:https://arxiv.org/abs/2005.05635

百度研究团队在三个典型情感分析任务,句子级情感分类(Sentence-level Sentiment Classification),评价目标级情感分类