
Installing Scala and Spark on a Virtual Machine


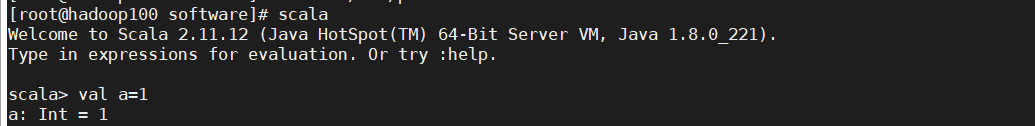

I. Installing Scala

1. Download and extract the Scala package

Download the package from the official website yourself.

tar -zxvf scala-2.11.12.tgz
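The archive unpacks into a directory named after the release (scala-2.11.12 here), while the SCALA_HOME used later points at /opt/software/scala2.11. The article does not show how the directory got that name. A minimal sketch, assuming the archive was extracted under /opt/software and the directory renamed, might look like this; `install_archive` is a hypothetical helper, not something shipped with Scala.

```shell
# Hypothetical helper: extract a .tgz archive next to DEST and rename its
# top-level directory to DEST (e.g. scala-2.11.12 -> scala2.11).
install_archive() {
  archive="$1"
  dest="$2"
  parent=$(dirname "$dest")
  mkdir -p "$parent"
  # Name of the top-level directory stored in the archive
  top=$(tar -tzf "$archive" | head -n 1 | cut -d/ -f1)
  tar -zxf "$archive" -C "$parent"
  # Rename only if the extracted name differs from the wanted one
  if [ "$parent/$top" != "$dest" ]; then
    mv "$parent/$top" "$dest"
  fi
}

# Example call (assumed paths, matching the SCALA_HOME in this article):
# install_archive scala-2.11.12.tgz /opt/software/scala2.11
```

The same helper would work for the Spark archive with /opt/software/spark247 as the destination, again assuming that is how the directory was named.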

2. Configure environment variables

export SCALA_HOME=/opt/software/scala2.11

export PATH=$PATH:$SCALA_HOME/bin
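The article does not say which file these exports go into; /etc/profile or ~/.bashrc are common choices, but that is an assumption. Whichever file is used, a quick check along these lines can confirm that the Scala bin directory really ended up on PATH in the current shell.

```shell
# Values from the article
export SCALA_HOME=/opt/software/scala2.11
export PATH=$PATH:$SCALA_HOME/bin

# Confirm that $SCALA_HOME/bin is now one of the PATH entries
case ":$PATH:" in
  *":$SCALA_HOME/bin:"*) echo "on PATH: $SCALA_HOME/bin" ;;
  *) echo "missing from PATH: $SCALA_HOME/bin" ;;
esac
```

Wrapping PATH in colons before matching avoids false hits on a longer directory name that merely starts with the same prefix.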

3. Start Scala

scala

II. Installing Spark

1. Download and extract the Spark package

Download the Spark package yourself.

tar -zxvf spark-2.4.7-bin-hadoop2.6.tgz

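2. Edit the configuration file

Rename the template file: conf/spark-env.sh.template becomes conf/spark-env.sh.

Edit spark-env.sh: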

export JAVA_HOME=/root/software/jdk1.8.0_221

export SCALA_HOME=/opt/software/scala2.11

export SPARK_HOME=/opt/software/spark247

export HADOOP_HOME=/root/software/hadoop

export HADOOP_CONF_DIR=/root/software/hadoop/etc/hadoop

export SPARK_MASTER_IP=hadoop100

export SPARK_EXECUTOR_MEMORY=1G
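The rename and edit can also be scripted. The following is only a sketch: `write_spark_env` is a hypothetical helper, and it assumes the Spark conf directory is passed in as its first argument. It copies the shipped template rather than renaming it, so the original stays available, and then appends the settings listed above.

```shell
# Hypothetical helper: create conf/spark-env.sh from the shipped template
# and append the settings used in this article.
write_spark_env() {
  conf_dir="$1"
  # Copy the template once if spark-env.sh does not exist yet
  if [ ! -f "$conf_dir/spark-env.sh" ] && [ -f "$conf_dir/spark-env.sh.template" ]; then
    cp "$conf_dir/spark-env.sh.template" "$conf_dir/spark-env.sh"
  fi
  # Quoted heredoc: the lines are written literally, nothing is expanded
  cat >> "$conf_dir/spark-env.sh" <<'EOF'
export JAVA_HOME=/root/software/jdk1.8.0_221
export SCALA_HOME=/opt/software/scala2.11
export SPARK_HOME=/opt/software/spark247
export HADOOP_HOME=/root/software/hadoop
export HADOOP_CONF_DIR=/root/software/hadoop/etc/hadoop
export SPARK_MASTER_IP=hadoop100
export SPARK_EXECUTOR_MEMORY=1G
EOF
}

# Example call (path assumed from the SPARK_HOME above):
# write_spark_env /opt/software/spark247/conf
```

Note that the helper appends on every call, so running it twice would duplicate the lines.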

3. Configure environment variables

export SPARK_HOME=/opt/software/spark247

export SPARK_CONF_DIR=$SPARK_HOME/conf

export PATH=$PATH:$SPARK_HOME/bin

4. Start Spark

spark-shell
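If spark-shell or scala fails with "command not found", the PATH entries from the earlier steps are the first thing to check. A small sketch for that follows; `report_cmd` is a hypothetical helper name.

```shell
# Hypothetical helper: report whether a command resolves on PATH and where
report_cmd() {
  if found=$(command -v "$1"); then
    echo "$1 -> $found"
  else
    echo "$1 not found on PATH"
    return 1
  fi
}

# Example calls:
# report_cmd scala
# report_cmd spark-shell
```

A "not found" result usually means the profile file was edited but not reloaded in the current shell, or that the directory name in SCALA_HOME or SPARK_HOME does not match what is on disk.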

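For a cluster, edit the slaves file (similar to the slaves or workers file in a fully distributed Hadoop setup)

and add the IP addresses of the cluster nodes to it.

In addition, SPARK_MASTER_IP in spark-env.sh must point to the master node on every machine, and every node needs Scala and the other components installed.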
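As a sketch of the cluster step, the slaves file is simply one worker host or IP per line. `write_slaves` below is a hypothetical helper, and the host names in the example call are made up for illustration; only hadoop100 appears in this article.

```shell
# Hypothetical helper: write one worker host per line into conf/slaves
write_slaves() {
  conf_dir="$1"
  shift
  : > "$conf_dir/slaves"   # start from an empty file
  for host in "$@"; do
    echo "$host" >> "$conf_dir/slaves"
  done
}

# Example call; hadoop101 and hadoop102 are made-up worker names
# write_slaves /opt/software/spark247/conf hadoop101 hadoop102
```

Once the same configuration is present on every node, a standalone cluster is typically started from the master with the sbin/start-all.sh script under SPARK_HOME.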