iOS: Microsoft speech-to-text with LAME audio compression

Author: IT小白 | 2024-07-29 12:02:12


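In iOS development: use the Microsoft Speech SDK for speech-to-text, then compress the recording and return it.

The project needed real-time speech-to-text from the microphone through the Microsoft SDK. If recognition fails, the original audio file is sent through a network request instead. The file is finally uploaded to Alibaba Cloud for storage, so to keep transfer time down it is compressed to MP3 before upload.

Difficulties encountered

- Microsoft's sample only produces text and does not keep the audio, so the whole path from recording, to pushing the stream, to receiving the result has to be implemented by hand.

- The project is a uni-app project rather than a native one, so the audio session must be activated before recording works.

- Compression uses the LAME library. Most examples online read a finished file, compress it, and save it again; encoding audio directly while it is being recorded is rarely shown, so it is written down here for later use.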

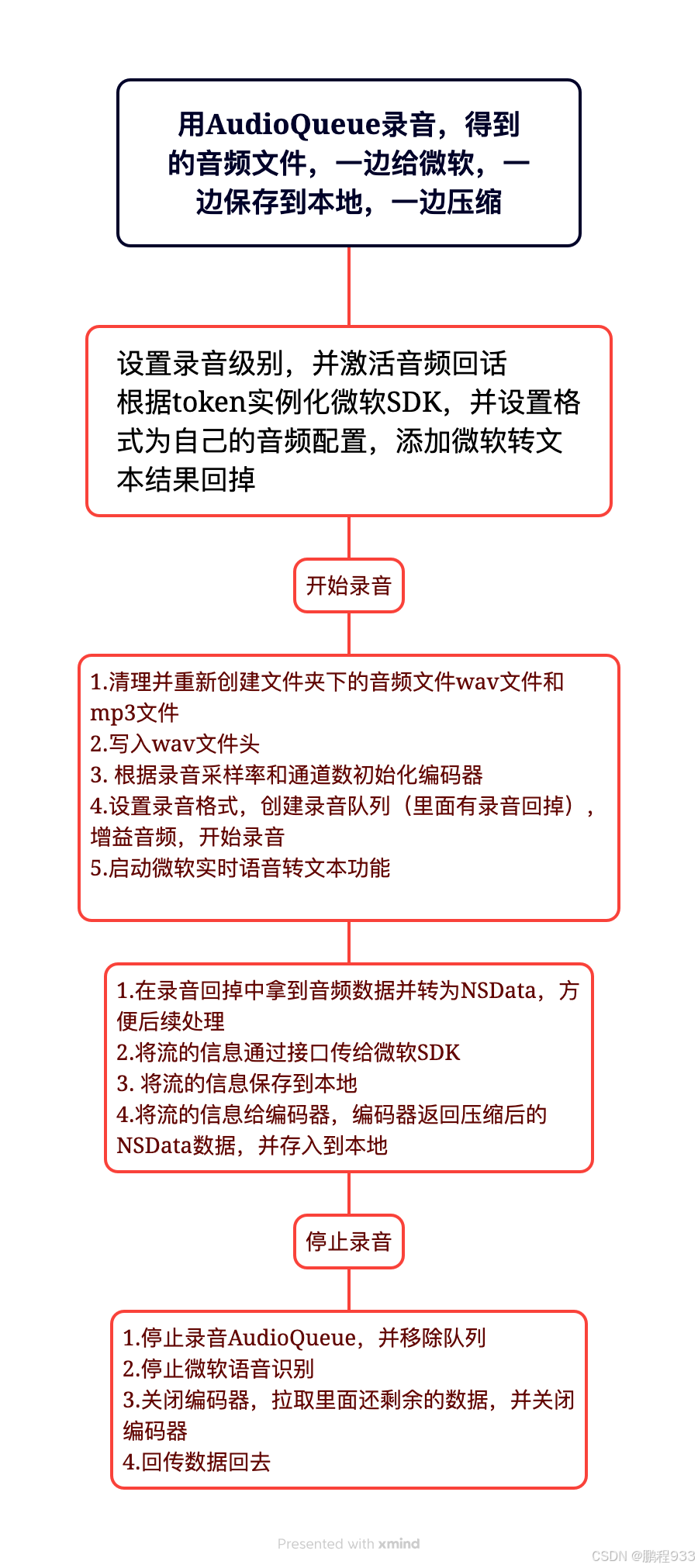

Flowchart
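The flow described above (one recording source feeding a file writer, an MP3 encoder, and the speech SDK) amounts to a fan-out of each PCM chunk to several consumers. The sketch below shows that shape in plain C. All names here (`pcm_sink_fn`, `pcm_fanout`, `count_samples`) are hypothetical and not from the original project; real sinks would wrap the file handle, the encoder, and the push stream.

```c
#include <stddef.h>
#include <stdint.h>

/* A consumer of raw PCM chunks (hypothetical type, not from the article). */
typedef void (*pcm_sink_fn)(const int16_t *samples, size_t count, void *ctx);

typedef struct {
    pcm_sink_fn fn;
    void *ctx;
} pcm_sink;

#define MAX_SINKS 4

typedef struct {
    pcm_sink sinks[MAX_SINKS];
    size_t sink_count;
} pcm_fanout;

/* Register a sink; returns 0 on success, -1 if the table is full or fn is NULL. */
static int pcm_fanout_add(pcm_fanout *f, pcm_sink_fn fn, void *ctx) {
    if (f->sink_count >= MAX_SINKS || fn == NULL) return -1;
    f->sinks[f->sink_count].fn = fn;
    f->sinks[f->sink_count].ctx = ctx;
    f->sink_count++;
    return 0;
}

/* Called once per recorded buffer: hand the same chunk to every sink in order. */
static void pcm_fanout_dispatch(const pcm_fanout *f, const int16_t *samples, size_t count) {
    for (size_t i = 0; i < f->sink_count; i++) {
        f->sinks[i].fn(samples, count, f->sinks[i].ctx);
    }
}

/* Example sink for illustration: counts how many samples it has seen. */
static void count_samples(const int16_t *samples, size_t count, void *ctx) {
    (void)samples;
    *(size_t *)ctx += count;
}
```

Keeping the sinks behind one dispatch call means the recording callback stays short, and a failing consumer (for example the recognizer) can be dropped without touching the file-saving path.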

Implementation steps

Recording

```objc
#import <AVFoundation/AVFoundation.h>
#import <AudioToolbox/AudioToolbox.h>

// Size of each buffer (not used below; the real size comes from DeriveBufferSize)
#define kBufferSize 2048
// Number of buffers
#define kNumberBuffers 3

// Recorder state: recording format, queue, and buffers
typedef struct {
    AudioStreamBasicDescription dataFormat;
    AudioQueueRef queue;
    AudioQueueBufferRef buffers[kNumberBuffers];
    UInt32 bufferByteSize;
    __unsafe_unretained id selfRef;
} AQRecorderState;

AQRecorderState recorderState = {0};

// Forward declarations for the C helpers defined further down
void DeriveBufferSize(AudioQueueRef audioQueue, AudioStreamBasicDescription *ASBDesc,
                      Float64 seconds, UInt32 *outBufferSize);
void HandleInputBuffer(void *aqData, AudioQueueRef inAQ, AudioQueueBufferRef inBuffer,
                       const AudioTimeStamp *inStartTime, UInt32 inNumPackets,
                       const AudioStreamPacketDescription *inPacketDesc);

// The methods below belong inside the recorder class's @implementation.

- (instancetype)init {
    self = [super init];
    if (self) {
        // Audio format: 16 kHz, mono, 16-bit signed linear PCM
        recorderState.dataFormat.mFormatID = kAudioFormatLinearPCM;
        recorderState.dataFormat.mSampleRate = 16000.0;
        recorderState.dataFormat.mChannelsPerFrame = 1;
        recorderState.dataFormat.mBitsPerChannel = 16;
        recorderState.dataFormat.mBytesPerPacket =
            recorderState.dataFormat.mBytesPerFrame =
                recorderState.dataFormat.mChannelsPerFrame * sizeof(SInt16);
        recorderState.dataFormat.mFramesPerPacket = 1;
        recorderState.dataFormat.mFormatFlags =
            kLinearPCMFormatFlagIsSignedInteger | kLinearPCMFormatFlagIsPacked;
        // Lets the C callback reach this object
        recorderState.selfRef = self;
    }
    return self;
}

- (void)configureAudioSession {
    AVAudioSession *session = [AVAudioSession sharedInstance];
    NSError *error = nil;
    // Set the audio session category
    if (![session setCategory:AVAudioSessionCategoryPlayAndRecord error:&error]) {
        NSLog(@"Error setting category: %@", error.localizedDescription);
    }
    // Activate the audio session
    if (![session setActive:YES error:&error]) {
        NSLog(@"Error activating session: %@", error.localizedDescription);
    }
}

// Start recording
- (void)startRecording {
    // Activate the audio session first
    [self configureAudioSession];
    // Create the recording queue
    OSStatus status = AudioQueueNewInput(&recorderState.dataFormat, HandleInputBuffer,
                                         &recorderState, NULL, kCFRunLoopCommonModes, 0,
                                         &recorderState.queue);
    if (status != noErr) {
        NSLog(@"AudioQueueNewInput failed with error: %d", (int)status);
        return;
    }
    // Kept from the original. kAudioQueueParam_Volume is documented as a playback
    // parameter, so it probably has no effect on an input queue.
    AudioQueueSetParameter(recorderState.queue, kAudioQueueParam_Volume, 1.0);
    // Work out the buffer size for 0.5 s of audio
    DeriveBufferSize(recorderState.queue, &recorderState.dataFormat, 0.5,
                     &recorderState.bufferByteSize);
    // Allocate and enqueue the buffers
    for (int i = 0; i < kNumberBuffers; i++) {
        AudioQueueAllocateBuffer(recorderState.queue, recorderState.bufferByteSize,
                                 &recorderState.buffers[i]);
        AudioQueueEnqueueBuffer(recorderState.queue, recorderState.buffers[i], 0, NULL);
    }
    status = AudioQueueStart(recorderState.queue, NULL);
    if (status != noErr) {
        NSLog(@"AudioQueueStart failed with error: %d", (int)status);
    }
}

// Stop recording
- (void)stopRecording {
    AudioQueueStop(recorderState.queue, true);
    AudioQueueDispose(recorderState.queue, true);
    recorderState.queue = NULL;
}

// Compute the buffer size
void DeriveBufferSize(AudioQueueRef audioQueue, AudioStreamBasicDescription *ASBDesc,
                      Float64 seconds, UInt32 *outBufferSize) {
    static const UInt32 maxBufferSize = 0x50000; // upper limit for a buffer
    UInt32 maxPacketSize = ASBDesc->mBytesPerPacket;
    if (maxPacketSize == 0) {
        // Variable-bit-rate format: ask the queue for the largest packet size
        UInt32 propertySize = sizeof(maxPacketSize);
        AudioQueueGetProperty(audioQueue, kAudioQueueProperty_MaximumOutputPacketSize,
                              &maxPacketSize, &propertySize);
    }
    Float64 numBytesForTime = ASBDesc->mSampleRate * maxPacketSize * seconds;
    *outBufferSize = (UInt32)(numBytesForTime < maxBufferSize ? numBytesForTime : maxBufferSize);
}

// Input callback: each call delivers one buffer of recorded PCM data
void HandleInputBuffer(void *aqData, AudioQueueRef inAQ, AudioQueueBufferRef inBuffer,
                       const AudioTimeStamp *inStartTime, UInt32 inNumPackets,
                       const AudioStreamPacketDescription *inPacketDesc) {
    AQRecorderState *pAqData = (AQRecorderState *)aqData;
    // Only process the buffer if it contains data
    if (inNumPackets > 0) {
        NSData *audioData = [NSData dataWithBytes:inBuffer->mAudioData
                                           length:inBuffer->mAudioDataByteSize];
        // Log the size only; printing every buffer's contents is very slow
        NSLog(@"Audio data: %lu bytes", (unsigned long)audioData.length);
        // Here: save the data to file
        // Here: encode the data
        // Here: push the data to the Microsoft SDK
    }
    // Put the buffer back on the queue
    AudioQueueEnqueueBuffer(pAqData->queue, inBuffer, 0, NULL);
}
```
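To make the buffer arithmetic in `DeriveBufferSize` concrete, here is a minimal standalone sketch of the same calculation for constant-bit-rate PCM. The function name `pcm_buffer_bytes` is an assumption made for illustration; it is not part of Audio Queue Services.

```c
#include <stdint.h>

/* Bytes needed to hold `seconds` of constant-bit-rate PCM, capped at max_bytes.
   Hypothetical helper mirroring the arithmetic of DeriveBufferSize. */
static uint32_t pcm_buffer_bytes(double sample_rate, uint32_t channels,
                                 uint32_t bits_per_sample, double seconds,
                                 uint32_t max_bytes) {
    uint32_t bytes_per_frame = channels * (bits_per_sample / 8);
    double wanted = sample_rate * bytes_per_frame * seconds;
    return wanted < (double)max_bytes ? (uint32_t)wanted : max_bytes;
}
```

With the article's format (16000 Hz, mono, 16-bit) and a 0.5 s window, this works out to 16000 × 2 × 0.5 = 16000 bytes per buffer, well under the 0x50000 cap, so each callback delivers roughly half a second of audio.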


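Microsoft SDK initialization and stream pushing

Most of this follows Microsoft's sample. The difference is that the recognizer is created with a custom audio configuration backed by a push stream. Keep a reference to that stream, and push data into it from the recording callback.

```objc
#import <MicrosoftCognitiveServicesSpeech/SPXSpeechApi.h>

- (void)setUpKey:(NSString *)token service:(NSString *)service lang:(NSString *)lang {
    // Build the configuration from an authorization token and a region.
    // Microsoft offers other ways to create the configuration; those work too.
    SPXSpeechConfiguration *speechConfig =
        [[SPXSpeechConfiguration alloc] initWithAuthorizationToken:token region:service];
    // Alternative: build the configuration from a subscription key
    // speechConfig = [[SPXSpeechConfiguration alloc] initWithSubscription:token region:service];

    // Set the language, in en-US format
    [speechConfig setSpeechRecognitionLanguage:lang];

    // Format of the data sent to Microsoft: 16000 Hz, 16-bit, mono
    SPXAudioStreamFormat *audioFormat =
        [[SPXAudioStreamFormat alloc] initUsingPCMWithSampleRate:16000 bitsPerSample:16 channels:1];
    // Create the push stream and keep it; audio is pushed to the SDK through it later
    self.audioInputStream = [[SPXPushAudioInputStream alloc] initWithAudioFormat:audioFormat];
    // Audio configuration backed by the push stream
    SPXAudioConfiguration *audioConfig =
        [[SPXAudioConfiguration alloc] initWithStreamInput:self.audioInputStream];
    // Create the recognizer from the speech and audio configurations
    self.recognizer = [[SPXSpeechRecognizer alloc] initWithSpeechConfiguration:speechConfig
                                                            audioConfiguration:audioConfig];

    __weak typeof(self) weakSelf = self; // avoid a retain cycle through the recognizer

    // Handler for final recognition results
    [self.recognizer addRecognizedEventHandler:^(SPXSpeechRecognizer *recognizer,
                                                 SPXSpeechRecognitionEventArgs *eventArgs) {
        NSString *recognizedText = eventArgs.result.text;
        NSLog(@"Final recognized text: %@", recognizedText);
        // Handle the final result here. appendString: is used because the text
        // is data, not a format string.
        if (recognizedText != nil) {
            [weakSelf.speechToTextResult appendString:recognizedText];
        }
    }];

    // Handler for intermediate results
    [self.recognizer addRecognizingEventHandler:^(SPXSpeechRecognizer *recognizer,
                                                  SPXSpeechRecognitionEventArgs *eventArgs) {
        NSString *intermediateText = eventArgs.result.text;
        NSLog(@"Intermediate recognized text: %@", intermediateText);
        // Handle the intermediate result here
    }];

    // Handler for cancellation
    [self.recognizer addCanceledEventHandler:^(SPXSpeechRecognizer *recognizer,
                                               SPXSpeechRecognitionCanceledEventArgs *eventArgs) {
        NSLog(@"Recognition canceled. Reason: %ld", (long)eventArgs.reason);
        if (eventArgs.errorDetails != nil) {
            NSLog(@"Error details: %@", eventArgs.errorDetails);
        }
    }];
}

- (void)startRecording {
    [self.recognizer startContinuousRecognition];
}

- (void)stopRecording {
    [self.recognizer stopContinuousRecognition];
}

// Input callback: pass the recorded data on to Microsoft.
// A C function has no `self`, so the owning object is read from the state struct;
// selfRef must be assigned (for example recorderState.selfRef = self in init)
// before recording starts.
void HandleInputBuffer(void *aqData, AudioQueueRef inAQ, AudioQueueBufferRef inBuffer,
                       const AudioTimeStamp *inStartTime, UInt32 inNumPackets,
                       const AudioStreamPacketDescription *inPacketDesc) {
    AQRecorderState *pAqData = (AQRecorderState *)aqData;
    if (inNumPackets > 0) {
        NSData *audioData = [NSData dataWithBytes:inBuffer->mAudioData
                                           length:inBuffer->mAudioDataByteSize];
        id owner = pAqData->selfRef;
        [[owner audioInputStream] write:audioData];
    }
    AudioQueueEnqueueBuffer(pAqData->queue, inBuffer, 0, NULL);
}
```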


Saving the files

- Saving is relatively simple: when recording starts, delete the audio files from the previous run and create new ones.

- A WAV file needs a header before it will play correctly.

```objc
#import "SaveAudioFile.h"

#define isValidString(string) (string && [string isEqualToString:@""] == NO)

// WAV file header layout (44 bytes)
typedef struct {
    char riff[4];
    UInt32 fileSize;
    char wave[4];
    char fmt[4];
    UInt32 fmtSize;
    UInt16 formatTag;
    UInt16 channels;
    UInt32 samplesPerSec;
    UInt32 avgBytesPerSec;
    UInt16 blockAlign;
    UInt16 bitsPerSample;
    char data[4];
    UInt32 dataSize;
} WAVHeader;

@implementation SaveAudioFile

/**
 * Remove the files left over from the previous recording
 */
- (void)cleanFile {
    NSFileManager *fileManager = [NSFileManager defaultManager];
    if (isValidString(self.mp3Path) && [fileManager fileExistsAtPath:self.mp3Path]) {
        [fileManager removeItemAtPath:self.mp3Path error:nil];
        NSLog(@"Previous .mp3 file deleted");
    }
    if (isValidString(self.wavPath) && [fileManager fileExistsAtPath:self.wavPath]) {
        [fileManager removeItemAtPath:self.wavPath error:nil];
        NSLog(@"Previous .wav file deleted");
    }
}

/**
 * Build the paths where the recording is saved
 *
 * @return URL of the MP3 file
 */
- (NSURL *)getSavePath {
    // Create a folder named AudioData under Documents
    NSString *path = [[NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES) lastObject]
        stringByAppendingPathComponent:@"AudioData"];
    NSLog(@"%@", path);
    NSFileManager *fileManager = [NSFileManager defaultManager];
    BOOL isDir = NO;
    BOOL isDirExist = [fileManager fileExistsAtPath:path isDirectory:&isDir];
    if (!(isDirExist && isDir)) {
        BOOL created = [fileManager createDirectoryAtPath:path
                              withIntermediateDirectories:YES
                                               attributes:nil
                                                    error:nil];
        if (created) {
            NSLog(@"Folder created at %@", path);
        } else {
            NSLog(@"Failed to create folder!");
        }
    }
    NSString *fileName = @"record";
    NSString *wavFileName = [NSString stringWithFormat:@"%@.wav", fileName];
    NSString *mp3FileName = [NSString stringWithFormat:@"%@.mp3", fileName];
    self.wavPath = [path stringByAppendingPathComponent:wavFileName];
    self.mp3Path = [path stringByAppendingPathComponent:mp3FileName];
    NSLog(@"file path: %@", self.mp3Path);
    return [NSURL fileURLWithPath:self.mp3Path];
}

- (void)startWritingHeaders {
    [self cleanFile];
    [self getSavePath];

    // Write the WAV header
    WAVHeader header;
    memcpy(header.riff, "RIFF", 4);
    header.fileSize = 0;  // must be patched when recording ends (file length - 8)
    memcpy(header.wave, "WAVE", 4);
    memcpy(header.fmt, "fmt ", 4);
    header.fmtSize = 16;
    header.formatTag = 1;  // PCM
    header.channels = 1;
    header.samplesPerSec = 16000;
    header.avgBytesPerSec = 16000 * 2;
    header.blockAlign = 2;
    header.bitsPerSample = 16;
    memcpy(header.data, "data", 4);
    header.dataSize = 0;  // must be patched when recording ends (file length - 44)

    // Create the WAV file and write the header
    [[NSFileManager defaultManager] createFileAtPath:self.wavPath contents:nil attributes:nil];
    self.audioFileHandle = [NSFileHandle fileHandleForWritingAtPath:self.wavPath];
    [self.audioFileHandle writeData:[NSData dataWithBytes:&header length:sizeof(header)]];

    // Create the MP3 file
    [[NSFileManager defaultManager] createFileAtPath:self.mp3Path contents:nil attributes:nil];
    self.audioFileHandle2 = [NSFileHandle fileHandleForWritingAtPath:self.mp3Path];
}

- (void)saveAudioFile:(NSData *)data type:(NSString *)type {
    if ([type isEqualToString:@"wav"]) {
        // Append raw PCM data to the WAV file
        [self.audioFileHandle writeData:data];
    } else {
        // Append the encoded data to the MP3 file
        [self.audioFileHandle2 writeData:data];
    }
}

@end
```
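The header above is written with `fileSize` and `dataSize` set to 0 and a note that they are filled in when recording ends, but that final step is not shown. The sketch below is one way to do it in plain C, assuming the 44-byte header layout used above. The names `put_le32` and `wav_patch_sizes` are hypothetical; the original project may well do this through `NSFileHandle` instead.

```c
#include <stdint.h>
#include <stdio.h>

enum { WAV_HEADER_BYTES = 44 };

/* Store a 32-bit value in little-endian byte order, as RIFF requires. */
static void put_le32(unsigned char *dst, uint32_t v) {
    dst[0] = (unsigned char)(v & 0xFF);
    dst[1] = (unsigned char)((v >> 8) & 0xFF);
    dst[2] = (unsigned char)((v >> 16) & 0xFF);
    dst[3] = (unsigned char)((v >> 24) & 0xFF);
}

/* Patch the RIFF size (offset 4) and the data size (offset 40) of a finished
   recording. `fp` must be open for both reading and writing in binary mode.
   Returns 0 on success, -1 on I/O error or if the file is shorter than a header. */
static int wav_patch_sizes(FILE *fp) {
    unsigned char le[4];
    long total;

    if (fp == NULL || fseek(fp, 0, SEEK_END) != 0) return -1;
    total = ftell(fp);
    if (total < WAV_HEADER_BYTES) return -1;

    /* RIFF chunk size: everything after the 8-byte "RIFF" + size prefix */
    put_le32(le, (uint32_t)(total - 8));
    if (fseek(fp, 4, SEEK_SET) != 0 || fwrite(le, 1, 4, fp) != 4) return -1;

    /* data chunk size: everything after the 44-byte header */
    put_le32(le, (uint32_t)(total - WAV_HEADER_BYTES));
    if (fseek(fp, 40, SEEK_SET) != 0 || fwrite(le, 1, 4, fp) != 4) return -1;

    return fflush(fp) == 0 ? 0 : -1;
}
```

It would be called once after the last PCM chunk has been written, for example on a file opened with `fopen(path, "r+b")`. Some players tolerate zero sizes, but stricter ones treat such a file as empty.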


Encoding PCM data with the LAME library

- Download the LAME library and import it into the project; see the article at https://www.cnblogs.com/XYQ-208910/p/7650759.html

- Using LAME breaks down into three parts

- Initialize LAME and set the sample rate, channel count, bit rate, and quality level

- Pass in the raw audio data and get back the encoded MP3 data

- At the end, flush whatever data LAME is still holding, then close LAME

```objc
//
//  LameEncoderMp3.m
//  SpeechUntil
//
//  Created by 肖鹏程 on 2024/7/25.
//

#import "LameEncoderMp3.h"

@implementation LameEncoderMp3

- (void)settingFormat:(int)sampleRate channels:(int)channels {
    // Initialize the LAME encoder and set the format
    self.lame = lame_init();
    lame_set_in_samplerate(self.lame, sampleRate);
    lame_set_num_channels(self.lame, channels);
    lame_set_brate(self.lame, 16);  // bit rate: 16 kbps
    lame_set_mode(self.lame, channels == 1 ? MONO : STEREO);
    lame_set_quality(self.lame, 7);  // 0 = best quality (slowest), 9 = worst quality (fastest)
    lame_init_params(self.lame);
    self.channels = channels;
}

- (NSData *)encodePCMToMP3:(NSData *)pcmData {
    // Pointer to the PCM data and its length in 16-bit samples
    const short *pcmBuffer = (const short *)[pcmData bytes];
    int pcmLength = (int)([pcmData length] / sizeof(short));
    NSLog(@"pcmLength %lu", (unsigned long)[pcmData length]);

    // Allocate the MP3 buffer (worst case: 1.25 * samples + 7200 bytes)
    int mp3BufferSize = (int)(1.25 * pcmLength) + 7200;
    unsigned char *mp3Buffer = (unsigned char *)malloc(mp3BufferSize);
    // Make sure the allocation succeeded
    if (mp3Buffer == NULL) {
        NSLog(@"Failed to allocate memory for MP3 buffer");
        return nil;
    }

    // Encode PCM to MP3.
    // This is the mono path; the right-channel argument is ignored in mono mode.
    // For interleaved stereo input, call this instead:
    // lame_encode_buffer_interleaved(lame,
    //                                recordingData,
    //                                numSamples / 2,  // samples per channel
    //                                mp3Buffer,
    //                                mp3BufferSize);
    int mp3Length = lame_encode_buffer(self.lame, pcmBuffer, pcmBuffer, pcmLength,
                                       mp3Buffer, mp3BufferSize);
    if (mp3Length < 0) {
        NSLog(@"LAME encoding error: %d", mp3Length);
        free(mp3Buffer);
        return nil;
    }

    // Wrap the MP3 bytes
    NSData *mp3Data = [NSData dataWithBytes:mp3Buffer length:mp3Length];
    NSLog(@"mp3Length %lu", (unsigned long)[mp3Data length]);

    // Clean up
    free(mp3Buffer);
    return mp3Data;
}

- (NSData *)closeLame {
    // Flush LAME's internal buffer
    unsigned char mp3Buffer[7200];
    int flushLength = lame_encode_flush(self.lame, mp3Buffer, sizeof(mp3Buffer));
    NSData *flushData = nil;
    if (flushLength > 0) {
        // The caller appends this tail to the MP3 data already written
        flushData = [NSData dataWithBytes:mp3Buffer length:flushLength];
    } else if (flushLength < 0) {
        NSLog(@"LAME flushing error: %d", flushLength);
    }
    // Close the encoder
    lame_close(self.lame);
    self.lame = NULL;
    return flushData;
}

@end
```
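Two pieces of arithmetic sit behind the encoder code: the worst-case output buffer size for one encode call (1.25 × samples + 7200 bytes, the figure given in the comments of `lame.h`), and the data-rate saving that motivates compressing before upload. The sketch below spells both out in plain C. The function names are hypothetical, and the MP3 rate assumes a constant bit rate equal to the configured kbps.

```c
#include <stddef.h>

/* Worst-case MP3 bytes produced by one encode call on `samples_per_channel`
   input samples: 1.25 * samples + 7200. */
static size_t mp3_worst_case_bytes(size_t samples_per_channel) {
    return samples_per_channel + samples_per_channel / 4 + 7200;
}

/* Bytes per second of uncompressed PCM. */
static unsigned long pcm_bytes_per_second(unsigned long sample_rate,
                                          unsigned channels, unsigned bits) {
    return sample_rate * channels * (bits / 8);
}

/* Bytes per second of MP3 at a constant bit rate given in kbps. */
static unsigned long mp3_bytes_per_second(unsigned kbps) {
    return (unsigned long)kbps * 1000UL / 8UL;
}
```

For the settings used here, PCM at 16000 Hz, mono, 16-bit is 32000 bytes per second, while MP3 at 16 kbps is about 2000 bytes per second, roughly a 16× reduction. A half-second chunk of 8000 samples needs an output buffer of at most 17200 bytes.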


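Disclaimer: this article was contributed by a user and does not represent the position of 【wpsshop博客】. Copyright belongs to the original author, and this site assumes no legal liability. If you find infringing content, please contact us. When reposting, credit the source: https://www.wpsshop.cn/w/IT小白/article/detail/898642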
