- 1java 获取excel 中的数字签名_Excel VBA使用数字签名,让你代码开启执行无忧之路...

- 2es基本语句详解 查询语句详解_es查询语句

- 3西安交通大学915考研--编程题Java代码踩坑(2016年真题)_考研编程题

- 4“互联网+工业”下的大数据应用场景分析_工业互联网+大数据应用的判断是什么

- 58 个适用于电脑的顶级免费分区恢复软件_奇客分区大师

- 6给你一个项目,你会如何开展性能测试工作_项目管理系统如何进行性能测试

- 7项目管理 | 什么是项目管理计划?_项目管理计划管理

- 8pycharm社区版、专业版和教育版区别是什么 _pycharm教育版(1)_pycharm教育版和专业版区别

- 9C#实现Json序列化-反射与特性_c# jsonconvert.serializeobject

- 10GIT的使用方法(安装,远程仓库的绑定,文件删除以及更新操作)_git lfs 如何更新远端的文件

Docker搭建kafka集群_docker安装kafka集群

赞

踩

集群规划

镜像版本

-

Zookeeper采用zookeeper

-

Kafka采用wurstmeister/kafka

-

Kafka-Manager采用scjtqs/kafka-manager

kafka为什么需要依赖zookeeper

ZooKeeper 作为给分布式系统提供协调服务的工具被 kafka 所依赖。在分布式系统中,消费者需要知道有哪些生产者是可用的,而如果每次消费者都需要和生产者建立连接并测试是否成功连接,那效率也太低了,显然是不可取的。而通过使用 ZooKeeper 协调服务,Kafka 就能将 Producer,Consumer,Broker 等结合在一起,同时借助 ZooKeeper,Kafka 就能够将所有组件在无状态的条件下建立起生产者和消费者的订阅关系,实现负载均衡

1.Brork管理

在Kafka的设计中,选择了使用Zookeeper来进行所有Broker的管理,体现在zookeeper上会有一个专门用来进行Broker服务器列表记录的点,节点路径为/brokers/ids

Zookeeper用一个专门节点保存Broker服务列表,也就是 /brokers/ids。

broker启动时,向Zookeeper发送注册请求,Zookeeper会在/brokers/ids下创建这个broker节点,如/brokers/ids/[0…N],并保存broker的IP地址和端口,Broker 创建的是临时节点,在连接断开时节点就会自动删除,所以在 ZooKeeper 上就可以通过 Broker 中节点的变化来得到 Broker 的可用性。

2、负载均衡

broker向Zookeeper进行注册后,生产者根据broker节点来感知broker服务列表变化,这样可以实现动态负载均衡。

3、Topic 信息

在 Kafka 中可以定义很多个 Topic,每个 Topic 又被分为很多个 Partition。一般情况下,每个 Partition 独立在存在一个 Broker 上,所有的这些 Topic 和 Broker 的对应关系都由 ZooKeeper 进行维护。

创建docker网络

docker network create kafaka-net --subnet 172.28.10.0/16

- 1

搭建zk集群

新建文件docker-compose-zk.yml

version: '3.4' services: zook1: image: zookeeper:latest #restart: always #自动重新启动 hostname: zook1 container_name: zook1 #容器名称,方便在rancher中显示有意义的名称 ports: - 39181:2181 #将本容器的zookeeper默认端口号映射出去 volumes: # 挂载数据卷 前面是宿主机即本机的目录位置,后面是docker的目录 - "/volumn/kafaka/zkcluster/zook1/data:/data" - "/volumn/kafaka/zkcluster/zook1/datalog:/datalog" - "/volumn/kafaka/zkcluster/zook1/logs:/logs" environment: ZOO_MY_ID: 1 #即是zookeeper的节点值,也是kafka的brokerid值 ZOO_SERVERS: server.1=zook1:2888:3888;2181 server.2=zook2:2888:3888;2181 server.3=zook3:2888:3888;2181 networks: docker-net: ipv4_address: 172.28.10.11 zook2: image: zookeeper:latest #restart: always #自动重新启动 hostname: zook2 container_name: zook2 #容器名称,方便在rancher中显示有意义的名称 ports: - 39182:2181 #将本容器的zookeeper默认端口号映射出去 volumes: - "/volumn/kafaka/zkcluster/zook2/data:/data" - "/volumn/kafaka/zkcluster/zook2/datalog:/datalog" - "/volumn/kafaka/zkcluster/zook2/logs:/logs" environment: ZOO_MY_ID: 2 #即是zookeeper的节点值,也是kafka的brokerid值 ZOO_SERVERS: server.1=zook1:2888:3888;2181 server.2=zook2:2888:3888;2181 server.3=zook3:2888:3888;2181 networks: docker-net: ipv4_address: 172.28.10.12 zook3: image: zookeeper:latest #restart: always #自动重新启动 hostname: zook3 container_name: zook3 #容器名称,方便在rancher中显示有意义的名称 ports: - 39183:2181 #将本容器的zookeeper默认端口号映射出去 volumes: - "/volumn/kafaka/zkcluster/zook3/data:/data" - "/volumn/kafaka/zkcluster/zook3/datalog:/datalog" - "/volumn/kafaka/zkcluster/zook3/logs:/logs" environment: ZOO_MY_ID: 3 #即是zookeeper的节点值,也是kafka的brokerid值 ZOO_SERVERS: server.1=zook1:2888:3888;2181 server.2=zook2:2888:3888;2181 server.3=zook3:2888:3888;2181 networks: docker-net: ipv4_address: 172.28.10.13 networks: docker-net: name: kafaka-net

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

启动

docker compose -p zookeeper -f ./docker-compose-zk.yml up -d

- 1

搭建kafka集群

新建三个挂载文件

挂载原因

主要防止kafka-topics.sh命令创建主题时报错

Error: Exception thrown by the agent : java.rmi.server.ExportException: Port already in use: 9966; nested exception is:

java.net.BindException: Address in use (Bind failed)

- 1

- 2

挂载步骤

mkdir -p /volumn/kafaka/kafka1/bin

mkdir -p /volumn/kafaka/kafka2/bin

mkdir -p /volumn/kafaka/kafka3/bin

vim kafka-run-class.sh

如果你的sh文件是从windows拷贝过来的,kafka启动时会报错:/bin/bash^M: bad interpreter: No such file or directory。vim *.sh 下执行:set ff=unix即可

#!/bin/bash # Licensed to the Apache Software Foundation (ASF) under one or more # contributor license agreements. See the NOTICE file distributed with # this work for additional information regarding copyright ownership. # The ASF licenses this file to You under the Apache License, Version 2.0 # (the "License"); you may not use this file except in compliance with # the License. You may obtain a copy of the License at # # http://www.apache.org/licenses/LICENSE-2.0 # # Unless required by applicable law or agreed to in writing, software # distributed under the License is distributed on an "AS IS" BASIS, # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. # See the License for the specific language governing permissions and # limitations under the License. if [ $# -lt 1 ]; then echo "USAGE: $0 [-daemon] [-name servicename] [-loggc] classname [opts]" exit 1 fi # CYGWIN == 1 if Cygwin is detected, else 0. if [[ $(uname -a) =~ "CYGWIN" ]]; then CYGWIN=1 else CYGWIN=0 fi if [ -z "$INCLUDE_TEST_JARS" ]; then INCLUDE_TEST_JARS=false fi # Exclude jars not necessary for running commands. regex="(-(test|test-sources|src|scaladoc|javadoc)\.jar|jar.asc)$" should_include_file() { if [ "$INCLUDE_TEST_JARS" = true ]; then return 0 fi file=$1 if [ -z "$(echo "$file" | egrep "$regex")" ] ; then return 0 else return 1 fi } ISKAFKASERVER="false" if [[ "$*" =~ "kafka.Kafka" ]]; then ISKAFKASERVER="true" fi base_dir=$(dirname $0)/.. if [ -z "$SCALA_VERSION" ]; then SCALA_VERSION=2.13.5 if [[ -f "$base_dir/gradle.properties" ]]; then SCALA_VERSION=`grep "^scalaVersion=" "$base_dir/gradle.properties" | cut -d= -f 2` fi fi if [ -z "$SCALA_BINARY_VERSION" ]; then SCALA_BINARY_VERSION=$(echo $SCALA_VERSION | cut -f 1-2 -d '.') fi # run ./gradlew copyDependantLibs to get all dependant jars in a local dir shopt -s nullglob if [ -z "$UPGRADE_KAFKA_STREAMS_TEST_VERSION" ]; then for dir in "$base_dir"/core/build/dependant-libs-${SCALA_VERSION}*; do CLASSPATH="$CLASSPATH:$dir/*" done fi for file in "$base_dir"/examples/build/libs/kafka-examples*.jar; do if should_include_file "$file"; then CLASSPATH="$CLASSPATH":"$file" fi done if [ -z "$UPGRADE_KAFKA_STREAMS_TEST_VERSION" ]; then clients_lib_dir=$(dirname $0)/../clients/build/libs streams_lib_dir=$(dirname $0)/../streams/build/libs streams_dependant_clients_lib_dir=$(dirname $0)/../streams/build/dependant-libs-${SCALA_VERSION} else clients_lib_dir=/opt/kafka-$UPGRADE_KAFKA_STREAMS_TEST_VERSION/libs streams_lib_dir=$clients_lib_dir streams_dependant_clients_lib_dir=$streams_lib_dir fi for file in "$clients_lib_dir"/kafka-clients*.jar; do if should_include_file "$file"; then CLASSPATH="$CLASSPATH":"$file" fi done for file in "$streams_lib_dir"/kafka-streams*.jar; do if should_include_file "$file"; then CLASSPATH="$CLASSPATH":"$file" fi done if [ -z "$UPGRADE_KAFKA_STREAMS_TEST_VERSION" ]; then for file in "$base_dir"/streams/examples/build/libs/kafka-streams-examples*.jar; do if should_include_file "$file"; then CLASSPATH="$CLASSPATH":"$file" fi done else VERSION_NO_DOTS=`echo $UPGRADE_KAFKA_STREAMS_TEST_VERSION | sed 's/\.//g'` SHORT_VERSION_NO_DOTS=${VERSION_NO_DOTS:0:((${#VERSION_NO_DOTS} - 1))} # remove last char, ie, bug-fix number for file in "$base_dir"/streams/upgrade-system-tests-$SHORT_VERSION_NO_DOTS/build/libs/kafka-streams-upgrade-system-tests*.jar; do if should_include_file "$file"; then CLASSPATH="$file":"$CLASSPATH" fi done if [ "$SHORT_VERSION_NO_DOTS" = "0100" ]; then CLASSPATH="/opt/kafka-$UPGRADE_KAFKA_STREAMS_TEST_VERSION/libs/zkclient-0.8.jar":"$CLASSPATH" CLASSPATH="/opt/kafka-$UPGRADE_KAFKA_STREAMS_TEST_VERSION/libs/zookeeper-3.4.6.jar":"$CLASSPATH" fi if [ "$SHORT_VERSION_NO_DOTS" = "0101" ]; then CLASSPATH="/opt/kafka-$UPGRADE_KAFKA_STREAMS_TEST_VERSION/libs/zkclient-0.9.jar":"$CLASSPATH" CLASSPATH="/opt/kafka-$UPGRADE_KAFKA_STREAMS_TEST_VERSION/libs/zookeeper-3.4.8.jar":"$CLASSPATH" fi fi for file in "$streams_dependant_clients_lib_dir"/rocksdb*.jar; do CLASSPATH="$CLASSPATH":"$file" done for file in "$streams_dependant_clients_lib_dir"/*hamcrest*.jar; do CLASSPATH="$CLASSPATH":"$file" done for file in "$base_dir"/shell/build/libs/kafka-shell*.jar; do if should_include_file "$file"; then CLASSPATH="$CLASSPATH":"$file" fi done for dir in "$base_dir"/shell/build/dependant-libs-${SCALA_VERSION}*; do CLASSPATH="$CLASSPATH:$dir/*" done for file in "$base_dir"/tools/build/libs/kafka-tools*.jar; do if should_include_file "$file"; then CLASSPATH="$CLASSPATH":"$file" fi done for dir in "$base_dir"/tools/build/dependant-libs-${SCALA_VERSION}*; do CLASSPATH="$CLASSPATH:$dir/*" done for cc_pkg in "api" "transforms" "runtime" "file" "mirror" "mirror-client" "json" "tools" "basic-auth-extension" do for file in "$base_dir"/connect/${cc_pkg}/build/libs/connect-${cc_pkg}*.jar; do if should_include_file "$file"; then CLASSPATH="$CLASSPATH":"$file" fi done if [ -d "$base_dir/connect/${cc_pkg}/build/dependant-libs" ] ; then CLASSPATH="$CLASSPATH:$base_dir/connect/${cc_pkg}/build/dependant-libs/*" fi done # classpath addition for release for file in "$base_dir"/libs/*; do if should_include_file "$file"; then CLASSPATH="$CLASSPATH":"$file" fi done for file in "$base_dir"/core/build/libs/kafka_${SCALA_BINARY_VERSION}*.jar; do if should_include_file "$file"; then CLASSPATH="$CLASSPATH":"$file" fi done shopt -u nullglob if [ -z "$CLASSPATH" ] ; then echo "Classpath is empty. Please build the project first e.g. by running './gradlew jar -PscalaVersion=$SCALA_VERSION'" exit 1 fi # JMX settings if [ -z "$KAFKA_JMX_OPTS" ]; then KAFKA_JMX_OPTS="-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false " fi # JMX port to use # if [ $JMX_PORT ]; then if [ $JMX_PORT ] && [ -z "$ISKAFKASERVER" ]; then KAFKA_JMX_OPTS="$KAFKA_JMX_OPTS -Dcom.sun.management.jmxremote.port=$JMX_PORT " fi # Log directory to use if [ "x$LOG_DIR" = "x" ]; then LOG_DIR="$base_dir/logs" fi # Log4j settings if [ -z "$KAFKA_LOG4J_OPTS" ]; then # Log to console. This is a tool. LOG4J_DIR="$base_dir/config/tools-log4j.properties" # If Cygwin is detected, LOG4J_DIR is converted to Windows format. (( CYGWIN )) && LOG4J_DIR=$(cygpath --path --mixed "${LOG4J_DIR}") KAFKA_LOG4J_OPTS="-Dlog4j.configuration=file:${LOG4J_DIR}" else # create logs directory if [ ! -d "$LOG_DIR" ]; then mkdir -p "$LOG_DIR" fi fi # If Cygwin is detected, LOG_DIR is converted to Windows format. (( CYGWIN )) && LOG_DIR=$(cygpath --path --mixed "${LOG_DIR}") KAFKA_LOG4J_OPTS="-Dkafka.logs.dir=$LOG_DIR $KAFKA_LOG4J_OPTS" # Generic jvm settings you want to add if [ -z "$KAFKA_OPTS" ]; then KAFKA_OPTS="" fi # Set Debug options if enabled if [ "x$KAFKA_DEBUG" != "x" ]; then # Use default ports DEFAULT_JAVA_DEBUG_PORT="5005" if [ -z "$JAVA_DEBUG_PORT" ]; then JAVA_DEBUG_PORT="$DEFAULT_JAVA_DEBUG_PORT" fi # Use the defaults if JAVA_DEBUG_OPTS was not set DEFAULT_JAVA_DEBUG_OPTS="-agentlib:jdwp=transport=dt_socket,server=y,suspend=${DEBUG_SUSPEND_FLAG:-n},address=$JAVA_DEBUG_PORT" if [ -z "$JAVA_DEBUG_OPTS" ]; then JAVA_DEBUG_OPTS="$DEFAULT_JAVA_DEBUG_OPTS" fi echo "Enabling Java debug options: $JAVA_DEBUG_OPTS" KAFKA_OPTS="$JAVA_DEBUG_OPTS $KAFKA_OPTS" fi # Which java to use if [ -z "$JAVA_HOME" ]; then JAVA="java" else JAVA="$JAVA_HOME/bin/java" fi # Memory options if [ -z "$KAFKA_HEAP_OPTS" ]; then KAFKA_HEAP_OPTS="-Xmx256M" fi # JVM performance options # MaxInlineLevel=15 is the default since JDK 14 and can be removed once older JDKs are no longer supported if [ -z "$KAFKA_JVM_PERFORMANCE_OPTS" ]; then KAFKA_JVM_PERFORMANCE_OPTS="-server -XX:+UseG1GC -XX:MaxGCPauseMillis=20 -XX:InitiatingHeapOccupancyPercent=35 -XX:+ExplicitGCInvokesConcurrent -XX:MaxInlineLevel=15 -Djava.awt.headless=true" fi while [ $# -gt 0 ]; do COMMAND=$1 case $COMMAND in -name) DAEMON_NAME=$2 CONSOLE_OUTPUT_FILE=$LOG_DIR/$DAEMON_NAME.out shift 2 ;; -loggc) if [ -z "$KAFKA_GC_LOG_OPTS" ]; then GC_LOG_ENABLED="true" fi shift ;; -daemon) DAEMON_MODE="true" shift ;; *) break ;; esac done # GC options GC_FILE_SUFFIX='-gc.log' GC_LOG_FILE_NAME='' if [ "x$GC_LOG_ENABLED" = "xtrue" ]; then GC_LOG_FILE_NAME=$DAEMON_NAME$GC_FILE_SUFFIX # The first segment of the version number, which is '1' for releases before Java 9 # it then becomes '9', '10', ... # Some examples of the first line of `java --version`: # 8 -> java version "1.8.0_152" # 9.0.4 -> java version "9.0.4" # 10 -> java version "10" 2018-03-20 # 10.0.1 -> java version "10.0.1" 2018-04-17 # We need to match to the end of the line to prevent sed from printing the characters that do not match JAVA_MAJOR_VERSION=$("$JAVA" -version 2>&1 | sed -E -n 's/.* version "([0-9]*).*$/\1/p') if [[ "$JAVA_MAJOR_VERSION" -ge "9" ]] ; then KAFKA_GC_LOG_OPTS="-Xlog:gc*:file=$LOG_DIR/$GC_LOG_FILE_NAME:time,tags:filecount=10,filesize=100M" else KAFKA_GC_LOG_OPTS="-Xloggc:$LOG_DIR/$GC_LOG_FILE_NAME -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCTimeStamps -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=100M" fi fi # Remove a possible colon prefix from the classpath (happens at lines like `CLASSPATH="$CLASSPATH:$file"` when CLASSPATH is blank) # Syntax used on the right side is native Bash string manipulation; for more details see # http://tldp.org/LDP/abs/html/string-manipulation.html, specifically the section titled "Substring Removal" CLASSPATH=${CLASSPATH#:} # If Cygwin is detected, classpath is converted to Windows format. (( CYGWIN )) && CLASSPATH=$(cygpath --path --mixed "${CLASSPATH}") # Launch mode if [ "x$DAEMON_MODE" = "xtrue" ]; then nohup "$JAVA" $KAFKA_HEAP_OPTS $KAFKA_JVM_PERFORMANCE_OPTS $KAFKA_GC_LOG_OPTS $KAFKA_JMX_OPTS $KAFKA_LOG4J_OPTS -cp "$CLASSPATH" $KAFKA_OPTS "$@" > "$CONSOLE_OUTPUT_FILE" 2>&1 < /dev/null & else exec "$JAVA" $KAFKA_HEAP_OPTS $KAFKA_JVM_PERFORMANCE_OPTS $KAFKA_GC_LOG_OPTS $KAFKA_JMX_OPTS $KAFKA_LOG4J_OPTS -cp "$CLASSPATH" $KAFKA_OPTS "$@" fi

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

- 144

- 145

- 146

- 147

- 148

- 149

- 150

- 151

- 152

- 153

- 154

- 155

- 156

- 157

- 158

- 159

- 160

- 161

- 162

- 163

- 164

- 165

- 166

- 167

- 168

- 169

- 170

- 171

- 172

- 173

- 174

- 175

- 176

- 177

- 178

- 179

- 180

- 181

- 182

- 183

- 184

- 185

- 186

- 187

- 188

- 189

- 190

- 191

- 192

- 193

- 194

- 195

- 196

- 197

- 198

- 199

- 200

- 201

- 202

- 203

- 204

- 205

- 206

- 207

- 208

- 209

- 210

- 211

- 212

- 213

- 214

- 215

- 216

- 217

- 218

- 219

- 220

- 221

- 222

- 223

- 224

- 225

- 226

- 227

- 228

- 229

- 230

- 231

- 232

- 233

- 234

- 235

- 236

- 237

- 238

- 239

- 240

- 241

- 242

- 243

- 244

- 245

- 246

- 247

- 248

- 249

- 250

- 251

- 252

- 253

- 254

- 255

- 256

- 257

- 258

- 259

- 260

- 261

- 262

- 263

- 264

- 265

- 266

- 267

- 268

- 269

- 270

- 271

- 272

- 273

- 274

- 275

- 276

- 277

- 278

- 279

- 280

- 281

- 282

- 283

- 284

- 285

- 286

- 287

- 288

- 289

- 290

- 291

- 292

- 293

- 294

- 295

- 296

- 297

- 298

- 299

- 300

- 301

- 302

- 303

- 304

- 305

- 306

- 307

- 308

- 309

- 310

- 311

- 312

- 313

- 314

- 315

- 316

- 317

- 318

- 319

- 320

- 321

- 322

- 323

- 324

- 325

- 326

- 327

- 328

- 329

- 330

- 331

- 332

- 333

- 334

- 335

- 336

- 337

给文件添加执行权限

chmod 777 /volumn/kafaka/kafka1/bin/kafka-run-class.sh

chmod 777 /volumn/kafaka/kafka2/bin/kafka-run-class.sh

chmod 777 /volumn/kafaka/kafka3/bin/kafka-run-class.sh

新建docker-compose-kafka.yml

version: '2' services: kafka1: image: docker.io/wurstmeister/kafka #restart: always #自动重新启动 hostname: kafka1 container_name: kafka1 ports: - 39093:9093 - 39193:9193 environment: KAFKA_BROKER_ID: 1 KAFKA_LISTENERS: INSIDE://172.28.10.14:9093,OUTSIDE://172.28.10.14:9193 #KAFKA_ADVERTISED_LISTENERS=INSIDE://<container>:9092,OUTSIDE://<host>:9094,outside为java程序连接的公网地址,通过 连接82.156.7.227:39193找到KAFKA_LISTENERS中的172.28.10.14:9193 KAFKA_ADVERTISED_LISTENERS: INSIDE://172.28.10.14:9093,OUTSIDE://82.156.7.227:39193 KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: INSIDE:PLAINTEXT,OUTSIDE:PLAINTEXT KAFKA_INTER_BROKER_LISTENER_NAME: INSIDE KAFKA_ZOOKEEPER_CONNECT: zook1:2181,zook2:2181,zook3:2181 ALLOW_PLAINTEXT_LISTENER : 'yes' JMX_PORT: 9999 #开放JMX监控端口,来监测集群数据 volumes: - /volumn/kafaka/kafka1/wurstmeister/kafka:/wurstmeister/kafka - /volumn/kafaka/kafka1/kafka:/kafka - /volumn/kafaka/kafka1/bin/kafka-run-class.sh:/opt/kafka/bin/kafka-run-class.sh external_links: - zook1 - zook2 - zook3 networks: docker-net: ipv4_address: 172.28.10.14 kafka2: image: docker.io/wurstmeister/kafka #restart: always #自动重新启动 hostname: kafka2 container_name: kafka2 ports: - 39094:9094 - 39194:9194 environment: KAFKA_BROKER_ID: 2 KAFKA_LISTENERS: INSIDE://172.28.10.15:9094,OUTSIDE://172.28.10.15:9194 #KAFKA_ADVERTISED_LISTENERS=INSIDE://<container>:9092,OUTSIDE://<host>:9094 KAFKA_ADVERTISED_LISTENERS: INSIDE://172.28.10.15:9094,OUTSIDE://82.156.7.227:39194 KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: INSIDE:PLAINTEXT,OUTSIDE:PLAINTEXT KAFKA_INTER_BROKER_LISTENER_NAME: INSIDE KAFKA_ZOOKEEPER_CONNECT: zook1:2181,zook2:2181,zook3:2181 ALLOW_PLAINTEXT_LISTENER : 'yes' JMX_PORT: 9999 #开放JMX监控端口,来监测集群数据 volumes: - /volumn/kafaka/kafka2/wurstmeister/kafka:/wurstmeister/kafka - /volumn/kafaka/kafka2/kafka:/kafka - /volumn/kafaka/kafka2/bin/kafka-run-class.sh:/opt/kafka/bin/kafka-run-class.sh external_links: - zook1 - zook2 - zook3 networks: docker-net: ipv4_address: 172.28.10.15 kafka3: image: docker.io/wurstmeister/kafka #restart: always #自动重新启动 hostname: kafka3 container_name: kafka3 ports: - 39095:9095 - 39195:9195 environment: KAFKA_BROKER_ID: 3 KAFKA_LISTENERS: INSIDE://172.28.10.16:9095,OUTSIDE://172.28.10.16:9195 #KAFKA_ADVERTISED_LISTENERS=INSIDE://<container>:9092,OUTSIDE://<host>:9094 KAFKA_ADVERTISED_LISTENERS: INSIDE://172.28.10.16:9095,OUTSIDE://82.156.7.227:39195 KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: INSIDE:PLAINTEXT,OUTSIDE:PLAINTEXT KAFKA_INTER_BROKER_LISTENER_NAME: INSIDE KAFKA_ZOOKEEPER_CONNECT: zook1:2181,zook2:2181,zook3:2181 ALLOW_PLAINTEXT_LISTENER : 'yes' JMX_PORT: 9999 #开放JMX监控端口,来监测集群数据 volumes: - /volumn/kafaka/kafka3/wurstmeister/kafka:/wurstmeister/kafka - /volumn/kafaka/kafka3/kafka:/kafka - /volumn/kafaka/kafka3/bin/kafka-run-class.sh:/opt/kafka/bin/kafka-run-class.sh external_links: - zook1 - zook2 - zook3 networks: docker-net: ipv4_address: 172.28.10.16 networks: docker-net: name: kafaka-net

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

启动集群

docker-compose -f ./docker-compose-kafka.yml up -d

查看日志

docker-compose -f ./docker-compose-kafka.yml logs

- 1

- 2

- 3

安装kafka-manager

新建 docker-compose-kafka-manager.yml

version: '2' services: kafka-manager: image: scjtqs/kafka-manager:latest restart: always hostname: kafka-manager container_name: kafka-manager ports: - 39196:9000 external_links: # 连接本compose文件以外的container - zook1 - zook2 - zook3 - kafka1 - kafka2 - kafka3 environment: ZK_HOSTS: zook1:2181,zook2:2181,zook3:2181 KAFKA_BROKERS: kafka1:9093,kafka2:9094,kafka3:9095 APPLICATION_SECRET: letmein KM_ARGS: -Djava.net.preferIPv4Stack=true networks: docker-net: ipv4_address: 172.28.10.20 networks: docker-net: name: kafaka-net

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

启动kafka-manager

docker compose -f ./docker-compose-kafka-manager.yml up -d

- 1

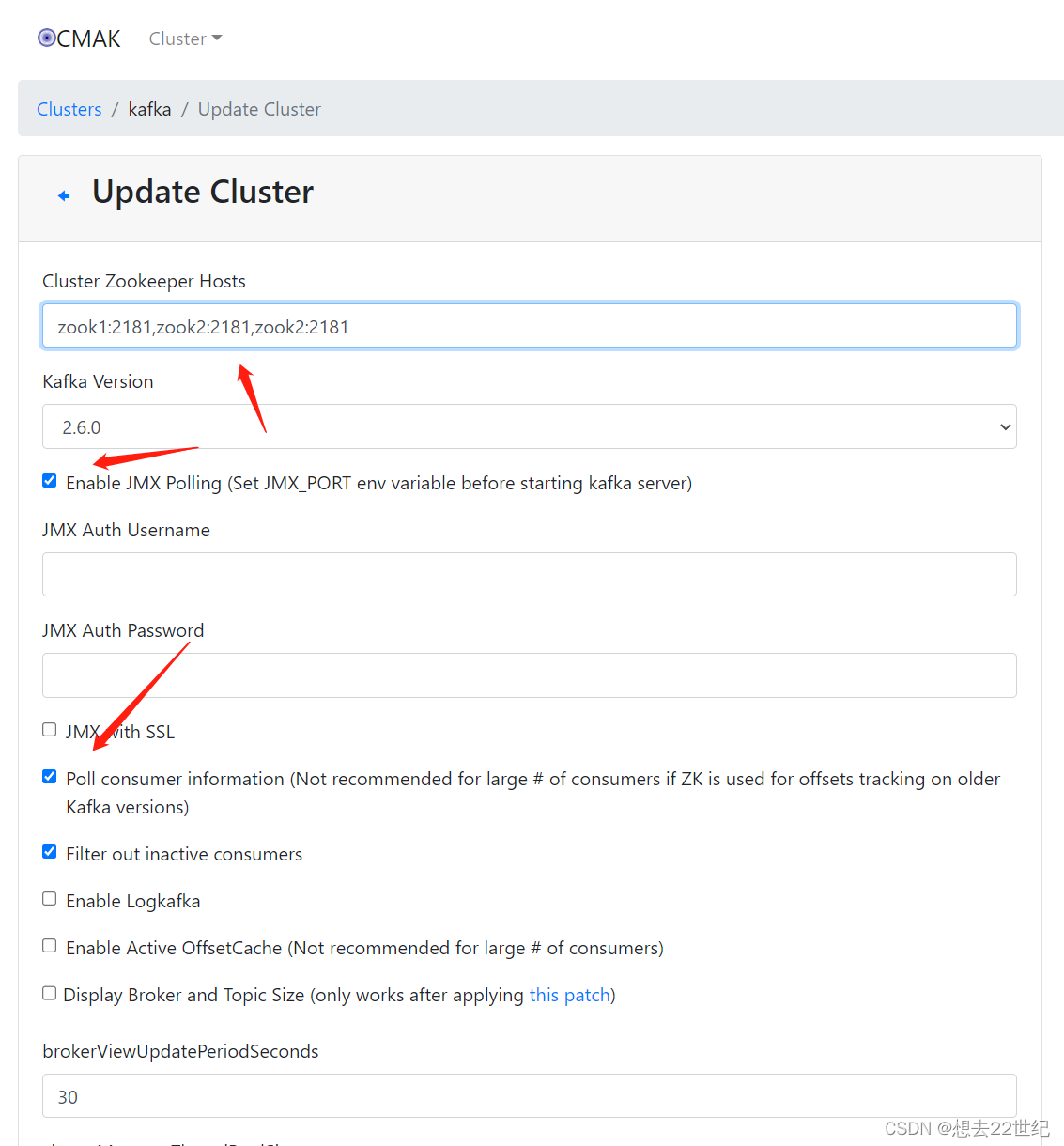

配置cluster

测试

进入kafka

docker exec -ti kafka1 /bin/bash

cd opt/kafka_2.13-2.8.1/

- 1

- 2

创建topic

bin/kafka-topics.sh --create --zookeeper zook1:2181 --replication-factor 2 --partitions 2 --topic partopic

- 1

查看topic的状态

bin/kafka-topics.sh --describe --zookeeper zook1:2181 --topic partopic

- 1

- 2