
Fixing "gibberish" output after importing a fine-tuned model into Ollama

Author: weixin_40725706 | 2024-08-20 19:47:48


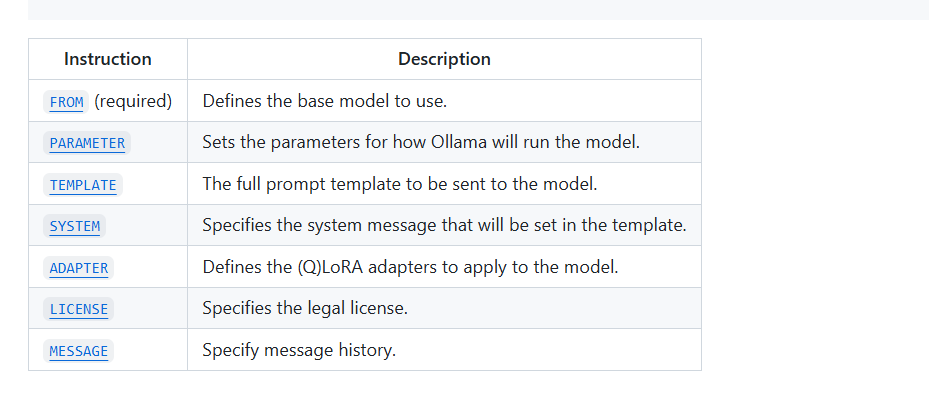

To state the conclusion up front: the problem is mainly caused by a Modelfile that was not configured correctly.

Below is the Modelfile configuration. The `FROM` instruction on the first line gives the path of the model to use.

```
FROM <path-to-your-model>
# sets the temperature to 1 [higher is more creative, lower is more coherent]
PARAMETER temperature 1
# sets the context window size to 4096, this controls how many tokens the LLM can use as context to generate the next token
PARAMETER num_ctx 4096
# sets a custom system message to specify the behavior of the chat assistant
SYSTEM You are Mario from super mario bros, acting as an assistant.
```

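The example above is the official sample. It only sets sampling parameters and a system message. When you import a fine-tuned model, a common way for a Modelfile to be "not configured correctly" is a missing or mismatched `TEMPLATE`. Without it, Ollama does not wrap the conversation in the chat format the model was trained on. If the matching `stop` tokens are also missing, the model may keep generating past the end of its answer.

The following is a minimal sketch under stated assumptions, not the author's actual file. It assumes a Qwen2-based fine-tune that was exported to GGUF and uses the ChatML format. The path `./my-finetuned-model.gguf` is hypothetical.

```
# Hypothetical path: replace with your own exported GGUF file
FROM ./my-finetuned-model.gguf

# Assumption: the model was fine-tuned on the ChatML format (as Qwen2 models are).
# Ollama fills in .System, .Prompt and .Response at run time.
TEMPLATE """{{ if .System }}<|im_start|>system
{{ .System }}<|im_end|>
{{ end }}{{ if .Prompt }}<|im_start|>user
{{ .Prompt }}<|im_end|>
{{ end }}<|im_start|>assistant
{{ .Response }}<|im_end|>
"""

# Stop generating when the model emits a ChatML turn marker
PARAMETER stop "<|im_start|>"
PARAMETER stop "<|im_end|>"

PARAMETER num_ctx 4096
```

If the base model belongs to a different family (Llama 3, for example), the template and stop tokens must follow that family's format instead. For a base model already available in Ollama, `ollama show --modelfile <model>` prints its Modelfile, including the `TEMPLATE`, which is a reasonable starting point. After editing, rebuild the model with `ollama create <name> -f Modelfile`.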

The official Modelfile documentation and examples are available at the following link:


ollama/docs/modelfile.md at main · ollama/ollama (github.com)

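Disclaimer: This article was contributed by a user and does not represent the position of the wpsshop blog. Copyright belongs to the original author, and this site assumes no legal responsibility. If you find infringing content, please contact us. When reposting, please cite the source: https://www.wpsshop.cn/w/weixin_40725706/article/detail/1008516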
