
Installing ELK on Kylin Server V10 SP2


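I. Introduction to ELK

The Elastic Stack is what used to be called ELK. ELK is a collection of three tools: Elasticsearch, Logstash and Kibana. As the stack evolved, Beats joined it, and the whole set became the Elastic Stack. In short, ELK is the old name and Elastic Stack is the new one.

1. Elasticsearch

Elasticsearch is an open-source, distributed search engine written in Java. Its features include distributed operation, zero configuration, automatic node discovery, automatic index sharding, index replication, a RESTful interface, multiple data sources, and automatic search load balancing.

2. Logstash

Logstash is also Java-based. It is an open-source tool for collecting, parsing and storing logs.

3. Kibana

Kibana is based on Node.js and is likewise free and open source. It provides a web interface for analyzing the logs handled by Logstash and Elasticsearch, where important log data can be aggregated, analyzed and searched.

4. Beats

Beats is Elastic's open-source family of agents for collecting system monitoring data. The name covers the data shippers that run as clients on the monitored servers; they send data either directly to Elasticsearch or through Logstash to Elasticsearch for further analysis.

This article installs Elasticsearch, and also Kafka and ZooKeeper, which can serve as data sources for Logstash.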

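II. Install Single-Node Elasticsearch

1. Basic environment setup

For security reasons, Elasticsearch refuses to run as root, so create a new user and grant it the permissions it needs to start Elasticsearch (the permissions are granted later, in the "Start ES" step).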

#useradd es

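Raise the open-file and locked-memory limits (limits.conf is applied at login, so the new values only affect sessions started afterwards):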

(1).vim /etc/security/limits.conf

* soft nofile 65535

* hard nofile 65535

* soft memlock 65535

* hard memlock 65535

Raise vm.max_map_count so the process does not run out of virtual memory map areas:

(2).vim /etc/sysctl.conf

vm.max_map_count=262144

Apply the setting above: sysctl -p
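Before starting Elasticsearch it can help to confirm that both limits actually took effect. The sketch below is a hypothetical helper (es_preflight is not part of Elasticsearch); its thresholds simply mirror the values written to sysctl.conf and limits.conf above, and it assumes the live values come from /proc and `ulimit -n`.

```bash
# Sketch of a pre-start check for the two limits configured above.
# es_preflight is a hypothetical helper, not part of Elasticsearch; the
# thresholds mirror the values written to sysctl.conf and limits.conf.
# Usage: es_preflight [max_map_count] [open_file_limit]
# Without arguments it reads the live values for the current shell.
es_preflight() {
  local map_count="${1:-$(cat /proc/sys/vm/max_map_count)}"
  local nofile="${2:-$(ulimit -n)}"
  local status=0

  if [ "$map_count" -lt 262144 ]; then
    echo "FAIL vm.max_map_count=$map_count (need >= 262144)"
    status=1
  fi
  # "unlimited" counts as large enough
  if [ "$nofile" != "unlimited" ] && [ "$nofile" -lt 65535 ]; then
    echo "FAIL nofile=$nofile (need >= 65535)"
    status=1
  fi

  if [ "$status" -eq 0 ]; then
    echo "OK"
  fi
  return "$status"
}
```

Because limits.conf is read at login, run `es_preflight` with no arguments from a fresh `su - es` shell rather than from the root session that edited the file.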

2. Download and extract the Elasticsearch package

cd /usr/local

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.4.3.tar.gz

tar -xvzf elasticsearch-6.4.3.tar.gz
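Optionally, check the tarball's integrity before extracting it. This sketch assumes Elastic publishes a `.sha512` file next to the archive (`<archive URL>.sha512`, whose first field is the hash); verify_sha512 is a hypothetical helper name, and GNU `sha512sum` is assumed to be installed.

```bash
# Sketch: compare a downloaded file with the hash in a companion .sha512 file.
# verify_sha512 is a hypothetical helper; it assumes the checksum file's
# first whitespace-separated field is the hex SHA-512 digest.
verify_sha512() {
  local file="$1" checksum_file="$2"
  local expected actual
  expected="$(awk '{print $1}' "$checksum_file")"
  actual="$(sha512sum "$file" | awk '{print $1}')"
  if [ "$expected" = "$actual" ]; then
    echo "checksum OK: $file"
  else
    echo "checksum MISMATCH: $file" >&2
    return 1
  fi
}
```

Usage, run in /usr/local before the tar step:

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.4.3.tar.gz.sha512

verify_sha512 elasticsearch-6.4.3.tar.gz elasticsearch-6.4.3.tar.gz.sha512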

3. Edit the ES configuration file: vim /usr/local/elasticsearch-6.4.3/config/elasticsearch.yml

cluster.name: wit

node.name: node-es

node.master: true

node.data: true

network.host: 0.0.0.0

path.data: /usr/local/elasticsearch-6.4.3/data

path.logs: /usr/local/elasticsearch-6.4.3/logs

http.port: 9200

transport.tcp.port: 9300

4. Start ES

4.1 Give the es user ownership of the Elasticsearch directory

chown -R es:es /usr/local/elasticsearch-6.4.3

4.2 Switch to the es user

su - es

4.3 Start ES

/usr/local/elasticsearch-6.4.3/bin/elasticsearch -d    # -d: run in the background
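Because `-d` returns immediately, the node may still be booting when the next command runs. A minimal sketch of a wait loop, assuming bash's built-in `/dev/tcp` redirection is available (it is on most Linux builds of bash); wait_for_port is a hypothetical helper name.

```bash
# Sketch: poll a TCP port once per second until something accepts
# connections, or give up after the timeout (in seconds, default 60).
# wait_for_port is hypothetical; it relies on bash's /dev/tcp support.
wait_for_port() {
  local host="$1" port="$2" timeout="${3:-60}"
  local waited=0
  while [ "$waited" -lt "$timeout" ]; do
    # The subshell opens the connection and closes it again on exit.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      echo "$host:$port is up after ${waited}s"
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "timed out waiting for $host:$port" >&2
  return 1
}
```

For example, `wait_for_port 192.168.174.181 9200 60` before the verification step. The same helper can be pointed at the Kibana (5601), ZooKeeper (2181) and Kafka (9092) ports used later.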

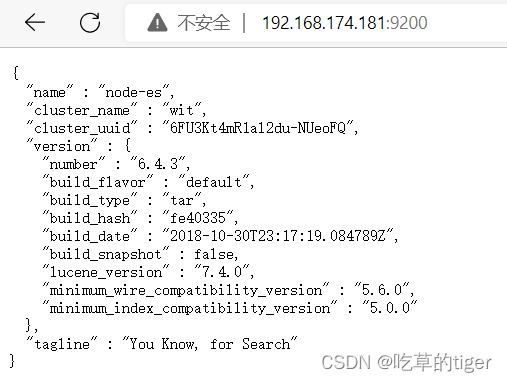

5. Verify the ES cluster:

Open http://192.168.174.181:9200 (this machine's IP plus the HTTP port); the response looks like this:

{

"name" : "node-es",

"cluster_name" : "wit",

"cluster_uuid" : "6FU3Kt4mR1al2du-NUeoFQ",

"version" : {

"number" : "6.4.3",

"build_flavor" : "default",

"build_type" : "tar",

"build_hash" : "fe40335",

"build_date" : "2018-10-30T23:17:19.084789Z",

"build_snapshot" : false,

"lucene_version" : "7.4.0",

"minimum_wire_compatibility_version" : "5.6.0",

"minimum_index_compatibility_version" : "5.0.0"

},

"tagline" : "You Know, for Search"

}
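In scripts, the version number can be pulled out of that response without a browser. This is a grep/sed sketch that assumes the JSON contains a single "number" field, as above; es_version is a hypothetical name, and a real JSON parser such as jq would be more robust.

```bash
# Sketch: print the value of the first "number" field read from stdin.
# Works for both pretty-printed ("number" : "6.4.3") and compact JSON.
# es_version is a hypothetical helper; jq is the safer choice for real JSON.
es_version() {
  grep -o '"number"[[:space:]]*:[[:space:]]*"[^"]*"' \
    | head -n 1 \
    | sed 's/.*"\([^"]*\)"$/\1/'
}
```

Usage: `curl -s http://192.168.174.181:9200 | es_version` prints the version string from the response.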

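6. Import data into ES:

The JSON data file data.json looks like this.

Its first line holds the action metadata (_index, _type, _id); its second line holds the document's fields.

vim data.json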

{ "index" : { "_index" : "test", "_type" : "type1", "_id" : "1" } }

{ "field1" : "value1" }
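The bulk API expects newline-delimited JSON in which every line, including the last one, ends with a newline; an editor that drops the final newline can cause the last action to be rejected. A sketch that writes the same two-line payload with printf, so the trailing newline is guaranteed. write_bulk_doc and its argument order are hypothetical, for illustration only.

```bash
# Sketch: write a one-document _bulk payload (action line + source line),
# each terminated by "\n". write_bulk_doc is a hypothetical helper.
# Arguments: output file, index, type, id, document JSON.
write_bulk_doc() {
  local out="$1" index="$2" type="$3" id="$4" source_json="$5"
  printf '{ "index" : { "_index" : "%s", "_type" : "%s", "_id" : "%s" } }\n%s\n' \
    "$index" "$type" "$id" "$source_json" > "$out"
}
```

Usage: `write_bulk_doc data.json test type1 1 '{ "field1" : "value1" }'` produces the file shown above.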

Run the import (the URL is quoted so the shell cannot treat ? as a wildcard): curl -H "Content-Type: application/json" -XPOST 'localhost:9200/_bulk?pretty' --data-binary @data.json

[root@localhost ~]# curl -H "Content-Type: application/json" -XPOST localhost:9200/_bulk?pretty --data-binary @data.json

{

"took" : 16,

"errors" : false,

"items" : [

{

"index" : {

"_index" : "test",

"_type" : "type1",

"_id" : "1",

"_version" : 2,

"result" : "updated",

"_shards" : {

"total" : 2,

"successful" : 1,

"failed" : 0

},

"_seq_no" : 1,

"_primary_term" : 1,

"status" : 200

}

}

]

}
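The _bulk endpoint returns HTTP 200 even when individual items fail, so a script should check the top-level "errors" flag rather than the HTTP status. A minimal sketch, assuming the response carries an "errors" key as in the output above; bulk_succeeded is a hypothetical helper name.

```bash
# Sketch: succeed only if the bulk response on stdin reports "errors": false.
# bulk_succeeded is hypothetical; it pattern-matches rather than parsing JSON.
bulk_succeeded() {
  grep -q '"errors"[[:space:]]*:[[:space:]]*false'
}
```

Usage: pipe the curl command above into `bulk_succeeded && echo "all items indexed"`.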

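This completes the single-node Elasticsearch setup.

III. Install Kibana

Kibana is an open-source analytics and visualization platform for Elasticsearch. With it you can search, view and interact with data stored in Elasticsearch indices, run advanced data analysis, and present data as charts, tables and maps.

1. Download the package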

cd /usr/local

wget https://artifacts.elastic.co/downloads/kibana/kibana-6.4.3-linux-x86_64.tar.gz

tar -xvzf kibana-6.4.3-linux-x86_64.tar.gz

2. Edit the configuration file kibana.yml:

vim /usr/local/kibana-6.4.3-linux-x86_64/config/kibana.yml

server.port: 5601

server.host: "0.0.0.0"

elasticsearch.url: "http://192.168.174.181:9200"

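3. Start Kibana:

Start it from the bin directory of the Kibana installation, preferably as a dedicated user:

(1). Create a kibana user (recommended):

useradd kibana

chown -R kibana:kibana /usr/local/kibana-6.4.3-linux-x86_64

(2). Switch to that user:

su - kibana

(3). Start Kibana: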

cd /usr/local/kibana-6.4.3-linux-x86_64/bin

nohup ./kibana &

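4. Stop the firewall:

After Kibana has started successfully, stop the firewall so the service ports can be reached from other machines: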

systemctl stop firewalld.service
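If stopping firewalld entirely is not acceptable, an alternative is to open only the ports this article uses (9200 Elasticsearch, 5601 Kibana, 2181 ZooKeeper, 9092 Kafka, 5044 Logstash syslog). The sketch below only prints the `firewall-cmd` commands so they can be reviewed before being run as root; firewall_open_cmds is a hypothetical helper, and the port list is an assumption based on the configuration in this article.

```bash
# Sketch: print firewall-cmd commands that open the given TCP ports
# permanently, followed by a reload. Nothing is executed here.
# firewall_open_cmds is a hypothetical helper name.
firewall_open_cmds() {
  local port
  for port in "$@"; do
    echo "firewall-cmd --permanent --add-port=${port}/tcp"
  done
  # --permanent rules only become active after a reload
  echo "firewall-cmd --reload"
}
```

After reviewing the output, it can be applied with `firewall_open_cmds 9200 5601 2181 9092 5044 | sh` as root.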

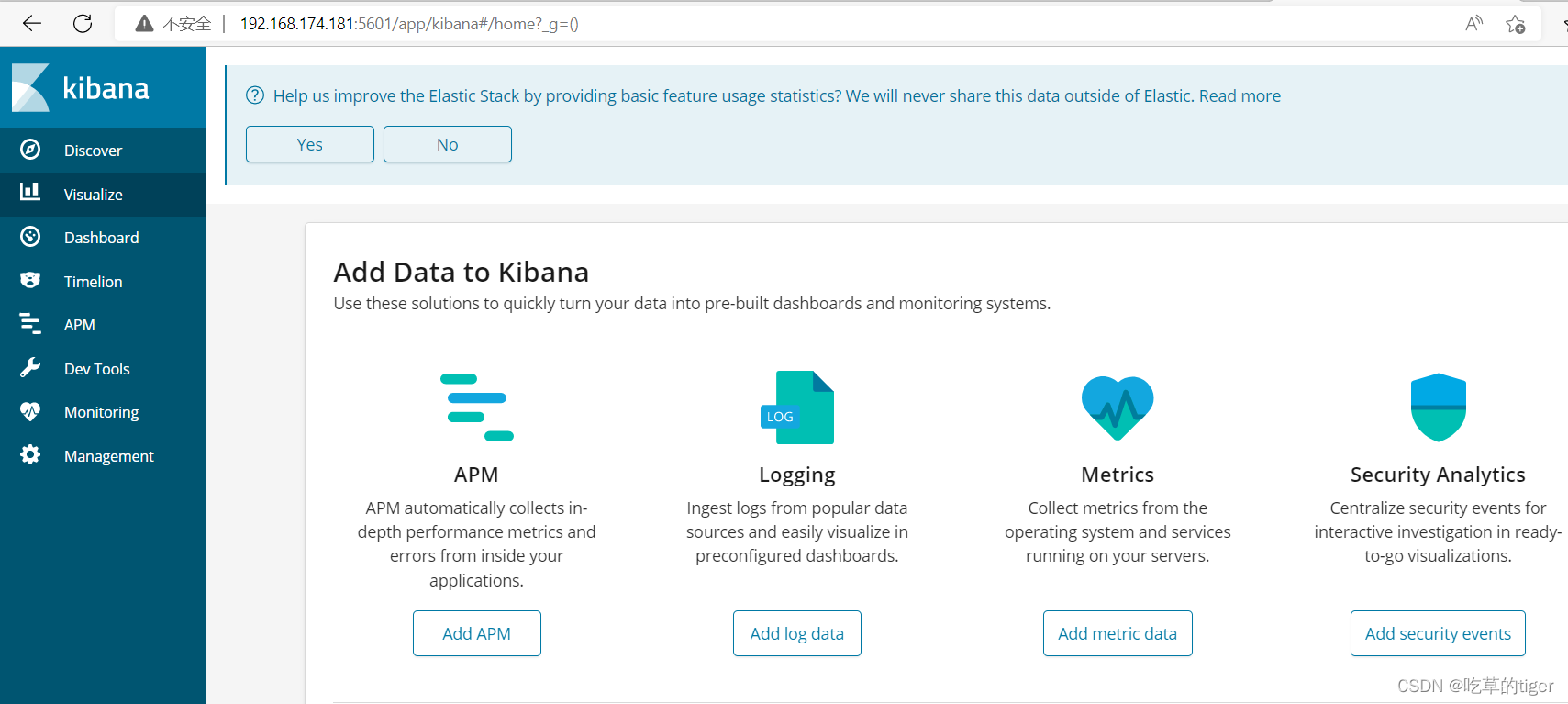

5. Access Kibana:

Enter the following in a browser (replace IP with the server's address): http://IP:5601/app/kibana

IV. Install ZooKeeper

1. Download the ZooKeeper source package; version 3.4.9 is used here:

http://zookeeper.apache.org

2. Upload the package to /usr/local on the server and extract it:

cd /usr/local

tar -zxvf zookeeper-3.4.9.tar.gz

cd /usr/local/zookeeper-3.4.9/conf

cp zoo_sample.cfg zoo.cfg

3. Edit the configuration file zoo.cfg:

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/usr/local/zookeeper-3.4.9/data

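dataLogDir=/usr/local/zookeeper-3.4.9/logs

clientPort=2181

autopurge.purgeInterval=1

server.1=192.168.174.181:2888:3888

Make sure the directories referenced by dataDir and dataLogDir exist, and create them if they do not. ZooKeeper's configuration keys are case-sensitive, so the key must be spelled dataLogDir.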

mkdir /usr/local/zookeeper-3.4.9/data

mkdir /usr/local/zookeeper-3.4.9/logs
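To keep the directories in sync with whatever zoo.cfg actually says, they can also be created from the file itself. A sketch assuming plain key=value lines with no surrounding spaces or quoting; make_zk_dirs is a hypothetical helper, and it matches only the exact, case-sensitive key names dataDir and dataLogDir.

```bash
# Sketch: create every directory named by dataDir / dataLogDir in a zoo.cfg.
# make_zk_dirs is hypothetical; it assumes simple key=value lines.
make_zk_dirs() {
  local cfg="$1" key value
  # "|| [ -n "$key" ]" also handles a last line without a trailing newline
  while IFS='=' read -r key value || [ -n "$key" ]; do
    case "$key" in
      dataDir|dataLogDir)
        mkdir -p "$value" && echo "ready: $value"
        ;;
    esac
  done < "$cfg"
}
```

Usage: `make_zk_dirs /usr/local/zookeeper-3.4.9/conf/zoo.cfg`. Running it twice is harmless because `mkdir -p` accepts existing directories.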

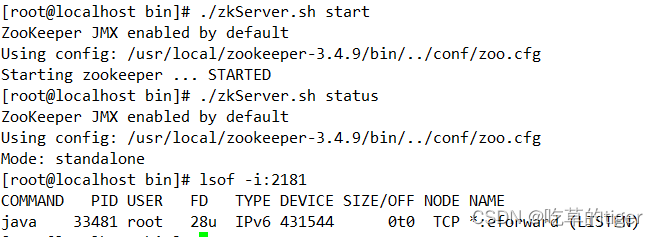

4. Start ZooKeeper:

/usr/local/zookeeper-3.4.9/bin/zkServer.sh start     # start ZooKeeper

/usr/local/zookeeper-3.4.9/bin/zkServer.sh status    # check ZooKeeper's status
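Besides `zkServer.sh status`, ZooKeeper answers the four-letter command `ruok` with `imok` when it is running. A sketch that sends it over bash's `/dev/tcp`. Assumptions: four-letter commands are allowed (they are by default in the 3.4.9 release used here; from 3.5.3 on, `ruok` has to be listed in `4lw.commands.whitelist`), and zk_ruok is a hypothetical helper name.

```bash
# Sketch: send "ruok" to ZooKeeper and print "imok" if it answers correctly.
# zk_ruok is hypothetical; defaults to 127.0.0.1:2181.
zk_ruok() {
  local host="${1:-127.0.0.1}" port="${2:-2181}" reply=""
  # fd 3 is a TCP connection; if connecting fails, the group is skipped
  # and the function returns 1 (stderr is silenced before connecting).
  {
    printf 'ruok' >&3
    # ZooKeeper replies without a trailing newline, so read can report
    # EOF even though "reply" was filled in; ignore that status.
    read -r -t 5 reply <&3 || true
  } 2>/dev/null 3<>"/dev/tcp/$host/$port" || return 1

  if [ "$reply" = "imok" ]; then
    echo "imok"
  else
    return 1
  fi
}
```

Usage: `zk_ruok 192.168.174.181 2181` prints `imok` on a healthy server and returns a non-zero status otherwise.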

V. Install Kafka

1. Download and extract the package

cd /usr/local

wget https://archive.apache.org/dist/kafka/2.8.0/kafka_2.12-2.8.0.tgz --no-check-certificate

tar -xvzf kafka_2.12-2.8.0.tgz

2. Edit the configuration:

mkdir /usr/local/kafka_2.12-2.8.0/logs

vim /usr/local/kafka_2.12-2.8.0/config/server.properties

broker.id=0

listeners=PLAINTEXT://192.168.174.181:9092

advertised.listeners=PLAINTEXT://192.168.174.181:9092

auto.create.topics.enable=true

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

num.partitions=1

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.dirs=/usr/local/kafka_2.12-2.8.0/logs

log.flush.interval.messages=10000

log.flush.interval.ms=1000

log.retention.hours=24

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=192.168.174.181:2181

zookeeper.connection.timeout.ms=18000

group.initial.rebalance.delay.ms=0
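Kafka may create a missing log directory by itself, so a log.dirs path that points somewhere unexpected (for example, at another Kafka version's folder) can go unnoticed. A quick sketch that lists configured log directories that do not exist yet. check_log_dirs is a hypothetical helper, and it assumes a plain key=value server.properties.

```bash
# Sketch: report every directory in log.dirs that does not exist.
# check_log_dirs is hypothetical; returns 1 if any directory is missing.
check_log_dirs() {
  local props="$1" dirs dir missing=0
  local -a dir_list
  # Take the last uncommented log.dirs= line; a later key overrides an earlier one
  dirs="$(sed -n 's/^log\.dirs=//p' "$props" | tail -n 1)"
  # log.dirs may be a comma-separated list of directories
  IFS=',' read -r -a dir_list <<< "$dirs"
  for dir in "${dir_list[@]}"; do
    if [ ! -d "$dir" ]; then
      echo "missing: $dir"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `check_log_dirs /usr/local/kafka_2.12-2.8.0/config/server.properties` prints nothing when every configured directory is in place.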

3. Start Kafka:

Run the following command; -daemon starts the broker in the background:

/usr/local/kafka_2.12-2.8.0/bin/kafka-server-start.sh -daemon /usr/local/kafka_2.12-2.8.0/config/server.properties

4. Create a topic:

Create the topic: /usr/local/kafka_2.12-2.8.0/bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 2 --topic test1

[root@localhost bin]# ./kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 2 --topic test1

Created topic test1.

5. Inspect the topic:

[root@localhost bin]# ./kafka-topics.sh --describe --zookeeper 127.0.0.1:2181 --topic test1

Topic: test1 TopicId: ZLbKeoX7TseBGXRHj51QEg PartitionCount: 2 ReplicationFactor: 1 Configs:

Topic: test1 Partition: 0 Leader: 0 Replicas: 0 Isr: 0

Topic: test1 Partition: 1 Leader: 0 Replicas: 0 Isr: 0

[root@localhost bin]# ./kafka-topics.sh --list --zookeeper 127.0.0.1:2181

test1
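Listing the topic shows that the broker and ZooKeeper agree on metadata; a round trip through the console producer and consumer additionally shows that messages flow. A sketch, assuming the Kafka 2.8 console scripts (which accept `--bootstrap-server`) and an arbitrary 10-second consumer timeout; kafka_roundtrip is a hypothetical helper name.

```bash
# Sketch: produce one uniquely tagged message and check that the console
# consumer can read it back. kafka_roundtrip is a hypothetical helper.
# Arguments: Kafka bin directory, broker host:port, topic.
kafka_roundtrip() {
  local bin="$1" broker="$2" topic="$3"
  local msg
  msg="ping-$(date +%s)"
  if [ ! -x "$bin/kafka-console-producer.sh" ]; then
    echo "kafka-console-producer.sh not found in $bin" >&2
    return 1
  fi
  # Produce the message; the producer exits when stdin is closed.
  echo "$msg" | "$bin/kafka-console-producer.sh" \
    --bootstrap-server "$broker" --topic "$topic" >/dev/null
  # Read the topic from the start; the consumer gives up after 10 s
  # without new messages. grep decides success.
  "$bin/kafka-console-consumer.sh" --bootstrap-server "$broker" \
    --topic "$topic" --from-beginning --timeout-ms 10000 2>/dev/null \
    | grep -qx "$msg"
}
```

Since the listener above is bound to 192.168.174.181, use that address rather than localhost: `kafka_roundtrip /usr/local/kafka_2.12-2.8.0/bin 192.168.174.181:9092 test1 && echo "round trip OK"`.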

Kafka is now installed.

VI. Install Logstash:

1. Download and extract the package

cd /usr/local

wget https://artifacts.elastic.co/downloads/logstash/logstash-6.4.3.tar.gz

tar -xvzf logstash-6.4.3.tar.gz

2. Edit the configuration files:

cd /usr/local/logstash-6.4.3/config

mv logstash-sample.conf logstash.conf

2.1 Edit the pipeline configuration: vim logstash.conf

input {

syslog {

type => "system-syslog"   # system logs

port => 5044

}

}

output {

elasticsearch {

hosts => ["http://localhost:9200"]

index => "system-syslog-%{+YYYY.MM.dd}"

#user => "elastic"

#password => "changeme"

}

}

2.2 Edit the settings file: vim logstash.yml

http.host: "192.168.174.181"

3. Validate the configuration; if the output contains "Configuration OK", the configuration is valid:

/usr/local/logstash-6.4.3/bin/logstash --path.settings /usr/local/logstash-6.4.3/config/ -f /usr/local/logstash-6.4.3/config/logstash.conf --config.test_and_exit

[root@localhost bin]# /usr/local/logstash-6.4.3/bin/logstash --path.settings /usr/local/logstash-6.4.3/config/ -f /usr/local/logstash-6.4.3/config/logstash.conf --config.test_and_exit

Sending Logstash logs to /usr/local/logstash-6.4.3/logs which is now configured via log4j2.properties

[2022-07-28T15:32:45,103][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.queue", :path=>"/usr/local/logstash-6.4.3/data/queue"}

[2022-07-28T15:32:45,185][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.dead_letter_queue", :path=>"/usr/local/logstash-6.4.3/data/dead_letter_queue"}

[2022-07-28T15:32:45,843][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

Configuration OK

[2022-07-28T15:32:48,141][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

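4. Add an rsyslog rule that forwards system logs to the Logstash listener (in rsyslog, @@ forwards over TCP and a single @ over UDP)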

vim /etc/rsyslog.conf

#### RULES ####

*.* @@192.168.174.181:5044

Restart rsyslog: systemctl restart rsyslog

5. Start Logstash in the background

/usr/local/logstash-6.4.3/bin/logstash --path.settings /usr/local/logstash-6.4.3/config/ -f /usr/local/logstash-6.4.3/config/logstash.conf &

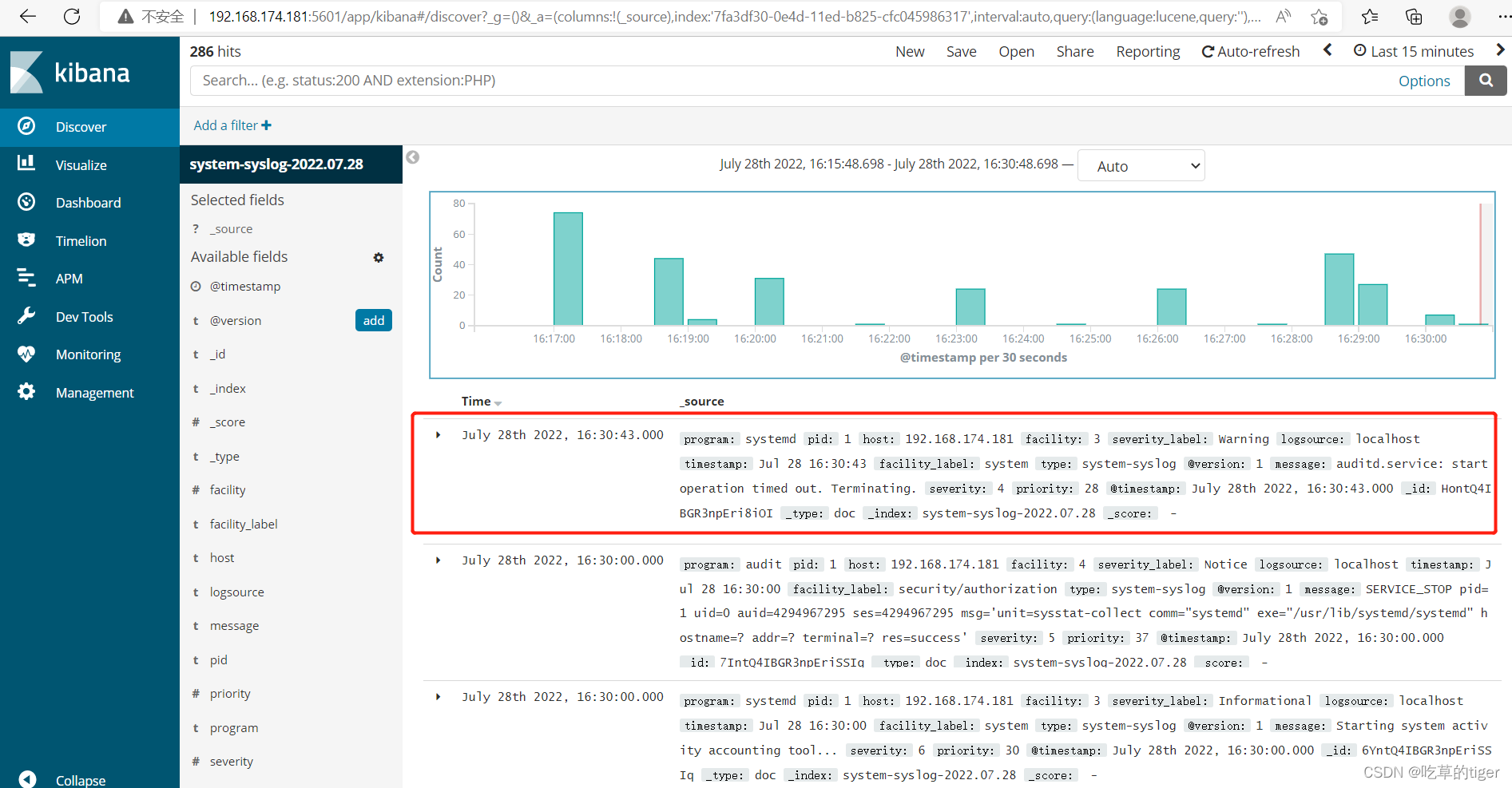
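To confirm the whole chain (rsyslog to Logstash to Elasticsearch), send a test message and look for the daily index the pipeline writes to. Logstash evaluates `%{+YYYY.MM.dd}` from the event's @timestamp in UTC, so the sketch computes the date in UTC too. Assumptions: GNU date, and syslog_index_for is a hypothetical helper name.

```bash
# Sketch: print the daily index name the pipeline above should write to.
# Optional argument: any date string GNU date understands (default: now),
# interpreted in UTC. syslog_index_for is a hypothetical helper.
syslog_index_for() {
  date -u -d "${1:-now}" +'system-syslog-%Y.%m.%d'
}
```

Usage, after Logstash is up:

logger -t elk-test "hello from rsyslog"

curl -s "http://localhost:9200/_cat/indices/$(syslog_index_for)?v"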

VII. Display the logs in Kibana: