
Implementing word2vec in Python (without a framework)

Author: weixin_40725706 | 2024-05-01 16:09:54


```python
import math

import numpy as np

wordHash = {}   # word -> count while reading; word -> leaf index after buildHFMTree()
wordNum = 0     # vocabulary size
window = 2      # context words taken on each side of the target
words = []      # corpus as a flat list of tokens
vecSize = 100   # dimensionality of the word vectors
u = 0.1         # learning rate
t = 500         # number of training epochs


# Read the corpus and count word frequencies.
def read_file():
    global wordNum
    with open("test.txt", encoding="utf-8") as f:
        for sentence in f:
            # split() drops the trailing newline; extend() keeps every line's tokens.
            tokens = sentence.split()
            words.extend(tokens)
            for word in tokens:
                if word in wordHash:
                    wordHash[word] += 1
                else:
                    wordHash[word] = 1
                    wordNum += 1


# Build the Huffman tree. Leaves occupy indices [0, wordNum), internal nodes
# [wordNum, 2 * wordNum - 1). Returns each node's branch bit and parent index.
def buildHFMTree():
    vocab = sorted(wordHash.items(), key=lambda item: item[1], reverse=True)
    length = len(vocab) * 2
    weight = [None] * length
    parent = [None] * length
    pos = [None] * length
    for i in range(length):
        if i < len(vocab):
            wordHash[vocab[i][0]] = i    # from here on wordHash maps word -> leaf index
            weight[i] = vocab[i][1]
        else:
            weight[i] = float("inf")     # sentinel for internal nodes not yet created
    lp = len(vocab) - 1    # lightest unmerged leaf (leaves are sorted by descending count)
    rp = lp + 1            # lightest unmerged internal node
    addp = lp + 1          # slot for the next internal node
    while True:
        if lp < 0:
            # Only internal nodes are left; stop once the root is the last one.
            if rp + 1 == addp:
                break
            lo, hi = rp, rp + 1
            rp += 2
        elif weight[lp] < weight[rp]:
            if lp - 1 >= 0 and weight[lp - 1] < weight[rp]:
                lo, hi = lp, lp - 1
                lp -= 2
            else:
                lo, hi = lp, rp
                lp -= 1
                rp += 1
        elif weight[rp + 1] > weight[lp]:
            lo, hi = rp, lp
            lp -= 1
            rp += 1
        else:
            lo, hi = rp, rp + 1
            rp += 2
        # Merge the two lightest nodes under a new internal node.
        weight[addp] = weight[lo] + weight[hi]
        pos[lo], pos[hi] = 0, 1
        parent[lo] = parent[hi] = addp
        addp += 1
    return pos, parent


def sigmiod(n):
    return 1.0 / (1.0 + np.exp(-n))


# Collect the branch bits from the word's leaf up to the root.
def getHFMCode(word, pos, parent):
    i = wordHash[word]
    code = []
    while parent[i] is not None:
        code.append(pos[i])
        i = parent[i]
    print("Huffman code of word '" + word + "': " + str(code))
    return code


# One hierarchical-softmax step for the target word: walk from its leaf to the
# root, accumulating the log-likelihood and the gradient-ascent updates.
def updataParam(word, pos, parent, ansVec, projVec, paramVec):
    i = wordHash[word]
    ll = 0
    paramChange = np.zeros((vecSize, wordNum - 1))
    projChange = np.zeros(vecSize)
    while parent[i] is not None:
        d = pos[i]
        node = parent[i] - wordNum       # column of paramVec for this internal node
        p = sigmiod(ansVec[node])
        try:
            ll += (1 - d) * math.log(p) + d * math.log(1 - p)
        except ValueError:               # log(0) when the sigmoid saturates
            pass
        m = 1 - d - p                    # derivative of ll with respect to the node score
        paramChange[:, node] += u * m * projVec
        projChange += u * m * paramVec[:, node]
        i = parent[i]
    return projChange, paramChange, ll


def initVec():
    # Word vectors start uniformly in [-0.5 / vecSize, 0.5 / vecSize); node parameters at zero.
    wordVec = (np.random.random((wordNum, vecSize)) - 0.5) / vecSize
    paramVec = np.zeros((vecSize, wordNum - 1))
    return wordVec, paramVec


def train(wordVec, paramVec, pos, parent):
    for k in range(t + 1):
        paramChange = np.zeros((vecSize, wordNum - 1))
        wordChange = np.zeros((wordNum, vecSize))
        for i in range(len(words)):
            # Context indices inside the corpus bounds; the right bound is
            # i + window + 1 so that both sides contribute `window` words.
            context = [j for j in range(i - window, i + window + 1)
                       if j != i and 0 <= j < len(words)]
            projVec = np.zeros(vecSize)
            for j in context:
                projVec += wordVec[wordHash[words[j]]]
            projVec = projVec / len(context)
            ansVec = projVec.dot(paramVec)
            projChange1, paramChange1, ll = updataParam(
                words[i], pos, parent, ansVec, projVec, paramVec)
            for j in context:
                wordChange[wordHash[words[j]]] += projChange1
            paramChange += paramChange1
            if k % 100 == 0:
                print("Epoch " + str(k) + ", word '" + words[i]
                      + "': log-likelihood " + str(ll))
        # Batch update: apply the accumulated changes once per epoch.
        wordVec += wordChange
        paramVec += paramChange


print("Reading words")
read_file()
print("Finished reading words")
print("Building Huffman tree")
pos, parent = buildHFMTree()
print("Huffman tree built")
# getHFMCode('interpretations', pos, parent)
print("Initializing word vectors")
wordVec, paramVec = initVec()
print("Word vectors initialized")
print("Starting training")
train(wordVec, paramVec, pos, parent)
```
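The factor `1 - d - sigmoid(n)` used in `updataParam` can be read off from the hierarchical-softmax log-likelihood. As a sketch, write $x$ for the averaged context vector (`projVec`), $\theta_j$ for the parameter column of the $j$-th internal node on the target word's path (a column of `paramVec`), $d_j \in \{0, 1\}$ for the Huffman bit stored in `pos`, and $\eta$ for the learning rate `u`. These symbols are notation introduced here for illustration, not taken from the original post.

$$
\begin{aligned}
\mathcal{L} &= \sum_j \Big[(1 - d_j)\,\log \sigma(x^\top \theta_j) + d_j \,\log\big(1 - \sigma(x^\top \theta_j)\big)\Big], \qquad \sigma(z) = \frac{1}{1 + e^{-z}} \\
\frac{\partial \mathcal{L}}{\partial \theta_j} &= \big(1 - d_j - \sigma(x^\top \theta_j)\big)\, x \\
\frac{\partial \mathcal{L}}{\partial x} &= \sum_j \big(1 - d_j - \sigma(x^\top \theta_j)\big)\, \theta_j \\
\theta_j &\leftarrow \theta_j + \eta\, \frac{\partial \mathcal{L}}{\partial \theta_j}, \qquad v_c \leftarrow v_c + \eta\, \frac{\partial \mathcal{L}}{\partial x} \ \text{ for each context word } c
\end{aligned}
$$

Both derivatives use $\sigma'(z) = \sigma(z)\,(1 - \sigma(z))$, which reduces the derivative of each bracketed term with respect to its score to $1 - d_j - \sigma$. Because $x$ is the mean of the context vectors, the exact gradient with respect to a single $v_c$ carries an extra factor of one over the number of context words; the code adds $\eta\,\partial \mathcal{L} / \partial x$ to every context vector without that factor.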

Corpus:

In the near future the translation history will only be viewable when you log in to your account and it will be centrally managed in my activity record. This upgrade will clear the previous history so if you want the system to record certain translations for future review please be sure to save the translation results
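Since the code tokenizes by splitting on whitespace, it can help to look at what vocabulary this corpus actually yields. The sketch below counts the tokens with `collections.Counter`; the lowercasing and punctuation stripping in `normalised` is a hypothetical preprocessing step added for comparison and is not part of the original code.

```python
from collections import Counter

corpus = (
    "In the near future the translation history will only be viewable when you "
    "log in to your account and it will be centrally managed in my activity record. "
    "This upgrade will clear the previous history so if you want the system to "
    "record certain translations for future review please be sure to save the "
    "translation results"
)

# Plain whitespace split: case and punctuation are kept as part of the token.
tokens = corpus.split()
counts = Counter(tokens)

# Hypothetical normalisation (an assumption, not in the original post):
# lowercase each token and strip surrounding punctuation.
normalised = Counter(tok.lower().strip(".,!?") for tok in tokens)

print("tokens:", len(tokens))
print("vocabulary (raw):", len(counts))
print("vocabulary (normalised):", len(normalised))
print("most frequent:", counts.most_common(3))
```

With the raw split, `In` and `in`, and likewise `record.` and `record`, are separate vocabulary entries, so each gets its own Huffman leaf and its own vector. On a corpus this small that fragmentation removes a noticeable share of the already scarce training signal.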


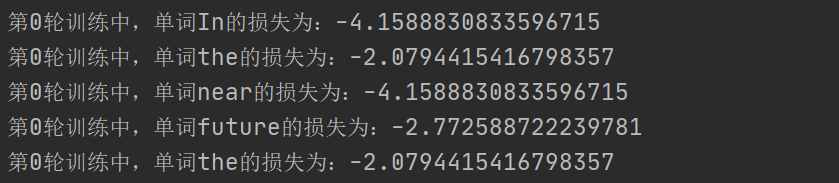

运行结果:

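Limitations

Compared with the original word2vec source code, this implementation leaves out the features that exist to speed things up: negative sampling, multithreading, and the approximation of the exponential computation.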
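Of these, the exponential approximation is the simplest to illustrate: rather than calling `exp` for every node on every step, the sigmoid can be precomputed on a grid and looked up. The following is a minimal sketch under stated assumptions, not the reference implementation; the names `EXP_TABLE_SIZE`, `MAX_EXP`, `SIGMOID_TABLE` and `fast_sigmoid` are chosen here, and the values 1000 and 6 are assumed defaults. Returning 0 or 1 outside the grid is also a choice made for this sketch.

```python
import numpy as np

EXP_TABLE_SIZE = 1000   # number of precomputed entries (assumed value)
MAX_EXP = 6             # the grid covers [-MAX_EXP, MAX_EXP) (assumed value)

# Precompute sigmoid(x) on an evenly spaced grid over [-MAX_EXP, MAX_EXP).
_grid = (np.arange(EXP_TABLE_SIZE) / EXP_TABLE_SIZE * 2 - 1) * MAX_EXP
SIGMOID_TABLE = 1.0 / (1.0 + np.exp(-_grid))


def fast_sigmoid(x):
    """Approximate sigmoid(x) by table lookup, saturating outside the grid."""
    if x >= MAX_EXP:
        return 1.0
    if x <= -MAX_EXP:
        return 0.0
    # Map x to the grid cell that contains it; the clamp guards against rounding.
    index = int((x + MAX_EXP) * (EXP_TABLE_SIZE / MAX_EXP / 2))
    index = min(index, EXP_TABLE_SIZE - 1)
    return float(SIGMOID_TABLE[index])


if __name__ == "__main__":
    xs = np.linspace(-5.9, 5.9, 500)
    exact = 1.0 / (1.0 + np.exp(-xs))
    approx = np.array([fast_sigmoid(v) for v in xs])
    print("max abs error:", np.abs(exact - approx).max())
```

The grid spacing is `2 * MAX_EXP / EXP_TABLE_SIZE` and the sigmoid's slope never exceeds 0.25, so the lookup error inside the grid is bounded by about 0.003 with these values. In pure Python the lookup mainly illustrates the idea; the saving matters in compiled code, where a table read replaces a call to `exp`.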
