[Python Deep Learning] Face Recognition in Practice with TensorFlow + CNN (Source Code and Dataset Included)

Author: weixin_40725706 | 2024-06-16 13:35:03

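If you need the source code and dataset, please like, follow, and bookmark this post, then leave a comment or send a private message.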

This post walks through a hands-on face recognition exercise on TensorFlow, using the Olivetti Faces image set. Part of the dataset is shown below.

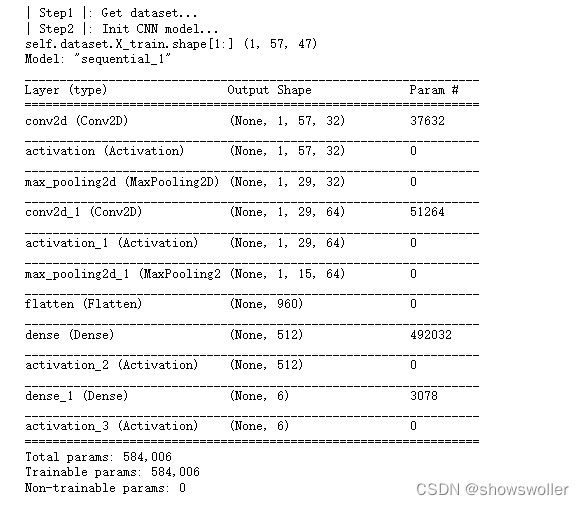

The program's training run looks like this:

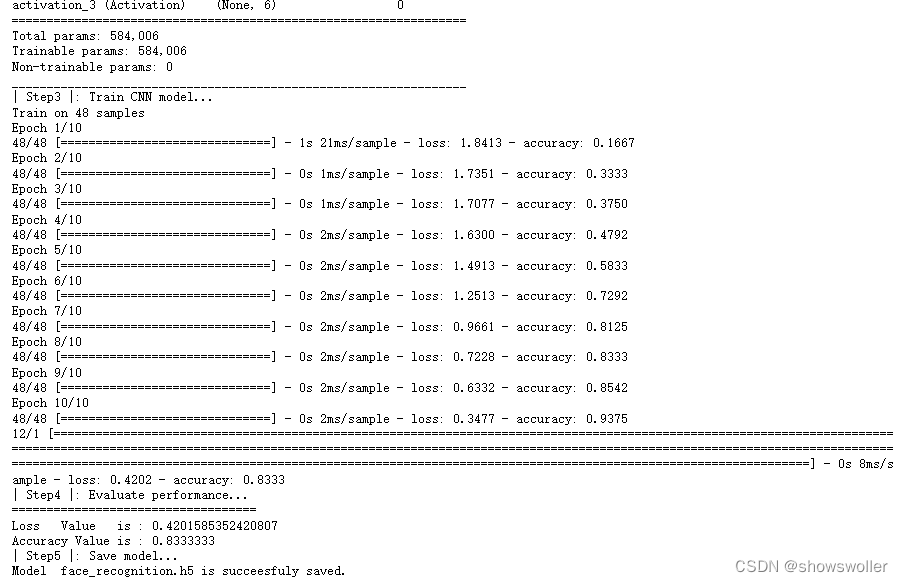

Next the CNN model is trained. The output shows the training progress and how the loss value changes:

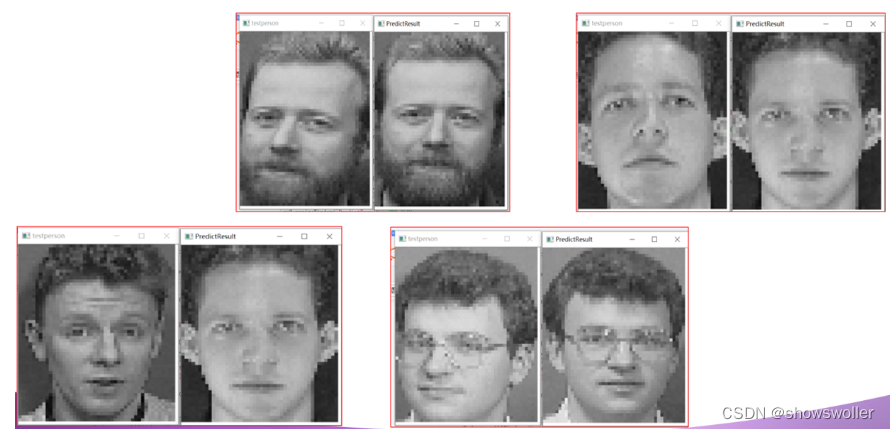
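The loss value reported during training is categorical cross-entropy, which is the loss the code passes to `compile`. As a rough illustration only (a NumPy sketch, not Keras's actual implementation; the helper name `categorical_crossentropy`, the clipping constant, and the 6-class toy data are assumptions), the following computes it for one-hot labels and predicted probabilities:

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean cross-entropy between one-hot labels and predicted probabilities."""
    # Clip to avoid log(0) for probabilities that are exactly zero
    y_pred = np.clip(y_pred, eps, 1.0)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))

num_classes = 6  # assumed class count for this illustration
# Two samples whose true classes are 0 and 3, as one-hot rows
y_true = np.eye(num_classes)[[0, 3]]

# A model that spreads probability evenly over all classes
uniform = np.full((2, num_classes), 1.0 / num_classes)
# A model that puts 0.95 on the correct class and 0.01 on each other class
confident = np.where(y_true == 1, 0.95, 0.01)

loss_uniform = categorical_crossentropy(y_true, uniform)
loss_confident = categorical_crossentropy(y_true, confident)
print(loss_uniform, loss_confident)
```

A uniform prediction over 6 classes gives a loss of ln 6 (about 1.79), which is roughly where an untrained classifier starts. The loss falls toward 0 as the model assigns more probability to the correct class.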

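Next, the face recognition results.

Given a single picture, the program automatically searches the image set for similar faces, as shown in the figure above.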
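The article does not show how the similar faces are retrieved. One common way to do this kind of lookup is a nearest-neighbour search over feature vectors, sketched below with cosine similarity. Everything here is an assumption for illustration: the function name `most_similar`, the use of flattened pixel values as features (a real system would more likely compare CNN features or predicted classes), and the random toy gallery.

```python
import numpy as np

def most_similar(query, gallery, top_k=3):
    """Return indices and scores of the top_k gallery images closest to query."""
    # Flatten each image into a vector (hypothetical choice of feature)
    q = query.ravel().astype('float64')
    g = gallery.reshape(len(gallery), -1).astype('float64')
    # Normalise to unit length so the dot product is the cosine similarity
    q /= np.linalg.norm(q) + 1e-12
    g /= np.linalg.norm(g, axis=1, keepdims=True) + 1e-12
    scores = g @ q
    # Sort by descending similarity and keep the best top_k
    order = np.argsort(-scores)[:top_k]
    return order, scores[order]

# Toy data: 10 random 57x47 "faces"; the query is a slightly noisy copy of image 4
rng = np.random.default_rng(0)
gallery = rng.random((10, 57, 47))
query = gallery[4] + 0.01 * rng.random((57, 47))

idx, scores = most_similar(query, gallery)
print(idx, scores)
```

The first returned index is the closest match, and the remaining ones are the runners-up in descending order of similarity.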

Part of the code is shown below.

```python
from os import listdir
from os.path import join

import numpy as np
from PIL import Image
import cv2  # not used in this excerpt
from tensorflow.keras.models import Sequential, load_model
from tensorflow.keras.layers import Dense, Activation, Conv2D, MaxPooling2D, Flatten
# Public replacement for the private tensorflow.python.keras.utils.np_utils module
from tensorflow.keras.utils import to_categorical
from sklearn.model_selection import train_test_split


# Read one face image as a 57x47 grayscale array
def img2vector(fileNamestr):
    image = Image.open(fileNamestr).convert('L')
    # Images are expected to be 47 px wide and 57 px high;
    # reshape raises ValueError for any other size
    img = np.asarray(image).reshape(57, 47)
    return img


# Build the face dataset: one subdirectory per person
def GetDataset(imgDataDir):
    print('| Step1 |: Get dataset...')
    # Sort so that class indices stay the same across runs
    FileDir = sorted(listdir(imgDataDir))

    m = len(FileDir)
    hwLabels = []
    hwdata = []

    # Read the image files one by one
    for i in range(m):
        # The index of the subdirectory is used as the class label
        className = i
        subdirName = join(imgDataDir, FileDir[i])
        for fileName in listdir(subdirName):
            fileNamestr = join(subdirName, fileName)
            hwLabels.append(className)
            hwdata.append(img2vector(fileNamestr))

    hwdata = np.array(hwdata)
    # The original returned a hard-coded class count of 6;
    # m is the number of subdirectories actually found
    return hwdata, hwLabels, m


# CNN model class
class MyCNN(object):
    FILE_PATH = "face_recognition.h5"  # path the model is saved to / loaded from
    picHeight = 57  # face images are 57 px high and 47 px wide
    picWidth = 47

    def __init__(self):
        self.model = None

    # Attach the training dataset
    def read_trainData(self, dataset):
        self.dataset = dataset

    # Build the Sequential model and set its parameters
    def build_model(self):
        print('| Step2 |: Init CNN model...')
        self.model = Sequential()
        print('self.dataset.X_train.shape[1:]', self.dataset.X_train.shape[1:])
        self.model.add(Conv2D(filters=32,
                              kernel_size=(5, 5),
                              padding='same',
                              input_shape=self.dataset.X_train.shape[1:]))
        self.model.add(Activation('relu'))
        self.model.add(MaxPooling2D(pool_size=(2, 2),
                                    strides=(2, 2),
                                    padding='same'))
        self.model.add(Conv2D(filters=64,
                              kernel_size=(5, 5),
                              padding='same'))
        self.model.add(Activation('relu'))
        self.model.add(MaxPooling2D(pool_size=(2, 2),
                                    strides=(2, 2),
                                    padding='same'))
        self.model.add(Flatten())
        self.model.add(Dense(512))
        self.model.add(Activation('relu'))

        self.model.add(Dense(self.dataset.num_classes))
        self.model.add(Activation('softmax'))
        self.model.summary()

    # Train the model
    def train_model(self):
        print('| Step3 |: Train CNN model...')
        self.model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
        # epochs: number of passes over the training set; batch_size: samples per gradient update
        self.model.fit(self.dataset.X_train, self.dataset.Y_train, epochs=10, batch_size=20)

    # Evaluate the model on the held-out test split
    def evaluate_model(self):
        print('| Step4 |: Evaluate performance...')
        loss, accuracy = self.model.evaluate(self.dataset.X_test, self.dataset.Y_test)
        print('===================================')
        print('Loss Value is :', loss)
        print('Accuracy Value is :', accuracy)

    def save(self, file_path=FILE_PATH):
        print('| Step5 |: Save model...')
        self.model.save(file_path)
        print('Model ', file_path, 'is successfully saved.')


# Class that loads, formats, and stores the training data
class DataSet(object):
    def __init__(self, path):
        self.num_classes = None
        self.X_train = None
        self.X_test = None
        self.Y_train = None
        self.Y_test = None
        self.picWidth = 47
        self.picHeight = 57
        self.makeDataSet(path)  # read the training data under path during initialization

    def makeDataSet(self, path):
        # Read the images, labels, and class count from the given path
        imgs, labels, clasNum = GetDataset(path)

        # Shuffle and split into training and test sets
        X_train, X_test, y_train, y_test = train_test_split(imgs, labels, test_size=0.2, random_state=1)

        # Reshape to (samples, height, width, channels), the channels-last layout
        # Keras uses by default, and scale pixel values to [0, 1]
        X_train = X_train.reshape(X_train.shape[0], self.picHeight, self.picWidth, 1) / 255.0
        X_test = X_test.reshape(X_test.shape[0], self.picHeight, self.picWidth, 1) / 255.0

        X_train = X_train.astype('float32')
        X_test = X_test.astype('float32')

        # Convert the integer labels to one-hot (binary class) matrices
        Y_train = to_categorical(y_train, num_classes=clasNum)
        Y_test = to_categorical(y_test, num_classes=clasNum)

        # Store the formatted data on the instance
        self.X_train = X_train
        self.X_test = X_test
        self.Y_train = Y_train
        self.Y_test = Y_test
        self.num_classes = clasNum


# Face image directory
dataset = DataSet('faces_4/')
model = MyCNN()
model.read_trainData(dataset)
model.build_model()
model.train_model()
model.evaluate_model()
model.save()
```
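To see where the input size of the `Dense(512)` layer comes from, the feature-map shape can be traced through the network by hand. With `padding='same'` a convolution keeps height and width, and a 2x2 pooling layer with stride 2 and `padding='same'` maps a side of length n to ceil(n/2). The sketch below does this arithmetic in plain Python. It assumes a single-channel 57x47 input in channels-last layout and 6 output classes, and the helper name `pooled` is made up for the example.

```python
import math

def pooled(n, stride=2):
    """Output length of a stride-2 pooling layer with 'same' padding."""
    return math.ceil(n / stride)

h, w = 57, 47              # input height and width
in_ch = 1                  # assumed: one grayscale channel
layers = [(32, 5), (64, 5)]  # (filters, kernel size) of the two conv layers

conv_params = []
for filters, k in layers:
    # Weights: k*k*in_ch per filter, plus one bias per filter
    conv_params.append(k * k * in_ch * filters + filters)
    # 'same' convolution keeps h and w; the pooling layer halves them, rounding up
    h, w = pooled(h), pooled(w)
    in_ch = filters

flat = h * w * in_ch                  # length of the vector produced by Flatten
dense_params = flat * 512 + 512       # Dense(512) weights and biases
num_classes = 6                       # assumed class count
out_params = 512 * num_classes + num_classes
total = sum(conv_params) + dense_params + out_params

print('feature map:', (h, w, in_ch))
print('flattened length:', flat)
print('total parameters:', total)
```

Under these assumptions almost all of the parameters sit in the first dense layer, so shrinking the feature maps further or reducing the 512 units is the most direct way to make the model smaller.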

Writing these posts takes effort. If you found this one helpful, please like, follow, and bookmark it.

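Notice: this article was contributed by a user and does not represent the position of wpsshop博客. Copyright belongs to the original author, and the site assumes no legal liability for it. If you find infringing content, please contact us. When reposting, cite the source: https://www.wpsshop.cn/w/weixin_40725706/article/detail/726859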
