Scrapy, distributed crawling, and MySQL: implementing a distributed crawler with Scrapy

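Distributed crawlers

A distributed crawler runs on a cluster of machines that jointly crawl one set of resources, which raises crawl throughput.

How to make a crawl distributed

1. Can the Scrapy framework do distributed crawling on its own?

No.

First, each machine running Scrapy has its own scheduler, so the machines cannot divide the URLs in the start_urls list among themselves. (Multiple machines cannot share one scheduler.)

Second, the data crawled by the different machines cannot pass through a single pipeline for unified persistent storage. (Multiple machines cannot share one pipeline.)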

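2. Distributed crawling with the scrapy-redis component

- The scrapy-redis component ships a scheduler and a pipeline that multiple machines can share. We can use them directly to crawl data in a distributed way.

- Two ways to implement it:

1. With the component's RedisSpider class

2. With the component's RedisCrawlSpider class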
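
To make the idea of a shared scheduler and a shared pipeline concrete, here is a minimal in-memory sketch. It does not use scrapy-redis or Redis at all: SharedState, Worker, crawl, and the link graph are hypothetical names invented for illustration, with a deque, a set, and a list standing in for the Redis-backed request queue, deduplication set, and item store.

```python
from collections import deque


class SharedState:
    """In-memory stand-in for Redis: one request queue, one seen-set, one item list."""

    def __init__(self):
        self.queue = deque()
        self.seen = set()
        self.items = []

    def push(self, url):
        # Enqueue a URL only once, whichever worker discovered it
        if url not in self.seen:
            self.seen.add(url)
            self.queue.append(url)

    def pop(self):
        return self.queue.popleft() if self.queue else None


class Worker:
    """Stand-in for one Scrapy process; it owns no queue of its own."""

    def __init__(self, name, shared, links):
        self.name = name
        self.shared = shared
        self.links = links  # hypothetical link graph: url -> list of urls

    def step(self):
        url = self.shared.pop()
        if url is None:
            return False
        # "Crawl" the URL: record an item and enqueue the links found on it
        self.shared.items.append((self.name, url))
        for nxt in self.links.get(url, []):
            self.shared.push(nxt)
        return True


def crawl(start_url, links, worker_names=("machine-a", "machine-b")):
    shared = SharedState()
    shared.push(start_url)
    workers = [Worker(n, shared, links) for n in worker_names]
    progressed = True
    while progressed:
        # Run the workers round-robin; stop when a full round finds nothing to do
        progressed = False
        for w in workers:
            progressed = w.step() or progressed
    return shared.items
```

Because both workers pop from the same queue and append to the same item list, each URL is handled once, no matter which machine discovered it.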

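3. Distributed implementation workflow: the workflow is the same for both approaches above

- 3.1 Install the scrapy-redis component: pip install scrapy-redis

- 3.2 Edit the Redis configuration file:

- Linux or macOS: redis.conf

- Windows: redis.windows.conf

Changes:

- Comment out the line bind 127.0.0.1, so that other IPs can connect to Redis

- Change yes to no for protected-mode: protected-mode no, so that other IPs can operate on Redis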
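
Once the configuration has been changed and Redis restarted, a quick check from a crawler machine can confirm that the port is reachable. The sketch below uses only the standard library. can_reach is a hypothetical helper, and it only shows that the TCP port accepts connections; it does not prove that Redis will accept commands from that machine.

```python
import socket


def can_reach(host, port, timeout=2.0):
    """Return True if a TCP connection to (host, port) can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, unreachable host, or name resolution failure
        return False
```

For example, call can_reach with the IP address of the Redis server and port 6379 from each machine that will run the spider.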

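3.3 Modify the spider file:

- Change the spider class's parent class to RedisSpider or RedisCrawlSpider.

Note: if the original spider is based on Spider, change its parent class to RedisSpider; if it is based on CrawlSpider, change its parent class to RedisCrawlSpider.

- Comment out or delete the start_urls list and add a redis_key attribute whose value is the name of the queue in Redis from which the scrapy-redis component reads the start URLs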

3.4 In the settings file, enable the pipeline that ships with scrapy-redis

    ITEM_PIPELINES = {'scrapy_redis.pipelines.RedisPipeline': 400}

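3.5 In the settings file (settings.py), enable the scheduler that ships with scrapy-redis

    # Deduplication filter class: stores request fingerprints in a Redis set,
    # which makes request deduplication persistent
    DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"
    # Use the scheduler provided by scrapy-redis
    SCHEDULER = "scrapy_redis.scheduler.Scheduler"
    # Whether the scheduler state is persisted: when the spider finishes, should the
    # request queue and the fingerprint set in Redis be kept? True keeps the data,
    # False clears it
    SCHEDULER_PERSIST = True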
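
The deduplication idea can be sketched in a few lines. This is a simplified illustration, not the library's implementation: fingerprint and SeenFilter are hypothetical names, a Python set stands in for the Redis set, and the URL is hashed as given, whereas a real crawler would normally canonicalize it first.

```python
import hashlib


def fingerprint(method, url, body=b""):
    """Simplified request fingerprint: SHA-1 over method, URL, and body."""
    h = hashlib.sha1()
    h.update(method.upper().encode("utf-8"))
    h.update(url.encode("utf-8"))
    h.update(body)
    return h.hexdigest()


class SeenFilter:
    """Stand-in for the shared fingerprint set (a Python set instead of a Redis set)."""

    def __init__(self):
        self.fingerprints = set()

    def request_seen(self, method, url, body=b""):
        # Return True for a duplicate; otherwise remember the fingerprint
        fp = fingerprint(method, url, body)
        if fp in self.fingerprints:
            return True
        self.fingerprints.add(fp)
        return False
```

Keeping the set in Redis rather than in process memory is what lets every machine see the same history, and what lets that history survive a restart when persistence is turned on.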

3.6 In the settings file, configure how the crawler connects to Redis:

    REDIS_HOST = 'IP address of the Redis server'
    REDIS_PORT = 6379
    REDIS_ENCODING = 'utf-8'
    REDIS_PARAMS = {'password': 'xx'}

3.7 Start the Redis server: redis-server <configuration file>

3.8 Start the Redis client: redis-cli (-h ip -p 6379)

3.9 Run the spider file: scrapy runspider SpiderFile (x.py)

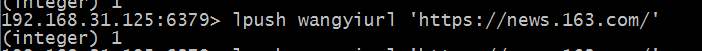

3.10 Push a start URL into the scheduler queue (run this in the Redis client): lpush <value of redis_key> <start URL>

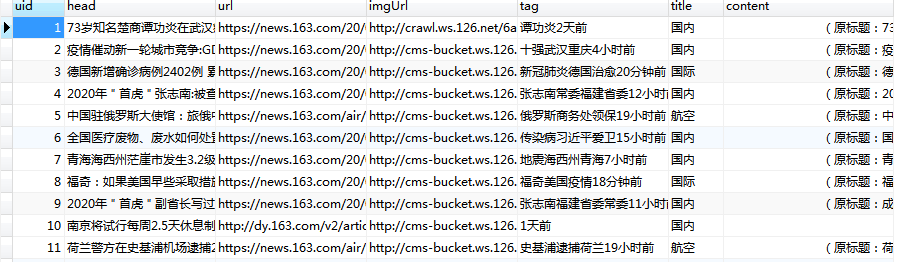

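Distributed crawler example

Requirement: crawl the content of NetEase News sections such as domestic, international, and military

A distributed crawler based on RedisSpider

How selenium is used inside scrapy

a) Import the webdriver class in the spider file

b) Instantiate the browser in the constructor of the spider class

c) Close the browser in the closed method of the spider class

d) Drive the browser automation in the process_response method of the downloader middleware

Spider file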

    # -*- coding: utf-8 -*-
    import scrapy
    from selenium import webdriver
    from scrapy_redis.spiders import RedisSpider
    from wangSpider.items import WangspiderItem


    # RedisSpider rather than RedisCrawlSpider: this spider overrides parse()
    # and defines no crawl rules
    class WangSpider(RedisSpider):
        name = 'wang'
        # allowed_domains = ['www.xxx.com']
        # start_urls = ['https://news.163.com/']
        # Name of the scheduler queue
        redis_key = 'wangyiurl'

        def __init__(self, *args, **kwargs):
            super().__init__(*args, **kwargs)
            options = webdriver.ChromeOptions()
            options.add_argument('--window-position=0,0')  # initial Chrome window position
            options.add_argument('--window-size=1080,800')  # initial Chrome window size
            # Selenium 3 style arguments, as in the original post; Selenium 4
            # takes options=options and a Service object instead
            self.bro = webdriver.Chrome(
                executable_path='C://xx/PycharmProjects/djnago_study/spider/wangSpider/chromedriver.exe',
                chrome_options=options)

        def parse(self, response):
            lis = response.xpath('//div[@class="ns_area list"]/ul/li')
            li_list = []  # domestic, international, military, aviation
            indexs = [3, 4, 6, 7]
            for index in indexs:
                li_list.append(lis[index])
            # Get the title text and URL of the four sections
            for li in li_list:
                url = li.xpath('./a/@href').extract_first()
                title = li.xpath('./a/text()').extract_first()
                # print(url + ":" + title)
                # Request each section URL to get its page data
                # (title, thumbnail, keywords, publish time, url)
                yield scrapy.Request(url=url, callback=self.parseSecond, meta={'title': title})

        def parseSecond(self, response):
            div_list = response.xpath('//div[@class="data_row news_article clearfix "]')
            # print(len(div_list))
            for div in div_list:
                head = div.xpath('.//div[@class="news_title"]/h3/a/text()').extract_first()
                url = div.xpath('.//div[@class="news_title"]/h3/a/@href').extract_first()
                imgUrl = div.xpath('./a/img/@src').extract_first()
                tag = div.xpath('.//div[@class="news_tag"]//text()').extract()
                tags = []
                for t in tag:
                    t = t.strip('\n \t')
                    tags.append(t)
                tag = "".join(tags)
                # Read the title passed in through meta
                title = response.meta['title']
                # Create the item and store the parsed values in it
                item = WangspiderItem()
                item['head'] = head
                item['url'] = url
                item['imgUrl'] = imgUrl
                item['tag'] = tag
                item['title'] = title
                # Request the article URL to get the news content stored on that page
                yield scrapy.Request(url=url, callback=self.getContent, meta={'item': item})
                # print(head + ":" + url + ":" + imgUrl + ":" + tag)

        def getContent(self, response):
            # Get the item passed in through meta
            item = response.meta['item']
            # Parse the news text stored on the current page
            content_list = response.xpath('//div[@class="post_text"]/p/text()').extract()
            content = "".join(content_list)
            item['content'] = content
            yield item

        def closed(self, reason):
            # Called when the spider closes: shut the browser down
            self.bro.quit()

Middleware file

    import random
    import time

    from scrapy import signals
    from scrapy.http import HtmlResponse
    from scrapy.downloadermiddlewares.useragent import UserAgentMiddleware


    # User-agent pool (a dedicated downloader middleware class for the UA pool)
    # 1. Import the UserAgentMiddleware class
    class RandomUserAgent(UserAgentMiddleware):
        def process_request(self, request, spider):
            # Pick a random UA value from the list
            ua = random.choice(user_agent_list)
            # Write the UA value into the intercepted request. Plain assignment is
            # used because setdefault would keep a User-Agent that is already set
            request.headers['User-Agent'] = ua


    # Swap the IP of intercepted requests in bulk
    class Proxy(object):
        def process_request(self, request, spider):
            # Check the URL of the intercepted request (is the scheme http or https?)
            # request.url looks like: http://www.xxx.com
            h = request.url.split(':')[0]  # scheme of the request
            if h == 'https':
                ip = random.choice(PROXY_https)
                request.meta['proxy'] = 'https://' + ip
            else:
                ip = random.choice(PROXY_http)
                request.meta['proxy'] = 'http://' + ip


    class WangspiderDownloaderMiddleware(object):
        # Not all methods need to be defined. If a method is not defined,
        # scrapy acts as if the downloader middleware does not modify the
        # passed objects.

        @classmethod
        def from_crawler(cls, crawler):
            # This method is used by Scrapy to create your spiders.
            s = cls()
            crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
            return s

        def process_request(self, request, spider):
            # Called for each request that goes through the downloader
            # middleware.
            # Must either:
            # - return None: continue processing this request
            # - or return a Response object
            # - or return a Request object
            # - or raise IgnoreRequest: process_exception() methods of
            #   installed downloader middleware will be called
            return None

        # Intercept the response object (the response the downloader passes to the spider)
        # request: the request that produced the response
        # response: the intercepted response
        # spider: the instance of the spider class defined in the spider file
        def process_response(self, request, response, spider):
            # Replace the page data stored in the response object
            if request.url in ['http://news.163.com/domestic/', 'http://news.163.com/world/',
                               'http://news.163.com/air/', 'http://war.163.com/']:
                spider.bro.get(url=request.url)
                js = 'window.scrollTo(0,document.body.scrollHeight)'
                spider.bro.execute_script(js)
                time.sleep(3)  # give the browser some time to load the data
                # page_source now includes the dynamically loaded news data
                page_text = spider.bro.page_source
                # Replace the response object
                return HtmlResponse(url=spider.bro.current_url, body=page_text,
                                    encoding='utf-8', request=request)
            else:
                return response

        def process_exception(self, request, exception, spider):
            # Called when a download handler or a process_request()
            # (from other downloader middleware) raises an exception.
            # Must either:
            # - return None: continue processing this exception
            # - return a Response object: stops process_exception() chain
            # - return a Request object: stops process_exception() chain
            pass

        def spider_opened(self, spider):
            spider.logger.info('Spider opened: %s' % spider.name)

    PROXY_http = [
        '153.180.102.104:80',
        '195.208.131.189:56055',
    ]

    PROXY_https = [
        '120.83.49.90:9000',
        '95.189.112.214:35508',
    ]

    # Adjacent string literals are concatenated, so each first half ends with a space
    user_agent_list = [
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 "
        "(KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
        "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 "
        "(KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 "
        "(KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 "
        "(KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 "
        "(KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 "
        "(KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 "
        "(KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 "
        "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 "
        "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
    ]

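The proxy middleware chooses a proxy pool according to the scheme of the request URL. A minimal standalone sketch of that selection, using urllib.parse instead of splitting on ':', might look like the following. pick_proxy and PROXY_POOLS are hypothetical names, and the addresses are placeholders rather than working proxies.

```python
import random
from urllib.parse import urlsplit

# Hypothetical proxy pools keyed by URL scheme (placeholder addresses)
PROXY_POOLS = {
    "http": ["10.0.0.1:8080", "10.0.0.2:8080"],
    "https": ["10.0.0.3:8443"],
}


def pick_proxy(url, pools=PROXY_POOLS, rng=random):
    """Return a proxy URL whose scheme matches the scheme of the request URL."""
    scheme = urlsplit(url).scheme.lower()
    if scheme not in pools:
        # Fail loudly instead of silently falling back to the http pool
        raise ValueError(f"no proxy pool for scheme {scheme!r}")
    return f"{scheme}://{rng.choice(pools[scheme])}"
```

Inside a downloader middleware, the returned value is what would be assigned to request.meta['proxy'].
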
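Pipeline file

The network download speed and the database I/O speed are not the same,

so downloads may outpace database writes, and the slow writes then block the thread.

For that reason, items are written to the database asynchronously here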

    # Method 2: write to MySQL asynchronously
    import pymysql.cursors
    from twisted.enterprise import adbapi


    class MysqlTwistedPipeline(object):
        '''Write data to the MySQL database asynchronously'''

        # Initializer: the first method called when an object of this class is created
        def __init__(self, dbpool):
            self.dbpool = dbpool

        # A class method: Scrapy calls it before any instance exists, and it
        # builds the dbpool object that is then handed to __init__
        @classmethod
        def from_settings(cls, settings):  # the method name is fixed
            # Read the database connection parameters from settings and build a dict
            dbparms = dict(
                host=settings["MYSQL_HOST"],
                db=settings["MYSQL_DBNAME"],
                user=settings["MYSQL_USER"],
                password=settings["MYSQL_PASSWORD"],
                charset='utf8mb4',
                # Cursor class
                cursorclass=pymysql.cursors.DictCursor,
                # Whether to use Unicode strings
                use_unicode=True
            )
            # Connect through the connection pool that Twisted provides. The database
            # module does not have to be passed in as an object: give the
            # adbapi.ConnectionPool constructor the module name, e.g. "pymysql"
            dbpool = adbapi.ConnectionPool("pymysql", **dbparms)
            return cls(dbpool)

        def process_item(self, item, spider):
            # Insert the item into the database asynchronously with Twisted
            query = self.dbpool.runInteraction(self.do_insert, item)
            # item and spider are forwarded to handle_error; if they were not
            # passed here, handle_error would not need to accept them
            query.addErrback(self.handle_error, item, spider)
            # Return the item so that later pipelines (such as RedisPipeline) receive it
            return item

        def do_insert(self, cursor, item):
            # Run the actual insert statement; no explicit commit is needed,
            # Twisted commits automatically
            insert_sql = """insert into wangyi_content(head,url,imgUrl,tag,title,content)
                            VALUES(%s,%s,%s,%s,%s,%s)"""
            cursor.execute(insert_sql, (item["head"], item["url"], item["imgUrl"],
                                        item["tag"], item["title"], item["content"]))

        def handle_error(self, failure, item, spider):
            # An asynchronous insert failed
            if failure:
                print(failure)
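
The reasoning behind asynchronous writes can be tried out without Twisted or MySQL. The sketch below swaps in a different technique, a single worker thread from concurrent.futures, and uses an in-memory sqlite3 database as a stand-in for MySQL. BackgroundWriter and the news table are hypothetical names invented for this illustration.

```python
import sqlite3
from concurrent.futures import ThreadPoolExecutor


class BackgroundWriter:
    """Runs inserts on one worker thread so the caller never waits on the database."""

    def __init__(self, db_path=":memory:"):
        self._db_path = db_path
        self._conn = None  # created lazily on the worker thread
        self._pool = ThreadPoolExecutor(max_workers=1)
        self.errors = []

    def _connection(self):
        # sqlite3 connections must be used on the thread that created them
        if self._conn is None:
            self._conn = sqlite3.connect(self._db_path)
            self._conn.execute("CREATE TABLE IF NOT EXISTS news (head TEXT, url TEXT)")
        return self._conn

    def _insert(self, item):
        conn = self._connection()
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO news (head, url) VALUES (?, ?)",
                         (item["head"], item["url"]))

    def _count(self):
        return self._connection().execute("SELECT COUNT(*) FROM news").fetchone()[0]

    def _close_connection(self):
        if self._conn is not None:
            self._conn.close()
            self._conn = None

    def _record_error(self, future):
        exc = future.exception()
        if exc is not None:
            self.errors.append(exc)

    def submit(self, item):
        """Queue the insert and return at once; failures are recorded, not raised."""
        future = self._pool.submit(self._insert, item)
        future.add_done_callback(self._record_error)
        return item

    def count(self):
        # Runs on the worker thread, after every insert queued before it
        return self._pool.submit(self._count).result()

    def close(self):
        self._pool.submit(self._close_connection).result()
        self._pool.shutdown(wait=True)
```

As in the pipeline, submit hands the item straight back to the caller, and a failed insert is reported through a callback instead of interrupting the crawl.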

Item file

    import scrapy


    class WangspiderItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        head = scrapy.Field()
        url = scrapy.Field()
        imgUrl = scrapy.Field()
        tag = scrapy.Field()
        title = scrapy.Field()
        content = scrapy.Field()

Settings file

    BOT_NAME = 'wangSpider'
    SPIDER_MODULES = ['wangSpider.spiders']
    NEWSPIDER_MODULE = 'wangSpider.spiders'

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    # USER_AGENT = 'firstBlood (+http://www.yourdomain.com)'
    # Disguise the identity of the request carrier
    USER_AGENT = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36'

    # Obey robots.txt rules
    # ROBOTSTXT_OBEY = True
    ROBOTSTXT_OBEY = False  # ignore the robots protocol

    # Only show log messages of the given level
    LOG_LEVEL = 'ERROR'

    # Configure maximum concurrent requests performed by Scrapy (default: 16)
    # CONCURRENT_REQUESTS = 32

    # Configure a delay for requests for the same website (default: 0)
    # See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
    # See also autothrottle settings and docs
    # DOWNLOAD_DELAY = 3
    # The download delay setting will honor only one of:
    # CONCURRENT_REQUESTS_PER_DOMAIN = 16
    # CONCURRENT_REQUESTS_PER_IP = 16

    # Disable cookies (enabled by default)
    # COOKIES_ENABLED = False

    # Disable Telnet Console (enabled by default)
    # TELNETCONSOLE_ENABLED = False

    DOWNLOADER_MIDDLEWARES = {
        'wangSpider.middlewares.WangspiderDownloaderMiddleware': 543,
        'wangSpider.middlewares.RandomUserAgent': 542,
        # 'wangSpider.middlewares.Proxy': 541,
    }

    ITEM_PIPELINES = {
        'wangSpider.pipelines.MysqlTwistedPipeline': 300,
        'scrapy_redis.pipelines.RedisPipeline': 400,
    }

    REDIS_HOST = '192.168.31.125'
    REDIS_PORT = 6379
    REDIS_ENCODING = 'utf-8'

    MYSQL_HOST = 'xx'
    MYSQL_DBNAME = 'spider_db'
    MYSQL_USER = 'root'
    MYSQL_PASSWORD = 'xx'

    # Deduplication filter class: stores request fingerprints in a Redis set,
    # which makes request deduplication persistent
    DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"
    # Use the scheduler provided by scrapy-redis
    SCHEDULER = "scrapy_redis.scheduler.Scheduler"
    # Whether the scheduler state is persisted: when the spider finishes, should the
    # request queue and the fingerprint set in Redis be kept? True keeps the data,
    # False clears it
    SCHEDULER_PERSIST = True

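Running

    scrapy runspider wang.py

Redis must be cleared before each new crawl. After the spider stops, only dupefilter and items remain in the Redis database. dupefilter stores the fingerprints (hashes) of the requests that have already been crawled. If it is not cleared, the crawler filters out those URLs and does not crawl them again. So, to crawl again, clear Redis first.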
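
Instead of flushing the whole database, it may be enough to delete only the keys that belong to one spider. The helper below builds the redis-cli command for that. It assumes the default scrapy-redis key patterns (spider name followed by :requests, :dupefilter, and :items), which is an assumption worth checking with keys * in redis-cli before deleting anything. scrapy_redis_keys and reset_command are hypothetical names.

```python
def scrapy_redis_keys(spider_name):
    """Redis keys for one spider, assuming the default scrapy-redis key patterns."""
    return {
        "requests": f"{spider_name}:requests",      # pending request queue
        "dupefilter": f"{spider_name}:dupefilter",  # fingerprints of seen requests
        "items": f"{spider_name}:items",            # items stored by RedisPipeline
    }


def reset_command(spider_name, keep_items=True):
    """Build a redis-cli 'del' command that lets the spider crawl everything again."""
    keys = scrapy_redis_keys(spider_name)
    names = [keys["requests"], keys["dupefilter"]]
    if not keep_items:
        names.append(keys["items"])
    return "del " + " ".join(names)
```

For the spider in this article, reset_command('wang') yields a command that removes the queue and the fingerprint set while leaving the scraped items in place.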

Result