NLP: Bi-Encoder and Re-ranker (Cross-Encoder based re-ranker)

Retrieve & Re-Rank

https://www.sbert.net/examples/applications/retrieve_rerank/README.html

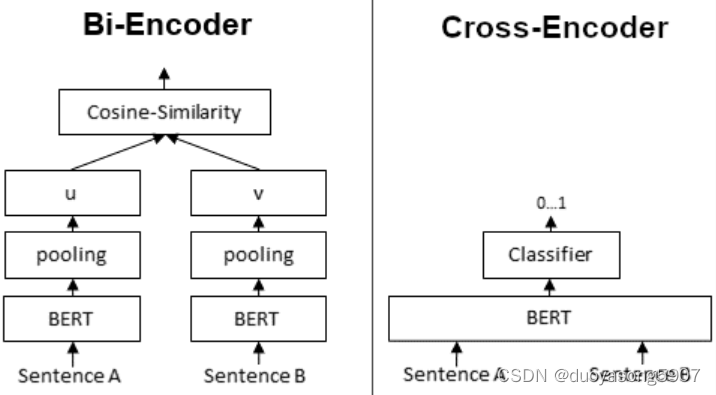

Bi-Encoder vs. Cross-Encoder

https://www.sbert.net/examples/applications/cross-encoder/README.html

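A Bi-Encoder encodes each input text separately with BERT and then selects texts by their cosine-similarity scores. A Cross-Encoder takes two sentences together and directly computes a relevance score for the pair.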
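The difference in interface can be sketched in a few lines. This is only an illustration: `encode`, `bi_encoder_score` and `cross_encoder_score` are hypothetical names, and the bag-of-words vector and token-overlap score are toy stand-ins for a BERT model, not what a real Bi-Encoder or Cross-Encoder computes. The point is the shape of the computation: the Bi-Encoder maps each text to a vector on its own, while the Cross-Encoder only ever sees the pair.

```python
import math
from collections import Counter


def encode(text: str) -> Counter:
    """Toy stand-in for a Bi-Encoder: one text in, one (sparse) vector out."""
    return Counter(text.lower().split())


def cosine(u: Counter, v: Counter) -> float:
    """Cosine similarity between two sparse bag-of-words vectors."""
    dot = sum(count * v[token] for token, count in u.items())
    norm = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(
        sum(c * c for c in v.values())
    )
    return dot / norm if norm else 0.0


def bi_encoder_score(a: str, b: str) -> float:
    # Each text is encoded independently, so the vectors could be
    # computed once, stored, and reused for every later comparison.
    return cosine(encode(a), encode(b))


def cross_encoder_score(a: str, b: str) -> float:
    # Toy stand-in for a Cross-Encoder: the pair is scored jointly and
    # no reusable per-text vector is produced (here: token-set overlap).
    ta, tb = set(a.lower().split()), set(b.lower().split())
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


if __name__ == "__main__":
    q, d = "how to install git", "install git on windows"
    print(bi_encoder_score(q, d), cross_encoder_score(q, d))
```

Because `encode` never looks at the other text, its output can be cached; `cross_encoder_score` has to be called again for every new pair.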

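How can Bi-Encoders and Cross-Encoders be used together? First use the Bi-Encoder to select the top 100 candidates, then use the Cross-Encoder to pick the best ones among them.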

Combining Bi- and Cross-Encoders

Cross-Encoders achieve higher performance than Bi-Encoders; however, they do not scale well to large datasets.
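A rough count of model forward passes shows why scaling is the issue. The sketch below only counts calls and ignores the cost of the vector comparisons themselves. The function names are hypothetical, and it assumes corpus embeddings are precomputed for the retrieval case.

```python
import math


def cross_encoder_pairs(n_sentences: int) -> int:
    """All-pairs comparison: one Cross-Encoder pass per unordered pair."""
    return math.comb(n_sentences, 2)


def bi_encoder_passes(n_sentences: int) -> int:
    """All-pairs comparison: one Bi-Encoder pass per sentence."""
    return n_sentences


def passes_per_query(corpus_size: int, top_k: int) -> dict:
    """Model passes needed to answer a single search query."""
    return {
        # Score the query against every document in the corpus.
        "cross_encoder_only": corpus_size,
        # Encode the query once, then re-rank only the top_k hits.
        "retrieve_then_rerank": 1 + min(top_k, corpus_size),
    }


if __name__ == "__main__":
    print(cross_encoder_pairs(10_000))   # 49995000
    print(bi_encoder_passes(10_000))     # 10000
    print(passes_per_query(1_000_000, 100))
```

For 10,000 sentences the Cross-Encoder needs close to 50 million pair evaluations, while the Bi-Encoder needs 10,000 encodings followed by cheap vector arithmetic.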

Here, it can make sense to combine Cross- and Bi-Encoders, for example in Information Retrieval / Semantic Search scenarios:

First, you use an efficient Bi-Encoder to retrieve e.g. the top-100 most similar sentences for a query.

Then, you use a Cross-Encoder to re-rank these 100 hits by computing the score for every (query, hit) combination.
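The two steps above can be sketched as a small pipeline. This is a minimal sketch, not the SBERT implementation: `toy_encode` and `toy_pair_score` are hypothetical toy stand-ins (bag-of-words and query-token coverage) so the example runs without downloading a model. With the sentence-transformers library, the two stages would typically be backed by `SentenceTransformer.encode` and `CrossEncoder.predict` instead.

```python
import math
from collections import Counter
from typing import Callable, List, Sequence, Tuple


def toy_encode(text: str) -> Counter:
    """Toy stand-in for a Bi-Encoder (assumption: bag-of-words vector)."""
    return Counter(text.lower().split())


def cosine(u: Counter, v: Counter) -> float:
    dot = sum(c * v[t] for t, c in u.items())
    norm = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(
        sum(c * c for c in v.values())
    )
    return dot / norm if norm else 0.0


def toy_pair_score(query: str, doc: str) -> float:
    """Toy stand-in for a Cross-Encoder: share of query tokens found in doc."""
    q_tokens = query.lower().split()
    d_tokens = set(doc.lower().split())
    return sum(t in d_tokens for t in q_tokens) / len(q_tokens) if q_tokens else 0.0


def retrieve_and_rerank(
    query: str,
    corpus: Sequence[str],
    retrieve_k: int = 100,
    final_k: int = 10,
    encode: Callable[[str], Counter] = toy_encode,
    pair_score: Callable[[str, str], float] = toy_pair_score,
) -> List[Tuple[str, float]]:
    # Stage 1: cheap retrieval. Corpus vectors would normally be computed
    # once offline; they are built inline here to keep the sketch short.
    corpus_vecs = [encode(doc) for doc in corpus]
    q_vec = encode(query)
    sims = sorted(
        ((cosine(q_vec, v), i) for i, v in enumerate(corpus_vecs)),
        key=lambda p: (-p[0], p[1]),
    )
    candidates = [i for _, i in sims[:retrieve_k]]

    # Stage 2: expensive re-ranking, applied only to the retrieved hits.
    scored = sorted(
        ((pair_score(query, corpus[i]), i) for i in candidates),
        key=lambda p: (-p[0], p[1]),
    )
    return [(corpus[i], s) for s, i in scored[:final_k]]


corpus = [
    "git is not recognized as a command",
    "how to write a dockerfile",
    "add a python interpreter in pycharm",
    "install git on windows",
]
hits = retrieve_and_rerank("install git", corpus, retrieve_k=2, final_k=2)
for doc, score in hits:
    print(f"{score:.2f}  {doc}")
```

The scoring functions are passed in as parameters so that the toy stand-ins can be swapped for real models without touching the pipeline logic; only `retrieve_k` documents ever reach the second, more expensive stage.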