
Implementing speech recognition (ASR) and audio recording in front-end JavaScript

Author: 不正经 | 2024-05-15 03:49:14


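Introduction

During my internship, my manager asked me to check whether audio recording and speech recognition could be done entirely in the web front end. A quick search turned up almost no tutorials on browser-side speech recognition, that is, speech-to-text.

One option is to record in the front end, upload the audio to a back end, and have the back end call a speech-to-text API such as Baidu's. That means writing a back end as well. Calling the Baidu API directly from the front end runs into cross-origin (CORS) restrictions, and putting the API key in front-end code never felt safe. On top of that, Baidu's recognition accuracy is not very high.

That is what led to this post: speech recognition built on the browser's native Web Speech API.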

Environment

| Name | Version |
|---|---|
| node | v17.1.0 |
| npm | 8.1.4 |
| @vue/cli | 4.5.15 |
| vue | 2 |
| vant | 2 |

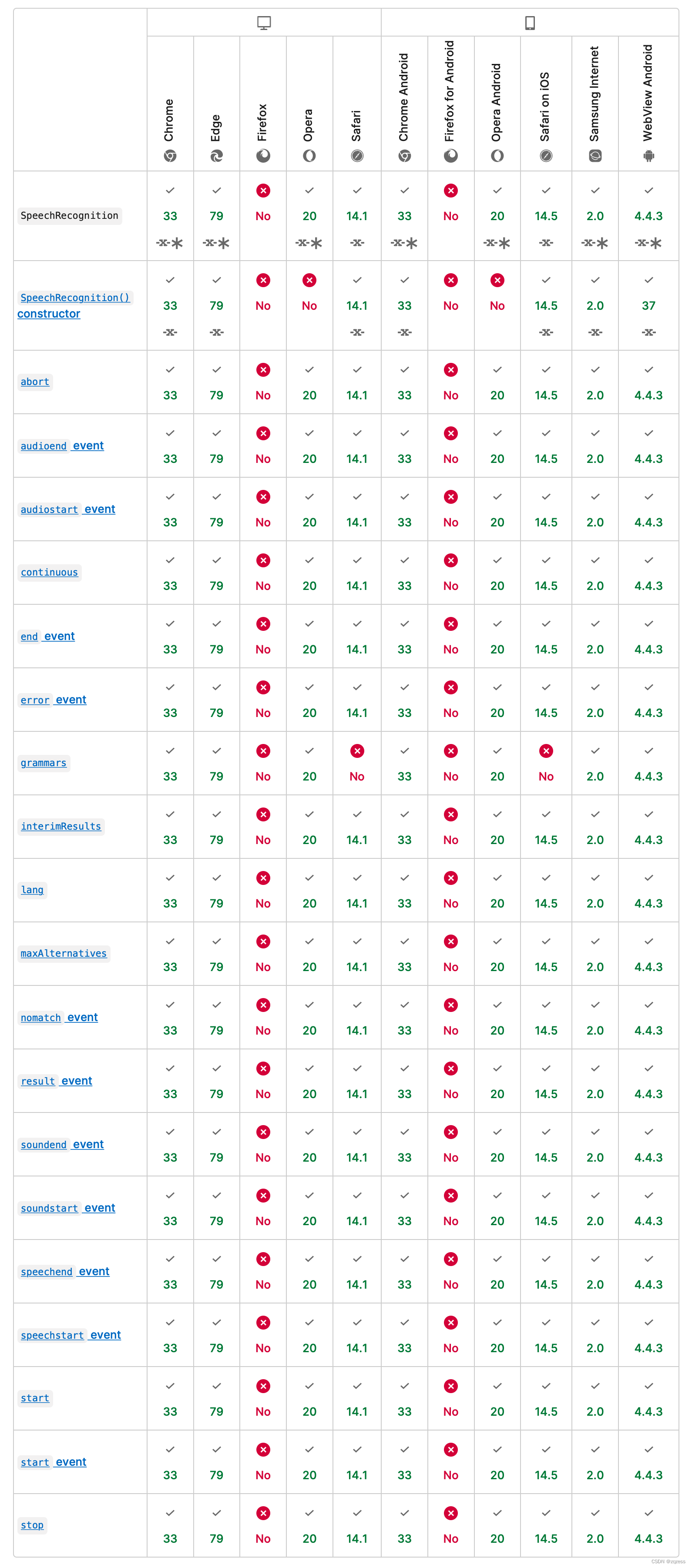

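Browser compatibility

As the chart above shows, most common browsers support the API. In actual testing, however, Chrome worked only intermittently because of network issues (Chrome's implementation performs recognition on Google's servers, so it depends on being able to reach them). Edge on PC performed best, Android devices were almost entirely unusable, and Safari on iOS worked flawlessly.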
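Because support varies this much, it is worth detecting the API before using it. The following is a minimal sketch, not taken from the original project. It assumes that only the standard `SpeechRecognition` name and the `webkit` prefix (used by Chrome, Edge and Safari) matter in practice. The helper names `getSpeechRecognition` and `createRecognizer` are hypothetical. Both take the global object as a parameter, so they can also be exercised outside a browser.

```javascript
// Return the SpeechRecognition constructor exposed by the given global object
// (normally `window`), or null when the browser offers no speech recognition.
function getSpeechRecognition(globalObj) {
  return globalObj.SpeechRecognition || globalObj.webkitSpeechRecognition || null;
}

// Create a recognizer configured like the one in this article:
// continuous listening, interim results, Mandarin (mainland China).
function createRecognizer(globalObj, lang = "cmn-Hans-CN") {
  const Ctor = getSpeechRecognition(globalObj);
  if (!Ctor) return null; // caller should fall back, e.g. record and upload instead
  const recognition = new Ctor();
  recognition.continuous = true;     // keep listening until stop() is called
  recognition.interimResults = true; // also deliver results that are not final yet
  recognition.lang = lang;
  return recognition;
}
```

In a page this would be used as `const rec = createRecognizer(window); if (rec) rec.start();`. Returning `null` instead of throwing leaves the decision to the caller, for example to show a message on Android where recognition proved unreliable.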

Recording and speech recognition

HZRecorder.js wrapper

Here is a ready-made wrapper I found online:

HZRecorder.js

```javascript
// HZRecorder.js
// Records microphone audio with the Web Audio API and exports it as a mono WAV Blob.
// Note: ScriptProcessorNode (createScriptProcessor) is deprecated in favor of
// AudioWorklet, but it is still widely supported and keeps this file self-contained.
function HZRecorder (stream, config) {
  config = config || {}
  config.sampleBits = config.sampleBits || 16 // output bit depth: 8 or 16
  config.sampleRate = config.sampleRate || 16000 // target sample rate: 16 kHz

  const AudioContextCtor = window.AudioContext || window.webkitAudioContext
  const context = new AudioContextCtor()
  const audioInput = context.createMediaStreamSource(stream)
  const createScript = context.createScriptProcessor || context.createJavaScriptNode
  // buffer size of 4096 frames, 1 input channel, 1 output channel
  const recorder = createScript.apply(context, [4096, 1, 1])

  const audioData = {
    size: 0, // total number of buffered samples
    buffer: [], // recorded chunks (Float32Array)
    inputSampleRate: context.sampleRate, // input sample rate (from the AudioContext)
    inputSampleBits: 16, // input bit depth: 8 or 16
    outputSampleRate: config.sampleRate, // requested output sample rate
    outputSampleBits: config.sampleBits, // output bit depth: 8 or 16
    input: function (data) {
      this.buffer.push(new Float32Array(data))
      this.size += data.length
    },
    // Integer decimation factor (keep every n-th sample), never less than 1
    compression: function () {
      return Math.max(1, Math.floor(this.inputSampleRate / this.outputSampleRate))
    },
    compress: function () { // merge, then downsample
      // Merge all chunks into one array
      const data = new Float32Array(this.size)
      let offset = 0
      for (let i = 0; i < this.buffer.length; i++) {
        data.set(this.buffer[i], offset)
        offset += this.buffer[i].length
      }
      // Downsample by simple decimation (no low-pass filter)
      const compression = this.compression()
      const length = Math.floor(data.length / compression)
      const result = new Float32Array(length)
      for (let index = 0, j = 0; index < length; index++, j += compression) {
        result[index] = data[j]
      }
      return result
    },
    encodeWAV: function () {
      // The real output rate is the input rate divided by the integer factor
      // (48000 / 3 = 16000, but 44100 / 2 = 22050), so write that into the header
      const sampleRate = Math.floor(this.inputSampleRate / this.compression())
      const sampleBits = Math.min(this.inputSampleBits, this.outputSampleBits)
      const bytes = this.compress()
      const dataLength = bytes.length * (sampleBits / 8)
      const buffer = new ArrayBuffer(44 + dataLength)
      const data = new DataView(buffer)
      const channelCount = 1 // mono
      let offset = 0
      const writeString = function (str) {
        for (let i = 0; i < str.length; i++) {
          data.setUint8(offset + i, str.charCodeAt(i))
        }
      }
      // RIFF chunk identifier
      writeString('RIFF')
      offset += 4
      // Bytes from the next field to the end of the file, i.e. file size - 8
      data.setUint32(offset, 36 + dataLength, true)
      offset += 4
      // WAVE format marker
      writeString('WAVE')
      offset += 4
      // "fmt " sub-chunk marker
      writeString('fmt ')
      offset += 4
      // Size of the fmt sub-chunk, 0x10 = 16 for PCM
      data.setUint32(offset, 16, true)
      offset += 4
      // Audio format (1 = PCM samples)
      data.setUint16(offset, 1, true)
      offset += 2
      // Number of channels
      data.setUint16(offset, channelCount, true)
      offset += 2
      // Sample rate: samples per second per channel
      data.setUint32(offset, sampleRate, true)
      offset += 4
      // Byte rate (average bytes per second) = channels × sample rate × bits per sample / 8
      data.setUint32(offset, channelCount * sampleRate * (sampleBits / 8), true)
      offset += 4
      // Block align: bytes per sample frame = channels × bits per sample / 8
      data.setUint16(offset, channelCount * (sampleBits / 8), true)
      offset += 2
      // Bits per sample
      data.setUint16(offset, sampleBits, true)
      offset += 2
      // "data" sub-chunk marker
      writeString('data')
      offset += 4
      // Size of the sample data, i.e. file size - 44
      data.setUint32(offset, dataLength, true)
      offset += 4
      // Write the samples
      if (sampleBits === 8) {
        // 8-bit WAV is unsigned: map [-1, 1] to [0, 255]
        for (let i = 0; i < bytes.length; i++, offset++) {
          const s = Math.max(-1, Math.min(1, bytes[i]))
          let val = s < 0 ? s * 0x8000 : s * 0x7FFF
          val = parseInt(255 / (65535 / (val + 32768)))
          data.setUint8(offset, val)
        }
      } else {
        // 16-bit WAV is signed little-endian
        for (let i = 0; i < bytes.length; i++, offset += 2) {
          const s = Math.max(-1, Math.min(1, bytes[i]))
          data.setInt16(offset, s < 0 ? s * 0x8000 : s * 0x7FFF, true)
        }
      }
      return new Blob([data], { type: 'audio/wav' })
    }
  }

  // Start recording
  this.start = function () {
    // Start a fresh take: drop samples left over from a previous recording
    audioData.buffer = []
    audioData.size = 0
    // An AudioContext created without a user gesture may start out "suspended"
    if (context.state === 'suspended') context.resume()
    audioInput.connect(recorder)
    // Chrome only fires onaudioprocess while the node is connected to the destination
    recorder.connect(context.destination)
  }
  // Stop recording
  this.stop = function () {
    recorder.disconnect()
  }
  // Stop and return the recording as a WAV Blob
  this.getBlob = function () {
    this.stop()
    return audioData.encodeWAV()
  }
  // Play the recording back in an <audio> element
  this.play = function (audio) {
    audio.src = window.URL.createObjectURL(this.getBlob())
  }
  // Return the WAV Blob for uploading
  this.upload = function () {
    return this.getBlob()
  }
  // Audio capture: called once per 4096-frame buffer
  recorder.onaudioprocess = function (e) {
    audioData.input(e.inputBuffer.getChannelData(0))
  }
  return this
}

export { HZRecorder }
```


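HZRecorder's `compress` step keeps every n-th sample, where n is the input rate divided by the output rate and rounded down. With a 44.1 kHz input, the result is therefore effectively 22.05 kHz rather than 16 kHz, and no low-pass filtering is applied before samples are discarded. Its `encodeWAV` step writes the standard 44-byte RIFF/WAVE header followed by little-endian PCM samples.

Below is a stand-alone sketch of the same two steps for 16-bit mono output. It assumes Float32 samples in [-1, 1], as delivered by `getChannelData`. It swaps in a simple averaging (box filter) downsampler instead of plain decimation; that is my own choice, not what HZRecorder does. The function names `downsample` and `encodeWav16` are hypothetical. Only `ArrayBuffer` and `DataView` are used, so the sketch also runs under Node.js.

```javascript
// Downsample Float32 PCM from inRate to outRate by averaging the input samples
// that fall into each output sample period (a crude low-pass filter).
function downsample(samples, inRate, outRate) {
  if (outRate >= inRate) return Float32Array.from(samples); // nothing to do
  const ratio = inRate / outRate;
  const outLength = Math.floor(samples.length / ratio);
  const out = new Float32Array(outLength);
  for (let i = 0; i < outLength; i++) {
    const start = Math.floor(i * ratio);
    const end = Math.min(samples.length, Math.floor((i + 1) * ratio));
    let sum = 0;
    for (let j = start; j < end; j++) sum += samples[j];
    out[i] = end > start ? sum / (end - start) : 0;
  }
  return out;
}

// Encode mono Float32 samples in [-1, 1] as a 16-bit PCM WAV file (ArrayBuffer).
function encodeWav16(samples, sampleRate) {
  const bytesPerSample = 2;
  const dataLength = samples.length * bytesPerSample;
  const view = new DataView(new ArrayBuffer(44 + dataLength));
  const writeString = (offset, str) => {
    for (let i = 0; i < str.length; i++) view.setUint8(offset + i, str.charCodeAt(i));
  };
  writeString(0, "RIFF");
  view.setUint32(4, 36 + dataLength, true);               // file size minus 8
  writeString(8, "WAVE");
  writeString(12, "fmt ");
  view.setUint32(16, 16, true);                           // fmt chunk size for PCM
  view.setUint16(20, 1, true);                            // audio format 1 = PCM
  view.setUint16(22, 1, true);                            // channels: mono
  view.setUint32(24, sampleRate, true);                   // samples per second
  view.setUint32(28, sampleRate * bytesPerSample, true);  // byte rate
  view.setUint16(32, bytesPerSample, true);               // block align (bytes per frame)
  view.setUint16(34, 16, true);                           // bits per sample
  writeString(36, "data");
  view.setUint32(40, dataLength, true);                   // size of the sample data
  for (let i = 0; i < samples.length; i++) {
    const s = Math.max(-1, Math.min(1, samples[i]));      // clamp to [-1, 1]
    view.setInt16(44 + i * 2, s < 0 ? s * 0x8000 : s * 0x7fff, true);
  }
  return view.buffer;
}
```

In the browser the result could be wrapped as:

`new Blob([encodeWav16(downsample(samples, context.sampleRate, 16000), Math.min(context.sampleRate, 16000))], { type: 'audio/wav' })`

Whichever resampler is used, the rate written into the header must be the rate the samples actually have. Otherwise playback runs too fast or too slow.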
Vue.js

```vue
<template>
  <div id="page">
    <div class="content">
      <div>
        <div style="display: block;align-items: center;text-align: center;">
          <label>Result: {{ result }}</label>
        </div>
        <div style="display: block;align-items: center;text-align: center;margin: 20px 0 20px 0">
          <label>Interim result: {{ result2 }}</label>
        </div>
        <audio ref="audiodiv" controls />
      </div>
      <div style="display: inline-flex;margin: 20px 0 20px 0">
        <van-button type="warning" @click="speakClick" square>Recognition: start speaking</van-button>
        <van-button type="warning" @click="speakEndClick" square>Recognition: stop speaking</van-button>
      </div>
      <div>
        <van-button type="warning" @click="speakClick2" square>Recording: start speaking</van-button>
        <van-button type="warning" @click="speakEndClick2" square>Recording: stop speaking</van-button>
      </div>
    </div>
  </div>
</template>

<script>
import { HZRecorder } from '../js/HZRecorder'
import { Toast } from 'vant'

export default {
  name: 'home',
  data () {
    return {
      recorder: null, // HZRecorder instance, created once microphone access is granted
      recognition: null, // SpeechRecognition instance
      result: '', // final recognized text
      result2: '' // interim (not yet final) text
    }
  },
  created () {
    // navigator.mediaDevices is undefined outside a secure context (HTTPS or localhost)
    if ((navigator.mediaDevices && navigator.mediaDevices.getUserMedia) || navigator.getUserMedia ||
        navigator.webkitGetUserMedia || navigator.mozGetUserMedia) {
      // Request the microphone only (no camera)
      this.getUserMedia({ video: false, audio: true })
    } else {
      console.log('This browser cannot access media devices')
    }
  },
  methods: {
    speakClick () {
      const vue = this
      vue.result = ''
      vue.result2 = ''
      console.log('start recognition')
      const SpeechRecognition = window.SpeechRecognition || window.webkitSpeechRecognition ||
        window.mozSpeechRecognition || window.msSpeechRecognition || window.oSpeechRecognition
      if (!SpeechRecognition) {
        Toast('Speech recognition is not supported in this browser')
        return
      }
      vue.recognition = new SpeechRecognition()
      vue.recognition.continuous = true // keep listening until stop() is called
      vue.recognition.interimResults = true // also deliver non-final results
      vue.recognition.lang = 'cmn-Hans-CN' // Mandarin (mainland China)

      let finalTranscript = ''
      vue.recognition.onstart = function () {
        console.log('Recognition started...')
      }
      vue.recognition.onerror = function (event) {
        // e.g. 'no-speech', 'audio-capture', 'not-allowed'
        console.log('Recognition error:', event.error)
      }
      vue.recognition.onresult = function (event) {
        // Interim text is rebuilt on every event; final text accumulates
        let interimTranscript = ''
        for (let i = event.resultIndex; i < event.results.length; ++i) {
          if (event.results[i].isFinal) {
            finalTranscript += event.results[i][0].transcript
          } else {
            interimTranscript += event.results[i][0].transcript
          }
        }
        vue.result = finalTranscript
        vue.result2 = interimTranscript
      }
      vue.recognition.onend = function () {
        console.log('Recognition ended')
      }
      // Start only after all handlers are attached
      vue.recognition.start()
    },
    speakEndClick () {
      console.log('end recognition')
      if (this.recognition) this.recognition.stop() // onend fires once recognition stops
    },
    speakClick2 () {
      if (!this.recorder) {
        Toast('The microphone is not ready yet')
        return
      }
      console.log('start recording')
      this.recorder.start()
    },
    speakEndClick2 () {
      if (!this.recorder) return
      console.log('stop recording')
      // FormData ready for uploading to a back end (field name 'speechFile')
      const audioData = new FormData()
      audioData.append('speechFile', this.recorder.getBlob())
      // Stop recording and load the WAV into the <audio> element
      this.recorder.play(this.$refs.audiodiv)
    },
    getUserMedia (constraints) {
      const onStream = stream => {
        this.success(stream)
        this.recorder = new HZRecorder(stream)
        console.log('Recorder initialized')
      }
      const onError = err => this.error(err)
      if (navigator.mediaDevices && navigator.mediaDevices.getUserMedia) {
        // Current standard API, returns a Promise
        navigator.mediaDevices.getUserMedia(constraints).then(onStream).catch(onError)
      } else {
        // Legacy APIs (WebKit, Firefox, old standard) take callbacks instead of returning a Promise
        const legacyGetUserMedia = navigator.webkitGetUserMedia || navigator.mozGetUserMedia || navigator.getUserMedia
        legacyGetUserMedia.call(navigator, constraints, onStream, onError)
      }
    },
    // Success callback
    success (stream) {
      console.log('Permission granted, microphone opened')
    },
    // Error callback
    error (error) {
      console.log('Failed to access media devices:', error.name, error.message)
    }
  }
}
</script>

<style scoped>
#page {
  position: absolute;
  display: flex;
  width: 100%;
  height: 100%;
  align-items: center;
  text-align: center;
  vertical-align: middle;
}
.content {
  width: 30%;
  height: 30%;
  margin: 0 auto;
}
</style>
```

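In the `onresult` handler, `event.results` is a list of results. Each result holds alternatives, and the first alternative's `transcript` is the most likely text. `isFinal` marks results that will not change any more, and `event.resultIndex` is the first entry that changed in this event.

A common pitfall is declaring the interim string outside the handler. Interim text from earlier events then keeps piling up. Rebuilding it on every event avoids that.

The sketch below pulls that logic into a pure helper. The name `splitTranscripts` is hypothetical. It accepts any array-like shaped like `SpeechRecognitionResultList`, so it can be tested with plain arrays.

```javascript
// Split one SpeechRecognitionEvent into newly finalized text and current interim text.
// `results` is array-like: results[i].isFinal and results[i][0].transcript.
function splitTranscripts(results, resultIndex) {
  let finalText = "";
  let interimText = ""; // rebuilt on every event, never carried over
  for (let i = resultIndex; i < results.length; i++) {
    const best = results[i][0].transcript; // first alternative = most likely
    if (results[i].isFinal) finalText += best;
    else interimText += best;
  }
  return { finalText, interimText };
}
```

Inside the handler, the caller keeps only the final text across events:

`finalTranscript += splitTranscripts(event.results, event.resultIndex).finalText`

The interim part is displayed as-is and replaced on the next event.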

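Only `navigator.mediaDevices.getUserMedia` returns a Promise. The older `navigator.getUserMedia` and its `webkit`/`moz`-prefixed variants take success and error callbacks instead, so chaining `.then` on them fails.

The following minimal sketch normalizes both styles to a Promise. It assumes a navigator-like object is passed in. The name `requestMicrophone` is hypothetical.

```javascript
// Ask for microphone access and always return a Promise<MediaStream>,
// whether the browser has the modern promise API or only a legacy callback API.
function requestMicrophone(nav, constraints = { audio: true, video: false }) {
  if (nav.mediaDevices && typeof nav.mediaDevices.getUserMedia === "function") {
    return nav.mediaDevices.getUserMedia(constraints); // standard, promise-based
  }
  const legacy = nav.getUserMedia || nav.webkitGetUserMedia || nav.mozGetUserMedia;
  if (!legacy) {
    return Promise.reject(new Error("getUserMedia is not supported in this browser"));
  }
  // Legacy variants are callback-based: (constraints, onSuccess, onError)
  return new Promise((resolve, reject) => legacy.call(nav, constraints, resolve, reject));
}
```

With this wrapper, component code can always write:

`requestMicrophone(navigator).then(stream => { this.recorder = new HZRecorder(stream) })`

That works no matter which API the browser provides.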
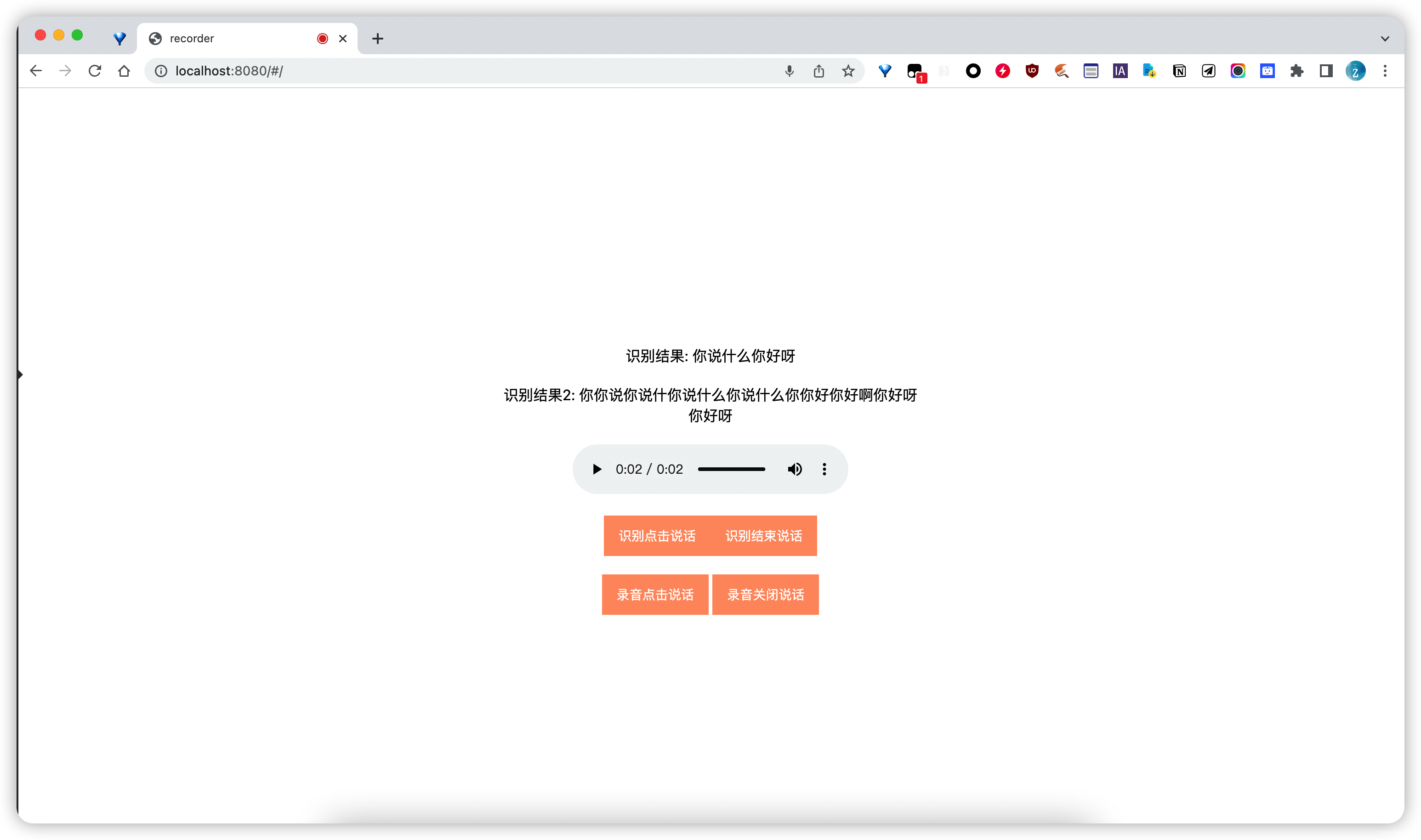

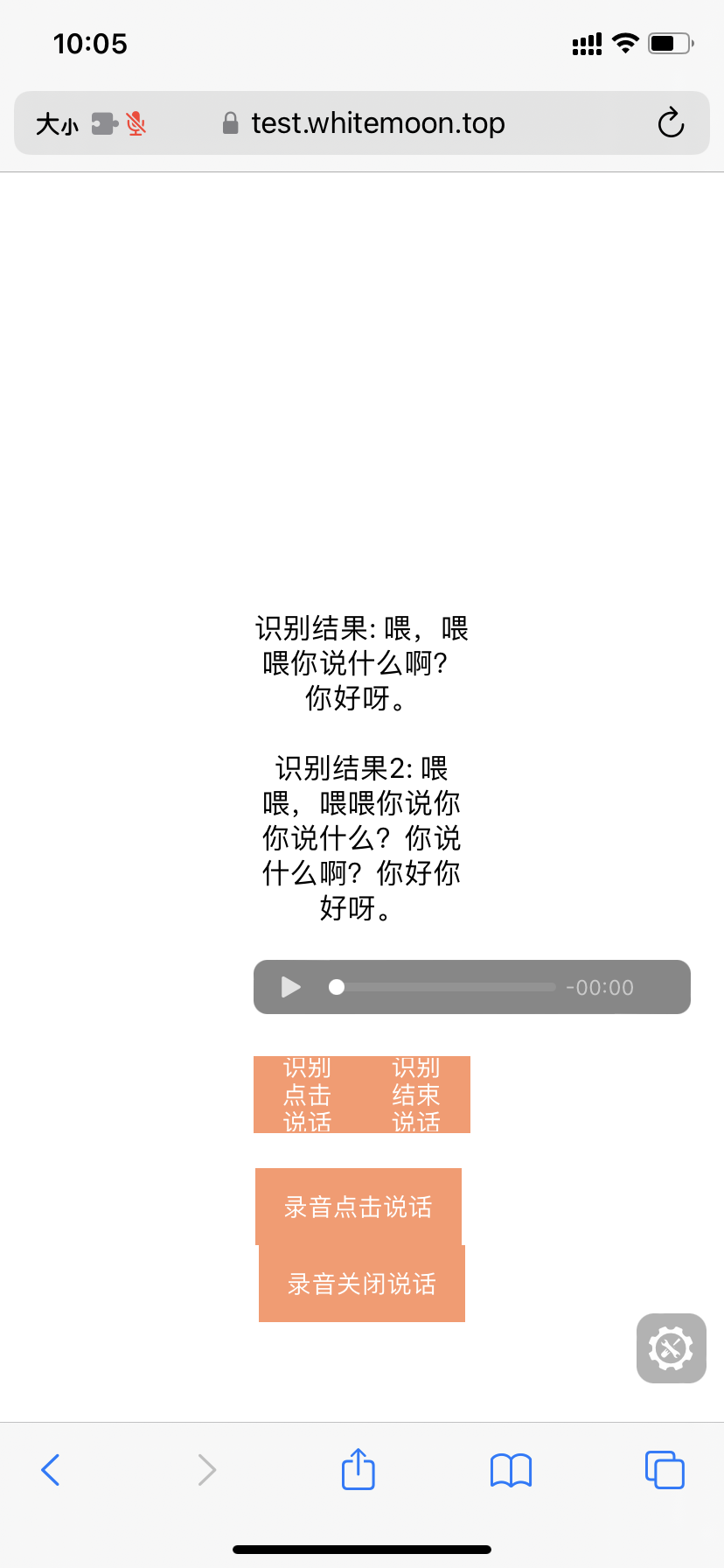

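Recognition results

Chrome

Safari

iOS Safari

Important

On localhost the page can request and open the microphone. In any other environment the page must be served over HTTPS before microphone permission can be requested.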
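The underlying rule is that `getUserMedia` is only available in a secure context. That means pages served over HTTPS, plus `http://localhost`, which browsers treat as trustworthy. In an insecure context `navigator.mediaDevices` is typically `undefined`, so an unguarded `navigator.mediaDevices.getUserMedia` check throws a TypeError instead of simply being false.

A small check that yields a clearer message might look like the following. It assumes the global object is passed in, and `explainMicrophoneSupport` is a hypothetical name.

```javascript
// Return null when the microphone can be requested, otherwise a human-readable reason.
function explainMicrophoneSupport(globalObj) {
  if (!globalObj.isSecureContext) {
    return "Microphone access requires HTTPS (or localhost).";
  }
  const nav = globalObj.navigator || {};
  if (!nav.mediaDevices || typeof nav.mediaDevices.getUserMedia !== "function") {
    return "This browser does not support getUserMedia.";
  }
  return null; // OK to call navigator.mediaDevices.getUserMedia
}
```

Calling `explainMicrophoneSupport(window)` before requesting permission lets the page tell a user on a plain-HTTP test server why the permission prompt never appears.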


Originally published on my blog, whitemoon.top.

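Disclaimer: This article was contributed by a user and does not represent the position of the wpsshop blog. Copyright belongs to the original author, and this site assumes no legal liability for it. If you find infringing content, please contact us. When reposting, please credit the source: https://www.wpsshop.cn/w/不正经/article/detail/571401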
