热门标签

热门文章

- 1tcpdump抓linux网络包,使用wireshark分析_linux抓包命令并生成文件

- 2AI大模型探索之路-训练篇16:大语言模型预训练-微调技术之LoRA

- 3uniapp 查找不到uview-ui文件怎么办?_文件查找失败:'uview-ui

- 4Mysql盲注总结_mysql 盲注

- 5NLP系列(5)_从朴素贝叶斯到N-gram语言模型_topical n gram 模型输入

- 6SCUI Admin:快速构建企业级中后台前端的利器 让前端开发更快乐。

- 7大语言模型Prompt工程之使用GPT3.5生成图数据库Cypher_gpt3.5怎么画图

- 8【算法】逃离大迷宫

- 9深入解析NLP情感分析技术:从篇章到属性_情感分析模型

- 10(2023,SSM,门控 MLP,选择性输入,上下文压缩)Mamba:具有选择性状态空间的线性时间序列建模_选择性状态空间序列模型

当前位置: article > 正文

基于聚类的文本摘要实现_利用聚类进行文本摘要

作者:不正经 | 2024-05-15 13:43:32

赞

踩

利用聚类进行文本摘要

基于聚类的文本摘要实现

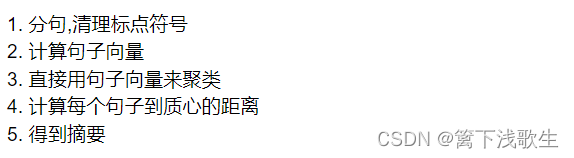

实现步骤:

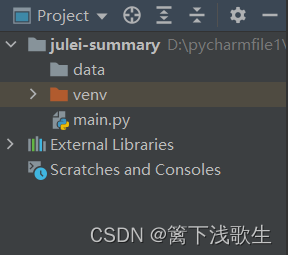

一、文件目录

二、聚类摘要(main.py)

import re import torch from transformers import BertTokenizer#中文分词器 from transformers import AlbertModel#Albert预训练模型获得embedding from nltk.cluster import KMeansClusterer#k均值聚类 from scipy.spatial import distance_matrix#距离计算模块distance import nltk import pandas as pd content = """ 内容 """ title = '摘要' # ********** 分句,清理标点符号 ********** # # 分句,清理标点符号 def split_document(para): line_split = re.split(r'[|。|!|;|?|]|\n|,', para.strip()) _seg_sents = [re.sub(r'[^\w\s]','',sent) for sent in line_split] _seg_sents = [sent for sent in _seg_sents if sent != ''] return _seg_sents # sentences=['新冠肺炎疫情暴发以来', '频繁出现的无症状感染者病例', '再次引起恐慌', '近日'...] sentences = split_document(content) # ********** 计算句子向量 ********** # #Mean Pooling:考虑attention mask以获得正确的平均值 def mean_pooling(model_output, attention_mask): # :所有句子的embedding:token_embeddings=[bs,sentence-len+2,hidden_dim]=[2, 15, 312] token_embeddings = model_output[0] # 扩展attention mask维度:[bs,sentence-len+2]--->[bs,sentence-len+2,hidden_dim] input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float() a = torch.sum(token_embeddings * input_mask_expanded) return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9) # 下载模型 tokenizer = BertTokenizer.from_pretrained('clue/albert_chinese_tiny') model = AlbertModel.from_pretrained('clue/albert_chinese_tiny') # 得到句子的embedding def _get_sentence_embeddings(sentences): # Tokenize sentences # sentences=['新冠肺炎疫情暴发以来', '频繁出现的无症状感染者病例']-->encoded_input : 输出3个tensor #加开始和结束后的'input_ids': tensor([[101,3173,1094,5511,4142,4554,2658,3274,1355,809,3341,102,0,0,0],[101,7574,5246,1139,4385,4638,3187,4568,4307,2697,3381,5442,4567,891,102]]) #'token_type_ids': tensor([[0,0,0,0,0,0,0,0,0,0,0,0,0,0,0],[0,0,0,0,0,0,0,0,0,0,0,0,0,0,0]]) #'attention_mask': tensor([[1,1,1,1,1,1,1,1,1,1,1,1,0,0,0],[1,1,1,1,1,1,1,1,1,1,1,1,1,1,1]]) encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt') # Compute token embeddings # with torch.no_grad的作用:在该模块下,所有计算得出的tensor的requires_grad都自动设置为False。 # model_output:输出2个tensor # last_hidden_status:[bs,sentence-len+2,hidden_dim]=[2, 15, 312] # pooler_output:[bs,hidden_dim]=[2, 312] with torch.no_grad(): model_output = model(**encoded_input) # 考虑attention mask以获得正确的平均值 Mean Pooling=[bs,hidden_dim] sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask']) return sentence_embeddings # 得到句子的embedding=[bs,hidden_dim] sentence_embeddings = _get_sentence_embeddings(sentences) # ********** 直接用句子向量来聚类 ********** # NUM_CLUSTERS=10 # 分为多少组 iterations=25 #迭代次数 X = sentence_embeddings.numpy() # k均值聚类 kclusterer = KMeansClusterer(NUM_CLUSTERS, distance=nltk.cluster.util.cosine_distance,repeats=iterations,avoid_empty_clusters=True) # assigned_clusters =[6, 6, 4, 8, 6, 8, 6, 2...] assigned_clusters = kclusterer.cluster(X, assign_clusters=True) # 计算所有句子的分组 # ********** 计算每个句子到质心的距离 ********** # # data: # sentence embedding cluster centroid distance_from_centroid #0 新冠肺炎疫情暴发以来 [-0.2, 0.3,....] 2 [-0.17, 0.20,...] 3.476364 #1 频繁出现的无症状感染者病例 [-0.2, 0.1,....] 9 [-0.19, -0.16,...] 3.096487 data = pd.DataFrame(sentences) data.columns=['sentence'] data['embedding'] = sentence_embeddings.numpy().tolist()# .tolist()数组转化为列表 # 句子分为10簇 data['cluster']=pd.Series(assigned_clusters, index=data.index) # 每个质心的向量:计算求平均 data['centroid']=data['cluster'].apply(lambda x: kclusterer.means()[x]) # 计算sentence的embedding和质心的距离 def distance_from_centroid(row): return distance_matrix([row['embedding']], [row['centroid'].tolist()])[0][0] data['distance_from_centroid'] = data.apply(distance_from_centroid, axis=1) # ********** 得到摘要 ********** # # 1. 按照cluster 进行分组 # 2. 组内排序 # 3. 按照文章顺序顺序取原来的句子 summary=data.sort_values('distance_from_centroid',ascending = True).groupby('cluster').head(1).sort_index()['sentence'].tolist() print(summary)

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

实验结果

摘要10句

声明:本文内容由网友自发贡献,不代表【wpsshop博客】立场,版权归原作者所有,本站不承担相应法律责任。如您发现有侵权的内容,请联系我们。转载请注明出处:https://www.wpsshop.cn/w/不正经/article/detail/573101

推荐阅读

相关标签