
2. HDFS Introduction and Fully Distributed HDFS Setup


3. HDFS Introduction

3.1 HDFS Overview

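- Background

As data volumes grow, a single machine can no longer hold all the data, so the data is spread across a cluster of machines. Files scattered over many machines are hard to manage and maintain, which creates the need for a system that manages files across the machines of a cluster: a distributed file management system. HDFS is one such system.

- Definition

HDFS (Hadoop Distributed File System) is a distributed file system for storing files, which it locates through a directory tree. It is built on a distributed cluster in which each server has its own role.

- Use cases

HDFS suits write-once, read-many workloads, which makes it a good fit for big data analytics.

- Advantages of HDFS

- Runs on inexpensive commodity machines

- High fault tolerance

  - Data is automatically stored as multiple replicas; adding replicas increases fault tolerance.

  - When a replica of a block is lost, HDFS automatically restores it to keep the replica count constant.

- Suited to storing large volumes of data

  - A typical file on HDFS ranges from gigabytes to terabytes in size. (Storage units in ascending order: MB, GB, TB, PB, EB, ZB.)

  - HDFS supports the storage of large files.

  - A single HDFS instance can support tens of millions of files.

- Simple consistency model

  - HDFS applications follow a write-once, read-many file access model.

  - This simplifies data consistency and makes high-throughput data access possible.

  - MapReduce applications and web crawlers fit this model well.

- Disadvantages of HDFS

- Not suited to low-latency data access.

- Cannot store large numbers of small files efficiently.

- Does not support concurrent writes to the same file.

- Does not support random modification of files.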

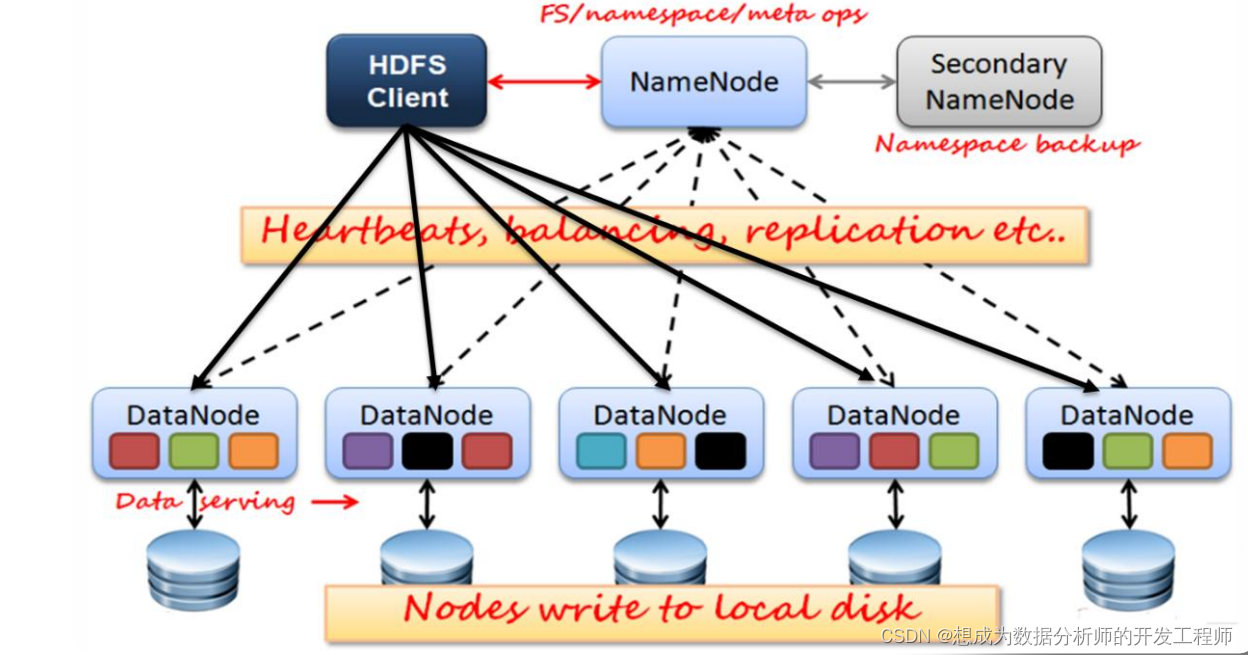

3.2 HDFS Architecture

HDFS architecture diagram:

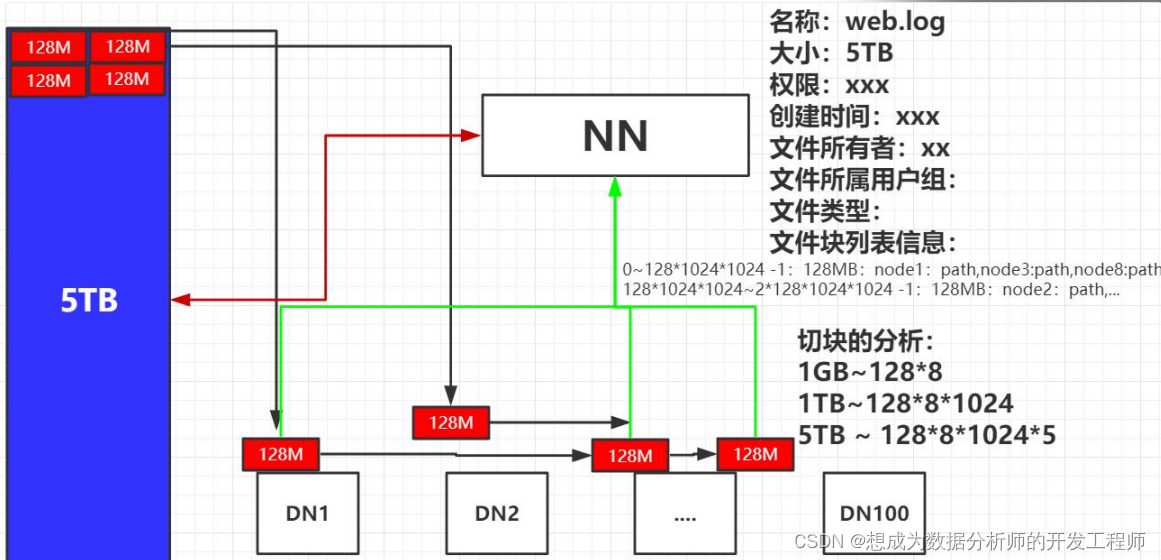

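Question: with 100 servers of 8 TB each, for 800 TB of total storage, how is a 5 TB file stored?

The file is split into blocks of 128 MB each: 128 MB × 8 = 1 GB, and 128 MB × 8 × 1024 = 1 TB, so 1 TB holds 8192 blocks.

A 5 TB file is therefore split into 8192 × 5 = 40960 blocks of 128 MB.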
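The arithmetic above can be checked with a short Python sketch. It assumes a 128 MB block size and binary units (1 TB = 1024 × 1024 MB); the name `block_count` is illustrative and is not part of any HDFS API.

```python
MB = 1024 * 1024
TB = 1024 * 1024 * MB
BLOCK_SIZE = 128 * MB  # assumed block size, matching the 128 MB used above


def block_count(file_size: int, block_size: int = BLOCK_SIZE) -> int:
    """Number of blocks needed for a file; the last block may be partial."""
    # integer ceiling division avoids floating-point rounding on large sizes
    return (file_size + block_size - 1) // block_size


print(block_count(1 * TB))  # -> 8192
print(block_count(5 * TB))  # -> 40960
```

A file that is not an exact multiple of the block size still occupies a final, partially filled block, which is why the sketch rounds up.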

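- Manifest of what must be recorded for the blocks of the 5 TB file:

- Metadata:

File name: web.log; size: 5 TB; creation time; permissions; owner; group; file type; and so on.

- Block list (byte range : block size : replica locations):

0 ~ 128×1024×1024 - 1 : 128MB : node1:path, node3:path, node8:path

128×1024×1024 ~ 2×128×1024×1024 - 1 : 128MB : node2:path, node4:path, node9:path

2×128×1024×1024 ~ 3×128×1024×1024 - 1 : 128MB : node3:path, …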
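The byte ranges in the block list follow mechanically from the file size. The sketch below, assuming 128 MB blocks, generates them; `block_ranges` is a hypothetical helper, and replica locations are left out because the NameNode decides where replicas go.

```python
from typing import Iterator, Tuple

BLOCK_SIZE = 128 * 1024 * 1024  # assumed 128 MB block size


def block_ranges(file_size: int,
                 block_size: int = BLOCK_SIZE) -> Iterator[Tuple[int, int, int]]:
    """Yield (block index, first byte, last byte) for each block of a file."""
    start = 0
    index = 0
    while start < file_size:
        # the last block ends at the end of the file, not at a block boundary
        end = min(start + block_size, file_size) - 1
        yield index, start, end
        start = end + 1
        index += 1


# a 300 MB file: two full blocks and one partial block
for index, first, last in block_ranges(300 * 1024 * 1024):
    print(index, first, last)
```

Each yielded range corresponds to one line of the block list; a real entry would additionally carry the DataNodes that hold the replicas.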

4. Fully Distributed HDFS Setup

Hadoop run modes: local mode, pseudo-distributed mode, fully distributed mode, and highly available fully distributed mode.

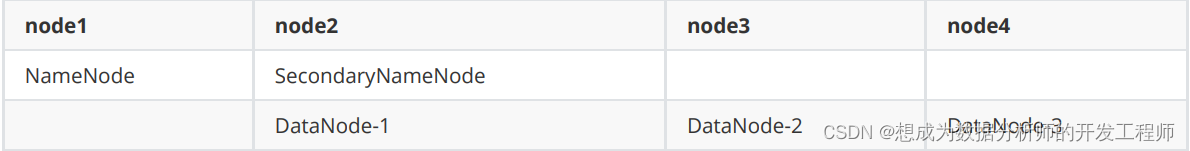

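4.1 Planning

First take a snapshot of each of the four virtual machines, named "hadoop完全分布式Pre" (the state before the fully distributed Hadoop install), so that a failed installation can easily be rolled back.

Note: the NameNode and the SecondaryNameNode both use a lot of memory, so do not install them on the same server.

4.2 Prerequisites

4.2.1 Passwordless SSH login between the four virtual machines (detailed walkthrough)

First take snapshots of node1 and node2, then revert them to their initial snapshots.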

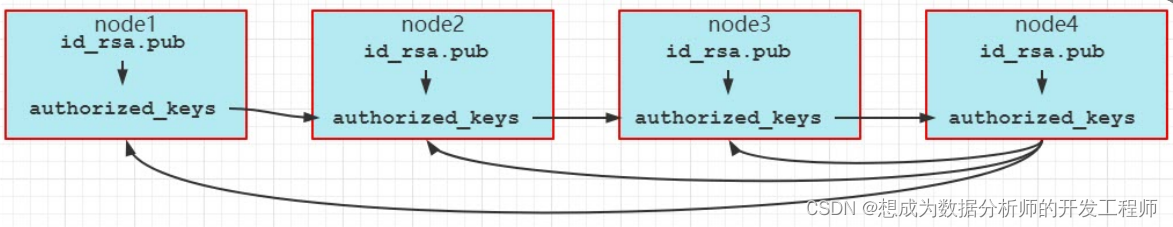

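Hadoop and the components installed later need all four servers to log in to one another without a password, so passwordless login is configured between all four servers in one pass, as follows.

Save node0's public key to a file and send the file to node1; append node1's public key and send the file to node2; append node2's public key and send the file to node3; finally append node3's public key and send the completed file from node3 back to node2, node1, and node0.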
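The chain can be simulated in a few lines of Python to confirm that every node ends up with all four public keys. This is only a model of the procedure: the strings such as `node0.pub` stand in for real key material, and `distribute_keys` is a hypothetical name.

```python
def distribute_keys(nodes):
    """Simulate passing one authorized_keys file along a chain of nodes.

    Each node appends its own public key and forwards the file to the next
    node; the last node then sends the complete file back to all the others.
    """
    authorized = {}   # node name -> contents of its authorized_keys file
    chain_file = []   # the file as it travels along the chain
    for node in nodes:
        chain_file.append(f"{node}.pub")  # placeholder for the node's public key
        authorized[node] = list(chain_file)
    # only the last node holds every key at this point, so it redistributes
    for node in nodes[:-1]:
        authorized[node] = list(chain_file)
    return authorized


result = distribute_keys(["node0", "node1", "node2", "node3"])
for node, keys in result.items():
    print(node, keys)
```

Without the final redistribution step, node0 would hold only its own key and could not be reached from the other nodes, which is why the file has to travel back.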

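Generate a private/public key pair on every virtual machine (run on all four). Recent OpenSSH releases disable DSA keys by default; on such systems use -t rsa or -t ed25519 instead and adjust the file names below accordingly.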

[root@localhost ~]# ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa


[root@localhost ~]# cd .ssh/

[root@localhost .ssh]# pwd

# current directory

/root/.ssh

[root@localhost .ssh]# ls

# the private key and public key files

id_dsa id_dsa.pub


On node0, append the public key to the authorized_keys file and copy the file to node1:

[root@localhost .ssh]# cat id_dsa.pub >> authorized_keys

[root@node0 .ssh]# scp ~/.ssh/authorized_keys node1:/root/.ssh/

root@node1's password:

authorized_keys 100% 616 320.0KB/s 00:00


On node1:

[root@node1 .ssh]# cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys # append node1's public key to the file

[root@node1 .ssh]# cat authorized_keys # view the public keys collected so far

[root@node1 .ssh]# scp ~/.ssh/authorized_keys node2:/root/.ssh # send to node2

The authenticity of host 'node2 (192.168.188.140)' can't be established.

ECDSA key fingerprint is SHA256:7ZxgAoXKgGH64MJTj2TzgGPuwcOwEIJyKN7QF/xEmCk.

ECDSA key fingerprint is MD5:84:5c:99:79:3d:f7:36:53:0f:da:2f:80:e5:46:c7:a1.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node2,192.168.188.140' (ECDSA) to the list of known hosts.

root@node2's password:

authorized_keys 100% 1216 1.4MB/s 00:00

[root@node1 .ssh]#


On node2:

[root@node2 .ssh]# cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

[root@node2 .ssh]# scp ~/.ssh/authorized_keys node3:/root/.ssh/

The authenticity of host 'node3 (192.168.188.138)' can't be established.

ECDSA key fingerprint is SHA256:V1FmSLM17pvWnfapKkbNsNWSOfufaDrR4bdWGkIxQxA.

ECDSA key fingerprint is MD5:d5:48:cd:2c:40:65:83:6a:51:36:96:54:bf:26:61:cb.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node3,192.168.188.138' (ECDSA) to the list of known hosts.

root@node3's password:

authorized_keys 100% 1832 839.5KB/s 00:00

[root@node2 .ssh]#


On node3:

[root@node3 .ssh]# cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys # append node3's public key to the file

# send the completed file to node0, node1, and node2

[root@node3 .ssh]# scp ~/.ssh/authorized_keys node0:/root/.ssh

[root@node3 .ssh]# scp ~/.ssh/authorized_keys node1:/root/.ssh

[root@node3 .ssh]# scp ~/.ssh/authorized_keys node2:/root/.ssh


Log in to the other virtual machines to verify:

[root@node0 .ssh]# ssh node1 # log in to node1

Last login: Thu Mar 2 21:04:50 2023 from 192.168.188.1

[root@node1 ~]# ssh node2

Last login: Thu Mar 2 21:14:20 2023 from 192.168.188.1

[root@node2 ~]# ssh node3

Last login: Thu Mar 2 21:04:48 2023 from 192.168.188.1

[root@node3 ~]# exit # log out

logout

Connection to node3 closed.

[root@node2 ~]# exit

logout

Connection to node2 closed.

[root@node1 ~]# exit

logout

Connection to node1 closed.


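4.2.2 Installing the JDK and configuring environment variables on the virtual machines

How to open the XShell window that sends commands to several virtual machines at once:

Create the directory /opt/apps on node0 through node3: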

mkdir /opt/apps


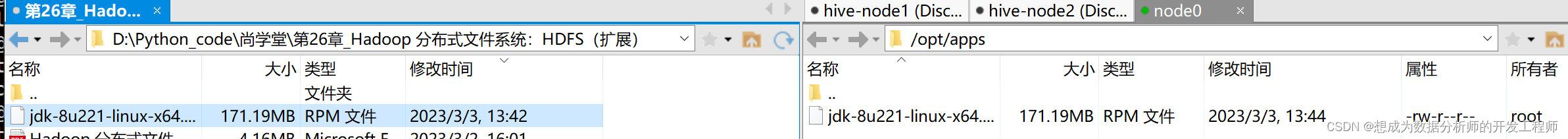

Upload the downloaded jdk-8u221-linux-x64.rpm to /opt/apps on node0. Every virtual machine needs the file, so either upload it four times or copy it from node0 with scp:

[root@node0 apps]# ls

jdk-8u221-linux-x64.rpm

[root@node0 apps]# scp jdk-8u221-linux-x64.rpm node1:/opt/apps

[root@node0 apps]# scp jdk-8u221-linux-x64.rpm node2:/opt/apps

[root@node0 apps]# scp jdk-8u221-linux-x64.rpm node3:/opt/apps


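Install the JDK RPM on all four virtual machines (typically with rpm -ivh jdk-8u221-linux-x64.rpm) and configure /etc/profile:

[root@node0 apps]# vim /etc/profile

# append these two lines at the end of the file

export JAVA_HOME=/usr/java/default

export PATH=$PATH:$JAVA_HOME/bin

# reload the profile

[root@node0 apps]# source /etc/profile

# verify the setup; the output below reports OpenJDK 1.8.0_262 rather than the installed JDK 8u221, most likely because $JAVA_HOME/bin is appended after a java that is already on the PATH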

[root@node0 apps]# java -version

openjdk version "1.8.0_262"

OpenJDK Runtime Environment (build 1.8.0_262-b10)

OpenJDK 64-Bit Server VM (build 25.262-b10, mixed mode)

[root@node0 apps]# ll /usr/java/

total 0

lrwxrwxrwx. 1 root root 16 Mar 2 21:48 default -> /usr/java/latest

drwxr-xr-x. 8 root root 258 Mar 2 21:48 jdk1.8.0_221-amd64

lrwxrwxrwx. 1 root root 28 Mar 2 21:48 latest -> /usr/java/jdk1.8.0_221-amd64

# send the file to the other three virtual machines

[root@node0 apps]# scp /etc/profile node1:/etc/

profile 100% 1888 391.1KB/s 00:00

[root@node0 apps]# scp /etc/profile node2:/etc/

profile 100% 1888 348.5KB/s 00:00

[root@node0 apps]# scp /etc/profile node3:/etc/

profile 100% 1888 644.9KB/s 00:00

# run source /etc/profile on the other three machines


4.3 Building the cluster

4.3.1 Hadoop installation package

Hadoop download URL:

https://archive.apache.org/dist/hadoop/common/hadoop-3.1.3/

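Upload the Hadoop archive to /opt/apps on node0 and extract it into /opt.

# on all four virtual machines: stop and disable the firewall

[root@node3 apps]# systemctl disable firewalld # do not start the firewall at boot (the current session is unaffected)

[root@node3 apps]# systemctl stop firewalld # stop the firewall for the current session only

[root@node3 apps]# systemctl status firewalld # check that it is stopped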

● firewalld.service - firewalld - dynamic firewall daemon

Active: inactive (dead)

[root@node0 apps]# rm -rf jdk-8u221-linux-x64.rpm # delete the installer on all four machines so it does not take up space in snapshots

# place the Hadoop archive in /opt/apps

[root@node0 apps]# tar -zxvf hadoop-3.1.3.tar.gz -C /opt/ # extract into /opt


Important directories

[root@node1 apps]# cd /opt

[root@node1 opt]# ll

总用量 0

drwxr-xr-x 2 root root 33 10月 8 13:03 apps

drwxr-xr-x 9 itbaizhan itbaizhan 149 9月 12 2019 hadoop-3.1.3

[root@node1 opt]# vim /etc/passwd

[root@node1 opt]# cd hadoop-3.1.3/

[root@node1 hadoop-3.1.3]# ll

总用量 176

drwxr-xr-x 2 itbaizhan itbaizhan 183 9月 12 2019 bin

drwxr-xr-x 3 itbaizhan itbaizhan 20 9月 12 2019 etc

drwxr-xr-x 2 itbaizhan itbaizhan 106 9月 12 2019 include

drwxr-xr-x 3 itbaizhan itbaizhan 20 9月 12 2019 lib

drwxr-xr-x 4 itbaizhan itbaizhan 288 9月 12 2019 libexec

-rw-rw-r-- 1 itbaizhan itbaizhan 147145 9月 4 2019 LICENSE.txt

-rw-rw-r-- 1 itbaizhan itbaizhan 21867 9月 4 2019 NOTICE.txt

-rw-rw-r-- 1 itbaizhan itbaizhan 1366 9月 4 2019 README.txt

drwxr-xr-x 3 itbaizhan itbaizhan 4096 9月 12 2019 sbin

drwxr-xr-x 4 itbaizhan itbaizhan 31 9月 12 2019 share


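bin directory: scripts for operating the Hadoop services (hadoop, hdfs, yarn)

etc directory: Hadoop's configuration files

lib directory: Hadoop's native libraries (used to compress and decompress data)

sbin directory: scripts that start or stop the Hadoop services

share directory: Hadoop's dependency JARs, documentation, and official examples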

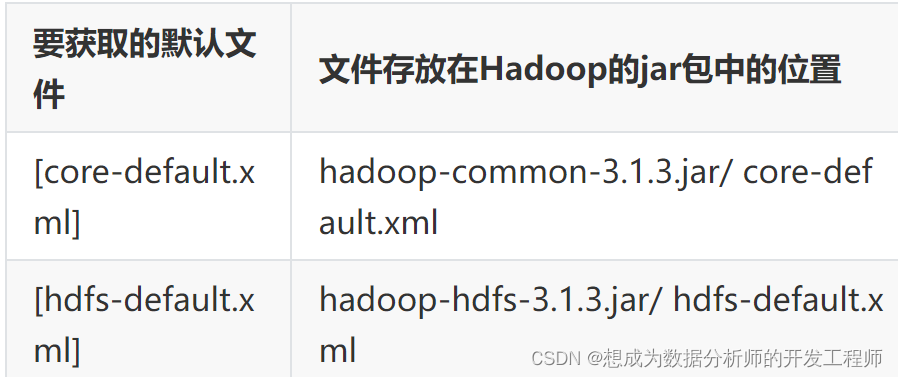

配置文件说明

Hadoop配置文件分两类:默认配置文件和自定义配置文件,只有用户想修改某一默认配置值时,才需要修改自定义配置文件,更改相应属性值。

- 默认配置文件:

- 自定义配置文件:

core-site.xml、hdfs-site.xml两个配置文件存放在$HADOOP_HOME/etc/hadoop这个路径上,用户可以根据项目需求重新进行修改配置。

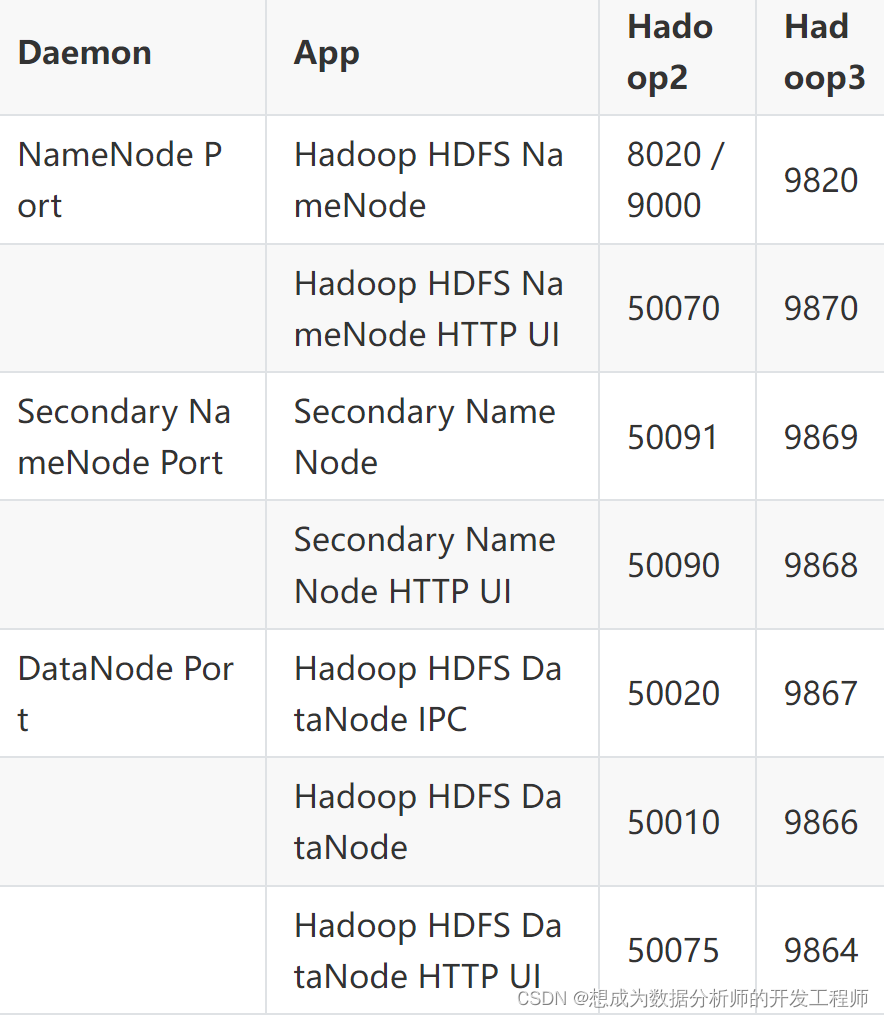

- 常用端口号说明

4.3.2 HDFS cluster configuration

- Environment variables

# on node0

[root@node0 hadoop-3.1.3]# pwd

/opt/hadoop-3.1.3

[root@node0 hadoop-3.1.3]# vim /etc/profile

# add the following lines

export HADOOP_HOME=/opt/hadoop-3.1.3

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

[root@node0 hadoop-3.1.3]# source /etc/profile

# on node1

[root@node1 opt]# source /etc/profile

export HADOOP_HOME=/opt/hadoop-3.1.3

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

[root@node1 opt]# scp /etc/profile node2:/etc/

profile 100% 1978 640.4KB/s 00:00

[root@node1 opt]# scp /etc/profile node3:/etc/

profile

# after receiving the file, run source /etc/profile on node2 and node3

# on node0, delete files that are not needed (documentation and Windows .cmd scripts)

[root@node0 hadoop-3.1.3]# rm -rf share/doc/

[root@node0 hadoop-3.1.3]# rm -f bin/*.cmd

[root@node0 hadoop-3.1.3]# rm -rf sbin/*.cmd


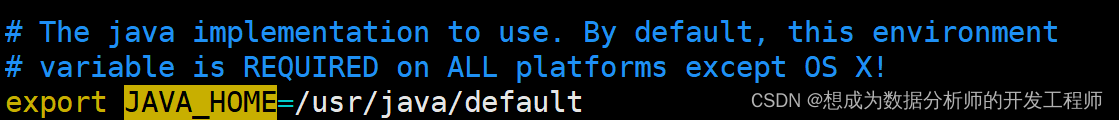

- hadoop-env.sh

On node0:

# change to $HADOOP_HOME/etc/hadoop

cd /opt/hadoop-3.1.3/etc/hadoop/

# edit hadoop-env.sh and set JAVA_HOME

export JAVA_HOME=/usr/java/default


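Processes started remotely over SSH do not load the settings in /etc/profile by default, so JAVA_HOME would not be available to them; it therefore has to be set explicitly here.

- workers (on node0)

Edit the workers file (named slaves in Hadoop 2.x) to list the hosts that run a DataNode.

Note: the file must not contain blank lines, and no entry may end with trailing spaces.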

[root@node0 hadoop]# pwd

/opt/hadoop-3.1.3/etc/hadoop

[root@node0 hadoop]# vim workers
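Blank lines and trailing spaces in the workers file are easy to miss in an editor. The following Python sketch checks the text of such a file for both problems. `check_workers` is a hypothetical helper, the host names in the sample are placeholders, and the sketch assumes that a single newline at the very end of the file is acceptable.

```python
def check_workers(text: str) -> list:
    """Return a list of problems found in the contents of a workers file."""
    problems = []
    lines = text.split("\n")
    # assumption: one trailing newline at the end of the file is harmless
    if lines and lines[-1] == "":
        lines.pop()
    for number, line in enumerate(lines, start=1):
        if line.strip() == "":
            problems.append(f"line {number}: blank line")
        elif line != line.rstrip():
            problems.append(f"line {number}: trailing whitespace")
    return problems


# hypothetical contents with two mistakes: a trailing space and a blank line
sample = "node1\nnode2 \n\nnode3\n"
print(check_workers(sample))
# -> ['line 2: trailing whitespace', 'line 3: blank line']
```

To check a real file, pass in its contents, for example `check_workers(open("workers").read())`, from the Hadoop configuration directory.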


- core-site.xml

On node0:

[root@node0 hadoop]# pwd

/opt/hadoop-3.1.3/etc/hadoop

[root@node0 hadoop]# vim core-site.xml

# add the following

<configuration>

<!-- Address of the NameNode, the master of HDFS -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://node0:9820</value>

</property>

<!-- Directory where Hadoop stores its data -->

<property>

<name>hadoop.tmp.dir</name>

<value>/var/itbaizhan/hadoop/full</value>

</property>

</configuration>
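After editing, it can help to confirm which values Hadoop will read from the file. The sketch below parses the same two properties with Python's standard XML library; the XML is embedded as a string so the example runs on its own, and `read_properties` is a hypothetical helper rather than a Hadoop tool.

```python
import xml.etree.ElementTree as ET

# the two properties configured above, embedded so the sketch is self-contained
CORE_SITE = """<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://node0:9820</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/var/itbaizhan/hadoop/full</value>
  </property>
</configuration>"""


def read_properties(xml_text: str) -> dict:
    """Map each property name to its value in a Hadoop *-site.xml document."""
    root = ET.fromstring(xml_text)
    return {
        prop.findtext("name"): prop.findtext("value")
        for prop in root.findall("property")
    }


props = read_properties(CORE_SITE)
print(props["fs.defaultFS"])    # -> hdfs://node0:9820
print(props["hadoop.tmp.dir"])  # -> /var/itbaizhan/hadoop/full
```

A malformed file, such as one with an unclosed tag, makes `ET.fromstring` raise an error, which is a quick way to catch typos before starting the cluster.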


- hdfs-site.xml

On node0:

[root@node0 hadoop]# pwd

/opt/hadoop-3.1.3/etc/hadoop

[root@node0 hadoop]# vim hdfs-site.xml

# add the following

<configuration>

<!-- NameNode web UI address -->

<property>

<name>dfs.namenode.http-address</name>

<value>node0:9870</value>

</property>

<!-- SecondaryNameNode web UI address -->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>node1:9868</value>

</property>

<!-- Number of replicas per block (default: 3) -->

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

</configuration>
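The replication factor directly determines how much raw disk space a file consumes and how many replicas of a block can be lost before data becomes unavailable. A small Python sketch of that trade-off follows; the function names are illustrative, and the model deliberately ignores metadata and other overhead.

```python
TB = 1024 ** 4


def raw_bytes(file_size: int, replication: int) -> int:
    """Raw cluster storage consumed by one file at a given replication factor."""
    return file_size * replication


def tolerated_replica_losses(replication: int) -> int:
    """How many replicas of a block can be lost while one copy still survives."""
    return max(replication - 1, 0)


print(raw_bytes(5 * TB, 2) // TB)   # -> 10 (TB of raw storage with dfs.replication = 2)
print(raw_bytes(5 * TB, 3) // TB)   # -> 15 (TB with the default of 3)
print(tolerated_replica_losses(2))  # -> 1
```

Setting dfs.replication to 2 saves a third of the disk space compared with the default, at the cost of tolerating only one lost replica per block.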


- Copy to node1 through node3

On node0:

[root@node0 opt]# pwd

/opt

[root@node0 opt]# tar -zcvf hadoop-3.1.3.tar.gz hadoop-3.1.3/ # create the archive

[root@node0 opt]# scp hadoop-3.1.3.tar.gz node1:/opt

hadoop-3.1.3.tar.gz 100% 285MB 107.5MB/s 00:02

[root@node0 opt]# scp hadoop-3.1.3.tar.gz node2:/opt

hadoop-3.1.3.tar.gz 100% 285MB 112.7MB/s 00:02

[root@node0 opt]# scp hadoop-3.1.3.tar.gz node3:/opt

hadoop-3.1.3.tar.gz 100% 285MB 117.7MB/s 00:02


On node1 through node3:

[root@node1 opt]# pwd

/opt

[root@node1 opt]# ls

apps hadoop-3.1.3 hadoop-3.1.3.tar.gz rh

[root@node1 opt]# tar -zxvf hadoop-3.1.3.tar.gz # extract the archive

# test on every node

[root@node4 opt]# had # type had and press Tab; if it completes to hadoop, the environment variables are set correctly

# alternatively, test with the hadoop version command

[root@node4 opt]# hadoop version

Hadoop 3.1.3

Source code repository https://gitbox.apache.org/repos/asf/hadoop.git -r ba631c436b806728f8ec2f54ab1e289526c90579

Compiled by ztang on 2019-09-12T02:47Z

Compiled with protoc 2.5.0

From source with checksum ec785077c385118ac91aadde5ec9799

This command was run using /opt/hadoop-3.1.3/share/hadoop/common/hadoop-common-3.1.3.jar


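4.3.3 Formatting, starting, and testing

- Format the NameNode

# run on node0, the NameNode host; the prompts below appear to come from a setup whose hosts are named node1 through node4, where node1 plays the role of node0 here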

[root@node1 ~]# hdfs namenode -format

[root@node1 ~]# ll /var/itbaizhan/hadoop/full/dfs/name/current/

总用量 16

-rw-r--r-- 1 root root 391 10月 8 20:36 fsimage_0000000000000000000

-rw-r--r-- 1 root root 62 10月 8 20:36 fsimage_0000000000000000000.md5

-rw-r--r-- 1 root root 2 10月 8 20:36 seen_txid

-rw-r--r-- 1 root root 216 10月 8 20:36 VERSION

# run jps on all four nodes (node0 to node3); jps lists the Java processes currently running on the system

[root@node1 ~]# jps

2037 Jps

[root@node2 ~]# jps

1981 Jps

[root@node3 ~]# jps

1979 Jps

[root@node4 ~]# jps

1974 Jps

# apart from Jps itself, no other Java process is running yet

[root@node1 ~]# vim /var/itbaizhan/hadoop/full/dfs/name/current/VERSION

#Sat Oct 09 10:42:49 CST 2021

namespaceID=1536048782

clusterID=CID-7ecb999c-ef5a-4396-bdc7-c9a741a797c4 # cluster ID

cTime=1633747369798

storageType=NAME_NODE # role: NameNode

blockpoolID=BP-1438277808-192.168.20.101-1633747369798 # block pool ID created by this format

layoutVersion=-64
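Each format generates a new clusterID, and a DataNode whose own VERSION file still carries an older clusterID will not join the NameNode. The Python sketch below parses the VERSION contents shown above and compares cluster IDs. The helper names are hypothetical, and the DataNode text in the example is invented purely for illustration.

```python
NAMENODE_VERSION = """#Sat Oct 09 10:42:49 CST 2021
namespaceID=1536048782
clusterID=CID-7ecb999c-ef5a-4396-bdc7-c9a741a797c4
cTime=1633747369798
storageType=NAME_NODE
blockpoolID=BP-1438277808-192.168.20.101-1633747369798
layoutVersion=-64
"""


def parse_version(text: str) -> dict:
    """Parse the key=value lines of a VERSION file, skipping comments."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        fields[key] = value
    return fields


def same_cluster(namenode_text: str, datanode_text: str) -> bool:
    """True when both VERSION files carry the same clusterID."""
    return parse_version(namenode_text)["clusterID"] == parse_version(datanode_text)["clusterID"]


# invented DataNode contents with a stale clusterID, for illustration only
stale_datanode = "clusterID=CID-00000000-0000-0000-0000-000000000000\nstorageType=DATA_NODE\n"
print(parse_version(NAMENODE_VERSION)["storageType"])    # -> NAME_NODE
print(same_cluster(NAMENODE_VERSION, stale_datanode))    # -> False
```

With the hadoop.tmp.dir configured earlier and default data directories, the DataNode's file would be expected under /var/itbaizhan/hadoop/full/dfs/data/current/VERSION.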


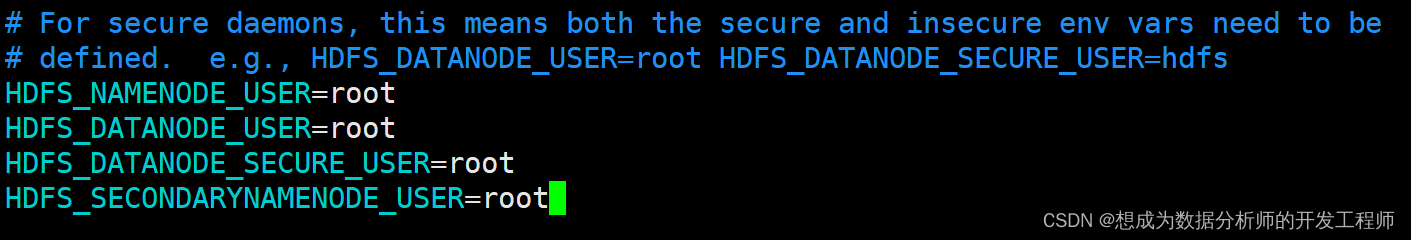

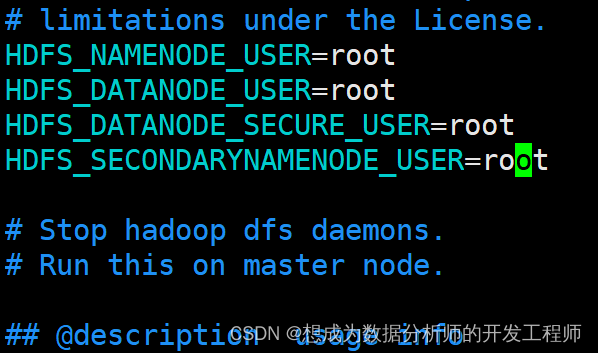

- Start HDFS

On node0:

[root@node0 ~]# jps

8726 Jps

[root@node0 ~]# start-dfs.sh

# the first attempt fails with the following errors:

Starting namenodes on [node0]

ERROR: Attempting to operate on hdfs namenode as root

ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.

Starting datanodes

ERROR: Attempting to operate on hdfs datanode as root

ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.

Starting secondary namenodes [node1]

ERROR: Attempting to operate on hdfs secondarynamenode as root

ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.

# fix: edit start-dfs.sh and add the following lines

[root@node1 ~]# vim /opt/hadoop-3.1.3/sbin/start-dfs.sh

HDFS_NAMENODE_USER=root

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

# start again

[root@node0 ~]# start-dfs.sh

Starting namenodes on [node0]

Last login: Fri Mar 3 00:39:46 PST 2023 from 192.168.188.1 on pts/1

node0: Warning: Permanently added 'node0,192.168.188.135' (ECDSA) to the list of known hosts.

Starting datanodes

Last login: Fri Mar 3 01:31:48 PST 2023 on pts/0

localhost: Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.

node2: WARNING: /opt/hadoop-3.1.3/logs does not exist. Creating.

node3: WARNING: /opt/hadoop-3.1.3/logs does not exist. Creating.

node1: WARNING: /opt/hadoop-3.1.3/logs does not exist. Creating.

Starting secondary namenodes [node1]

Last login: Fri Mar 3 01:31:51 PST 2023 on pts/0

# check that the expected roles are running on each of the four nodes

[root@node0 ~]# jps

10693 Jps

9497 NameNode

9677 DataNode

[root@node1 ~]# jps

17728 Jps

16781 DataNode

16895 SecondaryNameNode

[root@node2 ~]# jps

17816 Jps

16971 DataNode

[root@node3 ~]# jps

16980 DataNode

17829 Jps
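Comparing jps output against the planned roles by eye becomes tedious as the cluster grows. The Python sketch below does the comparison for text pasted from jps. The `EXPECTED` table encodes the role layout visible in the output above, and both helper names are hypothetical.

```python
# role layout taken from the jps output above
EXPECTED = {
    "node0": {"NameNode", "DataNode"},
    "node1": {"DataNode", "SecondaryNameNode"},
    "node2": {"DataNode"},
    "node3": {"DataNode"},
}


def running_roles(jps_output: str) -> set:
    """Extract process names from jps output, ignoring the Jps process itself."""
    roles = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[0].isdigit() and parts[1] != "Jps":
            roles.add(parts[1])
    return roles


def missing_roles(node: str, jps_output: str) -> set:
    """Roles expected on a node that do not appear in its jps output."""
    return EXPECTED[node] - running_roles(jps_output)


node1_output = "17728 Jps\n16781 DataNode\n16895 SecondaryNameNode\n"
print(missing_roles("node1", node1_output))   # -> set()
print(missing_roles("node0", "10693 Jps\n"))  # NameNode and DataNode are both missing
```

An empty set means every expected role is up on that node; anything else names the daemons to investigate in the logs directory.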


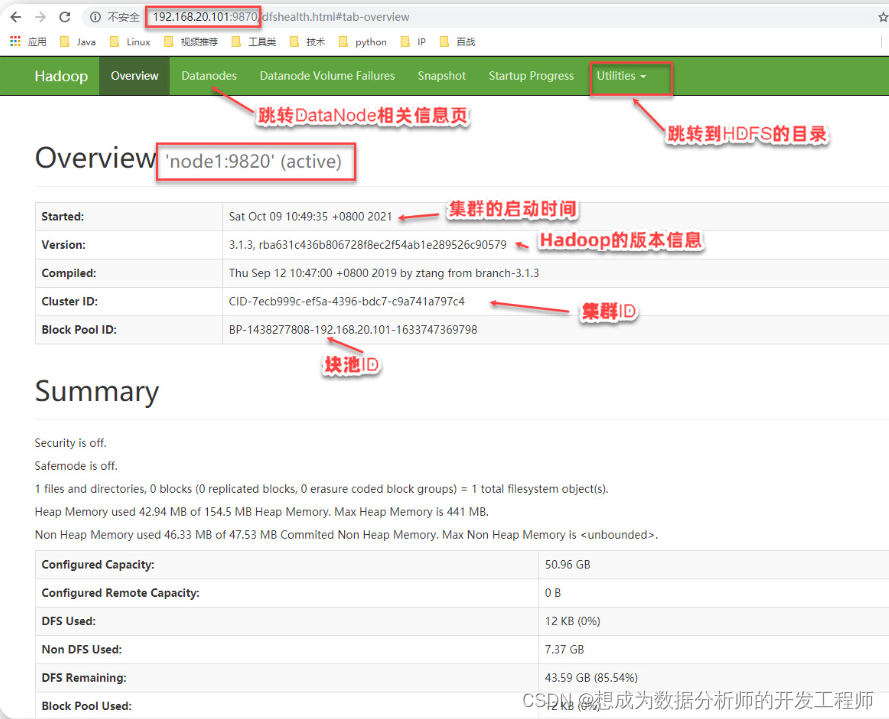

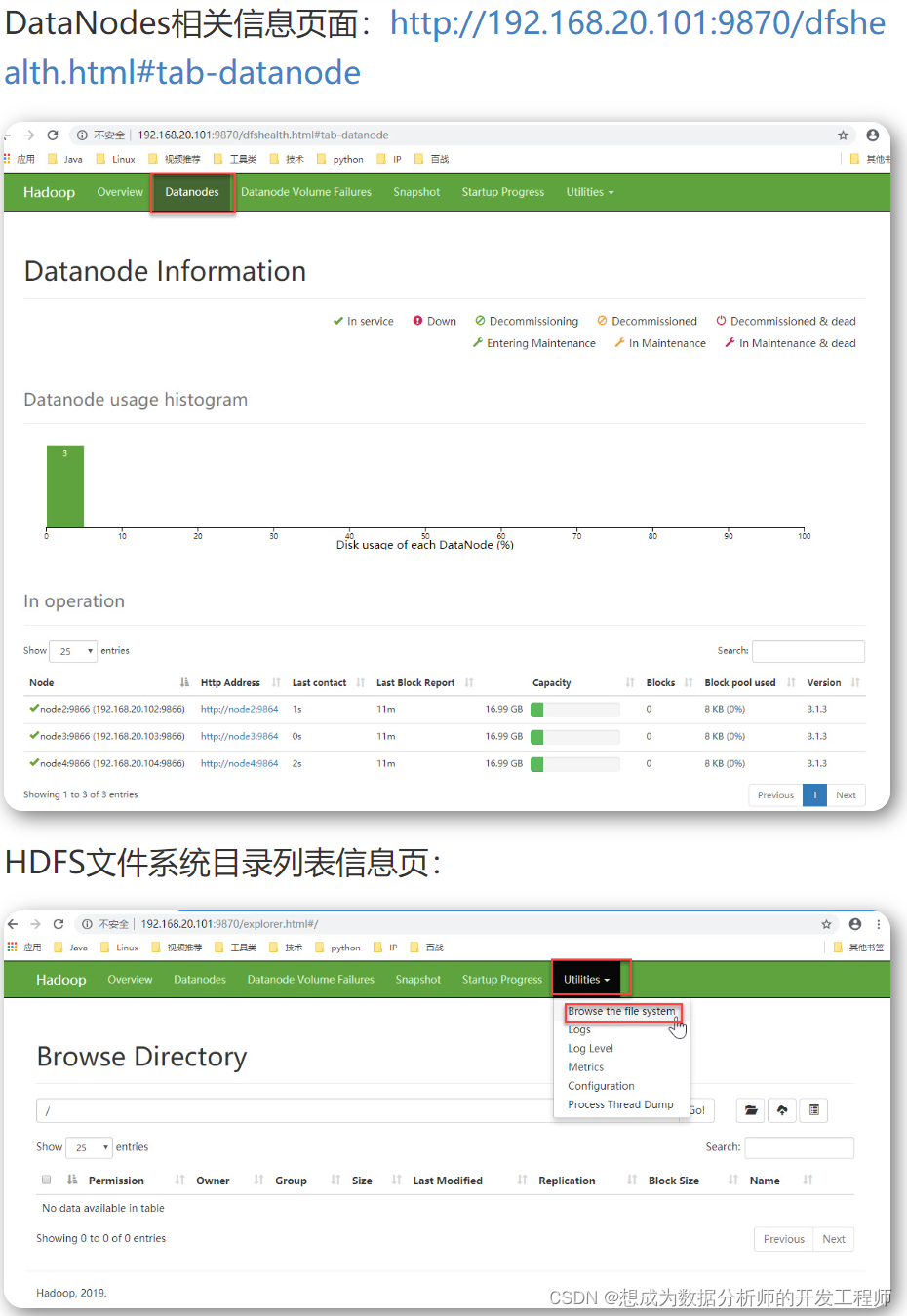

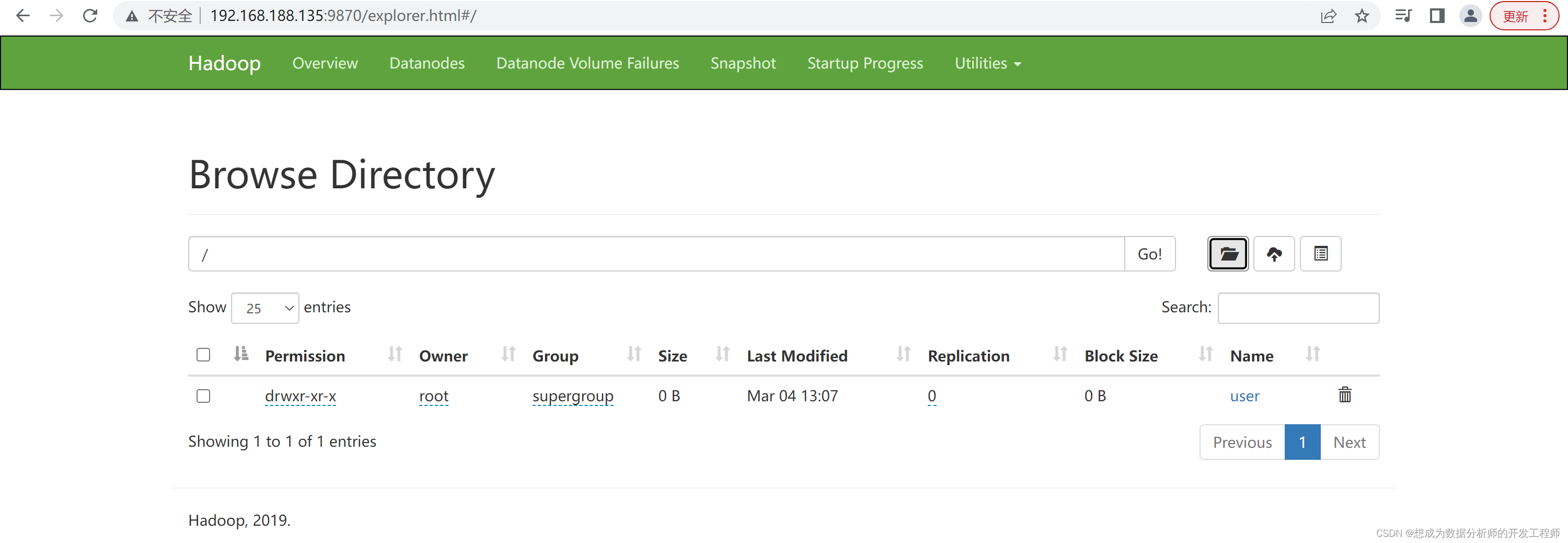

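- View NameNode information in the web UI

Enter http://192.168.188.135:9870/ (the NameNode's IP address plus port 9870) in the browser's address bar to see the data stored in HDFS and information about the DataNodes.

4.3.4 Common HDFS commands

Creating a directory, listing a directory, and uploading a file in HDFS

# create a directory in HDFS; -p creates any missing parent directories

[root@node0 ~]# hdfs dfs -mkdir -p /user/root

# list the contents of an HDFS directory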

[root@node0 ~]# hdfs dfs -ls /user

Found 1 items

drwxr-xr-x - root supergroup 0 2023-03-03 21:07 /user/root


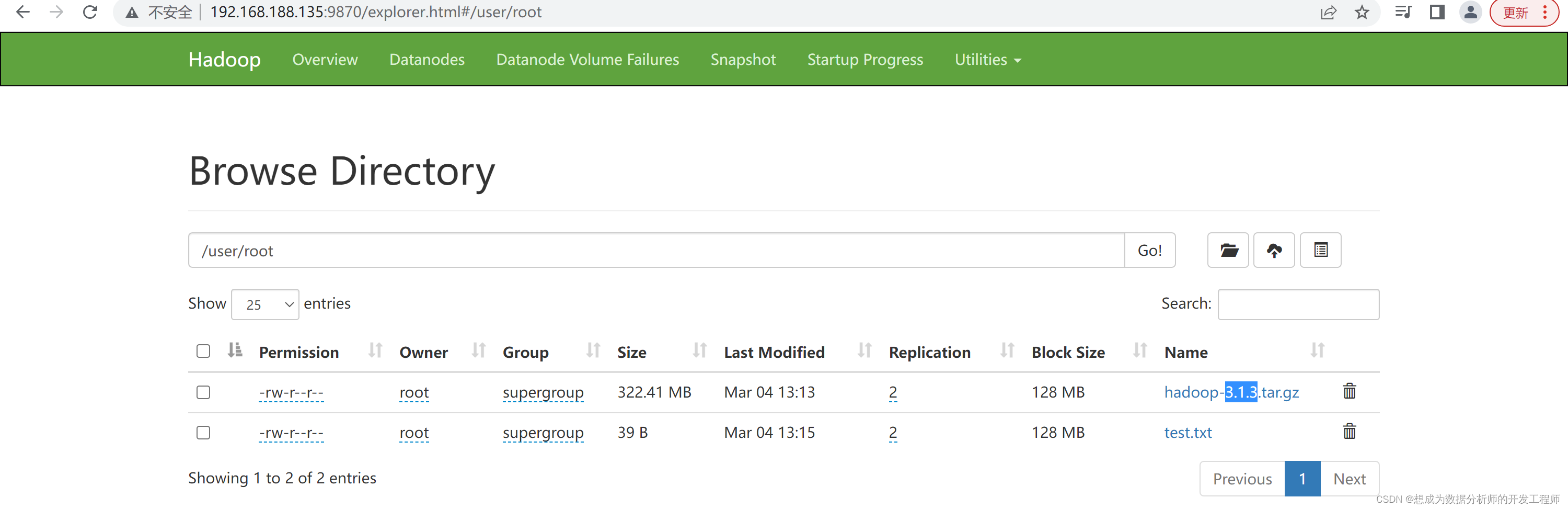

# upload a file from node0

[root@node0 ~]# hdfs dfs -put /opt/apps/hadoop-3.1.3.tar.gz /user/root # the target path must already exist

2023-03-03 21:13:31,093 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2023-03-03 21:13:35,340 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2023-03-03 21:13:38,217 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false


# create a text file, then upload it

[root@node1 ~]# vim test.txt

# upload the file

[root@node1 ~]# hdfs dfs -put test.txt /user/root

2023-03-03 21:15:13,817 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

# check that the upload succeeded

[root@node1 ~]# hdfs dfs -ls /user/root

Found 2 items

-rw-r--r-- 2 root supergroup 338075860 2023-03-03 21:13 /user/root/hadoop-3.1.3.tar.gz

-rw-r--r-- 2 root supergroup 39 2023-03-03 21:15 /user/root/test.txt


Deleting files and directories in HDFS

[root@node1 ~]# hdfs dfs -mkdir /test

[root@node1 ~]# hdfs dfs -put test.txt /test

2023-03-03 21:25:37,605 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

# delete a file

[root@node1 ~]# hdfs dfs -rm /test/test.txt

Deleted /test/test.txt

[root@node1 ~]# hdfs dfs -put test.txt /test

2023-03-03 21:26:06,864 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

# delete a directory and its contents

[root@node1 ~]# hdfs dfs -rm -r /test

Deleted /test


4.3.5 Summary: starting and stopping the cluster

- Stop all HDFS roles together

[root@node0 ~]# stop-dfs.sh

# this fails with the following errors:

Stopping namenodes on [node0]

ERROR: Attempting to operate on hdfs namenode as root

ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.

Stopping datanodes

ERROR: Attempting to operate on hdfs datanode as root

ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.

Stopping secondary namenodes [node1]

ERROR: Attempting to operate on hdfs secondarynamenode as root

ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.

# fix: add the following lines to this file

[root@node0 ~]# vim /opt/hadoop-3.1.3/sbin/stop-dfs.sh

HDFS_NAMENODE_USER=root

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

# stop again

[root@node0 ~]# stop-dfs.sh

Stopping namenodes on [node0]

Last login: Fri Mar 3 21:05:12 PST 2023 from 192.168.188.1 on pts/1

Stopping datanodes

Last login: Fri Mar 3 21:36:37 PST 2023 on pts/1

Stopping secondary namenodes [node1]

Last login: Fri Mar 3 21:36:39 PST 2023 on pts/1


- Start a single role

[root@node0 ~]# jps

15267 Jps

[root@node0 ~]# hdfs --daemon start namenode

[root@node0 ~]# jps

15410 Jps

15331 NameNode

# on node1, start the SecondaryNameNode and a DataNode

[root@node1 ~]# hdfs --daemon start secondarynamenode

[root@node1 ~]# hdfs --daemon start datanode

[root@node1 ~]# jps

6672 DataNode

6629 SecondaryNameNode

# on node2 and node3, start a DataNode

[root@node3 ~]# hdfs --daemon start datanode

[root@node3 ~]# jps

22372 DataNode

22397 Jps


- Stop a single role

General form: hdfs --daemon stop followed by one of namenode, secondarynamenode, or datanode.

[root@node4 ~]# hdfs --daemon stop datanode

[root@node4 ~]# jps

6251 Jps

[root@node3 ~]# hdfs --daemon stop datanode

[root@node3 ~]# jps

6247 Jps

[root@node2 ~]# hdfs --daemon stop datanode

[root@node2 ~]# hdfs --daemon stop secondarynamenode

[root@node2 ~]# jps

7327 Jps

[root@node1 ~]# hdfs --daemon stop namenode

[root@node1 ~]# jps

7710 Jps


- Start all HDFS roles together

[root@node1 ~]# start-dfs.sh

Starting namenodes on [node1]

上一次登录:六 10月 9 11:38:06 CST 2021pts/0 上

Starting datanodes

上一次登录:六 10月 9 11:48:18 CST 2021pts/0 上

Starting secondary namenodes [node2]

上一次登录:六 10月 9 11:48:20 CST 2021pts/0 上
