
C# ONNX Chinese CLIP: Searching an Image Library for Pictures That Match a Sentence


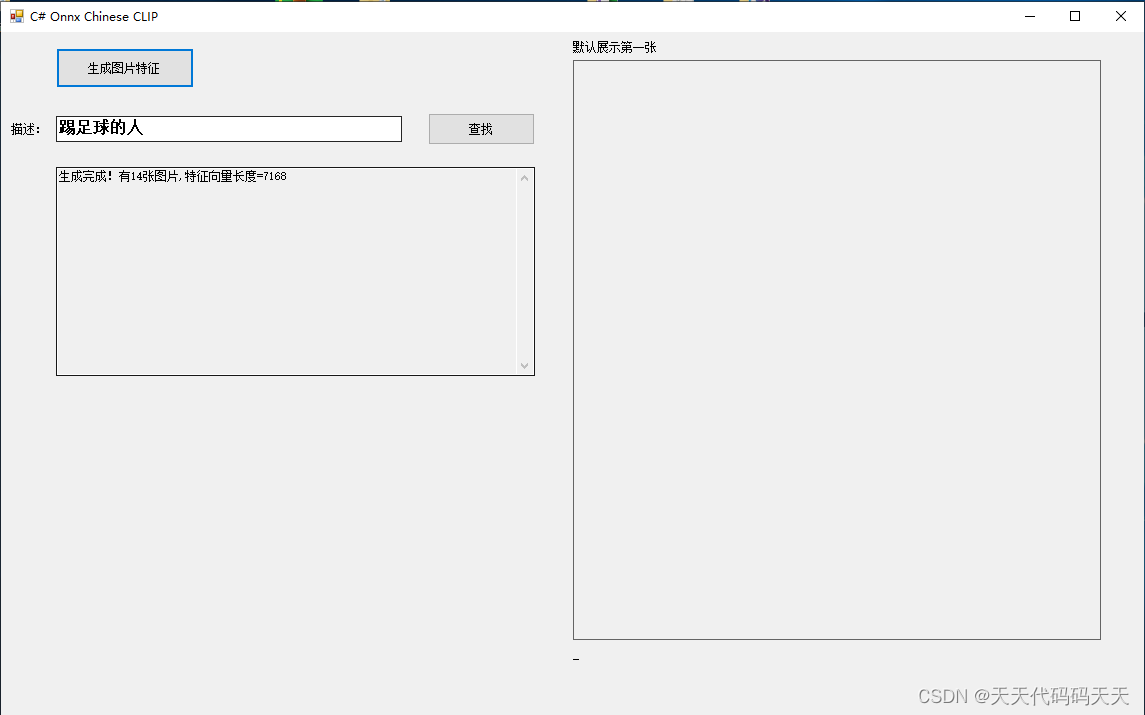

效果

生成图片特征

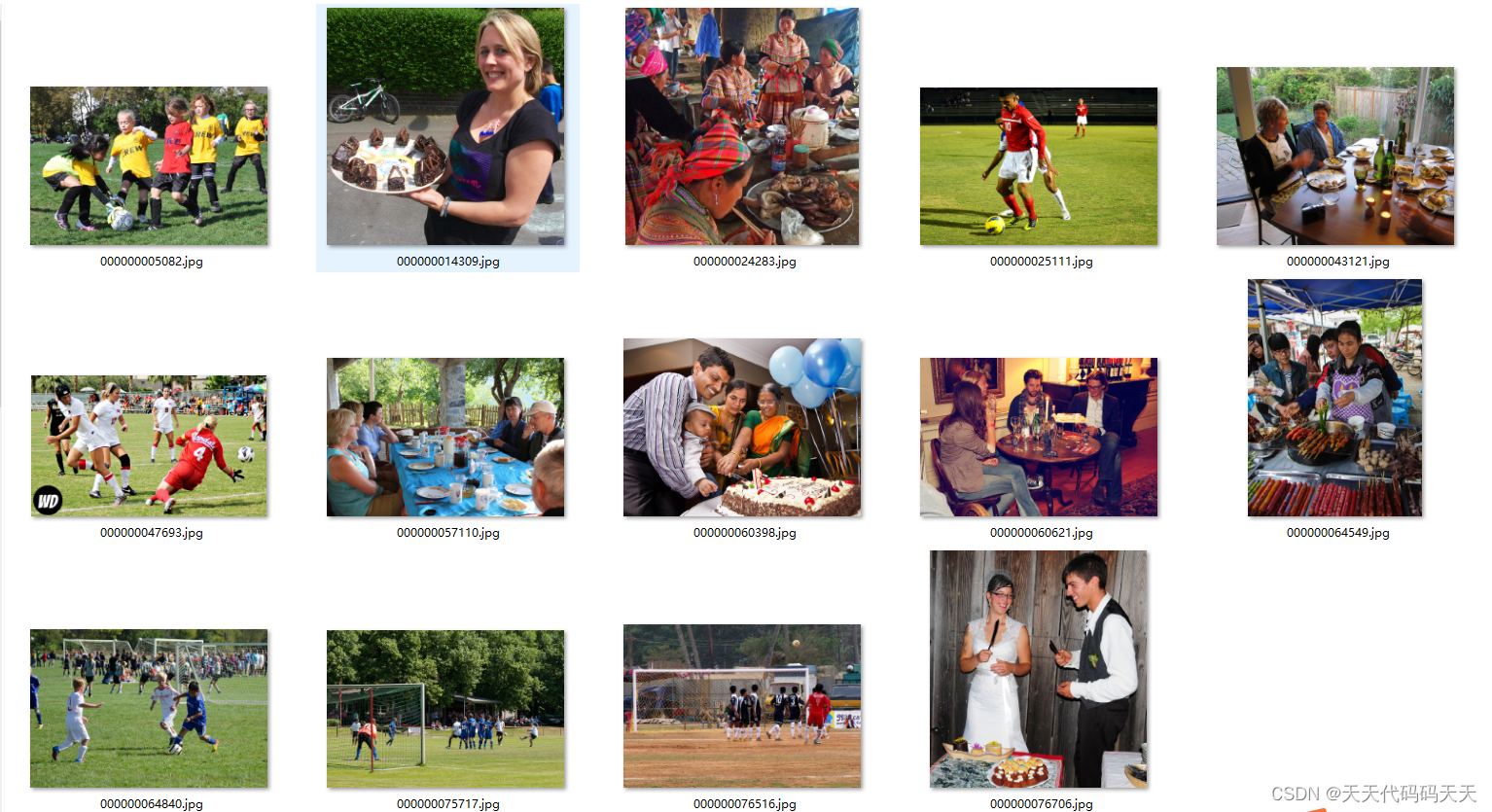

查找踢足球的人

测试图片

模型信息

| Model | Direction | Name | Tensor |
| --- | --- | --- | --- |
| image_model.onnx | Input | image | Float[1, 3, 224, 224] |
| image_model.onnx | Output | unnorm_image_features | Float[1, 512] |
| text_model.onnx | Input | text | Int64[1, 52] |
| text_model.onnx | Output | unnorm_text_features | Float[1, 512] |
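image_model.onnx expects a planar (NCHW) RGB float tensor in which each channel is normalized with CLIP's mean and std. In Clip.cs this happens in `normalize_`, where `ConvertTo` uses alpha = 1 / (255 * std) and beta = -mean / std. That expands to (x / 255 - mean) / std. The sketch below does the same conversion by hand on a raw byte buffer. It assumes the image is already resized and stored in OpenCV's interleaved BGR order. `ToNchw` is a hypothetical helper, and the block is a C# 9+ top-level program.

```csharp
using System;

// CLIP per-channel statistics in RGB order (same values as Clip.cs)
float[] mean = { 0.48145466f, 0.4578275f, 0.40821073f };
float[] std = { 0.26862954f, 0.26130258f, 0.27577711f };

// Converts an interleaved BGR byte image (OpenCV's HWC layout) into a planar,
// normalized RGB tensor laid out as [3, height, width] (NCHW with N = 1).
float[] ToNchw(byte[] bgr, int height, int width)
{
    var tensor = new float[3 * height * width];
    for (int y = 0; y < height; y++)
    {
        for (int x = 0; x < width; x++)
        {
            int pixel = (y * width + x) * 3;
            for (int c = 0; c < 3; c++)
            {
                // RGB channel c sits at BGR offset 2 - c
                float v = bgr[pixel + (2 - c)] / 255f;
                tensor[(c * height + y) * width + x] = (v - mean[c]) / std[c];
            }
        }
    }
    return tensor;
}

// Usage: a 1x2 image whose first pixel is pure red (B = 0, G = 0, R = 255)
byte[] image = { 0, 0, 255, 0, 0, 0 };
float[] t = ToNchw(image, 1, 2);
Console.WriteLine(t.Length); // prints 6 (3 channels * 1 * 2)
Console.WriteLine(t[0]);     // (1 - 0.48145466) / 0.26862954, about 1.93
```

The planar layout is why Clip.cs calls `BlobFromImage` after normalizing. OpenCV keeps pixels interleaved, while the model reads all red values first, then all green, then all blue.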

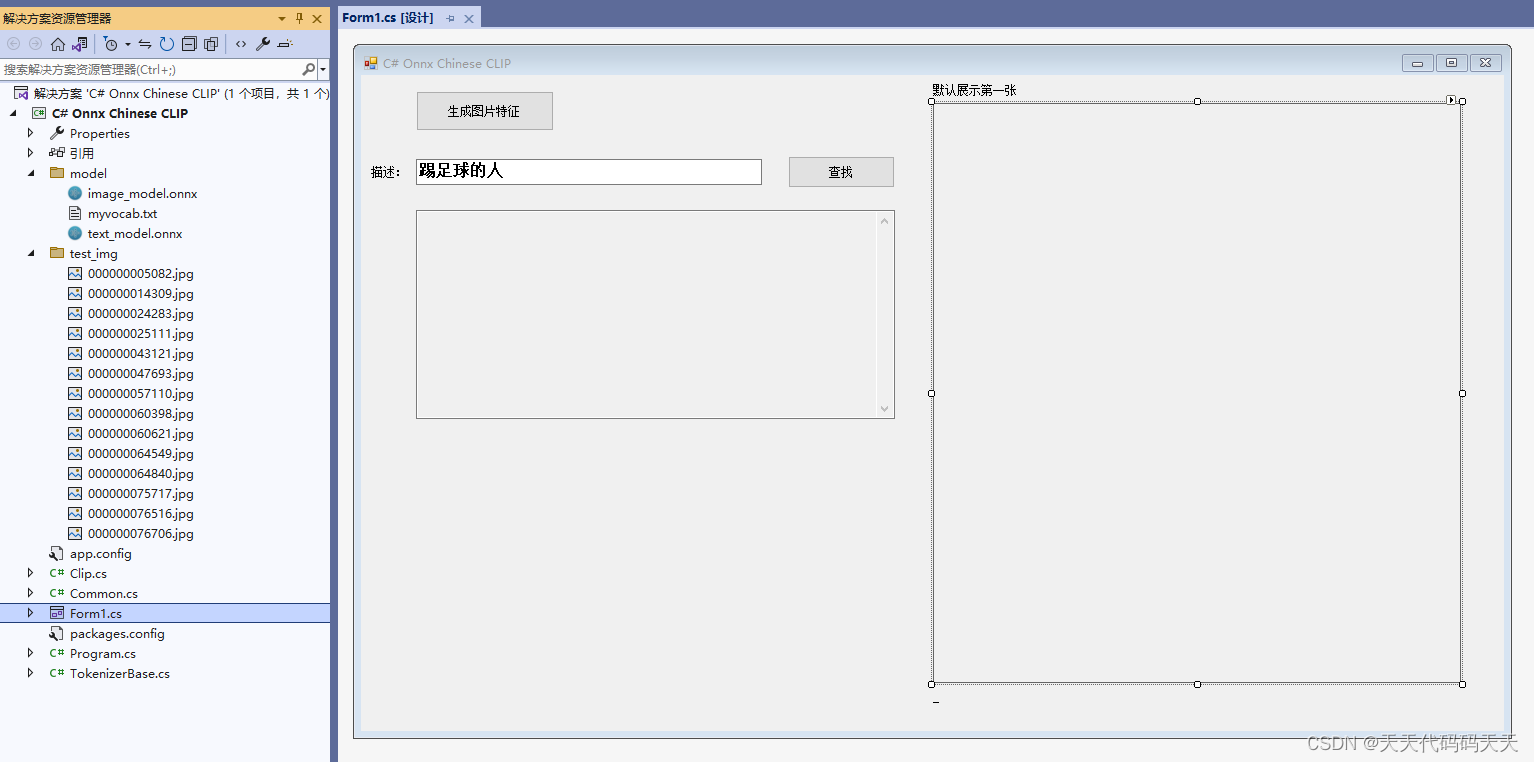

Project

Code

Form1.cs

```csharp
using System;
using System.Collections.Generic;
using System.Drawing;
using System.IO;
using System.Linq;
using System.Text;
using System.Windows.Forms;

namespace Onnx_Demo
{
    public partial class Form1 : Form
    {
        public Form1()
        {
            InitializeComponent();
        }

        Clip mynet = new Clip("model/image_model.onnx", "model/text_model.onnx", "model/myvocab.txt");

        float[] imagedir_features;
        string image_dir = "test_img";
        StringBuilder sb = new StringBuilder();

        private void button2_Click(object sender, EventArgs e)
        {
            // The feature vectors can be saved to a binary file or a vector database
            imagedir_features = mynet.generate_imagedir_features(image_dir);
            txtInfo.Text = "Done! ";
            txtInfo.Text += mynet.imgnum + " images, feature vector length = " + imagedir_features.Length;
        }

        private void button3_Click(object sender, EventArgs e)
        {
            if (imagedir_features == null)
            {
                MessageBox.Show("Please generate the image features first!");
                return;
            }

            sb.Clear();
            txtInfo.Text = "";
            lblInfo.Text = "";
            pictureBox1.Image?.Dispose(); // release the previously shown bitmap
            pictureBox1.Image = null;

            string input_text = txt_input_text.Text;
            if (string.IsNullOrEmpty(input_text))
            {
                return;
            }

            List<Dictionary<string, float>> top5imglist = mynet.input_text_search_image(input_text, imagedir_features, mynet.imglist);
            if (top5imglist.Count == 0)
            {
                return; // no images in the library
            }

            sb.AppendLine("top5:");
            foreach (var item in top5imglist)
            {
                sb.AppendLine(Path.GetFileName(item.Keys.First()) + " similarity: " + item[item.Keys.First()].ToString("F2"));
            }

            txtInfo.Text = sb.ToString();
            lblInfo.Text = Path.GetFileName(top5imglist[0].Keys.First());
            pictureBox1.Image = new Bitmap(top5imglist[0].Keys.First());
        }

        private void Form1_Load(object sender, EventArgs e)
        {
        }
    }
}
```
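The comment in `button2_Click` notes that the feature vectors can be stored in a binary file or a vector database, so the gallery does not have to be re-encoded on every start. The following is a minimal sketch of the binary-file option. The file layout (image count, paths, float count, floats) is an assumption made up for this example and is not part of the original project. `SaveFeatures` and `LoadFeatures` are hypothetical helpers built on `BinaryWriter` and `BinaryReader`.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical cache layout: [image count][paths...][float count][floats...]
void SaveFeatures(string file, List<string> imglist, float[] features)
{
    using var writer = new BinaryWriter(File.Create(file));
    writer.Write(imglist.Count);
    foreach (string p in imglist)
    {
        writer.Write(p); // length-prefixed UTF-8 string
    }
    writer.Write(features.Length);
    foreach (float f in features)
    {
        writer.Write(f);
    }
}

(List<string> imglist, float[] features) LoadFeatures(string file)
{
    using var reader = new BinaryReader(File.OpenRead(file));
    int count = reader.ReadInt32();
    var paths = new List<string>(count);
    for (int i = 0; i < count; i++)
    {
        paths.Add(reader.ReadString());
    }
    var features = new float[reader.ReadInt32()];
    for (int i = 0; i < features.Length; i++)
    {
        features[i] = reader.ReadSingle();
    }
    return (paths, features);
}

// Usage: round-trip two fake 4-dimensional feature vectors through a temp file
string cache = Path.Combine(Path.GetTempPath(), "clip_features_demo.bin");
SaveFeatures(cache, new List<string> { "test_img/a.jpg", "test_img/b.jpg" },
             new float[] { 0.5f, 0.5f, 0.5f, 0.5f, 1f, 0f, 0f, 0f });
var (loadedPaths, loadedFeatures) = LoadFeatures(cache);
Console.WriteLine(loadedPaths.Count + " images, " + loadedFeatures.Length + " floats"); // prints "2 images, 8 floats"
```

The paths are stored next to the features on purpose. `input_text_search_image` maps result index i back to `imglist[i]`, so the two must stay aligned when they are loaded from disk.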


Clip.cs

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using Microsoft.ML.OnnxRuntime;
using Microsoft.ML.OnnxRuntime.Tensors;
using OpenCvSharp;
using OpenCvSharp.Dnn;

public class Clip
{
    // Image preprocessing: 224x224 input, CLIP per-channel mean/std (RGB order)
    int inpWidth = 224;
    int inpHeight = 224;
    float[] mean = new float[] { 0.48145466f, 0.4578275f, 0.40821073f };
    float[] std = new float[] { 0.26862954f, 0.26130258f, 0.27577711f };

    int context_length = 52;    // text_model.onnx input: Int64[1, 52]
    int len_text_feature = 512; // both models output Float[1, 512]

    Net net;                    // image encoder, run with OpenCV DNN
    float[] image_features_input;

    SessionOptions options;
    InferenceSession onnx_session; // text encoder, run with ONNX Runtime
    List<NamedOnnxValue> input_container;

    TokenizerBase tokenizer;
    int[] text_tokens_input;
    float[,] text_features_input;

    public int imgnum = 0;
    public List<string> imglist = new List<string>();

    public Clip(string image_modelpath, string text_modelpath, string vocab_path)
    {
        net = CvDnn.ReadNetFromOnnx(image_modelpath);

        // Session options; INFO-level logging prints model loading details
        options = new SessionOptions();
        options.LogSeverityLevel = OrtLoggingLevel.ORT_LOGGING_LEVEL_INFO;
        options.AppendExecutionProvider_CPU(0); // run on the CPU

        // Create the inference session from the local text model file
        onnx_session = new InferenceSession(text_modelpath, options);

        // Input container for the text model
        input_container = new List<NamedOnnxValue>();

        load_tokenizer(vocab_path);
    }

    void load_tokenizer(string vocab_path)
    {
        tokenizer = new TokenizerClipChinese();
        tokenizer.load_tokenize(vocab_path);
        text_tokens_input = new int[1024 * context_length];
    }

    // BGR -> RGB, then per channel: (x / 255 - mean) / std, as CV_32F
    Mat normalize_(Mat src)
    {
        Cv2.CvtColor(src, src, ColorConversionCodes.BGR2RGB);
        Mat[] rgb = src.Split();
        for (int i = 0; i < rgb.Length; ++i)
        {
            rgb[i].ConvertTo(rgb[i], MatType.CV_32FC1, 1.0 / (255.0 * std[i]), (0.0 - mean[i]) / std[i]);
        }
        Cv2.Merge(rgb, src);
        foreach (Mat channel in rgb)
        {
            channel.Dispose();
        }
        return src;
    }

    unsafe void generate_image_feature(Mat srcimg)
    {
        using (Mat temp_image = new Mat())
        {
            Cv2.Resize(srcimg, temp_image, new Size(inpWidth, inpHeight), 0, 0, InterpolationFlags.Cubic);
            Mat normalized_mat = normalize_(temp_image); // same object as temp_image, normalized in place

            // swapRB = false, crop = false: the channels are already RGB and the image is already 224x224
            using (Mat blob = CvDnn.BlobFromImage(normalized_mat, 1.0, new Size(), new Scalar(), false, false))
            {
                net.SetInput(blob);

                // Run inference and read the result
                Mat[] outs = new Mat[1] { new Mat() };
                string[] outBlobNames = net.GetUnconnectedOutLayersNames().ToArray();
                net.Forward(outs, outBlobNames);

                float* ptr_feat = (float*)outs[0].Data;
                // Dimension 0 is the batch size (1); dimension 1 is always 512, equal to len_text_feature
                int len_image_feature = outs[0].Size(1);
                image_features_input = new float[len_image_feature];

                // L2-normalize so that a dot product with a text feature is a cosine similarity
                float norm = 0.0f;
                for (int i = 0; i < len_image_feature; i++)
                {
                    norm += ptr_feat[i] * ptr_feat[i];
                }
                norm = (float)Math.Sqrt(norm);
                for (int i = 0; i < len_image_feature; i++)
                {
                    image_features_input[i] = ptr_feat[i] / norm;
                }
                outs[0].Dispose();
            }
        }
    }

    void generate_text_feature(List<string> texts)
    {
        List<List<int>> text_token = new List<List<int>>(texts.Count);
        for (int i = 0; i < texts.Count; i++)
        {
            text_token.Add(new List<int>());
            tokenizer.encode_text(texts[i], text_token[i]);
        }

        if (text_token.Count * context_length > text_tokens_input.Length)
        {
            text_tokens_input = new int[text_token.Count * context_length];
        }
        // Zero-fill the whole buffer; unused positions act as padding
        Array.Clear(text_tokens_input, 0, text_tokens_input.Length);

        for (int i = 0; i < text_token.Count; i++)
        {
            if (text_token[i].Count > context_length)
            {
                Console.WriteLine("text_features index " + i + ", bigger than " + context_length);
                continue;
            }
            for (int j = 0; j < text_token[i].Count; j++)
            {
                text_tokens_input[i * context_length + j] = text_token[i][j];
            }
        }

        text_features_input = new float[text_token.Count, len_text_feature];

        for (int i = 0; i < text_token.Count; i++)
        {
            // Copy row i into an Int64[1, context_length] tensor; the model takes one text per run
            long[] row = new long[context_length];
            for (int j = 0; j < context_length; j++)
            {
                row[j] = text_tokens_input[i * context_length + j];
            }
            Tensor<long> input_tensor = new DenseTensor<long>(row, new[] { 1, context_length });
            input_container.Clear();
            input_container.Add(NamedOnnxValue.CreateFromTensor("text", input_tensor));

            // Run inference; disposing the results releases the native output buffers
            using (IDisposableReadOnlyCollection<DisposableNamedOnnxValue> result_infer = onnx_session.Run(input_container))
            {
                // Read the first output node as a flat float array
                float[] text_feature = result_infer.First().AsTensor<float>().ToArray();

                // L2-normalize
                float norm = 0.0f;
                for (int j = 0; j < len_text_feature; j++)
                {
                    norm += text_feature[j] * text_feature[j];
                }
                norm = (float)Math.Sqrt(norm);
                for (int j = 0; j < len_text_feature; j++)
                {
                    text_features_input[i, j] = text_feature[j] / norm;
                }
            }
        }
    }

    // Numerically stable softmax, in place
    void softmax(float[] input)
    {
        int length = input.Length;
        float[] exp_x = new float[length];
        float maxVal = input.Max();
        float sum = 0;
        for (int i = 0; i < length; i++)
        {
            float expval = (float)Math.Exp(input[i] - maxVal);
            exp_x[i] = expval;
            sum += expval;
        }
        for (int i = 0; i < length; i++)
        {
            input[i] = exp_x[i] / sum;
        }
    }

    // Indices that sort the array in ascending order
    int[] argsort_ascend(float[] array)
    {
        int[] array_index = Enumerable.Range(0, array.Length).ToArray();
        // CompareTo returns -1, 0 or 1, giving Array.Sort a consistent ordering
        Array.Sort(array_index, (pos1, pos2) => array[pos1].CompareTo(array[pos2]));
        return array_index;
    }

    public List<Dictionary<string, float>> input_text_search_image(string text, float[] image_features, List<string> imglist)
    {
        int imgnum = imglist.Count;
        if (imgnum == 0)
        {
            return new List<Dictionary<string, float>>();
        }

        List<string> texts = new List<string> { text };
        generate_text_feature(texts);

        float[] logits_per_image = new float[imgnum];
        for (int i = 0; i < imgnum; i++)
        {
            float sum = 0;
            for (int j = 0; j < len_text_feature; j++)
            {
                // Inner product of the image feature vector and the text feature vector
                sum += image_features[i * len_text_feature + j] * text_features_input[0, j];
            }
            logits_per_image[i] = 100 * sum; // CLIP's logit scale
        }

        softmax(logits_per_image);
        int[] index = argsort_ascend(logits_per_image);

        // Up to 5 best-scoring images, best first
        int topk = Math.Min(5, imgnum);
        List<Dictionary<string, float>> top5imglist = new List<Dictionary<string, float>>(topk);
        for (int i = 0; i < topk; i++)
        {
            int ind = index[imgnum - 1 - i];
            Dictionary<string, float> result = new Dictionary<string, float>();
            result.Add(imglist[ind], logits_per_image[ind]);
            top5imglist.Add(result);
        }
        return top5imglist;
    }

    public float[] generate_imagedir_features(string image_dir)
    {
        List<string> paths = Common.listdir(image_dir);
        Console.WriteLine("Found " + paths.Count + " images");

        // Image i occupies elements [i * 512, (i + 1) * 512) of the returned array
        List<float> imagedir_features = new List<float>(paths.Count * len_text_feature);
        List<string> loaded = new List<string>(paths.Count);
        foreach (string imgpath in paths)
        {
            using (Mat srcimg = Cv2.ImRead(imgpath))
            {
                if (srcimg.Empty())
                {
                    Console.WriteLine("Skipping unreadable image: " + imgpath);
                    continue;
                }
                generate_image_feature(srcimg);
            }
            imagedir_features.AddRange(image_features_input);
            loaded.Add(imgpath);
        }

        // Keep imglist aligned with the features that were actually produced
        imglist = loaded;
        imgnum = loaded.Count;
        return imagedir_features.ToArray();
    }
}
```
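`input_text_search_image` ranks the gallery in four steps:

1. Both feature types are L2-normalized, so each dot product is a cosine similarity.
2. The dot products are multiplied by 100.
3. The scaled scores go through a softmax.
4. The indices are sorted so the highest score comes first.

The stand-alone sketch below repeats those steps on toy 3-dimensional vectors instead of the real 512-dimensional ones. The helper names are hypothetical, and LINQ's `OrderByDescending` stands in for `argsort_ascend`. It is a C# 9+ top-level program.

```csharp
using System;
using System.Linq;

// Scales v to unit length so that a dot product becomes a cosine similarity
float[] L2Normalize(float[] v)
{
    float norm = (float)Math.Sqrt(v.Sum(x => x * x));
    return v.Select(x => x / norm).ToArray();
}

// Numerically stable softmax: subtract the max before exponentiating
float[] Softmax(float[] logits)
{
    float max = logits.Max();
    float[] exp = logits.Select(x => (float)Math.Exp(x - max)).ToArray();
    float sum = exp.Sum();
    return exp.Select(x => x / sum).ToArray();
}

// Scores a flat buffer of image features (image i at [i * dim, (i + 1) * dim))
// against one text feature and returns up to k image indices, best first.
int[] TopK(float[] imageFeatures, float[] textFeature, int k)
{
    int dim = textFeature.Length;
    int n = imageFeatures.Length / dim;
    float[] logits = new float[n];
    for (int i = 0; i < n; i++)
    {
        float dot = 0;
        for (int j = 0; j < dim; j++)
        {
            dot += imageFeatures[i * dim + j] * textFeature[j];
        }
        logits[i] = 100 * dot; // same scale factor as Clip.cs
    }
    float[] probs = Softmax(logits);
    return Enumerable.Range(0, n)
        .OrderByDescending(i => probs[i])
        .Take(Math.Min(k, n))
        .ToArray();
}

// Usage with made-up 3-dimensional features for three images
float[] images = L2Normalize(new float[] { 1, 0, 0 })
    .Concat(L2Normalize(new float[] { 0, 1, 0 }))
    .Concat(L2Normalize(new float[] { 1, 1, 0 }))
    .ToArray();
float[] text = L2Normalize(new float[] { 1, 0.2f, 0 });
Console.WriteLine(string.Join(", ", TopK(images, text, 5))); // prints "0, 2, 1"
```

The softmax does not change the order. It only turns the scores into probabilities that sum to 1 across the whole gallery. This is why the "similarity" values printed for the top 5 are relative to the other images rather than absolute cosine scores.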

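In `generate_text_feature`, each text becomes a row of `context_length` (52) token ids. The row is padded with zeros and then wrapped in the Int64[1, 52] tensor that text_model.onnx expects. Texts longer than 52 tokens are skipped. The sketch below isolates that packing step. `PackTokens` is a hypothetical helper, and the token ids are made up; real ids come from the Chinese CLIP tokenizer, which is not reproduced here. Packing every text into its own row also guarantees that text i is fed with its own tokens rather than the first row's.

```csharp
using System;
using System.Collections.Generic;

const int ContextLength = 52; // text_model.onnx input: Int64[1, 52]

// Packs one text's token ids into a zero-padded Int64 row of length ContextLength.
// Returns null when the text is too long, mirroring the "bigger than" check in Clip.cs.
long[] PackTokens(List<int> tokenIds)
{
    if (tokenIds.Count > ContextLength)
    {
        return null;
    }
    var row = new long[ContextLength]; // new arrays are zero-initialized
    for (int j = 0; j < tokenIds.Count; j++)
    {
        row[j] = tokenIds[j];
    }
    return row;
}

// Usage with made-up token ids
long[] packed = PackTokens(new List<int> { 101, 2769, 102 });
Console.WriteLine(packed.Length + " " + packed[1] + " " + packed[51]); // prints "52 2769 0"
Console.WriteLine(PackTokens(new List<int>(new int[60])) == null);   // prints "True"
```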