Step 50. Deep Learning for Image Recognition: Modeling with Data-efficient Image Transformers (PyTorch)

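Demonstrated on 64-bit Windows 10.

I. Introduction

(1) Data-efficient Image Transformers

Data-efficient Image Transformers (DeiT) is an image classification model proposed by Facebook AI at the end of 2020. It builds on the Vision Transformer (ViT) and improves the training strategy so that the model reaches strong accuracy with less training data.

Transformer models usually need large amounts of data to perform well, and in some settings that is not possible, for example when only a small labeled dataset is available. DeiT addresses this by using knowledge distillation during training: a student model (typically the smaller one) learns to reproduce the behavior of an already trained teacher model.

One key idea in DeiT is that the student learns from the teacher's predictions and not only from the hard labels (the class labels in the original dataset). With soft distillation the student matches the teacher's soft labels (its class probability distribution), which carry more information than a single class index. DeiT also introduces a variant called "hard-label distillation", in which the teacher's predicted class is used as an additional target, and this improves performance further. With this approach, even on a large-scale dataset such as ImageNet, DeiT matches or surpasses more complex convolutional networks such as ResNet and EfficientNet while using fewer computational resources.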
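To make the two distillation targets concrete, here is a minimal framework-free sketch in plain Python. The logits, the temperature `tau`, and the weight `alpha` are made-up illustrative values, not taken from the DeiT code. The formulas follow the usual description of soft distillation (KL divergence between temperature-softened distributions) and hard-label distillation (cross-entropy against the teacher's argmax). As a simplification, one set of student logits is used for both terms, whereas DeiT feeds the distillation term from a dedicated distillation token.

```python
import math

def softmax(logits, tau=1.0):
    """Softmax with temperature tau (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp((z - m) / tau) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target):
    """Cross-entropy of one example against an integer class index."""
    return -math.log(softmax(logits)[target])

def soft_distillation_loss(student, teacher, label, tau=3.0, alpha=0.5):
    """(1 - alpha) * CE(student, label) + alpha * tau^2 * KL(teacher || student)."""
    p_t = softmax(teacher, tau)
    p_s = softmax(student, tau)
    kl = sum(t * math.log(t / s) for t, s in zip(p_t, p_s))
    return (1 - alpha) * cross_entropy(student, label) + alpha * tau ** 2 * kl

def hard_distillation_loss(student, teacher, label):
    """Average of CE against the true label and CE against the teacher's argmax."""
    teacher_label = max(range(len(teacher)), key=teacher.__getitem__)
    return 0.5 * cross_entropy(student, label) + 0.5 * cross_entropy(student, teacher_label)

student_logits = [1.2, 0.3]   # hypothetical student outputs for 2 classes
teacher_logits = [2.5, -0.5]  # hypothetical teacher outputs
true_label = 0

print("soft:", soft_distillation_loss(student_logits, teacher_logits, true_label))
print("hard:", hard_distillation_loss(student_logits, teacher_logits, true_label))
```

When the teacher's argmax agrees with the true label, the hard-label loss reduces to the ordinary cross-entropy, so the teacher only changes the training signal on examples where it disagrees with the annotation.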

(2)Data-efficient Image Transformers的预训练版本

本文继续使用Facebook的高级深度学习框架PyTorchImageModels (timm)。该库提供了多种预训练的模型,太多了,我还是给网址吧:

https://github.com/huggingface/pytorch-image-models/blob/main/timm/models/deit.py

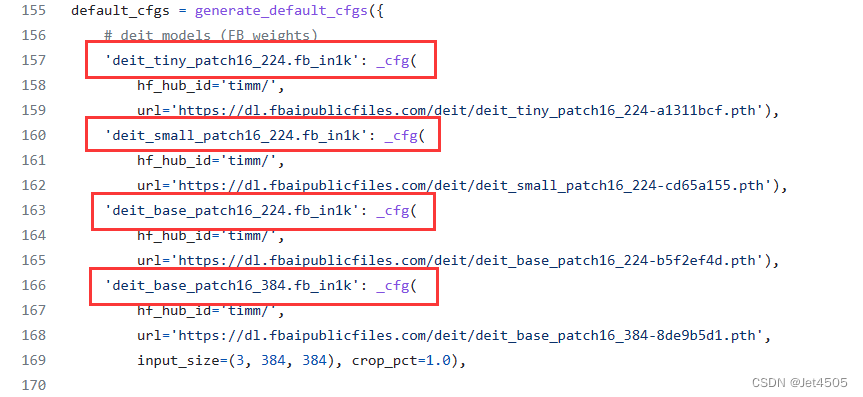

从第155行到第249行都是:

比如“deit_tiny_patch16_224”:

"deit": 这是模型类型的缩写,代表 "Data-efficient Image Transformers"。

"tiny": 这个词说明了模型的大小。在此情况下,"tiny"意味着这是一个更小、计算成本更低的模型版本。相对的,还有"small","base"等不同规模的模型。

"patch16": 这是指在模型的输入阶段,原始图像被分割成大小为16x16像素的小方块(也被称为patch)进行处理。

"224": 这是指模型接受的输入图像的尺寸是224x224像素。这是在计算机视觉领域常用的图像尺寸。

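The naming scheme above translates directly into a little arithmetic. The sketch below splits a model name into its parts and counts how many patches the image is cut into. `parse_deit_name` and `num_patches` are hypothetical helpers written for this illustration, not timm functions, and the parser assumes the simple four-part name pattern (it does not handle names with extra parts such as the distilled variants).

```python
def parse_deit_name(name):
    """Split a name such as 'deit_tiny_patch16_224' into its four parts."""
    family, size, patch, resolution = name.split("_")
    return {
        "family": family,
        "size": size,
        "patch_size": int(patch[len("patch"):]),
        "image_size": int(resolution),
    }

def num_patches(image_size, patch_size):
    """Number of non-overlapping square patches in a square image."""
    return (image_size // patch_size) ** 2

info = parse_deit_name("deit_tiny_patch16_224")
patches = num_patches(info["image_size"], info["patch_size"])
print(info)
print("patches per image:", patches)        # 14 x 14 = 196
print("tokens with class token:", patches + 1)
```

So a 224x224 image with 16x16 patches becomes a sequence of 196 patch tokens, plus one class token for the non-distilled variants.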
II. Hands-on transfer learning with Data-efficient Image Transformers

We continue with the chest X-ray dataset: distinguishing tuberculosis patients from healthy people. There are 700 images of tuberculosis patients and 900 images of healthy people, stored in separate folders.

(a) Import packages

```python
import copy
import torch
import torchvision
import torchvision.transforms as transforms
from torchvision import models
from torch.utils.data import DataLoader
from torch import optim, nn
from torch.optim import lr_scheduler
import os
import matplotlib.pyplot as plt
import warnings
import numpy as np

warnings.filterwarnings("ignore")
plt.rcParams['font.sans-serif'] = ['SimHei']  # font that can render Chinese plot labels
plt.rcParams['axes.unicode_minus'] = False    # keep minus signs readable with that font

# Use the GPU if one is available
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
```

(b) Load the dataset

```python
import torch
from torchvision import datasets, transforms
from torch.utils.data import Subset
import os

# Dataset path
data_dir = "./MTB"

# Image size: deit_base_patch16_224 expects 224x224 inputs
img_height = 224
img_width = 224

# Preprocessing
data_transforms = {
    'train': transforms.Compose([
        transforms.RandomResizedCrop(img_height),
        transforms.RandomHorizontalFlip(),
        transforms.RandomVerticalFlip(),
        transforms.RandomRotation(0.2),  # the argument is in degrees, so this is at most ±0.2°
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
    'val': transforms.Compose([
        transforms.Resize((img_height, img_width)),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
}

# Load the folder once per transform. Setting `.dataset.transform` on one
# random_split subset would also change the other subset, because both
# share the same underlying dataset object.
train_source = datasets.ImageFolder(data_dir, transform=data_transforms['train'])
val_source = datasets.ImageFolder(data_dir, transform=data_transforms['val'])

# Split sizes
full_size = len(train_source)
train_size = int(0.7 * full_size)  # 70% for training
val_size = full_size - train_size  # the rest for validation

# Random split with a fixed seed so the result is reproducible
generator = torch.Generator().manual_seed(0)
indices = torch.randperm(full_size, generator=generator).tolist()
train_dataset = Subset(train_source, indices[:train_size])  # with augmentation
val_dataset = Subset(val_source, indices[train_size:])      # without augmentation

# Data loaders
batch_size = 32
train_dataloader = torch.utils.data.DataLoader(train_dataset, batch_size=batch_size, shuffle=True, num_workers=4)
val_dataloader = torch.utils.data.DataLoader(val_dataset, batch_size=batch_size, shuffle=False, num_workers=4)

dataloaders = {'train': train_dataloader, 'val': val_dataloader}
dataset_sizes = {'train': len(train_dataset), 'val': len(val_dataset)}
class_names = train_source.classes
```
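As a quick sanity check on the numbers, the split sizes and the number of batches per epoch can be worked out by hand. This sketch assumes the folder really contains the 700 + 900 images described earlier and uses the 70/30 split and batch size 32 from the code. The majority-class baseline at the end is my own addition, included only to give the later accuracy figures a reference point.

```python
import math

n_tb, n_healthy = 700, 900           # image counts stated in the text
full_size = n_tb + n_healthy         # 1600 images in total
train_size = int(0.7 * full_size)    # same rule as the data-loading code
val_size = full_size - train_size
batch_size = 32

train_batches = math.ceil(train_size / batch_size)
val_batches = math.ceil(val_size / batch_size)

# Accuracy obtained by always predicting the larger class (healthy)
majority_baseline = max(n_tb, n_healthy) / full_size

print("train images:", train_size, "batches:", train_batches)
print("val images:", val_size, "batches:", val_batches)
print("majority-class baseline accuracy:", majority_baseline)
```

Any model worth keeping should clearly beat that baseline on the validation set.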

(c) Load Data-efficient Image Transformers

```python
# Import the required libraries
import torch.nn as nn
import timm

# Create the DeiT model with pretrained weights.
# Pick whichever DeiT variant suits your needs; deit_base_patch16_224 is used here as an example.
model = timm.create_model('deit_base_patch16_224', pretrained=True)
num_ftrs = model.head.in_features

# Replace the last layer to match the classification task
model.head = nn.Linear(num_ftrs, len(class_names))

# Move the model to the selected device
model = model.to(device)

# Print the model structure
print(model)
```
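To get a feel for how small the replaced classification head is, here is a short parameter count. It assumes the feature width of DeiT-Base is 768, which is what `num_ftrs` should report for `deit_base_patch16_224`. `linear_param_count` is a hypothetical helper for this illustration only.

```python
def linear_param_count(in_features, out_features, bias=True):
    """Number of trainable parameters in a fully connected layer."""
    return in_features * out_features + (out_features if bias else 0)

embed_dim = 768    # assumed feature width of DeiT-Base
num_classes = 2    # tuberculosis vs. healthy

new_head = linear_param_count(embed_dim, num_classes)
imagenet_head = linear_param_count(embed_dim, 1000)

print("new 2-class head:", new_head)
print("original 1000-class head:", imagenet_head)
```

The new head is tiny compared with the backbone, which is why the pretrained weights do most of the work in this kind of transfer learning.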

(d) Compile and train the model

```python
# Loss function
criterion = nn.CrossEntropyLoss()

# Optimizer
optimizer = optim.Adam(model.parameters())

# Learning-rate scheduler: multiply the learning rate by 0.1 every 7 epochs
exp_lr_scheduler = lr_scheduler.StepLR(optimizer, step_size=7, gamma=0.1)

# Training setup
num_epochs = 10
best_model_wts = copy.deepcopy(model.state_dict())
best_acc = 0.0

# Per-epoch history
train_loss_history = []
train_acc_history = []
val_loss_history = []
val_acc_history = []

for epoch in range(num_epochs):
    print('Epoch {}/{}'.format(epoch, num_epochs - 1))
    print('-' * 10)

    # Each epoch has a training phase and a validation phase
    for phase in ['train', 'val']:
        if phase == 'train':
            model.train()  # Set model to training mode
        else:
            model.eval()   # Set model to evaluate mode

        running_loss = 0.0
        running_corrects = 0

        # Iterate over the data
        for inputs, labels in dataloaders[phase]:
            inputs = inputs.to(device)
            labels = labels.to(device)

            # Zero the parameter gradients
            optimizer.zero_grad()

            # Forward pass; track gradients only while training
            with torch.set_grad_enabled(phase == 'train'):
                outputs = model(inputs)
                _, preds = torch.max(outputs, 1)
                loss = criterion(outputs, labels)

                # Backward pass and optimization only in the training phase
                if phase == 'train':
                    loss.backward()
                    optimizer.step()

            # Statistics
            running_loss += loss.item() * inputs.size(0)
            running_corrects += torch.sum(preds == labels)

        # Advance the scheduler once per epoch (the original defined it but never stepped it)
        if phase == 'train':
            exp_lr_scheduler.step()

        epoch_loss = running_loss / dataset_sizes[phase]
        epoch_acc = (running_corrects.double() / dataset_sizes[phase]).item()

        # Record the loss and accuracy of each epoch
        if phase == 'train':
            train_loss_history.append(epoch_loss)
            train_acc_history.append(epoch_acc)
        else:
            val_loss_history.append(epoch_loss)
            val_acc_history.append(epoch_acc)

        print('{} Loss: {:.4f} Acc: {:.4f}'.format(phase, epoch_loss, epoch_acc))

        # Keep a deep copy of the best weights so far
        if phase == 'val' and epoch_acc > best_acc:
            best_acc = epoch_acc
            best_model_wts = copy.deepcopy(model.state_dict())

    print()

print('Best val Acc: {:.4f}'.format(best_acc))

# Restore the weights that scored best on the validation set
model.load_state_dict(best_model_wts)
```

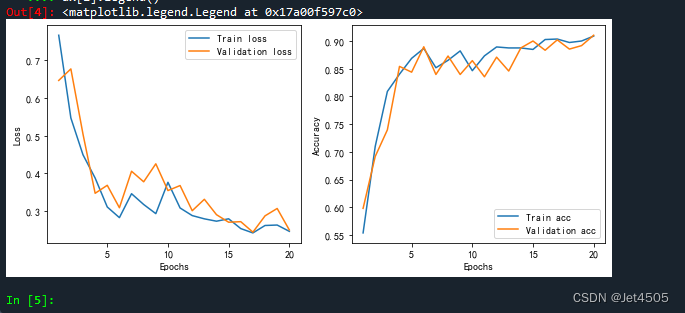
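The effect of `StepLR(optimizer, step_size=7, gamma=0.1)` over the 10 epochs can be tabulated by hand. This is a sketch of the schedule only, assuming the scheduler's `step()` is called once per epoch and that Adam starts from its default learning rate of 1e-3. `step_lr` is a hypothetical helper, not a PyTorch function.

```python
def step_lr(base_lr, epoch, step_size=7, gamma=0.1):
    """Learning rate in effect during `epoch` (0-based) under a step decay schedule."""
    return base_lr * gamma ** (epoch // step_size)

base_lr = 1e-3  # default learning rate of torch.optim.Adam

for epoch in range(10):
    print("epoch", epoch, "lr", step_lr(base_lr, epoch))
```

Under these assumptions epochs 0 to 6 train at 1e-3 and epochs 7 to 9 at roughly 1e-4, so only the last three epochs see the reduced rate.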

(e) Visualizing accuracy and loss

```python
epochs = range(1, len(train_loss_history) + 1)

fig, ax = plt.subplots(1, 2, figsize=(10, 4))
ax[0].plot(epochs, train_loss_history, label='Train loss')
ax[0].plot(epochs, val_loss_history, label='Validation loss')
ax[0].set_xlabel('Epochs')
ax[0].set_ylabel('Loss')
ax[0].legend()

ax[1].plot(epochs, train_acc_history, label='Train acc')
ax[1].plot(epochs, val_acc_history, label='Validation acc')
ax[1].set_xlabel('Epochs')
ax[1].set_ylabel('Accuracy')
ax[1].legend()

#plt.savefig("loss-acc.pdf", dpi=300,format="pdf")
```

Here is how the training went:

Blue is the training set, orange is the validation set.

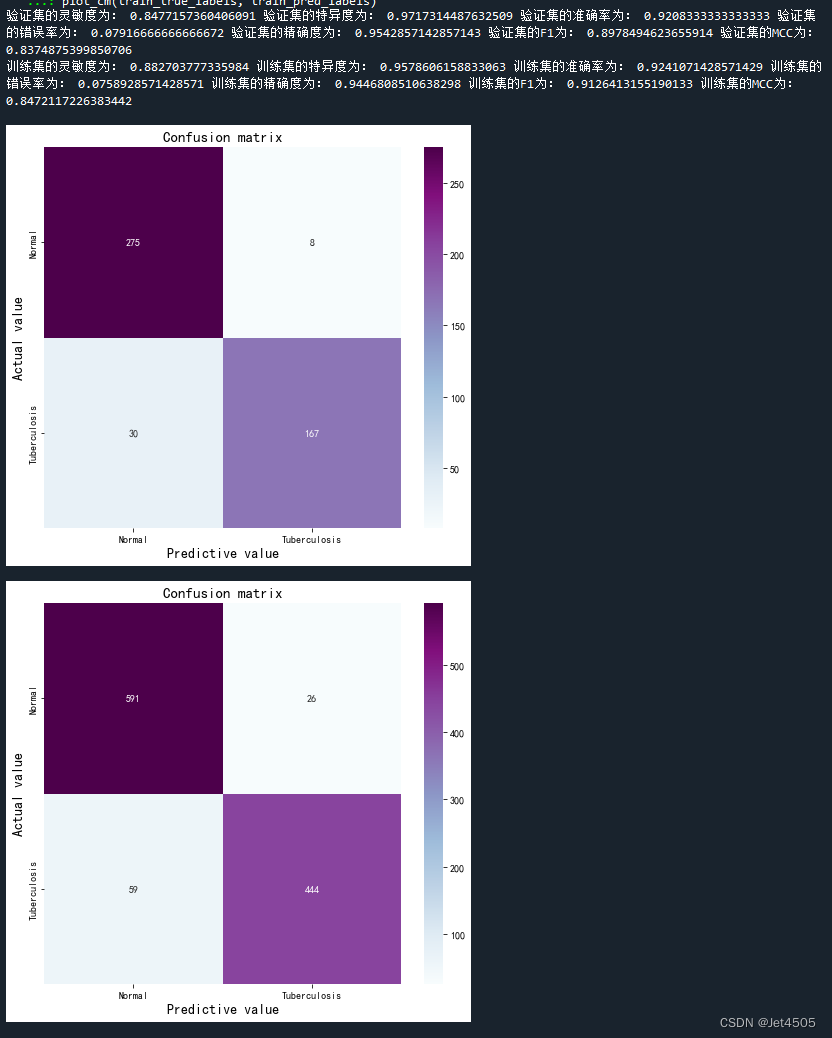

(f) Confusion matrix visualization and model metrics

```python
from sklearn.metrics import classification_report, confusion_matrix
import math
import pandas as pd
import numpy as np
import seaborn as sns
from matplotlib.pyplot import imshow

# Plot a confusion matrix as a heatmap
def plot_cm(labels, predictions):
    # Build the confusion matrix (rows: actual class, columns: predicted class)
    conf_numpy = confusion_matrix(labels, predictions)
    # Convert the matrix to a DataFrame
    conf_df = pd.DataFrame(conf_numpy, index=class_names, columns=class_names)

    plt.figure(figsize=(8, 7))

    sns.heatmap(conf_df, annot=True, fmt="d", cmap="BuPu")

    plt.title('Confusion matrix', fontsize=15)
    plt.ylabel('Actual value', fontsize=14)
    plt.xlabel('Predicted value', fontsize=14)

def evaluate_model(model, dataloader, device):
    model.eval()  # set the model to evaluation mode
    true_labels = []
    pred_labels = []
    # Iterate over the data
    for inputs, labels in dataloader:
        inputs = inputs.to(device)
        labels = labels.to(device)

        # Forward pass without gradients
        with torch.no_grad():
            outputs = model(inputs)
            _, preds = torch.max(outputs, 1)

        true_labels.extend(labels.cpu().numpy())
        pred_labels.extend(preds.cpu().numpy())

    return true_labels, pred_labels

# Predicted and true labels on the validation set
true_labels, pred_labels = evaluate_model(model, dataloaders['val'], device)

# Confusion matrix: class index 1 is treated as the positive class
cm_val = confusion_matrix(true_labels, pred_labels)
a_val = cm_val[0,0]  # true negatives
b_val = cm_val[0,1]  # false positives
c_val = cm_val[1,0]  # false negatives
d_val = cm_val[1,1]  # true positives

# Performance metrics
acc_val = (a_val+d_val)/(a_val+b_val+c_val+d_val)  # accuracy
error_rate_val = 1 - acc_val                       # error rate
sen_val = d_val/(d_val+c_val)                      # sensitivity
sep_val = a_val/(a_val+b_val)                      # specificity
precision_val = d_val/(b_val+d_val)                # precision
F1_val = (2*precision_val*sen_val)/(precision_val+sen_val)  # F1 score
MCC_val = (d_val*a_val-b_val*c_val) / (np.sqrt((d_val+b_val)*(d_val+c_val)*(a_val+b_val)*(a_val+c_val)))  # Matthews correlation coefficient

# Print the metrics
print("Validation sensitivity:", sen_val,
      "Validation specificity:", sep_val,
      "Validation accuracy:", acc_val,
      "Validation error rate:", error_rate_val,
      "Validation precision:", precision_val,
      "Validation F1:", F1_val,
      "Validation MCC:", MCC_val)

# Plot the confusion matrix
plot_cm(true_labels, pred_labels)


# Predicted and true labels on the training set
train_true_labels, train_pred_labels = evaluate_model(model, dataloaders['train'], device)
# Confusion matrix
cm_train = confusion_matrix(train_true_labels, train_pred_labels)
a_train = cm_train[0,0]
b_train = cm_train[0,1]
c_train = cm_train[1,0]
d_train = cm_train[1,1]
acc_train = (a_train+d_train)/(a_train+b_train+c_train+d_train)
error_rate_train = 1 - acc_train
sen_train = d_train/(d_train+c_train)
sep_train = a_train/(a_train+b_train)
precision_train = d_train/(b_train+d_train)
F1_train = (2*precision_train*sen_train)/(precision_train+sen_train)
MCC_train = (d_train*a_train-b_train*c_train) / (math.sqrt((d_train+b_train)*(d_train+c_train)*(a_train+b_train)*(a_train+c_train)))
print("Training sensitivity:", sen_train,
      "Training specificity:", sep_train,
      "Training accuracy:", acc_train,
      "Training error rate:", error_rate_train,
      "Training precision:", precision_train,
      "Training F1:", F1_train,
      "Training MCC:", MCC_train)

# Plot the confusion matrix
plot_cm(train_true_labels, train_pred_labels)
```

The results look good.
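The metric formulas used above can be checked on a small worked example. The counts below are made up for illustration and are not results from this model. `binary_metrics` is a hypothetical helper that takes the four cells of a 2x2 confusion matrix, with class index 1 as the positive class. Note that `ImageFolder` assigns class indices in alphabetical order of the folder names, so which class ends up as "positive" depends on how the folders are named.

```python
import math

def binary_metrics(tn, fp, fn, tp):
    """Standard binary classification metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)   # recall on the positive class
    specificity = tn / (tn + fp)   # recall on the negative class
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / (tn + fp + fn + tp),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
        "mcc": (tp * tn - fp * fn)
               / math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)),
    }

# Made-up counts: 50 actual negatives, 50 actual positives
example = binary_metrics(tn=40, fp=10, fn=5, tp=45)
for name, value in example.items():
    print(name, round(value, 4))
```

With these counts the accuracy is 0.85, the sensitivity 0.9, and the specificity 0.8, which matches what the formulas in the evaluation code would give for the same matrix.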

(g) Plotting the ROC curve and computing AUC

```python
from sklearn import metrics
import numpy as np
import matplotlib.pyplot as plt

def plot_roc(name, labels, predictions, **kwargs):
    fp, tp, _ = metrics.roc_curve(labels, predictions)

    plt.plot(fp, tp, label=name, linewidth=2, **kwargs)
    plt.plot([0, 1], [0, 1], color='orange', linestyle='--')
    plt.xlabel('False positive rate')
    plt.ylabel('True positive rate')
    ax = plt.gca()
    ax.set_aspect('equal')

def collect_scores(model, dataloader, device):
    """Return the true labels and the predicted probability of class 1."""
    model.eval()  # make sure the model is in evaluation mode
    scores, targets = [], []
    with torch.no_grad():
        for images, labels in dataloader:
            outputs = model(images.to(device))
            # The model returns logits; convert them to probabilities
            # and use those, not hard labels, as the ROC scores
            probs = torch.softmax(outputs, dim=1)[:, 1]
            scores.extend(probs.cpu().numpy())
            targets.extend(labels.numpy())
    return targets, scores

val_label_auc, val_pre_auc = collect_scores(model, dataloaders['val'], device)
auc_score_val = metrics.roc_auc_score(val_label_auc, val_pre_auc)

train_label_auc, train_pre_auc = collect_scores(model, dataloaders['train'], device)
auc_score_train = metrics.roc_auc_score(train_label_auc, train_pre_auc)

plot_roc('validation AUC: {0:.4f}'.format(auc_score_val), val_label_auc, val_pre_auc, color="red", linestyle='--')
plot_roc('training AUC: {0:.4f}'.format(auc_score_train), train_label_auc, train_pre_auc, color="blue", linestyle='--')
plt.legend(loc='lower right')
#plt.savefig("roc.pdf", dpi=300,format="pdf")

print("Training AUC:", auc_score_train, "Validation AUC:", auc_score_val)
```

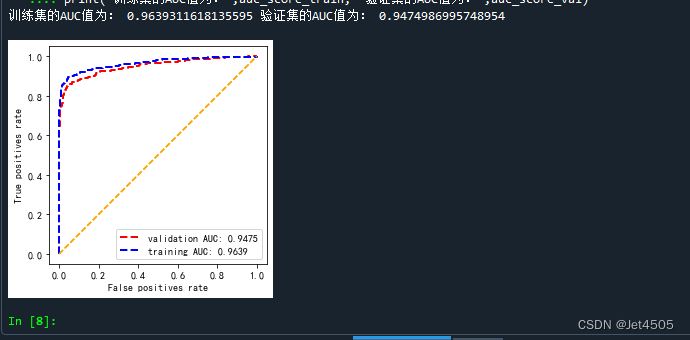

The resulting ROC curves look excellent!
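For intuition about what the AUC number means, it can be computed without any library as the fraction of (positive, negative) pairs in which the positive example gets the higher score, with ties counted as half. The sketch below is a deliberately simple quadratic-time version for illustration, with made-up labels and scores. `roc_auc` is a hypothetical helper, not the scikit-learn function.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a random positive outscores a random negative."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    # Each pair contributes 1 for a win, 0.5 for a tie, 0 for a loss
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

labels = [0, 0, 1, 1]            # made-up ground truth
scores = [0.1, 0.4, 0.35, 0.8]   # made-up predicted probabilities of class 1

print(roc_auc(labels, scores))
```

Because only the ordering of the scores matters, the score passed to the ROC routines has to rank examples by how likely they are to be positive, which is why a class-1 probability is used there.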

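III. Closing remarks

The amount of computation and the resources consumed are still large. On this dataset the model performs somewhat better than the ViT model, which suggests the optimized training strategy does have an effect.

IV. Data

Link: https://pan.baidu.com/s/15vSVhz1rQBtqNkNp2GQyVw?pwd=x3jf

Extraction code: x3jf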