Implementing RAG with llama-index and the Qwen LLM

Author: 凡人多烦事01 | 2024-04-24 03:05:33

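Background

Most llama-index RAG examples use English-language LLMs such as Llama, and examples built on Chinese models are scarce. This post implements a llama-index RAG pipeline with the Qwen LLM.

Environment setup

(1) pip packages

llama-index depends on many packages. Below is the pip package list (requirements.txt) from my working setup.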

- absl-py==1.4.0

- accelerate==0.27.2

- aiohttp==3.9.3

- aiosignal==1.3.1

- aliyun-python-sdk-core==2.13.36

- aliyun-python-sdk-kms==2.16.1

- annotated-types==0.6.0

- anyio==3.7.1

- apphub @ file:///environment/apps/apphub/dist/apphub-1.0.0.tar.gz#sha256=260f99c0de4c575b19ab913aa134877e9efd81b820b97511fc8379674643c253

- argon2-cffi==21.3.0

- argon2-cffi-bindings==21.2.0

- asgiref==3.7.2

- asttokens==2.2.1

- astunparse==1.6.3

- async-timeout==4.0.3

- attrs==23.1.0

- Babel==2.12.1

- backcall==0.2.0

- backoff==2.2.1

- bcrypt==4.1.2

- beautifulsoup4==4.12.3

- bleach==6.0.0

- boltons @ file:///croot/boltons_1677628692245/work

- brotlipy==0.7.0

- bs4==0.0.2

- build==1.1.1

- cachetools==5.3.1

- certifi @ file:///croot/certifi_1690232220950/work/certifi

- cffi @ file:///croot/cffi_1670423208954/work

- chardet==3.0.4

- charset-normalizer @ file:///tmp/build/80754af9/charset-normalizer_1630003229654/work

- chroma-hnswlib==0.7.3

- chromadb==0.4.24

- click==7.1.2

- cmake==3.25.0

- coloredlogs==15.0.1

- comm==0.1.4

- conda @ file:///croot/conda_1690494963117/work

- conda-content-trust @ file:///tmp/abs_5952f1c8-355c-4855-ad2e-538535021ba5h26t22e5/croots/recipe/conda-content-trust_1658126371814/work

- conda-libmamba-solver @ file:///croot/conda-libmamba-solver_1685032319139/work/src

- conda-package-handling @ file:///croot/conda-package-handling_1685024767917/work

- conda_package_streaming @ file:///croot/conda-package-streaming_1685019673878/work

- contourpy==1.2.0

- crcmod==1.7

- cryptography @ file:///croot/cryptography_1686613057838/work

- cycler==0.12.1

- dataclasses-json==0.6.4

- debugpy==1.6.7

- decorator==5.1.1

- defusedxml==0.7.1

- Deprecated==1.2.14

- dirtyjson==1.0.8

- distro==1.9.0

- ecdsa==0.18.0

- exceptiongroup==1.1.2

- executing==1.2.0

- fastapi==0.104.1

- fastjsonschema==2.18.0

- featurize==0.0.24

- filelock==3.9.0

- flatbuffers==23.5.26

- fonttools==4.44.0

- frozenlist==1.4.1

- fsspec==2024.2.0

- gast==0.4.0

- google-auth==2.22.0

- google-auth-oauthlib==1.0.0

- google-pasta==0.2.0

- googleapis-common-protos==1.62.0

- greenlet==3.0.3

- grpcio==1.62.0

- gunicorn==21.2.0

- h11==0.14.0

- h5py==3.9.0

- httpcore==0.17.3

- httptools==0.6.1

- httpx==0.24.1

- huggingface-hub==0.20.3

- humanfriendly==10.0

- idna==2.10

- imageio==2.32.0

- importlib-metadata==6.11.0

- importlib_resources==6.1.3

- ipykernel==6.25.0

- ipython==8.14.0

- ipython-genutils==0.2.0

- ipywidgets==8.1.2

- jedi==0.19.0

- Jinja2==3.1.2

- jmespath==0.10.0

- joblib==1.3.2

- json5==0.9.14

- jsonpatch @ file:///tmp/build/80754af9/jsonpatch_1615747632069/work

- jsonpointer==2.1

- jsonschema==4.18.6

- jsonschema-specifications==2023.7.1

- jupyter-server==1.24.0

- jupyter_client==8.3.0

- jupyter_core==5.3.1

- jupyterlab==3.2.9

- jupyterlab-pygments==0.2.2

- jupyterlab_server==2.24.0

- jupyterlab_widgets==3.0.10

- keras==2.13.1

- kiwisolver==1.4.5

- kubernetes==29.0.0

- lazy_loader==0.3

- libclang==16.0.6

- libmambapy @ file:///croot/mamba-split_1685993156657/work/libmambapy

- lit==15.0.7

- llama-index==0.10.17

- llama-index-agent-openai==0.1.5

- llama-index-cli==0.1.8

- llama-index-core==0.10.17

- llama-index-embeddings-huggingface==0.1.4

- llama-index-embeddings-openai==0.1.6

- llama-index-indices-managed-llama-cloud==0.1.3

- llama-index-legacy==0.9.48

- llama-index-llms-huggingface==0.1.3

- llama-index-llms-openai==0.1.7

- llama-index-multi-modal-llms-openai==0.1.4

- llama-index-program-openai==0.1.4

- llama-index-question-gen-openai==0.1.3

- llama-index-readers-file==0.1.8

- llama-index-readers-llama-parse==0.1.3

- llama-index-vector-stores-chroma==0.1.5

- llama-parse==0.3.8

- llamaindex-py-client==0.1.13

- Markdown==3.4.4

- MarkupSafe==2.1.2

- marshmallow==3.21.1

- matplotlib==3.8.1

- matplotlib-inline==0.1.6

- mistune==3.0.1

- mmh3==4.1.0

- monotonic==1.6

- mpmath==1.2.1

- multidict==6.0.4

- mypy-extensions==1.0.0

- nbclassic==0.2.8

- nbclient==0.8.0

- nbconvert==7.7.3

- nbformat==5.9.2

- nest-asyncio==1.6.0

- networkx==3.0

- nltk==3.8.1

- notebook==6.4.12

- numpy==1.24.1

- nvidia-cublas-cu12==12.1.3.1

- nvidia-cuda-cupti-cu12==12.1.105

- nvidia-cuda-nvrtc-cu12==12.1.105

- nvidia-cuda-runtime-cu12==12.1.105

- nvidia-cudnn-cu12==8.9.2.26

- nvidia-cufft-cu12==11.0.2.54

- nvidia-curand-cu12==10.3.2.106

- nvidia-cusolver-cu12==11.4.5.107

- nvidia-cusparse-cu12==12.1.0.106

- nvidia-nccl-cu12==2.19.3

- nvidia-nvjitlink-cu12==12.4.99

- nvidia-nvtx-cu12==12.1.105

- oauthlib==3.2.2

- onnxruntime==1.17.1

- openai==1.13.3

- opencv-python==4.8.1.78

- opentelemetry-api==1.23.0

- opentelemetry-exporter-otlp-proto-common==1.23.0

- opentelemetry-exporter-otlp-proto-grpc==1.23.0

- opentelemetry-instrumentation==0.44b0

- opentelemetry-instrumentation-asgi==0.44b0

- opentelemetry-instrumentation-fastapi==0.44b0

- opentelemetry-proto==1.23.0

- opentelemetry-sdk==1.23.0

- opentelemetry-semantic-conventions==0.44b0

- opentelemetry-util-http==0.44b0

- opt-einsum==3.3.0

- orjson==3.9.15

- oss2==2.18.1

- overrides==7.7.0

- packaging @ file:///croot/packaging_1678965309396/work

- pandas==2.1.2

- pandocfilters==1.5.0

- parso==0.8.3

- pexpect==4.8.0

- pickleshare==0.7.5

- Pillow==9.3.0

- platformdirs==3.10.0

- pluggy @ file:///tmp/build/80754af9/pluggy_1648024709248/work

- posthog==3.5.0

- prometheus-client==0.17.1

- prompt-toolkit==3.0.39

- protobuf==4.23.4

- psutil==5.9.5

- ptyprocess==0.7.0

- pulsar-client==3.4.0

- pure-eval==0.2.2

- pyasn1==0.5.0

- pyasn1-modules==0.3.0

- pycosat @ file:///croot/pycosat_1666805502580/work

- pycparser @ file:///tmp/build/80754af9/pycparser_1636541352034/work

- pycryptodome==3.18.0

- pydantic==2.4.2

- pydantic_core==2.10.1

- Pygments==2.15.1

- PyMuPDF==1.23.26

- PyMuPDFb==1.23.22

- pyOpenSSL @ file:///croot/pyopenssl_1677607685877/work

- pyparsing==3.1.1

- pypdf==4.1.0

- PyPika==0.48.9

- pyproject_hooks==1.0.0

- PySocks @ file:///home/builder/ci_310/pysocks_1640793678128/work

- python-dateutil==2.8.2

- python-dotenv==1.0.0

- pytz==2023.3.post1

- PyYAML==6.0.1

- pyzmq==25.1.0

- referencing==0.30.0

- regex==2023.12.25

- requests==2.31.0

- requests-oauthlib==1.3.1

- rpds-py==0.9.2

- rsa==4.9

- ruamel.yaml @ file:///croot/ruamel.yaml_1666304550667/work

- ruamel.yaml.clib @ file:///croot/ruamel.yaml.clib_1666302247304/work

- safetensors==0.4.2

- scikit-image==0.22.0

- scikit-learn==1.3.2

- scipy==1.11.3

- seaborn==0.13.0

- Send2Trash==1.8.2

- six @ file:///tmp/build/80754af9/six_1644875935023/work

- sniffio==1.3.0

- socksio==1.0.0

- soupsieve==2.4.1

- SQLAlchemy==2.0.28

- sshpubkeys==3.3.1

- stack-data==0.6.2

- starlette==0.27.0

- sympy==1.11.1

- tabulate==0.8.7

- tenacity==8.2.3

- tensorboard==2.13.0

- tensorboard-data-server==0.7.1

- tensorflow==2.13.0

- tensorflow-estimator==2.13.0

- tensorflow-io-gcs-filesystem==0.33.0

- termcolor==2.3.0

- terminado==0.17.1

- threadpoolctl==3.2.0

- tifffile==2023.9.26

- tiktoken==0.6.0

- tinycss2==1.2.1

- tokenizers==0.15.2

- tomli==2.0.1

- toolz @ file:///croot/toolz_1667464077321/work

- torch==2.2.1

- torchaudio==2.0.2+cu118

- torchvision==0.15.2+cu118

- tornado==6.3.2

- tqdm==4.66.2

- traitlets==5.9.0

- transformers==4.38.2

- triton==2.2.0

- typer==0.9.0

- typing-inspect==0.9.0

- typing_extensions==4.8.0

- tzdata==2023.3

- urllib3==1.25.11

- uvicorn==0.23.2

- uvloop==0.19.0

- watchfiles==0.21.0

- wcwidth==0.2.5

- webencodings==0.5.1

- websocket-client==1.2.1

- websockets==12.0

- Werkzeug==2.3.6

- widgetsnbextension==4.0.10

- workspace @ file:///home/featurize/work/workspace/dist/workspace-0.1.0.tar.gz#sha256=b292beb3599f79d3791771eff9dc422cc37c58c1fc8daadeafbf025a2e7ea986

- wrapt==1.15.0

- yarl==1.9.2

- zipp==3.17.0

- zstandard @ file:///croot/zstandard_1677013143055/work
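The list above looks like a raw `pip freeze`, so some entries (those containing `@ file://`) point at build paths on the original machine and will probably not install elsewhere. A minimal sketch, assuming you only want the portable `name==version` pins; `split_requirements` is a hypothetical helper, not part of pip or llama-index:

```python
from typing import List, Tuple


def split_requirements(lines: List[str]) -> Tuple[List[str], List[str]]:
    """Separate portable pins (name==version) from machine-local entries."""
    portable, local = [], []
    for raw in lines:
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if " @ file://" in line:
            local.append(line)  # built from a path on the original machine
        else:
            portable.append(line)
    return portable, local


# A few entries copied from the list above.
sample = [
    "accelerate==0.27.2",
    "boltons @ file:///croot/boltons_1677628692245/work",
    "llama-index==0.10.17",
    "transformers==4.38.2",
]
portable, local = split_requirements(sample)
print(portable)
print(local)
```

Writing only the portable entries to a new requirements file keeps the pinned versions while leaving the machine-specific packages to be installed by other means.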

(2) Python environment

(3) Installation commands

- !pip install llama-index

- !pip install llama-index-llms-huggingface

- !pip install llama-index-embeddings-huggingface

- !pip install ipywidgets

- !pip install torch

- !git clone https://www.modelscope.cn/AI-ModelScope/bge-small-zh-v1.5.git

- !git clone https://www.modelscope.cn/qwen/Qwen1.5-4B-Chat.git
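One thing worth checking after the two `git clone` commands: assuming the ModelScope repositories store their weights through Git LFS, cloning without `git lfs` installed leaves small text pointer files in place of the real weights, and model loading then fails. The sketch below looks for such pointers; `find_lfs_pointers` is a hypothetical helper, and the path is the one used later in this post.

```python
from pathlib import Path
from typing import List

# Every Git LFS pointer file starts with this line.
LFS_HEADER = b"version https://git-lfs.github.com/spec/v1"


def find_lfs_pointers(model_dir: str) -> List[str]:
    """Return names of files that are still Git LFS pointers, not real content."""
    pointers = []
    for path in sorted(Path(model_dir).iterdir()):
        if path.is_file():
            with path.open("rb") as fh:
                if fh.read(len(LFS_HEADER)) == LFS_HEADER:
                    pointers.append(path.name)
    return pointers


# Only run the check if the directory exists on this machine.
model_dir = Path("/home/featurize/Qwen1.5-4B-Chat")
if model_dir.is_dir():
    print(find_lfs_pointers(str(model_dir)))
```

An empty list suggests the weights were downloaded in full; any names printed are files that still need `git lfs pull`.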

(4) Directory layout

Code

(1) Load the model

- import torch

- from llama_index.llms.huggingface import HuggingFaceLLM

- from llama_index.core import PromptTemplate

- import os

- os.environ['KMP_DUPLICATE_LIB_OK']='True'

- # Local path to the Qwen1.5-4B-Chat checkpoint cloned from ModelScope

- QWEN_4B_CHAT = "/home/featurize/Qwen1.5-4B-Chat"

-

- selected_model = QWEN_4B_CHAT

-

- SYSTEM_PROMPT = """You are an AI assistant that answers questions in a friendly manner, based on the given source documents. Here are some rules you always follow:

- - Generate human readable output, avoid creating output with gibberish text.

- - Generate only the requested output, don't include any other language before or after the requested output.

- - Never say thank you, that you are happy to help, that you are an AI agent, etc. Just answer directly.

- - Generate professional language typically used in business documents in North America.

- - Never generate offensive or foul language.

- """

-

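- # Qwen1.5-Chat is trained on the ChatML format, so wrap the query in ChatML tags rather than Llama-2's [INST] tags

- query_wrapper_prompt = PromptTemplate(

- "<|im_start|>system\n" + SYSTEM_PROMPT + "<|im_end|>\n<|im_start|>user\n{query_str}<|im_end|>\n<|im_start|>assistant\n"

- )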

-

- llm = HuggingFaceLLM(context_window=4096,

- max_new_tokens=2048,

- generate_kwargs={"temperature": 0.0, "do_sample": False},

- query_wrapper_prompt=query_wrapper_prompt,

- tokenizer_name=selected_model,

- model_name=selected_model,

- device_map="auto"

- )
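For reference, Qwen1.5-Chat models are trained on the ChatML conversation format, while the `[INST]`/`<<SYS>>` tags are the Llama-2 chat convention. The sketch below shows what a ChatML-wrapped prompt looks like as a plain string; `build_chatml_prompt` is a hypothetical helper written for illustration, not a llama-index function.

```python
def build_chatml_prompt(system_prompt: str, query: str) -> str:
    """Wrap a system prompt and a user query in ChatML tags."""
    return (
        f"<|im_start|>system\n{system_prompt.strip()}<|im_end|>\n"
        f"<|im_start|>user\n{query.strip()}<|im_end|>\n"
        "<|im_start|>assistant\n"  # left open so the model writes the answer
    )


prompt = build_chatml_prompt("You are a helpful assistant.", "What is RAG?")
print(prompt)
```

In practice the tokenizer's `apply_chat_template` method in transformers can build this string from a list of messages, which avoids hand-writing the tags.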

(2) Load the embedding model

- from llama_index.embeddings.huggingface import HuggingFaceEmbedding

-

- embed_model = HuggingFaceEmbedding(model_name="/home/featurize/bge-small-zh-v1.5")

- from llama_index.core import Settings

-

- Settings.llm = llm

- Settings.embed_model = embed_model

- from llama_index.core import SimpleDirectoryReader

-

- # load documents

- documents = SimpleDirectoryReader("./data/").load_data()

- from llama_index.core import VectorStoreIndex

- index = VectorStoreIndex.from_documents(documents)

- # build a query engine on top of the vector index

- query_engine = index.as_query_engine()

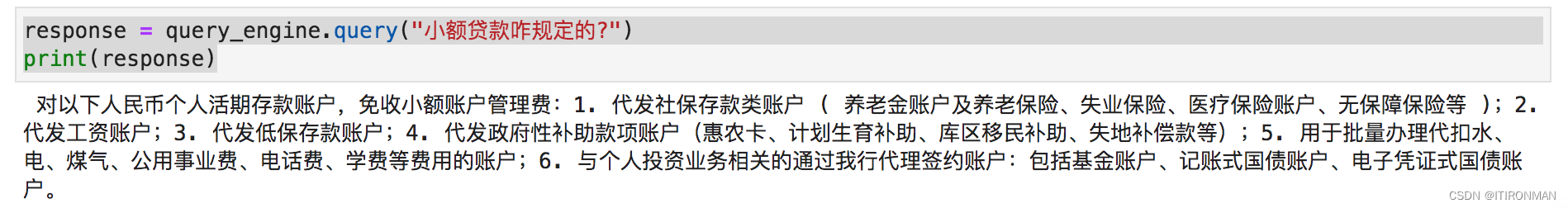

- # the query asks: "How are small loans regulated?"

- response = query_engine.query("小额贷款咋规定的?")

- print(response)

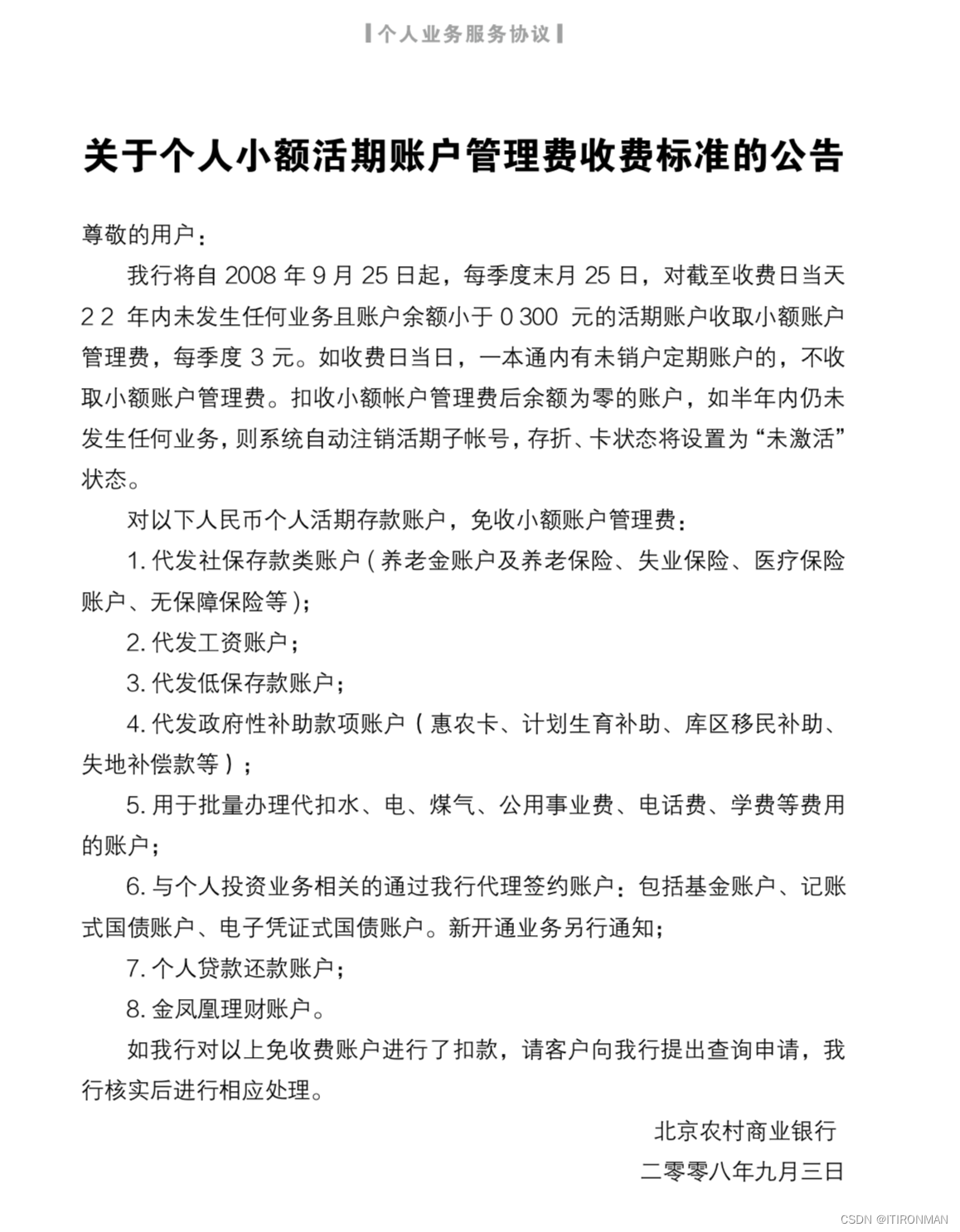
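Conceptually, the query engine embeds the question, picks the stored chunks whose embeddings are most similar to it, and passes those chunks to the LLM as context. The following is a minimal sketch of that retrieval step with toy 3-dimensional vectors standing in for real bge embeddings; `cosine` and `top_k` are hypothetical helpers, and llama-index's actual implementation differs in detail.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: Sequence[float],
          chunks: List[Tuple[str, Sequence[float]]],
          k: int = 2) -> List[str]:
    """Return the texts of the k chunks most similar to the query vector."""
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, c[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


# Toy "index": (chunk text, made-up embedding).
chunks = [
    ("loan rules", [0.9, 0.1, 0.0]),
    ("office hours", [0.0, 0.2, 0.9]),
    ("interest rates", [0.7, 0.6, 0.1]),
]
context = top_k([1.0, 0.2, 0.0], chunks, k=2)

# The retrieved chunks are pasted in front of the question before generation.
prompt = "Context:\n" + "\n".join(context) + "\n\nQuestion: How are small loans regulated?"
print(context)
```

The number of retrieved chunks can be adjusted in llama-index through the `similarity_top_k` argument of `as_query_engine`.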

Knowledge base

The knowledge base is a key part of a llama-index RAG pipeline. It consists of documents of various types; the document used here is a single PDF file.
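Before a document can be embedded, it is split into smaller chunks so that each one fits the embedding model and can be retrieved on its own. A simplified sketch of overlapping chunking follows; it splits by characters for clarity, whereas llama-index's default splitter works on sentences and tokens, and `chunk_text` is a hypothetical helper.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into fixed-size chunks that share `overlap` characters."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
        start += step
    return chunks


chunks = chunk_text("abcdefghij", chunk_size=4, overlap=1)
print(chunks)
```

The overlap means a sentence that straddles a chunk boundary still appears intact in at least one chunk, at the cost of some duplicated text in the index.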

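Summary

As the code above shows, Qwen combined with the bge-zh embedding model gives a RAG pipeline that runs entirely on locally downloaded models, and it can answer questions in Chinese over the knowledge base. This makes private deployment practical and leaves room to extend the functionality.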
