Building a Big Data Cluster: Installing Hadoop 3 on Linux (Hadoop 3.0 High-Availability Cluster Setup)

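VI. Cluster high-availability test

1. Stop the NameNode that is in the Active state

2. Check the NameNode that was in the Standby state

3. Restart the stopped NameNode

4. Check the state of both NameNodes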
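
The four steps above can be scripted roughly as follows. This is a minimal sketch rather than the author's procedure: it assumes the `hdfs` CLI is on `PATH`, it uses the NameNode IDs `hadoop001` and `hadoop002` declared in `dfs.ha.namenodes.ns1`, and the helper names (`run_cli`, `namenode_states`, `find_active`) are invented for illustration. `hdfs haadmin -getServiceState` and `hdfs --daemon stop|start namenode` are standard Hadoop 3 commands.

```python
import subprocess
from typing import Callable, Dict, List

# NameNode IDs as declared in dfs.ha.namenodes.ns1 in this article.
NAMENODE_IDS = ["hadoop001", "hadoop002"]

# Commands to run on the host of the NameNode being stopped or restarted.
STOP_NAMENODE = ["hdfs", "--daemon", "stop", "namenode"]
START_NAMENODE = ["hdfs", "--daemon", "start", "namenode"]


def run_cli(args: List[str]) -> str:
    """Run a Hadoop CLI command and return its stripped stdout."""
    result = subprocess.run(args, capture_output=True, text=True, check=True)
    return result.stdout.strip()


def namenode_states(
    ids: List[str], run: Callable[[List[str]], str] = run_cli
) -> Dict[str, str]:
    """Query `hdfs haadmin -getServiceState` once per NameNode ID."""
    return {nn: run(["hdfs", "haadmin", "-getServiceState", nn]) for nn in ids}


def find_active(states: Dict[str, str]) -> str:
    """Return the single active NameNode ID, or raise if there is not exactly one."""
    active = [nn for nn, state in states.items() if state == "active"]
    if len(active) != 1:
        raise RuntimeError(f"expected exactly one active NameNode, got {active}")
    return active[0]
```

A typical run would call `find_active(namenode_states(NAMENODE_IDS))`, execute `STOP_NAMENODE` on that host, confirm that the other ID now reports `active`, then execute `START_NAMENODE` and check that the restarted NameNode comes back as `standby`. The `run` parameter exists only so the lookup can be exercised without a live cluster.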

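I. Preparation

1. Download location

2. Reference documentation

Apache Hadoop 3.0.0 – Hadoop Cluster Setup

3. Passwordless SSH setup

Big Data Basics: Passwordless SSH Login (qq262593421's blog, CSDN)

4. ZooKeeper installation

Big Data High-Availability Technology: Installing and Configuring ZooKeeper 3.4.5 (qq262593421's blog, CSDN)

5. Cluster role assignment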

| Hadoop cluster role | Cluster nodes |
| --- | --- |
| NameNode | hadoop001, hadoop002 |
| DataNode | hadoop003, hadoop004, hadoop005 |
| JournalNode | hadoop003, hadoop004, hadoop005 |
| ResourceManager | hadoop001, hadoop002 |
| NodeManager | hadoop003, hadoop004, hadoop005 |
| DFSZKFailoverController | hadoop001, hadoop002 |

II. Extract and install

Extract the archive

cd /usr/local/hadoop

tar zxpf hadoop-3.0.0.tar.gz

Create a symbolic link

ln -s hadoop-3.0.0 hadoop

III. Configure environment variables

Edit the /etc/profile file

vim /etc/profile

Add the following lines

export HADOOP_HOME=/usr/local/hadoop/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

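IV. Edit the configuration files

1. Check disk space

First check how much space each mount point has, so that Hadoop's data does not end up in a directory on a small mount.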

df -h

The disk totals 800 GB, of which the /home mount holds 741 GB, so every data directory configured below starts with /home.
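
The same check can be done programmatically when several candidate directories are in play. The sketch below uses only the standard library; the function names `free_gib` and `roomiest` are hypothetical, and the candidate paths are whatever mount points exist on the machine.

```python
import shutil
from typing import Dict, Iterable


def free_gib(paths: Iterable[str]) -> Dict[str, float]:
    """Map each existing path to the free space (GiB) of the filesystem holding it."""
    return {p: shutil.disk_usage(p).free / 2**30 for p in paths}


def roomiest(paths: Iterable[str]) -> str:
    """Return the path whose filesystem currently has the most free space."""
    usage = free_gib(paths)
    return max(usage, key=usage.get)
```

On the machine described here, `roomiest(["/", "/home"])` would be expected to pick `/home`, which matches the choice of `/home/cluster/...` for the data directories.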

2. Edit the configuration files

workers

hadoop003

hadoop004

hadoop005

core-site.xml

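    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://ns1</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>/home/cluster/hadoop/data/tmp</value>
      </property>
      <property>
        <name>io.file.buffer.size</name>
        <value>131072</value>
        <description>Size of read/write buffer used in SequenceFiles</description>
      </property>
      <property>
        <name>ha.zookeeper.quorum</name>
        <value>hadoop001:2181,hadoop002:2181,hadoop003:2181</value>
        <description>DFSZKFailoverController</description>
      </property>
      <property>
        <name>hadoop.proxyuser.root.hosts</name>
        <value>*</value>
      </property>
      <property>
        <name>hadoop.proxyuser.root.groups</name>
        <value>*</value>
      </property>
    </configuration>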
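
Hadoop's `*-site.xml` files are flat lists of `<property>` elements, each with a `<name>` and a `<value>`, which makes them easy to sanity-check before the cluster is started. The following is a small sketch using Python's standard `xml.etree.ElementTree`; `load_properties` is a hypothetical helper name and `SAMPLE` is a trimmed-down copy of the core-site.xml settings in this article.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def load_properties(xml_text: str) -> Dict[str, str]:
    """Parse a Hadoop *-site.xml document into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


SAMPLE = """<configuration>
  <property><name>fs.defaultFS</name><value>hdfs://ns1</value></property>
  <property><name>ha.zookeeper.quorum</name>
    <value>hadoop001:2181,hadoop002:2181,hadoop003:2181</value></property>
</configuration>"""

props = load_properties(SAMPLE)
# Host names of the ZooKeeper ensemble used for automatic failover.
zk_hosts = [hp.split(":")[0] for hp in props["ha.zookeeper.quorum"].split(",")]
```

Here `zk_hosts` holds the three ZooKeeper hosts, an odd-sized ensemble as ZooKeeper's majority quorum prefers, and `props["fs.defaultFS"]` points at the logical nameservice `ns1` rather than at a single NameNode host.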

hadoop-env.sh

export HDFS_NAMENODE_OPTS="-XX:+UseParallelGC -Xmx4g"

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_JOURNALNODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

export JAVA_HOME=/usr/java/jdk1.8

hdfs-site.xml

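    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <configuration>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>/home/cluster/hadoop/data/nn</value>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>/home/cluster/hadoop/data/dn</value>
      </property>
      <property>
        <name>dfs.journalnode.edits.dir</name>
        <value>/home/cluster/hadoop/data/jn</value>
      </property>
      <property>
        <name>dfs.nameservices</name>
        <value>ns1</value>
      </property>
      <property>
        <name>dfs.ha.namenodes.ns1</name>
        <value>hadoop001,hadoop002</value>
      </property>
      <property>
        <name>dfs.namenode.rpc-address.ns1.hadoop001</name>
        <value>hadoop001:8020</value>
      </property>
      <property>
        <name>dfs.namenode.http-address.ns1.hadoop001</name>
        <value>hadoop001:9870</value>
      </property>
      <property>
        <name>dfs.namenode.rpc-address.ns1.hadoop002</name>
        <value>hadoop002:8020</value>
      </property>
      <property>
        <name>dfs.namenode.http-address.ns1.hadoop002</name>
        <value>hadoop002:9870</value>
      </property>
      <property>
        <name>dfs.ha.automatic-failover.enabled.ns1</name>
        <value>true</value>
      </property>
      <property>
        <name>dfs.client.failover.proxy.provider.ns1</name>
        <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
      </property>
      <property>
        <name>dfs.permissions.enabled</name>
        <value>false</value>
      </property>
      <property>
        <name>dfs.replication</name>
        <value>2</value>
      </property>
      <property>
        <name>dfs.blocksize</name>
        <!-- Assumed value: 134217728 bytes = 128 MB, taken from the description -->
        <value>134217728</value>
        <description>HDFS blocksize of 128MB for large file-systems</description>
      </property>
      <property>
        <name>dfs.namenode.handler.count</name>
        <value>100</value>
        <description>More NameNode server threads to handle RPCs from large number of DataNodes.</description>
      </property>
      <property>
        <name>dfs.namenode.shared.edits.dir</name>
        <value>qjournal://hadoop001:8485;hadoop002:8485;hadoop003:8485/ns1</value>
      </property>
      <property>
        <name>dfs.ha.fencing.methods</name>
        <value>sshfence</value>
      </property>
      <property>
        <name>dfs.ha.fencing.ssh.private-key-files</name>
        <value>/root/.ssh/id_rsa</value>
      </property>
    </configuration>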
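
The NameNodes write their edit log to the JournalNodes named in `dfs.namenode.shared.edits.dir`, so the hosts in that URI need to be the ones that actually run the JournalNode daemon. The URI in this article lists hadoop001 to hadoop003, while the role table in section I.5 assigns JournalNode to hadoop003 to hadoop005, so one of the two presumably needs adjusting. A minimal sketch of such a cross-check follows; `parse_qjournal` is a hypothetical helper, and the two constants are copied from this article.

```python
from typing import List, Tuple


def parse_qjournal(uri: str) -> Tuple[List[str], str]:
    """Split a qjournal:// URI into its JournalNode hosts and journal ID."""
    prefix = "qjournal://"
    if not uri.startswith(prefix):
        raise ValueError(f"not a qjournal URI: {uri}")
    authority, _, journal_id = uri[len(prefix):].partition("/")
    hosts = [hp.split(":")[0] for hp in authority.split(";")]
    return hosts, journal_id


# Values copied from this article.
SHARED_EDITS = "qjournal://hadoop001:8485;hadoop002:8485;hadoop003:8485/ns1"
JOURNALNODE_ROLE_HOSTS = {"hadoop003", "hadoop004", "hadoop005"}

hosts, journal_id = parse_qjournal(SHARED_EDITS)
# Hosts named in the URI that the role table does not list as JournalNodes.
missing = sorted(set(hosts) - JOURNALNODE_ROLE_HOSTS)
```

With the values as given, `missing` contains `hadoop001` and `hadoop002`. Either the JournalNode daemon has to be started on those hosts as well, or the URI should name the hosts from the role table.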

mapred-site.xml

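    <?xml version="1.0"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
        <description>Execution framework set to Hadoop YARN.</description>
      </property>
      <property>
        <name>mapreduce.map.memory.mb</name>
        <value>4096</value>
        <description>Larger resource limit for maps.</description>
      </property>
      <property>
        <name>mapreduce.map.java.opts</name>
        <value>-Xmx4096M</value>
        <description>Larger heap-size for child jvms of maps.</description>
      </property>
      <property>
        <name>mapreduce.reduce.memory.mb</name>
        <value>4096</value>
        <description>Larger resource limit for reduces.</description>
      </property>
      <property>
        <name>mapreduce.reduce.java.opts</name>
        <value>-Xmx4096M</value>
        <description>Larger heap-size for child jvms of reduces.</description>
      </property>
      <property>
        <name>mapreduce.task.io.sort.mb</name>
        <value>2040</value>
        <description>Higher memory-limit while sorting data for efficiency.</description>
      </property>
      <property>
        <name>mapreduce.task.io.sort.factor</name>
        <value>400</value>
        <description>More streams merged at once while sorting files.</description>
      </property>
      <property>
        <name>mapreduce.reduce.shuffle.parallelcopies</name>
        <value>200</value>
        <description>Higher number of parallel copies run by reduces to fetch outputs from very large number of maps.</description>
      </property>
      <property>
        <name>mapreduce.jobhistory.address</name>
        <value>hadoop001:10020</value>
        <description>MapReduce JobHistory Server host:port. Default port is 10020.</description>
      </property>
      <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>hadoop001:19888</value>
        <description>MapReduce JobHistory Server Web UI host:port. Default port is 19888.</description>
      </property>
      <property>
        <name>mapreduce.jobhistory.intermediate-done-dir</name>
        <value>/tmp/mr-history/tmp</value>
        <description>Directory where history files are written by MapReduce jobs.</description>
      </property>
      <property>
        <name>mapreduce.jobhistory.done-dir</name>
        <value>/tmp/mr-history/done</value>
        <description>Directory where history files are managed by the MR JobHistory Server.</description>
      </property>
    </configuration>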
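
One detail in the memory settings above is worth checking. `mapreduce.map.memory.mb` and `mapreduce.reduce.memory.mb` limit the whole container process, while `-Xmx` only sets the JVM heap. With both at 4096 MB the heap alone may fill the container, leaving no room for non-heap memory, and YARN can kill tasks that exceed their limit. The examples in the Apache cluster setup guide keep the heap below the container size (for instance 1536 MB with `-Xmx1024M`). The sketch below computes the ratio; the 80% figure is a common rule of thumb assumed here, not something this article prescribes, and `xmx_mb` and `heap_ratio` are hypothetical helper names.

```python
import re


def xmx_mb(java_opts: str) -> int:
    """Extract the -Xmx heap size from a JVM options string, in MB."""
    match = re.search(r"-Xmx(\d+)([mMgG])", java_opts)
    if match is None:
        raise ValueError(f"no -Xmx flag with an m/g suffix in {java_opts!r}")
    size = int(match.group(1))
    return size * 1024 if match.group(2) in "gG" else size


def heap_ratio(container_mb: int, java_opts: str) -> float:
    """Fraction of the container's memory claimed by the JVM heap."""
    return xmx_mb(java_opts) / container_mb


# Values from the mapred-site.xml in this article: heap equals the container size.
ratio = heap_ratio(4096, "-Xmx4096M")

# Assumed rule of thumb: keep the heap at roughly 80% of the container.
suggested = f"-Xmx{int(4096 * 0.8)}M"
```

Here `ratio` is 1.0, and `suggested` is a heap setting that leaves about 800 MB of headroom inside the same 4096 MB container.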

yarn-site.xml

<?xml version="1.0"?>yarn.resourcemanager.ha.enabled

true

yarn.resourcemanager.ha.automatic-failover.enabled

true

yarn.resourcemanager.ha.automatic-failover.embedded

true

yarn.resourcemanager.cluster-id

yarn-rm-cluster

yarn.resourcemanager.ha.rm-ids

rm1,rm2

yarn.resourcemanager.hostname.rm1

hadoop001

yarn.resourcemanager.hostname.rm2

hadoop002

yarn.resourcemanager.recovery.enabled

true

yarn.resourcemanager.zk.state-store.address

hadoop001:2181,hadoop002:2181,hadoop003:2181

yarn.resourcemanager.zk-address

hadoop001:2181,hadoop002:2181,hadoop003:2181

yarn.resourcemanager.address.rm1

hadoop001:8032

yarn.resourcemanager.address.rm2

hadoop002:8032

yarn.resourcemanager.scheduler.address.rm1

hadoop001:8034

yarn.resourcemanager.webapp.address.rm1

hadoop001:8088

yarn.resourcemanager.scheduler.address.rm2

hadoop002:8034

yarn.resourcemanager.webapp.address.rm2

hadoop002:8088

yarn.acl.enable

true

Enable ACLs? Defaults to false.

yarn.admin.acl

*

yarn.log-aggregation-enable

false

Configuration to enable or disable log aggregation

yarn.resourcemanager.hostname

hadoop001

host Single hostname that can be set in place of setting all yarn.resourcemanager*address resources. Results in default ports for ResourceManager components.

yarn.scheduler.minimum-allocation-mb

1024

saprk调度时一个container能够申请的最小资源,默认值为1024MB

yarn.scheduler.maximum-allocation-mb

28672

saprk调度时一个container能够申请的最大资源,默认值为8192MB

yarn.nodemanager.resource.memory-mb

28672

nodemanager能够申请的最大内存,默认值为8192MB

yarn.app.mapreduce.am.resource.mb

28672

AM能够申请的最大内存,默认值为1536MB

yarn.nodemanager.log.retain-seconds

10800

yarn.nodemanager.log-dirs

/home/cluster/yarn/log/1,/home/cluster/yarn/log/2,/home/cluster/yarn/log/3

yarn.nodemanager.aux-services

mapreduce_shuffle

Shuffle service that needs to be set for Map Reduce applications.

自我介绍一下,小编13年上海交大毕业,曾经在小公司待过,也去过华为、OPPO等大厂,18年进入阿里一直到现在。

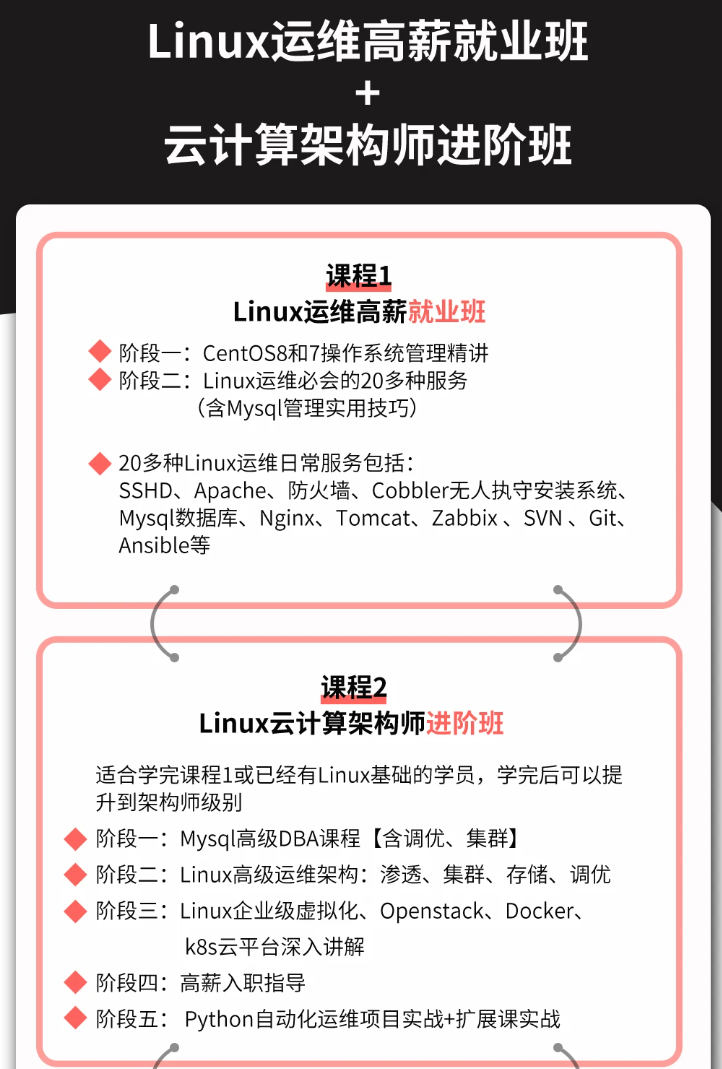

深知大多数Linux运维工程师,想要提升技能,往往是自己摸索成长或者是报班学习,但对于培训机构动则几千的学费,着实压力不小。自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!

因此收集整理了一份《2024年Linux运维全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友,同时减轻大家的负担。

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上Linux运维知识点,真正体系化!

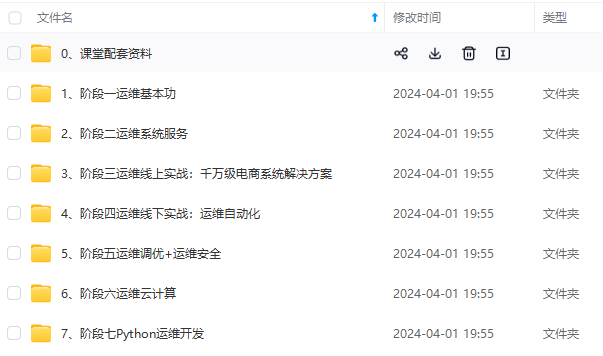

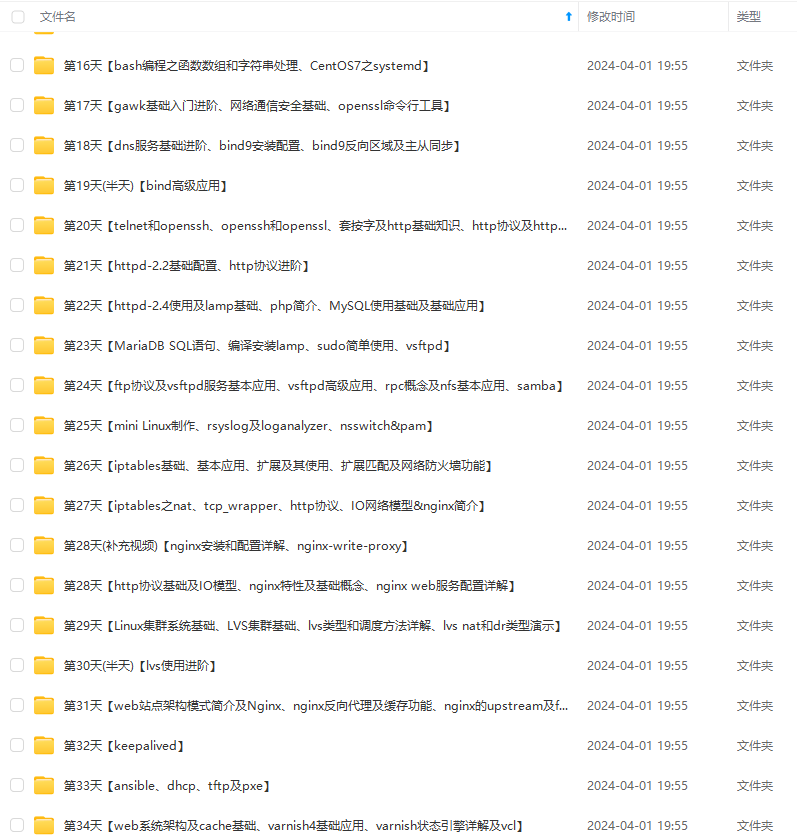

由于文件比较大,这里只是将部分目录大纲截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且后续会持续更新

如果你觉得这些内容对你有帮助,可以添加VX:vip1024b (备注Linux运维获取)

OP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME

–>
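
The memory values in yarn-site.xml and mapred-site.xml interact, and a little arithmetic shows how. The sketch below assumes the scheduler rounds each request up to a multiple of `yarn.scheduler.minimum-allocation-mb`, which is how the Capacity Scheduler behaves with its default resource calculator; other schedulers may round differently. The function names are hypothetical, and the numbers are the ones configured in this article.

```python
import math


def normalized_request(request_mb: int, min_alloc_mb: int, max_alloc_mb: int) -> int:
    """Round a request up to a multiple of the minimum allocation."""
    if request_mb > max_alloc_mb:
        raise ValueError(f"{request_mb} MB exceeds the maximum allocation of {max_alloc_mb} MB")
    steps = max(math.ceil(request_mb / min_alloc_mb), 1)
    return min(steps * min_alloc_mb, max_alloc_mb)


def containers_per_node(node_mb: int, request_mb: int, min_alloc_mb: int, max_alloc_mb: int) -> int:
    """How many containers of the given size fit into one NodeManager's memory."""
    return node_mb // normalized_request(request_mb, min_alloc_mb, max_alloc_mb)


# yarn.nodemanager.resource.memory-mb, minimum-allocation-mb, maximum-allocation-mb
NODE_MB, MIN_MB, MAX_MB = 28672, 1024, 28672

# 4096 MB map/reduce containers (mapreduce.*.memory.mb).
map_tasks = containers_per_node(NODE_MB, 4096, MIN_MB, MAX_MB)

# A 28672 MB ApplicationMaster (yarn.app.mapreduce.am.resource.mb).
am_only = containers_per_node(NODE_MB, 28672, MIN_MB, MAX_MB)
```

With these settings `map_tasks` is 7 and `am_only` is 1: a single MapReduce ApplicationMaster sized at 28672 MB occupies an entire NodeManager, leaving no room for task containers on that node. A much smaller AM size, closer to the 1536 MB default, is usually enough.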
