
[Security] A Survey of Large Language Model Security

Author: 寸_铁 | 2024-07-15 01:09:05


- “Large language models for software engineering: Survey and open problems,” 2023.

- “Large language models for software engineering: A systematic literature review,” arXiv preprint arXiv:2308.10620, 2023.

Medicine

- “Large language models in medicine,” Nature Medicine, vol. 29, no. 8, pp. 1930–1940, 2023.

- “The future landscape of large language models in medicine,” Communications Medicine, vol. 3, no. 1, p. 141, 2023.

Security

LLMs for cybersecurity

- “A more insecure ecosystem? ChatGPT’s influence on cybersecurity,” ChatGPT’s Influence on Cybersecurity (April 30, 2023), 2023.

- “ChatGPT for cybersecurity: Practical applications, challenges, and future directions,” Cluster Computing, vol. 26, no. 6, pp. 3421–3436, 2023.

- “What effects do large language models have on cybersecurity,” 2023.

- “Synergizing generative AI and cybersecurity: Roles of generative AI entities, companies, agencies, and government in enhancing cybersecurity,” 2023. Shows how LLMs help security analysts develop security solutions against cyber threats.

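Highlighting threats and attacks against LLMs

These works focus mainly on security applications and examine in depth how LLMs can be used to launch cyberattacks.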

- “From ChatGPT to ThreatGPT: Impact of generative AI in cybersecurity and privacy,” IEEE Access, 2023.

- “A security risk taxonomy for large language models,” arXiv preprint arXiv:2311.11415, 2023.

- “Survey of vulnerabilities in large language models revealed by adversarial attacks,” 2023.

- “Are ChatGPT and deepfake algorithms endangering the cybersecurity industry? A review,” International Journal of Engineering and Applied Sciences, vol. 10, no. 1, 2023.

- “Beyond the safeguards: Exploring the security risks of ChatGPT,” 2023.

- “From ChatGPT to HackGPT: Meeting the cybersecurity threat of generative AI,” MIT Sloan Management Review, 2023.

- “Adversarial attacks and defenses in large language models: Old and new threats,” 2023.

- “Do ChatGPT and other AI chatbots pose a cybersecurity risk?: An exploratory study,” International Journal of Security and Privacy in Pervasive Computing (IJSPPC), vol. 15, no. 1, pp. 1–11, 2023.

- “Unveiling the dark side of ChatGPT: Exploring cyberattacks and enhancing user awareness,” 2023.

Vulnerabilities exploited by cybercriminals, with a focus on LLM-related risks

- “Chatbots to ChatGPT in a cybersecurity space: Evolution, vulnerabilities, attacks, challenges, and future recommendations,” 2023.

- “Use of LLMs for illicit purposes: Threats, prevention measures, and vulnerabilities,” 2023.

Privacy issues in LLMs

- “Privacy-preserving prompt tuning for large language model services,” arXiv preprint arXiv:2305.06212, 2023. Analyzes the privacy issues of LLMs, classifies them according to adversary capabilities, and explores defense strategies.

- “Privacy and data protection in ChatGPT and other AI chatbots: Strategies for securing user information,” Available at SSRN 4454761, 2023. Examines how established privacy-enhancing technologies can be applied to protect privacy in LLMs.

- “Identifying and mitigating privacy risks stemming from language models: A survey,” 2023. Discusses the privacy risks of LLMs.

- “A survey on large language model (LLM) security and privacy: The good, the bad, and the ugly.” Covers both privacy and security issues.

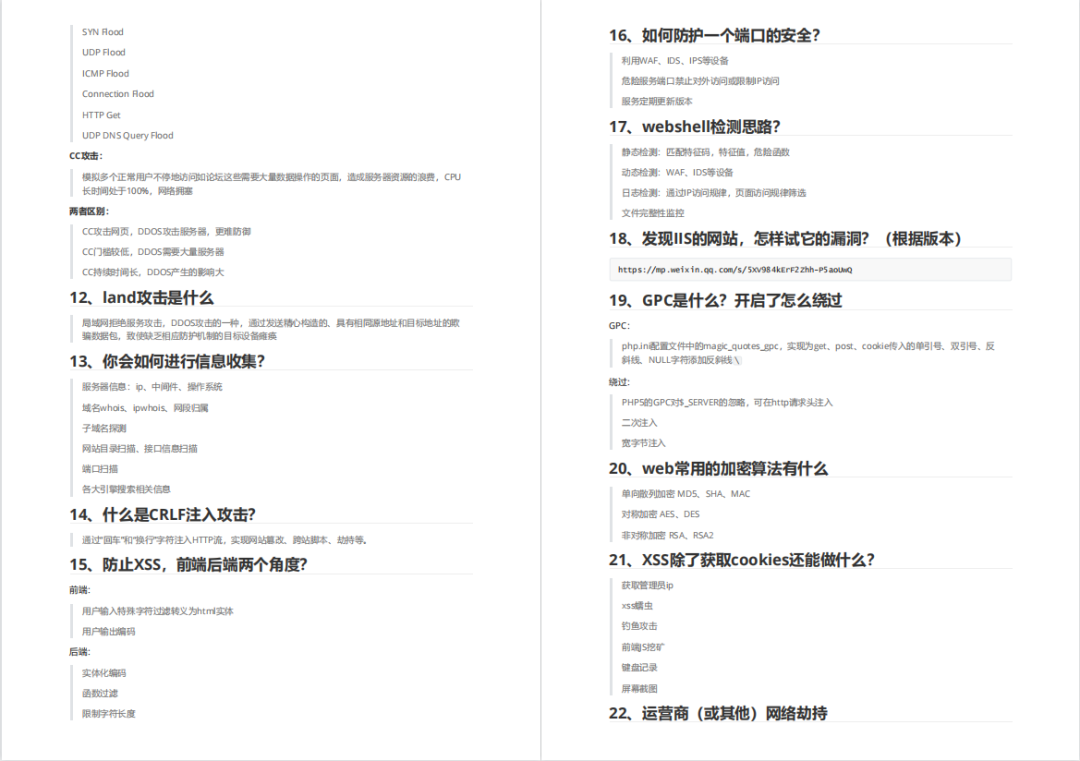


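Disclaimer: This article was contributed by a user and does not represent the position of the wpsshop blog; copyright belongs to the original author. Please cite the source when reposting: https://www.wpsshop.cn/w/寸_铁/article/detail/827189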
