
Kafka SSL and ACL Configuration

Author: 小桥流水78 | 2024-08-15 10:51:26


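I have not written an article in a long time. I am writing this one so that others can avoid the detours I took: while setting this up I could not find a single decent article through Google or Baidu, and the official documentation is not very clear either. It was painful, but I got it working in the end.

Kafka SSL Configuration

You can start with the official documentation, although I found it rather misleading.

First, here is the overall cluster architecture: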

| Kafka1 | Kafka2 | Kafka3 |
| --- | --- | --- |
| 192.168.56.100 | 192.168.56.101 | 192.168.56.102 |
| Zookeeper | Zookeeper | Zookeeper |
| Kafka broker 100 | Kafka broker 101 | Kafka broker 102 |

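The cluster has three nodes, as shown above.

The configuration steps are as follows.

I will not cover installing and deploying ZooKeeper and Kafka here; the focus is the SSL configuration.

1. Configure the server.properties file on all three nodes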
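The broker settings for this step are not reproduced here, so the following is only a minimal sketch of what the SSL part of server.properties might look like. The property names are standard Kafka broker settings; the keystore and truststore file names, the output path, and the choice of `ssl.client.auth=required` are assumptions for illustration, not the author's confirmed values. The password and port match the ones used elsewhere in this article.

```shell
#!/bin/bash
# Sketch: write the SSL-related broker settings to a fragment file that can be
# merged into server.properties. File names and paths are assumptions.
fragment="${TMPDIR:-/tmp}/server-ssl.properties"
host="${HOSTNAME:-kafka1}"   # replace with the host name of each broker

cat > "$fragment" <<EOF
listeners=SSL://${host}:9093
security.inter.broker.protocol=SSL
ssl.keystore.location=/root/securityDemo/kafka.server.keystore.jks
ssl.keystore.password=zbdba94
ssl.key.password=zbdba94
ssl.truststore.location=/root/securityDemo/kafka.server.truststore.jks
ssl.truststore.password=zbdba94
ssl.client.auth=required
EOF

echo "wrote $fragment"
```

Requiring client authentication is what lets the broker see a certificate-based principal for each connection, which the ACL section later relies on.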

2. Generate the certificate and CA files on the kafka1 node

Remember to replace kafka1 with the host name of your own machine.
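The script for this step is not shown, so here is a hedged sketch of the usual sequence: create a broker key pair, create a self-signed CA, trust the CA, and sign the broker certificate with it. The function name `make_ca_and_server_cert`, the `run` helper, the `DRY_RUN` switch, the 365-day validity, the CA subject `/CN=kafka-ca`, and the keystore file names are all assumptions made for illustration; only the password and the DN layout come from this article.

```shell
#!/bin/bash
# Sketch of step 2 on kafka1. Names and validity are assumptions.
PASS="${PASS:-zbdba94}"        # password used elsewhere in this article
NAME="${NAME:-kafka1}"         # replace with your host name
DAYS="${DAYS:-365}"            # assumed validity period
DNAME="CN=$NAME,OU=test,O=test,L=test,ST=test,C=test"

# With DRY_RUN set, print each command instead of executing it.
run() { if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi; }

make_ca_and_server_cert() {
  # 1. Key pair for the broker
  run keytool -genkey -keyalg RSA -keystore kafka.server.keystore.jks -alias "$NAME" \
      -validity "$DAYS" -dname "$DNAME" -storepass "$PASS" -keypass "$PASS"
  # 2. Self-signed CA (ca-key is encrypted with $PASS)
  run openssl req -new -x509 -keyout ca-key -out ca-cert -days "$DAYS" \
      -subj "/CN=kafka-ca" -passout "pass:$PASS"
  # 3. Trust the CA
  run keytool -import -noprompt -keystore kafka.server.truststore.jks -alias CARoot \
      -file ca-cert -storepass "$PASS"
  # 4. Create a signing request and sign it with the CA
  run keytool -certreq -keystore kafka.server.keystore.jks -alias "$NAME" \
      -file cert-file -storepass "$PASS"
  run openssl x509 -req -CA ca-cert -CAkey ca-key -in cert-file -out cert-signed \
      -days "$DAYS" -CAcreateserial -passin "pass:$PASS"
  # 5. Import the CA certificate first, then the signed broker certificate
  run keytool -import -noprompt -keystore kafka.server.keystore.jks -alias CARoot \
      -file ca-cert -storepass "$PASS"
  run keytool -import -noprompt -keystore kafka.server.keystore.jks -alias "$NAME" \
      -file cert-signed -storepass "$PASS"
}
```

Setting `DRY_RUN=1` before calling `make_ca_and_server_cert` prints the seven commands for review; unset it and run the function inside /root/securityDemo to actually create the files.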

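3. On the kafka2 machine, generate the client certificate and sign it with the CA files generated on kafka1

Run the following script:

client1.sh

    #!/bin/bash
    # Generate a key pair and a certificate signing request (CSR) for this host.
    name=$HOSTNAME
    cd /root || exit 1
    dirname=securityDemo
    rm -rf "$dirname"
    mkdir "$dirname"
    cd "$dirname" || exit 1

    # Answers piped to keytool: keystore password (twice), CN, OU, O, L, ST, C,
    # confirmation, then an empty key password (same as the keystore password).
    printf "zbdba94\nzbdba94\n$name\ntest\ntest\ntest\ntest\ntest\nyes\n\n" | keytool -keystore kafka.client.keystore.jks -alias "$name" -validity 36 -genkey
    # Create the CSR from the key pair.
    printf "zbdba94\nzbdba94\nyes\n" | keytool -keystore kafka.client.keystore.jks -alias "$name" -certreq -file cert-file

    # Keep a host-specific copy of the CSR for signing.
    cp cert-file "cert-file-$name"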

On the kafka2 node, copy over the files generated on kafka1:

    cd /root/ && scp -r kafka1:/root/securityDemo /root/kafka1

Then run the following script:

client2.sh

    #!/bin/bash
    # Sign this host's CSR with the CA certificate and key copied from kafka1.
    name=$HOSTNAME
    cd /root || exit 1
    openssl x509 -req -CA /root/kafka1/ca-cert -CAkey /root/kafka1/ca-key -in "/root/securityDemo/cert-file-$name" -out "/root/securityDemo/cert-signed-$name" -days 36 -CAcreateserial -passin pass:zbdba94

Then run the following script:

client3.sh
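The body of client3.sh is not shown above. A plausible final step, and the one the standard keytool workflow calls for, is importing the CA certificate and the signed certificate into the keystore. The sketch below assumes exactly that; the function name `import_signed_cert`, the `run` helper, the `DRY_RUN` switch, and the truststore file name `kafka.client.truststore.jks` are hypothetical, while the other paths follow client1.sh and client2.sh.

```shell
#!/bin/bash
# Sketch of a possible client3.sh: import the CA and the signed certificate.
PASS="${PASS:-zbdba94}"            # password used elsewhere in this article
DEMO_DIR=/root/securityDemo        # directory created by client1.sh
CA_CERT=/root/kafka1/ca-cert       # CA certificate copied from kafka1

# With DRY_RUN set, print each command instead of executing it.
run() { if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi; }

import_signed_cert() {
  name="$1"
  # Trust the CA (truststore file name is an assumption)
  run keytool -import -noprompt -keystore "$DEMO_DIR/kafka.client.truststore.jks" \
      -alias CARoot -file "$CA_CERT" -storepass "$PASS"
  # The CA certificate must be in the keystore before the signed certificate,
  # otherwise keytool cannot build the certificate chain.
  run keytool -import -noprompt -keystore "$DEMO_DIR/kafka.client.keystore.jks" \
      -alias CARoot -file "$CA_CERT" -storepass "$PASS"
  run keytool -import -noprompt -keystore "$DEMO_DIR/kafka.client.keystore.jks" \
      -alias "$name" -file "$DEMO_DIR/cert-signed-$name" -storepass "$PASS"
}
```

On kafka2 this would be called as `import_signed_cert "$HOSTNAME"`, so that the alias matches the one used when the key pair was generated.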

Configure the kafka3 node in the same way as kafka2.

4. Start the cluster

When the cluster starts, the log file shows the following entries, one on each of the three nodes:

    INFO Registered broker 100 at path /brokers/ids/100 with addresses: SSL -> EndPoint(192.168.56.100,9093,SSL) (kafka.utils.ZkUtils)
    INFO Registered broker 101 at path /brokers/ids/101 with addresses: SSL -> EndPoint(192.168.56.101,9093,SSL) (kafka.utils.ZkUtils)
    INFO Registered broker 102 at path /brokers/ids/102 with addresses: SSL -> EndPoint(192.168.56.102,9093,SSL) (kafka.utils.ZkUtils)

Next, verify the setup.

You can follow the check given in the official documentation:

To check quickly if the server keystore and truststore are setup properly you can run the following command

openssl s_client -debug -connect localhost:9093 -tls1

(Note: TLSv1 should be listed under ssl.enabled.protocols)

In the output of this command you should see server's certificate:

-----BEGIN CERTIFICATE-----

{variable sized random bytes}

-----END CERTIFICATE-----

subject=/C=US/ST=CA/L=Santa Clara/O=org/OU=org/CN=Sriharsha Chintalapani

issuer=/C=US/ST=CA/L=Santa Clara/O=org/OU=org/CN=kafka/emailAddress=test@test.com

Now verify producing and consuming.

Produce messages on kafka1:

    /data1/kafka-0.9.0.1/bin/kafka-console-producer.sh --broker-list kafka1:9093 --topic jingbotest5 --producer.config /root/securityDemo/producer.properties

Consume the messages:

    /data1/kafka-0.9.0.1/bin/kafka-console-consumer.sh --bootstrap-server kafka2:9093 --topic jingbotest5 --new-consumer --consumer.config /root/securityDemo/producer.properties

If the messages are consumed normally, everything is working.
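The commands above point at a producer.properties file whose contents are not shown. A minimal sketch of what such a file might contain follows. The property names are standard Kafka client SSL settings; the truststore file name and the output path are assumptions, and the keystore entries are only needed when the brokers require client authentication.

```shell
#!/bin/bash
# Sketch: write an SSL client configuration usable by both console tools.
# The truststore file name is an assumption.
conf="${TMPDIR:-/tmp}/producer.properties"

cat > "$conf" <<EOF
security.protocol=SSL
ssl.truststore.location=/root/securityDemo/kafka.client.truststore.jks
ssl.truststore.password=zbdba94
ssl.keystore.location=/root/securityDemo/kafka.client.keystore.jks
ssl.keystore.password=zbdba94
ssl.key.password=zbdba94
EOF

echo "wrote $conf"
```

The same keys can be set on the `Properties` object of a Java producer or consumer, which is why one file can serve both console commands.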

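Next, let's look at how a Java client connects.

Only a simple demo is given here, mainly to show how to connect.

kafka.client.keystore.jks can be copied from any of the nodes.

Keep the consumer started earlier on 102 running, then produce messages and check whether that consumer receives them. If it does, SSL has been configured successfully.

Kafka ACL Configuration

I originally wanted to write a separate article for this, but it seemed simple enough not to need one. So why mention it at all? Because there are still a few pitfalls.

You can first refer to the official documentation.

I followed it and ended up with the following errors: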

    [2016-09-05 06:32:35,144] ERROR [KafkaApi-100] error when handling request Name:UpdateMetadataRequest;Version:1;Controller:100;ControllerEpoch:39;CorrelationId:116;ClientId:100;AliveBrokers:102 : (EndPoint(192.168.56.102,9093,SSL)),101 : (EndPoint(192.168.56.101,9093,SSL)),100 : (EndPoint(192.168.56.100,9093,SSL));PartitionState:[jingbotest5,2] -> (LeaderAndIsrInfo:(Leader:102,ISR:102,LeaderEpoch:42,ControllerEpoch:39),ReplicationFactor:3),AllReplicas:102,101,100),[jingbotest5,5] -> (LeaderAndIsrInfo:(Leader:102,ISR:102,LeaderEpoch:42,ControllerEpoch:39),ReplicationFactor:3),AllReplicas:102,100,101),[jingbotest5,8] -> (LeaderAndIsrInfo:(Leader:102,ISR:102,LeaderEpoch:40,ControllerEpoch:39),ReplicationFactor:3),AllReplicas:102,101,100) (kafka.server.KafkaApis)
    kafka.common.ClusterAuthorizationException: Request Request(1,192.168.56.100:9093-192.168.56.100:43909,Session(User:CN=zbdba2,OU=test,O=test,L=test,ST=test,C=test,zbdba2/192.168.56.100),null,1473071555140,SSL) is not authorized.
        at kafka.server.KafkaApis.authorizeClusterAction(KafkaApis.scala:910)
        at kafka.server.KafkaApis.handleUpdateMetadataRequest(KafkaApis.scala:158)
        at kafka.server.KafkaApis.handle(KafkaApis.scala:74)
        at kafka.server.KafkaRequestHandler.run(KafkaRequestHandler.scala:60)
        at java.lang.Thread.run(Thread.java:744)
    [2016-09-05 06:32:35,310] ERROR [ReplicaFetcherThread-2-101], Error for partition [jingbotest5,4] to broker 101:org.apache.kafka.common.errors.AuthorizationException: Topic authorization failed. (kafka.server.ReplicaFetcherThread)

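The official documentation says that setting:

    principal.builder.class=CustomizedPrincipalBuilderClass

is enough to enable ACL checks for SSL users. However, CustomizedPrincipalBuilderClass is outdated; searching the documentation shows that the value has changed to: class org.apache.kafka.common.security.auth.DefaultPrincipalBuilder

I happily put that into the configuration, and the broker failed to start. The log shows that the value must not include the "class" prefix; it should be just org.apache.kafka.common.security.auth.DefaultPrincipalBuilder

So for people who love reading official documentation, Kafka can be rather painful.

The broker finally started, but it still reported the errors above. The fix is the following setting:

    super.users=User:CN=kafka1,OU=test,O=test,L=test,ST=test,C=test

This simply makes the SSL user a super user.

With that in place, ACLs work normally.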
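The error log above shows the broker principal in the form `User:CN=...,OU=...` with no spaces after the commas, whereas `keytool -list -v` prints the certificate owner with spaces. The sketch below converts owner strings into that form and joins several of them with semicolons, which is the separator Kafka uses for `super.users` because the names themselves contain commas. The helper names `dn_to_principal` and `super_users` are hypothetical, and listing all three brokers is an assumption based on each node generating its own certificate with CN set to its host name.

```shell
#!/bin/bash
# Sketch: build a super.users line from certificate owner strings.

# Turn "CN=kafka1, OU=test, ..." into "User:CN=kafka1,OU=test,...".
dn_to_principal() {
  printf 'User:%s\n' "$(printf '%s' "$1" | sed 's/, */,/g')"
}

# Join the principals of all given owner strings with ';'.
super_users() {
  list=""
  for dn in "$@"; do
    p=$(dn_to_principal "$dn")
    if [ -z "$list" ]; then list="$p"; else list="$list;$p"; fi
  done
  printf 'super.users=%s\n' "$list"
}

super_users \
  "CN=kafka1, OU=test, O=test, L=test, ST=test, C=test" \
  "CN=kafka2, OU=test, O=test, L=test, ST=test, C=test" \
  "CN=kafka3, OU=test, O=test, L=test, ST=test, C=test"
```

The owner string for each keystore can be read from the "Owner:" line of `keytool -list -v -keystore <file>`.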

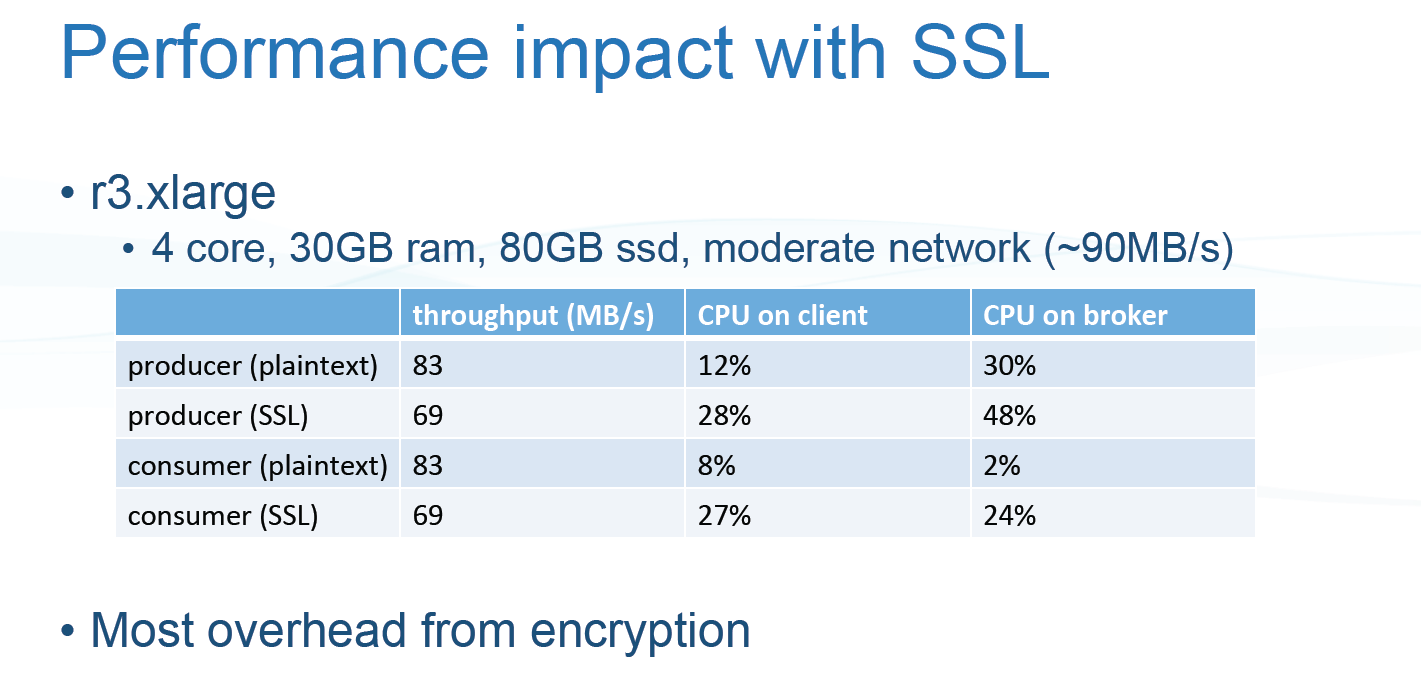

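Combined, Kafka's SSL and ACL features look like they can provide a complete access control system, but I do not know whether there are pitfalls in real production use. For the performance impact, you can refer to a slide deck on SlideShare.

You can of course run your own stress tests. According to the slides, the performance loss is around 30%.

I also asked around at several large companies: few of them use it, and some are preparing to adopt it. We are also considering whether to adopt it, since the business demand is fairly strong.

I have cleaned up the scripts above and put them on GitHub:

https://github.com/zbdba/Kafka-SSL-config

Reference link:

https://github.com/confluentinc/securing-kafka-blog (this one uses Vagrant for a fully automated setup)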
