A Beginner's Guide to Fine-Tuning Llama 2 and Mistral with QLoRA

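Key point: a good model needs good data. We will cover training on existing data and how to create your own dataset. You will learn how to format data for training, specifically in the ChatML format. The code is kept simple and avoids extra black boxes or training tools, using only basic PyTorch and Hugging Face packages.

If you are curious about the technology behind the scenes, here is some recommended reading:

- An easy-to-follow guide to LLMs

- The QLoRA paper for the nuts and bolts, and a simple introduction to LoRA

What you will learn here:

- How to find and prepare a dataset

- How to convert a dataset to the ChatML format for training

- How to load a quantized version of the base model and insert LoRA adapters

- How to choose the right training settings

If you are looking for the complete code rather than walking through snippets, see my GitHub repository qlora-minimal. Let's get started!

Prerequisites

Before we start, you need the latest Hugging Face tools. Run the following command in your terminal to install or update these packages: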

```bash
pip install -U accelerate bitsandbytes datasets peft transformers tokenizers
```

For reference, these are the specific versions used to put this tutorial together:

- accelerate 0.24.1
- bitsandbytes 0.41.1
- datasets 2.14.6
- peft 0.6.0
- transformers 4.35.0
- tokenizers 0.14.1
- torch 2.1.0
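To compare your environment against the versions listed above, a small check like the following may help. It is only a sketch using the standard library's `importlib.metadata`; the helper name `report_versions` is made up for illustration and is not part of any of the packages.

```python
from importlib.metadata import PackageNotFoundError, version

# Packages named in the install command above, plus torch
PACKAGES = ["accelerate", "bitsandbytes", "datasets", "peft",
            "transformers", "tokenizers", "torch"]

def report_versions(packages):
    """Return {package name: installed version, or None if not installed}."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found

if __name__ == "__main__":
    for name, ver in report_versions(PACKAGES).items():
        print(f"{name:<14} {ver or 'not installed'}")
```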

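1. Datasets: use an existing one or create your own

This section covers the key process of loading or creating a dataset and then formatting it according to the ChatML structure. Tokenization and batching follow in Section 3.

Remember that dataset quality is crucial and will significantly affect your model's performance. Your dataset must fit your task.

General strategy

A dataset can be a mix of various sources. Take the Open Hermes 2 fine-tune of Mistral as an example: it was trained on ~900,000 samples from a large number of datasets.

These datasets typically consist of question/answer pairs, formatted either as isolated pairs (one sample corresponds to a single question and answer) or concatenated into a conversation sequence (Q/A, Q/A, Q/A).

This section aims to guide you in converting these datasets into a uniform format compatible with the training setup. To prepare for training, you have to pick a format. I chose OpenAI's ChatML here because it has been adopted frequently in recent model releases and may become the new standard.

Below is an example conversation in ChatML format (from the Open Orca dataset):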

```
<|im_start|>system
You are an AI assistant. User will you give you a task. Your goal is to
complete the task as faithfully as you can. While performing the task
think step-by-step and justify your steps.<|im_end|>
<|im_start|>user
Premise: A man is inline skating in front of a wooden bench. Hypothesis:
A man is having fun skating in front of a bench. .Choose the correct
answer: Given the premise, can we conclude the hypothesis?
Select from: a). yes b). it is not possible to tell c). no<|im_end|>
<|im_start|>assistant
b). it is not possible to tell Justification: Although the man is inline
skating in front of the wooden bench, we cannot conclude whether he is
having fun or not, as his emotions are not explicitly mentioned.<|im_end|>
```

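The example above can be tokenized, batched, and fed into the training algorithm. Before we continue, though, let's look at some well-known datasets and how to prepare and format them.

How to load Open Assistant data

Let's start with the Open Assistant dataset.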

```python
from datasets import load_dataset
dataset = load_dataset("OpenAssistant/oasst_top1_2023-08-25")
```

Once loaded, the dataset is already divided into a training split (13k entries) and a test split (700 entries).

```
>>> dataset
DatasetDict({
    train: Dataset({
        features: ['text'],
        num_rows: 12947
    })
    test: Dataset({
        features: ['text'],
        num_rows: 690
    })
})
```

Let's look at the first entry:

```
>>> print(dataset["train"][0]["text"])
<|im_start|>user
Consigliami 5 nomi per il mio cucciolo di dobberman<|im_end|>
<|im_start|>assistant
Ecco 5 nomi per il tuo cucciolo di dobermann:
- Zeus
- Apollo
- Thor
- Athena
- Odin<|im_end|>
```

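How convenient! This is already ChatML, so we don't need to do anything, except tell the tokenizer and the model that the strings <|im_start|> and <|im_end|> are tokens that should not be split, and that <|im_end|> is a special token (eos, "end of sequence") with which the model marks the end of an answer; otherwise the model would keep generating forever. How to integrate these tokens with base models such as Llama 2 and Mistral is detailed in Section 2.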
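Before training on a dataset that claims to be ChatML, it can be worth sanity-checking a few samples. The following is a rough sketch, not part of the original scripts: the helpers `parse_chatml` and `is_wellformed_chatml` are hypothetical names, and the regular expression assumes the plain `<|im_start|>role\ncontent<|im_end|>` layout shown above.

```python
import re

# One ChatML turn: <|im_start|>ROLE\nCONTENT<|im_end|>
TURN_RE = re.compile(r"<\|im_start\|>(\w+)\n(.*?)<\|im_end\|>", re.DOTALL)

def parse_chatml(text):
    """Split a ChatML string into a list of {"role", "content"} dicts."""
    return [{"role": role, "content": content}
            for role, content in TURN_RE.findall(text)]

def is_wellformed_chatml(text):
    """True if the text contains only complete turns separated by whitespace."""
    leftover = TURN_RE.sub("", text)
    return bool(TURN_RE.search(text)) and leftover.strip() == ""
```

For example, `is_wellformed_chatml(dataset["train"][0]["text"])` could be run over a random subset of samples to catch truncated or unbalanced turns early.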

How to load Open Orca data

Moving on to Open Orca: this dataset contains 4.2 million entries and needs a train/test split after loading, which can be done with train_test_split.

```python
from datasets import load_dataset

dataset = load_dataset("Open-Orca/OpenOrca")
dataset = dataset["train"].train_test_split(test_size=0.1)
```

Let's check the structure of the dataset. This is the first entry:

```
{
    'id': 'flan.2020759',
    'system_prompt': 'You are an AI assistant. You will be given a task.
        You must generate a detailed and long answer.',
    'question': 'Ülke, bildirgeyi uygulamaya başlayan son ülkeler
        arasında olmasına rağmen 46 ülke arasında 24. sırayı
        aldı.
        Could you please translate this to English?',
    'response': 'Despite being one of the last countries to
        implement the declaration, it ranked 24th out of 46 countries.'
}
```

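It is a question/answer pair plus a system message describing the context in which the question must be answered.

Unlike the Open Assistant dataset, we have to format the Open Orca data as ChatML ourselves.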

```python
import os

def format_conversation(row):
    # ChatML template: one system message, one user question, one assistant answer
    template = "<|im_start|>system\n{sys}<|im_end|>\n<|im_start|>user\n{q}<|im_end|>\n<|im_start|>assistant\n{a}<|im_end|>"

    conversation = template.format(
        sys=row["system_prompt"],
        q=row["question"],
        a=row["response"],
    )

    return {"text": conversation}

dataset = dataset.map(
    format_conversation,
    remove_columns=dataset["train"].column_names,  # remove all columns; only "text" will be left
    num_proc=os.cpu_count(),  # run in parallel worker processes
)
```

The dataset is now ready to be tokenized and fed into the training pipeline.
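One detail worth checking on your own copy of the data: if some rows carry an empty `system_prompt` (an assumption here, not something shown above), the template would emit an empty system turn. A possible variant that skips the system turn in that case is sketched below; `format_row_as_chatml` is a hypothetical name and works on plain dictionaries, so it can be tried without loading the dataset.

```python
def format_row_as_chatml(row):
    """Format one Open Orca-style row; omit the system turn if it is empty."""
    turns = []
    system = (row.get("system_prompt") or "").strip()
    if system:
        turns.append(("system", system))
    turns.append(("user", row["question"]))
    turns.append(("assistant", row["response"]))

    # Each turn becomes <|im_start|>role\ncontent<|im_end|>, joined by newlines
    text = "\n".join(
        f"<|im_start|>{role}\n{content}<|im_end|>" for role, content in turns
    )
    return {"text": text}
```

It returns the same `{"text": ...}` shape as the formatter above, so it could be passed to `dataset.map` in its place.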

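Creating a dataset from podcast transcripts

I previously trained llama1 on transcripts of the Lex Fridman podcast. The task involved turning a podcast known for its in-depth discussions into a training set that lets an AI imitate the way Lex speaks. You can find details on how the dataset was created in From Transcripts to AI Chat: An Experiment with the Lex Fridman Podcast.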

```python
from datasets import load_dataset
dataset = load_dataset("g-ronimo/lfpodcast")
dataset = dataset["train"].train_test_split(test_size=0.1)
```

Inspect the first entry of the training set and you will see a JSON object like this:

```
>>> import json
>>> print(json.dumps(dataset["train"][0],indent=2))
{
  "title": "Lex_Fridman_Podcast_-_114__Russ_Tedrake_Underactuated_Robotics_Control_Dynamics_and_Touch",
  "episode": 114,
  "speaker_ratio_lex-vs-guest": 0.44402311303719755,
  "conversation": [
    {
      "from": "Guest",
      "text": "I think the most beautiful motion of a robot has to be the
        passive dynamic walkers. I think there's just something fundamentally
        beautiful. (..) but what Steve and Andy did was they took it to
        this beautiful conclusion. where they built something that had knees,
        arms, a torso, the arms swung naturally, give it a little push,
        and that looked like a stroll through the park."
    },
    {
      "from": "Lex",
      "text": "How do you design something like that? Is that art or science?"
    },
    (...)
```

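This structure captures the essence of each podcast episode, but to prepare it for model training, the conversation has to be converted to ChatML. We need to loop over each message turn, apply the ChatML format, and concatenate the messages so that the whole episode transcript is stored in a single text field. The roles Guest and Lex are reassigned to user and assistant respectively, conditioning the language model to adopt Lex's curious and knowledgeable persona.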

```python
import os

def format_conversation(row):
    # Template for conversation turns in ChatML format
    template = "<|im_start|>user\n{q}<|im_end|>\n<|im_start|>assistant\n{a}<|im_end|>"

    turns = row["conversation"]

    # If Lex is the first speaker, skip his turn to start with Guest's question
    if turns[0]["from"] == "Lex":
        turns = turns[1:]

    conversation = []
    # Stop at len(turns) - 1 so a trailing Guest turn without a reply is skipped
    for i in range(0, len(turns) - 1, 2):
        # Assuming the conversation always alternates between Guest and Lex
        question = turns[i]    # Guest
        answer = turns[i + 1]  # Lex

        conversation.append(
            template.format(
                q=question["text"],
                a=answer["text"],
            ))
    return {"text": "\n".join(conversation)}

dataset = dataset.map(
    format_conversation,
    remove_columns=dataset["train"].column_names,
    num_proc=os.cpu_count(),
)
```
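The formatter above assumes that Guest and Lex always alternate. If a transcript contains two consecutive turns by the same speaker, the question/answer pairing would drift. One possible way to guard against that, sketched here with a hypothetical helper `merge_consecutive_turns`, is to merge back-to-back turns by the same speaker before pairing them up.

```python
def merge_consecutive_turns(turns):
    """Join back-to-back turns by the same speaker into a single turn."""
    merged = []
    for turn in turns:
        if merged and merged[-1]["from"] == turn["from"]:
            # Same speaker as the previous turn: append the text
            merged[-1]["text"] += " " + turn["text"]
        else:
            merged.append(dict(turn))  # copy so the input list is left untouched
    return merged
```

Calling it on `row["conversation"]` at the top of the formatter would restore the strict alternation the pairing loop relies on.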

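With these changes applied, the resulting dataset is ready to be tokenized and fed into the training pipeline, teaching the language model to converse in a way reminiscent of Lex Fridman's podcast discussions. If you are curious, try Llama-Fridman.

Creating a dataset from a book

To dig deeper into the nuances of dataset creation, consider a case where we want to train an AI to reflect the voice and personality of a well-known figure. I chose to turn the autobiography of the famous American chef Anthony Bourdain into a dataset. He wrote Kitchen Confidential, which vividly describes all the madness in the kitchen and the mind of a chef.

The process involves turning the narrative of Bourdain's book into an engaging dialogue, like a back-and-forth interview that captures his spirit.

Required steps:

- Convert the book to text

- Passage analysis and segmentation: once the book is in text form, we split it into passages. Short paragraphs are merged and longer ones are split, so that each passage can stand on its own while still contributing to the overall storyline.

- Generate interview questions: for each passage, we construct an artificial interview scenario in which an LLM plays the interviewer and generates a question that the given passage from the book naturally answers. The aim is to create an insightful conversation that gives the impression that Bourdain himself is answering questions about his life and experiences.

Let's start by assuming you have legally obtained a digital copy of the book and saved it as kc.pdf: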

```bash
mv anthony-bourdain-kitchen-confidential.pdf kc.pdf
pdftotext -nopgbrk kc.pdf

# fix line breaks within sentence
sed -r ':a /[a-zA-Z,\ ]$/N;s/(.)\n/\1 /;ta' kc.txt > kc_reformat.txt
```

Now prompt any capable open-source LLM or GPT-3.5/4 with each passage n, using passage n-1 as context. I used Open Hermes 2 to create one interview question per passage.

```python
# Gather paragraphs to target
with open("kc_reformat.txt") as f:
    file_content = f.read()

chapters = file_content.split("\n\n")

# Define minimum and maximum lengths to ensure a good interview flow
passage_minlen = 300   # if paragraph <300 chars -> merge with next
passage_maxlen = 2000  # if paragraph >2k chars -> split

# Process the chapters into suitable interview passages
passages = []
for chap in chapters:
    passage = ""
    for par in chap.split("\n"):
        # Keep appending while the passage is too short, or while it does not
        # end on a full stop and is still below the maximum length
        if len(passage) < passage_minlen or (not passage.endswith(".") and len(passage) < passage_maxlen):
            passage += "\n" + par
        else:
            passages.append(passage.strip().replace("\n", " "))
            passage = par
    # Note: text still held in `passage` when a chapter ends is not appended

# Ask Open Hermes
prompt_template = """<|im_start|>system
You are an expert interviewer who interviews an autobiography of a famous chef.
You formulate questions based on quotes from the autobiography. Below is one
such quote. Formulate a question that the quote would be the perfect answer to.
The question should be short and directed at the author of the autobiography
like in an interview. The question is short. Remember, make the question as
short as possible. Do not give away the answer in your question.
Also: If possible, ask for motivations, feelings, and perceptions rather than
events or facts.

Here is some context that might help you formulate the question regarding the quote:
{ctx}
<|im_end|>
<|im_start|>user
Quote:
{par}<|im_end|>
<|im_start|>assistant
Question:"""

prompts = []
for i, p in enumerate(passages):
    # Context is the previous passage (for i == 0 this wraps around to the last one)
    prompt = prompt_template.format(par=passages[i], ctx=passages[i - 1])
    prompts.append(prompt)

# Prompt smart LLM, parse results, store Q/A in .json
...
```

You can find the complete code that worked for me here.
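The steps listed earlier mention splitting passages that are too long. One way this could be done, shown purely as an illustrative sketch with a hypothetical helper `split_long_passage`, is to break a passage only at sentence boundaries so that every chunk still reads as a self-contained answer. The sentence detection is a naive regular expression and will misfire on abbreviations.

```python
import re

def split_long_passage(passage, maxlen=2000):
    """Split a passage into chunks of at most `maxlen` characters,
    breaking only between sentences (a single overlong sentence stays whole)."""
    sentences = re.split(r"(?<=[.!?])\s+", passage.strip())
    chunks, current = [], ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > maxlen:
            # Adding this sentence would exceed the limit: close the chunk
            chunks.append(current)
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks
```

The default of 2000 characters mirrors `passage_maxlen` from the snippet above.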

The resulting .json file looks like this:

```
{
  "question": "Why you choose to share your experiences and insights from
    your career in the restaurant industry despite the angry or wanting
    to horrify the dining public?",
  "answer": "I'm not spilling my guts about everything I've seen, learned
    and done in my long and checkered career as dishwasher, prep drone,
    fry cook, grillardin, saucier, sous-chef and chef because I'm angry
    at the business, or because I want to horrify the dining public. I'd
    still like to be a chef, too, when this thing comes out, as this life
    is the only life I really know. If I need a favor at four o'clock in
    the morning, whether it's a quick loan, a shoulder to cry on, a sleeping
    pill, bail money, or just someone to pick me up in a car in a bad
    neighborhood in the driving rain, I'm definitely not calling up a fellow
    writer. I'm calling my sous-chef, or a former sous-chef, or my saucier,
    someone I work with or have worked with over the last twenty-plus years."
},
{
  "question": "Why do you feel more comfortable sharing the \"dark recesses\"
    of the restaurant underbelly instead of writing about your personal
    experiences outside of the culinary world?",
  "answer": "No, I want to tell you about the dark recesses of the restaurant
    underbelly-a subculture whose centuries-old militaristic hierarchy and
    ethos of 'rum, buggery and the lash' make for a mix of unwavering order
    and nerve-shattering chaos-because I find it all quite comfortable, like
    a nice warm bath. I can move around easily in this life. I speak the
    language. In the small, incestuous community of chefs and cooks in New
    York City, I know the people, and in my kitchen, I know how to behave
    (as opposed to in real life, where I'm on shakier ground). I want the
    professionals who read this to enjoy it for what it is: a straight look
    at a life many of us have lived and breathed for most of our days and
    nights to the exclusion of 'normal' social interaction. Never having had
    a Friday or Saturday night off, always working holidays, being busiest
    when the rest of the world is just getting out of work, makes for a
    sometimes peculiar world-view, which I hope my fellow chefs and cooks
    will recognize. The restaurant lifers who read this may or may not like
    what I'm doing. But they'll know I'm not lying."
}
```

Finally, we convert the dataset to ChatML format once more:

```python
from datasets import load_dataset

interview_fn = "kc_reformat_interview.json"
dataset = load_dataset("json", data_files=interview_fn, field="interview")
dataset = dataset["train"].train_test_split(test_size=0.1)

# `tokenizer` is the tokenizer loaded in Section 2; only its chat template is used here
# ChatML template, from https://huggingface.co/docs/transformers/main/chat_templating
tokenizer.chat_template = "{% if not add_generation_prompt is defined %}{% set add_generation_prompt = false %}{% endif %}{% for message in messages %}{{'<|im_start|>' + message['role'] + '\n' + message['content'] + '<|im_end|>' + '\n'}}{% endfor %}{% if add_generation_prompt %}{{ '<|im_start|>assistant\n' }}{% endif %}"

def format_interview(conv):
    # One question/answer pair becomes a two-message chat
    messages = [
        {"role": "user", "content": conv["question"]},
        {"role": "assistant", "content": conv["answer"]},
    ]
    chat = tokenizer.apply_chat_template(messages, tokenize=False).strip()
    return {"text": chat}

dataset = dataset.map(
    format_interview,
    remove_columns=dataset["train"].column_names,
)
```

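By transforming Bourdain's autobiography, we aim to produce an AI that echoes his narrative style and his view of the culinary industry, and embodies his philosophy of life. The approach shown is very basic and would benefit from further refinement, such as removing low-content answers and stripping non-essential text elements like footnotes and page numbers. This would improve the quality of the model.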
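As a rough idea of what such refinements could look like, here is a small sketch. The helper names, the page-number pattern, and the 200-character threshold are all assumptions chosen for illustration; they would need tuning against the actual text.

```python
import re

# A line that holds nothing but a number is treated as a page number
PAGE_NUMBER_RE = re.compile(r"^\s*\d{1,4}\s*$")

def strip_page_numbers(text):
    """Drop lines that consist solely of a (page) number."""
    kept = [line for line in text.splitlines() if not PAGE_NUMBER_RE.match(line)]
    return "\n".join(kept)

def filter_low_content(pairs, min_answer_chars=200):
    """Keep only Q/A pairs whose answer has at least `min_answer_chars` characters."""
    return [p for p in pairs if len(p["answer"].strip()) >= min_answer_chars]
```

`strip_page_numbers` would run on the raw book text before segmentation, while `filter_low_content` would run on the generated question/answer pairs before converting them to ChatML.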

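If you are curious, have a chat with Mistral Bourdain. While the current output is a rudimentary imitation of Bourdain's voice, it serves as a proof of concept; better dataset curation would undoubtedly yield a more convincing simulation.

Creating your own dataset

I think you get the idea by now. Here are some other ideas for creative datasets, suggested by GPT-4:

- Historical figure speech dataset. Collect speeches, letters, and written works of historical figures to create a dataset that reflects their speaking and writing style. This could be used to generate educational content, such as simulated interviews with historical figures, or to create narrative experiences in which these figures comment on modern events.

- Fictional world encyclopedia. Create a dataset from various fantasy and science fiction novels that details the world-building elements of these stories, such as geography, political systems, species, and technology. This could be used to train an AI to generate new fantasy worlds or to provide rich contextual information for game development.

- Emotional dialogue dataset. Analyze film scripts, plays, and novels to create a dataset of dialogues with their corresponding emotional tone. Such a dataset could be used to train an AI system that recognizes and generates dialogue with nuanced emotional undertones, which helps improve the empathetic responses of chatbots and virtual assistants.

- Tech product review and specification dataset. Compile a comprehensive dataset of tech product reviews, specifications, and user comments from various sources. It could power a recommendation engine or an AI system designed to give consumers purchasing advice.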

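2. Loading and preparing the model and tokenizer

Before we process the data we just prepared, we need to load the model and tokenizer and make sure they handle the ChatML tags <|im_start|> and <|im_end|> correctly and recognize <|im_end|> as the (new) eos token.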

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer, TrainingArguments, Trainer, BitsAndBytesConfig
from peft import prepare_model_for_kbit_training, LoraConfig, get_peft_model

modelpath = "models/Mistral-7B-v0.1"

# Load 4-bit quantized model
model = AutoModelForCausalLM.from_pretrained(
    modelpath,
    device_map="auto",
    quantization_config=BitsAndBytesConfig(
        load_in_4bit=True,
        bnb_4bit_compute_dtype=torch.bfloat16,
        bnb_4bit_quant_type="nf4",
    ),
    torch_dtype=torch.bfloat16,
)

# Load (slow) Tokenizer, fast tokenizer sometimes ignores added tokens
tokenizer = AutoTokenizer.from_pretrained(modelpath, use_fast=False)

# Add tokens <|im_start|> and <|im_end|>, latter is special eos token
tokenizer.pad_token = "</s>"
tokenizer.add_tokens(["<|im_start|>"])
tokenizer.add_special_tokens(dict(eos_token="<|im_end|>"))
model.resize_token_embeddings(len(tokenizer))
model.config.eos_token_id = tokenizer.eos_token_id
```

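Since we are not training all parameters but only a subset, we have to add LoRA adapters to the model using Hugging Face peft. Make sure to use peft >= 0.6, otherwise 1) get_peft_model will be very slow and 2) training Mistral will fail.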

```python
# Add LoRA adapters to model
model = prepare_model_for_kbit_training(model)
config = LoraConfig(
    r=64,
    lora_alpha=16,
    target_modules=['q_proj', 'k_proj', 'down_proj', 'v_proj', 'gate_proj', 'o_proj', 'up_proj'],
    lora_dropout=0.1,
    bias="none",
    modules_to_save=["lm_head", "embed_tokens"],  # needed because we added new tokens to tokenizer/model
    task_type="CAUSAL_LM"
)
model = get_peft_model(model, config)
model.config.use_cache = False
```

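- LoRA rank r: determines the size of the low-rank matrices. The higher the rank, the more parameters are trained and the larger the adapter file. It is usually a number between 8 and 128. The maximum possible value, i.e. training all parameters, would be 4096 for Llama2-7b and Mistral (= hidden_size in config.json) and would defeat the purpose of adding adapters. The QLoRA paper uses 64 for Guanaco (trained on the Open Assistant dataset), which has worked well for me.

- target_modules: another recommendation/finding from the QLoRA authors in their paper: "We find that the most critical LoRA hyperparameter is how many LoRA adapters are used in total and that LoRA on all linear transformer block layers are required to match full finetuning performance."

- modules_to_save: specifies modules other than the LoRA layers that are set as trainable and saved in the final checkpoint. Since we added the ChatML tags as tokens to the vocabulary, we also need to train and save the linear layer lm_head and the embedding matrix embed_tokens. This will matter later when merging the adapter back into the base model.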
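To get a feeling for how the rank drives the number of trained parameters, the arithmetic can be sketched as follows. For a linear layer with input size d_in and output size d_out, LoRA adds two matrices with r * (d_in + d_out) parameters in total. The layer shapes below are assumptions for a Mistral-7B-style decoder block and should be checked against the model's config.json; the fully trained lm_head and embed_tokens from modules_to_save are not included in the count.

```python
def lora_param_count(d_in, d_out, r):
    """LoRA adds A (r x d_in) and B (d_out x r) next to a d_out x d_in weight."""
    return r * (d_in + d_out)

# Assumed (d_in, d_out) shapes of the targeted linear layers in one decoder block
HIDDEN, KV_DIM, MLP_DIM, N_LAYERS = 4096, 1024, 14336, 32
layer_shapes = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV_DIM),
    "v_proj": (HIDDEN, KV_DIM),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, MLP_DIM),
    "up_proj": (HIDDEN, MLP_DIM),
    "down_proj": (MLP_DIM, HIDDEN),
}

def total_lora_params(r):
    """LoRA parameters over all targeted layers in all decoder blocks."""
    per_block = sum(lora_param_count(i, o, r) for i, o in layer_shapes.values())
    return per_block * N_LAYERS

for r in (8, 64, 128):
    print(f"r={r:<4} -> {total_lora_params(r):,} LoRA parameters")
```

Under these assumed shapes, r=64 comes to 167,772,160 LoRA parameters, and the count scales linearly with r.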

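3. Preparing the training data

Proper tokenization and batching are essential to make sure the data is processed correctly.

Tokenization

Tokenize the text field of the dataset without adding special tokens or padding, since we will do that manually.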

```python
import os

def tokenize(element):
    return tokenizer(
        element["text"],
        truncation=True,
        max_length=2048,
        add_special_tokens=False,
    )

dataset_tokenized = dataset.map(
    tokenize,
    batched=True,
    num_proc=os.cpu_count(),  # run in parallel worker processes
    remove_columns=["text"],  # don't need the strings anymore, we have tokens from here on
)
```

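max_length: specifies the maximum length of a sample (in number of tokens). Everything beyond 2048 tokens is truncated and not trained on. If your dataset only has short question/answer pairs in a single sample (e.g. Open Orca), this will be more than enough. If your samples are longer (e.g. podcast transcripts), you would ideally either increase max_length (which costs VRAM) or split your samples into several smaller ones. The maximum for Llama 2 is 4096. Mistral was "trained with an 8k context length and fixed cache size, with a theoretical attention span of 128K tokens", but I have never gone beyond 4096.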
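Splitting long samples into smaller ones could be approached as sketched below: consecutive question/answer pairs are grouped until a token budget is reached. This is only an illustration with a hypothetical helper `split_turn_pairs`; the default `count_tokens` simply counts whitespace-separated words and ignores the ChatML tags, so a real tokenizer-based counter should be passed in for accurate numbers.

```python
def split_turn_pairs(pairs, max_tokens, count_tokens=lambda s: len(s.split())):
    """Group consecutive (question, answer) pairs into samples that each stay
    within `max_tokens` (a single oversized pair still forms its own sample)."""
    samples, current, current_len = [], [], 0
    for q, a in pairs:
        pair_len = count_tokens(q) + count_tokens(a)
        if current and current_len + pair_len > max_tokens:
            # Budget exceeded: close the current sample and start a new one
            samples.append(current)
            current, current_len = [], 0
        current.append((q, a))
        current_len += pair_len
    if current:
        samples.append(current)
    return samples
```

Each returned group can then be formatted as one ChatML sample, so that no conversation is cut off in the middle of an answer by truncation.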

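Batching

The Hugging Face trainer needs a collator function that turns a list of samples into a dictionary containing a padded batch of

- input_ids (the tokenized text),
- labels (the target text, identical to input_ids), and
- attention_mask (a tensor of 0s and 1s).

For this we will use a simplified version of DataCollatorForCausalLM from the QLoRA repository.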

```python
# collate function - to transform list of dictionaries [ {input_ids: [123, ..]}, {.. ] to single batch dictionary { input_ids: [..], labels: [..], attention_mask: [..] }
def collate(elements):
    tokenlist = [e["input_ids"] for e in elements]
    tokens_maxlen = max([len(t) for t in tokenlist])  # length of longest input

    input_ids, labels, attention_masks = [], [], []
    for tokens in tokenlist:
        # how many pad tokens to add for this sample
        pad_len = tokens_maxlen - len(tokens)

        # pad input_ids with pad_token, labels with ignore_index (-100) and set attention_mask 1 where content, otherwise 0
        input_ids.append(tokens + [tokenizer.pad_token_id] * pad_len)
        labels.append(tokens + [-100] * pad_len)
        attention_masks.append([1] * len(tokens) + [0] * pad_len)

    batch = {
        "input_ids": torch.tensor(input_ids),
        "labels": torch.tensor(labels),
        "attention_mask": torch.tensor(attention_masks)
    }
    return batch
```
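To see what the padding step produces, here is a pure-Python walk-through of the same logic on toy data. It is a sketch for illustration only: it returns plain lists instead of tensors, and the pad token id 0 is an arbitrary stand-in for `tokenizer.pad_token_id`.

```python
IGNORE_INDEX = -100  # label value that the loss function skips
PAD_TOKEN_ID = 0     # hypothetical pad id, for illustration only

def pad_batch(tokenlist, pad_token_id=PAD_TOKEN_ID):
    """Pad every sample to the length of the longest one in the batch."""
    maxlen = max(len(t) for t in tokenlist)
    batch = {"input_ids": [], "labels": [], "attention_mask": []}
    for tokens in tokenlist:
        pad_len = maxlen - len(tokens)
        batch["input_ids"].append(tokens + [pad_token_id] * pad_len)
        batch["labels"].append(tokens + [IGNORE_INDEX] * pad_len)
        batch["attention_mask"].append([1] * len(tokens) + [0] * pad_len)
    return batch

batch = pad_batch([[5, 6, 7], [8]])
print(batch["input_ids"])       # [[5, 6, 7], [8, 0, 0]]
print(batch["labels"])          # [[5, 6, 7], [8, -100, -100]]
print(batch["attention_mask"])  # [[1, 1, 1], [1, 0, 0]]
```

The shorter sample is padded on the right; its padded positions are excluded from the loss via -100 and hidden from attention via 0.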

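4. Training hyperparameters

The choice of hyperparameters can significantly affect model performance. Here are the hyperparameters we chose for training: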

```python
bs = 8        # batch size
ga_steps = 1  # gradient acc. steps
epochs = 5
steps_per_epoch = len(dataset_tokenized["train"]) // (bs * ga_steps)

args = TrainingArguments(
    output_dir="out",
    per_device_train_batch_size=bs,
    per_device_eval_batch_size=bs,
    evaluation_strategy="steps",
    logging_steps=1,
    eval_steps=steps_per_epoch,  # eval and save once per epoch
    save_steps=steps_per_epoch,
    gradient_accumulation_steps=ga_steps,
    num_train_epochs=epochs,
    lr_scheduler_type="constant",
    optim="paged_adamw_32bit",
    learning_rate=0.0002,
    group_by_length=True,
    fp16=True,
    ddp_find_unused_parameters=False,  # needed for training with accelerate
)
```

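- batch size: As high as possible to increase speed. Larger batches consume more VRAM, so reduce it if you run out of memory (OOM).

- gradient_accumulation_steps: Increases the effective batch size without consuming additional VRAM, at the cost of slower training. The effective batch size is batch_size * gradient_accumulation_steps.

- steps_per_epoch: If your dataset has 80 samples and your effective batch size is 8 (for example batch_size 8 and gradient_accumulation_steps 1), you process the whole dataset in 10 steps (= 1 epoch).

- num_train_epochs: How many epochs to train depends on your dataset. Ideally, the loss on the evaluation split tells you when to stop training and which checkpoint is best. However, training Guanaco, for example, leads to an increasing eval_loss after epoch 2, which suggests overfitting to the training set, even though the quality of the model keeps improving. There is more on this, including an official reply from the QLoRA authors, on GitHub and in one of my previous stories. In short: you simply have to check which checkpoint works best for your specific task. Usually, 3-4 epochs are a good start.

- learning_rate: We use the default learning rate suggested by the QLoRA authors: 0.0002 for a 7B (or 13B) model. For models with more parameters, a lower learning rate is suggested: 0.0001 for models with 33B and 65B parameters.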
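As a quick check of the arithmetic above, here is a minimal sketch of how the effective batch size and the number of optimizer steps per epoch follow from the settings. The helper names `effective_batch_size` and `steps_per_epoch` are illustrative and not part of the training script; the sample count of 80 is the example from the text, and a single GPU is assumed.

```python
def effective_batch_size(bs: int, ga_steps: int) -> int:
    """Samples contributing to one optimizer step (single GPU assumed)."""
    return bs * ga_steps


def steps_per_epoch(num_samples: int, bs: int, ga_steps: int) -> int:
    """Optimizer steps needed to see the dataset once (integer division,
    mirroring the expression used for eval_steps and save_steps)."""
    return num_samples // effective_batch_size(bs, ga_steps)


# 80 samples with batch size 8 and no accumulation: 10 steps per epoch
print(steps_per_epoch(80, bs=8, ga_steps=1))

# Halving the batch size and doubling accumulation keeps the same step count
print(steps_per_epoch(80, bs=4, ga_steps=2))
```

Both calls give the same number of steps because the effective batch size is unchanged; only the VRAM use and the time per step differ.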

Let's train.

```python
trainer = Trainer(
    model=model,
    tokenizer=tokenizer,
    data_collator=collate,
    train_dataset=dataset_tokenized["train"],
    eval_dataset=dataset_tokenized["test"],
    args=args,
)

trainer.train()
```
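Once training has finished, `trainer.state.log_history` holds the logged metrics as a list of dictionaries, and the evaluation entries carry an `eval_loss` together with the `step` at which they were recorded. The following is a minimal sketch of finding the step with the lowest evaluation loss. The helper name `best_eval_step` and the sample values are made up for illustration. As noted in the hyperparameter discussion, the lowest `eval_loss` is only a starting point and does not necessarily identify the best checkpoint for your task.

```python
from typing import Optional


def best_eval_step(log_history: list[dict]) -> Optional[int]:
    """Return the step of the evaluation entry with the lowest eval_loss,
    or None if no evaluation was logged."""
    evals = [entry for entry in log_history if "eval_loss" in entry]
    if not evals:
        return None
    return min(evals, key=lambda entry: entry["eval_loss"])["step"]


# Made-up entries in the shape the Trainer records them
sample_history = [
    {"loss": 1.40, "step": 100},
    {"eval_loss": 1.25, "step": 101},
    {"eval_loss": 1.19, "step": 202},
    {"eval_loss": 1.23, "step": 303},
]
print(best_eval_step(sample_history))

# With a real run: best_eval_step(trainer.state.log_history)
```

Because `save_steps` equals `eval_steps` in the arguments above, the returned step corresponds to a saved checkpoint directory of the form `out/checkpoint-<step>`.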

Example training run

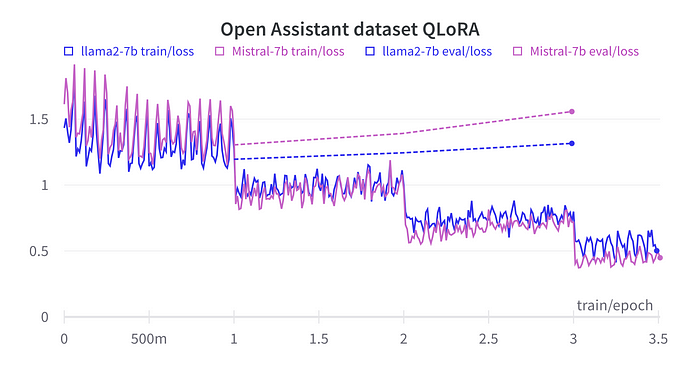

Training and evaluation loss

Here is a wandb graph of a typical training run on the Open Assistant (OA) dataset, comparing the fine-tuning of Llama2-7B and Mistral-7B.

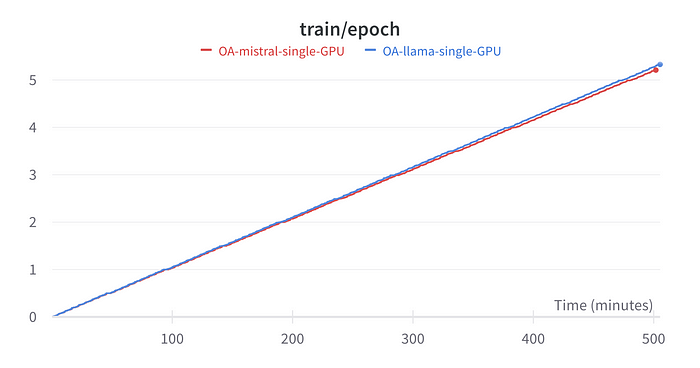

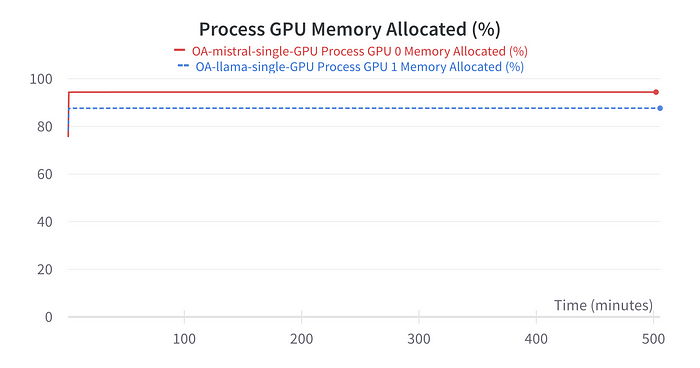

Training time and VRAM usage

Fine-tuning Llama2-7B and Mistral-7B on the Open Assistant dataset takes around 100 minutes on a single GPU with 24GB of VRAM.

- GPU: NVIDIA GeForce RTX 3090

- Dataset: "OpenAssistant/oasst_top1_2023-08-25"

- Batch size 16, gradient accumulation steps 1

- Sample max_length 512

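Merging the LoRA adapter with the base model

The following code differs slightly from other scripts (such as the one provided by TheBloke) because we added tokens for ChatML before training. We did not change the base model, however, which is why we have to add the new tokens to the base model and tokenizer before loading the adapter. Otherwise we would be trying to merge an adapter with two additional tokens into a model without them, which would fail.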

```python
from transformers import AutoModelForCausalLM, AutoTokenizer
from peft import PeftModel
import torch

base_path="models/Mistral-7B-v0.1"      # input: base model
adapter_path="out/checkpoint-606"       # input: adapters
save_to="models/Mistral-7B-finetuned"   # out: merged model ready for inference

base_model = AutoModelForCausalLM.from_pretrained(
    base_path,
    return_dict=True,
    torch_dtype=torch.bfloat16,
    device_map="auto",
)

tokenizer = AutoTokenizer.from_pretrained(base_path)

# Add/set tokens (same 5 lines of code we used before training)
tokenizer.pad_token = "</s>"
tokenizer.add_tokens(["<|im_start|>"])
tokenizer.add_special_tokens(dict(eos_token="<|im_end|>"))
base_model.resize_token_embeddings(len(tokenizer))
base_model.config.eos_token_id = tokenizer.eos_token_id

# Load LoRA adapter and merge
model = PeftModel.from_pretrained(base_model, adapter_path)
model = model.merge_and_unload()

model.save_pretrained(save_to, safe_serialization=True, max_shard_size='4GB')
tokenizer.save_pretrained(save_to)
```
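Since the model was trained on ChatML-formatted conversations, prompts sent to the merged model should follow the same layout. Below is a minimal sketch of building such a prompt. The helper name `to_chatml_prompt` is hypothetical, and the exact whitespace is an assumption based on the common ChatML layout; it should match whatever formatting was used for the training data.

```python
def to_chatml_prompt(messages: list[dict]) -> str:
    """Render a list of {"role", "content"} messages as a ChatML prompt
    that ends with an open assistant turn for the model to complete."""
    parts = [
        f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n"
        for m in messages
    ]
    # Leave the assistant turn open so generation continues from here
    parts.append("<|im_start|>assistant\n")
    return "".join(parts)


prompt = to_chatml_prompt([{"role": "user", "content": "Hello, who are you?"}])
print(prompt)
```

The resulting string can be tokenized with the saved tokenizer and passed to `model.generate`. Generation should then stop at `<|im_end|>`, because that token was set as the EOS token before merging.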

Troubleshooting

Challenges are part and parcel of model training. Let's go over some common problems and their solutions.

OOM

If you run into an out-of-memory (OOM) error:

- Consider reducing the batch size.

- Shorten the training samples by reducing the context length (max_length in tokenize()).
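Reducing the batch size also changes the effective batch size unless gradient accumulation is raised to compensate. As a rough sketch (the helper `halve_batch` is illustrative and not part of the training script), the two settings can be traded against each other like this:

```python
def halve_batch(bs: int, ga_steps: int) -> tuple[int, int]:
    """Halve the per-device batch size and double the gradient accumulation
    steps, so that bs * ga_steps stays the same."""
    if bs < 2 or bs % 2 != 0:
        raise ValueError("bs must be an even number >= 2 to halve it exactly")
    return bs // 2, ga_steps * 2


bs, ga_steps = 8, 1
bs, ga_steps = halve_batch(bs, ga_steps)  # try this after an OOM error
print(bs, ga_steps, bs * ga_steps)
```

Each halving lowers the VRAM needed per forward and backward pass while keeping the same number of samples per optimizer step, at the cost of slower training.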

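Training is too slow

If training seems sluggish:

- Increase the batch size.

- Use multiple GPUs, bought or rented (e.g. on runpod). The code provided here is accelerate-ready and can be used for training in a multi-GPU setup: just launch it with accelerate launch qlora.py instead of python qlora.py.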

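Poor final model quality

The quality of your model reflects the quality of your dataset. To improve model quality:

- Make sure your dataset is rich and relevant.

- Adjust the hyperparameters: learning_rate, epochs, rank r, lora_alpha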

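Closing remarks

- Know what you are doing: There are excellent training tools such as axolotl that let you focus on dataset creation instead of writing your own padding function. Still, a solid grasp of the underlying mechanics is invaluable. This knowledge lets you navigate complexity and troubleshoot with confidence.

- Incremental approach: Start with a basic example using a small dataset. Scale up step by step and adjust parameters gradually to discover their effect on model performance.

- Emphasize data quality: High-quality data is the cornerstone of effective training. Be creative and diligent when assembling your dataset.

Fine-tuning LLMs like Llama 2 and Mistral is a rewarding process, especially when you have the right dataset and training parameters. Remember to always keep an eye on your model's performance, and be ready to iterate and adapt.

The code can be found in my GitHub repository qlora-minimal: a QLoRA training script, a script for merging the adapter into the base model, and a Jupyter notebook.

If you have any feedback, additional ideas, or questions, feel free to leave a comment here or reach out on Twitter. Happy training!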
