
LLMs: Translation and commentary on "Building LLM applications for production"


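LLMs: challenges and case-study lessons in building LLM applications for production. Covers the challenges of prompt engineering (ambiguity of natural language, cost and latency, prompting vs. finetuning vs. alternatives, backward and forward compatibility), task composability (applications made of multiple tasks; agents, tools, and control flows), and promising use cases (AI assistants, chatbots, programming and gaming, faster learning, talk-to-your-data [not suited to analyzing large datasets], search and recommendation, sales).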

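Overview: The article discusses the challenges of building applications with large language models (LLMs), including production readiness and the limits of prompt engineering. It also shows how control flows and tools can be combined to build more complex and powerful applications, and surveys promising LLM use cases.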

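I. Challenges of prompt engineering

>> Ambiguity of natural language: this is the first major challenge in productionizing prompt engineering, showing up as ambiguous output formats and an inconsistent user experience. The remedy is to apply engineering rigor and make prompt engineering systematic.

>> Cost and latency: traditional ML is costly in data collection and training, while LLM cost is concentrated in inference and latency grows with output length; prompt experimentation is comparatively cheap. Any concrete cost and latency analysis for LLMs goes out of date quickly.

>> Prompting vs. finetuning vs. alternatives: prompting needs little data but its performance is limited; finetuning needs more data and costs more up front but tends to perform better. The choice depends on data availability, performance, and cost. Prompt tuning and finetuning with distillation can help raise performance and lower cost.

>> Embeddings and vector databases help build low-latency ML applications.

>> Backward and forward compatibility: a newer model is not guaranteed to be better than an older one for every use case. Prompt experiments are fast, but prompt patterns are not robust to changes, so production systems need to retest prompts whenever the model changes.

II. Task composability

>> Applications that consist of multiple tasks: composing tasks is a production-level challenge; a natural-language database interface, for example, has to run several tasks in sequence.

>> Agents, tools, and control flows: an agent executes multiple tasks according to a control flow.

III. Promising use cases

>> Promising use cases include AI assistants, chatbots, programming and gaming, learning, and talk-to-your-data, as well as search and recommendation and sales.

>> Data search and question answering built on natural language and vector databases.

>> LLMs have limits: they can only analyze data small enough to fit in the prompt, not large production datasets.

Overall, prompt engineering can be dazzling, but the hype needs to be filtered carefully while keeping up with the latest tools. Productionizing still faces many challenges that call for a systematic approach, yet the application prospects are broad.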

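Contents

"Building LLM applications for production": translation and commentary

Part I. Challenges of productionizing prompt engineering

1.1 The ambiguity of natural languages

1.1.0 Programming languages (exact, suited to developers) vs. natural languages (ambiguous, suited to users)

(0) Two sources of natural-language flexibility: how users define instructions and how LLMs respond to them

(1) Ambiguous output format

(2) Inconsistency in user experience

(3) How to solve this ambiguity problem? Apply engineering rigor and make prompt engineering systematic

1.1.2 Prompt versioning: small changes to a prompt can lead to very different results

1.1.3 Prompt optimization: CoT (at a latency and cost penalty), majority vote, breaking a prompt into simpler prompts

1.2.1 Cost: traditional ML (higher data and training cost), LLMs (cost is in inference), prompt engineering is comparatively fast and cheap

1.2.2 Latency: input length matters little (processed in parallel), output length matters a lot (generated sequentially); latency is likely to drop significantly

1.2.3 The impossibility of cost + latency analysis for LLMs

1.3 Prompting vs. finetuning vs. alternatives

1.3.1 Prompt tuning: requires feeding prompt embeddings into the LLM; on T5, prompt tuning outperforms prompt engineering

1.4 Embeddings + vector databases

(1) The potential of LLM-generated embeddings for building ML applications (search and recommendation)

1.5 Backward and forward compatibility

Backward and forward compatibility matters for production LLMs: a model's internal knowledge may conflict with newer information, so models need periodic retraining and existing prompts may stop working as well

Why unit-testing prompts matters: newer models are not guaranteed to be better; prompt experiments are fast and cheap

Challenge: no centralized management of prompt logic; once the original author leaves, prompts are hard to update

Part 2. Task composability

2.1 Applications that consist of multiple tasks

Natural-language database: a complex application implemented as a sequence of tasks (convert natural language to a SQL query → execute it against the SQL database → present the SQL result to the user)

2.2 Agents, tools, and control flows

(0) Agent (an application that executes multiple tasks according to a control flow, using tools such as a SQL executor), as distinct from an agent (a policy) in RL

2.2.1 Tools vs. plugins

2.2.2 Control flows: sequential, parallel, if, for loop

2.2.3 Control flow with LLM agents (the LLM can decide the control-flow condition)

2.2.4 Testing an agent (unit tests)

Part 3. Promising use cases

3.1 AI assistant: an assistant that helps with everything

3.2 Chatbot: completing tasks given by the user, or being a companion

3.3 Programming (debugging code) and gaming

3.4 Learning: helping students learn faster by summarizing books and evaluating the correctness of answers

3.5 Talk-to-your-data: querying data in natural language (legal contracts, resumes, financial data, or customer support)

LLMs: a hands-on case study of internal data search and question answering using natural language + SQL queries + ANN lookup (build an efficient index and quickly narrow the search space for efficient high-dimensional lookup): introduction and implementation

3.5.1 Can LLMs do data analysis for me? Only for small data that fits in the prompt, not for production-scale data

3.6 Search and recommendation: from keyword-based search to LLM-optimized search (turn the user query into a list of product names, then retrieve relevant products)

(1) The flood of prompt engineering papers resembles the thousands of weight-initialization tricks from the early days of DL

(2) Ignore (most of) the hype, read only the abstracts, and try to keep up with the latest tools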

《Building LLM applications for production》翻译与解读

| URL | |

| Date | April 11, 2023 |

| Author | Chip Huyen |

Abstract

| A question that I’ve been asked a lot recently is how large language models (LLMs) will change machine learning workflows. After working with several companies who are working with LLM applications and personally going down a rabbit hole building my applications, I realized two things: It’s easy to make something cool with LLMs, but very hard to make something production-ready with them. LLM limitations are exacerbated by a lack of engineering rigor in prompt engineering, partially due to the ambiguous nature of natural languages, and partially due to the nascent nature of the field. | 最近我经常被问到一个问题,那就是大型语言模型(LLM)将如何改变机器学习工作流程。在与几家正在使用LLM应用的公司合作,并亲自深入开发我的应用程序之后,我意识到两件事情: 利用LLM可以轻松创建出很酷的东西,但要使其达到生产级别的水平却非常困难。 LLM的局限性在于prompt工程中缺乏工程严谨性,这部分是由于自然语言的模糊性,也部分是由于该领域的初期阶段。 |

| This post consists of three parts. Part 1 discusses the key challenges of productionizing LLM applications and the solutions that I’ve seen. Part 2 discusses how to compose multiple tasks with control flows (e.g. if statement, for loop) and incorporate tools (e.g. SQL executor, bash, web browsers, third-party APIs) for more complex and powerful applications. Part 3 covers some of the promising use cases that I’ve seen companies building on top of LLMs and how to construct them from smaller tasks. There has been so much written about LLMs, so feel free to skip any section you’re already familiar with. | 本文分为三个部分: 第一部分讨论了将LLM应用推向生产的主要挑战以及我见过的解决方案。 第二部分讨论如何结合控制流程(如if语句、for循环)以及整合工具(如SQL执行器、bash、网络浏览器、第三方API)来构建更复杂和强大的应用程序。 第三部分涵盖了一些我见过的公司在LLM基础上构建的有前景的用例,以及如何从较小的任务构建它们。 关于LLM已经有很多的文章,所以如果你对某些部分已经很熟悉,可以随意跳过。 |

Part I. 将prompt工程推向生产的挑战Challenges of productionizing prompt engineering

1.1、自然语言的模糊性The ambiguity of natural languages

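The ambiguity of natural language poses a double challenge for productionizing LLMs. On one side, user-defined instructions can change silently and make LLM outputs unpredictable; on the other, the LLM's own responses are ambiguous. The remedy is to make prompt engineering more systematic and rigorous, which may not eliminate the problem but does reduce the risk.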

1.1.0、编程语言【准确性+适合开发者】VS自然语言【模糊性+适合用户】

(0)、自然语言灵活性的两个体现:用户如何定义指令+LLM如何响应指令

| For most of the history of computers, engineers have written instructions in programming languages. Programming languages are “mostly” exact. Ambiguity causes frustration and even passionate hatred in developers (think dynamic typing in Python or JavaScript). In prompt engineering, instructions are written in natural languages, which are a lot more flexible than programming languages. This can make for a great user experience, but can lead to a pretty bad developer experience. The flexibility comes from two directions: how users define instructions, and how LLMs respond to these instructions. First, the flexibility in user-defined prompts leads to silent failures. If someone accidentally makes some changes in code, like adding a random character or removing a line, it’ll likely throw an error. However, if someone accidentally changes a prompt, it will still run but give very different outputs. While the flexibility in user-defined prompts is just an annoyance, the ambiguity in LLMs’ generated responses can be a dealbreaker. It leads to two problems: | 在计算机的大部分历史中,工程师们使用编程语言编写指令。编程语言是“大体上”准确的。模糊性会引起开发者的不满甚至激烈的憎恨(比如Python或JavaScript中的动态类型)。 在prompt工程中,指令是用自然语言编写的,自然语言比编程语言更加灵活。这可能会给用户带来良好的体验,但对开发者来说可能会造成很糟糕的体验。 这种灵活性来自两个方面:用户如何定义指令以及LLM如何响应这些指令。 首先,用户定义指令的灵活性会导致悄无声息的错误。如果有人不小心在代码中做了一些改动,比如添加了一个随机字符或删除了一行,很可能会引发错误。然而,如果有人意外改变了提示,程序仍然会运行,但输出结果会截然不同。 用户定义提示的灵活性只是一种烦恼,但LLM生成的响应的模糊性可能会成为一个致命问题。这导致了两个问题: |

(1)、输出格式的模糊性Ambiguous output format

| Ambiguous output format: downstream applications on top of LLMs expect outputs in a certain format so that they can parse. We can craft our prompts to be explicit about the output format, but there’s no guarantee that the outputs will always follow this format. | LLM上层的下游应用程序期望以特定格式输出,以便进行解析。我们可以制定明确的提示来说明输出格式,但无法保证输出始终符合该格式。 |
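One common way to live with an unguaranteed output format is to validate every reply against the contract the downstream code expects and retry on violations. The sketch below assumes a hypothetical contract (`{"score": <integer 0-10>}`) and a hypothetical `llm` callable that takes a prompt string and returns text; neither comes from the original article.

```python
import json


def parse_score(raw: str) -> int:
    """Parse a reply that is expected to look like {"score": <int 0-10>}."""
    data = json.loads(raw)  # raises json.JSONDecodeError on non-JSON text
    score = data["score"]   # raises KeyError/TypeError if the shape is wrong
    if isinstance(score, bool) or not isinstance(score, int) or not 0 <= score <= 10:
        raise ValueError(f"score violates the contract: {score!r}")
    return score


def call_with_retry(llm, prompt: str, max_attempts: int = 3) -> int:
    """Call the (hypothetical) llm until its reply parses, up to max_attempts."""
    last_error = None
    for _ in range(max_attempts):
        try:
            return parse_score(llm(prompt))
        except (json.JSONDecodeError, KeyError, TypeError, ValueError) as err:
            last_error = err  # remember why the last attempt was rejected
    raise RuntimeError(f"no valid output after {max_attempts} attempts") from last_error
```

Retrying raises cost and latency, so a cap such as `max_attempts` matters; failing loudly after the cap is usually preferable to passing malformed text downstream.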

(2)、用户体验的期望不一致性Inconsistency in user experience

| Inconsistency in user experience: when using an application, users expect certain consistency. Imagine an insurance company giving you a different quote every time you check on their website. LLMs are stochastic – there’s no guarantee that an LLM will give you the same output for the same input every time. You can force an LLM to give the same response by setting temperature = 0, which is, in general, a good practice. While it mostly solves the consistency problem, it doesn’t inspire trust in the system. Imagine a teacher who gives you consistent scores only if that teacher sits in one particular room. If that teacher sits in different rooms, that teacher’s scores for you will be wild. | 在使用应用程序时,用户期望有一定的一致性。想象一下一个保险公司每次在网站上查询报价时都给出不同的报价。LLM是随机的——无法保证LLM对于相同的输入每次都给出相同的输出。 通过设置temperature = 0,可以强制LLM给出相同的响应,这通常是一个好的做法。虽然这基本上解决了一致性问题,但并不能让人对系统产生信任。想象一个只有在特定房间里才给你一致分数的老师。如果那个老师在不同的房间里,他给你的分数就会变得很不稳定。 |

(3)、如何解决这个模糊性问题?——应用工程严谨性+prompt工程系统化——How to solve this ambiguity problem?

| This seems to be a problem that OpenAI is actively trying to mitigate. They have a notebook with tips on how to increase their models’ reliability. A couple of people who’ve worked with LLMs for years told me that they just accepted this ambiguity and built their workflows around that. It’s a different mindset compared to developing deterministic programs, but not something impossible to get used to. This ambiguity can be mitigated by applying as much engineering rigor as possible. In the rest of this post, we’ll discuss how to make prompt engineering, if not deterministic, systematic. | 这似乎是OpenAI正在积极努力解决的问题。他们有一个笔记本上提供了如何提高模型可靠性的建议。 一些多年来一直使用LLM的人告诉我,他们只是接受了这种模糊性,并围绕它构建了他们的工作流程。这与开发确定性程序的思维方式有所不同,但并不是不可能适应的。 通过尽可能应用工程严谨性,可以减轻这种模糊性。在本文的其余部分,我们将讨论如何使prompt工程变得系统化,即使不是确定性的。 |

1.1.1、提示评估Prompt evaluation

| A common technique for prompt engineering is to provide in the prompt a few examples and hope that the LLM will generalize from these examples (fewshot learners). As an example, consider trying to give a text a controversy score – it was a fun project that I did to find the correlation between a tweet’s popularity and its controversialness. Here is the shortened prompt with 4 fewshot examples: Example: controversy scorer | 一种常见的提示工程技术是在提示中提供一些示例,并希望LLM能从这些示例中进行泛化学习(fewshot学习器)。 以给文本评分争议度为例,这是一个有趣的项目,我通过它来找出推文的受欢迎程度与其争议性之间的相关性。以下是包含4个fewshot示例的缩写提示: 示例:争议度评分器 |
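The author's actual prompt is not reproduced in this copy, so the following is only a sketch of how a few-shot prompt of this kind might be assembled and scored against a small evaluation set. The four examples, the 0-10 scale, the `llm` callable, and the `tolerance` parameter are all illustrative assumptions.

```python
# Hypothetical few-shot examples; these are not the author's originals.
FEWSHOT_EXAMPLES = [
    ("The sky is blue.", 0),
    ("Pineapple belongs on pizza.", 3),
    ("Remote work is better than office work.", 5),
    ("Tabs are objectively superior to spaces.", 6),
]


def build_prompt(text, examples=FEWSHOT_EXAMPLES):
    """Assemble instruction + few-shot examples + the text to be scored."""
    lines = ["Given a text, give it a controversy score from 0 to 10.", ""]
    for example_text, score in examples:
        lines += [f"Text: {example_text}", f"Score: {score}", ""]
    lines += [f"Text: {text}", "Score:"]
    return "\n".join(lines)


def evaluate(llm, eval_set, tolerance=1):
    """Fraction of eval examples whose predicted score is within `tolerance`."""
    hits = 0
    for text, expected in eval_set:
        reply = llm(build_prompt(text)).strip()
        try:
            predicted = int(reply)
        except ValueError:
            continue  # an unparseable reply counts as a miss
        hits += abs(predicted - expected) <= tolerance
    return hits / len(eval_set)
```

Keeping the evaluation set separate from the few-shot examples makes the resulting number a fairer signal of how well the prompt generalizes.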

1.1.2、提示版本控制Prompt versioning—提示进行微小的更改可能会导致非常不同的结果

| Small changes to a prompt can lead to very different results. It’s essential to version and track the performance of each prompt. You can use git to version each prompt and its performance, but I wouldn’t be surprised if there will be tools like MLflow or Weights & Biases for prompt experiments. | 对提示进行微小的更改可能会导致非常不同的结果。重要的是对每个提示进行版本控制并跟踪其性能。您可以使用Git对每个提示及其性能进行版本控制,但如果出现像MLflow或Weights & Biases这样的用于提示实验的工具,我也不会感到惊讶。 |
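As a lightweight complement to git, a prompt can be identified by a hash of its exact text so that every evaluation result is tied to the precise wording that produced it. This is a minimal sketch; the registry layout and the function names are assumptions, not an established tool.

```python
import hashlib


def prompt_version(prompt: str) -> str:
    """Short content hash: any change to the prompt text yields a new version id."""
    return hashlib.sha256(prompt.encode("utf-8")).hexdigest()[:12]


def record_result(registry: dict, prompt: str, model: str, score: float) -> str:
    """Store an evaluation score under the prompt's version id and return that id."""
    version = prompt_version(prompt)
    entry = registry.setdefault(version, {"prompt": prompt, "results": []})
    entry["results"].append({"model": model, "score": score})
    return version
```

Because even a one-character edit produces a different id, two prompts that look alike in a review cannot silently share a performance history.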

1.1.3、提示优化Prompt optimization—COT(但有延迟成本)、多数表决、拆解为简单提示

| There have been many papers + blog posts written on how to optimize prompts. I agree with Lilian Weng in her helpful blog post that most papers on prompt engineering are tricks that can be explained in a few sentences. OpenAI has a great notebook that explains many tips with examples. Here are some of them: >> Prompt the model to explain or explain step-by-step how it arrives at an answer, a technique known as Chain-of-Thought or COT (Wei et al., 2022). Tradeoff: COT can increase both latency and cost due to the increased number of output tokens [see Cost and latency section] >> Generate many outputs for the same input. Pick the final output by either the majority vote (also known as self-consistency technique by Wang et al., 2023) or you can ask your LLM to pick the best one. In OpenAI API, you can generate multiple responses for the same input by passing in the argument n (not an ideal API design if you ask me). >> Break one big prompt into smaller, simpler prompts. | 关于如何优化提示已经有许多论文和博文写过。我同意Lilian Weng在她有帮助的博文中的观点,即大多数关于提示工程的论文都是可以用几句话解释清楚的技巧。OpenAI有一本很棒的笔记本,其中用例子解释了许多提示技巧。以下是其中一些技巧: >> 提示模型解释或逐步解释其答案的方法,称为“思维链”或COT(Wei et al.,2022)。 权衡:由于增加了输出标记的数量,COT可能会增加延迟和成本[参见成本和延迟部分] >> 为相同的输入生成多个输出。通过多数表决(也称为Wang et al.(2023)的自一致技术)或者让LLM选择最佳输出来选择最终的输出。在OpenAI API中,您可以通过传递参数n来为相同的输入生成多个响应(如果问我的话,这并不是理想的API设计)。 >> 将一个大的提示分解为较小、更简单的提示。 |

| Many tools promise to auto-optimize your prompts – they are quite expensive and usually just apply these tricks. One nice thing about these tools is that they’re no code, which makes them appealing to non-coders. | 许多工具承诺自动优化您的提示,它们的价格相当昂贵,并且通常只是应用这些技巧。这些工具的一个好处是它们无需编码,这使它们对非编码人员非常吸引。 |
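The majority-vote step of the self-consistency technique mentioned above is simple enough to sketch directly. The code assumes the multiple outputs have already been collected (for example via the `n` argument the article mentions) and that answers can be compared after trimming whitespace and lowercasing, which is an assumption that suits short answers only.

```python
from collections import Counter


def majority_vote(outputs):
    """Return the most common normalized answer and the share of votes it received."""
    normalized = [output.strip().lower() for output in outputs]
    answer, count = Counter(normalized).most_common(1)[0]
    return answer, count / len(normalized)
```

The vote share doubles as a rough confidence signal: a low share suggests the prompt is ambiguous for that input.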

1.2、成本与延迟Cost and latency

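Cost and latency are another major challenge in productionizing LLM applications. Longer prompts can improve performance but raise inference cost, and longer outputs mean higher latency. Because LLMs are improving quickly and APIs are still unreliable, any cost and latency analysis goes out of date fast, so teams have to keep re-evaluating buying (paid APIs) vs. building (open-source models).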

1.2.1、成本Cost:ML【数据和训练成本更高】、LLM【运营的成本在推理中】、提示工程相对更加快速低廉

| The more explicit detail and examples you put into the prompt, the better the model performance (hopefully), and the more expensive your inference will cost. OpenAI API charges for both the input and output tokens. Depending on the task, a simple prompt might be anything between 300 - 1000 tokens. If you want to include more context, e.g. adding your own documents or info retrieved from the Internet to the prompt, it can easily go up to 10k tokens for the prompt alone. | 在提示中加入更明确的细节和示例,模型的性能就会更好(希望如此),但推理的成本也会更高。 OpenAI API对输入和输出标记都收费。根据任务的不同,简单的提示可能需要300到1000个标记。如果您想包含更多上下文,例如将自己的文档或从互联网检索到的信息添加到提示中,单单提示就可能需要多达1万个标记。 |

| The cost with long prompts isn’t in experimentation but in inference. Experimentation-wise, prompt engineering is a cheap and fast way get something up and running. For example, even if you use GPT-4 with the following setting, your experimentation cost will still be just over $300. The traditional ML cost of collecting data and training models is usually much higher and takes much longer. >> Prompt: 10k tokens ($0.06/1k tokens) >> Output: 200 tokens ($0.12/1k tokens) >> Evaluate on 20 examples >> Experiment with 25 different versions of prompts | 长提示的成本不在实验中,而在推理中。 就实验而言,提示工程是一种廉价且快速启动的方式。例如,即使使用以下设置的GPT-4,您的实验成本仍然只有300多美元。相比之下,传统的机器学习数据收集和模型训练成本通常更高,花费的时间也更长。 >> 提示:1万个标记(0.06美元/1000个标记) >> 输出:200个标记(0.12美元/1000个标记) >> 在20个示例上进行评估 >> 对提示进行25个不同版本的实验 |

| The cost of LLMOps is in inference. >> If you use GPT-4 with 10k tokens in input and 200 tokens in output, it'll be $0.624 / prediction. >> If you use GPT-3.5-turbo with 4k tokens for both input and output, it'll be $0.004 / prediction or $4 / 1k predictions. >> As a thought exercise, in 2021, DoorDash ML models made 10 billion predictions a day. If each prediction costs $0.004, that'd be $40 million a day! >> By comparison, AWS personalization costs about $0.0417 / 1k predictions and AWS fraud detection costs about $7.5 / 1k predictions [for over 100,000 predictions a month]. AWS services are usually considered prohibitively expensive (and less flexible) for any company of a moderate scale. |
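The arithmetic behind these figures can be checked with a few lines. The prices and volumes below are the ones quoted in the article (April 2023); the function name is illustrative.

```python
def cost_per_call(input_tokens, output_tokens, input_price_per_1k, output_price_per_1k):
    """Dollar cost of one call given token counts and per-1k-token prices."""
    return (input_tokens / 1000 * input_price_per_1k
            + output_tokens / 1000 * output_price_per_1k)


# GPT-4 setting from the article: 10k prompt tokens, 200 output tokens.
gpt4_call = cost_per_call(10_000, 200, 0.06, 0.12)

# Experimentation: 20 evaluation examples x 25 prompt versions.
experiment_cost = gpt4_call * 20 * 25

# Thought exercise: 10 billion predictions a day at $0.004 each.
doordash_daily = 10_000_000_000 * 0.004

print(f"per call: ${gpt4_call:.3f}")
print(f"experiment: ${experiment_cost:.2f}")
print(f"daily at DoorDash scale: ${doordash_daily:,.0f}")
```

The same 500 calls that make experimentation cheap (about $312) become the dominant cost once the call count is multiplied by production traffic.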

1.2.2、延迟Latency:输入长度影响不大(因其并行输入)+输出长度显著影响延迟(因其按顺序生成)→未来会显著降低延迟

| Input tokens can be processed in parallel, which means that input length shouldn’t affect the latency that much. However, output length significantly affects latency, which is likely due to output tokens being generated sequentially. Even for extremely short input (51 tokens) and output (1 token), the latency for gpt-3.5-turbo is around 500ms. If the output token increases to over 20 tokens, the latency is over 1 second. Here’s an experiment I ran, each setting is run 20 times. All runs happen within 2 minutes. If I do the experiment again, the latency will be very different, but the relationship between the 3 settings should be similar. This is another challenge of productionizing LLM applications using APIs like OpenAI: APIs are very unreliable, and no commitment yet on when SLAs will be provided. | 输入标记可以并行处理,这意味着输入长度不会对延迟产生太大影响。 然而,输出长度会显著影响延迟,这可能是因为输出标记是按顺序生成的。 即使对于非常短的输入(51个标记)和输出(1个标记),gpt-3.5-turbo的延迟也约为500毫秒。如果输出标记增加到20个以上,延迟将超过1秒。 以下是我进行的一个实验,每个设置运行20次。所有运行都在2分钟内完成。如果我再次进行实验,延迟会有很大差异,但三个设置之间的关系应该是相似的。 这是使用像OpenAI这样的API将LLM应用产品化的另一个挑战:API非常不可靠,尚未提供服务级别协议(SLAs)。 |
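An experiment of this shape (one setting, repeated 20 times) can be reproduced with a small timing harness. This is a sketch, not the author's script: `fn` stands for any zero-argument callable that performs one API request, and the percentile is a simple index-based approximation.

```python
import statistics
import time


def measure_latency(fn, runs=20):
    """Call fn `runs` times and summarize the wall-clock latency in seconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    samples.sort()
    return {
        "p50": statistics.median(samples),
        "p90": samples[int(0.9 * (len(samples) - 1))],  # approximate 90th percentile
        "max": samples[-1],
    }
```

Reporting percentiles rather than a mean matters here because, as the article notes, the variance across runs is high.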


| It is unclear how much of the latency is due to the model, networking (which I imagine is huge due to high variance across runs), or just inefficient engineering overhead. It's very possible that the latency will reduce significantly in the near future. While half a second seems high for many use cases, this number is incredibly impressive given how big the model is and the scale at which the API is being used. The number of parameters for gpt-3.5-turbo isn't public but is guesstimated to be around 150B. As of writing, no open-source model is that big. Google's T5 is 11B parameters and Facebook's largest LLaMA model is 65B parameters. People discussed on this GitHub thread what configuration they needed to make LLaMA models work, and it seemed like getting the 30B parameter model to work is hard enough. The most successful one seemed to be randaller, who was able to get the 30B parameter model to work on 128 GB of RAM, which takes a few seconds just to generate one token. |

1.2.3、关于LLM的成本和延迟分析的不可能性The impossibility of cost + latency analysis for LLMs

| The LLM application world is moving so fast that any cost + latency analysis is bound to go outdated quickly. Matt Ross, a senior manager of applied research at Scribd, told me that the estimated API cost for his use cases has gone down two orders of magnitude over the last year. Latency has significantly decreased as well. Similarly, many teams have told me they feel like they have to redo the feasibility estimation and buy (using paid APIs) vs. build (using open source models) decision every week. | LLM应用领域发展迅速,任何成本和延迟分析都注定很快就会过时。Scribd的应用研究高级经理Matt Ross告诉我,在过去一年中,他所使用的用例的估计API成本已经下降了两个数量级。延迟也显著降低。同样,许多团队告诉我,他们觉得自己每周都必须重新评估可行性并在购买(使用付费API)与构建(使用开源模型)之间做出决策。 |

1.3、提示 vs. 微调 vs. 替代方案Prompting vs. finetuning vs. Alternatives

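Prompt engineering is quick to start with but often falls short of finetuned performance; finetuning needs more data and costs more up front. Prompt tuning and distillation (training a small model to imitate a large one) sit in between: the former requires being able to feed prompt embeddings into the model, which currently only open-source LLMs allow, and the latter requires a set of examples generated by the larger model. Prompting vs. finetuning is a trade-off to be made case by case.

>> Prompting vs. finetuning: prompting is easier to start with when you have few examples, but the number of examples is capped by the maximum input length; with more examples, finetuning tends to perform better, has no cap on the number of examples, and can lower the per-prediction cost by shortening the prompt.

>> Alternatives to prompt engineering:

Prompt tuning: start from a prompt and programmatically tune its embedding instead of its text;

Distillation: generate examples with a large model, then finetune a small model on them;

The three main factors when comparing prompting and finetuning: data availability, model performance, and cost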

| >> Prompting: for each sample, explicitly tell your model how it should respond. >> Finetuning: train a model on how to respond, so you don’t have to specify that in your prompt. | >>提示:对于每个样本,明确告诉模型如何回答。 >>微调:训练模型如何回答,这样您就不需要在提示中指定回答内容。 |

| There are 3 main factors when considering prompting vs. finetuning: data availability, performance, and cost. If you have only a few examples, prompting is quick and easy to get started. There’s a limit to how many examples you can include in your prompt due to the maximum input token length. | 在考虑提示和微调时,有三个主要因素需要考虑:数据可用性、性能和成本。 如果您只有很少的示例,提示是快速且容易上手的方法。由于最大输入标记长度的限制,您在提示中可以包含的示例数量有限。 |

提示值多少个数据点?——一个提示值大约等于100个示例

| The number of examples you need to finetune a model to your task, of course, depends on the task and the model. In my experience, however, you can expect a noticeable change in your model performance if you finetune on 100s examples. However, the result might not be much better than prompting. In How Many Data Points is a Prompt Worth? (2021), Scao and Rush found that a prompt is worth approximately 100 examples (caveat: variance across tasks and models is high – see image below). The general trend is that as you increase the number of examples, finetuning will give better model performance than prompting. There’s no limit to how many examples you can use to finetune a model. | 对于微调模型到您的任务,您需要的示例数量当然取决于任务和模型。然而,根据我的经验,如果您在数百个示例上进行微调,您可以期望模型的性能有明显改变。然而,结果可能并不比提示好多少。 在《提示值多少个数据点?》(2021)一文中,Scao和Rush发现一个提示值大约等于100个示例(注意:不同任务和模型之间存在较大的差异-见下图)。一般趋势是,随着示例数量的增加,微调将比提示提供更好的模型性能。您可以使用任意数量的示例对模型进行微调。 |

微调的两个好处:更好性能+降低预测成本

| The benefit of finetuning is two folds: >> You can get better model performance: can use more examples, examples becoming part of the model’s internal knowledge. >> You can reduce the cost of prediction. The more instruction you can bake into your model, the less instruction you have to put into your prompt. Say, if you can reduce 1k tokens in your prompt for each prediction, for 1M predictions on gpt-3.5-turbo, you’d save $2000. | 微调的好处有两个: 您可以获得更好的模型性能:可以使用更多的示例,示例成为模型内部知识的一部分。 您可以降低预测成本。您可以将更多的指令融入到模型中,从而减少在提示中输入的指令。例如,如果您可以在每次预测中减少1,000个标记的提示,对于在gpt-3.5-turbo上进行的100万次预测,您将节省2,000美元。 |

1.3.1、提示微调Prompt tuning:需要将提示的嵌入输入到您的LLM模型中、T5证明了提示微调比提示工程表现更好

| A cool idea that is between prompting and finetuning is prompt tuning, introduced by Lester et al. in 2021. Starting with a prompt, instead of changing this prompt, you programmatically change the embedding of this prompt. For prompt tuning to work, you need to be able to input prompts' embeddings into your LLM and generate tokens from these embeddings, which currently can only be done with open-source LLMs and not in OpenAI API. On T5, prompt tuning appears to perform much better than prompt engineering and can catch up with model tuning (see image below). |

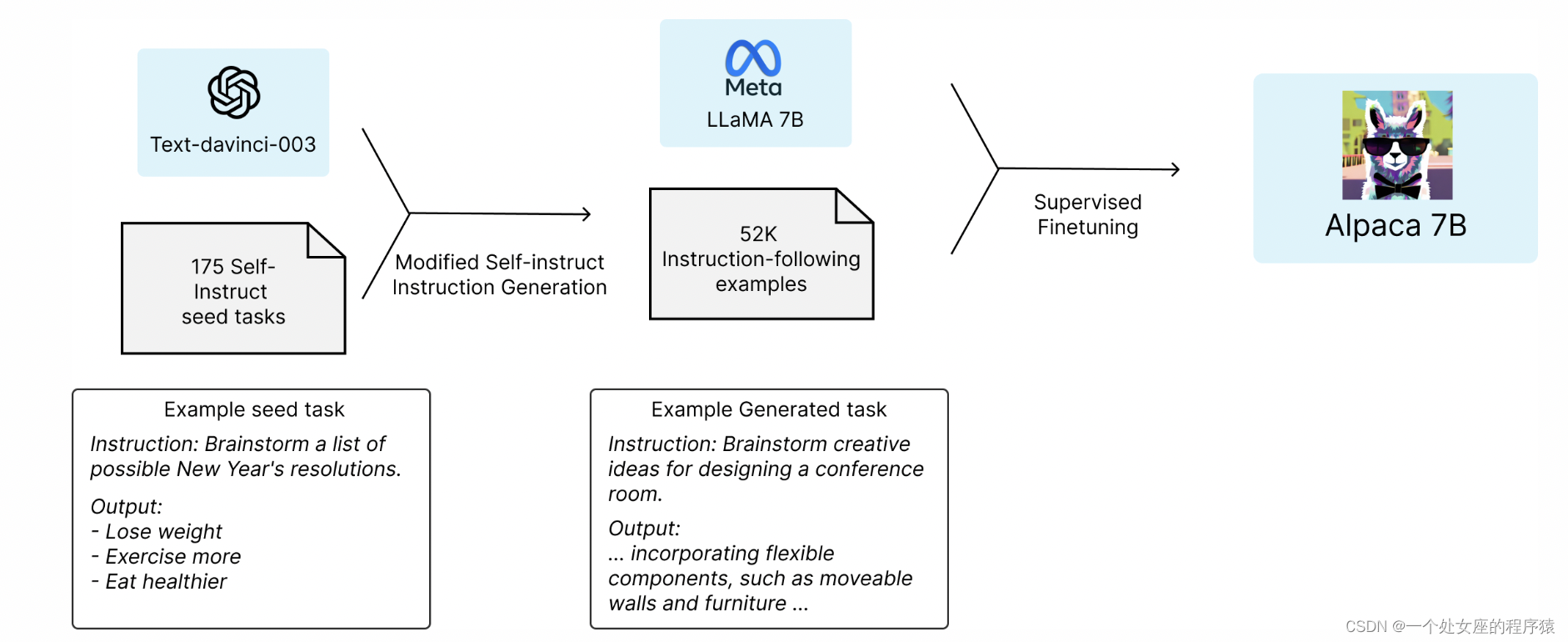

1.3.2、蒸馏进行微调Finetuning with distillation——Alpaca(LLaMA7B+蒸馏技术):基于52000个指令利用GPT-3生成示例数据+训练LLaMA-7B→全部成本不到600美元

| In March 2023, a group of Stanford students released a promising idea: finetune a smaller open-source language model (LLaMA-7B, the 7 billion parameter version of LLaMA) on examples generated by a larger language model (text-davinci-003 – 175 billion parameters). This technique of training a small model to imitate the behavior of a larger model is called distillation. The resulting finetuned model behaves similarly to text-davinci-003, while being a lot smaller and cheaper to run. For finetuning, they used 52k instructions, which they inputted into text-davinci-003 to obtain outputs, which are then used to finetune LLaMa-7B. This costs under $500 to generate. The training process for finetuning costs under $100. See Stanford Alpaca: An Instruction-following LLaMA Model (Taori et al., 2023). | 在2023年3月,一群斯坦福大学的学生发布了一个有前途的想法:在较大的语言模型(text-davinci-003 - 1750亿个参数)生成的示例上微调一个较小的开源语言模型(LLaMA-7B,LLaMA的70亿个参数版本)。这种通过训练一个小模型来模仿一个大模型行为的技术称为蒸馏。经过微调后,结果模型的行为与text-davinci-003类似,但规模更小且运行成本更低。 对于微调,他们使用了52,000个指令,将其输入到text-davinci-003中以获取输出,然后用于微调LLaMa-7B。这个过程的成本不到500美元。微调的训练成本不到100美元。请参阅斯坦福的Alpaca:一个指令跟随的LLaMA模型(Taori等,2023)。 |

| The appeal of this approach is obvious. After 3 weeks, their GitHub repo got almost 20K stars!! By comparison, HuggingFace’s transformers repo took over a year to achieve a similar number of stars, and TensorFlow repo took 4 months. | 这种方法的吸引力是显而易见的。3周后,他们的GitHub仓库获得了近2万个stars!相比之下,HuggingFace的transformers仓库用了一年多才获得类似数量的星标,而TensorFlow仓库用了4个月。 |

1.4、嵌入向量 + 向量数据库Embeddings + vector databases

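This section looks at the potential of embeddings and vector databases. Embeddings generated by LLMs can power ML applications such as search and recommendation at relatively low cost, and a vector database holds those embeddings for low-latency retrieval.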

(1)、探讨了基于LLM生成嵌入向量+构建机器学习应用【搜索和推荐】的潜力

| One direction that I find very promising is to use LLMs to generate embeddings and then build your ML applications on top of these embeddings, e.g. for search and recsys. As of April 2023, the cost for embeddings using the smaller model text-embedding-ada-002 is $0.0004/1k tokens. If each item averages 250 tokens (187 words), this pricing means $1 for every 10k items or $100 for 1 million items. While this still costs more than some existing open-source models, this is still very affordable, given that: >> You usually only have to generate the embedding for each item once. >> With OpenAI API, it’s easy to generate embeddings for queries and new items in real-time. | 我认为非常有前途的一个方向是使用LLM生成嵌入向量,然后在这些嵌入向量的基础上构建机器学习应用,例如搜索和推荐系统。截至2023年4月,使用较小模型text-embedding-ada-002生成嵌入向量的成本为每1,000个标记0.0004美元。如果每个项目平均包含250个标记(187个单词),这个定价意味着每10,000个项目需要1美元,或者1百万个项目需要100美元。 虽然这仍然比一些现有的开源模型要贵一些,但考虑到以下几点,这仍然非常实惠: >> 您通常只需要为每个项目生成一次嵌入向量。 >> 使用OpenAI API,可以轻松实时生成查询和新项目的嵌入向量。 |

| To learn more about using GPT embeddings, check out SGPT (Niklas Muennighoff, 2022) or this analysis on the performance and cost GPT-3 embeddings (Nils Reimers, 2022). Some of the numbers in Nils’ post are already outdated (the field is moving so fast!!), but the method is great! | 要了解更多关于使用GPT嵌入向量的信息,请查看SGPT(Niklas Muennighoff,2022)或这篇关于GPT-3嵌入向量性能和成本的分析(Nils Reimers,2022)。尼尔斯的帖子中的一些数字已经过时了(这个领域发展得太快了!),但方法很棒! |

(2)、实时应用场景:嵌入向量→向量数据库→低延迟搜索

| The main cost of embedding models for real-time use cases is loading these embeddings into a vector database for low-latency retrieval. However, you’ll have this cost regardless of which embeddings you use. It’s exciting to see so many vector databases blossoming – the new ones such as Pinecone, Qdrant, Weaviate, Chroma as well as the incumbents Faiss, Redis, Milvus, ScaNN. If 2021 was the year of graph databases, 2023 is the year of vector databases. | 实时应用场景的嵌入模型的主要成本是将这些嵌入向量加载到向量数据库中以进行低延迟检索。然而,无论使用哪种嵌入向量,这个成本都是必须的。令人兴奋的是看到这么多向量数据库涌现出来-新的数据库如Pinecone、Qdrant、Weaviate、Chroma,以及传统的Faiss、Redis、Milvus、ScaNN。 如果说2021年是图形数据库的一年,那么2023年就是向量数据库的一年。 |
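To make the retrieval step concrete, here is a brute-force nearest-neighbor search over a handful of toy vectors. It is a stand-in for what a vector database does, not how any of the named products work internally: real systems use approximate indexes to stay fast at scale. The two-dimensional vectors and item ids are made up for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query, index, k=2):
    """Return the ids of the k items whose embeddings are most similar to the query."""
    scored = [(cosine_similarity(query, vector), item_id)
              for item_id, vector in index.items()]
    return [item_id for _, item_id in sorted(scored, reverse=True)[:k]]
```

This scan is linear in the number of items, which is exactly the cost that dedicated vector indexes are designed to avoid for real-time use cases.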

1.5、向前和向后兼容性Backward and forward compatibility

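Backward and forward compatibility is a challenge for LLM applications: a newer model may require prompts to be rewritten and retested, although cheap prompt experiments also make this a chance to improve them. When an application uses complex prompt logic, the lack of centralized knowledge makes prompts hard to understand and update.

In short, after a model update, prompts have to be retested and possibly rewritten to keep working, and scattered prompt management plus complex logic make that hard.

Backward and forward compatibility matters for production LLMs: a model's internal knowledge may conflict with newer information, so models need periodic retraining and existing prompts may stop working as well

Ideally, existing prompts would keep working unchanged after a model update. In practice, many prompts work less well with a newer model, and the model's internal knowledge can conflict with newer information supplied in context. Prompts may then have to be rewritten, which is especially tricky for complex prompts.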

| Hacker News discussion: Who is working on forward and backward compatibility for LLMs? Foundational models can work out of the box for many tasks without us having to retrain them as much. However, they do need to be retrained or finetuned from time to time as they go outdated. According to Lilian Weng’s Prompt Engineering post: One observation with SituatedQA dataset for questions grounded in different dates is that despite LM (pretraining cutoff is year 2020) has access to latest information via Google Search, its performance on post-2020 questions are still a lot worse than on pre-2020 questions. This suggests the existence of some discrepencies or conflicting parametric between contextual information and model internal knowledge. | Hacker News讨论:谁在致力于LLM的向前和向后兼容性? 基础模型可以在许多任务上立即使用,而无需重新训练。然而,随着时间的推移,它们需要定期重新训练或微调以跟上最新的情况。根据Lilian Weng的Prompt Engineering帖子: 在针对不同日期的问题的SituatedQA数据集中,尽管语言模型(预训练截止到2020年)通过Google搜索可以获取最新信息,但其在2020年后的问题上的性能仍然远远不如在2020年前的问题上。这表明上下文信息与模型内部知识之间存在某种差异或冲突。 |

对提示进行单元测试的重要性:新模型不一定能比旧模型更好、提示实验快速且低廉

| In traditional software, when software gets an update, ideally it should still work with the code written for its older version. However, with prompt engineering, if you want to use a newer model, there’s no way to guarantee that all your prompts will still work as intended with the newer model, so you’ll likely have to rewrite your prompts again. If you expect the models you use to change at all, it’s important to unit-test all your prompts using evaluation examples. One argument I often hear is that prompt rewriting shouldn’t be a problem because: >> Newer models should only work better than existing models. I’m not convinced about this. Newer models might, overall, be better, but there will be use cases for which newer models are worse. >> Experiments with prompts are fast and cheap, as we discussed in the section Cost. While I agree with this argument, a big challenge I see in MLOps today is that there’s a lack of centralized knowledge for model logic, feature logic, prompts, etc. | 在传统软件中,当软件升级时,理想情况下应该仍然能够与为其旧版本编写的代码一起工作。然而,对于prompt engineering来说,如果要使用更新的模型,就无法保证所有提示都能按照预期与新模型一起工作,因此可能需要重新编写提示。如果您预计使用的模型会发生任何变化,重要的是使用评估示例对所有提示进行单元测试。我经常听到的一个论点是,提示重写不应该是一个问题,因为: >>更新的模型应该只比现有模型更好。对此我并不完全同意。更新的模型可能在整体上更好,但也会有一些使用案例,新模型的性能较差。 >>使用提示进行实验快速且成本低廉,就像我们在成本部分讨论的那样。虽然我同意这个观点,但我在当前的MLOps中看到一个重大挑战是缺乏关于模型逻辑、特征逻辑、提示等的集中化知识。 |
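Unit-testing prompts with evaluation examples can be as simple as replaying a fixed set of cases against each model version and listing the mismatches. In this sketch the template, the cases, and the two stub "models" are hypothetical; a real `llm` callable would wrap an API client.

```python
def run_prompt_tests(llm, prompt_template, cases):
    """Run every (inputs, expected) case through the model and collect failures."""
    failures = []
    for inputs, expected in cases:
        output = llm(prompt_template.format(**inputs)).strip()
        if output != expected:
            failures.append((inputs, expected, output))
    return failures


# Hypothetical prompt and evaluation examples.
TEMPLATE = ("Classify the sentiment of this review as positive or negative.\n"
            "Review: {review}\nSentiment:")
CASES = [({"review": "Loved it"}, "positive"), ({"review": "Terrible"}, "negative")]


def old_model(prompt):  # stub: answers in the format the prompt was tuned for
    return "positive" if "Loved" in prompt else "negative"


def new_model(prompt):  # stub: a "newer" model that formats one answer differently
    return "Positive." if "Loved" in prompt else "negative"
```

Running `run_prompt_tests` with `old_model` returns no failures, while `new_model` fails the first case even though its answer is arguably right, which is the kind of regression these tests are meant to surface before a model switch.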

挑战——缺少对prompt逻辑的管理:prompt被录入后,设计者离职,后人修改prompt困难

| An application might contain multiple prompts with complex logic (discussed in Part 2. Task composability). If the person who wrote the original prompt leaves, it might be hard to understand the intention behind the original prompt well enough to update it. This can become similar to the situation when someone leaves behind a 700-line SQL query that nobody dares to touch. |

挑战——提示模式对变化不具有稳健性

| Another challenge is that prompt patterns are not robust to changes. For example, many of the published prompts I’ve seen start with “I want you to act as XYZ”. If OpenAI one day decides to print something like: “I’m an AI assistant and I can’t act like XYZ”, all these prompts will need to be updated. | 另一个挑战是提示模式对变化不具有稳健性。例如,我看过的许多已发布的提示以“我想让你扮演XYZ”的形式开头。如果OpenAI某天决定打印类似于:“我是一个AI助手,我不能扮演XYZ”的内容,那么所有这些提示都需要更新。 |

Part 2. 任务组合性Task composability

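This part covers task composability, the notions of agents and tools, the different types of control flow, and how to test an agent.

>> Task composability: applications that consist of multiple tasks, with a concrete example of implementing a complex application as a sequence of tasks.

>> Agents, tools, and control flows: an agent is an application that can execute multiple tasks according to a given control flow. Tools and plugins are introduced with examples.

>> Control flows: sequential, parallel, if statement, and for loop, each illustrated with an example.

>> Control flow with LLM agents: in LLM applications, the condition of a control flow can itself be determined by prompting, for example when the agent chooses between several actions.

>> Testing an agent: why testing matters and the two major failure modes (one or more tasks fail, or every task succeeds but the overall solution is wrong). By analogy with software engineering, each component and the control flow should get unit tests and integration tests.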

2.1、由多个任务组成的应用程序Applications that consist of multiple tasks

自然语言数据库:通过执行一系列任务【自然语言转为SQL查询→执行SQL数据库→将SQL结果展示给用户】来实现一个复杂的应用程序

| The example controversy scorer above consists of one single task: given an input, output a controversy score. Most applications, however, are more complex. Consider the “talk-to-your-data” use case where we want to connect to a database and query this database in natural language. Imagine a credit card transaction table. You want to ask things like: "How many unique merchants are there in Phoenix and what are their names?" and your database will return: "There are 9 unique merchants in Phoenix and they are …". | 上面的示例争议评分器由一个单一任务组成:给定一个输入,输出一个争议评分。然而,大多数应用程序都更复杂。 考虑“与您的数据对话”的用例,我们希望以自然语言连接到数据库并查询该数据库。 想象一个信用卡交易表。您想询问诸如:“凤凰城有多少个唯一商户,他们的名字是什么?”然后数据库会返回:“凤凰城有9个唯一商户,他们是...”。 |

| One way to do this is to write a program that performs the following sequence of tasks: >> Task 1: convert natural language input from user to SQL query [LLM] >> Task 2: execute SQL query in the SQL database [SQL executor] >> Task 3: convert the SQL result into a natural language response to show user [LLM] | 实现这一目标的一种方法是编写一个执行以下任务序列的程序: 任务1:将用户的自然语言输入转换为SQL查询 [LLM] 任务2:在SQL数据库中执行SQL查询 [SQL执行器] 任务3:将SQL结果转换为自然语言响应以展示给用户 [LLM] |
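The three-task sequence can be sketched end to end with an in-memory SQLite database. Tasks 1 and 3 would be LLM calls in a real application; here they are hard-coded stubs so the example runs offline, and the table schema and rows are invented for illustration.

```python
import sqlite3


def text_to_sql(question):
    """Task 1 [LLM]: stub that returns the SQL an LLM might produce for the demo question."""
    return ("SELECT DISTINCT merchant FROM transactions "
            "WHERE city = 'Phoenix' ORDER BY merchant")


def run_sql(conn, query):
    """Task 2 [SQL executor]: run the query and return the first column of each row."""
    return [row[0] for row in conn.execute(query)]


def rows_to_answer(rows):
    """Task 3 [LLM]: stub that phrases the SQL result as a sentence."""
    return (f"There are {len(rows)} unique merchants in Phoenix "
            f"and they are {', '.join(rows)}.")


def answer(conn, question):
    """Sequential control flow: each task consumes the previous task's output."""
    return rows_to_answer(run_sql(conn, text_to_sql(question)))


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE transactions (merchant TEXT, city TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?, ?)",
    [("Zed Books", "Phoenix", 12.5), ("Acme Cafe", "Phoenix", 4.0),
     ("Acme Cafe", "Phoenix", 6.0), ("Pier 9", "Seattle", 20.0)],
)
print(answer(conn, "How many unique merchants are there in Phoenix?"))
```

Keeping each task behind its own function is what makes it possible to test them separately, and executing LLM-generated SQL against a real database would additionally call for a read-only connection.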

2.2、代理、工具和控制流Agents, tools, and control flows

(0)、Agent【根据控制流执行多个任务的应用程序,比如SQL执行器】不同于RL中的agent【智能体】策略

| I did a small survey among people in my network and there doesn’t seem to be any consensus on terminologies, yet. The word agent is being thrown around a lot to refer to an application that can execute multiple tasks according to a given control flow (see Control flows section). A task can leverage one or more tools. In the example above, SQL executor is an example of a tool. Note: some people in my network resist using the term agent in this context as it is already overused in other contexts (e.g. agent to refer to a policy in reinforcement learning). | 我在我的网络中进行了一项小调查,似乎还没有关于术语的共识。 人们经常使用代理一词来指称能够根据给定的控制流执行多个任务的应用程序(参见控制流部分)。一个任务可以利用一个或多个工具。在上面的例子中,SQL执行器就是一个工具的示例。 注意:在我的网络中,有些人抵制在这个上下文中使用“代理”一词,因为它已经在其他上下文中过度使用(例如,代理指的是强化学习中的策略)。 |

2.2.1、工具 vs. 插件—Tools vs. Plugins

| Other than SQL executor, here are more examples of tools: >> search (e.g. by using Google Search API or Bing API) >> web browser (e.g. given a URL, fetch its content) >> bash executor >> calculator | 除了SQL执行器之外,以下是更多工具的例子: >> 搜索(例如使用Google Search API或Bing API进行搜索) >> 网页浏览器(例如,给定一个URL,获取其内容) >> bash执行器 >> 计算器 |

| Tools and plugins are basically the same things. You can think of plugins as tools contributed to the OpenAI plugin store. As of writing, OpenAI plugins aren’t open to the public yet, but anyone can create and use tools. | 工具和插件基本上是同样的东西。您可以将插件视为贡献给OpenAI插件商店的工具。截至目前,OpenAI插件尚未向公众开放,但任何人都可以创建和使用工具。 |

2.2.2、Control flows: sequential, parallel, if, for loop

| In the example above, sequential is an example of a control flow in which one task is executed after another. There are other types of control flows such as parallel, if statement, for loop. >> Sequential: executing task B after task A completes, likely because task B depends on Task A. For example, the SQL query can only be executed after it’s been translated from the user input. >> Parallel: executing tasks A and B at the same time. >> If statement: executing task A or task B depending on the input. >> For loop: repeat executing task A until a certain condition is met. For example, imagine you use browser action to get the content of a webpage and keep on using browser action to get the content of links found in that webpage until the agent feels like it’s got sufficient information to answer the original question. | 在上面的例子中,顺序是一种控制流,即一个任务在另一个任务之后执行。还有其他类型的控制流,例如并行、if语句、for循环。 >> 顺序:在任务A完成后执行任务B,通常是因为任务B依赖于任务A。例如,在将SQL查询从用户输入中转换后,才能执行该SQL查询。 >> 并行:同时执行任务A和任务B。 >> if语句:根据输入执行任务A或任务B。 >> for循环:重复执行任务A,直到满足某个条件。 例如,想象一下使用浏览器操作获取网页内容,并继续使用浏览器操作获取该网页中找到的链接的内容,直到代理感觉到已经获得足够的信息来回答原始问题。 |
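
The four control flows listed above can be sketched in plain Python. The helper names (`sequential`, `parallel`, `branch`, `loop_until`) are made up for this illustration, and each task is assumed to be an ordinary function; in a real application a task would wrap an LLM call or a tool.

```python
from concurrent.futures import ThreadPoolExecutor

def sequential(task_a, task_b, x):
    """Sequential: task B runs on the output of task A."""
    return task_b(task_a(x))

def parallel(task_a, task_b, x):
    """Parallel: tasks A and B run at the same time on the same input."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        future_a = pool.submit(task_a, x)
        future_b = pool.submit(task_b, x)
        return future_a.result(), future_b.result()

def branch(condition, task_a, task_b, x):
    """If statement: run task A or task B depending on the input."""
    return task_a(x) if condition(x) else task_b(x)

def loop_until(task_a, done, state, max_steps=10):
    """For loop: repeat task A until `done(state)` holds or the step cap is hit."""
    for _ in range(max_steps):
        if done(state):
            break
        state = task_a(state)
    return state
```

The `max_steps` cap is a design choice of this sketch: when the stopping condition is judged by a model ("the agent feels like it has enough information"), a hard upper bound keeps the loop from running indefinitely.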

| Note: while parallel can definitely be useful, I haven’t seen a lot of applications using it. | 注意:虽然并行肯定有用,但我没有看到很多应用程序在使用它。 |

2.2.3、Control flow with LLM agents [the LLM can decide the condition of the control flow]

| In traditional software engineering, conditions for control flows are exact. With LLM applications (also known as agents), conditions might also be determined by prompting. For example, if you want your agent to choose between three actions (search, SQL executor, and Chat), you might explain in the prompt, very approximately, how it should choose one of these actions. In other words, you can use LLMs to decide the condition of the control flow! | 在传统的软件工程中,控制流的条件是确切的。对于LLM应用程序(也称为代理),条件也可能由提示确定。 例如,如果您希望代理在搜索、SQL执行器和对话这三个动作之间进行选择,您可以在提示中(非常粗略地)说明它应该如何选择其中一个动作。换句话说,您可以使用LLM来决定控制流的条件! |
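
As a rough sketch of letting the model decide the branch: the prompt wording below is invented for illustration and is not the author's prompt, and `llm` is again a hypothetical callable that maps a prompt string to a completion string.

```python
ACTIONS = ("search", "sql_executor", "chat")

# Illustrative router prompt (an assumption, not taken from the original post).
ROUTER_PROMPT = """You can take exactly one of these actions:
- search: look something up on the web
- sql_executor: query the transactions database
- chat: reply to the user directly
Reply with the action name only.

User input: {user_input}
Action:"""

def choose_action(user_input, llm, default="chat"):
    """Ask the model which action to take; fall back to `default` on anything unexpected."""
    raw = llm(ROUTER_PROMPT.format(user_input=user_input))
    choice = raw.strip().lower()
    return choice if choice in ACTIONS else default
```

Because the condition is now fuzzy rather than exact, the sketch validates the model's reply against the known action names instead of trusting it blindly.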

2.2.4、Testing an agent [unit tests]

| For agents to be reliable, we’d need to be able to build and test each task separately before combining them. There are two major types of failure modes: (1)、One or more tasks fail. Potential causes: >> Control flow is wrong: a non-optimal action is chosen >> One or more tasks produce incorrect results (2)、All tasks produce correct results but the overall solution is incorrect. Press et al. (2022) call this the “compositionality gap”: the fraction of compositional questions that the model answers incorrectly out of all the compositional questions for which the model answers the sub-questions correctly. | 为了使代理可靠,我们需要能够在组合它们之前单独构建和测试每个任务。存在两种主要的故障模式: (1)、一个或多个任务失败。可能的原因: >> 控制流错误:选择了非最优的操作 >> 一个或多个任务产生了不正确的结果 (2)、所有任务都产生了正确的结果,但整体解决方案是错误的。Press等人(2022)将此称为“组合性差距”:在模型能够正确回答子问题的所有组合性问题中,模型最终回答错误的问题所占的比例。 |
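
The gap defined by Press et al. can be computed directly from per-question results. The sketch below assumes each result is recorded as a pair of booleans (all sub-questions answered correctly, final answer correct); that record format is an assumption made for this example.

```python
def compositionality_gap(results):
    """Fraction of questions answered incorrectly among those whose sub-questions were all correct.

    `results` is an iterable of (sub_questions_correct, final_answer_correct) booleans.
    Returns None when no question had all of its sub-questions answered correctly.
    """
    eligible = [final_ok for subs_ok, final_ok in results if subs_ok]
    if not eligible:
        return None
    return sum(not ok for ok in eligible) / len(eligible)
```

Questions whose sub-questions were already wrong are excluded from the denominator, which is what separates this second failure mode from the first.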

| Like with software engineering, you can and should unit test each component as well as the control flow. For each component, you can define pairs of (input, expected output) as evaluation examples, which can be used to evaluate your application every time you update your prompts or control flows. You can also do integration tests for the entire application. | 与软件工程类似,您可以和应该对每个组件以及控制流进行单元测试。对于每个组件,您可以定义(输入,预期输出)对作为评估示例,每次更新提示或控制流时都可以用来评估您的应用程序。您还可以对整个应用程序进行集成测试。 |
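
A minimal sketch of evaluating one component against (input, expected output) pairs follows. The `evaluate` helper and the exact-match comparison are assumptions of this example; for components with free-form output, a looser comparison (for instance, comparing SQL query results rather than query text) may fit better.

```python
def evaluate(component, examples):
    """Run `component` on each (input, expected) pair.

    Returns (accuracy, failures), where failures lists (input, expected, got).
    """
    if not examples:
        raise ValueError("need at least one evaluation example")
    failures = []
    for inp, expected in examples:
        got = component(inp)
        if got != expected:
            failures.append((inp, expected, got))
    accuracy = 1 - len(failures) / len(examples)
    return accuracy, failures
```

Rerunning the same example set after every prompt or control-flow change gives a simple regression check, and the returned failures show which inputs regressed.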

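Part 3. Promising use cases

LLMs have broad application prospects across many areas, including personal assistants, chatbots, programming and gaming, learning aids, talk-to-your-data, search and recommendation, sales, and SEO.

>> AI assistant: the most popular consumer use case; the ultimate goal is an assistant that can help users with everything.

>> Chatbot: an application that offers companionship and conversation, and can talk like celebrities, game characters, businesspeople, and so on.

>> Programming and gaming: LLMs are very good at writing and debugging code; applications include creating web apps, finding security threats, creating games, and generating game characters.

>> Learning: LLMs can help students learn faster; applications include summarizing books, automatically generating quizzes, grading essays, walking through math solutions, and acting as a debate partner.

>> Talk-to-your-data: the most popular enterprise application so far; it lets users query internal data and policies in natural language or in Q&A fashion.

>> Search and recommendation: an LLM can turn a user query into a list of product names, and relevant products are then retrieved for each name.

>> Sales: use LLMs to synthesize information about a company and understand what it needs.

>> SEO: LLMs may reshape search engine optimization; companies will have to deal with LLM-generated content, and people may rely more on brands they trust.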

| The Internet has been flooded with cool demos of applications built with LLMs. Here are some of the most common and promising applications that I’ve seen. I’m sure that I’m missing a ton. For more ideas, check out the projects from two hackathons I’ve seen: >> GPT-4 Hackathon Code Results [Mar 25, 2023] >> Langchain / Gen Mo Hackathon [Feb 25, 2023] | 互联网上充斥着使用LLM构建的酷炫应用程序的演示。以下是我看到的一些最常见和有前景的应用程序。我肯定还漏掉了很多。 如果需要更多创意,可以查看我看过的两个黑客马拉松的项目: >> GPT-4 Hackathon Code Results [2023年3月25日] >> Langchain / Gen Mo Hackathon [2023年2月25日] |

3.1、AI assistant: an assistant that helps with everything

| This is hands down the most popular consumer use case. There are AI assistants built for different tasks for different groups of users – AI assistants for scheduling, making notes, pair programming, responding to emails, helping with parents, making reservations, booking flights, shopping, etc. – but, of course, the ultimate goal is an assistant that can assist you in everything. This is also the holy grail that all big companies have been working towards for years: Google with Google Assistant and Bard, Facebook with M and Blender, OpenAI (and by extension, Microsoft) with ChatGPT. Quora, which has a very high risk of being replaced by AIs, released their own app Poe that lets you chat with multiple LLMs. I’m surprised Apple and Amazon haven’t joined the race yet. | 这无疑是最受欢迎的消费者用例。为不同用户群体构建了针对不同任务的AI助手,如日程安排、做笔记、配对编程、回复电子邮件、帮助父母、预订餐厅、订购航班、购物等等。当然,最终的目标是一个能够在各个方面协助您的助手。 这也是所有大公司多年来一直努力追求的圣杯:谷歌的谷歌助手和Bard,Facebook的M和Blender,OpenAI(以及由此延伸的Microsoft)的ChatGPT。Quora被AI取代的风险很高,他们发布了自己的应用程序Poe,可以让您与多个LLM进行聊天。我很惊讶苹果和亚马逊尚未加入这场竞争。 |

3.2、Chatbot: a companion rather than a task executor

| Chatbots are similar to AI assistants in terms of APIs. AI assistants’ goal is to fulfill tasks given by users, whereas chatbots’ goal is to be more of a companion. For example, you can have chatbots that talk like celebrities, game/movie/book characters, businesspeople, authors, etc. Michelle Huang used her childhood journal entries as part of the prompt to GPT-3 to talk to the inner child. The most interesting company in the consumer-chatbot space is probably Character.ai. It’s a platform for people to create and share chatbots. The most popular types of chatbots on the platform, as of writing, are anime and game characters, but you can also talk to a psychologist, a pair programming partner, or a language practice partner. You can talk, act, draw pictures, play text-based games (like AI Dungeon), and even enable voices for characters. I tried a few popular chatbots – none of them seem to be able to hold a conversation yet, but we’re just at the beginning. Things can get even more interesting if there’s a revenue-sharing model so that chatbot creators can get paid. | 在API方面,聊天机器人与AI助手类似。AI助手的目标是完成用户给定的任务,而聊天机器人的目标则更像是成为一个伴侣。例如,您可以拥有像名人、游戏/电影/书籍角色、商界人士、作家等一样交谈的聊天机器人。 Michelle Huang利用她的童年日记作为GPT-3提示的一部分,与她的内心孩子进行交流。 在消费级聊天机器人领域,最有趣的公司可能是Character.ai。这是一个供人们创建和分享聊天机器人的平台。截至撰写本文时,平台上最受欢迎的聊天机器人类型是动漫和游戏角色,但您也可以与心理学家、结对编程伙伴或语言练习伙伴进行交谈。您可以进行对话、表演、绘画、玩基于文本的游戏(如AI Dungeon),甚至为角色启用语音。我尝试了一些受欢迎的聊天机器人,似乎没有一个能够进行持续的对话,但我们只是刚刚开始。如果有一种收入分享模式,聊天机器人的创建者可以获得报酬,事情可能变得更加有趣。 |

3.3、Programming [writing and debugging code] and gaming

| This is another popular category of LLM applications, as LLMs turn out to be incredibly good at writing and debugging code. GitHub Copilot is a pioneer (whose VSCode extension has had 5 million downloads as of writing). There have been pretty cool demos of using LLMs to write code: (1)、Create web apps from natural languages (2)、Find security threats: Socket AI examines npm and PyPI packages in your codebase for security threats. When a potential issue is detected, they use ChatGPT to summarize findings. (3)、Gaming >> Create games: e.g. Wyatt Cheng has an awesome video showing how he used ChatGPT to clone Flappy Bird. >> Generate game characters. >> Let you have more realistic conversations with game characters: check out this awesome demo by Convai! | 这是另一类LLM应用程序的热门领域,因为LLM在编写和调试代码方面非常出色。GitHub Copilot是一家先驱(其VSCode扩展截至撰写本文已经下载了500万次)。有一些非常酷的演示展示了使用LLM编写代码的应用场景: (1)、从自然语言中创建Web应用程序 (2)、查找安全威胁:Socket AI检查您代码库中的npm和PyPI软件包是否存在安全威胁。当检测到潜在问题时,他们使用ChatGPT来总结研究结果。 (3)、游戏 >>创建游戏:例如,Wyatt Cheng展示了他如何使用ChatGPT克隆Flappy Bird的精彩视频。 >>生成游戏角色。 >>让您与游戏角色进行更真实的对话:看看Convai的这个令人惊叹的演示! |

3.4、Learning: helping students learn faster by summarizing books and checking answers

| Whenever ChatGPT is down, the OpenAI Discord is flooded with students complaining about not being able to complete their homework. Some responded by banning the use of ChatGPT in school altogether. Some have a much better idea: how to incorporate ChatGPT to help students learn even faster. All EdTech companies I know are going full-speed on ChatGPT exploration. | 每当ChatGPT不可用时,OpenAI的Discord上都会充斥着学生抱怨无法完成作业的情况。一些人的回应是完全禁止在学校使用ChatGPT。另一些人有一个更好的主意:如何将ChatGPT融入到帮助学生更快学习的过程中。我所知的所有教育技术公司都在全速进行ChatGPT的探索。 |

| Some use cases: >> Summarize books >> Automatically generate quizzes to make sure students understand a book or a lecture. Not only can ChatGPT generate questions, but it can also evaluate whether a student’s input answers are correct. I tried and ChatGPT seemed pretty good at generating quizzes for Designing Machine Learning Systems. Will publish the quizzes generated soon! >> Grade / give feedback on essays >> Walk through math solutions >> Be a debate partner: ChatGPT is really good at taking different sides of the same debate topic. With the rise of homeschooling, I expect to see a lot of applications of ChatGPT to help parents homeschool. | 一些应用案例包括: >> 总结书籍 >> 自动生成测验,以确保学生理解一本书或一堂讲座。ChatGPT不仅可以生成问题,还可以评估学生输入答案的正确性。 我尝试过,ChatGPT在为《设计机器学习系统》生成测验方面似乎表现不错。将很快发布生成的测验! >> 给作文评分/提供反馈意见 >> 讲解数学解题过程 >> 充当辩论伙伴:ChatGPT非常擅长在同一辩题上站在不同的立场。 随着家庭教育的兴起,我预计将会看到很多ChatGPT应用来帮助家长进行家庭教育。 |

3.5、Talk-to-your-data: querying data in natural language (legal contracts, resumes, financial data, or customer support)

| This is, in my observation, the most popular enterprise application (so far). Many, many startups are building tools to let enterprise users query their internal data and policies in natural languages or in the Q&A fashion. Some focus on verticals such as legal contracts, resumes, financial data, or customer support. Given all of a company’s documentation, policies, and FAQs, you can build a chatbot that can respond to your customer support requests. | 根据我的观察,这是目前最受欢迎的企业应用程序。许多初创公司正在构建工具,让企业用户能够用自然语言或问答方式查询其内部数据和政策。有些公司专注于特定领域,如法律合同、简历、财务数据或客户支持。通过使用公司的所有文档、政策和常见问题解答,您可以构建一个能够回应客户支持请求的聊天机器人。 |

(0)、How to talk to your vector database

| The main way to do this application usually involves these 4 steps: >> Organize your internal data into a database (SQL database, graph database, embedding/vector database, or just text database) >> Given an input in natural language, convert it into the query language of the internal database. For example, if it’s a SQL or graph database, this process can return a SQL query. If it’s an embedding database, it might be an ANN (approximate nearest neighbor) retrieval query. If it’s just purely text, this process can extract a search query. >> Execute the query in the database to obtain the query result. >> Translate this query result into natural language. | 通常,实现这种应用的主要步骤包括以下四个: >> 将内部数据组织成数据库(SQL数据库、图数据库、嵌入/向量数据库或纯文本数据库)。 >> 将自然语言输入转换为内部数据库的查询语言。例如,如果是SQL数据库或图数据库,这个过程可以返回一个SQL查询。如果是嵌入数据库,可能是一个ANN(近似最近邻)检索查询。如果只是纯文本,这个过程可以提取一个搜索查询。 >> 在数据库中执行查询以获得查询结果。 >> 将查询结果翻译成自然语言。 |
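
For the embedding-database case, the retrieval query in the second and third steps amounts to a nearest-neighbor lookup. The sketch below uses exact (brute-force) cosine similarity over toy two-dimensional vectors purely for illustration; a production system would use a real embedding model and an approximate index, and the document IDs here are invented.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def top_k(query_vec, index, k=2):
    """Return the IDs of the k documents most similar to the query.

    `index` is a list of (doc_id, vector) pairs. This is an exact search;
    ANN libraries trade a little accuracy for speed on large collections.
    """
    scored = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [doc_id for doc_id, _ in scored[:k]]
```

The retrieved documents would then be passed, together with the user's question, to the final step that phrases the answer in natural language.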

| While this makes for really cool demos, I’m not sure how defensible this category is. I’ve seen startups building applications to let users query on top of databases like Google Drive or Notion, and it feels like that’s a feature Google Drive or Notion can implement in a week. OpenAI has a pretty good tutorial on how to talk to your vector database. | 虽然这些应用程序的演示非常酷,但我不确定这个领域有多大的竞争优势。我见过一些初创公司构建的应用程序,让用户在Google Drive或Notion等数据库之上进行查询,感觉这是Google Drive或Notion可以在一周内实现的功能。 OpenAI在如何与向量数据库进行交互方面有一个非常好的教程。 |

LLMs:LLMs场景实战案例应用之基于自然语言+SQL查询+ANN(构建高效数据库+快速缩小搜索范围→解决高维+高效查找)查找的内部数据搜索和问答应用案例的简介、具体实现之详细攻略

https://yunyaniu.blog.csdn.net/article/details/131506163

3.5.1、Can LLMs do data analysis for me? Only for small data that fits in the input prompt, not (large-volume) production data

| I tried inputting some data into gpt-3.5-turbo, and it seems to be able to detect some patterns. However, this only works for small data that can fit into the input prompt. Most production data is larger than that. | 我尝试将一些数据输入gpt-3.5-turbo,它似乎能够检测出一些模式。然而,这仅适用于适合输入提示的小型数据。大多数生产数据都比这要大。 |

3.6、Search and recommendation: from keyword-based search to LLM-enhanced search [convert the user query into a list of product names + retrieve relevant products]

| Search and recommendation has always been the bread and butter of enterprise use cases. It’s going through a renaissance with LLMs. Search has been mostly keyword-based: you need a tent, you search for a tent. But what if you don’t know what you need yet? For example, if you’re going camping in the woods in Oregon in November, you might end up doing something like this: >> Search to read about other people’s experiences. >> Read those blog posts and manually extract a list of items you need. >> Search for each of these items, either on Google or other websites. | 搜索和推荐一直是企业使用案例的核心。随着LLM的出现,它们正在经历一次复兴。搜索一直以来都是基于关键字的:您需要一个帐篷,您搜索帐篷。但如果您还不知道自己需要什么呢?例如,如果您在11月份在俄勒冈州的森林中露营,您可能会做类似以下的事情 >> 搜索阅读其他人的经验。 >> 阅读这些博客文章并手动提取所需物品的列表。 >> 在Google或其他网站上搜索这些物品。 |

| If you search for “things you need for camping in oregon in november” directly on Amazon or any e-commerce website, you’ll get something like this: | 如果您直接在亚马逊或任何电子商务网站上搜索“11月份在俄勒冈州露营需要的物品”,您将得到类似以下的结果: |

| But what if searching for “things you need for camping in oregon in november” on Amazon actually returns you a list of things you need for your camping trip? It’s possible today with LLMs. For example, the application can be broken into the following steps: Task 1: convert the user query into a list of product names [LLM] Task 2: for each product name in the list, retrieve relevant products from your product catalog. | 但是,如果在亚马逊上搜索“11月份在俄勒冈州露营需要的物品”实际上返回了您露营旅行所需的物品清单呢? 通过使用LLM,这是可能的。例如,该应用程序可以分解为以下步骤: 任务1:将用户查询转换为产品名称列表[LLM] 任务2:对列表中的每个产品名称,从您的产品目录中检索相关产品。 |
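
The two tasks above might look roughly like this. It is a sketch under stated assumptions: `llm` is a hypothetical callable returning one product name per line, the catalog is a plain list of product titles, and the keyword match in `retrieve` stands in for whatever retrieval the real catalog supports.

```python
def query_to_product_names(query, llm):
    """Task 1 [LLM]: convert the user query into a list of product names."""
    raw = llm(f"List the products someone needs for: {query}\nOne product name per line.")
    names = [line.strip().lstrip("-* ").strip() for line in raw.splitlines()]
    return [name for name in names if name]

def retrieve(product_name, catalog, limit=3):
    """Task 2: return catalog titles that contain every word of the product name."""
    words = product_name.lower().split()
    hits = [title for title in catalog if all(w in title.lower() for w in words)]
    return hits[:limit]

def recommend(query, catalog, llm):
    """Run Task 1, then Task 2 once per product name."""
    return {name: retrieve(name, catalog) for name in query_to_product_names(query, llm)}
```

Here Task 2 is a for loop over the output of Task 1, so the application combines the sequential and for-loop control flows.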

| If this works, I wonder if we’ll have LLM SEO: techniques to get your products recommended by LLMs. | 如果这样可以实现,我想知道我们是否会有LLM搜索引擎优化(SEO):即如何使LLM推荐您的产品。 |

3.7、Sales: beyond writing sales emails, synthesizing information about a company

| The most obvious way to use LLMs for sales is to write sales emails. But nobody really wants more or better sales emails. However, several companies in my network are using LLMs to synthesize information about a company to see what they need. | 使用LLM进行销售最明显的方式是编写销售电子邮件。但是,没有人真正希望获得更多或更好的销售电子邮件。然而,我所在网络中的几家公司正在使用LLM综合整理有关某个公司的信息,以了解他们的需求。 |

3.8、SEO: from relying on search to relying on brands

| SEO is about to get very weird. Many companies today rely on creating a lot of content hoping to rank high on Google. However, given that LLMs are REALLY good at generating content, and I already know a few startups whose service is to create unlimited SEO-optimized content for any given keyword, search engines will be flooded. SEO might become even more of a cat-and-mouse game: search engines come up with new algorithms to detect AI-generated content, and companies get better at bypassing these algorithms. People might also rely less on search, and more on brands (e.g. trust only the content created by certain people or companies). And we haven’t even touched on SEO for LLMs yet: how to inject your content into LLMs’ responses!! | 搜索引擎优化即将变得非常奇特。今天许多公司依赖于大量创作内容,希望在Google上排名靠前。然而,考虑到LLM在生成内容方面非常出色,而且我已经知道一些初创公司的服务就是为任何给定的关键字创建无限数量的SEO优化内容,搜索引擎将被淹没。SEO可能变得更像是一场猫鼠游戏:搜索引擎会推出新的算法来检测AI生成的内容,而公司则变得更擅长绕过这些算法。人们可能会更少地依赖搜索,更多地依赖品牌(例如,只信任某些人或公司创建的内容)。 而我们甚至还没有谈到面向LLM的SEO:如何将您的内容注入到LLM的回答中!! |

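Conclusion

LLM applications are still at an early stage and everything is evolving fast. New discoveries, applications, and optimizations appear every day. Information changes quickly, and some of it will soon be outdated. There is no need to follow every new development: what matters will last, and better summaries and resources will appear to help make sense of it.

LLMs seem to be good at writing prompts for themselves, so it is unclear whether humans will still be needed to tune prompts. With so much changing, it is hard to tell what will matter and what will not.

The author asked people how they keep up with the field. Some ignore most of the hype and wait six months to see what sticks; some read only summaries, using tools such as BingChat to get the main points quickly; others try to keep up with the latest tools, building with each one as it comes out so that they only need to look at the delta when the next one is released.

(1)、The surge of prompt engineering papers resembles the thousands of weight-initialization tricks from the early days of DL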

| We’re still in the early days of LLM applications – everything is evolving so fast. I recently read a book proposal on LLMs, and my first thought was: most of this will be outdated in a month. APIs are changing day to day. New applications are being discovered. Infrastructure is being aggressively optimized. Cost and latency analysis needs to be done on a weekly basis. New terminologies are being introduced. Not all of these changes will matter. For example, many prompt engineering papers remind me of the early days of deep learning when there were thousands of papers describing different ways to initialize weights. I imagine that tricks to tweak your prompts like: "Answer truthfully", "I want you to act like …", writing "question: " instead of "q:" won’t matter in the long run. | 我们仍处于LLM应用的早期阶段,一切都在迅速发展。我最近读了一份关于LLM的图书选题提案,我的第一个想法是:其中大部分内容一个月后就会过时。API每天都在变化。新的应用正在被发现。基础设施正在积极优化。成本和延迟分析需要每周进行。新的术语正在被引入。 并非所有这些变化都重要。例如,许多关于提示工程的论文让我想起深度学习的早期时期,当时有成千上万篇论文描述了不同的权重初始化方式。我想,从长远来看,调整提示的技巧,如“真实回答”、“我希望你表现得像...”,以及把“q:”写成“question: ”,将不再重要。 |

| Given that LLMs seem to be pretty good at writing prompts for themselves – see Large Language Models Are Human-Level Prompt Engineers (Zhou et al., 2022) – who knows whether we’ll need humans to tune prompts? However, given so much happening, it’s hard to know which will matter, and which won’t. I recently asked on LinkedIn how people keep up to date with the field. The strategies range from ignoring the hype to trying out all the tools. | 鉴于LLM似乎非常擅长为自己编写提示(请参阅《大型语言模型是人类级提示工程师》,Zhou等,2022),谁知道我们是否还需要人类来调整提示呢? 然而,由于发展如此迅速,很难知道哪些是重要的,哪些是无关紧要的。 我最近在LinkedIn上询问人们如何跟上这个领域的最新进展。策略范围从忽略炒作到尝试所有工具。 |

(2)、Ignore (most of) the hype, read only the summaries, or try to keep up with the latest tools

| (1)、Ignore (most of) the hype Vicki Boykis (Senior ML engineer @ Duo Security): I do the same thing as with any new frameworks in engineering or the data landscape: I skim the daily news, ignore most of it, and wait six months to see what sticks. Anything important will still be around, and there will be more survey papers and vetted implementations that help contextualize what’s happening. (2)、Read only the summaries Shashank Chaurasia (Engineering @ Microsoft): I use the Creative mode of BingChat to give me a quick summary of new articles, blogs and research papers related to Gen AI! I often chat with the research papers and github repos to understand the details. (3)、Try to keep up to date with the latest tools Chris Alexiuk (Founding ML engineer @ Ox): I just try and build with each of the tools as they come out - that way, when the next step comes out, I’m only looking at the delta. | (1)忽略(大部分)炒作 Vicki Boykis(Duo Security高级ML工程师):对于工程或数据领域的任何新框架,我都会浏览每日新闻,忽略大部分内容,并等待六个月,看看哪些留存下来。重要的东西仍然存在,并且会有更多的综述论文和经过验证的实现来帮助理解正在发生的事情。 (2)只阅读摘要 Shashank Chaurasia(微软工程师):我使用BingChat的创意模式,快速获取与Gen AI相关的新文章、博客和研究论文的摘要!我经常与研究论文和GitHub存储库进行交流,以了解详细信息。 (3)尝试跟上最新工具的发展 Chris Alexiuk(Ox创始ML工程师):我尽量在每个工具发布时都进行构建-这样,当下一个步骤出现时,我只需要关注差异。 |

| What’s your strategy? | 您有什么策略? |