
Common NLP Encoding Methods: One-Hot, Word2Vec, BERT

Author: 小蓝xlanll | 2024-05-24 13:46:12


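1 Why encoding is needed

In natural language processing, encoding text is an essential step. Its purpose is to convert text data into a numeric representation that a computer can understand and process. The main reasons for encoding text are:

- Input to machine learning models: most machine learning models accept only numeric input, so converting text into numbers is a prerequisite for feeding text data to them.

- Feature extraction: encoding turns text into feature representations suited to machine learning models. These features can capture the semantics, syntax, and context of words, sentences, or documents, which helps the model understand the text, learn from it, and make predictions.

- Statistical analysis and computation: a numeric encoding makes statistical analysis and computation simpler and more efficient. A model can apply numeric operations and statistical inference to the encoded text to extract key information and make predictions.

- Learning embedded representations: encoding can be used to learn embeddings of text. An embedding maps text into a low-dimensional continuous vector space, which preserves semantic relationships and gives a more compact representation. This plays a key role in many NLP tasks, such as sentiment analysis, named entity recognition, and machine translation.

- Data preparation and preprocessing: encoding is also part of the data preparation stage of NLP tasks. For example, text encoded as a sequence or a matrix can be fed directly into a neural network.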
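As a minimal sketch of the last point, the snippet below builds a vocabulary from a toy corpus and turns each sentence into a fixed-length sequence of integer IDs, giving a matrix that a network could consume. The helper names (`build_vocab`, `encode`), the `<pad>` and `<unk>` tokens, and the toy sentences are illustrative assumptions, not part of any particular library.

```python
def build_vocab(sentences):
    """Assign an integer ID to every distinct word; IDs 0 and 1 are reserved."""
    vocab = {"<pad>": 0, "<unk>": 1}
    for sentence in sentences:
        for word in sentence.split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def encode(sentence, vocab, max_len):
    """Map words to IDs, truncate to max_len, and pad with the <pad> ID."""
    ids = [vocab.get(word, vocab["<unk>"]) for word in sentence.split()]
    ids = ids[:max_len]
    return ids + [vocab["<pad>"]] * (max_len - len(ids))


sentences = ["i like nlp", "i like deep learning"]
vocab = build_vocab(sentences)

# One row per sentence, every row padded to the same length
matrix = [encode(s, vocab, max_len=4) for s in sentences]
print(matrix)
```

Words that were never seen when the vocabulary was built fall back to the `<unk>` ID, so the encoder still works on new text.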

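2 Common encoding methods

The following commonly used encoding methods are the main subject of this article:

- One-Hot encoding: represents each word or character as a vector in which exactly one element is 1 and all others are 0. The vector length equals the vocabulary size. Each word or character has a unique index; the element at that position is 1 and every other element is 0.

- Word2Vec: a technique for learning word embeddings, in which every word is represented as a fixed-length real-valued vector. The basic idea is to learn distributed representations of words by predicting context or surrounding words. Word2Vec offers two main models: Skip-gram and CBOW (continuous bag of words). Both learn word vectors by optimizing a prediction task.

- BERT encoding: BERT (Bidirectional Encoder Representations from Transformers) is a pretrained language model based on the Transformer. It represents each token of the input text as a vector that incorporates its context. Because the model is pretrained, these vectors carry rich semantic information.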

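2.1 One-hot encoding

One-hot encoding is the simplest word representation: every word or character becomes a vector that is 1 at the position assigned to that word or character and 0 everywhere else. Its advantages and disadvantages are as follows.

Advantages:

- Simple to implement: converting words or characters into their vectors is straightforward.

- Unambiguous: each vector has exactly one element equal to 1, at a position unique to that word or character, so the vectors of different words are mutually independent and can never be confused with one another.

- Suited to classification tasks: one-hot vectors are widely used in classification, where they serve as input features for training and prediction.

Disadvantages:

- High-dimensional and sparse: every vector is as long as the vocabulary, so a large vocabulary produces very high-dimensional vectors that are almost entirely zeros. This wastes memory and computation.

- No semantic information: one-hot vectors cannot express relationships between words or characters. All vectors are mutually independent, so they carry no notion of similarity or semantic distance.

- Poorly suited to numeric reasoning: one-hot encoding is a discrete representation. Because the vectors have no meaningful numeric relationship to each other, arithmetic on them (sums, differences, distances) says nothing about meaning, which makes the encoding a poor fit for tasks that rely on continuous computation.

- Dimensionality expansion: one-hot encoding inflates the dimensionality of the data. Without enough training data, this can lead to overfitting, especially with high-dimensional sparse representations.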
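To make the points above concrete, here is a minimal sketch in plain Python. The three-word vocabulary and the helper names `one_hot` and `dot` are assumptions chosen for illustration. Each vector has the length of the vocabulary, and the dot product between any two different words is 0, so "cat" is exactly as unrelated to "dog" as it is to "car".

```python
def one_hot(word, vocab):
    """Return a list of len(vocab) zeros with a single 1 at the word's index."""
    vector = [0] * len(vocab)
    vector[vocab[word]] = 1
    return vector


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


words = ["cat", "dog", "car"]
vocab = {word: idx for idx, word in enumerate(words)}
vectors = {word: one_hot(word, vocab) for word in words}

print(vectors["dog"])
# Similarity between different words is always 0, whatever their meaning
print(dot(vectors["cat"], vectors["dog"]), dot(vectors["cat"], vectors["car"]))
```

With a realistic vocabulary of tens of thousands of words, each of these vectors would have tens of thousands of entries, all but one of them zero.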

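2.2 Word2Vec

Word2Vec is also a word embedding method. It has two training schemes:

- CBOW: predicts the probability of a word from the words before and after it. In other words, it uses the context to predict the center word. A rough PyTorch implementation: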

```python
# Import the required libraries
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader, Dataset


# Dataset of (center word, context words) pairs, stored as vocabulary indices
class Word2VecDataset(Dataset):
    def __init__(self, corpus, word2idx, window_size):
        self.corpus = corpus
        self.word2idx = word2idx
        self.window_size = window_size
        self.data = self.generate_data()

    def generate_data(self):
        data = []
        w = self.window_size
        for sentence in self.corpus:
            ids = [self.word2idx[word] for word in sentence]
            # Keep only positions with a full window so that every sample has
            # exactly 2 * window_size context words and samples can be batched.
            for i in range(w, len(ids) - w):
                center_word = ids[i]
                context_words = ids[i - w:i] + ids[i + 1:i + w + 1]
                data.append((center_word, context_words))
        return data

    def __len__(self):
        return len(self.data)

    def __getitem__(self, index):
        center_word, context_words = self.data[index]
        return torch.tensor(center_word), torch.tensor(context_words)


# Model definition: CBOW
class Word2Vec(nn.Module):
    def __init__(self, vocab_size, embed_size):
        super().__init__()
        self.embeddings = nn.Embedding(vocab_size, embed_size)
        self.linear1 = nn.Linear(embed_size, vocab_size)

    def forward(self, context_words):
        embedded = self.embeddings(context_words)  # (batch, 2 * window, embed)
        hidden = torch.sum(embedded, dim=1)        # (batch, embed)
        output = self.linear1(hidden)              # (batch, vocab)
        return output, embedded


# Training function
def train(model, dataset, batch_size, num_epochs, learning_rate):
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    model.to(device)

    criterion = nn.CrossEntropyLoss()
    optimizer = optim.SGD(model.parameters(), lr=learning_rate)

    dataloader = DataLoader(dataset, batch_size=batch_size, shuffle=True)

    for epoch in range(num_epochs):
        total_loss = 0
        for center_word, context_words in dataloader:
            center_word = center_word.to(device)
            context_words = context_words.to(device)

            optimizer.zero_grad()
            # CBOW: the context words are the input, the center word is the target
            output, _ = model(context_words)
            loss = criterion(output, center_word)
            loss.backward()
            optimizer.step()

            total_loss += loss.item()

        print(f"Epoch {epoch+1}/{num_epochs}, Loss: {total_loss/len(dataloader):.4f}")


# Prepare the data and train
corpus = [...]  # placeholder for the raw corpus: each element is a sentence given as a list of words
window_size = 2  # context window size
embedding_size = 100  # embedding dimension
batch_size = 64
num_epochs = 10
learning_rate = 0.01

# Map every distinct word to an integer index
vocab = sorted({word for sentence in corpus for word in sentence})
word2idx = {word: idx for idx, word in enumerate(vocab)}
vocab_size = len(vocab)

dataset = Word2VecDataset(corpus, word2idx, window_size)
model = Word2Vec(vocab_size, embedding_size)
train(model, dataset, batch_size, num_epochs, learning_rate)
```

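- Skip-gram: uses a center word to predict its context. A rough PyTorch implementation follows (the two models differ only in their inputs and outputs, so only the parts that differ are shown):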

```python
class Word2Vec(nn.Module):
    def __init__(self, vocab_size, embed_size):
        super().__init__()
        self.embeddings = nn.Embedding(vocab_size, embed_size)
        self.linear1 = nn.Linear(embed_size, vocab_size)

    def forward(self, target_word):
        embedded = self.embeddings(target_word)  # (batch, embed)
        output = self.linear1(embedded)          # (batch, vocab)
        return output, embedded


def train(model, dataset, batch_size, num_epochs, learning_rate):
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    model.to(device)

    criterion = nn.CrossEntropyLoss()
    optimizer = optim.SGD(model.parameters(), lr=learning_rate)

    dataloader = DataLoader(dataset, batch_size=batch_size, shuffle=True)

    for epoch in range(num_epochs):
        total_loss = 0
        # Assumes the dataset yields index tensors: target_word of shape
        # (batch,) and context_words of shape (batch, num_context)
        for target_word, context_words in dataloader:
            target_word = target_word.to(device)
            context_words = context_words.to(device)

            optimizer.zero_grad()
            output, _ = model(target_word)
            # Skip-gram: score the same prediction against every context word
            num_context = context_words.size(1)
            output = output.unsqueeze(1).expand(-1, num_context, -1)
            loss = criterion(output.reshape(-1, output.size(-1)), context_words.flatten())
            loss.backward()
            optimizer.step()

            total_loss += loss.item()

        print(f"Epoch {epoch+1}/{num_epochs}, Loss: {total_loss/len(dataloader):.4f}")
```

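Compared with the CBOW model, the Skip-gram model takes the target word (target_word) as input rather than the context words. In the model's forward method, the target word's vector is simply passed to the linear layer (self.linear1) to produce the output. The rest of the training procedure is similar to that of CBOW.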
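The difference in inputs and outputs is easiest to see in the training samples themselves. The sketch below, in plain Python, generates both kinds of samples from one toy sentence; the function names and the example sentence are assumptions made for illustration. CBOW produces one sample per position (all context words in, center word out), while Skip-gram produces one sample per (center word, context word) pair.

```python
def context_window(tokens, i, window_size):
    """Words within window_size positions of index i, excluding tokens[i]."""
    left = tokens[max(i - window_size, 0):i]
    right = tokens[i + 1:i + window_size + 1]
    return left + right


def cbow_samples(tokens, window_size):
    """One sample per position: (all context words) -> center word."""
    return [(context_window(tokens, i, window_size), tokens[i])
            for i in range(len(tokens))]


def skipgram_samples(tokens, window_size):
    """One sample per pair: center word -> a single context word."""
    return [(tokens[i], context)
            for i in range(len(tokens))
            for context in context_window(tokens, i, window_size)]


tokens = ["the", "cat", "sat", "down"]
print(cbow_samples(tokens, window_size=1))
print(skipgram_samples(tokens, window_size=1))
```

For the same sentence and window, Skip-gram yields more samples than CBOW, because every context word becomes a separate prediction target.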

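2.3 BERT

BERT encoding relies on the pretrained models published on the Hugging Face website. Choose a model that suits the type of text and the task at hand, download it, and call it from PyTorch to encode the data. The example below first loads a pretrained BERT model and tokenizer (bert-base-uncased), then tokenizes the input text and converts it to integer IDs, then runs the pretrained model to obtain the embeddings, and finally prints them: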

```python
import torch
from transformers import BertTokenizer, BertModel

# Load the pretrained BERT model and tokenizer
model_name = 'bert-base-uncased'
tokenizer = BertTokenizer.from_pretrained(model_name)
model = BertModel.from_pretrained(model_name)

# Input text
text = "Hello, how are you?"

# Split the text into a token sequence with the tokenizer
tokens = tokenizer.tokenize(text)
tokens = ['[CLS]'] + tokens + ['[SEP]']  # add the start and end markers

# Convert the token sequence to the corresponding integer IDs
input_ids = tokenizer.convert_tokens_to_ids(tokens)
input_ids = torch.tensor(input_ids).unsqueeze(0)  # add the batch dimension

# Get the BERT encoding
with torch.no_grad():
    outputs = model(input_ids)
    embeddings = outputs.last_hidden_state

# embeddings is the embedded representation of the input text, with one
# contextual vector per token; it can be used in downstream tasks

# Print the embedded representation of the input text
print(embeddings)
```

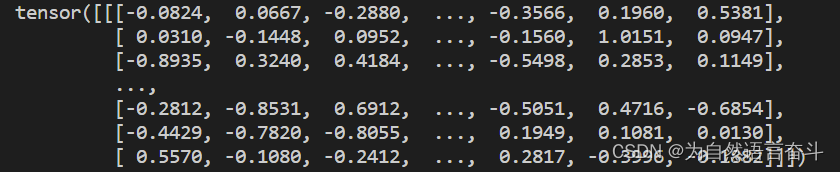

输出结果如下:
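BERT returns one vector per token, while many downstream tasks need a single vector per sentence. One common option, which the article itself does not cover, is to average the token vectors while ignoring padding. The sketch below shows that idea on a small stand-in tensor instead of a real model output, so it runs without downloading anything; the function name `mean_pool` and the dummy values are assumptions for illustration.

```python
import torch


def mean_pool(last_hidden_state, attention_mask):
    """Average the token vectors of each sentence, ignoring padded positions.

    last_hidden_state: (batch, seq_len, hidden) float tensor
    attention_mask:    (batch, seq_len) tensor, 1 for real tokens, 0 for padding
    """
    mask = attention_mask.unsqueeze(-1).to(last_hidden_state.dtype)
    summed = (last_hidden_state * mask).sum(dim=1)
    counts = mask.sum(dim=1).clamp(min=1.0)  # avoid division by zero
    return summed / counts


# Stand-in for a model output: 2 sentences, 3 token slots, hidden size 4
hidden = torch.arange(24, dtype=torch.float32).reshape(2, 3, 4)
mask = torch.tensor([[1, 1, 1], [1, 1, 0]])  # second sentence has one padded slot

sentence_vectors = mean_pool(hidden, mask)
print(sentence_vectors.shape)
```

With a real model, `last_hidden_state` would come from the model output and the mask from the tokenizer when it pads a batch of sentences to equal length.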

