Hadoop Cluster Installation and Deployment

1. Cluster environment preparation

Prepare three Linux servers, set the hostname on each one, and configure the /etc/hosts file:

- vim /etc/hosts

- 192.168.20.11 node1

- 192.168.20.12 node2

- 192.168.20.13 node3
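Editing /etc/hosts by hand on three machines is easy to get wrong, so the step above can also be scripted. The following is a minimal sketch only; the helper name add_host_entry is hypothetical, and it assumes one "IP name" pair per line as in the entries shown above.

```shell
# Hypothetical helper: append "ip name" to a hosts file unless the name
# is already mapped there.
add_host_entry() {
    # $1 = hosts file, $2 = IP address, $3 = host name
    if awk -v n="$3" '
        /^[[:space:]]*#/ { next }    # skip comment lines
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }
    ' "$1" 2>/dev/null; then
        echo "already present: $3"
    else
        printf '%s %s\n' "$2" "$3" >> "$1"
    fi
}

# Usage (as root), with the addresses from this section:
#   add_host_entry /etc/hosts 192.168.20.11 node1
#   add_host_entry /etc/hosts 192.168.20.12 node2
#   add_host_entry /etc/hosts 192.168.20.13 node3
```

Because the helper checks for an existing mapping first, running it a second time does not duplicate entries.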

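Cluster role planning:

Roles that compete for the same resources should, where possible, not be deployed on the same machine.

Roles that need to work closely together should, where possible, be deployed on the same machine.

Directory planning:

- Software directory: /usr/local/

- Data directory: /data/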

############################################################

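2. Set up passwordless SSH

Create a new user named hadoop on all three nodes:

- useradd hadoop

- echo "123456" | passwd hadoop --stdin

As the hadoop user on node1, set up passwordless SSH to every node, including node1 itself:

- # Generate a key pair

- ssh-keygen -t rsa

- # Copy the public key to each node

- ssh-copy-id -p 22 -i ~/.ssh/id_rsa.pub node1

- ssh-copy-id -p 22 -i ~/.ssh/id_rsa.pub node2

- ssh-copy-id -p 22 -i ~/.ssh/id_rsa.pub node3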
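The key generation and distribution above can be made repeatable with a small guard. This is a sketch under stated assumptions: the helper name ensure_key is hypothetical, and it generates the key with an empty passphrase (-N ''), which the original commands do not specify.

```shell
# Hypothetical helper: create an RSA key pair only if none exists yet,
# so that rerunning the setup never overwrites a key already distributed.
ensure_key() {
    # $1 = path of the private key
    if [ -f "$1" ]; then
        echo "key exists: $1"
    else
        # Assumption: empty passphrase, for unattended logins
        ssh-keygen -t rsa -N '' -f "$1"
    fi
}

# Usage, as the hadoop user on node1:
#   ensure_key "$HOME/.ssh/id_rsa"
#   for node in node1 node2 node3; do
#       ssh-copy-id -p 22 -i "$HOME/.ssh/id_rsa.pub" "$node"
#   done
```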

############################################################

3. Cluster time synchronization

Run on node1, node2, and node3:

- yum -y install ntpdate

- [root@node1 ~]# ntpdate ntp4.aliyun.com

- 27 Mar 15:07:11 ntpdate[14696]: adjust time server 203.107.6.88 offset 0.004176 sec

- [root@node1 ~]# date

- 2023年 03月 27日 星期一 15:07:13 CST

############################################################

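4. Install JDK 1.8

Run on node1, node2, and node3:

Download or upload the archive, then extract it:

- # Change to the software installation directory

- cd /usr/local

- # Extract the archive

- tar -xvf jdk1.8.0_301.tar.gz

- # Create a symbolic link

- ln -s jdk1.8.0_301 jdk

- # Edit the environment variables

- vim /etc/bashrc

- export JAVA_HOME=/usr/local/jdk

- export PATH=$JAVA_HOME/bin:$PATH

- # Apply the environment variables

- source /etc/bashrc

Output after a successful installation: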

- [hadoop@node1 local]$ java -version

- java version "1.8.0_301"

- Java(TM) SE Runtime Environment (build 1.8.0_301-b09)

- Java HotSpot(TM) 64-Bit Server VM (build 25.301-b09, mixed mode)
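Tools such as Hadoop expect JAVA_HOME to be the JDK root directory, that is, the directory that contains bin/java. A small check can catch a wrong value early; this is only a sketch, and the helper name check_java_home is hypothetical.

```shell
# Hypothetical helper: succeed only if $1 looks like a JDK root,
# i.e. it contains an executable bin/java.
check_java_home() {
    # $1 = candidate JAVA_HOME directory
    if [ -x "$1/bin/java" ]; then
        echo "ok: $1"
    else
        echo "not a JDK root: $1" >&2
        return 1
    fi
}

# Usage after sourcing /etc/bashrc:
#   check_java_home "$JAVA_HOME"
```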

############################################################

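5. Install Hadoop

Run on node1, node2, and node3:

Upload the precompiled Hadoop binary package:

- # Change to the installation directory

- cd /usr/local

- # Extract the binary package

- tar xf hadoop-3.3.0-Centos7-64-with-snappy.tar.gz

- # Create a symbolic link

- ln -s hadoop-3.3.0 hadoop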

- [hadoop@node1 local]$ ll

- 总用量 445672

- drwxr-xr-x. 2 root root 134 3月 24 15:36 bin

- drwxr-xr-x. 2 root root 6 4月 11 2018 etc

- drwxr-xr-x. 2 root root 6 4月 11 2018 games

- lrwxrwxrwx 1 hadoop hadoop 12 3月 27 16:14 hadoop -> hadoop-3.3.0

- drwxr-xr-x 10 hadoop hadoop 215 7月 15 2021 hadoop-3.3.0

- -rw-r--r-- 1 root root 456364743 3月 27 16:12 hadoop-3.3.0-Centos7-64-with-snappy.tar.gz

- drwxr-xr-x. 2 root root 6 4月 11 2018 include

- lrwxrwxrwx 1 root root 12 3月 27 14:37 jdk -> jdk1.8.0_301

- drwxr-xr-x 7 nginx nginx 153 2月 17 2022 jdk1.8.0_301

- [hadoop@node1 hadoop]$ ll

- 总用量 84

- drwxr-xr-x 2 hadoop hadoop 203 7月 15 2021 bin

- drwxr-xr-x 3 hadoop hadoop 20 7月 15 2021 etc

- drwxr-xr-x 2 hadoop hadoop 106 7月 15 2021 include

- drwxr-xr-x 3 hadoop hadoop 20 7月 15 2021 lib

- drwxr-xr-x 4 hadoop hadoop 288 7月 15 2021 libexec

- -rw-rw-r-- 1 hadoop hadoop 22976 3月 27 17:52 LICENSE-binary

- drwxr-xr-x 2 hadoop hadoop 4096 7月 15 2021 licenses-binary

- -rw-rw-r-- 1 hadoop hadoop 15697 3月 27 17:52 LICENSE.txt

- -rw-rw-r-- 1 hadoop hadoop 27570 3月 27 17:52 NOTICE-binary

- -rw-rw-r-- 1 hadoop hadoop 1541 3月 27 17:52 NOTICE.txt

- -rw-rw-r-- 1 hadoop hadoop 175 3月 27 17:52 README.txt

- drwxr-xr-x 3 hadoop hadoop 4096 3月 28 10:14 sbin

- drwxr-xr-x 3 hadoop hadoop 20 7月 15 2021 share

############################################################

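6. Edit the Hadoop configuration files

Edit the files under /usr/local/hadoop/etc/hadoop on node1, then sync them to node2 and node3.

hadoop-env.sh:

- # Append to the end of the file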

- export JAVA_HOME=/usr/local/jdk1.8.0_301

- export HADOOP_HOME=/usr/local/hadoop

- export HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop

- export HADOOP_LOG_DIR=/data/hadoop/logs

- export HADOOP_PID_DIR=/data/hadoop/pids

- #export YARN_PID_DIR=/data/hadoop/pids

-

- export HDFS_DATANODE_SECURE_USER=hadoop

- export HDFS_NAMENODE_USER=hadoop

- export HDFS_SECONDARYNAMENODE_USER=hadoop

- export YARN_RESOURCEMANAGER_USER=hadoop

- export YARN_NODEMANAGER_USER=hadoop

- export YARN_PROXYSERVER_USER=hadoop

- export HADOOP_SHELL_EXECNAME=hadoop

core-site.xml:
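The contents of core-site.xml are not listed in this section. A minimal sketch, written as a shell helper so the values are easy to change, might look like the following. The hadoop.tmp.dir value /data/hadoop/tmp is consistent with the storage directory reported by the format log in step 7 (/data/hadoop/tmp/dfs/name). The fs.defaultFS value hdfs://node1:8020 and the helper name write_core_site are assumptions, not taken from the original setup.

```shell
# Hypothetical helper: write a minimal core-site.xml.
# $1 = output file
# $2 = default file system URI (assumed: hdfs://node1:8020)
# $3 = hadoop.tmp.dir (the format log shows data under /data/hadoop/tmp)
write_core_site() {
    cat > "$1" <<EOF
<configuration>
  <!-- Default file system URI (assumed value) -->
  <property>
    <name>fs.defaultFS</name>
    <value>$2</value>
  </property>
  <!-- Base directory for Hadoop data -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>$3</value>
  </property>
</configuration>
EOF
}

# Usage: write to a scratch file, review it, then copy it into place.
#   write_core_site /tmp/core-site.xml hdfs://node1:8020 /data/hadoop/tmp
```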

hdfs-site.xml:

- <configuration>

- <!-- Host and port where the SecondaryNameNode (SNN) process runs -->

- <property>

- <name>dfs.namenode.secondary.http-address</name>

- <value>node2:9868</value>

- </property>

-

- </configuration>

mapred-site.xml:

- <configuration>

- <!-- Default execution mode for MR jobs: yarn (cluster mode) or local (local mode) -->

- <property>

- <name>mapreduce.framework.name</name>

- <value>yarn</value>

- </property>

-

- <!-- Address of the MR JobHistory service -->

- <property>

- <name>mapreduce.jobhistory.address</name>

- <value>node1:10020</value>

- </property>

-

- <!-- Web UI address of the MR JobHistory server -->

- <property>

- <name>mapreduce.jobhistory.webapp.address</name>

- <value>node1:19888</value>

- </property>

-

- <property>

- <name>yarn.app.mapreduce.am.env</name>

- <value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

- </property>

-

- <property>

- <name>mapreduce.map.env</name>

- <value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

- </property>

-

- <property>

- <name>mapreduce.reduce.env</name>

- <value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

- </property>

-

- </configuration>

yarn-site.xml:

- <configuration>

- <!-- Site specific YARN configuration properties -->

- <!-- Host that runs the YARN master role (ResourceManager) -->

- <property>

- <name>yarn.resourcemanager.hostname</name>

- <value>node1</value>

- </property>

-

- <property>

- <name>yarn.nodemanager.aux-services</name>

- <value>mapreduce_shuffle</value>

- </property>

-

- <!-- Whether to enforce physical memory limits on containers -->

- <property>

- <name>yarn.nodemanager.pmem-check-enabled</name>

- <value>false</value>

- </property>

-

- <!-- Whether to enforce virtual memory limits on containers -->

- <property>

- <name>yarn.nodemanager.vmem-check-enabled</name>

- <value>false</value>

- </property>

-

- <!-- Enable log aggregation -->

- <property>

- <name>yarn.log-aggregation-enable</name>

- <value>true</value>

- </property>

-

- <!-- URL of the log server (the JobHistory server on node1) -->

- <property>

- <name>yarn.log.server.url</name>

- <value>http://node1:19888/jobhistory/logs</value>

- </property>

-

- <!-- How long to retain aggregated logs: 7 days, in seconds -->

- <property>

- <name>yarn.log-aggregation.retain-seconds</name>

- <value>604800</value>

- </property>

-

- </configuration>
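The value 604800 used for yarn.log-aggregation.retain-seconds is 7 days expressed in seconds. A quick way to derive the value for a different retention period, as a sketch (the helper name days_to_seconds is hypothetical):

```shell
# Convert a number of days to seconds: days * 24 h * 60 min * 60 s.
days_to_seconds() {
    echo $(( $1 * 24 * 60 * 60 ))
}

days_to_seconds 7   # prints 604800
```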

workers

- node1

- node2

- node3
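Once the configuration files are ready on node1, they need to be copied to the other nodes. One way to do this is to reuse the workers file as the host list. The sketch below only prints the scp commands so they can be reviewed first; the helper names remote_workers and sync_commands are hypothetical, and copying with scp is an assumption since the original does not say which tool was used.

```shell
# Hypothetical helper: list every host in a workers file except the local one.
# Blank lines and lines starting with '#' are skipped by this helper.
remote_workers() {
    # $1 = workers file, $2 = local host name to leave out
    grep -v -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$1" | grep -v -x -F "$2"
}

# Print (rather than run) one scp command per remote node.
sync_commands() {
    # $1 = workers file, $2 = local host name, $3 = configuration directory
    for host in $(remote_workers "$1" "$2"); do
        printf 'scp -r %s %s:%s/\n' "$3" "$host" "$(dirname "$3")"
    done
}

# Usage on node1, as the hadoop user:
#   sync_commands /usr/local/hadoop/etc/hadoop/workers node1 /usr/local/hadoop/etc/hadoop
# Pipe the output to sh once the printed commands look right.
```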

Configure the Hadoop environment variables:

- vim /etc/bashrc

- export HADOOP_HOME=/usr/local/hadoop

- export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

-

- source /etc/bashrc

############################################################

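7. Format the NameNode

Before HDFS is started for the first time, it must be formatted.

Formatting is essentially an initialization step: it cleans up and prepares HDFS.

Format only once; it is not needed again afterwards.

Formatting more than once not only causes data loss but also leaves the HDFS master and worker roles unable to recognize each other. To recover, delete the hadoop.tmp.dir directory on every machine and format again.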
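To avoid formatting twice by accident, the format command can be wrapped in a guard. A formatted NameNode storage directory contains a current/VERSION file, so its presence can serve as the check. This is a sketch only; the helper names is_formatted and format_once are hypothetical.

```shell
# Hypothetical guard: succeed if the NameNode storage directory has already
# been formatted (a formatted directory contains current/VERSION).
is_formatted() {
    # $1 = NameNode storage directory
    [ -f "$1/current/VERSION" ]
}

# Run the format only when no earlier format is detected.
format_once() {
    # $1 = NameNode storage directory
    if is_formatted "$1"; then
        echo "already formatted, skipping: $1"
    else
        hdfs namenode -format
    fi
}

# Usage, as the hadoop user on node1 (the path is the storage directory
# reported in the format log):
#   format_once /data/hadoop/tmp/dfs/name
```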

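Note: the configuration files already specify that the hadoop user manages the cluster, so all operations must be performed as the hadoop user.

As the hadoop user on node1, run the format: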

- [hadoop@node1 data]# hdfs namenode -format

- 上一次登录:一 3月 27 18:46:11 CST 2023pts/1 上

- WARNING: /data/hadoop/logs does not exist. Creating.

- 2023-03-27 18:47:22,391 INFO namenode.NameNode: STARTUP_MSG:

- /************************************************************

- STARTUP_MSG: Starting NameNode

- STARTUP_MSG: host = node1/192.168.20.11

- STARTUP_MSG: args = [-format]

- STARTUP_MSG: version = 3.3.0

- STARTUP_MSG: classpath = /usr/local/hadoop/etc/hadoop:/usr/local/hadoop/share/hadoop/common/lib/accessors-smart-1.2.jar:... (remaining JAR entries under /usr/local/hadoop/share/hadoop omitted)

- STARTUP_MSG: build = Unknown -r Unknown; compiled by 'root' on 2021-07-15T07:35Z

- STARTUP_MSG: java = 1.8.0_301

- ************************************************************/

- 2023-03-27 18:47:22,405 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

- 2023-03-27 18:47:22,493 INFO namenode.NameNode: createNameNode [-format]

- 2023-03-27 18:47:22,886 INFO namenode.NameNode: Formatting using clusterid: CID-dfdfbc75-e314-4dd3-89df-a3def1a48c33

- 2023-03-27 18:47:22,911 INFO namenode.FSEditLog: Edit logging is async:true

- 2023-03-27 18:47:22,927 INFO namenode.FSNamesystem: KeyProvider: null

- 2023-03-27 18:47:22,928 INFO namenode.FSNamesystem: fsLock is fair: true

- 2023-03-27 18:47:22,928 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

- 2023-03-27 18:47:22,931 INFO namenode.FSNamesystem: fsOwner = hadoop (auth:SIMPLE)

- 2023-03-27 18:47:22,932 INFO namenode.FSNamesystem: supergroup = supergroup

- 2023-03-27 18:47:22,932 INFO namenode.FSNamesystem: isPermissionEnabled = true

- 2023-03-27 18:47:22,932 INFO namenode.FSNamesystem: isStoragePolicyEnabled = true

- 2023-03-27 18:47:22,932 INFO namenode.FSNamesystem: HA Enabled: false

- 2023-03-27 18:47:22,961 INFO common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling

- 2023-03-27 18:47:22,967 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit: configured=1000, counted=60, effected=1000

- 2023-03-27 18:47:22,967 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

- 2023-03-27 18:47:22,969 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

- 2023-03-27 18:47:22,969 INFO blockmanagement.BlockManager: The block deletion will start around 2023 三月 27 18:47:22

- 2023-03-27 18:47:22,970 INFO util.GSet: Computing capacity for map BlocksMap

- 2023-03-27 18:47:22,970 INFO util.GSet: VM type = 64-bit

- 2023-03-27 18:47:22,971 INFO util.GSet: 2.0% max memory 405.5 MB = 8.1 MB

- 2023-03-27 18:47:22,971 INFO util.GSet: capacity = 2^20 = 1048576 entries

- 2023-03-27 18:47:22,977 INFO blockmanagement.BlockManager: Storage policy satisfier is disabled

- 2023-03-27 18:47:22,977 INFO blockmanagement.BlockManager: dfs.block.access.token.enable = false

- 2023-03-27 18:47:23,008 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.999

- 2023-03-27 18:47:23,008 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes = 0

- 2023-03-27 18:47:23,008 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension = 30000

- 2023-03-27 18:47:23,009 INFO blockmanagement.BlockManager: defaultReplication = 3

- 2023-03-27 18:47:23,009 INFO blockmanagement.BlockManager: maxReplication = 512

- 2023-03-27 18:47:23,009 INFO blockmanagement.BlockManager: minReplication = 1

- 2023-03-27 18:47:23,009 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

- 2023-03-27 18:47:23,009 INFO blockmanagement.BlockManager: redundancyRecheckInterval = 3000ms

- 2023-03-27 18:47:23,009 INFO blockmanagement.BlockManager: encryptDataTransfer = false

- 2023-03-27 18:47:23,009 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

- 2023-03-27 18:47:23,022 INFO namenode.FSDirectory: GLOBAL serial map: bits=29 maxEntries=536870911

- 2023-03-27 18:47:23,022 INFO namenode.FSDirectory: USER serial map: bits=24 maxEntries=16777215

- 2023-03-27 18:47:23,022 INFO namenode.FSDirectory: GROUP serial map: bits=24 maxEntries=16777215

- 2023-03-27 18:47:23,022 INFO namenode.FSDirectory: XATTR serial map: bits=24 maxEntries=16777215

- 2023-03-27 18:47:23,032 INFO util.GSet: Computing capacity for map INodeMap

- 2023-03-27 18:47:23,032 INFO util.GSet: VM type = 64-bit

- 2023-03-27 18:47:23,032 INFO util.GSet: 1.0% max memory 405.5 MB = 4.1 MB

- 2023-03-27 18:47:23,032 INFO util.GSet: capacity = 2^19 = 524288 entries

- 2023-03-27 18:47:23,032 INFO namenode.FSDirectory: ACLs enabled? true

- 2023-03-27 18:47:23,032 INFO namenode.FSDirectory: POSIX ACL inheritance enabled? true

- 2023-03-27 18:47:23,032 INFO namenode.FSDirectory: XAttrs enabled? true

- 2023-03-27 18:47:23,032 INFO namenode.NameNode: Caching file names occurring more than 10 times

- 2023-03-27 18:47:23,035 INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: false, skipCaptureAccessTimeOnlyChange: false, snapshotDiffAllowSnapRootDescendant: true, maxSnapshotLimit: 65536

- 2023-03-27 18:47:23,036 INFO snapshot.SnapshotManager: SkipList is disabled

- 2023-03-27 18:47:23,039 INFO util.GSet: Computing capacity for map cachedBlocks

- 2023-03-27 18:47:23,039 INFO util.GSet: VM type = 64-bit

- 2023-03-27 18:47:23,040 INFO util.GSet: 0.25% max memory 405.5 MB = 1.0 MB

- 2023-03-27 18:47:23,040 INFO util.GSet: capacity = 2^17 = 131072 entries

- 2023-03-27 18:47:23,045 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

- 2023-03-27 18:47:23,045 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

- 2023-03-27 18:47:23,045 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

- 2023-03-27 18:47:23,047 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

- 2023-03-27 18:47:23,048 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

- 2023-03-27 18:47:23,049 INFO util.GSet: Computing capacity for map NameNodeRetryCache

- 2023-03-27 18:47:23,049 INFO util.GSet: VM type = 64-bit

- 2023-03-27 18:47:23,049 INFO util.GSet: 0.029999999329447746% max memory 405.5 MB = 124.6 KB

- 2023-03-27 18:47:23,049 INFO util.GSet: capacity = 2^14 = 16384 entries

- 2023-03-27 18:47:23,064 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1176397251-192.168.20.11-1679914043059

- 2023-03-27 18:47:23,071 INFO common.Storage: Storage directory /data/hadoop/tmp/dfs/name has been successfully formatted.

- 2023-03-27 18:47:23,088 INFO namenode.FSImageFormatProtobuf: Saving image file /data/hadoop/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

- 2023-03-27 18:47:23,188 INFO namenode.FSImageFormatProtobuf: Image file /data/hadoop/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 401 bytes saved in 0 seconds .

- 2023-03-27 18:47:23,204 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

- 2023-03-27 18:47:23,214 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid=0 when meet shutdown.

- 2023-03-27 18:47:23,214 INFO namenode.NameNode: SHUTDOWN_MSG:

- /************************************************************

- SHUTDOWN_MSG: Shutting down NameNode at node1/192.168.20.11

- ************************************************************/

############################################################

8. Start the Hadoop cluster

Start the HDFS cluster:

- [hadoop@node1 ~]$ start-dfs.sh

- Starting namenodes on [node1]

- Starting datanodes

- Starting secondary namenodes [node2]

- # Run jps on each node to check that the expected daemons started

-

- [hadoop@node1 ~]$ jps

- 29299 Jps

- 29048 DataNode

- 28892 NameNode

-

- [hadoop@node2 local]$ jps

- 6844 SecondaryNameNode

- 7503 DataNode

- 7695 Jps

-

- [hadoop@node3 ~]$ jps

- 4889 DataNode

- 4973 Jps
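The manual jps check above can be scripted. The following is a minimal sketch, not part of the original setup: `check_daemons` is a hypothetical helper name, and the expected daemon lists are taken from the jps output shown above (node1: NameNode and DataNode, node2: SecondaryNameNode and DataNode, node3: DataNode). It reads jps output on standard input and reports any expected daemon that is missing.

```shell
# check_daemons (hypothetical helper): reads `jps` output on stdin and
# verifies that every daemon name passed as an argument is listed.
check_daemons() {
  local jps_output missing=0 name
  jps_output=$(cat)
  for name in "$@"; do
    # Match "<pid> <name>" exactly, so NameNode does not match SecondaryNameNode.
    if ! printf '%s\n' "$jps_output" | grep -qE "^[0-9]+ ${name}\$"; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}

# Sample input copied from the node2 output above.
sample='6844 SecondaryNameNode
7503 DataNode
7695 Jps'
printf '%s\n' "$sample" | check_daemons SecondaryNameNode DataNode && echo "node2 OK"
```

On a live cluster the same function could be fed from `ssh node2 jps | check_daemons SecondaryNameNode DataNode`, assuming jps is on the PATH of the non-interactive SSH session.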

Start the YARN cluster:

- [hadoop@node1 hadoop]$ start-yarn.sh

- Starting resourcemanager

- Starting nodemanagers

############################################################

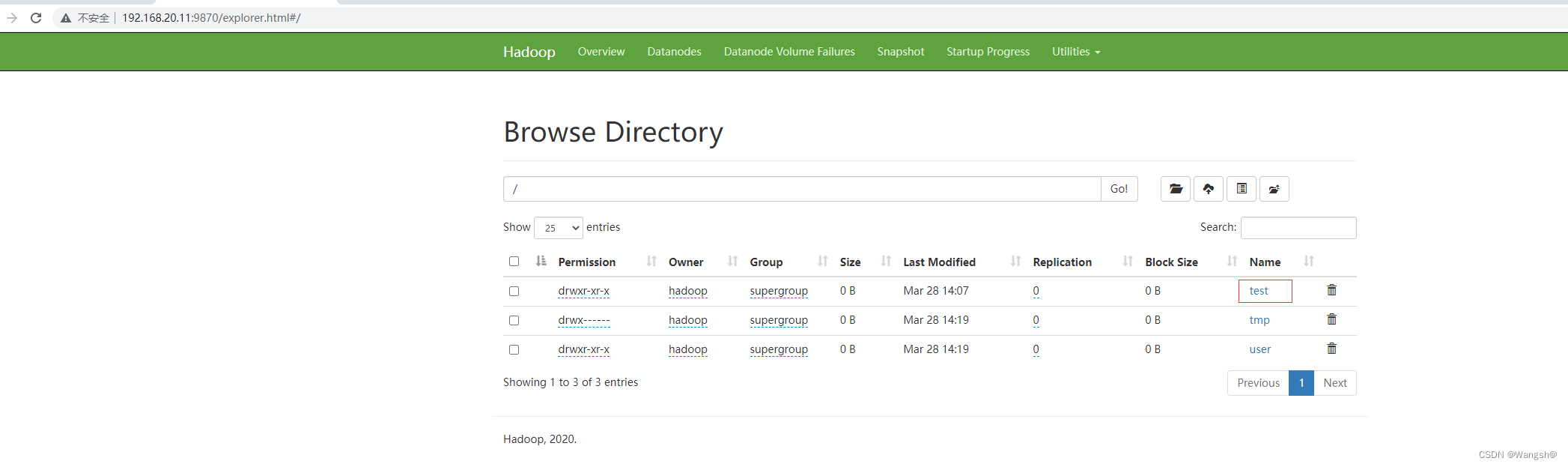

9. Access the HDFS web UI (port 9870)

############################################################

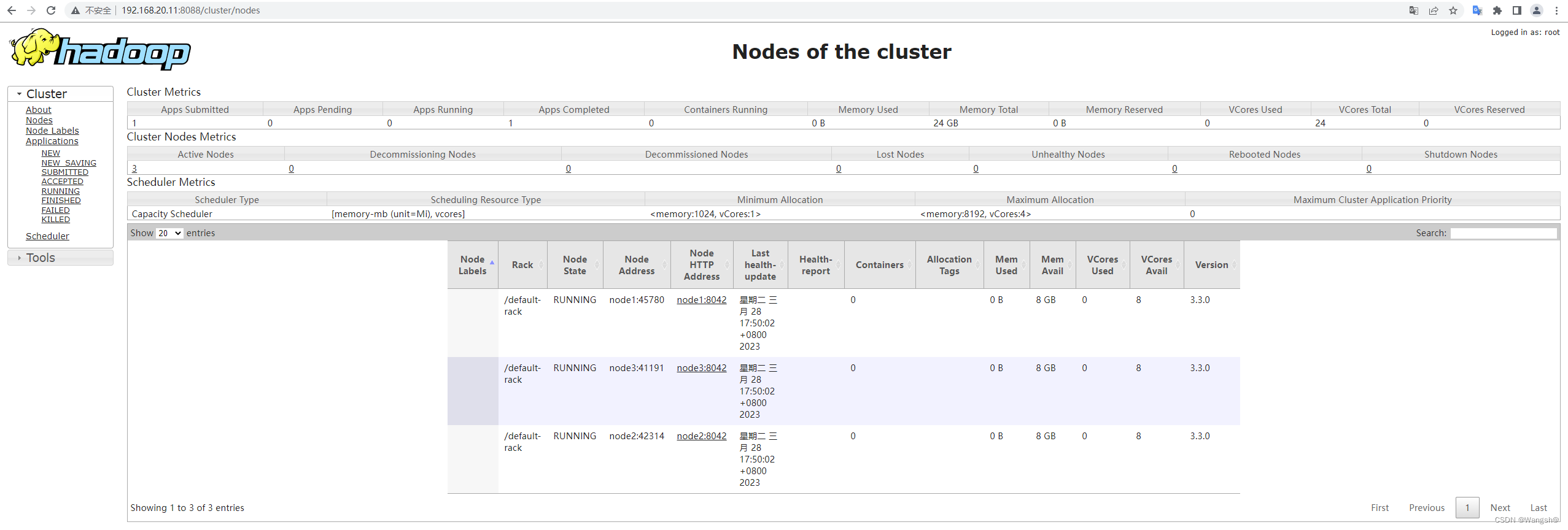

10. Access the Hadoop (YARN ResourceManager) web UI (node1:8088)
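Both web pages can also be probed from the command line before opening a browser. This is a rough sketch under some assumptions: `check_ui` is a hypothetical helper name, curl is installed, and the NameNode web UI is served from node1 (where the NameNode runs in this setup) on port 9870.

```shell
# check_ui (hypothetical helper): prints the final HTTP status code for a URL
# and succeeds only when it is 200. -L follows the redirect from "/" to the
# actual landing page; --max-time keeps an unreachable host from hanging.
check_ui() {
  local code
  code=$(curl -s -o /dev/null -L --max-time 5 -w '%{http_code}' "$1")
  echo "$1 -> $code"
  [ "$code" = "200" ]
}
```

Typical use would be `check_ui http://node1:9870` for HDFS and `check_ui http://node1:8088` for YARN. A status of 000 usually means the host or port could not be reached at all, for example because a firewall blocks the port.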

############################################################

11. Using some basic HDFS commands

- [hadoop@node1 local]$ hadoop fs -ls /

- [hadoop@node1 local]$ hadoop fs -mkdir /test

- [hadoop@node1 local]$ hadoop fs -ls /

- Found 1 items

- drwxr-xr-x - hadoop supergroup 0 2023-03-28 14:03 /test
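Beyond listing and creating directories, a quick write/read round trip confirms that the DataNodes actually accept data. The sketch below is an illustration rather than part of the original walkthrough: `hdfs_roundtrip` and the file name `hello.txt` are made up for the example, while `-put -f`, `-cat`, and `-rm` are standard `hadoop fs` subcommands.

```shell
# hdfs_roundtrip (hypothetical helper): upload a small local file into an
# HDFS directory, print it back, then delete it again.
hdfs_roundtrip() {
  local dir=${1:-/test} tmp rc
  tmp=$(mktemp) || return 1
  echo "hello hdfs" > "$tmp"
  # -f overwrites the target if it is left over from an earlier run.
  hadoop fs -put -f "$tmp" "$dir/hello.txt" &&
    hadoop fs -cat "$dir/hello.txt" &&
    hadoop fs -rm "$dir/hello.txt"
  rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

Run as the hadoop user on node1, `hdfs_roundtrip /test` should print the line `hello hdfs` followed by the deletion message from `hadoop fs -rm`.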

############################################################

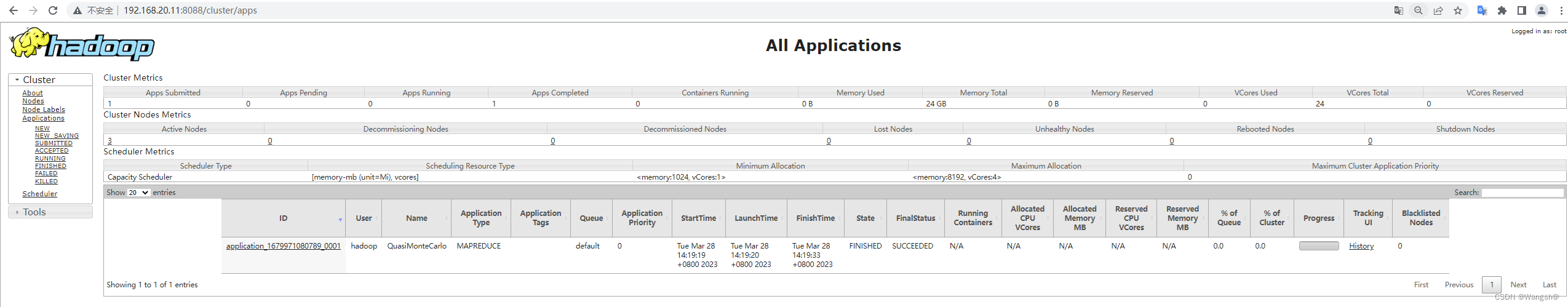

12. Run a MapReduce example

cd /usr/local/hadoop/share/hadoop/mapreduce

- [hadoop@node1 mapreduce]$ hadoop jar hadoop-mapreduce-examples-3.3.0.jar pi 2 4

- Number of Maps = 2

- Samples per Map = 4

- Wrote input for Map #0

- Wrote input for Map #1

- Starting Job

- 2023-03-28 14:19:18,805 INFO client.DefaultNoHARMFailoverProxyProvider: Connecting to ResourceManager at node1/192.168.20.11:8032

- 2023-03-28 14:19:19,106 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/hadoop/.staging/job_1679971080789_0001

- 2023-03-28 14:19:19,204 INFO input.FileInputFormat: Total input files to process : 2

- 2023-03-28 14:19:19,256 INFO mapreduce.JobSubmitter: number of splits:2

- 2023-03-28 14:19:19,384 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1679971080789_0001

- 2023-03-28 14:19:19,384 INFO mapreduce.JobSubmitter: Executing with tokens: []

- 2023-03-28 14:19:19,507 INFO conf.Configuration: resource-types.xml not found

- 2023-03-28 14:19:19,507 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

- 2023-03-28 14:19:19,900 INFO impl.YarnClientImpl: Submitted application application_1679971080789_0001

- 2023-03-28 14:19:19,930 INFO mapreduce.Job: The url to track the job: http://node1:8088/proxy/application_1679971080789_0001/

- 2023-03-28 14:19:19,931 INFO mapreduce.Job: Running job: job_1679971080789_0001

- 2023-03-28 14:19:25,018 INFO mapreduce.Job: Job job_1679971080789_0001 running in uber mode : false

- 2023-03-28 14:19:25,026 INFO mapreduce.Job: map 0% reduce 0%

- 2023-03-28 14:19:30,108 INFO mapreduce.Job: map 100% reduce 0%

- 2023-03-28 14:19:35,156 INFO mapreduce.Job: map 100% reduce 100%

- 2023-03-28 14:19:35,174 INFO mapreduce.Job: Job job_1679971080789_0001 completed successfully

- 2023-03-28 14:19:35,237 INFO mapreduce.Job: Counters: 54

- File System Counters

- FILE: Number of bytes read=50

- FILE: Number of bytes written=795021

- FILE: Number of read operations=0

- FILE: Number of large read operations=0

- FILE: Number of write operations=0

- HDFS: Number of bytes read=524

- HDFS: Number of bytes written=215

- HDFS: Number of read operations=13

- HDFS: Number of large read operations=0

- HDFS: Number of write operations=3

- HDFS: Number of bytes read erasure-coded=0

- Job Counters

- Launched map tasks=2

- Launched reduce tasks=1

- Data-local map tasks=2

- Total time spent by all maps in occupied slots (ms)=6047

- Total time spent by all reduces in occupied slots (ms)=1620

- Total time spent by all map tasks (ms)=6047

- Total time spent by all reduce tasks (ms)=1620

- Total vcore-milliseconds taken by all map tasks=6047

- Total vcore-milliseconds taken by all reduce tasks=1620

- Total megabyte-milliseconds taken by all map tasks=6192128

- Total megabyte-milliseconds taken by all reduce tasks=1658880

- Map-Reduce Framework

- Map input records=2

- Map output records=4

- Map output bytes=36

- Map output materialized bytes=56

- Input split bytes=288

- Combine input records=0

- Combine output records=0

- Reduce input groups=2

- Reduce shuffle bytes=56

- Reduce input records=4

- Reduce output records=0

- Spilled Records=8

- Shuffled Maps =2

- Failed Shuffles=0

- Merged Map outputs=2

- GC time elapsed (ms)=145

- CPU time spent (ms)=1380

- Physical memory (bytes) snapshot=725311488

- Virtual memory (bytes) snapshot=8377520128

- Total committed heap usage (bytes)=516947968

- Peak Map Physical memory (bytes)=268955648

- Peak Map Virtual memory (bytes)=2790277120

- Peak Reduce Physical memory (bytes)=190787584

- Peak Reduce Virtual memory (bytes)=2798702592

- Shuffle Errors

- BAD_ID=0

- CONNECTION=0

- IO_ERROR=0

- WRONG_LENGTH=0

- WRONG_MAP=0

- WRONG_REDUCE=0

- File Input Format Counters

- Bytes Read=236

- File Output Format Counters

- Bytes Written=97

- Job Finished in 16.483 seconds

- Estimated value of Pi is 3.50000000000000000000

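When a MapReduce job is submitted, the client first connects to the ResourceManager, that is, the YARN cluster, and requests resources for the job.

A MapReduce job runs in two phases: the map phase first, then the reduce phase. In the progress lines above, map reaches 100% before reduce moves off 0%.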
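The two phases, and the coarse result of 3.5, can be reproduced locally without Hadoop. The sketch below is a simulation under stated assumptions, not the bundled example's source: as far as I know the `pi` example draws points from a Halton sequence (bases 2 and 3) and counts how many fall inside a circle inscribed in the unit square; the names `pi_map`, `pi_reduce`, and `estimate_pi` are invented here. Each map task emits an inside count and an outside count, `sort` stands in for the shuffle, and the reduce step sums per key and computes 4 × inside / total.

```shell
# Map phase (simulated): one call per map task. Emits two key/value records,
# "inside<TAB>count" and "outside<TAB>count", for its slice of sample points.
pi_map() {
  awk -v m="$1" -v n="$2" '
    # Radical inverse of i in base b: one coordinate of the i-th Halton point.
    function halton(i, b,    f, r) {
      f = 1; r = 0
      while (i > 0) { f /= b; r += f * (i % b); i = int(i / b) }
      return r
    }
    BEGIN {
      inside = 0; outside = 0
      for (s = 1; s <= n; s++) {
        i = m * n + s                 # map m handles points m*n+1 .. m*n+n
        x = halton(i, 2) - 0.5
        y = halton(i, 3) - 0.5
        if (x * x + y * y <= 0.25) inside++; else outside++
      }
      printf "inside\t%d\noutside\t%d\n", inside, outside
    }'
}

# Reduce phase (simulated): sum the counts per key, then estimate pi.
pi_reduce() {
  awk -F'\t' '
    { sum[$1] += $2 }
    END { printf "%.2f\n", 4 * sum["inside"] / (sum["inside"] + sum["outside"]) }'
}

# Driver: run all map tasks, shuffle with sort, then reduce.
estimate_pi() {
  maps=$1; samples=$2; m=0
  while [ "$m" -lt "$maps" ]; do
    pi_map "$m" "$samples"
    m=$((m + 1))
  done | sort | pi_reduce
}

estimate_pi 2 4   # prints 3.50
```

With 2 maps and 4 samples each there are only 8 points, 7 of which land inside the circle, so the estimate is 4 × 7/8 = 3.5. The two records per map task also line up with the `Map output records=4` and `Reduce input groups=2` counters in the job output. Larger arguments, such as `pi 10 1000` on the cluster, give a value much closer to 3.14.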

############################################################