
Setting up JDK, MySQL, Hadoop, Hive, Spark and Python (Anaconda3) on Windows 10


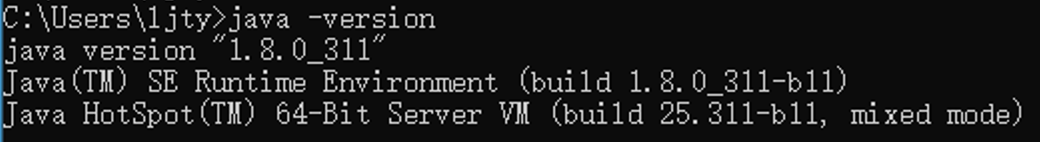

JDK 1.8 installation

1. Download JDK 1.8 (8u311)

https://download.oracle.com/otn/java/jdk/8u311-b11/4d5417147a92418ea8b615e228bb6935/jdk-8u311-windows-x64.exe?AuthParam=1642222421_a7b7e727e85cd60d6f8c68c6745c0033


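2. Install JDK 1.8

- Change the installation path

- Change the JRE installation path

- Installation succeeded

- Press Win + R, type cmd and press Enter, then run java -version to verify the installation

Note: Hadoop's command-line scripts run into a series of problems when a directory path contains spaces, so the Java installation path was changed to: D:\tools\Java\jdk1.8.0_311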
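Because paths with spaces cause problems, it is worth checking JAVA_HOME before you configure Hadoop. The following is a minimal sketch that uses only the Python standard library. Run it once Anaconda is installed (see the Python section at the end). The helper name is_hadoop_safe_path is hypothetical. It only checks for spaces, not for other characters that might also trip up the .cmd scripts.

```python
import os


def is_hadoop_safe_path(path: str) -> bool:
    """Return True if the path contains no spaces, which Hadoop's .cmd scripts handle badly."""
    return " " not in path


if __name__ == "__main__":
    java_home = os.environ.get("JAVA_HOME", "")
    if not java_home:
        print("JAVA_HOME is not set")
    elif is_hadoop_safe_path(java_home):
        print("JAVA_HOME looks usable:", java_home)
    else:
        print("JAVA_HOME contains spaces, consider reinstalling the JDK elsewhere:", java_home)
```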

phpstudy installation (provides MySQL)

1. Download phpstudy

https://www.xp.cn/download.html


2. Install phpstudy

3. Installation succeeded

4. Start MySQL
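Before Hive tries to use MySQL, you can confirm that the server started by phpstudy is actually listening. A plain TCP connection attempt is enough. This is a minimal sketch with a hypothetical function name. It assumes MySQL runs on 127.0.0.1 with the default port 3306, the same host and port as the Hive JDBC URL later on. A successful connection only shows that something accepts connections on that port, not that the credentials work.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections and timeouts
        return False


if __name__ == "__main__":
    # Assumption: phpstudy's MySQL uses the default port 3306.
    print("MySQL reachable:", port_is_open("127.0.0.1", 3306))
```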

Hadoop installation

1. Download Hadoop

https://hadoop.apache.org/releases.html

# Click "binary" to open the download page

https://www.apache.org/dyn/closer.cgi/hadoop/common/hadoop-3.2.2/hadoop-3.2.2.tar.gz


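2. Download winutils

Hadoop cannot run directly on Windows; it additionally needs winutils.

The Apache project does not provide winutils itself.

A third party hosts precompiled builds on GitHub:

https://github.com/cdarlint/winutils

After downloading, find the build that matches your Hadoop version and copy the contents of its folder into hadoop/bin.

If your Hadoop version is too new for a third-party build to exist, you have to compile winutils yourself, which is tedious and not recommended.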
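You can confirm the copy step by looking for the native files in hadoop/bin. This sketch assumes winutils.exe and hadoop.dll are the two files that matter most; both are included in the cdarlint builds. The helper name and the D:\tools\hadoop-3.2.2\bin path are illustrative.

```python
from pathlib import Path

# Assumption: the two native files Hadoop on Windows most commonly fails without.
REQUIRED_FILES = ("winutils.exe", "hadoop.dll")


def missing_native_files(hadoop_bin: str) -> list:
    """Return the entries of REQUIRED_FILES that are not present as files in hadoop_bin."""
    bin_dir = Path(hadoop_bin)
    return [name for name in REQUIRED_FILES if not (bin_dir / name).is_file()]


if __name__ == "__main__":
    missing = missing_native_files(r"D:\tools\hadoop-3.2.2\bin")
    print("missing:", missing or "none")
```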


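3. Configure Hadoop

3.1 Set JAVA_HOME and the other environment variables

Edit the file hadoop-3.2.2/etc/hadoop/hadoop-env.cmd (in a .cmd file, comment lines start with @rem, not #):

@rem Java installation directory

set JAVA_HOME=D:\tools\Java\jdk1.8.0_311

@rem Hadoop installation directory

set HADOOP_PREFIX=D:\tools\hadoop-3.2.2

@rem Hadoop configuration directory

set HADOOP_CONF_DIR=%HADOOP_PREFIX%\etc\hadoop

@rem YARN configuration directory

set YARN_CONF_DIR=%HADOOP_CONF_DIR%

@rem add Hadoop's bin directory to PATH

set PATH=%PATH%;%HADOOP_PREFIX%\bin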


3.2 Edit the configuration files

3.2.1 etc/hadoop/core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>
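All the *-site.xml files share the same configuration/property/name/value layout, so they can also be generated instead of edited by hand. The sketch below is only an illustration; the function site_xml is hypothetical and not part of Hadoop. It uses fs.defaultFS, the current name of the deprecated fs.default.name key. A side benefit of ElementTree is that it escapes characters such as & automatically.

```python
import xml.etree.ElementTree as ET


def site_xml(properties: dict) -> str:
    """Render a Hadoop *-site.xml <configuration> document from a name -> value mapping."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    if hasattr(ET, "indent"):  # ET.indent is available on Python 3.9+
        ET.indent(root, space="  ")
    # ElementTree escapes &, < and > in text content, so e.g. JDBC URLs stay well-formed.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(site_xml({"fs.defaultFS": "hdfs://localhost:9000"}))
```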


3.2.2 etc/hadoop/hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/D:/tools/hadoop-3.2.2/data/hdfs/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/D:/tools/hadoop-3.2.2/data/hdfs/datanode</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/D:/tools/hadoop-3.2.2/data/tmp</value>

</property>

<property>

<name>dfs.namenode.http-address</name>

<value>localhost:50070</value>

</property>

</configuration>


Change the paths to your own directories.
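The local directories above are written as /D:/tools/..., that is, the Windows path with forward slashes and a leading slash. If you move the data directory, a small helper can produce that form from a normal Windows path. This is a sketch based on the convention used in this article, and to_hadoop_local_path is a hypothetical name. pathlib.PureWindowsPath does the separator conversion, so the helper also runs on non-Windows machines.

```python
from pathlib import PureWindowsPath


def to_hadoop_local_path(windows_path: str) -> str:
    """Convert an absolute Windows path to the /D:/dir/sub form used in the *-site.xml files."""
    p = PureWindowsPath(windows_path)
    if not p.drive:
        raise ValueError(f"expected an absolute path with a drive letter: {windows_path!r}")
    return "/" + p.as_posix()


if __name__ == "__main__":
    for sub in ("hdfs\\namenode", "hdfs\\datanode", "tmp"):
        print(to_hadoop_local_path("D:\\tools\\hadoop-3.2.2\\data\\" + sub))
```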

3.2.3 etc/hadoop/mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>


3.2.4 etc/hadoop/yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<!-- total number of virtual CPU cores available to the NodeManager -->

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>1</value>

</property>

<property>

<!-- maximum memory (MB) available on each node -->

<name>yarn.nodemanager.resource.memory-mb</name>

<value>2048</value>

</property>

<property>

<!-- where intermediate results are stored -->

<name>yarn.nodemanager.local-dirs</name>

<value>/D:/tools/hadoop-3.2.2/data/tmp/nm-local-dir</value>

</property>

<property>

<name>yarn.nodemanager.log-dirs</name>

<value>/D:/tools/hadoop-3.2.2/data/logs/yarn</value>

</property>

</configuration>
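After editing yarn-site.xml (or any other *-site.xml), it helps to read the file back and confirm the values Hadoop will see, for example the 1 vcore and 2048 MB configured above. Here is a minimal sketch using the standard library. read_site_xml is a hypothetical helper. It skips XML comments and does not resolve ${...} variable references the way Hadoop does.

```python
import xml.etree.ElementTree as ET
from pathlib import Path


def read_site_xml(text: str) -> dict:
    """Parse a Hadoop *-site.xml document into a {name: value} dict (comments are ignored)."""
    root = ET.fromstring(text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


if __name__ == "__main__":
    conf_file = Path(r"D:\tools\hadoop-3.2.2\etc\hadoop\yarn-site.xml")
    if conf_file.exists():
        conf = read_site_xml(conf_file.read_text(encoding="utf-8"))
        print("memory-mb:", conf.get("yarn.nodemanager.resource.memory-mb"))
        print("cpu-vcores:", conf.get("yarn.nodemanager.resource.cpu-vcores"))
```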


4. Start the cluster

4.1 Format the NameNode

bin\hdfs.cmd namenode -format


4.2 Start the services

sbin\start-all.cmd
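start-all.cmd opens separate windows for the HDFS and YARN daemons. To check that all four came up, you can use the JDK's jps tool, which lists running Java processes as "<pid> <main class>". The sketch below assumes jps is on PATH (it lives in %JAVA_HOME%\bin). It also assumes the daemons appear under the names NameNode, DataNode, ResourceManager and NodeManager. The function names are hypothetical.

```python
import subprocess

# Assumption: the main-class names jps reports for the four daemons of this setup.
EXPECTED_DAEMONS = {"NameNode", "DataNode", "ResourceManager", "NodeManager"}


def running_java_processes(jps_output: str) -> set:
    """Extract main-class names from jps output lines of the form '<pid> <name>'."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2:
            names.add(parts[1])
    return names


def missing_daemons(jps_output: str) -> set:
    """Return the expected daemons that do not appear in the jps output."""
    return EXPECTED_DAEMONS - running_java_processes(jps_output)


if __name__ == "__main__":
    try:
        out = subprocess.run(["jps"], capture_output=True, text=True, check=True).stdout
    except (OSError, subprocess.CalledProcessError) as err:
        print("could not run jps:", err)
    else:
        print("missing daemons:", sorted(missing_daemons(out)) or "none")
```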


4.3 Troubleshooting

ERROR util.SysInfoWindows: ExitCodeException exitCode=-1073741515

Fix: install VC_redist.x64.exe (the Microsoft Visual C++ Redistributable for x64)

Link: https://pan.baidu.com/s/1HmTl4evxjCAsl_w163StZA?pwd=zifq

Extraction code: zifq
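The exit code -1073741515 is a signed 32-bit Windows status value. Read as unsigned, it is 0xC0000135, which is STATUS_DLL_NOT_FOUND. In other words, a DLL that winutils.exe needs could not be loaded, which fits the fix of installing the Visual C++ runtime. The conversion is a single mask, shown here in Python (the function name is hypothetical):

```python
def as_ntstatus(exit_code: int) -> str:
    """Reinterpret a signed 32-bit Windows exit code as an unsigned hex status value."""
    return f"0x{exit_code & 0xFFFFFFFF:08X}"


print(as_ntstatus(-1073741515))  # prints 0xC0000135 (STATUS_DLL_NOT_FOUND)
```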

5. Configure environment variables
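The screenshot for this step has not survived. A typical setup is assumed here, matching the paths used in this article: HADOOP_HOME=D:\tools\hadoop-3.2.2, with %HADOOP_HOME%\bin and %HADOOP_HOME%\sbin appended to PATH, alongside the existing JAVA_HOME. The sketch below only reports which of the listed variables are unset when run from a new shell. The variable list and the function name are assumptions.

```python
import os
from typing import Mapping, Optional, Sequence

# Assumption: variables this guide relies on; HIVE_HOME / SPARK_HOME can be added for later sections.
REQUIRED_VARS = ("JAVA_HOME", "HADOOP_HOME")


def missing_env_vars(env: Optional[Mapping[str, str]] = None,
                     required: Sequence[str] = REQUIRED_VARS) -> list:
    """Return the names in `required` that are unset or empty in `env` (default: os.environ)."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]


if __name__ == "__main__":
    print("missing:", missing_env_vars() or "none")
```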

Hive installation

1. Download Hive

http://archive.apache.org/dist/hive/hive-3.1.2/apache-hive-3.1.2-bin.tar.gz


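2. Configure environment variables

Note: the apache-hive-x.x.x-bin.tar.gz distribution of Hive lacks the Windows executables and launch scripts needed to run Hive on Windows.

Workaround: download an older Hive release (apache-hive-1.0.0-src).

Download: http://archive.apache.org/dist/hive/hive-1.0.0/apache-hive-1.0.0-src.tar.gz

Use its bin directory to replace the original bin directory of the target installation (D:\tools\apache-hive-3.1.2-bin).

3. The bin directory structure after overwriting

4. Download the MySQL driver

Download: https://cdn.mysql.com//archives/mysql-connector-java-8.0/mysql-connector-java-8.0.21.zip

Copy mysql-connector-java-8.0.21.jar into the $HIVE_HOME/lib directory.

5. Configure Hive

5.1 Copy conf/hive-default.xml.template to a new file: hive-site.xml

The changed properties are: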

<!-- Hive scratch (temporary data) directory, located on HDFS -->

<property>

<name>hive.exec.scratchdir</name>

<value>/tmp/hive</value>

<description>HDFS root scratch dir for Hive jobs which gets created with write all (733) permission. For each connecting user, an HDFS scratch dir: ${hive.exec.scratchdir}/&lt;username&gt; is created, with ${hive.scratch.dir.permission}.</description>

</property>

<!-- local scratch directory -->

<property>

<name>hive.exec.local.scratchdir</name>

<value>D:/tools/apache-hive-3.1.2-bin/data/scratch_dir</value>

<description>Local scratch space for Hive jobs</description>

</property>

<!-- local directory for downloaded resources -->

<property>

<name>hive.downloaded.resources.dir</name>

<value>D:/tools/apache-hive-3.1.2-bin/data/resources_dir/${hive.session.id}_resources</value>

<description>Temporary local directory for added resources in the remote file system.</description>

</property>

<!-- Hive warehouse directory, located on HDFS -->

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

<description>location of default database for the warehouse</description>

</property>

<!-- metastore database password -->

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>root</value>

<description>password to use against metastore database</description>

</property>

<!-- metastore database connection URL -->

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://127.0.0.1:3306/hive?serverTimezone=UTC&amp;useSSL=false&amp;allowPublicKeyRetrieval=true</value>

<description>

JDBC connect string for a JDBC metastore.

To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.

For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.

</description>

</property>

<!-- create all schema objects automatically -->

<!-- fixes the error: Required table missing : "DBS" in Catalog "" Schema "" -->

<property>

<name>datanucleus.schema.autoCreateAll</name>

<value>true</value>

<description>Auto creates necessary schema on a startup if one doesn't exist. Set this to false, after creating it once.To enable auto create also set hive.metastore.schema.verification=false. Auto creation is not recommended for production use cases, run schematool command instead.</description>

</property>

<!-- fixes: Caused by: MetaException(message:Version information not found in metastore. ) -->

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

<description>

Enforce metastore schema version consistency.

True: Verify that version information stored in is compatible with one from Hive jars. Also disable automatic

schema migration attempt. Users are required to manually migrate schema after Hive upgrade which ensures

proper metastore schema migration. (Default)

False: Warn if the version information stored in metastore doesn't match with one from in Hive jars.

</description>

</property>

<!-- metastore JDBC driver class -->

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.cj.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<!-- metastore database user name -->

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>Username to use against metastore database</description>

</property>

<!-- local directory for operation logs -->

<property>

<name>hive.server2.logging.operation.log.location</name>

<value>D:/tools/apache-hive-3.1.2-bin/data/operation_logs</value>

<description>Top level directory where operation logs are stored if logging functionality is enabled</description>

</property>


5.2 Copy conf/hive-env.sh.template to a new file: hive-env.sh

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# Set Hive and Hadoop environment variables here. These variables can be used

# to control the execution of Hive. It should be used by admins to configure

# the Hive installation (so that users do not have to set environment variables

# or set command line parameters to get correct behavior).

#

# The hive service being invoked (CLI etc.) is available via the environment

# variable SERVICE

# Hive Client memory usage can be an issue if a large number of clients

# are running at the same time. The flags below have been useful in

# reducing memory usage:

#

# if [ "$SERVICE" = "cli" ]; then

# if [ -z "$DEBUG" ]; then

# export HADOOP_OPTS="$HADOOP_OPTS -XX:NewRatio=12 -Xms10m -XX:MaxHeapFreeRatio=40 -XX:MinHeapFreeRatio=15 -XX:+UseParNewGC -XX:-UseGCOverheadLimit"

# else

# export HADOOP_OPTS="$HADOOP_OPTS -XX:NewRatio=12 -Xms10m -XX:MaxHeapFreeRatio=40 -XX:MinHeapFreeRatio=15 -XX:-UseGCOverheadLimit"

# fi

# fi

# The heap size of the jvm stared by hive shell script can be controlled via:

#

# export HADOOP_HEAPSIZE=1024

#

# Larger heap size may be required when running queries over large number of files or partitions.

# By default hive shell scripts use a heap size of 256 (MB). Larger heap size would also be

# appropriate for hive server.

# Set HADOOP_HOME to point to a specific hadoop install directory

# HADOOP_HOME=${bin}/../../hadoop

export HADOOP_HOME=D:\tools\hadoop-3.2.2

# Hive Configuration Directory can be controlled by:

export HIVE_CONF_DIR=D:\tools\apache-hive-3.1.2-bin\conf

# Folder containing extra libraries required for hive compilation/execution can be controlled by:

export HIVE_AUX_JARS_PATH=D:\tools\apache-hive-3.1.2-bin\lib


5.3 Copy conf/hive-exec-log4j2.properties.template to a new file: hive-exec-log4j2.properties

5.4 Copy conf/hive-log4j2.properties.template to a new file: hive-log4j2.properties

Change the log level to ERROR:

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

status = ERROR

name = HiveLog4j2

packages = org.apache.hadoop.hive.ql.log

# list of properties

property.hive.log.level = ERROR

# must name an appender defined below (console or DRFA), not a log level

property.hive.root.logger = DRFA

property.hive.log.dir = ${sys:java.io.tmpdir}/${sys:user.name}

property.hive.log.file = hive.log

property.hive.perflogger.log.level = ERROR

# list of all appenders

appenders = console, DRFA

# console appender

appender.console.type = Console

appender.console.name = console

appender.console.target = SYSTEM_ERR

appender.console.layout.type = PatternLayout

appender.console.layout.pattern = %d{ISO8601} %5p [%t] %c{2}: %m%n

# daily rolling file appender

appender.DRFA.type = RollingRandomAccessFile

appender.DRFA.name = DRFA

appender.DRFA.fileName = ${sys:hive.log.dir}/${sys:hive.log.file}

# Use %pid in the filePattern to append <process-id>@<host-name> to the filename if you want separate log files for different CLI session

appender.DRFA.filePattern = ${sys:hive.log.dir}/${sys:hive.log.file}.%d{yyyy-MM-dd}

appender.DRFA.layout.type = PatternLayout

appender.DRFA.layout.pattern = %d{ISO8601} %5p [%t] %c{2}: %m%n

appender.DRFA.policies.type = Policies

appender.DRFA.policies.time.type = TimeBasedTriggeringPolicy

appender.DRFA.policies.time.interval = 1

appender.DRFA.policies.time.modulate = true

appender.DRFA.strategy.type = DefaultRolloverStrategy

appender.DRFA.strategy.max = 30

# list of all loggers

loggers = NIOServerCnxn, ClientCnxnSocketNIO, DataNucleus, Datastore, JPOX, PerfLogger, AmazonAws, ApacheHttp

logger.NIOServerCnxn.name = org.apache.zookeeper.server.NIOServerCnxn

logger.NIOServerCnxn.level = WARN

logger.ClientCnxnSocketNIO.name = org.apache.zookeeper.ClientCnxnSocketNIO

logger.ClientCnxnSocketNIO.level = WARN

logger.DataNucleus.name = DataNucleus

logger.DataNucleus.level = ERROR

logger.Datastore.name = Datastore

logger.Datastore.level = ERROR

logger.JPOX.name = JPOX

logger.JPOX.level = ERROR

logger.AmazonAws.name=com.amazonaws

logger.AmazonAws.level = ERROR

logger.ApacheHttp.name=org.apache.http

logger.ApacheHttp.level = ERROR

logger.PerfLogger.name = org.apache.hadoop.hive.ql.log.PerfLogger

logger.PerfLogger.level = ${sys:hive.perflogger.log.level}

# root logger

rootLogger.level = ${sys:hive.log.level}

rootLogger.appenderRefs = root

rootLogger.appenderRef.root.ref = ${sys:hive.root.logger}


6. Create the HDFS directories on Hadoop

hadoop fs -mkdir /tmp

hadoop fs -mkdir /user/

hadoop fs -mkdir /user/hive/

hadoop fs -mkdir /user/hive/warehouse

hadoop fs -chmod g+w /tmp

hadoop fs -chmod g+w /user/hive/warehouse


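7. Create the hive database in MySQL

8. Start the Hive services

8.1 Initialize the Hive metastore database

The schema script is located in $HIVE_HOME\scripts\metastore\upgrade\mysql:

hive-schema-3.1.0.mysql.sql

Execute the contents of this file in the hive database.

8.2 Start the Hive metastore service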

hive --service metastore


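Error: ERROR conf.Configuration: error parsing conf file:/D:/tools/apache-hive-3.1.2-bin/conf/hive-site.xml

com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8

Cause: hive-site.xml is not well-formed XML. A file copied from hive-default.xml.template contains the invalid character reference &#8; (in the description of hive.txn.xlock.iow); delete it. Any bare & inside a value, such as the JDBC URL, must also be written as &amp;.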
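A hive-site.xml copied from the template can be ill-formed XML. Causes include a character reference such as &#8; (the "code 0x8" in the message above) or an unescaped & in a value. A file of several thousand lines is hard to inspect by eye, but Python's built-in XML parser reports the line and column of the first well-formedness error. The sketch below assumes the Hive path used in this article, and first_xml_error is a hypothetical name. The parser here is expat rather than the Woodstox parser that Hadoop uses, so the wording differs, but any error it finds is a real one.

```python
import xml.etree.ElementTree as ET
from pathlib import Path


def first_xml_error(text: str):
    """Return None if `text` is well-formed XML, otherwise (line, column) of the first parse error."""
    try:
        ET.fromstring(text)
    except ET.ParseError as err:
        return err.position
    return None


if __name__ == "__main__":
    site = Path(r"D:\tools\apache-hive-3.1.2-bin\conf\hive-site.xml")
    if site.exists():
        print("first error at (line, column):", first_xml_error(site.read_text(encoding="utf-8")))
```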

8.3 Hive commands

9. Test Hive

9.1 Create a database

create database test;

show databases;


9.2 Create a table

use test;

create table stu(id int, name string);

show tables;

desc stu;


9.3 Browse the result in the HDFS web UI

http://localhost:50070/explorer.html#/user/hive/warehouse/test.db
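The same listing is available programmatically through WebHDFS, the REST API served on the NameNode's HTTP port (localhost:50070 here, as configured in hdfs-site.xml). This is a sketch that assumes WebHDFS is enabled, which is the default in Hadoop 3; the helper names are hypothetical. LISTSTATUS returns a JSON object whose FileStatuses.FileStatus array holds one entry per child, with the child's name in pathSuffix.

```python
import json
from urllib.parse import quote
from urllib.request import urlopen

# Assumption: the NameNode HTTP address configured in hdfs-site.xml above.
NAMENODE_HTTP = "http://localhost:50070"


def liststatus_url(hdfs_path: str, base: str = NAMENODE_HTTP) -> str:
    """Build a WebHDFS LISTSTATUS URL for an absolute HDFS path."""
    return f"{base}/webhdfs/v1{quote(hdfs_path)}?op=LISTSTATUS"


def entry_names(liststatus_json: str) -> list:
    """Extract child names from a WebHDFS LISTSTATUS JSON response."""
    statuses = json.loads(liststatus_json)["FileStatuses"]["FileStatus"]
    return [s["pathSuffix"] for s in statuses]


if __name__ == "__main__":
    try:
        with urlopen(liststatus_url("/user/hive/warehouse"), timeout=5) as resp:
            print(entry_names(resp.read().decode("utf-8")))
    except OSError as err:  # URLError is a subclass of OSError
        print("NameNode not reachable:", err)
```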

Spark installation

1. Download Spark

https://spark.apache.org/downloads.html

https://dlcdn.apache.org/spark/spark-3.2.0/spark-3.2.0-bin-hadoop3.2.tgz


2. Configure environment variables

3. Run Spark

pyspark


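4. Open the Spark web UI (it is only available while a Spark application, such as the pyspark shell started above, is running)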

http://localhost:4040/

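5. Access Spark from Python

Python distribution used: Anaconda

To make pyspark importable, copy the pyspark folder under D:\tools\spark-3.2.0-bin-hadoop3.2\python into D:\ProgramData\Anaconda3\Lib\site-packages

Install py4j: pip install py4j (ideally the same version as the py4j-*-src.zip bundled in D:\tools\spark-3.2.0-bin-hadoop3.2\python\lib)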
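Instead of copying the pyspark folder into site-packages, you can put the Spark distribution's own Python sources on sys.path at runtime. This is similar in spirit to what the third-party findspark package does. The sketch below assumes the usual layout of a Spark binary distribution: python/ plus a bundled python/lib/py4j-<version>-src.zip. Using the bundled zip also avoids a version mismatch between a pip-installed py4j and the one Spark was built against. The helper name is hypothetical.

```python
import sys
from pathlib import Path


def spark_python_paths(spark_home: str) -> list:
    """Return the directory and py4j archive(s) PySpark needs on sys.path for a given SPARK_HOME."""
    python_dir = Path(spark_home) / "python"
    # Spark binary distributions bundle py4j as python/lib/py4j-<version>-src.zip.
    py4j_zips = sorted((python_dir / "lib").glob("py4j-*-src.zip"))
    return [str(python_dir)] + [str(z) for z in py4j_zips]


if __name__ == "__main__":
    # Run this before `from pyspark import SparkContext`.
    for entry in spark_python_paths(r"D:\tools\spark-3.2.0-bin-hadoop3.2"):
        if entry not in sys.path:
            sys.path.insert(0, entry)
```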

6. Test

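from pyspark import SparkContext

# run locally with one worker thread per CPU core; "spark-version-test" is just an application name
sc = SparkContext("local[*]", "spark-version-test")

print('Spark Version', sc.version)

sc.stop()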


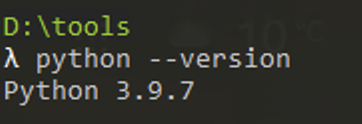

Python environment installation

1. Download Python (Anaconda)

https://www.anaconda.com/products/individual

https://repo.anaconda.com/archive/Anaconda3-2021.11-Windows-x86_64.exe


2. Install Python

3. Install Python packages

# [ linting / syntax checking ]

pip install flake8

# [ code formatting ]

pip install yapf

# [ web-scraping framework ]

pip install scrapy

# [ MySQL client ]

pip install pymysql

# [ Python-Java bridge used by PySpark ]

pip install py4j
