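SpringCloud Microservices in Practice: Building an Enterprise Development Framework (35): Packaging and Deploying a Microservice Cluster with SpringCloud + Docker + k8s (Cluster Environment Deployment)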


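I. Cluster Environment Planning and Configuration

Do not run one master with multiple nodes in production; use multiple masters and multiple nodes. Three hosts are used here for testing: one Master (172.16.20.111) and two Nodes (172.16.20.112 and 172.16.20.113).

1. Set the hostname

After CentOS 7 is installed, set a static IP. Do the same on all three hosts.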

vi /etc/sysconfig/network-scripts/ifcfg-ens33

# At the bottom, change ONBOOT to yes and add a static IPADDR (172.16.20.111, 172.16.20.112 or 172.16.20.113, one per host)

ONBOOT=yes

IPADDR=172.16.20.111

Once the IPs of the three hosts are set, set each hostname and then edit the hosts file.

# Run on the master machine

hostnamectl set-hostname master

# Run on the node1 machine

hostnamectl set-hostname node1

# Run on the node2 machine

hostnamectl set-hostname node2


vi /etc/hosts

172.16.20.111 master

172.16.20.112 node1

172.16.20.113 node2
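Since the same three entries have to be added on every host, the edit can also be scripted. The following is a minimal sketch, not part of the original setup: `add_host_entry` is a hypothetical helper that appends an entry only when no line in the file already ends with that hostname, so it is safe to run more than once.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME  (hypothetical helper, for illustration only)
# Appends "IP NAME" to FILE unless a line whose last field is NAME already exists.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # Match NAME only as the last whitespace-separated field of a line.
  if ! grep -Eq "[[:space:]]${name}[[:space:]]*$" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Example usage on a real host (run as root):
#   add_host_entry /etc/hosts 172.16.20.111 master
#   add_host_entry /etc/hosts 172.16.20.112 node1
#   add_host_entry /etc/hosts 172.16.20.113 node2
```

The check only looks at the last field of each line, so a hostname that appears as a middle alias would not be detected; for the simple three-line layout above that is enough.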

2. Time synchronization

Start the chronyd service:

systemctl start chronyd

Enable it at boot:

systemctl enable chronyd

Verify:

date

3. Disable firewalld and iptables (test environment only)

systemctl stop firewalld

systemctl disable firewalld

systemctl stop iptables

systemctl disable iptables

4. Disable SELinux

vi /etc/selinux/config

SELINUX=disabled

5. Disable the swap partition

Comment out the /dev/mapper/centos-swap swap line:

vi /etc/fstab

# Comment out this line

# /dev/mapper/centos-swap swap
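The fstab edit can be done non-interactively. The sketch below assumes GNU sed (as shipped with CentOS 7); `comment_out_swap` is a hypothetical helper name, and the pattern simply comments out any active line that has `swap` as a whitespace-delimited field.

```shell
#!/usr/bin/env bash
# comment_out_swap FILE  (hypothetical helper, assumes GNU sed)
# Prefixes every uncommented line that has "swap" as a field with "#".
comment_out_swap() {
  local file="$1"
  # Lines already starting with "#" are skipped, so repeated runs are harmless.
  sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$file"
}

# Example usage on a real host (run as root):
#   comment_out_swap /etc/fstab
#   swapoff -a    # turn swap off immediately, without waiting for the reboot
```

The fstab change only takes effect at the next boot, which is why `swapoff -a` is shown alongside it.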

6. Adjust the Linux kernel parameters

vi /etc/sysctl.d/kubernetes.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

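# Load the bridge netfilter module first; the net.bridge.* keys only exist once it is loaded

modprobe br_netfilter

# Reload the configuration (a bare "sysctl -p" reads only /etc/sysctl.conf, so name the file)

sysctl -p /etc/sysctl.d/kubernetes.conf

# Check that the bridge netfilter module is loaded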

lsmod | grep br_netfilter
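Before rebooting it can help to confirm that the configuration file really contains the three settings. This is a small sketch under the assumption that the file is the /etc/sysctl.d/kubernetes.conf written above; `missing_sysctl_keys` is a hypothetical helper that prints any required key not set to 1.

```shell
#!/usr/bin/env bash
# missing_sysctl_keys FILE  (hypothetical helper, for illustration only)
# Prints each required key that FILE does not set to 1; prints nothing if all are set.
missing_sysctl_keys() {
  local file="$1" key
  for key in net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables \
             net.ipv4.ip_forward; do
    # Optional spaces around "=" are accepted, as sysctl itself accepts them.
    grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$file" \
      || echo "$key"
  done
}

# Example usage:
#   missing_sysctl_keys /etc/sysctl.d/kubernetes.conf
```

This checks only the file. The live kernel value can be read with `sysctl net.ipv4.ip_forward` once the settings have been loaded.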

7. Configure ipvs

Install ipset and ipvsadm:

yum install ipset ipvsadm -y

Add the modules to load (run the whole block at once):

cat <<EOF > /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

Make the script executable:

chmod +x /etc/sysconfig/modules/ipvs.modules

Run the script:

/bin/bash /etc/sysconfig/modules/ipvs.modules

Check that the modules are loaded:

lsmod | grep -e ip_vs -e nf_conntrack_ipv4

After all of the above, reboot so that the settings take effect:

reboot

II. Docker Installation and Configuration

1. Install dependencies

Docker depends on a few system tools:

yum install -y yum-utils device-mapper-persistent-data lvm2

2. Add the package repository

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum clean all

yum makecache fast

3. Install docker-ce

# List the Docker versions available for installation

yum list docker-ce --showduplicates

# Install the version you need; to install the latest version instead, run: yum -y install docker-ce

yum install --setopt=obsoletes=0 docker-ce-19.03.13-3.el7 -y

4. Start the service

# Start the service with systemctl

systemctl start docker

# Enable start at boot with systemctl

systemctl enable docker

5. Check the installed version

With the service running, check the current version with docker version:

docker version

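6. Configure a registry mirror

Speed up image pulls by editing the daemon configuration file /etc/docker/daemon.json. If you use k8s, you must set "exec-opts": ["native.cgroupdriver=systemd"] here. The "insecure-registries": ["172.16.20.175"] entry allows pulling from our Harbor over plain HTTP.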

vi /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"registry-mirrors": ["https://eiov0s1n.mirror.aliyuncs.com"],

"insecure-registries" : ["172.16.20.175"]

}

sudo systemctl daemon-reload && sudo systemctl restart docker
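Because the kubelet is configured for the systemd cgroup driver later on, it is worth confirming after the restart that Docker picked up the same setting. The sketch below relies on `docker info --format '{{.CgroupDriver}}'`; the wrapper name `docker_uses_systemd_cgroup` is hypothetical.

```shell
#!/usr/bin/env bash
# docker_uses_systemd_cgroup  (hypothetical helper, for illustration only)
# Succeeds when the running Docker daemon reports "systemd" as its cgroup driver.
docker_uses_systemd_cgroup() {
  local driver
  # Return 2 if the daemon cannot be queried at all.
  driver="$(docker info --format '{{.CgroupDriver}}')" || return 2
  [ "$driver" = "systemd" ]
}

# Example usage:
#   docker_uses_systemd_cgroup && echo "cgroup driver OK" || echo "check /etc/docker/daemon.json"
```

If the check fails, a malformed daemon.json is a likely cause, since Docker will not start with an invalid file.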

7. Install docker-compose

If the network is too slow, download the matching release directly from https://github.com/docker/compose/releases and upload it to /usr/local/bin/ on the server.

sudo curl -L "https://github.com/docker/compose/releases/download/v2.0.1/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose


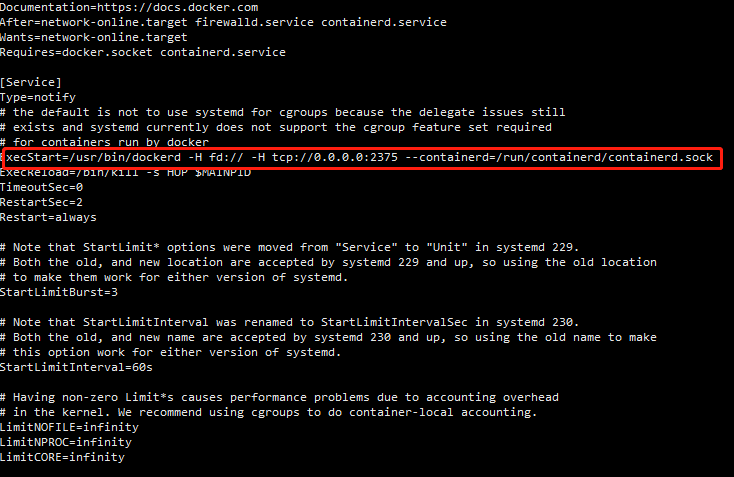

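Note (optional): enable Docker remote access. This is not required and must not be enabled in production, because port 2375 is exposed without TLS or authentication. Once enabled, a development machine can connect to Docker directly.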

vi /lib/systemd/system/docker.service

Edit ExecStart and add -H tcp://0.0.0.0:2375:

ExecStart=/usr/bin/dockerd -H fd:// -H tcp://0.0.0.0:2375 --containerd=/run/containerd/containerd.sock

After the change, run:

systemctl daemon-reload && service docker restart

Test whether the connection works:

curl http://localhost:2375/version

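III. Harbor Private Registry Installation and Configuration (use a separate server, 172.16.20.175; do not put it on the K8S master or node servers)

Docker must first be installed on this machine, following the steps above, before Harbor can be installed.

1. Choose a suitable version and download it from: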

https://github.com/goharbor/harbor/releases

2. Extract the archive

tar -zxf harbor-offline-installer-v2.2.4.tgz

3. Configure

cd harbor

mv harbor.yml.tmpl harbor.yml

vi harbor.yml

4. Change hostname to the current server address and comment out the https section.

......
# The IP address or hostname to access admin UI and registry service.
# DO NOT use localhost or 127.0.0.1, because Harbor needs to be accessed by external clients.
hostname: 172.16.20.175

# http related config
http:
  # port for http, default is 80. If https enabled, this port will redirect to https port
  port: 80

# https related config
#https:
  # https port for harbor, default is 443
  # port: 443
  # The path of cert and key files for nginx
  # certificate: /your/certificate/path
  # private_key: /your/private/key/path
......

5. Run the installer

mkdir -p /var/log/harbor/

./install.sh

6. Check that the installation succeeded

[root@localhost harbor]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

de1b702759e7 goharbor/harbor-jobservice:v2.2.4 "/harbor/entrypoint.…" 13 seconds ago Up 9 seconds (health: starting) harbor-jobservice

55b465d07157 goharbor/nginx-photon:v2.2.4 "nginx -g 'daemon of…" 13 seconds ago Up 9 seconds (health: starting) 0.0.0.0:80->8080/tcp, :::80->8080/tcp nginx

d52f5557fa73 goharbor/harbor-core:v2.2.4 "/harbor/entrypoint.…" 13 seconds ago Up 10 seconds (health: starting) harbor-core

4ba09aded494 goharbor/harbor-db:v2.2.4 "/docker-entrypoint.…" 13 seconds ago Up 11 seconds (health: starting) harbor-db

647f6f46e029 goharbor/harbor-portal:v2.2.4 "nginx -g 'daemon of…" 13 seconds ago Up 11 seconds (health: starting) harbor-portal

70251c4e234f goharbor/redis-photon:v2.2.4 "redis-server /etc/r…" 13 seconds ago Up 11 seconds (health: starting) redis

21a5c408afff goharbor/harbor-registryctl:v2.2.4 "/home/harbor/start.…" 13 seconds ago Up 11 seconds (health: starting) registryctl

b0937800f88b goharbor/registry-photon:v2.2.4 "/home/harbor/entryp…" 13 seconds ago Up 11 seconds (health: starting) registry

d899e377e02b goharbor/harbor-log:v2.2.4 "/bin/sh -c /usr/loc…" 13 seconds ago Up 12 seconds (health: starting) 127.0.0.1:1514->10514/tcp harbor-log

7. Start and stop Harbor

docker-compose down # stop

docker-compose up -d # start

8. Open the Harbor console at the hostname configured above, http://172.16.20.175 (default username/password: admin/Harbor12345).

III. Kubernetes Installation and Configuration

1. Switch the package source

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

2. Install kubeadm, kubelet and kubectl

yum install -y kubelet kubeadm kubectl

3. Configure the kubelet cgroup driver

vi /etc/sysconfig/kubelet


KUBELET_CGROUP_ARGS="--cgroup-driver=systemd"

KUBE_PROXY_MODE="ipvs"

4. Start kubelet and enable it at boot

systemctl start kubelet && systemctl enable kubelet

5. Initialize the k8s cluster (Master only)

Initialize:

kubeadm init --kubernetes-version=v1.22.3 \

--apiserver-advertise-address=172.16.20.111 \

--image-repository registry.aliyuncs.com/google_containers \

--service-cidr=10.20.0.0/16 --pod-network-cidr=10.222.0.0/16

Create the required files:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

6. Join the cluster (Node machines only)

On the Nodes (172.16.20.112 and 172.16.20.113), run the join command that was printed when the initialization in the previous step succeeded:

kubeadm join 172.16.20.111:6443 --token fgf380.einr7if1eb838mpe \

--discovery-token-ca-cert-hash sha256:fa5a6a2ff8996b09effbf599aac70505b49f35c5bca610d6b5511886383878f7

Check the cluster status on the Master:

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 2m54s v1.22.3

node1 NotReady <none> 68s v1.22.3

node2 NotReady <none> 30s v1.22.3

7. Install the network plugin (Master only)

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Image mirror: edit kube-flannel.yml and change quay.io/coreos/flannel:v0.15.0 to quay.mirrors.ustc.edu.cn/coreos/flannel:v0.15.0.

Install:

kubectl apply -f kube-flannel.yml

Check the cluster status again. After a short wait (about 1 to 2 minutes), every STATUS is Ready.

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 42m v1.22.3

node1 Ready <none> 40m v1.22.3

node2 Ready <none> 39m v1.22.3
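Instead of re-running the command by hand until every node is Ready, the output can be filtered. A minimal sketch: `not_ready_nodes` is a hypothetical helper that reads `kubectl get nodes --no-headers` output on stdin and prints the nodes whose STATUS column is not exactly `Ready`.

```shell
#!/usr/bin/env bash
# not_ready_nodes  (hypothetical helper, for illustration only)
# Reads "kubectl get nodes --no-headers" output on stdin and prints the names
# of nodes whose STATUS column (second field) is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Example usage on the Master:
#   kubectl get nodes --no-headers | not_ready_nodes
```

An empty result means all nodes are Ready. A combined status such as `Ready,SchedulingDisabled` is deliberately reported too, since such a node will not accept new Pods.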


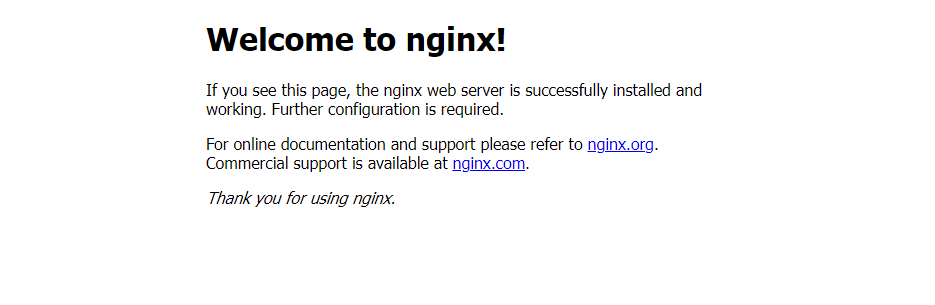

8. Test the cluster

Deploy an nginx service with kubectl:

kubectl create deployment nginx --image=nginx --replicas=1

kubectl expose deploy nginx --port=80 --target-port=80 --type=NodePort

Check the service:

[root@master ~]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-6799fc88d8-z5tm8 1/1 Running 0 26s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.20.0.1 <none> 443/TCP 68m

service/nginx NodePort 10.20.17.199 <none> 80:32605/TCP 9s

The PORT(S) column for service/nginx shows 80:32605/TCP, so open port 32605 on the master and node addresses in a browser to check that nginx is running:

http://172.16.20.111:32605/

http://172.16.20.112:32605/

http://172.16.20.113:32605/
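The NodePort is assigned at random when none is specified, so the number will differ from run to run. As a small sketch, the hypothetical helper `node_port` below extracts it from a PORT(S) value using plain shell parameter expansion.

```shell
#!/usr/bin/env bash
# node_port PORTS  (hypothetical helper, for illustration only)
# Extracts the NodePort from a PORT(S) value such as "80:32605/TCP".
node_port() {
  local ports="$1"
  ports="${ports#*:}"    # drop everything up to the first ":"
  echo "${ports%%/*}"    # drop the "/TCP" protocol suffix
}

# Example usage:
#   curl "http://172.16.20.111:$(node_port '80:32605/TCP')/"
```

kubectl can also return the value directly with `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'`, which avoids parsing the table output.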

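On success the nginx welcome page is displayed.

9. Install the Kubernetes Dashboard

Kubernetes can be operated entirely through the kubectl command-line tool, but it also provides a convenient management UI. With Kubernetes Dashboard, users can deploy containerized applications, monitor application status, troubleshoot, and manage Kubernetes resources.

1. Download the installation manifest recommended.yaml. Check https://github.com/kubernetes/dashboard/releases for the compatibility between Kubernetes and Kubernetes Dashboard versions.

# Download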

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml

2. Edit the configuration: under the Service, add type: NodePort and nodePort: 30010

vi recommended.yaml

......
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  # Added
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      # Added
      nodePort: 30010
......

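Comment out the following tolerations. As the comment in the file says, these tolerations are what allow the scheduler to place the Dashboard on the master; here the Pod is instead pinned to master with nodeName in the next step, which bypasses the scheduler.

# Comment the following tolerations if Dashboard must not be deployed on master
#tolerations:
#  - key: node-role.kubernetes.io/master
#    effect: NoSchedule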

Add nodeName: master so that the Dashboard is installed on the master server:

......
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      nodeName: master
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.4.0
          imagePullPolicy: Always
......

3. Run the deployment command

kubectl apply -f recommended.yaml

4. Check the running state. service/kubernetes-dashboard has been created and is exposed on port 30010:

[root@master ~]# kubectl get pod,svc -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

pod/dashboard-metrics-scraper-c45b7869d-6k87n 0/1 ContainerCreating 0 10s

pod/kubernetes-dashboard-576cb95f94-zfvc9 0/1 ContainerCreating 0 10s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/dashboard-metrics-scraper ClusterIP 10.20.222.83 <none> 8000/TCP 10s

service/kubernetes-dashboard NodePort 10.20.201.182 <none> 443:30010/TCP 10s

5. Create an account for accessing Kubernetes Dashboard

kubectl create serviceaccount dashboard-admin -n kubernetes-dashboard

kubectl create clusterrolebinding dashboard-admin-rb --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:dashboard-admin

6. Look up the token for accessing Kubernetes Dashboard

[root@master ~]# kubectl get secrets -n kubernetes-dashboard | grep dashboard-admin

dashboard-admin-token-84gg6 kubernetes.io/service-account-token 3 64s

[root@master ~]# kubectl describe secrets dashboard-admin-token-84gg6 -n kubernetes-dashboard

Name: dashboard-admin-token-84gg6

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 2d93a589-6b0b-4ed6-adc3-9a2eeb5d1311

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1099 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6ImRmbVVfRy15QzdfUUF4ZmFuREZMc3dvd0IxQ3ItZm5SdHVZRVhXV3JpZGcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tODRnZzYiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMmQ5M2E1ODktNmIwYi00ZWQ2LWFkYzMtOWEyZWViNWQxMzExIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmRhc2hib2FyZC1hZG1pbiJ9.xsDBLeZdn7IO0Btpb4LlCD1RQ2VYsXXPa-bir91VXIqRrL1BewYAyFfZtxU-8peU8KebaJiRIaUeF813x6WbGG9QKynL1fTARN5XoH-arkBTVlcjHQ5GBziLDE-KU255veVqORF7J5XtB38Ke2n2pi8tnnUUS_bIJpMTF1s-hV0aLlqUzt3PauPmDshtoerz4iafWK0u9oWBASQDPPoE8IWYU1KmSkUNtoGzf0c9vpdlUw4j0UZE4-zSoMF_XkrfQDLD32LrG56Wgpr6E8SeipKRfgXvx7ExD54b8Lq9DyAltr_nQVvRicIEiQGdbeCu9dwzGyhg-cDucULTx7TUgA

7. Open Kubernetes Dashboard in a browser, making sure to use https: https://172.16.20.111:30010 . After logging in with the token you reach the management console, where the operations previously done on the command line can be performed.

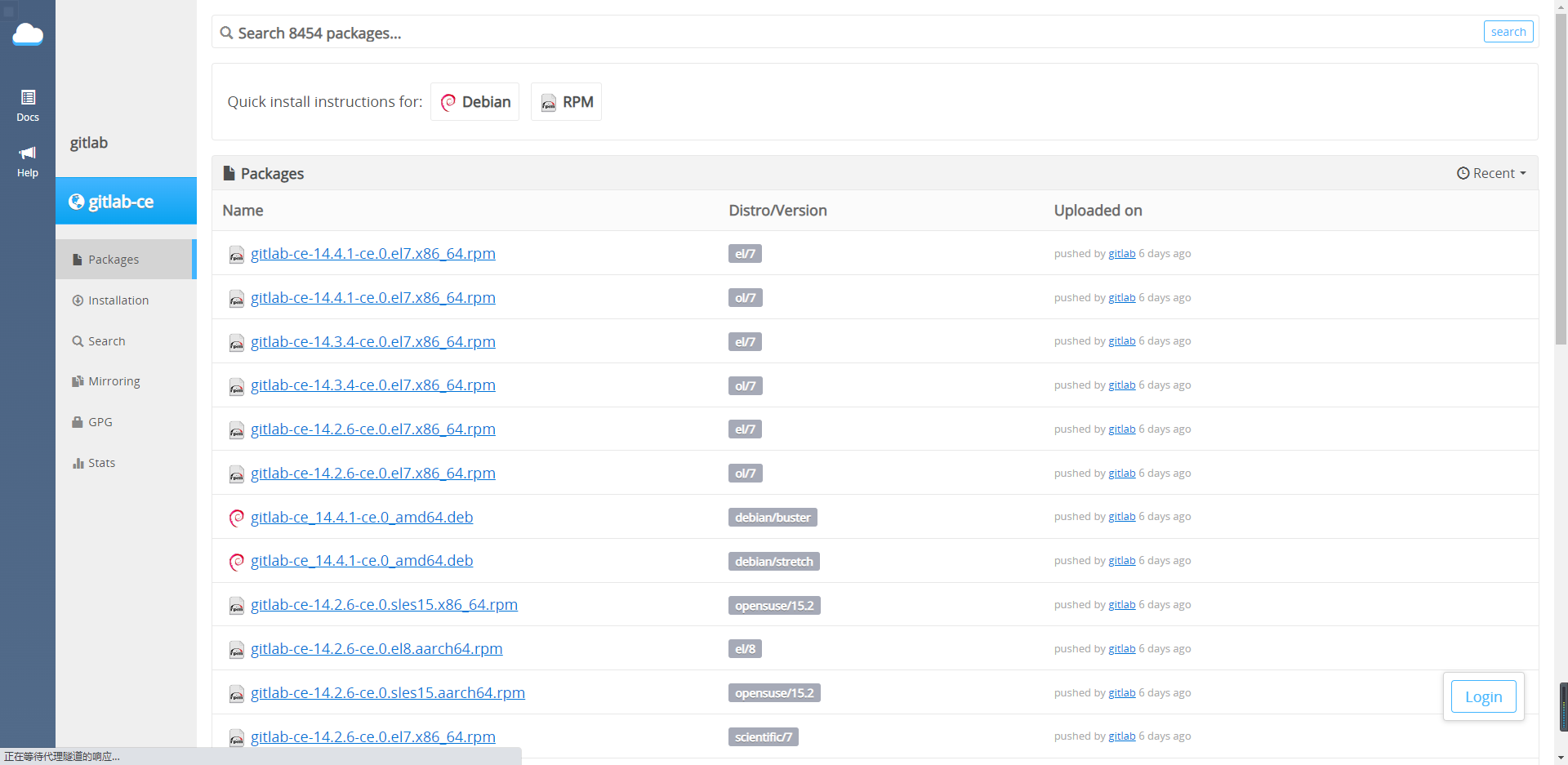

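IV. GitLab Installation and Configuration

GitLab is a Git repository manager that can be deployed on your own infrastructure. This section covers installation and basic use: during development we push code to this local repository, and Jenkins pulls from it to build and deploy.

1. Download the package from https://packages.gitlab.com/gitlab/gitlab-ce/ . Here we use gitlab-ce-14.4.1-ce.0.el7.x86_64.rpm, the latest version at the time of writing; for real projects, choose a stable version that fits your needs.

2. Click the version you want to install; the page shows the install commands. Follow them: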

curl -s https://packages.gitlab.com/install/repositories/gitlab/gitlab-ce/script.rpm.sh | sudo bash

sudo yum install gitlab-ce-14.4.1-ce.0.el7.x86_64

3. Configure and start GitLab

gitlab-ctl reconfigure

4. Check GitLab status

gitlab-ctl status

5. Set the initial login password

cd /opt/gitlab/bin

sudo ./gitlab-rails console

# Once inside the console, run:

u=User.where(id:1).first

u.password='root1234'

u.password_confirmation='root1234'

u.save!

quit

5. Open the server address in a browser. The default port is 80, so no port needs to be specified. On the login page, enter the account set above: root/root1234.

6. Switch the UI language to Chinese

User Settings ----> Preferences ----> Language ----> 简体中文 ----> refresh the page

7. Common GitLab commands

gitlab-ctl stop

gitlab-ctl start

gitlab-ctl restart

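V. Installing and Configuring Jenkins + Sonar (Code Quality Checks) with Docker

In a real project, deploy a dedicated operations server for the SpringCloud project instead of installing on the Kubernetes servers. Install docker and docker-compose there following the steps above, then use docker-compose to set up Jenkins and Sonar.

1. Create the host mount directories and grant permissions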

mkdir -p /data/docker/ci/nexus /data/docker/ci/jenkins/lib /data/docker/ci/jenkins/home /data/docker/ci/sonarqube /data/docker/ci/postgresql

chmod -R 777 /data/docker/ci/nexus /data/docker/ci/jenkins/lib /data/docker/ci/jenkins/home /data/docker/ci/sonarqube /data/docker/ci/postgresql

2. Create the Jenkins + Sonar compose file jenkins-compose.yml. Jenkins here uses the officially recommended Docker image jenkinsci/blueocean. In practice, plugins download fine even without changing the plugin download URL, so this image is recommended.

version: '3'
networks:
  prodnetwork:
    driver: bridge
services:
  sonardb:
    image: postgres:12.2
    restart: always
    ports:
      - "5433:5432"
    networks:
      - prodnetwork
    volumes:
      - /data/docker/ci/postgresql:/var/lib/postgresql
    environment:
      - POSTGRES_USER=sonar
      - POSTGRES_PASSWORD=sonar
  sonar:
    image: sonarqube:8.2-community
    restart: always
    ports:
      - "19000:9000"
      - "19092:9092"
    networks:
      - prodnetwork
    depends_on:
      - sonardb
    volumes:
      - /data/docker/ci/sonarqube/conf:/opt/sonarqube/conf
      - /data/docker/ci/sonarqube/data:/opt/sonarqube/data
      - /data/docker/ci/sonarqube/logs:/opt/sonarqube/logs
      - /data/docker/ci/sonarqube/extension:/opt/sonarqube/extensions
      - /data/docker/ci/sonarqube/bundled-plugins:/opt/sonarqube/lib/bundled-plugins
    environment:
      - TZ=Asia/Shanghai
      - SONARQUBE_JDBC_URL=jdbc:postgresql://sonardb:5432/sonar
      - SONARQUBE_JDBC_USERNAME=sonar
      - SONARQUBE_JDBC_PASSWORD=sonar
  nexus:
    image: sonatype/nexus3
    restart: always
    ports:
      - "18081:8081"
    networks:
      - prodnetwork
    volumes:
      - /data/docker/ci/nexus:/nexus-data
  jenkins:
    image: jenkinsci/blueocean
    user: root
    restart: always
    ports:
      - "18080:8080"
    networks:
      - prodnetwork
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - /etc/localtime:/etc/localtime:ro
      - $HOME/.ssh:/root/.ssh
      - /data/docker/ci/jenkins/lib:/var/lib/jenkins/
      - /usr/bin/docker:/usr/bin/docker
      - /data/docker/ci/jenkins/home:/var/jenkins_home
    depends_on:
      - nexus
      - sonar
    environment:
      - NEXUS_PORT=8081
      - SONAR_PORT=9000
      - SONAR_DB_PORT=5432
    cap_add:
      - ALL

3. Run the install and start command in the directory that contains jenkins-compose.yml

docker-compose -f jenkins-compose.yml up -d

After a successful install, the following is shown:

[+] Running 5/5

⠿ Network root_prodnetwork Created 0.0s

⠿ Container root-sonardb-1 Started 1.0s

⠿ Container root-nexus-1 Started 1.0s

⠿ Container root-sonar-1 Started 2.1s

⠿ Container root-jenkins-1 Started 4.2s

4. Check that the services started

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

52779025a83e jenkins/jenkins:lts "/sbin/tini -- /usr/…" 4 minutes ago Up 3 minutes 50000/tcp, 0.0.0.0:18080->8080/tcp, :::18080->8080/tcp root-jenkins-1

2f5fbc25de58 sonarqube:8.2-community "./bin/run.sh" 4 minutes ago Restarting (0) 21 seconds ago root-sonar-1

4248a8ba71d8 sonatype/nexus3 "sh -c ${SONATYPE_DI…" 4 minutes ago Up 4 minutes 0.0.0.0:18081->8081/tcp, :::18081->8081/tcp root-nexus-1

719623c4206b postgres:12.2 "docker-entrypoint.s…" 4 minutes ago Up 4 minutes 0.0.0.0:5433->5432/tcp, :::5433->5432/tcp root-sonardb-1

2b6852a57cc2 goharbor/harbor-jobservice:v2.2.4 "/harbor/entrypoint.…" 5 days ago Up 29 seconds (health: starting) harbor-jobservice

ebf2dea994fb goharbor/nginx-photon:v2.2.4 "nginx -g 'daemon of…" 5 days ago Restarting (1) 46 seconds ago nginx

adfaa287f23b goharbor/harbor-registryctl:v2.2.4 "/home/harbor/start.…" 5 days ago Up 7 minutes (healthy) registryctl

8e5bcca3aaa1 goharbor/harbor-db:v2.2.4 "/docker-entrypoint.…" 5 days ago Up 7 minutes (healthy) harbor-db

ebe845e020dc goharbor/harbor-portal:v2.2.4 "nginx -g 'daemon of…" 5 days ago Up 7 minutes (healthy) harbor-portal

68263dea2cfc goharbor/harbor-log:v2.2.4 "/bin/sh -c /usr/loc…" 5 days ago Up 7 minutes (healthy) 127.0.0.1:1514->10514/tcp harbor-log

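Jenkins is mapped to port 18080, but sonarqube did not start. The logs show that the sonarqube folders cannot be accessed: the log reports insufficient permissions on the container directories, but the actual problem is the permissions on the host, so grant them on the host: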

chmod 777 /data/docker/ci/sonarqube/logs

chmod 777 /data/docker/ci/sonarqube/bundled-plugins

chmod 777 /data/docker/ci/sonarqube/conf

chmod 777 /data/docker/ci/sonarqube/data

chmod 777 /data/docker/ci/sonarqube/extension
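A likely reason for the failure is that the mkdir in step 1 created only /data/docker/ci/sonarqube, so the five subdirectories named in the compose file were created by Docker on first start with root ownership. Under that assumption, creating them up front avoids the restart loop. `prepare_sonar_dirs` is a hypothetical helper name; the wide-open 777 mode simply mirrors the original commands and is only reasonable on a test machine.

```shell
#!/usr/bin/env bash
# prepare_sonar_dirs BASE  (hypothetical helper, for illustration only)
# Creates the five bind-mount source directories used by the sonar service
# and opens their permissions, mirroring the chmod commands above.
prepare_sonar_dirs() {
  local base="$1" d
  for d in conf data logs extension bundled-plugins; do
    mkdir -p "$base/$d" && chmod 777 "$base/$d"
  done
}

# Example usage, before "docker-compose -f jenkins-compose.yml up -d":
#   prepare_sonar_dirs /data/docker/ci/sonarqube
```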

Restart:

docker-compose -f jenkins-compose.yml restart

Check the services again: Jenkins is mapped to port 18080 and sonarqube to port 19000, and both consoles can now be opened in a browser.

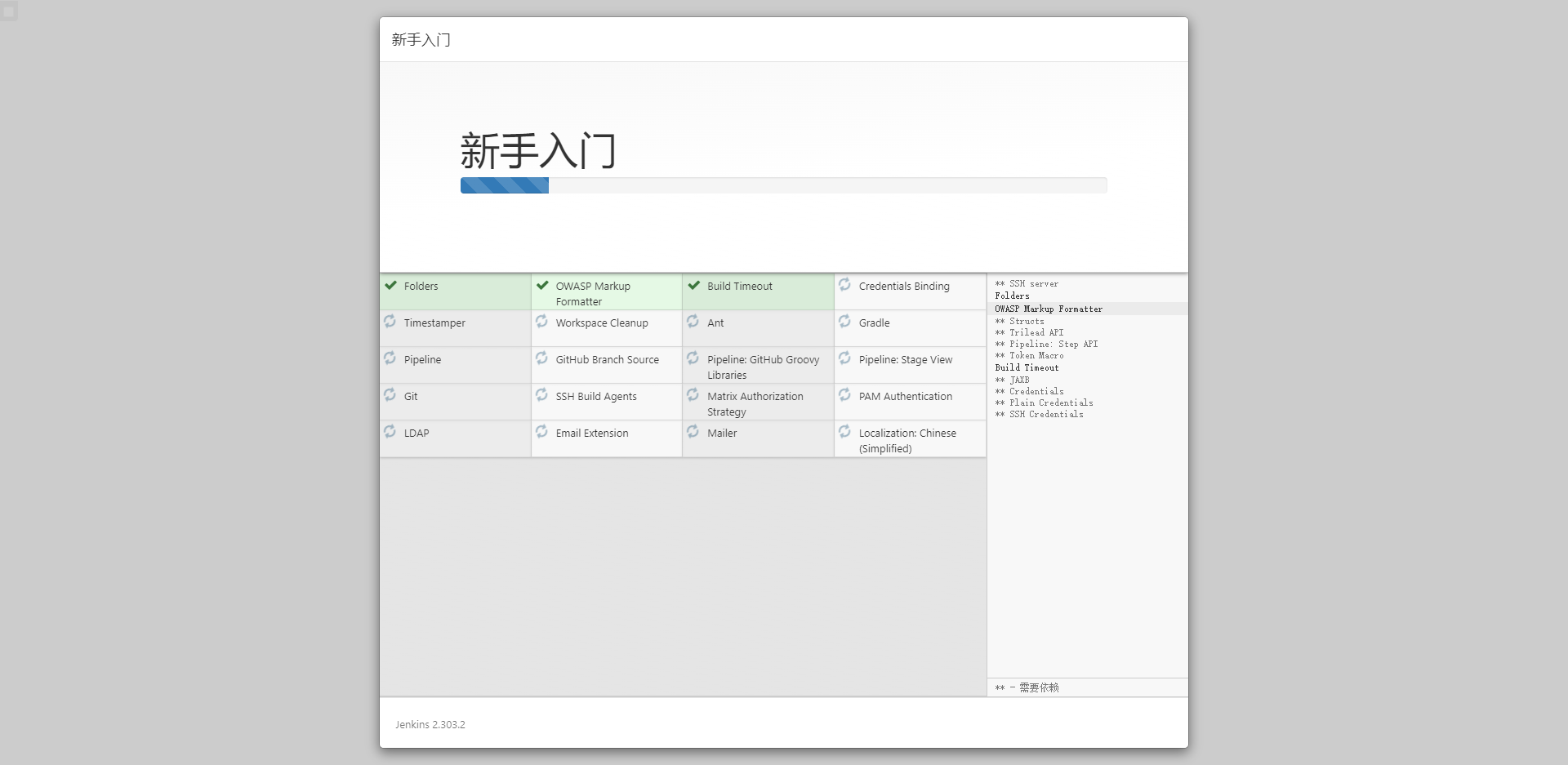

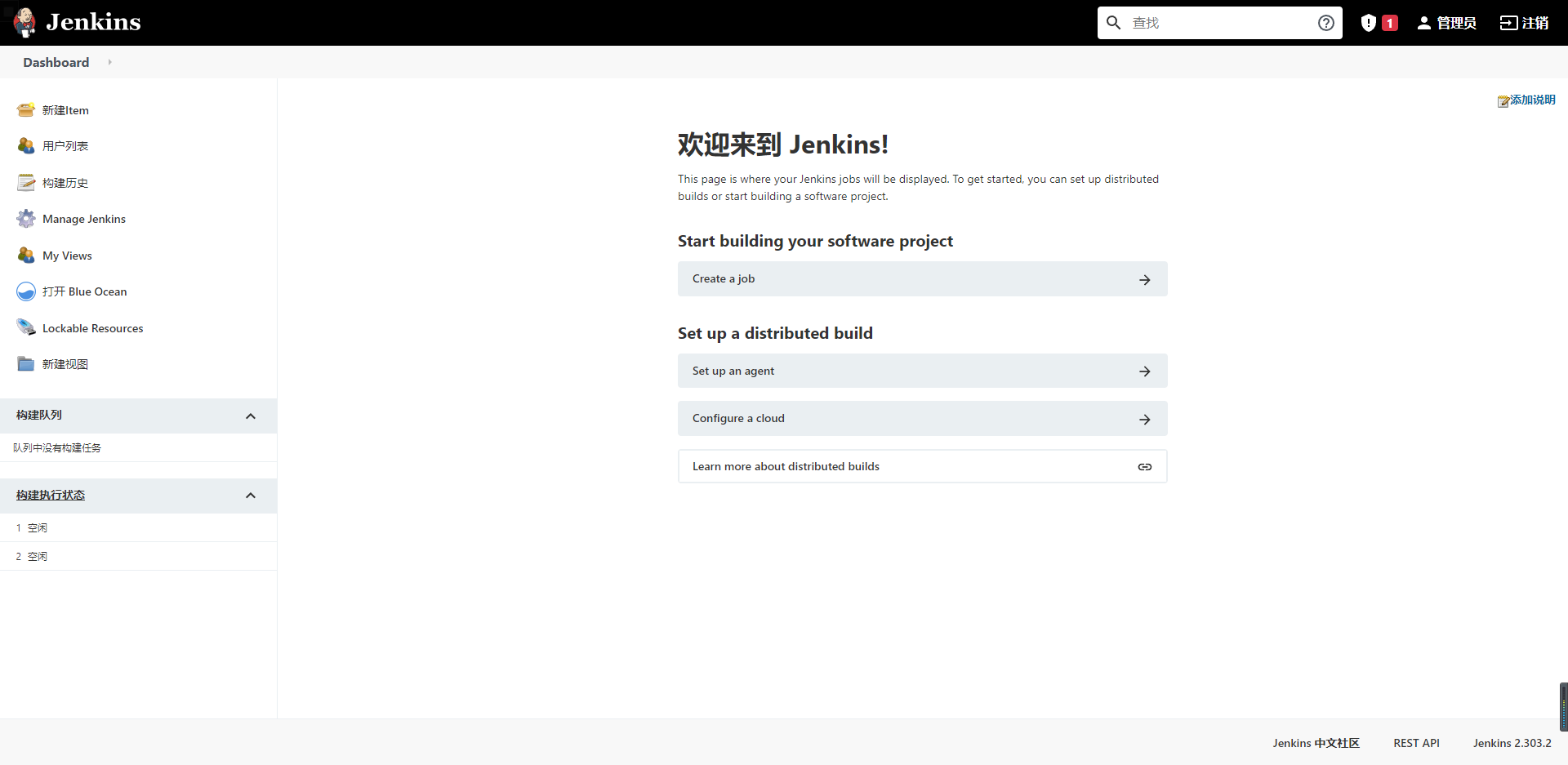

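5. Jenkins first login

The Jenkins login page says the initial password is at /var/jenkins_home/secrets/initialAdminPassword. That is the path inside the Docker container; with the mounts configured above, the corresponding host path is /data/docker/ci/jenkins/home/secrets/initialAdminPassword . Open that file and enter the password to reach the Jenkins console.

6. Choose to install the suggested plugins. When installation finishes, follow the prompts until you reach the admin console.

### Notes:

- Default sonarqube username/password: admin/admin

- Uninstall command: docker-compose -f jenkins-compose.yml down -v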

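VI. Jenkins Automated Build and Deployment Configuration

There are several ways to deploy a project: running an executable jar directly on a JDK, running the jar in a docker container, and, now popular, running the jar and docker in k8s pods. Each new approach improves on the previous one. This article does not compare them in detail and only notes why Kubernetes is used: it provides elastic scaling, service discovery, self-healing, version rollback, load balancing, storage orchestration and more.

The basic steps of day-to-day development and deployment are:

- Push code to the gitlab repository

- gitlab triggers a Jenkins code quality check build through a webhook

- Jenkins, triggered manually, pulls the code, compiles, packages, builds the Docker image, pushes it to the private Harbor registry, and runs kubectl commands to deploy the image from Harbor to k8s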

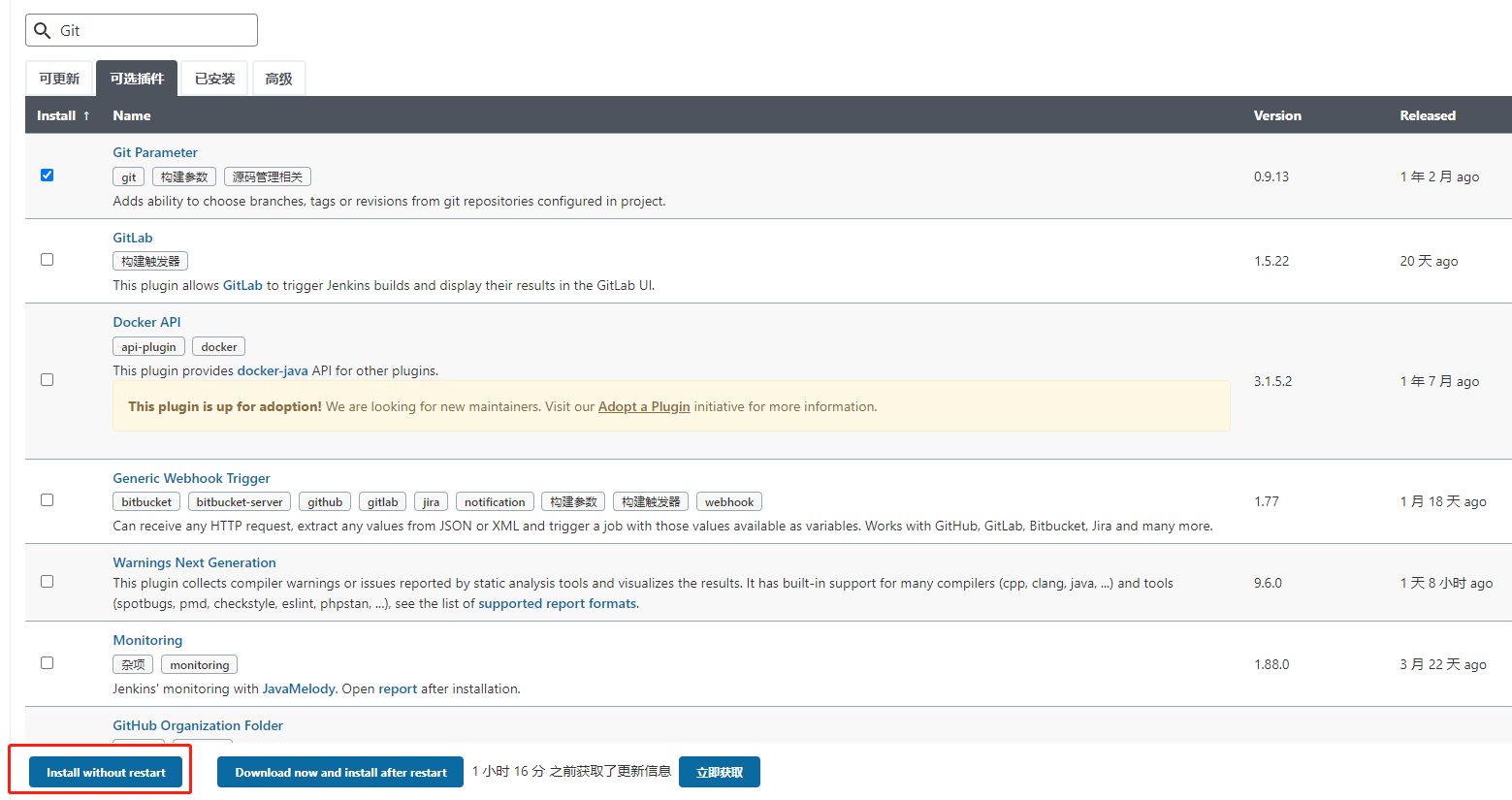

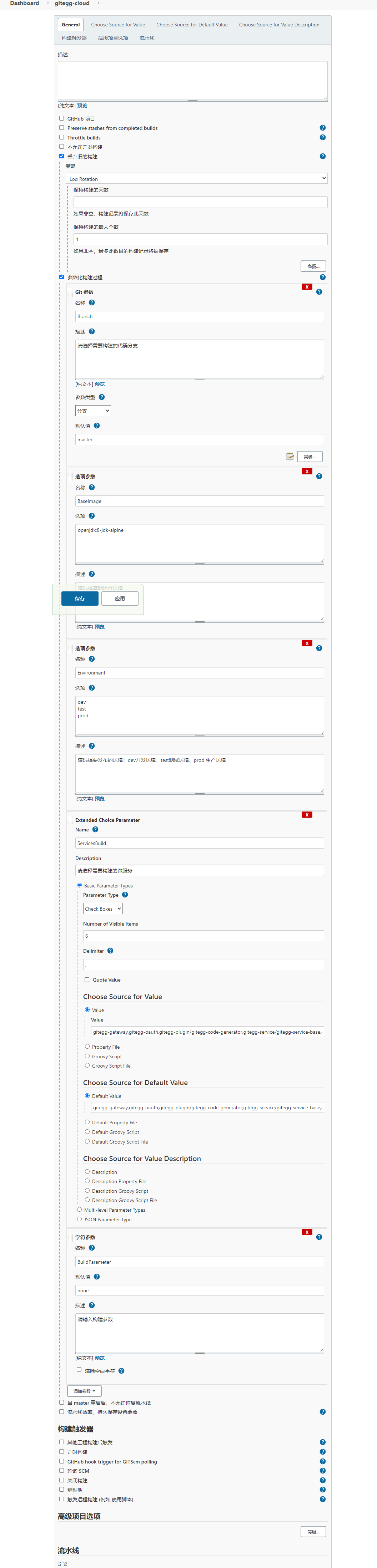

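1. Install the Kubernetes plugin, the Git Parameter plugin (for parameterized pipeline builds), the Extended Choice Parameter plugin (for choosing which microservices to build when there are several), the Pipeline Utility Steps plugin (for reading a maven project's .yaml, pom.xml and similar files) and Kubernetes Continuous Deploy (version 1.0 is required; download it from the official site and upload it). Go to Jenkins --> Manage Jenkins --> Manage Plugins --> Available --> Kubernetes plugin / Git Parameter / Extended Choice Parameter, select them and click Install without restart.

Blueocean does not currently support the Git Parameter and Extended Choice Parameter plugins. Git Parameter reads branch information through the Git Plugin. We use Pipeline script rather than Pipeline script from SCM because we do not want build information in the source code; this keeps development and deployment separate.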

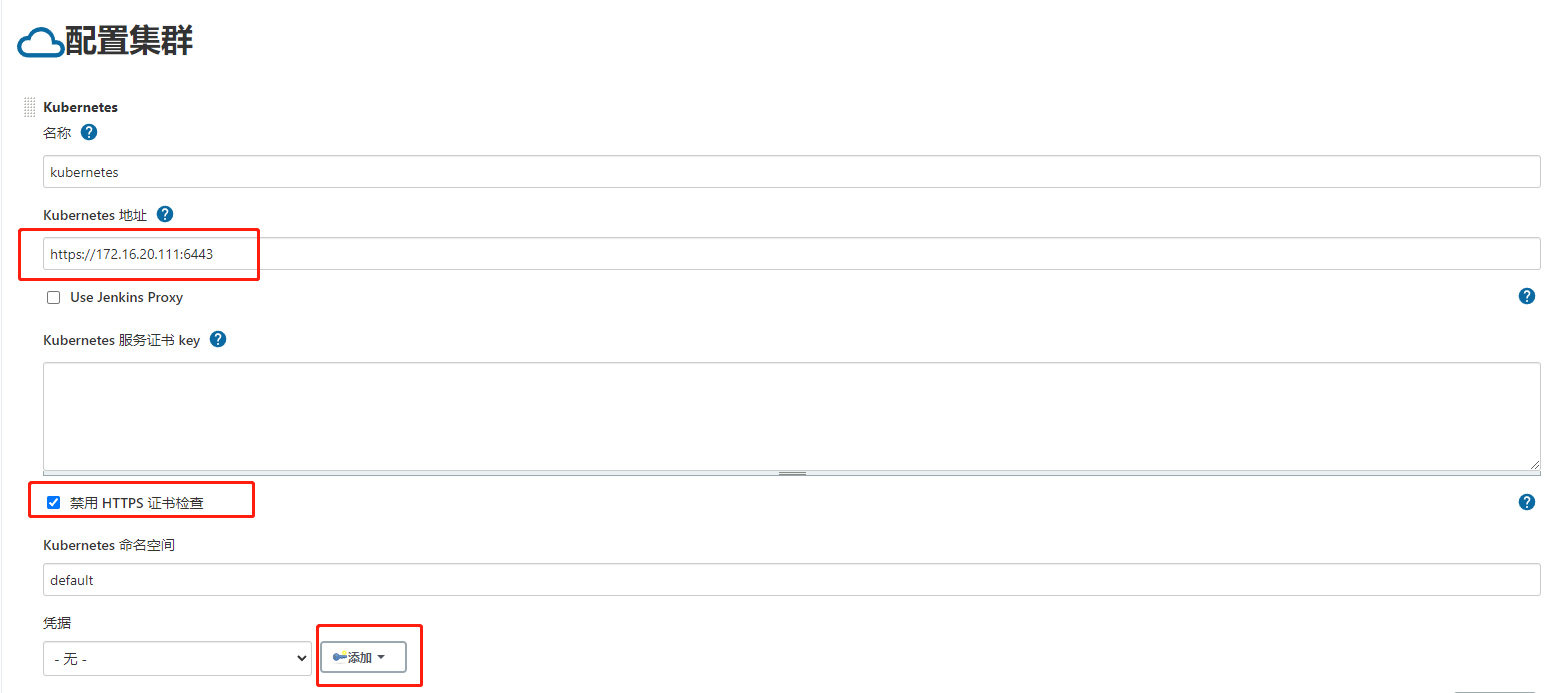

2. Configure the Kubernetes plugin: Jenkins --> Manage Jenkins --> Manage Nodes --> Configure Clouds --> Add a new cloud --> Kubernetes

3. Add the kubernetes certificate

cat ~/.kube/config

# The following steps are not used for now; replace certificate-authority-data, client-certificate-data and client-key-data with the actual values from ~/.kube/config

#echo certificate-authority-data | base64 -d > ca.crt

#echo client-certificate-data | base64 -d > client.crt

#echo client-key-data | base64 -d > client.key

# Run the following command and set a password of your own

#openssl pkcs12 -export -out cert.pfx -inkey client.key -in client.crt -certfile ca.crt

Manage Jenkins --> Credentials --> System --> Global credentials

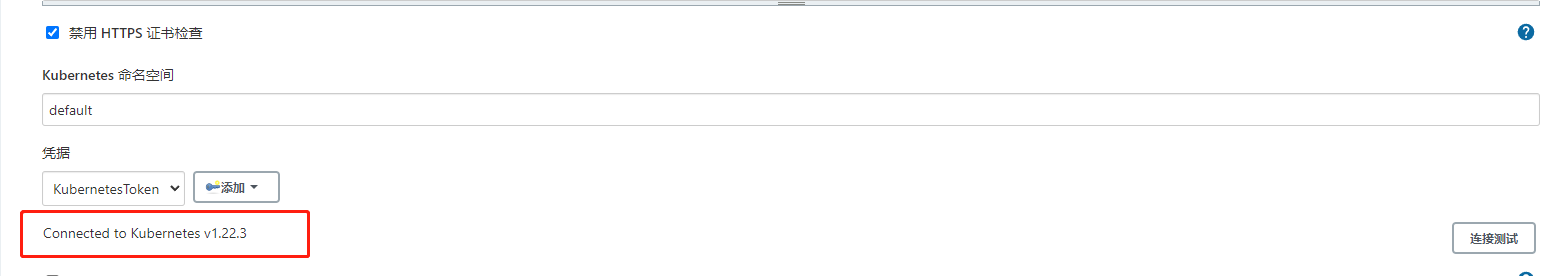

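4. Add the credentials for accessing Kubernetes: enter the token created above for logging in to Kubernetes Dashboard. After adding it, select the new credential and click Test Connection. If the connection succeeds, Jenkins can reach Kubernetes.

5. Configure jdk, git and maven globally in Jenkins

The jenkinsci/blueocean image ships with jdk and git installed. Log in to the container to find their paths, then enter them in the configuration.

Enter the jenkins container and check JAVA_HOME and the git path: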

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

0520ebb9cc5d jenkinsci/blueocean "/sbin/tini -- /usr/…" 2 days ago Up 30 hours 50000/tcp, 0.0.0.0:18080->8080/tcp, :::18080->8080/tcp root-jenkins-1

[root@localhost ~]# docker exec -it 0520ebb9cc5d /bin/bash

bash-5.1# echo $JAVA_HOME

/opt/java/openjdk

bash-5.1# which git

/usr/bin/git

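The commands show JAVA_HOME=/opt/java/openjdk and GIT=/usr/bin/git; enter these in the Jenkins Global Tool Configuration.

Maven can be installed under the host directory /data/docker/ci/jenkins/home, which is mounted into the container. When configuring it, use the container-side path of the Maven installation under /var/jenkins_home.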

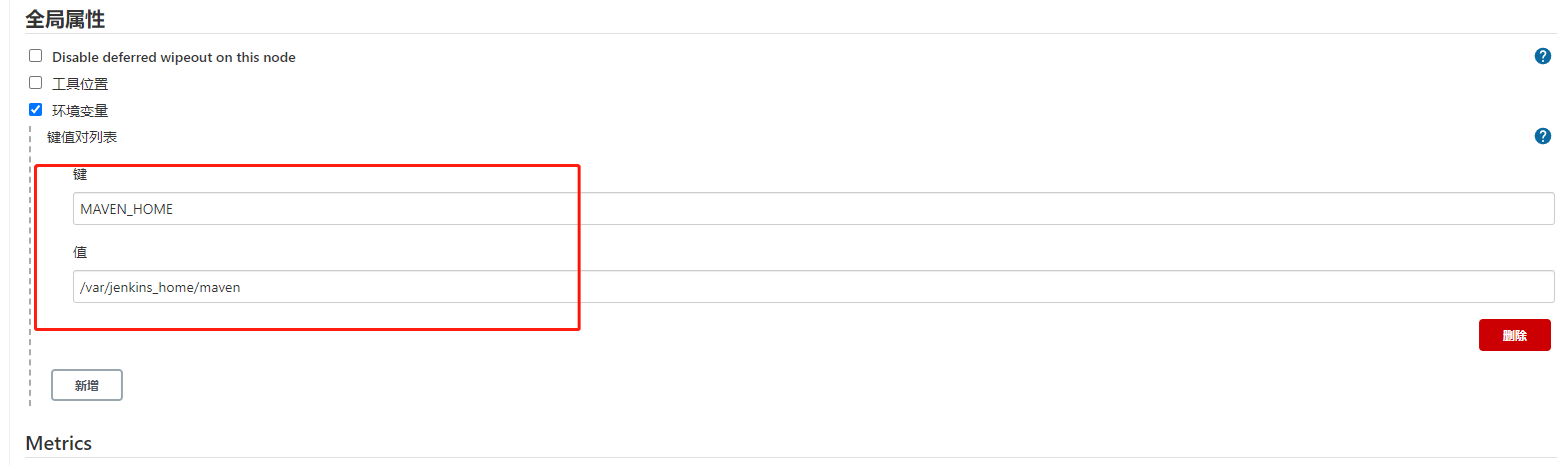

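Set MAVEN_HOME in the system configuration so that the Pipeline script can use it. If the script reports a permission error when it runs, run chmod 777 * in the bin directory of the Maven installation on the host.

6. Create harbor-key for k8s. k8s uses it to pull images from the private registry, and it is referenced in the project's k8s-deployment.yaml.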

kubectl create secret docker-registry harbor-key --docker-server=172.16.20.175 --docker-username='robot$gitegg' --docker-password='Jqazyv7vvZiL6TXuNcv7TrZeRdL8U9n3'

7. Create a pipeline job

8. Configure the pipeline job parameters

9. Configure the pipeline release script

Under Pipeline, choose Pipeline script

pipeline {

agent any

parameters {

gitParameter branchFilter: 'origin/(.*)', defaultValue: 'master', name: 'Branch', type: 'PT_BRANCH', description:'Select the code branch to build'

choice(name: 'BaseImage', choices: ['openjdk:8-jdk-alpine'], description: 'Select the base runtime image')

choice(name: 'Environment', choices: ['dev','test','prod'],description: 'Select the target environment: dev (development), test (testing), prod (production)')

extendedChoice(

defaultValue: 'gitegg-gateway,gitegg-oauth,gitegg-plugin/gitegg-code-generator,gitegg-service/gitegg-service-base,gitegg-service/gitegg-service-extension,gitegg-service/gitegg-service-system',

description: 'Select the microservices to build',

multiSelectDelimiter: ',',

name: 'ServicesBuild',

quoteValue: false,

saveJSONParameterToFile: false,

type: 'PT_CHECKBOX',

value:'gitegg-gateway,gitegg-oauth,gitegg-plugin/gitegg-code-generator,gitegg-service/gitegg-service-base,gitegg-service/gitegg-service-extension,gitegg-service/gitegg-service-system',

visibleItemCount: 6)

string(name: 'BuildParameter', defaultValue: 'none', description: 'Enter extra build parameters')

}

environment {

PRO_NAME = "gitegg"

BuildParameter="${params.BuildParameter}"

ENV = "${params.Environment}"

BRANCH = "${params.Branch}"

ServicesBuild = "${params.ServicesBuild}"

BaseImage="${params.BaseImage}"

k8s_token = "7696144b-3b77-4588-beb0-db4d585f5c04"

}

stages {

stage('Clean workspace') {

steps {

deleteDir()

}

}

stage('Process parameters') {

steps {

script {

if("${params.ServicesBuild}".trim() != "") {

def ServicesBuildString = "${params.ServicesBuild}"

ServicesBuild = ServicesBuildString.split(",")

for (service in ServicesBuild) {

println "now got ${service}"

}

}

if("${params.BuildParameter}".trim() != "" && "${params.BuildParameter}".trim() != "none") {

BuildParameter = "${params.BuildParameter}"

}

else

{

BuildParameter = ""

}

}

}

}

stage('Pull SourceCode Platform') {

steps {

echo "${BRANCH}"

git branch: "${Branch}", credentialsId: 'gitlabTest', url: 'http://172.16.20.188:2080/root/gitegg-platform.git'

}

}

stage('Install Platform') {

steps{

echo "==============Start Platform Build=========="

sh "${MAVEN_HOME}/bin/mvn -DskipTests=true clean install ${BuildParameter}"

echo "==============End Platform Build=========="

}

}

stage('Pull SourceCode') {

steps {

echo "${BRANCH}"

git branch: "${Branch}", credentialsId: 'gitlabTest', url: 'http://172.16.20.188:2080/root/gitegg-cloud.git'

}

}

stage('Build') {

steps{

script {

echo "==============Start Cloud Parent Install=========="

sh "${MAVEN_HOME}/bin/mvn -DskipTests=true clean install -P${params.Environment} ${BuildParameter}"

echo "==============End Cloud Parent Install=========="

def workspace = pwd()

for (service in ServicesBuild) {

stage ("buildCloud${service}") {

echo "==============Start Cloud Build ${service}=========="

sh "cd ${workspace}/${service} && ${MAVEN_HOME}/bin/mvn -DskipTests=true clean package -P${params.Environment} ${BuildParameter} jib:build -Djib.httpTimeout=200000 -DsendCredentialsOverHttp=true -f pom.xml"

echo "==============End Cloud Build ${service}============"

}

}

}

}

}

stage('Sync to k8s') {

steps {

script {

echo "==============Start Sync to k8s=========="

def workspace = pwd()

mainpom = readMavenPom file: 'pom.xml'

profiles = mainpom.getProfiles()

def version = mainpom.getVersion()

def nacosAddr = ""

def nacosConfigPrefix = ""

def nacosConfigGroup = ""

def dockerHarborAddr = ""

def dockerHarborProject = ""

def dockerHarborUsername = ""

def dockerHarborPassword = ""

def serverPort = ""

def commonDeployment = "${workspace}/k8s-deployment.yaml"

for(profile in profiles)

{

// Read the settings of the profile that matches the selected environment

if (profile.getId() == "${params.Environment}")

{

nacosAddr = profile.getProperties().getProperty("nacos.addr")

nacosConfigPrefix = profile.getProperties().getProperty("nacos.config.prefix")

nacosConfigGroup = profile.getProperties().getProperty("nacos.config.group")

dockerHarborAddr = profile.getProperties().getProperty("docker.harbor.addr")

dockerHarborProject = profile.getProperties().getProperty("docker.harbor.project")

dockerHarborUsername = profile.getProperties().getProperty("docker.harbor.username")

dockerHarborPassword = profile.getProperties().getProperty("docker.harbor.password")

}

}

for (service in ServicesBuild) {

stage ("Sync${service}ToK8s") {

echo "==============Start Sync ${service} to k8s=========="

dir("${workspace}/${service}") {

pom = readMavenPom file: 'pom.xml'

echo "group: artifactId: ${pom.artifactId}"

def deployYaml = "k8s-deployment-${pom.artifactId}.yaml"

yaml = readYaml file : './src/main/resources/bootstrap.yml'

serverPort = "${yaml.server.port}"

if(fileExists("${workspace}/${service}/k8s-deployment.yaml")){

commonDeployment = "${workspace}/${service}/k8s-deployment.yaml"

}

else

{

commonDeployment = "${workspace}/k8s-deployment.yaml"

}

script {

sh "sed 's#{APP_NAME}#${pom.artifactId}#g;s#{IMAGE_URL}#${dockerHarborAddr}#g;s#{IMAGE_PROGECT}#${PRO_NAME}#g;s#{IMAGE_TAG}#${version}#g;s#{APP_PORT}#${serverPort}#g;s#{SPRING_PROFILE}#${params.Environment}#g' ${commonDeployment} > ${deployYaml}"

kubernetesDeploy configs: "${deployYaml}", kubeconfigId: "${k8s_token}"

}

}

echo "==============End Sync ${service} to k8s=========="

}

}

echo "==============End Sync to k8s=========="

}

}

}

}

}
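The core of the 'Sync to k8s' stage is the sed call that fills the placeholders of k8s-deployment.yaml. The same substitution can be tried outside Jenkins with the sketch below. `render_deployment` is a hypothetical wrapper and the one-line template in the usage example is made up for illustration; only the placeholder names and the `#` delimiter are taken from the pipeline script above, including the `{IMAGE_PROGECT}` spelling, which has to match the template.

```shell
#!/usr/bin/env bash
# render_deployment TEMPLATE APP IMAGE_URL PROJECT TAG PORT PROFILE
# (hypothetical wrapper around the sed expression used in the pipeline)
# Writes the rendered manifest to stdout; values must not contain "#".
render_deployment() {
  local template="$1"
  sed -e "s#{APP_NAME}#$2#g" \
      -e "s#{IMAGE_URL}#$3#g" \
      -e "s#{IMAGE_PROGECT}#$4#g" \
      -e "s#{IMAGE_TAG}#$5#g" \
      -e "s#{APP_PORT}#$6#g" \
      -e "s#{SPRING_PROFILE}#$7#g" \
      "$template"
}

# Example usage with a made-up one-line template:
#   echo 'image: {IMAGE_URL}/{IMAGE_PROGECT}/{APP_NAME}:{IMAGE_TAG}' > /tmp/tpl.yaml
#   render_deployment /tmp/tpl.yaml gitegg-gateway 172.16.20.175 gitegg 1.0 8080 dev
```

Using `#` as the sed delimiter is what lets image addresses containing `/` pass through without escaping.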

Common issues:

1. The first run with Pipeline Utility Steps fails with Scripts not permitted to use method or Scripts not permitted to use staticMethod org.codehaus.groovy.runtime.DefaultGroovyMethods getProperties java.lang.Object

Fix: Manage Jenkins --> In-process Script Approval --> click Approve

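2. Use an NFS service to store the logs of all containers centrally on the NFS server

3. Kubernetes Continuous Deploy: use version 1.0.0; other versions are incompatible and raise errors

4. Prevent services from registering with the Docker-internal (docker0) address

spring:
  cloud:
    inetutils:
      ignored-interfaces: docker0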

5. Configure ipvs mode. kube-proxy watches Pod changes and creates the corresponding ipvs rules. ipvs forwards more efficiently than iptables and also supports more load-balancing algorithms.

kubectl edit cm kube-proxy -n kube-system

Set mode: "ipvs"

Reload the kube-proxy configuration:

kubectl delete pod -l k8s-app=kube-proxy -n kube-system

View the ipvs rules:

ipvsadm -Ln

6. Accessing external services (nacos, redis, etc.) from inside the k8s cluster

- a. Host-network mode: set hostNetwork: true on the deployed service

spec:
  hostNetwork: true

- b. Endpoints mode

kind: Endpoints
apiVersion: v1
metadata:
  name: nacos
  namespace: default
subsets:
  - addresses:
      - ip: 172.16.20.188
    ports:
      - port: 8848

apiVersion: v1
kind: Service
metadata:
  name: nacos
  namespace: default
spec:
  type: ClusterIP
  ports:
    - port: 8848
      targetPort: 8848
      protocol: TCP
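The Endpoints mode works because the Service has no selector and shares its name and namespace with a manually created Endpoints object. As a sketch of how the pair might be generated for other external services such as redis, the hypothetical helper `write_external_service` below writes both manifests, with their indentation intact, into one file; the file name in the usage example is also an assumption.

```shell
#!/usr/bin/env bash
# write_external_service FILE NAME IP PORT  (hypothetical helper)
# Writes a selector-less Service plus a matching Endpoints object to FILE.
# Both objects must use the same name and namespace to be linked.
write_external_service() {
  local file="$1" name="$2" ip="$3" port="$4"
  cat > "$file" <<EOF
apiVersion: v1
kind: Service
metadata:
  name: ${name}
  namespace: default
spec:
  type: ClusterIP
  ports:
    - port: ${port}
      targetPort: ${port}
      protocol: TCP
---
apiVersion: v1
kind: Endpoints
metadata:
  name: ${name}
  namespace: default
subsets:
  - addresses:
      - ip: ${ip}
    ports:
      - port: ${port}
EOF
}

# Example usage:
#   write_external_service nacos-external.yaml nacos 172.16.20.188 8848
#   kubectl apply -f nacos-external.yaml
```

Pods in the default namespace can then reach the external nacos at nacos:8848 through the cluster DNS name of the Service.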

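- c. Service type: ExternalName mode. ExternalName uses a CNAME redirect, so it cannot remap ports; it is used for domain names.

Endpoints and type: ExternalName

Create the YAML for these external services separately rather than reusing the in-cluster manifests; they should be configured when the environment is set up.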

7. Common k8s commands:

List pods: kubectl get pods

List services: kubectl get svc

List endpoints: kubectl get endpoints

Install: kubectl apply -f XXX.yaml

Delete: kubectl delete -f xxx.yaml

Delete a pod: kubectl delete pod podName

Delete a service: kubectl delete service serviceName

Open a shell in a container: kubectl exec -it podsNamexxxxxx -n default -- /bin/sh

GitEgg-Cloud is an enterprise microservice development framework built on SpringCloud. Open-source project:

Gitee: https://gitee.com/wmz1930/GitEgg

GitHub: https://github.com/wmz1930/GitEgg

Stars from interested readers are welcome.