
Convolutional neural networks in Python: handwritten digit recognition with a CNN


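This article shows how to use the convolutional neural network code that Michael Nielsen wrote in Python. It also compares the prediction accuracy of a convolutional neural network with that of an ordinary neural network.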

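The example is the classic AI program for recognizing MNIST handwritten digits. Its inputs are images of handwritten digits, for example a row of digits reading 5, 0, 4, 1, 9, 2. The program trains on samples like these and then measures its accuracy on a test set.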

I will cover how convolutional neural networks work in a separate article later.

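Setup:

Install virtualenv:

```shell
pip install virtualenv
```

Create and activate an environment:

```shell
virtualenv neural
cd neural
source bin/activate
```

Install the Theano library:

```shell
pip install Theano
```

Download the code:

```shell
git clone https://github.com/mnielsen/neural-networks-and-deep-learning.git
```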

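The convolutional network is in src/network3.py. Theano has been updated since the author wrote the code, and `downsample` has been deprecated. As a result, network3.py needs the following two changes. Lines beginning with '#' are the original code; the lines without '#' are the replacements.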

```python
#from theano.tensor.signal import downsample
from theano.tensor.signal.pool import pool_2d

...

#pooled_out = downsample.max_pool_2d(input=conv_out, ds=self.poolsize, ignore_border=True)
pooled_out = pool_2d(input=conv_out, ws=self.poolsize, ignore_border=True)
```
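For reference, `pool_2d(..., ws=(2, 2), ignore_border=True)` with its default max mode works on non-overlapping 2×2 windows. Its stride defaults to the window size, and it takes the maximum of each window. Because `ignore_border=True`, any rows or columns left over at the edges that do not fill a whole window are dropped. The pure-Python sketch below reproduces that computation on a single 2-D feature map, for illustration only. The helper name `max_pool` is hypothetical and not part of Theano.

```python
def max_pool(feature_map, ws=(2, 2)):
    """Non-overlapping max pooling over one 2-D feature map (list of lists).

    Sketch of pool_2d(..., ws=ws, ignore_border=True) in max mode:
    the stride equals the window size, and partial windows at the
    right/bottom edges are discarded.
    """
    wh, ww = ws
    rows, cols = len(feature_map), len(feature_map[0])
    out_rows, out_cols = rows // wh, cols // ww  # leftovers are ignored
    pooled = []
    for i in range(out_rows):
        row = []
        for j in range(out_cols):
            # Collect the values in window (i, j) and keep the largest one.
            window = [feature_map[i * wh + di][j * ww + dj]
                      for di in range(wh) for dj in range(ww)]
            row.append(max(window))
        pooled.append(row)
    return pooled


fmap = [
    [1, 3, 2, 0, 7],
    [4, 2, 1, 5, 8],
    [0, 6, 3, 3, 9],
    [2, 1, 4, 2, 1],
    [5, 5, 5, 5, 5],
]
print(max_pool(fmap))  # → [[4, 5], [6, 4]]  (last row and column dropped)
```

Applied to a 24×24 feature map, the same rule yields a 12×12 map. This is where the `12*12` in the convolutional example later on comes from.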

Start Python:

```shell
cd neural-networks-and-deep-learning/src
python
```

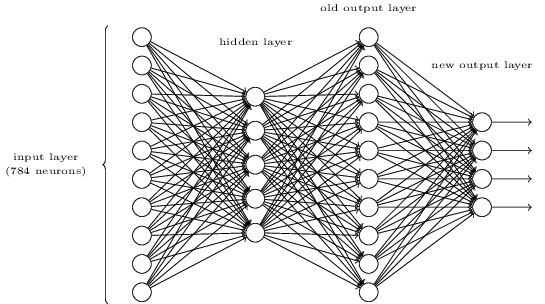

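Ordinary neural network

First, train a model built from ordinary fully connected layers, using the parameters below. I will explain what each parameter means in a later article; you can also refer to the author's book.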

- a single hidden layer
- 100 hidden neurons
- 60 epochs
- learning rate: η = 0.1
- mini-batch size: 10
- no regularization
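These parameters fix how many mini-batches the run performs and how many weights the network learns, which is a useful sanity check on the log below. The sketch assumes the 50,000 / 10,000 / 10,000 training / validation / test split of the `mnist.pkl.gz` file in the repository. Under that assumption, one epoch is 50,000 / 10 = 5,000 mini-batches. 60 epochs therefore end at iteration 299,999, counting from 0.

```python
# Sanity-check figures for the 784-100-10 fully connected network.
# Assumption: 50,000 training images, as in the repository's mnist.pkl.gz split.
n_train = 50000
mini_batch_size = 10
epochs = 60

batches_per_epoch = n_train // mini_batch_size   # mini-batches per epoch
total_batches = batches_per_epoch * epochs       # mini-batches over the whole run
last_iteration = total_batches - 1               # iterations are counted from 0

# Each fully connected layer has n_in * n_out weights plus n_out biases.
layer_sizes = [(784, 100), (100, 10)]
n_params = sum(n_in * n_out + n_out for n_in, n_out in layer_sizes)

print(batches_per_epoch, last_iteration, n_params)  # → 5000 299999 79510
```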

Start with the ordinary neural network by running:

```python
>>> import network3
>>> from network3 import Network
>>> from network3 import ConvPoolLayer, FullyConnectedLayer, SoftmaxLayer
>>> training_data, validation_data, test_data = network3.load_data_shared()
>>> mini_batch_size = 10
>>> net = Network([
...     FullyConnectedLayer(n_in=784, n_out=100),
...     SoftmaxLayer(n_in=100, n_out=10)], mini_batch_size)
>>> net.SGD(training_data, 60, mini_batch_size, 0.1,
...         validation_data, test_data)
```

Output:

```text
Training mini-batch number 0
Training mini-batch number 1000
Training mini-batch number 2000
Training mini-batch number 3000
Training mini-batch number 4000
Epoch 0: validation accuracy 92.62%
This is the best validation accuracy to date.
The corresponding test accuracy is 92.00%
Training mini-batch number 5000
Training mini-batch number 6000
Training mini-batch number 7000
Training mini-batch number 8000
Training mini-batch number 9000
Epoch 1: validation accuracy 94.64%
This is the best validation accuracy to date.
The corresponding test accuracy is 94.10%
...
Training mini-batch number 295000
Training mini-batch number 296000
Training mini-batch number 297000
Training mini-batch number 298000
Training mini-batch number 299000
Epoch 59: validation accuracy 97.76%
This is the best validation accuracy to date.
The corresponding test accuracy is 97.79%
Finished training network.
Best validation accuracy of 97.76% obtained at iteration 299999
Corresponding test accuracy of 97.79%
```

The test accuracy is 97.79%, i.e. an error rate of 2.21%.
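The "best validation accuracy to date" lines reflect a simple model-selection rule. After each epoch the validation accuracy is computed, and the test accuracy is recorded alongside it only when the validation accuracy improves. The function below is a minimal sketch of that bookkeeping, not code copied from network3.py. The name `select_best` and the per-epoch accuracies in the usage example are hypothetical.

```python
def select_best(epoch_results, batches_per_epoch):
    """Pick the epoch with the best validation accuracy.

    epoch_results: list of (validation_accuracy, test_accuracy), one per epoch.
    Returns (best_validation, iteration_of_best, corresponding_test).
    """
    best_val, best_iter, best_test = -1.0, None, None
    for epoch, (val_acc, test_acc) in enumerate(epoch_results):
        # Index of the last mini-batch in this epoch, counted from 0.
        iteration = batches_per_epoch * (epoch + 1) - 1
        if val_acc >= best_val:  # ties count as improvements in this sketch
            best_val, best_iter, best_test = val_acc, iteration, test_acc
    return best_val, best_iter, best_test


# Hypothetical per-epoch accuracies, for illustration only.
history = [(0.90, 0.89), (0.95, 0.94), (0.93, 0.95)]
print(select_best(history, batches_per_epoch=5000))  # → (0.95, 9999, 0.94)
```

The third epoch's higher test accuracy is ignored, because the test set is only reported and never used to choose the model. This is why a run can report a best iteration earlier than the last one, as the convolutional run below does with iteration 214999.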

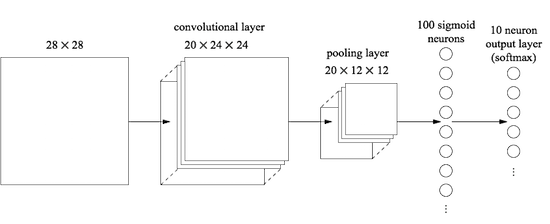

Convolutional neural network

Train the convolutional model with the following parameters:

- local receptive fields: 5x5
- stride length: 1
- feature maps: 20
- a max-pooling layer
- pooling windows: 2x2
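Each of the 20 feature maps comes from sliding one shared 5×5 filter across the 28×28 image, one pixel at a time (stride 1). Each hidden unit therefore sees only a 5×5 local receptive field. The pure-Python sketch below illustrates this for a single filter and leaves out the bias and activation function. `valid_conv2d` is a hypothetical helper. It computes a cross-correlation, meaning the filter is not flipped, which does not change the output size.

```python
def valid_conv2d(image, kernel, stride=1):
    """Slide a kernel over an image with no padding ("valid" mode).

    Each output value is the weighted sum over one local receptive field.
    This is a cross-correlation: the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            # Weighted sum over the kh x kw receptive field at (i, j).
            for di in range(kh):
                for dj in range(kw):
                    total += image[i * stride + di][j * stride + dj] * kernel[di][dj]
            row.append(total)
        out.append(row)
    return out


image = [[1] * 28 for _ in range(28)]   # dummy 28x28 input
kernel = [[1] * 5 for _ in range(5)]    # one 5x5 filter, shared across the image
feature_map = valid_conv2d(image, kernel)
print(len(feature_map), len(feature_map[0]), feature_map[0][0])  # → 24 24 25
```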

Run:

```python
>>> net = Network([
...     ConvPoolLayer(image_shape=(mini_batch_size, 1, 28, 28),
...                   filter_shape=(20, 1, 5, 5),
...                   poolsize=(2, 2)),
...     FullyConnectedLayer(n_in=20*12*12, n_out=100),
...     SoftmaxLayer(n_in=100, n_out=10)], mini_batch_size)
>>> net.SGD(training_data, 60, mini_batch_size, 0.1,
...         validation_data, test_data)
```
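The `n_in=20*12*12` of the fully connected layer follows from the layer shapes. A 5×5 filter sliding with stride 1 over a 28×28 image gives 28 − 5 + 1 = 24 positions per side. 2×2 max-pooling then halves that to 12, and there are 20 such maps. The short calculation below spells this out. It also counts the convolutional layer's shared parameters, assuming one 5×5 weight filter and one bias per feature map.

```python
# Shape arithmetic behind FullyConnectedLayer(n_in=20*12*12, ...).
image_size = 28       # MNIST images are 28x28
filter_size = 5       # 5x5 local receptive fields, stride 1
n_feature_maps = 20
pool = 2              # 2x2 max-pooling windows

conv_out = image_size - filter_size + 1        # valid convolution, stride 1
pooled = conv_out // pool                      # non-overlapping 2x2 windows
fc_inputs = n_feature_maps * pooled * pooled   # inputs to the fully connected layer

# Assumption: one shared 5x5 filter plus one bias per feature map.
conv_params = n_feature_maps * (filter_size * filter_size + 1)

print(conv_out, pooled, fc_inputs, conv_params)  # → 24 12 2880 520
```

Weight sharing is why the convolutional layer needs only 520 parameters, even though it produces 20 × 24 × 24 activations.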

Output:

```text
Training mini-batch number 0
Training mini-batch number 1000
Training mini-batch number 2000
Training mini-batch number 3000
Training mini-batch number 4000
Epoch 0: validation accuracy 94.18%
This is the best validation accuracy to date.
The corresponding test accuracy is 93.43%
Training mini-batch number 5000
Training mini-batch number 6000
Training mini-batch number 7000
Training mini-batch number 8000
Training mini-batch number 9000
Epoch 1: validation accuracy 96.12%
This is the best validation accuracy to date.
The corresponding test accuracy is 95.85%
...
Training mini-batch number 295000
Training mini-batch number 296000
Training mini-batch number 297000
Training mini-batch number 298000
Training mini-batch number 299000
Epoch 59: validation accuracy 98.74%
Finished training network.
Best validation accuracy of 98.74% obtained at iteration 214999
Corresponding test accuracy of 98.84%
```

The test accuracy is 98.84%, i.e. an error rate of 1.16%. The error rate has been cut almost in half!
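To put "almost in half" in concrete terms: the MNIST test set has 10,000 images. The two reported test accuracies therefore correspond to about 221 and 116 misclassified digits. The short calculation below derives these counts and the relative reduction from the accuracies reported above.

```python
# Test accuracies reported by the two runs above.
fc_accuracy = 0.9779    # fully connected network
cnn_accuracy = 0.9884   # convolutional network
n_test = 10000          # size of the MNIST test set

fc_errors = round((1 - fc_accuracy) * n_test)    # misclassified test images
cnn_errors = round((1 - cnn_accuracy) * n_test)
relative_reduction = (fc_errors - cnn_errors) / fc_errors

print(fc_errors, cnn_errors, f"{relative_reduction:.1%}")  # → 221 116 47.5%
```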
