Deploying DXSLAM on Ubuntu 18.04: CNN + VSLAM running in real time on a CPU

1. Download the source code

Open a terminal and clone the repository:

git clone https://github.com/ivipsourcecode/dxslam.git dxslam

2. Set up the environment

We have tested the library in Ubuntu 16.04 and Ubuntu 18.04, but it should be easy to compile in other platforms.

- C++11 or C++0x Compiler

- Pangolin

- OpenCV

- Eigen3

- DBoW, FBoW and g2o (included in the Thirdparty folder)

- TensorFlow (1.12)

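The authors provide a script, build.sh, that builds the libraries in the Thirdparty directory as well as the DXSLAM library libDXSLAM.so. Pangolin, OpenCV and Eigen3 are prerequisites that must be installed separately; follow their own installation guides, which are not repeated here. Apart from those, the only thing left to install is TensorFlow 1.12, because the remaining dependencies ship in the Thirdparty directory.

First install the Anaconda Python distribution (see any guide on installing Anaconda on Ubuntu).

Once Anaconda is installed, run the command below, then type y and press Enter when prompted, to create a Python 3.6 environment: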

conda create -n tf112 python=3.6

Once the environment has been created, activate it with conda activate tf112.

Then install TensorFlow 1.12:

pip install tensorflow==1.12

The following error may appear during installation:

protobuf requires Python '>=3.7' but the running Python is 3.6.2

The cause is an outdated pip, which most likely picks a protobuf release that does not support Python 3.6. Upgrade pip:

pip install --upgrade pip

Then run the TensorFlow 1.12 installation again:

pip install tensorflow==1.12

You may then hit the following error:

Collecting tensorflow==1.12

Using cached tensorflow-1.12.0-cp36-cp36m-manylinux1_x86_64.whl (83.1 MB)

WARNING: Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='pypi.org', port=443): Read timed out. (read timeout=15)",)': /simple/grpcio/

ERROR: Could not find a version that satisfies the requirement grpcio>=1.8.6 (from tensorflow) (from versions: none)

ERROR: No matching distribution found for grpcio>=1.8.6

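I put this down to numpy being missing or out of date, although the log itself points to a read timeout against pypi.org while fetching grpcio. Either way, install or upgrade numpy first (--upgrade installs the package if it is absent and upgrades it otherwise):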

pip install --upgrade numpy

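While running pip install xxx you may see the error below. This is not because your connection is slow; connections from mainland China to the default PyPI servers have become unreliable recently, so use a domestic mirror. For example, to use the USTC mirror: pip install xxx -i https://pypi.mirrors.ustc.edu.cn/simple/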

pip._vendor.urllib3.exceptions.ReadTimeoutError: HTTPSConnectionPool(host='files.pythonhosted.org', port=443): Read timed out.
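If you end up retrying installs many times, the mirror and timeout options can be assembled in one place. The sketch below only builds a pip command line; it does not run it. The helper name build_pip_command and the 100 s timeout are assumptions made for this example, while -i and --timeout are standard pip options.

```python
import sys


def build_pip_command(package, index_url=None, timeout=None):
    """Return an argv list for installing `package` with pip.

    Hypothetical helper: it only builds the command, it does not run it.
    """
    cmd = [sys.executable, "-m", "pip", "install", package]
    if index_url is not None:
        cmd += ["-i", index_url]            # use a mirror instead of pypi.org
    if timeout is not None:
        cmd += ["--timeout", str(timeout)]  # socket timeout in seconds
    return cmd


cmd = build_pip_command(
    "numpy",
    index_url="https://pypi.mirrors.ustc.edu.cn/simple/",
    timeout=100,
)
print(" ".join(cmd))
# To actually run it: subprocess.run(cmd, check=True)
```

Raising the timeout does not make a slow link faster, but it can stop pip from giving up on reads that would eventually complete.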

After numpy is installed, run the TensorFlow 1.12 installation once more:

pip install tensorflow==1.12

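I tried a number of mirrors here; each one either timed out or did not carry version 1.12. In the end I dropped the mirror option and downloaded from PyPI directly. Even through a proxy the speed was only 3.4 KB/s, so it came down to waiting patiently.

Once all dependencies could finally be downloaded, pip reported that it could not install tensorflow because a dependency was still missing. The fix is to install h5py: pip install h5py -i https://pypi.tuna.tsinghua.edu.cn/simple/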

ERROR: Cannot install tensorflow because these package versions have conflicting dependencies.

The conflict is caused by:

keras-applications 1.0.8 depends on h5py

keras-applications 1.0.7 depends on h5py

keras-applications 1.0.6 depends on h5py

To fix this you could try to:

1. loosen the range of package versions you've specified

2. remove package versions to allow pip attempt to solve the dependency conflict

ERROR: ResolutionImpossible: for help visit https://pip.pypa.io/en/latest/user_guide/#fixing-conflicting-dependencies

After a lot of trouble and dozens of install attempts, the installation finally succeeded. In my experience PyTorch installs far more quickly, which is part of why many of us use it instead of TensorFlow.

3. Build the source code

cd dxslam

chmod +x build.sh

./build.sh

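The third-party libraries DBoW2, g2o, cnpy and fbow build very quickly (I changed every make call in the script to make -j4), so there is little to say about them. The slow part is the vocabulary and the neural network model, both of which are downloaded from GitHub. No mirror is available for these, and even with a proxy the speed depends on luck because overseas nodes are unstable; I was getting 6.4 KB/s at the time of writing.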

Download and Uncompress vocabulary

Download and Uncompress hf-net

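After downloading them, extract them to the directories given by the commands in the script. Then comment out the two blocks in build.sh that download and extract the vocabulary and the model, and run ./build.sh again.

The build is very fast, finishing in under 30 s, and produces the executable rgbd_tum.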

4. Download the dataset

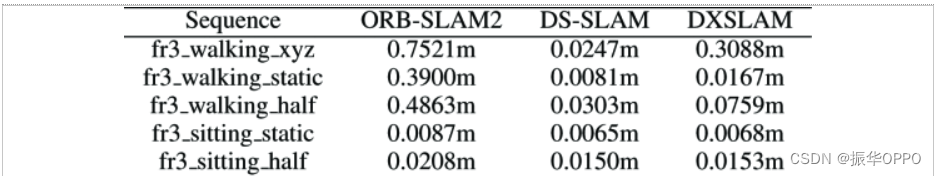

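Download a sequence from the TUM RGB-D Dataset Download page and extract it. The full-system experiments in the Evaluation section of the DXSLAM paper yield the following table:

So we only need to download one of the fr3 sequences; here I chose fr3_walking_xyz.

Use the Python script associate.py to associate the RGB images with the depth images. PATH_TO_SEQUENCE is the directory containing the sequence: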

python associate.py PATH_TO_SEQUENCE/rgb.txt PATH_TO_SEQUENCE/depth.txt > PATH_TO_SEQUENCE/associations.txt
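As a rough illustration of what the association step does, the sketch below pairs RGB and depth timestamps greedily when they differ by less than a threshold. It is a simplified stand-in, not the associate.py script itself; the function name associate_timestamps, the 0.02 s default threshold and the sample timestamps are all assumptions made for this example.

```python
def associate_timestamps(rgb_stamps, depth_stamps, max_difference=0.02):
    """Pair each RGB timestamp with the closest unused depth timestamp.

    Hypothetical helper for illustration; pairs farther apart than
    max_difference seconds are dropped.
    """
    # Collect every candidate pair within the allowed time difference.
    candidates = sorted(
        (abs(r - d), r, d)
        for r in rgb_stamps
        for d in depth_stamps
        if abs(r - d) < max_difference
    )
    used_rgb, used_depth, matches = set(), set(), []
    # Accept the closest pairs first, using each timestamp at most once.
    for _, r, d in candidates:
        if r not in used_rgb and d not in used_depth:
            used_rgb.add(r)
            used_depth.add(d)
            matches.append((r, d))
    return sorted(matches)


# Made-up timestamps in seconds, only to exercise the function.
rgb = [1.000, 1.033, 1.066]
depth = [1.005, 1.040, 1.200]
pairs = associate_timestamps(rgb, depth)
print(pairs)
```

The third RGB frame has no depth frame within the threshold, so it is left out; frames dropped this way never reach the SLAM system.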

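Next, extract the keypoints, local descriptors and global descriptors from the dataset's RGB images with the HF-Net network. The command format is shown below: the first argument is the RGB image directory and the second is the output directory. Make sure you are in the TensorFlow 1.12 environment first (conda activate tf112):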

cd hf-net

python3 getFeature.py image/path/to/rgb output/feature/path

This fails with a module-not-found error, so install the OpenCV Python bindings (the cv2 module) with pip install opencv-python:

ModuleNotFoundError: No module named 'cv2'

Of course, installing opencv-python did not go smoothly either; pip stayed stuck at the build-wheel step:

Building wheels for collected packages: opencv-python

Building wheel for opencv-python (pyproject.toml)

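After going through more than 30 search results, I settled on this fix: manually download a prebuilt wheel from the Tsinghua mirror (make sure it is the Python 3.6 build, tagged cp36), cd into the directory containing the wheel, and install it. With a prebuilt wheel pip no longer needs to compile OpenCV from source, which is most likely what the build-wheel step was doing: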

pip install opencv_python-3.2.0.8-cp36-cp36m-manylinux1_x86_64.whl

The installation itself takes about 1 s, although the preparation took an hour.

Processing ./opencv_python-3.2.0.8-cp36-cp36m-manylinux1_x86_64.whl

Requirement already satisfied: numpy>=1.11.3 in /home/dzh/anaconda3/envs/tf112/lib/python3.6/site-packages (from opencv-python==3.2.0.8) (1.19.5)

Installing collected packages: opencv-python

Successfully installed opencv-python-3.2.0.8

Run HF-Net again to generate the features:

cd hf-net

python3 getFeature.py image/path/to/rgb output/feature/path

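When the program finishes, three subdirectories have been created under the feature directory: des, glb and point-txt, which hold the local descriptors, the global descriptors and the keypoints respectively.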
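Before moving on, it can be worth checking that all three subdirectories exist and are not empty. The sketch below is one way to do that; count_feature_files is a hypothetical helper, and the demo runs against a throwaway directory that only mimics the layout described above, not real HF-Net output.

```python
import os
import tempfile


def count_feature_files(feature_dir, subdirs=("des", "glb", "point-txt")):
    """Return {subdir: number of entries}, or None for a missing subdir."""
    counts = {}
    for name in subdirs:
        path = os.path.join(feature_dir, name)
        counts[name] = len(os.listdir(path)) if os.path.isdir(path) else None
    return counts


# Demo on a temporary directory that mimics the des/glb/point-txt layout.
with tempfile.TemporaryDirectory() as root:
    for name in ("des", "glb", "point-txt"):
        os.makedirs(os.path.join(root, name))
        open(os.path.join(root, name, "frame0"), "w").close()
    demo_counts = count_feature_files(root)
print(demo_counts)
```

On a real run you would pass your own output directory (for example the path given as the second argument to getFeature.py) and look for a None or a zero.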

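After the program ends, has your cursor turned into a crosshair that no longer responds?

This happens when a Python import statement is typed directly at the shell prompt. The shell does not run Python; it runs the import command (ImageMagick's screen-capture tool), which then waits for you to select a region of the screen. To recover, open a new terminal, find the PID of the import process and kill it: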

(base) dzh@dzh-Lenovo-Legion-Y7000:~$ ps -A|grep import

15124 pts/0 00:00:00 import

(base) dzh@dzh-Lenovo-Legion-Y7000:~$ kill -9 15124

That completes all the preparation. How many hours did it take you?

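5. Run the system

Run the command below. TUMX.yaml is the camera parameter file for the downloaded dataset, PATH_TO_SEQUENCE_FOLDER is the dataset directory, ASSOCIATIONS_FILE is the path to the associations file, and OUTPUT/FEATURE/PATH is the directory of HF-Net output generated in the previous step.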

./Examples/RGB-D/rgbd_tum Vocabulary/DXSLAM.fbow Examples/RGB-D/TUMX.yaml PATH_TO_SEQUENCE_FOLDER ASSOCIATIONS_FILE OUTPUT/FEATURE/PATH

Below is the exact command I ran for my own directory layout; the prompt shows both the Python environment and the working directory.

(tf112) dzh@dzh-Lenovo-Legion-Y7000:~/slambook/dxslam$ ./Examples/RGB-D/rgbd_tum Vocabulary/DXSLAM.fbow ../TUM_DataSet/TUM3.yaml ../TUM_DataSet/rgbd_dataset_freiburg3_walking_xyz/ ../TUM_DataSet/rgbd_dataset_freiburg3_walking_xyz/associations.txt ./hf-net/feature/
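With five positional arguments it is easy to mistype a path. The sketch below assembles the same command and reports which arguments do not exist on disk before you launch it. build_rgbd_tum_command is a hypothetical helper, and the paths are simply the ones from the command above, so on another machine most of them will be reported as missing.

```python
import os


def build_rgbd_tum_command(vocabulary, settings, sequence_dir,
                           associations, feature_dir,
                           binary="./Examples/RGB-D/rgbd_tum"):
    """Return (argv, missing) for the five-argument rgbd_tum call.

    Hypothetical helper: `missing` lists arguments whose path does not exist.
    """
    args = [vocabulary, settings, sequence_dir, associations, feature_dir]
    missing = [p for p in args if not os.path.exists(p)]
    return [binary] + args, missing


argv, missing = build_rgbd_tum_command(
    "Vocabulary/DXSLAM.fbow",
    "../TUM_DataSet/TUM3.yaml",
    "../TUM_DataSet/rgbd_dataset_freiburg3_walking_xyz/",
    "../TUM_DataSet/rgbd_dataset_freiburg3_walking_xyz/associations.txt",
    "./hf-net/feature/",
)
print(" ".join(argv))
print("missing paths:", missing)
```

If the missing list is empty, the argv list can be handed to subprocess.run from the dxslam directory.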

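The result is shown in the figure. A recorded video would show it better, but here is a screenshot taken while it was running. The viewer is still the ORB-SLAM2 Map Viewer:

This is the terminal log from the run. The default is SLAM mode. When camera tracking is lost, the system resets the local mapper, loop closing and the database, and for every frame it prints the number of keypoints matched against the local map. Tracking takes about 89 ms per frame on average, which is roughly 11 fps and acceptable for running on a CPU.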

match numbers: 453

nmatchesMap: 280

match numbers: 411

nmatchesMap: 258

virtual int g2o::SparseOptimizer::optimize(int, bool): 0 vertices to optimize, maybe forgot to call initializeOptimization()

match numbers: 474

nmatchesMap: 0

Track lost soon after initialisation, reseting...

System Reseting

Reseting Local Mapper... done

Reseting Loop Closing... done

Reseting Database... done

New map created with 338 points

match numbers: 452

nmatchesMap: 278

match numbers: 430

nmatchesMap: 260

match numbers: 422

nmatchesMap: 255

match numbers: 400

nmatchesMap: 239

match numbers: 369

nmatchesMap: 219

-------

median tracking time: 0.0919971

mean tracking time: 0.0890905

Saving keyframe trajectory to KeyFrameTrajectory.txt ...

trajectory saved!
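The frame rate follows directly from the two timing lines at the end of the log, since fps is the reciprocal of the per-frame time in seconds. The sketch below parses those lines and does the arithmetic; tracking_fps is a hypothetical helper, and LOG_TAIL is copied from the log above.

```python
import re

LOG_TAIL = """\
median tracking time: 0.0919971
mean tracking time: 0.0890905
"""


def tracking_fps(log_text):
    """Return {'median': fps, 'mean': fps} parsed from the log summary."""
    fps = {}
    pattern = r"(median|mean) tracking time: ([\d.]+)"
    for kind, seconds in re.findall(pattern, log_text):
        fps[kind] = 1.0 / float(seconds)  # frames per second = 1 / seconds per frame
    return fps


rates = tracking_fps(LOG_TAIL)
for kind, value in rates.items():
    print(f"{kind}: {value:.1f} fps")
```

Both figures land close to 11 fps. They cover tracking only; the HF-Net feature extraction was run offline beforehand and is not included in these times.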

You can also process your own camera sequences:

You will need to create a settings file with the calibration of your camera. See the settings file provided for the TUM RGB-D cameras. We use the calibration model of OpenCV. RGB-D input must be synchronized and depth registered.

SLAM and Localization modes

You can change between the SLAM and Localization mode using the GUI of the map viewer.

- SLAM mode

This is the default mode. The system runs three threads in parallel: Tracking, Local Mapping and Loop Closing. The system localizes the camera, builds a new map and tries to close loops.

- Localization mode

This mode can be used when you have a good map of your working area. In this mode the Local Mapping and Loop Closing are deactivated. The system localizes the camera in the map (which is no longer updated), using relocalization if needed.

Running into all sorts of problems during setup is normal, and working through them is the most valuable part of the process.