
Fixing "SparkException: Python worker failed to connect back." when running a PySpark project

Author: 我家自动化 | 2024-04-27 10:35:56


1. Background

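I ran a PySpark project in PyCharm on Windows 10, on a machine with no Spark environment configured. The pyspark library had been installed with `pip install pyspark`, and the editor reported no problems in the code, but running it failed with this error:

`SparkException: Python worker failed to connect back.`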

2. Cause of the error

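Spark cannot find the Python environment. PySpark runs Python code in separate worker processes started by the Spark JVM; if Spark cannot locate the Python interpreter it is supposed to launch, the worker never starts and therefore never connects back. The fix is to tell Spark explicitly which Python interpreter to use.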
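To see which interpreter Spark would try to launch, a small diagnostic can help before changing any settings. This is a sketch under assumptions: `resolve_worker_python` is a hypothetical helper, and the fallback value `python3` is an assumption about what recent PySpark versions use when `PYSPARK_PYTHON` is unset (check `pyspark/context.py` in your installed version).

```python
import os
import shutil
import sys


def resolve_worker_python(env=None, default="python3"):
    """Return (command, resolved_path) for the interpreter Spark workers would use.

    Hypothetical helper. ASSUMPTION: PySpark reads PYSPARK_PYTHON and otherwise
    falls back to `default` ("python3" in recent releases; verify for your version).
    """
    env = os.environ if env is None else env
    command = env.get("PYSPARK_PYTHON", default)
    # shutil.which searches PATH for bare names and checks explicit paths directly;
    # it returns None when nothing executable is found.
    return command, shutil.which(command)


if __name__ == "__main__":
    cmd, path = resolve_worker_python()
    print(f"Worker interpreter: {cmd!r} -> {path!r}")
    print(f"Interpreter running this script: {sys.executable}")
    if path is None:
        print("Not found: set PYSPARK_PYTHON to the full path of python.exe.")
```

If the resolved path is `None`, or points somewhere other than the project interpreter, that matches the cause described above.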

3. Solution

(1) Open the run configuration editor (Run > Edit Configurations...):

(2) Click the button for editing the environment variables, as shown in the figure:

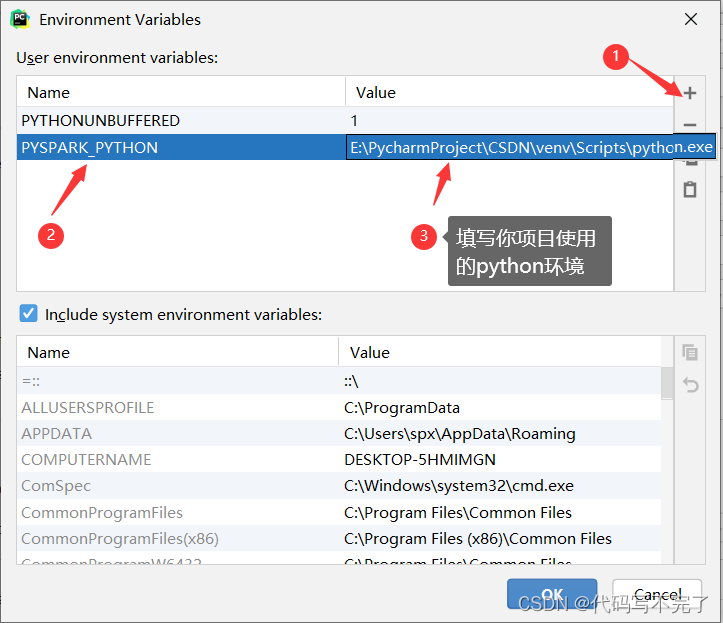

(3) Add an environment variable named PYSPARK_PYTHON whose value is the path of the Python interpreter used by the project:

(4) Click OK to close the environment variables dialog, click Apply, and run the project again.

The error is resolved.
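If editing the PyCharm run configuration is inconvenient, a commonly used alternative (not the method described in the steps above) is to set `PYSPARK_PYTHON` from inside the script, before the `SparkContext` is created, pointing it at the interpreter that is running the script (`sys.executable`). A minimal sketch; `pin_pyspark_python` is a hypothetical helper name, not a PySpark API:

```python
import os
import sys


def pin_pyspark_python(interpreter=None):
    """Set PYSPARK_PYTHON so Spark's Python workers use a known interpreter.

    Hypothetical helper. Call it before creating the SparkContext/SparkSession,
    because PySpark reads the variable when the context is initialised.
    """
    # Default to the interpreter running this script (PyCharm's project interpreter)
    interpreter = interpreter or sys.executable
    os.environ["PYSPARK_PYTHON"] = interpreter
    return interpreter
```

For the test code in section 4, `pin_pyspark_python()` would be called at the start of `word_statistics`, before `pyspark.SparkContext(conf=conf)`. The trade-off is that the setting lives in the code instead of in one machine's run configuration.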

4. Test code

The test code is a simple word count; here it is as well:

```python
import pyspark


# Word count
def word_statistics(words):
    conf = pyspark.SparkConf().setMaster("local[*]").setAppName("Word_Statistics")
    sc = pyspark.SparkContext(conf=conf)
    try:
        rdd = sc.parallelize(words)
        # Map each word to (word, 1), then sum the 1s for each word
        counts = rdd.map(lambda w: (w, 1)).reduceByKey(lambda a, b: a + b)
        print(counts.collect())
    finally:
        sc.stop()  # release the local Spark context


if __name__ == "__main__":
    words = ["test1", "test2", "test1", "test2", "test3", "test2", "test1", "test5", "test4", "test2", "test6", "test7"]
    word_statistics(words)
```
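To sanity-check the Spark output, the same map/reduceByKey logic can be reproduced in plain Python. This is an illustrative sketch, not part of the original project, and `word_counts_local` is a hypothetical name. The order of the pairs returned by `counts.collect()` is not guaranteed, so compare `dict(counts.collect())` against this result rather than the raw list.

```python
from collections import defaultdict


def word_counts_local(words):
    """Pure-Python equivalent of rdd.map(lambda w: (w, 1)).reduceByKey(lambda a, b: a + b)."""
    pairs = [(w, 1) for w in words]  # map step: emit one (word, 1) pair per word
    totals = defaultdict(int)
    for word, one in pairs:  # reduceByKey step: add up the 1s for each word
        totals[word] += one
    return dict(totals)


if __name__ == "__main__":
    words = ["test1", "test2", "test1", "test2", "test3", "test2", "test1", "test5", "test4", "test2", "test6", "test7"]
    print(word_counts_local(words))
    # {'test1': 3, 'test2': 4, 'test3': 1, 'test5': 1, 'test4': 1, 'test6': 1, 'test7': 1}
```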

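Disclaimer: This article was contributed by a user and does not represent the position of the wpsshop blog (wpsshop博客). Copyright belongs to the original author, and this site assumes no related legal liability. If you find infringing content, please contact us. When reposting, please credit the source: https://www.wpsshop.cn/w/我家自动化/article/detail/496290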
