- 1华为ICT云赛道真题_华为ict云赛道题库

- 2FPGA的学习:数码管静态显示的实现(一)_fpga静态数码管显示

- 3[Mysql] 数据库基本概念

- 4mysql和redis备份和恢复数据的笔记

- 5IDEA中 配置 使用 SVN_idea配置svn

- 6spark中对rdd的几个操作_spark rdd有几种基本操作 分别列出几个典型的操作

- 7Windows Azure公有云服务相关方案

- 8yolov8跟踪模式部署Ros系统上,跟踪鼠标选择的目标,实时发布目标的坐标信息(python实现)_ros2 目标跟踪

- 9SpringSecurity系列学习(二):密码验证_springsecurity怎么看一个字符串是不是bcryptpasswordencoder编码

- 10对MaxKB进行个性化设置_maxkb图标修改

[PostgreSQL] PostgreSQL 之 触发器分表性能优化_pg库使用触发器效率问题(1)_使用触发器创建pg分表

赞

踩

网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。

一个人可以走的很快,但一群人才能走的更远!不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!

select substr(‘cc3483bf-9d1f-4eaa-a6e2-a376ba312ff1’,1,(random()*26)::integer);

– 随机复制: N=1

select repeat(‘cc3483bf-9d1f-4eaa-a6e2-a376ba312ff1’,(random()*40)::integer);

– 生成序列

SELECT * FROM generate_series(1,5);

select id from generate_series(1,10) t(id); – 自定义column

– 生成随机数

select (random()*100)::int from generate_series(1,10);

– 序列、随机字符串、时间戳

select generate_series(1,100000),md5(random()::text),clock_timestamp();

– 生成时间

SELECT date(generate_series(now(), now() + ‘1 week’, ‘1 day’));

– 模拟随机数据

select

md5(random()::text) as person_id,

md5(random()::text) as dept_id,

md5(random()::text) as dev_id,

md5(random()::text) as door_id,

floor(random()*2)+1 as auth_status,

floor(random()*3)+1 as finger_status,

floor(random()*4)+1 as face_status,

floor(random()*5)+1 as del_card_number,

md5(random()::text) as auth_person_schedule_id,

md5(random()::text) as auth_dept_schedule_id,

md5(random()::text) as auth_person_grp_schedule_id,

clock_timestamp() as config_time,

clock_timestamp() as download_time,

md5(random()::text) as dev_group_id,

md5(random()::text) as download_code

from

generate_series(1,10,1);

- 1

- 2

数据插入Example

- 1

- 2

explain analyze insert into tb_auth_detail(

“person_id”,

“dept_id”,

“dev_id”,

“door_id”,

“auth_status”,

“finger_status”,

“face_status”,

“del_card_number”,

“auth_person_schedule_id”,

“auth_dept_schedule_id”,

“auth_person_grp_schedule_id”,

“config_time”,

“download_time”,

“dev_group_id”,

“download_code”

)

select

floor(random()*1000000)+1 as person_id,

floor(random()*1000000)+1 as dept_id,

floor(random()*1000000)+1 as dev_id,

floor(random()*1000000)+1 as door_id,

floor(random()*2)+1 as auth_status,

floor(random()*3)+1 as finger_status,

floor(random()*4)+1 as face_status,

floor(random()*5)+1 as del_card_number,

floor(random()*1000000)+1 as auth_person_schedule_id,

floor(random()*1000000)+1 as auth_dept_schedule_id,

floor(random()*1000000)+1 as auth_person_grp_schedule_id,

clock_timestamp() as config_time,

now() as download_time,

floor(random()*200)+1 as dev_group_id,

floor(random()*1000000)+1 as download_code

from

generate_series(1,100000,1);

##### 性能分析

- 1

- 2

- 3

- 4

- 5

1.创建相同表结构的tb_auth_detail_all不进行分表

2.分别插入10W、100W、1000W数据进行测试

> > 10W 数据 > > > **`插入性能分析`** * 分表

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

– 0数据[初次建分区表耗时]

Insert on tb_auth_detail (cost=0.00…242.50 rows=1000 width=120) (actual time=118005.833…118005.833 rows=0 loops=1)

-> Subquery Scan on “*SELECT*” (cost=0.00…242.50 rows=1000 width=120) (actual time=18.495…7147.848 rows=100000 loops=1)

-> Function Scan on generate_series (cost=0.00…145.00 rows=1000 width=0) (actual time=14.280…461.711 rows=100000 loops=1)

Planning time: 0.248 ms

Trigger tri_ins_auth_detail: time=110770.614 calls=100000

Execution time: 118008.532 ms

- 1

- 2

– 已有10W

Insert on tb_auth_detail (cost=0.00…242.50 rows=1000 width=120) (actual time=33649.149…33649.149 rows=0 loops=1)

-> Subquery Scan on “*SELECT*” (cost=0.00…242.50 rows=1000 width=120) (actual time=13.439…1652.186 rows=100000 loops=1)

-> Function Scan on generate_series (cost=0.00…145.00 rows=1000 width=0) (actual time=13.396…411.380 rows=100000 loops=1)

Planning time: 0.187 ms

Trigger tri_ins_auth_detail: time=31926.839 calls=100000

Execution time: 33651.926 ms

* 单表

- 1

- 2

- 3

- 4

- 5

– 0数据

Insert on tb_auth_detail_all (cost=0.00…242.50 rows=1000 width=120) (actual time=14424.540…14424.540 rows=0 loops=1)

-> Subquery Scan on “*SELECT*” (cost=0.00…242.50 rows=1000 width=120) (actual time=20.354…1273.261 rows=100000 loops=1)

-> Function Scan on generate_series (cost=0.00…145.00 rows=1000 width=0) (actual time=19.205…273.179 rows=100000 loops=1)

Planning time: 0.552 ms

Execution time: 14429.240 ms

- 1

- 2

– 已有10W

Insert on tb_auth_detail_all (cost=0.00…242.50 rows=1000 width=120) (actual time=25942.760…25942.760 rows=0 loops=1)

-> Subquery Scan on “*SELECT*” (cost=0.00…242.50 rows=1000 width=120) (actual time=14.394…1307.630 rows=100000 loops=1)

-> Function Scan on generate_series (cost=0.00…145.00 rows=1000 width=0) (actual time=14.282…283.456 rows=100000 loops=1)

Planning time: 0.281 ms

Execution time: 25948.271 ms

**`搜索性能分析`**

* 分表

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

explain analyze select * from tb_auth_detail_all;

Seq Scan on tb_auth_detail_all (cost=0.00…2635.00 rows=100000 width=86) (actual time=0.007…6.387 rows=100000 loops=1)

Planning time: 2.292 ms

Execution time: 7.462 ms

* 单表

- 1

- 2

- 3

- 4

- 5

explain analyze select * from tb_auth_detail;

-> Seq Scan on tb_auth_detail_172 (cost=0.00…14.22 rows=522 width=86) (actual time=0.004…0.172 rows=522 loops=1)

…

-> Seq Scan on tb_auth_detail_100 (cost=0.00…14.06 rows=506 width=86) (actual time=0.004…0.099 rows=506 loops=1)

-> Seq Scan on tb_auth_detail_72 (cost=0.00…12.80 rows=480 width=86) (actual time=0.005…0.197 rows=480 loops=1)

Planning time: 419.074 ms

Execution time: 79.472 ms

**`配置约束`**

>

> 官网解释 (避免扫描 PostgreSQL 分区表所有分区 )

>

>

>

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

constraint_exclusion 的含义是:当PG生产执行计划时是否考虑表上的约束,这个参数有三个选项

“off,on ,partition” ,默认参数为 off, 意思不使用表上的 constraint 来生成计划,如果设置成

on ,则对所有表生效,生成 PLAN 时会考虑表上的 constraint, 建议设置成 partition,只对分区表

生效,从而避免扫描分区表所有分区。

- 1

- 2

-- constraint\_exclusion = partition # on, off, or partition

set constraint\_exclusion = off;

- 1

- 2

>

> 10W数据

>

>

>

`插入`

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

-- off

Insert on tb\_auth\_detail\_all (cost=0.00..242.50 rows=1000 width=120) (actual time=7993.545..7993.545 rows=0 loops=1)

- 1

- 2

- 3

-> Subquery Scan on “*SELECT*” (cost=0.00…242.50 rows=1000 width=120) (actual time=13.112…1516.601 rows=100000 loops=1)

-> Function Scan on generate_series (cost=0.00…145.00 rows=1000 width=0) (actual time=12.765…287.764 rows=100000 loops=1)

Planning time: 0.402 ms

Execution time: 7997.900 ms

- 1

- 2

-- on

Insert on tb\_auth\_detail\_all (cost=0.00..242.50 rows=1000 width=120) (actual time=8746.615..8746.615 rows=0 loops=1)

- 1

- 2

- 3

-> Subquery Scan on “*SELECT*” (cost=0.00…242.50 rows=1000 width=120) (actual time=13.932…1342.997 rows=100000 loops=1)

-> Function Scan on generate_series (cost=0.00…145.00 rows=1000 width=0) (actual time=13.331…275.213 rows=100000 loops=1)

Planning time: 0.732 ms

Execution time: 8751.193 ms

- 1

- 2

-- partition

Insert on tb\_auth\_detail\_all (cost=0.00..242.50 rows=1000 width=120) (actual time=10885.670..10885.670 rows=0 loops=1)

- 1

- 2

- 3

-> Subquery Scan on “*SELECT*” (cost=0.00…242.50 rows=1000 width=120) (actual time=14.209…1342.818 rows=100000 loops=1)

-> Function Scan on generate_series (cost=0.00…145.00 rows=1000 width=0) (actual time=14.165…290.517 rows=100000 loops=1)

Planning time: 0.124 ms

Execution time: 10888.549 ms

`搜索`

- 1

- 2

- 3

- 4

- 5

explain analyze select * from tb_auth_detail where dev_group_id = 21;

- 1

- 2

-- off

- 1

Append (cost=0.00…882.51 rows=1176 width=87) (actual time=10.694…13.909 rows=976 loops=1)

-> Seq Scan on tb_auth_detail (cost=0.00…0.00 rows=1 width=1464) (actual time=0.003…0.003 rows=0 loops=1)

Filter: (dev_group_id = 21)

-> Index Scan using idx_tb_auth_detail_192_sid on tb_auth_detail_192 (cost=0.28…4.29 rows=1 width=86) (actual time=0.215…0.215 rows=0 loops=1)

Index Cond: (dev_group_id = 21)

…

-> Index Scan using idx_tb_auth_detail_39_sid on tb_auth_detail_39 (cost=0.28…4.29 rows=1 width=86) (actual time=0.041…0.041 rows=0 loops=1)

Index Cond: (dev_group_id = 21)

Planning time: 652.183 ms

Execution time: 17.949 ms

- 1

- 2

-- on

- 1

Append (cost=0.00…28.20 rows=977 width=87) (actual time=0.110…1.498 rows=976 loops=1)

-> Seq Scan on tb_auth_detail (cost=0.00…0.00 rows=1 width=1464) (actual time=0.003…0.003 rows=0 loops=1)

Filter: (dev_group_id = 21)

-> Seq Scan on tb_auth_detail_21 (cost=0.00…28.20 rows=976 width=86) (actual time=0.105…1.472 rows=976 loops=1)

Filter: (dev_group_id = 21)

Planning time: 536.421 ms

Execution time: 2.257 ms

- 1

- 2

-- partition

- 1

Append (cost=0.00…28.20 rows=977 width=87) (actual time=0.009…0.170 rows=976 loops=1)

-> Seq Scan on tb_auth_detail (cost=0.00…0.00 rows=1 width=1464) (actual time=0.001…0.001 rows=0 loops=1)

Filter: (dev_group_id = 21)

-> Seq Scan on tb_auth_detail_21 (cost=0.00…28.20 rows=976 width=86) (actual time=0.007…0.153 rows=976 loops=1)

Filter: (dev_group_id = 21)

Planning time: 409.550 ms

Execution time: 0.841 ms

- 1

- 2

explain analyze select * from tb_auth_detail_all where dev_group_id = 20;

- 1

- 2

-- off

- 1

Bitmap Heap Scan on tb_auth_detail_all (cost=68.16…2746.17 rows=999 width=86) (actual time=1.079…48.217 rows=993 loops=1)

Recheck Cond: (dev_group_id = 20)

Heap Blocks: exact=876

-> Bitmap Index Scan on idx_auth_detail_all_sdev_group_id (cost=0.00…67.91 rows=999 width=0) (actual time=0.901…0.901 rows=993 loops=1)

Index Cond: (dev_group_id = 20)

Planning time: 2.386 ms

Execution time: 48.690 ms

- 1

- 2

-- on

- 1

Append (cost=0.00…28.20 rows=977 width=87) (actual time=0.110…1.498 rows=976 loops=1)

-> Seq Scan on tb_auth_detail (cost=0.00…0.00 rows=1 width=1464) (actual time=0.003…0.003 rows=0 loops=1)

Filter: (dev_group_id = 21)

-> Seq Scan on tb_auth_detail_21 (cost=0.00…28.20 rows=976 width=86) (actual time=0.105…1.472 rows=976 loops=1)

Filter: (dev_group_id = 21)

Planning time: 536.421 ms

Execution time: 2.257 ms

- 1

- 2

-- partition

- 1

Bitmap Heap Scan on tb_auth_detail_all (cost=68.16…2746.17 rows=999 width=86) (actual time=0.195…0.751 rows=993 loops=1)

Recheck Cond: (dev_group_id = 20)

Heap Blocks: exact=876

-> Bitmap Index Scan on idx_auth_detail_all_sdev_group_id (cost=0.00…67.91 rows=999 width=0) (actual time=0.112…0.112 rows=993 loops=1)

Index Cond: (dev_group_id = 20)

Planning time: 0.138 ms

Execution time: 0.889 ms

> > 分析约束对于搜索的影响 > > > * 必须将constraint\_exclusion设置为on或partition,否则planner将无法正常跳过不符合条件的分区表,也即无法发挥表分区的优势 (当constraint\_exclusion为on或者partition时,查询计划器会根据分区表的检查限制将对主表的查询限制在符合检查限制条件的分区表上,直接避免了对不符合条件的分区表的扫描。) * 单表(10W)情况下,性能差异不大 * 分表(10W)情况下,开启约束对于有where条件子句的可以明显提高性能。 #### 其他方案 * 使用Rule将对主表的插入请求重定向到对应的子表

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

CREATE RULE almart_rule_2015_12_31 AS

ON INSERT TO almart

WHERE

date_key = DATE ‘2015-12-31’

DO INSTEAD

INSERT INTO almart_2015_12_31 VALUES (NEW.*);

>

> 与Trigger相比,Rule会带来更大的额外开销,但每个请求只造成一次开销而非每条数据都引入一次开销,所以该方法对大批量的数据插入操作更具优势。然而,实际上在绝大部分场景下,Trigger比Rule的效率更高。同时,COPY操作会忽略Rule,而可以正常触发Trigger。另外,如果使用Rule方式,没有比较简单的方法处理没有被Rule覆盖到的插入操作。此时该数据会被插入到主表中而不会报错,从而无法有效利用表分区的优势。除了使用表继承外,还可使用UNION ALL的方式达到表分区的效果。

>

>

>

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

CREATE VIEW almart AS

SELECT * FROM almart_2015_12_10

UNION ALL

SELECT * FROM almart_2015_12_11

UNION ALL

SELECT * FROM almart_2015_12_12

…

UNION ALL

SELECT * FROM almart_2015_12_30;

- 1

- 2

当有新的分区表时,需要更新该View。实践中,与使用表继承相比,一般不推荐使用该方法。

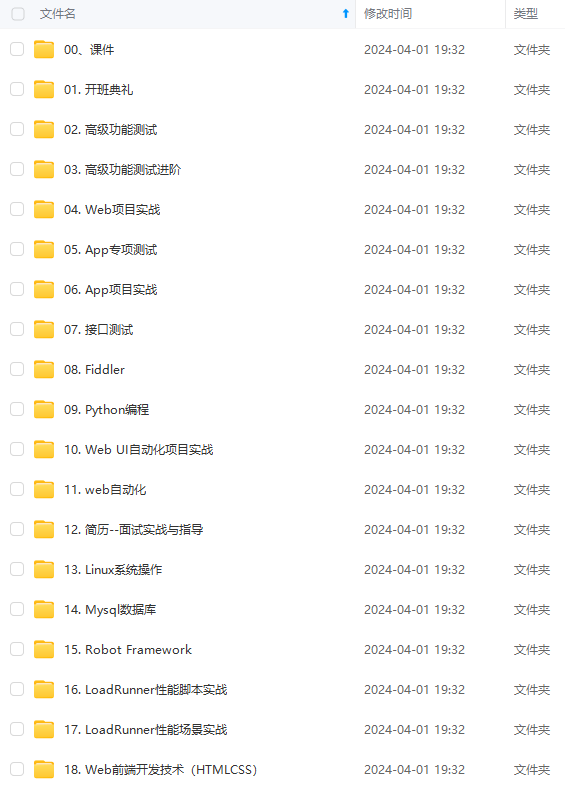

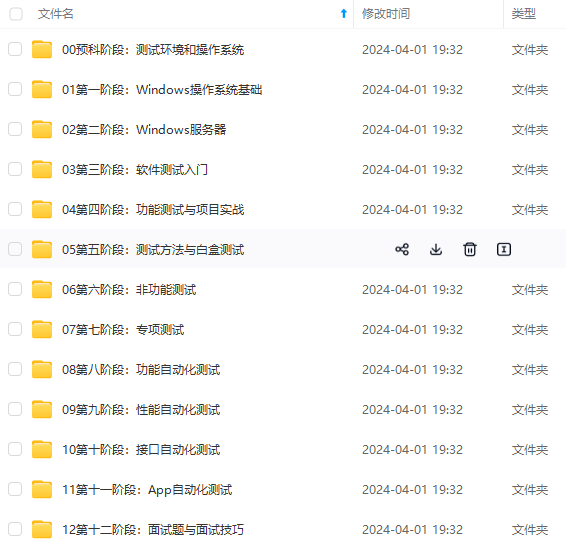

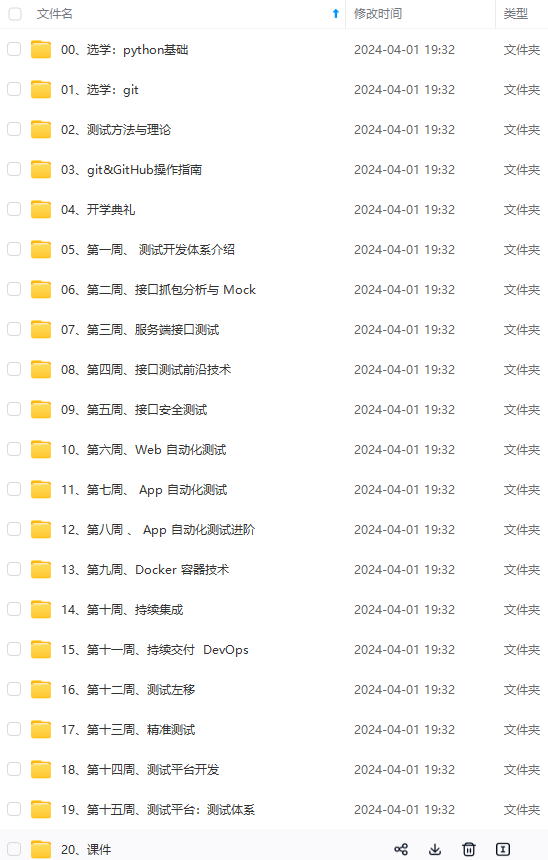

### REFRENCES 1.[PostgreSQL 创建分区表,SQL优化之PostgreSQL Table Partitioning]( ) 2.[PostgreSQL wiki 表分区]( ) ### 微信公众号  扫码关注或搜索`架构探险之道`获取最新文章,坚持每周一更,坚持技术分享的我和你们一起成长 ^\_^ !    **既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上软件测试知识点,真正体系化!** **由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新** **[需要这份系统化的资料的朋友,可以戳这里获取](https://bbs.csdn.net/topics/618631832)** [外链图片转存中...(img-zR4AmJnp-1715898421742)] 扫码关注或搜索`架构探险之道`获取最新文章,坚持每周一更,坚持技术分享的我和你们一起成长 ^\_^ ! [外链图片转存中...(img-SqinCYHm-1715898421744)] [外链图片转存中...(img-PoLsm2Af-1715898421744)] [外链图片转存中...(img-GwfnFFDj-1715898421745)] **既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上软件测试知识点,真正体系化!** **由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新** **[需要这份系统化的资料的朋友,可以戳这里获取](https://bbs.csdn.net/topics/618631832)**

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58