热门标签

热门文章

- 1通过FinalShell连接AWS的EC2服务器_finalshell aws

- 2力扣每日一道系列 --- LeetCode 138. 随机链表的复制_力扣138

- 3Git及Token的使用_git token怎么用

- 4mongodb读写性能分析

- 5springboot-redis设置定时触发任务、删除定时任务_springboot中。redis缓存超时后自动触发接口

- 6SQL 左连接、右链接、内连接_sql左连接

- 7认证过程中常用的加密算法MD5、Base64_如何判断base64和md5

- 8flutter学习笔记_flutter 学习笔记

- 9idea的使用7——版本控制SVN的使用_找不到要更新的版本管理目录

- 10迁移学习、载入预训练权重和冻结权重_使用迁移学习的方法加载预训练权重_怎么样加载预训练权重

当前位置: article > 正文

huggingface 笔记:Llama3-8B_huggingface中llama模型的访问令牌

作者:煮酒与君饮 | 2024-06-22 17:40:39

赞

踩

huggingface中llama模型的访问令牌

1 基本使用

- from transformers import AutoTokenizer

- from transformers import AutoModelForCausalLM

- import transformers

- import torch

- import os

-

-

- os.environ["HF_TOKEN"] = '*******'

- # 设置环境变量,用于存储Hugging Face的访问令牌

-

- model='meta-llama/Meta-Llama-3-8B'

- # 定义模型名称

-

- tokenizer=AutoTokenizer.from_pretrained(model)

- # 使用预训练模型名称加载分词器

-

- llama=AutoModelForCausalLM.from_pretrained(model, device_map="cuda:1")

- # 使用预训练模型名称加载因果语言模型,并将其加载到指定的GPU设备上

- llama.device

- #device(type='cuda', index=1)

2 推理

- import time

-

- begin=time.time()

-

- input_text = "Write me a poem about maching learning."

-

- input_ids = tokenizer(input_text, return_tensors="pt").to(llama.device)

-

- outputs = llama.generate(**input_ids)

-

-

- print(tokenizer.decode(outputs[0]))

-

- end=time.time()

- print(end-begin)

- '''

- <|begin_of_text|>Write me a poem about maching learning. I will use it for a project in my class. You can use whatever words you want. I will use it for a project in my class. You can use whatever words you want.<|end_of_text|>

- 1.718801736831665

- '''

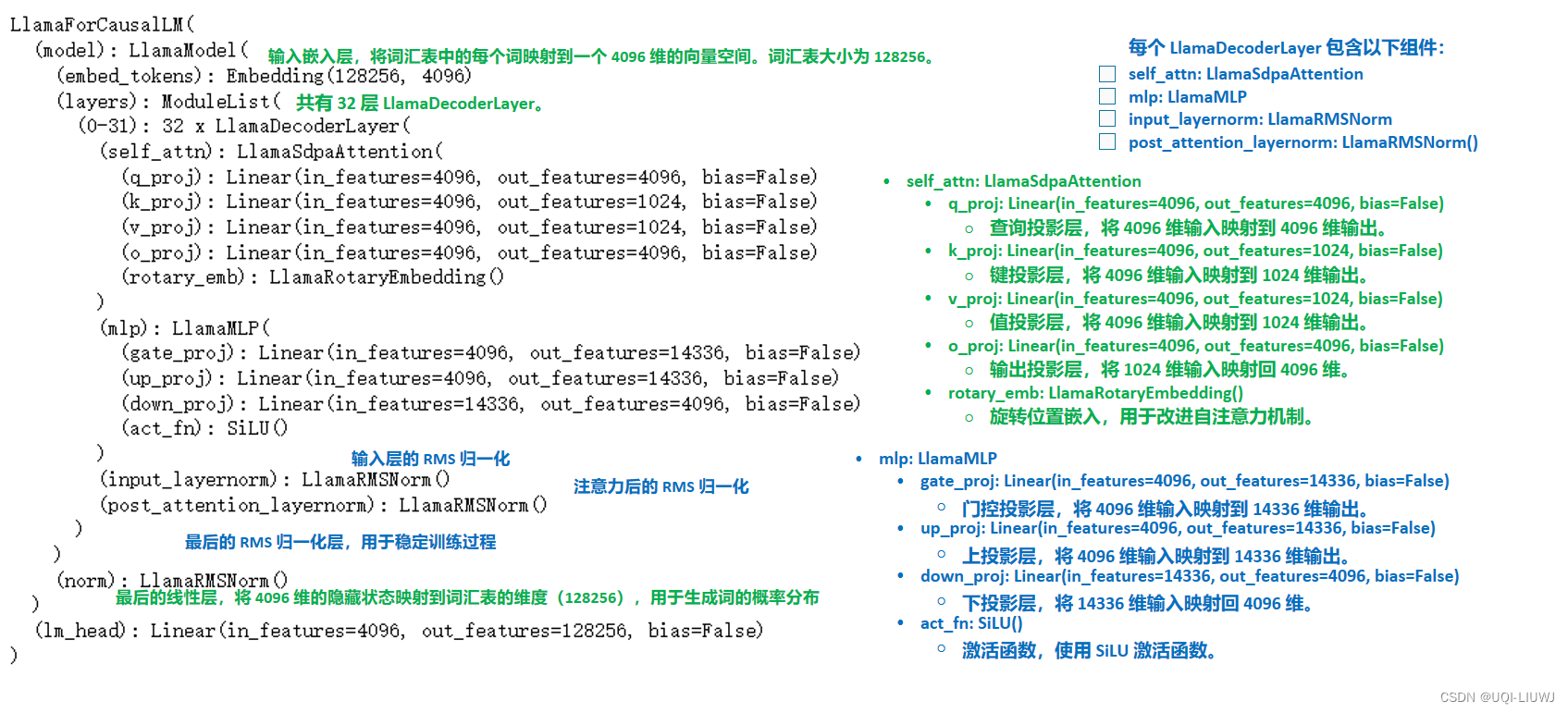

3 模型架构

- llama

- LlamaForCausalLM(

- (model): LlamaModel(

- (embed_tokens): Embedding(128256, 4096)

- (layers): ModuleList(

- (0-31): 32 x LlamaDecoderLayer(

- (self_attn): LlamaSdpaAttention(

- (q_proj): Linear(in_features=4096, out_features=4096, bias=False)

- (k_proj): Linear(in_features=4096, out_features=1024, bias=False)

- (v_proj): Linear(in_features=4096, out_features=1024, bias=False)

- (o_proj): Linear(in_features=4096, out_features=4096, bias=False)

- (rotary_emb): LlamaRotaryEmbedding()

- )

- (mlp): LlamaMLP(

- (gate_proj): Linear(in_features=4096, out_features=14336, bias=False)

- (up_proj): Linear(in_features=4096, out_features=14336, bias=False)

- (down_proj): Linear(in_features=14336, out_features=4096, bias=False)

- (act_fn): SiLU()

- )

- (input_layernorm): LlamaRMSNorm()

- (post_attention_layernorm): LlamaRMSNorm()

- )

- )

- (norm): LlamaRMSNorm()

- )

- (lm_head): Linear(in_features=4096, out_features=128256, bias=False)

- )

- 1

-

声明:本文内容由网友自发贡献,不代表【wpsshop博客】立场,版权归原作者所有,本站不承担相应法律责任。如您发现有侵权的内容,请联系我们。转载请注明出处:https://www.wpsshop.cn/w/煮酒与君饮/article/detail/747201

推荐阅读

相关标签