xml格式是什么示例

In recent times, Machine Learning (a subset of Artificial Intelligence) has been at the forefront of technological advancement. It appears as though it is a strong contender for being the tool that could catapult human abilities and efficiency to the next level.

近年来,机器学习(人工智能的一个子集)一直处于技术进步的最前沿。 似乎它是可以将人类能力和效率提升到新水平的工具的有力竞争者。

While Machine Learning is the term that is commonly used, it is a rather large subset within the realm of AI. Most of the best machine learning based systems in use today actually belong to a subset of Machine Learning known as Deep Learning. The term Deep Learning is used to refer to a Machine learning approach that aims to mimic the functioning of the human brain to some extent. This helps bestow upon machines the power to perform certain tasks that humans can, such as object detection, object classification and much more. The Deep Learning models that are used to achieve this are often known as Neural Networks (since they try to replicate the functioning of the neural connections in the brain).

虽然机器学习是一个常用的术语,但它是AI领域中的一个相当大的子集。 当今使用的大多数最佳的基于机器学习的系统实际上都属于称为深度学习的机器学习的子集。 术语“ 深度学习”用于表示旨在在某种程度上模仿人脑功能的机器学习方法。 这有助于赋予机器执行人类可以执行的某些任务的能力,例如对象检测,对象分类等等。 用于实现此目标的深度学习模型通常称为神经网络 (因为它们试图复制大脑中神经连接的功能)。

Just like any other software, however, Neural Networks also come with their own set of vulnerabilities, and it is important for us to acknowledge these vulnerabilities so that the ethical considerations of the same can be kept in mind when further work in the field is carried out. In recent times, the vulnerability that has gained the most prominence are known as Adversarial Examples. This article aims to shed some light on the nature of Adversarial Examples and some of the ethical concerns that arise with the development of deep learning products as a result of these vulnerabilities.

但是,就像其他任何软件一样,神经网络也具有其自身的漏洞,对我们来说重要的是要认识到这些漏洞,以便在进行进一步的工作时可以牢记该漏洞的道德考虑。出来。 最近,最受关注的漏洞被称为“ 对抗示例”。 本文旨在阐明对抗性示例的性质,以及由于这些漏洞而在深度学习产品开发过程中引起的一些道德关注。

什么是对抗示例? (What are Adversarial Examples?)

The “regular” computer systems that most of us are familiar with can be attacked by hackers, and in the same way, Adversarial Examples can be thought of as a way of “attacking” a deep learning model. The concept of Adversarial Examples are best explained by taking the example of an Image Classification Neural Network. Image classification networks identify features of images through the training dataset and are later able to identify what is present in a new image that they have not seen in the past. Researchers have identified that it is possible to apply a “perturbation” to the image in such a way that the change is so small that it cannot be noticed by the human eye, but it completely changes the prediction made by the Machine Learning model.

我们大多数人都熟悉的“常规”计算机系统可能会受到黑客的攻击,并且以同样的方式,“对抗性示例”也可以被视为“攻击”深度学习模型的一种方式。 以图像分类神经网络为例,可以最好地解释对抗性示例的概念。 图像分类网络通过训练数据集识别图像的特征,并且以后能够识别新图像中存在的,以前从未看到的内容。 研究人员已经确定,可以对图像施加“扰动”,使得变化很小,以至于人眼无法察觉,但是它完全改变了机器学习模型所做的预测。

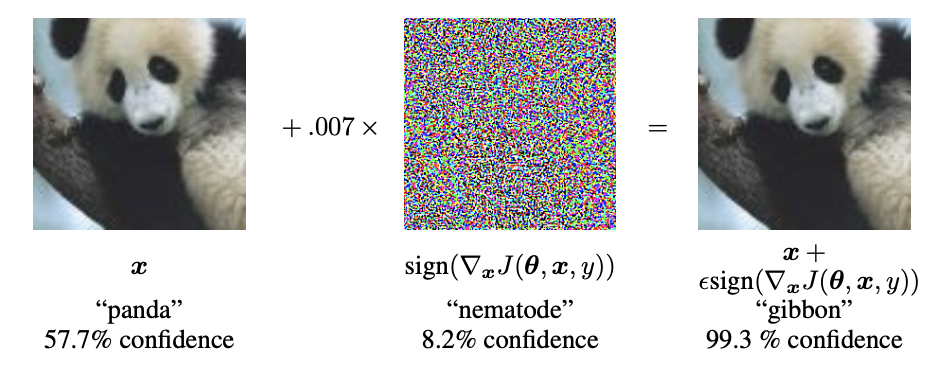

The most famous example is of an Adversarial example generated for the GoogLeNet model (Szegedy et al., 2014) that was trained on the ImageNet dataset.

最著名的例子是针对GoogLeNet模型(Szegedy等人,2014)生成的对抗性示例,该模型在ImageNet数据集上进行了训练。

As can be seen in the image above, the GoogLeNet model predicted that the initial image was a Panda with a confidence of 57.7%, however, after adding the slight perturbation, even though there is no apparent visual change in the image, the model now classifies it as a Gibbon with a confidence of 99.3%.

从上图可以看出,GoogLeNet模型预测初始图像是熊猫 ,置信度为57.7%,但是,在添加了微扰之后,即使图像中没有明显的视觉变化,该模型现在将其分类为长臂猿 ,可信度为99.3%。

The perturbation added above might appear to be a random assortment of pixels, however, in reality, each of the pixels in the perturbation have a value (represented as a color) that is calculated using complicated Mathematical algorithms. Adversarial Examples are not limited just to image classification models; they can also be used with audio and other types of files, however, the underlying principle remains the same as what has been explained above.

上面添加的扰动似乎是像素的随机分类,但是,实际上,扰动中的每个像素都有一个值(表示为颜色),该值是使用复杂的数学算法计算得出的。 对抗示例不仅限于图像分类模型; 它们也可以与音频和其他类型的文件一起使用,但是其基本原理与上面说明的相同。

There are many different algorithms that have varying degrees of success on different types of models, and an implementation of many of these can be found in the Celeverhans library (Papernot et al.)

有许多不同的算法在不同类型的模型上具有不同程度的成功,可以在Celeverhans库中找到其中的许多实现方法(Papernot等人)。

Generally, Adversarial attacks can be classified into one of two types:

通常,对抗性攻击可分为以下两种类型之一:

- Targeted Adversarial Attack 有针对性的对抗攻击

- Untargeted Adversarial Attack 无目标对抗攻击

有针对性的对抗攻击 (Targeted Adversarial Attack)

A targeted Adversarial Attack is an attack in which the aim of the perturbation is to cause the model to predict a specific wrong class.

有针对性的对抗攻击是一种攻击,其中摄动的目的是使模型预测特定的错误类别。

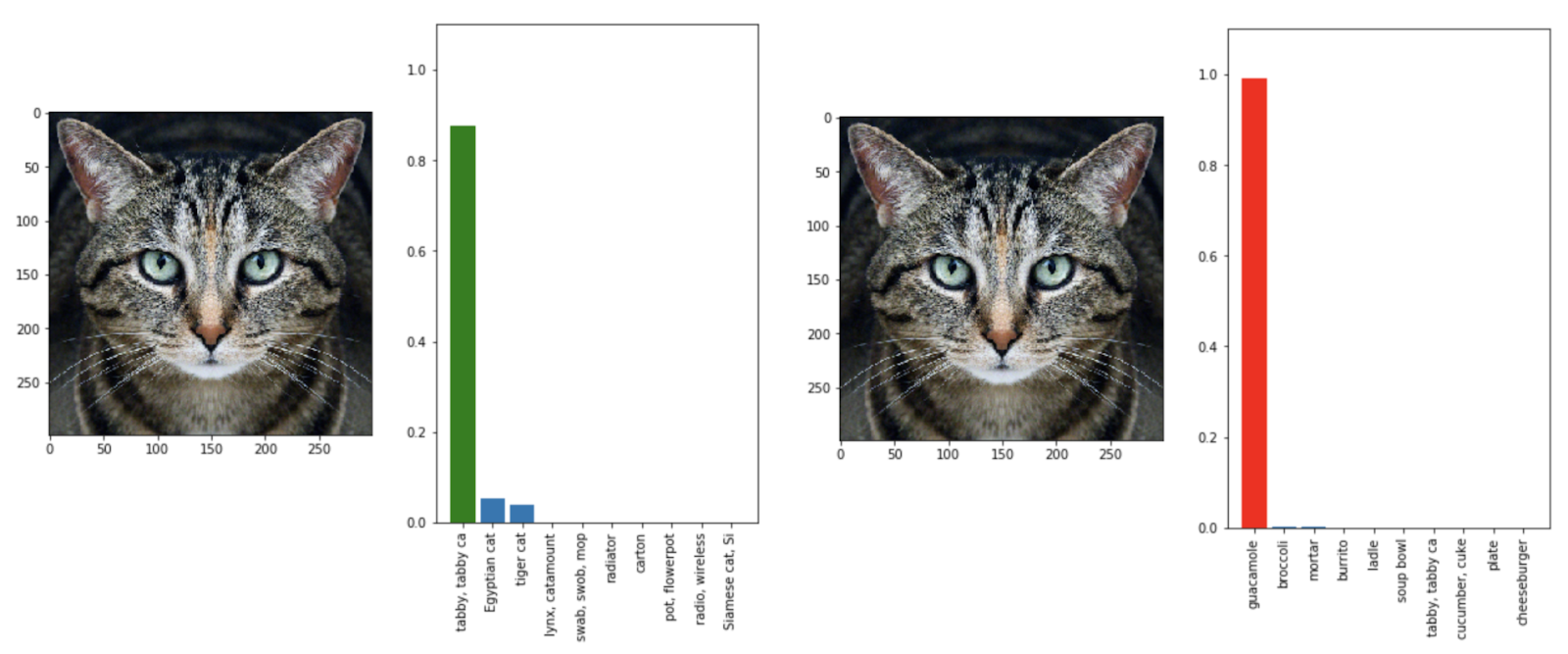

The image on the left shows the original image was classified correctly as being a Tabby Cat. Now, as part of the Targeted Attack that was conducted, the attacker decided that he would like the image to be classified as a guacamole instead. Thus, the perturbation was created in such a manner that it would force the model to predict the perturbed image as a guacamole and nothing else (i.e. Guacamole was the target class).

左图显示原始图像被正确分类为虎斑猫 。 现在,作为实施的有针对性攻击的一部分,攻击者决定将其图像分类为鳄梨调味酱 。 因此,以这样一种方式来创建扰动,该扰动将迫使模型将扰动的图像预测为鳄梨调味酱而不是其他(即鳄梨 调味酱是目标类别)。

非目标对抗攻击 (Untargeted Adversarial Attack)

As opposed to a Targeted Attack, an Untargeted Adversarial Attack involves the generation of a perturbation that will cause the model to predict the image as something that it is not.However, the attacker doesn’t explicitly choose what he would like the wrong prediction to be.

与有针对性的攻击相反,无目标的对抗攻击涉及到扰动的产生,这将导致模型将图像预测为不是它的东西,但是攻击者并未明确选择他想要的错误预测是。

An intuitive way to think about the difference between a Targeted Attack and an Untargeted Attack is that a Targeted Attack aims to generate a perturbation that will maximize the probability of some class other than the correct class which is chosen by the attacker (i.e. the target class) whereas an Untargeted Attack aims to generate a perturbation that will minimize the probability of the actual class to such an extent that the probability of some class other than the actual class becomes greater than the probability of the target class.

考虑定向攻击和非定向攻击之间区别的一种直观方法是,定向攻击旨在产生一种扰动,该扰动将使攻击者选择的正确类别(即目标类别)以外的某些类别的概率最大化),而无目标攻击的目的是产生一种扰动,以将实际类别的概率最小化到某种程度,使得除实际类别之外的某些类别的概率变得大于目标类别的概率。

道德问题 (Ethical Concerns)

As you read this, you might begin to think of some of the ethical concerns arising from Adversarial Examples, however, the true magnitude of these concerns only becomes apparent when we take a real-world example. We can take the development of self-driving cars as an example. Self driving cars tend to use some kind of deep learning framework to identify road signs and that helps the car perform actions on the basis of those signs. It turns out that by making actual minor alterations to the physical road signs, they too can serve as Adversarial Examples (it is possible to generate Adversarial Examples in the real world as well). In such a situation, one could modify a Stop sign in such a manner that cars would interpret it as a Turn Left sign, and this could have disastrous effects.

在阅读本文时,您可能会开始想到对抗性示例引起的一些道德关注,但是,只有当我们以实际示例为例时,这些关注的真实程度才变得显而易见。 我们可以以无人驾驶汽车的发展为例。 自动驾驶汽车倾向于使用某种深度学习框架来识别道路标志,并帮助汽车根据这些标志执行动作。 事实证明,通过对实际路标进行实际的细微改动,它们也可以用作对抗示例(也可以在现实世界中生成对抗示例)。 在这种情况下,可以以某种方式修改“ 停车”标志,使汽车将其解释为“ 左转”标志,这可能会造成灾难性的后果。

An example of this can be seen in the image below, where a physical change made to the Stop sign causes it to be interpreted as a Speed Limit sign.

下图显示了一个示例,其中对停止标志的物理更改导致将其解释为限速标志。

As a result of this, it is with good reason that people see some ethical concerns with the development of technologies like self-driving cars. While this should in no way serve as an impediment to the development of such technologies, it should make us wary of the vulnerabilities in Deep Learning models. We must ensure that further research is conducted to find ways to secure models against such attacks so that advanced technologies that make use of deep learning (like self-driving cars) become safe for use in production.

结果,有充分的理由使人们看到诸如自动驾驶汽车之类的技术发展方面的一些道德问题。 尽管这绝不妨碍此类技术的发展,但它应该使我们警惕深度学习模型中的漏洞。 我们必须确保进行进一步的研究,以找到保护模型免受此类攻击的方法,以使利用深度学习的先进技术(例如自动驾驶汽车)在生产中安全使用。

翻译自: https://medium.com/analytics-vidhya/what-are-adversarial-examples-e796b4b00d32

xml格式是什么示例