
AG_NEWS Text Classification in Practice: Notes (RNN, LSTM, GRU)

Author: 知新_RL | 2024-05-07 00:30:33


Full code

Model structure

```python
import torch.nn as nn


class RNNnet(nn.Module):
    def __init__(self, len_vocab, embedding_size, hidden_size, num_class, num_layers, mode):
        super(RNNnet, self).__init__()
        self.hidden = hidden_size
        self.num_layers = num_layers
        self.mode = mode
        self.embedding = nn.Embedding(len_vocab, embedding_size)
        if mode == "rnn":
            self.rnn = nn.RNN(embedding_size, hidden_size, num_layers=num_layers, batch_first=True)
        elif mode == "lstm":
            self.rnn = nn.LSTM(embedding_size, hidden_size, num_layers=num_layers, batch_first=True)
        elif mode == "gru":
            self.rnn = nn.GRU(embedding_size, hidden_size, num_layers=num_layers, batch_first=True)
        else:
            raise ValueError(f"unknown mode: {mode!r} (expected 'rnn', 'lstm' or 'gru')")
        self.fc = nn.Linear(hidden_size, num_class)

    def forward(self, text):
        """
        :param text: token ids, [batch_size, sentence_len] (batch_first=True)
        :return: logits, [batch_size, num_class]
        """
        # embedded: [batch_size, sentence_len, embedding_size]
        embedded = self.embedding(text)
        # output: [batch_size, sentence_len, hidden_size]
        # hidden: [num_layers, batch_size, hidden_size]
        if self.mode == "lstm":
            output, (hidden, cell) = self.rnn(embedded)  # LSTM also returns the cell state
        else:
            output, hidden = self.rnn(embedded)  # RNN and GRU return only the hidden state
        # Classify from the top layer's final hidden state
        return self.fc(hidden[-1])
```
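A quick shape check helps confirm the comments in `forward()`. The sketch below uses small, arbitrary sizes (not the article's configuration) and a 2-layer `nn.LSTM` with `batch_first=True`, and shows why the classifier reads `hidden[-1]`: for a unidirectional recurrent layer, the top layer's final hidden state is the same as the last time step of `output`.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Arbitrary toy sizes for illustration only
batch_size, seq_len, vocab_size = 4, 7, 100
embedding_size, hidden_size, num_layers = 16, 32, 2

embedding = nn.Embedding(vocab_size, embedding_size)
lstm = nn.LSTM(embedding_size, hidden_size, num_layers=num_layers, batch_first=True)

# With batch_first=True the token ids are [batch_size, seq_len]
text = torch.randint(0, vocab_size, (batch_size, seq_len))
embedded = embedding(text)               # [batch_size, seq_len, embedding_size]
output, (hidden, cell) = lstm(embedded)

print(output.shape)  # torch.Size([4, 7, 32]) -> [batch, seq_len, hidden]
print(hidden.shape)  # torch.Size([2, 4, 32]) -> [num_layers, batch, hidden]

# Top layer's final hidden state == last time step of the top layer's output
print(torch.allclose(hidden[-1], output[:, -1, :]))  # True
```

One caveat: because the batches are padded at the end, `hidden[-1]` for a short sentence has also read the trailing padding tokens. `torch.nn.utils.rnn.pack_padded_sequence` is the usual way to avoid that, although the code here does not use it.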

Main code

```python
import ast
import os

import matplotlib.pyplot as plt
import torch
import torch.nn as nn
import torch.optim
from torch.utils.data import DataLoader
from torchtext.data.utils import get_tokenizer
from torchtext.datasets import AG_NEWS
from torchtext.vocab import build_vocab_from_iterator
from tqdm import tqdm

from model_set import RNNnet  # the model above, saved as model_set.py


def loaddata(config):
    # Step 1: load the dataset
    print("Step1: Loading Dataset")
    # [Dataset] AG_NEWS news corpus; only the title and description fields are used.
    # 4 classes: World, Sports, Business, Sci/Tech.
    # [Samples] 120,000 training samples (train.csv) and 7,600 test samples (test.csv),
    # i.e. 30,000 training and 1,900 test samples per class.
    os.makedirs(config.datapath, exist_ok=True)
    train_dataset_o, test_dataset_o = AG_NEWS(root=config.datapath, split=('train', 'test'))
    classes = ['World', 'Sports', 'Business', 'Sci/Tech']
    return train_dataset_o, test_dataset_o, classes


def bulvocab(traindata):
    # Step 2: tokenize and build the vocabulary
    print("Step2: Building VocabSet")
    tokenizer = get_tokenizer('basic_english')  # basic English tokenizer, splits a sentence into tokens (like jieba)

    def yield_tokens(data_iter):  # token generator
        for _, text in data_iter:
            # yield produces one item at a time, using far less memory than a full list
            yield tokenizer(text)

    # Build the vocabulary from the training data only; the test set is not needed.
    # <PAD> (index 0) is the padding token; <unk> stands for rare or out-of-vocabulary words.
    vocab = build_vocab_from_iterator(yield_tokens(traindata), specials=["<PAD>", "<unk>"])
    # Default index: out-of-vocabulary (OOV) tokens map to <unk> instead of <PAD>
    vocab.set_default_index(vocab["<unk>"])
    len_vocab = len(vocab)
    print(f"len vocab:{len_vocab}")
    return vocab, len_vocab


def tensor_padding(tensor_list, seq_len):
    # Truncate or right-pad every tensor with 0 (<PAD>) to exactly seq_len tokens
    padded_tensors = []
    for tensor in tensor_list:
        tensor = tensor[:seq_len]
        padding = (0, seq_len - len(tensor))  # pad at the end
        padded_tensors.append(torch.nn.functional.pad(tensor, padding, mode='constant', value=0))
    return padded_tensors


def dateset2loader(config, vocab, traindata, testdata):
    # Step 3: build the DataLoaders
    print("Step3: Dataset -> Dataloader")
    tokenizer = get_tokenizer('basic_english')
    # text_pipeline turns a string into a list of vocabulary indices
    text_pipeline = lambda x: vocab(tokenizer(x))
    # label_pipeline maps AG_NEWS labels 1..4 to 0..3
    label_pipeline = lambda x: int(x) - 1

    def collate_batch(batch):
        """
        Convert raw samples to tensors, e.g. (3, "Wall ...") -> (2, [467, ...]).
        :param batch: list of (label, text) pairs
        :return: labels [batch_size], token ids [batch_size, seq_len]
        """
        label_list, text_list = [], []
        for (_label, _text) in batch:
            label_list.append(label_pipeline(_label))
            text_list.append(torch.tensor(text_pipeline(_text), dtype=torch.int64))

        # Choose the common sentence length for this batch
        if config.seq_mode == "min":
            seq_len = min(len(item) for item in text_list)
        elif config.seq_mode == "max":
            seq_len = max(len(item) for item in text_list)
        elif config.seq_mode == "avg":
            seq_len = sum(len(item) for item in text_list) / len(text_list)
        elif isinstance(config.seq_mode, int):
            seq_len = config.seq_mode
        else:
            seq_len = min(len(item) for item in text_list)
        seq_len = int(seq_len)
        # Give every sequence in the batch the same length
        batch_seq = torch.stack(tensor_padding(text_list, seq_len))
        label_list = torch.tensor(label_list, dtype=torch.int64)
        return label_list, batch_seq

    train_dataloader = DataLoader(traindata, batch_size=config.batchsize, shuffle=True, collate_fn=collate_batch)
    # The test set does not need shuffling
    test_dataloader = DataLoader(testdata, batch_size=config.batchsize, shuffle=False, collate_fn=collate_batch)
    return train_dataloader, test_dataloader


def build_model(config, len_vocab, classes):
    return RNNnet(len_vocab=len_vocab, embedding_size=config.embedding_size,
                  hidden_size=config.hidden_size, num_class=len(classes),
                  num_layers=config.num_layers, mode=config.mode)


def load_model(config, len_vocab, classes, device):
    model = build_model(config, len_vocab, classes)
    # map_location lets a GPU-trained checkpoint load on a CPU-only machine
    model.load_state_dict(torch.load(f"{config.savepath}/{config.modelpath}", map_location=device))
    return model.to(device).eval()


def evaluate(model, dataloader, device):
    # Accuracy (%) on a dataloader; gradients are not needed for evaluation
    model.eval()
    total_acc, count = 0, 0
    with torch.no_grad():
        for label, text in tqdm(dataloader):
            text, label = text.to(device), label.to(device)
            predict = torch.argmax(model(text), dim=1)  # predicted class vs. true label
            total_acc += (predict == label).sum().item()
            count += len(label)
    return round(total_acc / count * 100, 2)


def model_train(config, len_vocab, classes, train_dataloader, test_dataloader):
    # Build the model
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    rnn_model = build_model(config, len_vocab, classes).to(device)
    optimizer = torch.optim.Adam(rnn_model.parameters(), lr=config.l_r)
    loss_fn = nn.CrossEntropyLoss()

    # Train the model
    LOSS, ACC, TACC = [], [], []
    os.makedirs(config.savepath, exist_ok=True)
    best_acc = 0
    for epoch in range(config.epochs):
        loop = tqdm(train_dataloader, desc='Train')
        total_loss, total_acc, count, i = 0, 0, 0, 0
        rnn_model.train()
        for label, text in loop:
            text, label = text.to(device), label.to(device)
            optimizer.zero_grad()
            output = rnn_model(text)  # forward pass
            loss = loss_fn(output, label)
            loss.backward()
            optimizer.step()

            predict = torch.argmax(output, dim=1)  # compare predictions with the true labels
            acc = (predict == label).sum()
            total_loss += loss.item()
            total_acc += acc.item()
            count += len(label)
            i += 1
            # Show progress
            loop.set_description(f'Epoch [{epoch + 1}/{config.epochs}]')
            loop.set_postfix(loss=round(loss.item(), 4), acc=round(acc.item() / len(label) * 100, 2))
        print(f"epoch_loss:{round(total_loss / i, 4)}\nepoch_acc:{round(total_acc / count * 100, 2)}%")
        LOSS.append(round(total_loss / i, 4))
        ACC.append(round(total_acc / count * 100, 4))

        temp_acc = evaluate(rnn_model, test_dataloader, device)
        print(f"Test accuracy: {temp_acc}%")
        TACC.append(temp_acc)
        # Save the parameters of the best model so far
        if temp_acc > best_acc:
            best_acc = temp_acc
            torch.save(rnn_model.state_dict(), f"{config.savepath}/{config.modelpath}")

    print(f"LOSS_array:{LOSS}")
    print(f"ACC_array:{ACC}")
    print(f"TACC_array:{TACC}")
    with open(config.logpath, 'w') as f:
        f.write(f"LOSS_array:{LOSS}\nACC_array:{ACC}\nTACC_array:{TACC}")


def modeltest(config, len_vocab, classes, test_dataloader):
    # Evaluate the saved best model
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    rnn_model_test = load_model(config, len_vocab, classes, device)
    print(f"Test accuracy: {evaluate(rnn_model_test, test_dataloader, device)}%")


def plot_result(logpath, mode):
    mode_set = ["loss", 'train_accuracy', 'test_accuracy']
    if mode not in mode_set:
        raise ValueError(f"wrong mode: {mode!r}, expected one of {mode_set}")
    color = ['blue', 'red', 'black']
    with open(logpath, "r") as f:
        line = f.readlines()[mode_set.index(mode)]
    # literal_eval parses the list literal safely (unlike eval)
    y = ast.literal_eval(line[line.index(':') + 1:].strip())
    x = list(range(len(y)))

    plt.figure()
    # Hide the top and right spines
    ax = plt.axes()
    ax.spines['top'].set_visible(False)
    ax.spines['right'].set_visible(False)
    plt.xlabel('epoch')
    plt.ylabel(mode)
    plt.plot(x, y, color=color[mode_set.index(mode)], linestyle="solid", label=f"train {mode}")
    plt.legend()
    plt.title(f'train {mode} curve')
    # Save before show(): after the window is closed the current figure is empty
    plt.savefig(f"{mode}.png")
    plt.show()


class Config:
    def __init__(self):
        # Dataset: AG_NEWS, text classification task
        self.datapath = './data'
        self.savepath = './save_model'
        self.modelpath = 'rnn_model.pt'
        self.logpath = 'log_best.txt'
        self.embedding_size = 128
        self.hidden_size = 256
        self.num_layers = 1  # number of recurrent layers
        self.l_r = 1e-3
        self.epochs = 50
        self.batchsize = 1024
        self.plotloss = True
        self.plotacc = True
        self.train = True
        self.test = True
        self.seq_mode = "avg"  # "min", "max", "avg", or an int for a fixed length
        self.test_one = False  # classify the custom texts in test_self (one per line)
        self.test_self = "./test_self.txt"
        self.mode = "lstm"  # "rnn", "lstm" or "gru"

    def parm(self):
        # Print every setting
        for key, value in vars(self).items():
            print(f"{key}={value}")


def simple(config, vocab, len_vocab, classes, text_one):
    # Classify raw texts, one prediction per line
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    rnn_model_test = load_model(config, len_vocab, classes, device)
    tokenizer = get_tokenizer('basic_english')
    text_pipeline = lambda x: vocab(tokenizer(x))

    with torch.no_grad():
        for text in text_one:
            if not text.strip():
                continue  # skip blank lines
            # unsqueeze(0) adds the batch dimension: [1, sentence_len]
            text_one_tensor = torch.tensor(text_pipeline(text), dtype=torch.int64).unsqueeze(0).to(device)
            pred = torch.argmax(rnn_model_test(text_one_tensor), dim=1).item()
            print(f"Predicted label: {pred + 1} ({classes[pred]})")


if __name__ == "__main__":
    config = Config()
    config.parm()
    if config.train or config.test or config.test_one:
        # Load the data and build the vocabulary once for all steps
        train_dataset_o, test_dataset_o, classes = loaddata(config)
        vocab, len_vocab = bulvocab(train_dataset_o)
        train_dataloader, test_dataloader = dateset2loader(config, vocab, train_dataset_o, test_dataset_o)
    if config.train:
        model_train(config, len_vocab, classes, train_dataloader, test_dataloader)
    if config.test:
        modeltest(config, len_vocab, classes, test_dataloader)
    if config.plotloss:
        plot_result(config.logpath, 'loss')
    if config.plotacc:
        plot_result(config.logpath, 'train_accuracy')
        plot_result(config.logpath, 'test_accuracy')
    if config.test_one:
        with open(config.test_self, "r") as f:
            simple(config, vocab, len_vocab, classes, f.readlines())
```
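Steps 2 and 3 of the main code (text → tokens → vocabulary indices, plus the label shift) can be illustrated without torchtext. This is a minimal plain-Python sketch: `simple_tokenizer` is a hypothetical stand-in for `basic_english` (lowercasing and whitespace splitting only), and the toy sentences are made up. Like `build_vocab_from_iterator`, it puts the specials first and orders the remaining tokens by frequency; the alphabetical tie-breaking is this sketch's own choice. It reserves a separate `<unk>` index so unknown words are not confused with padding.

```python
from collections import Counter


def simple_tokenizer(text):
    # Hypothetical stand-in for torchtext's 'basic_english': lowercase + whitespace split only
    return text.lower().split()


def build_vocab(texts, specials=("<PAD>", "<unk>")):
    counter = Counter(tok for t in texts for tok in simple_tokenizer(t))
    # Specials first, then tokens by descending frequency (ties broken alphabetically)
    ranked = sorted(counter.items(), key=lambda kv: (-kv[1], kv[0]))
    ordered = list(specials) + [tok for tok, _ in ranked if tok not in specials]
    return {tok: idx for idx, tok in enumerate(ordered)}


def text_pipeline(vocab, text, default="<unk>"):
    # Unknown tokens fall back to a default index, like vocab.set_default_index()
    return [vocab.get(tok, vocab[default]) for tok in simple_tokenizer(text)]


def label_pipeline(label):
    # AG_NEWS labels are 1..4; CrossEntropyLoss expects class indices 0..3
    return int(label) - 1


train_texts = ["Stocks rally as markets rise", "Team wins the final", "Markets fall as stocks slide"]
vocab = build_vocab(train_texts)
print(text_pipeline(vocab, "Stocks wins the cup"))  # [4, 12, 11, 1]
print(label_pipeline(3))                            # 2
```

"cup" never appears in the training texts, so it maps to index 1 (`<unk>`) while index 0 stays reserved for padding.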

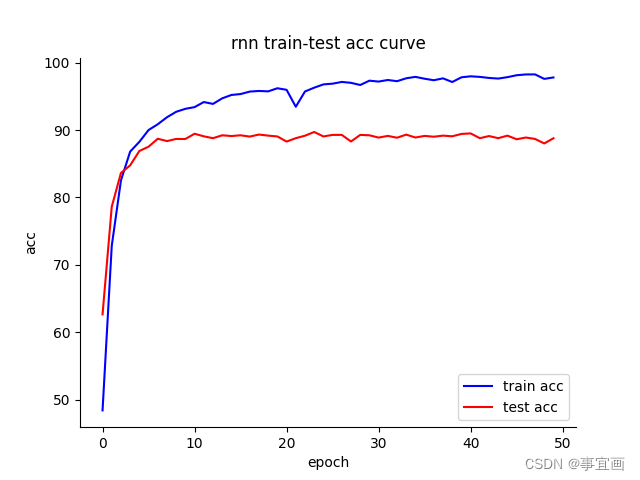

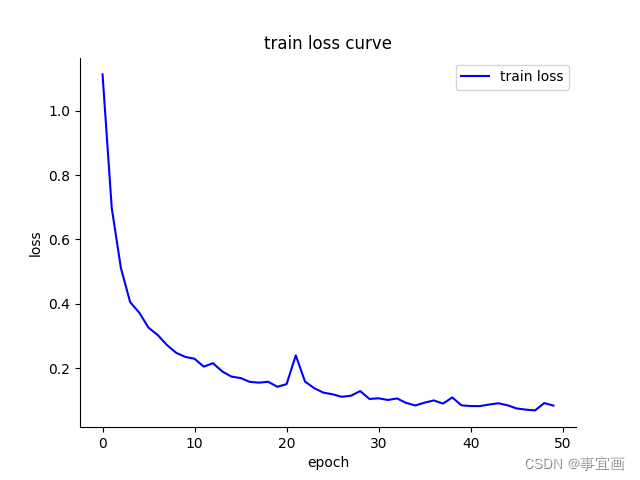

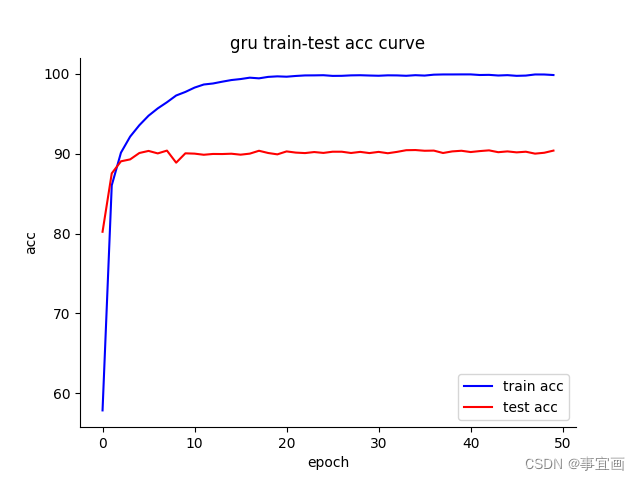

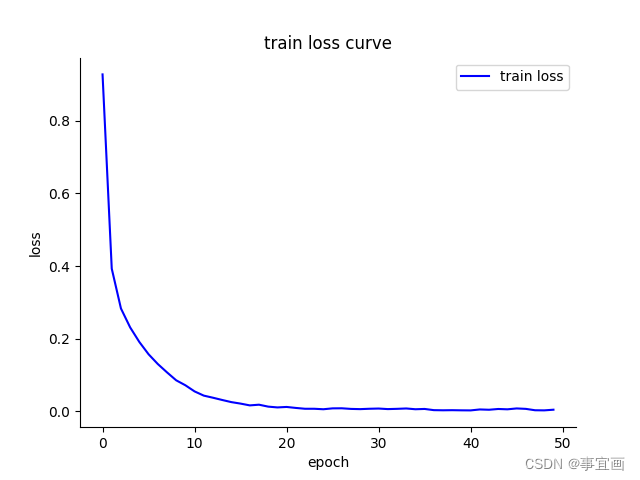

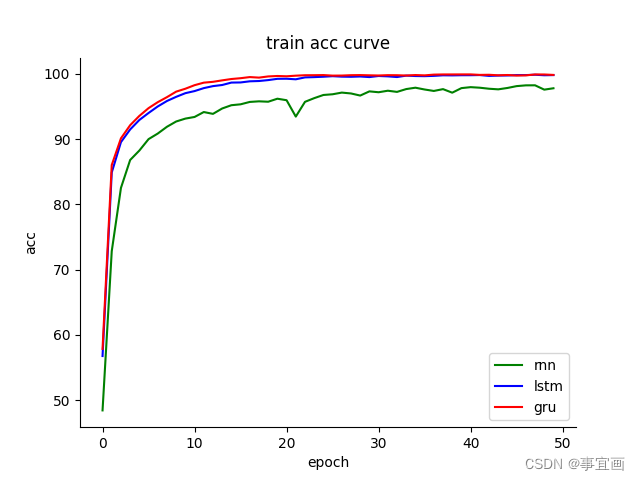

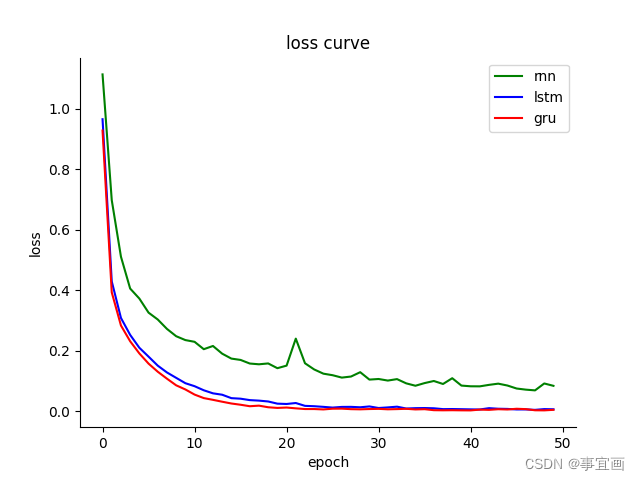
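`model_train` writes the three curves to `log_best.txt` as Python list literals, one per line (`LOSS_array:[...]`, `ACC_array:[...]`, `TACC_array:[...]`), and `plot_result` picks a line by its position. The round trip can be sketched on an in-memory string; the numbers below are made up for illustration, and `ast.literal_eval` is used because it only accepts literals, unlike `eval`.

```python
import ast


def write_log(loss, acc, tacc):
    # Same three-line layout that model_train writes to config.logpath
    return f"LOSS_array:{loss}\nACC_array:{acc}\nTACC_array:{tacc}"


def read_series(log_text, mode):
    mode_set = ["loss", "train_accuracy", "test_accuracy"]
    if mode not in mode_set:
        raise ValueError(f"mode must be one of {mode_set}")
    line = log_text.splitlines()[mode_set.index(mode)]
    # Everything after the first ':' is a Python list literal
    return ast.literal_eval(line[line.index(":") + 1:])


# Illustrative values only, not results from the article
log_text = write_log([1.1, 0.5], [60.0, 85.5], [80.1, 88.2])
print(read_series(log_text, "test_accuracy"))  # [80.1, 88.2]
```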

Experimental results

Main parameters

Run on the CPU (AMD R7 5800U) with 16 GB of RAM.

- embedding_size=128

- hidden_size=256

- num_layers=1 # number of recurrent layers (RNN, LSTM, GRU)

- l_r=0.001

- epochs=50

- batchsize=1024

- seq_mode="avg" # pad/truncate each batch to its average sentence length
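With `seq_mode="avg"`, each batch is cut or padded to the integer part of its mean sentence length, so longer sentences lose their tail and shorter ones get `<PAD>` (index 0) appended. A plain-Python sketch of that rule on a toy batch of token ids (made-up values), mirroring the branches in `collate_batch`:

```python
def batch_seq_len(lengths, seq_mode="avg"):
    # Same branch order as collate_batch
    if seq_mode == "min":
        return min(lengths)
    if seq_mode == "max":
        return max(lengths)
    if seq_mode == "avg":
        return int(sum(lengths) / len(lengths))  # int() drops the fractional part
    if isinstance(seq_mode, int):
        return seq_mode
    return min(lengths)


def pad_or_truncate(seq, seq_len, pad_index=0):
    # Keep at most seq_len tokens, then right-pad with pad_index
    return seq[:seq_len] + [pad_index] * (seq_len - len(seq))


batch = [[5, 8, 2], [7, 1, 9, 4, 3, 6], [2, 2, 8, 5]]
seq_len = batch_seq_len([len(s) for s in batch])  # (3 + 6 + 4) / 3 = 4.33 -> 4
padded = [pad_or_truncate(s, seq_len) for s in batch]
print(seq_len)  # 4
print(padded)   # [[5, 8, 2, 0], [7, 1, 9, 4], [2, 2, 8, 5]]
```

"max" avoids truncation at the cost of more padding per batch, while "min" discards the most text; "avg" sits between the two.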

RNN (50 epochs, about 50 seconds per epoch)

Test accuracy: 89.75%

LSTM (50 epochs, about 1 min 30 s per epoch)

Test accuracy: 90.67%

GRU (50 epochs, about 2 min 10 s per epoch)

Test accuracy: 90.46%
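To put the accuracy differences next to their cost, the reported per-epoch times can be turned into rough total training times. This assumes every one of the 50 epochs took exactly the stated time, so the totals are estimates rather than measurements.

```python
# Per-epoch times (seconds) and test accuracies (%) as reported above
epoch_seconds = {"RNN": 50, "LSTM": 90, "GRU": 130}
test_acc = {"RNN": 89.75, "LSTM": 90.67, "GRU": 90.46}
epochs = 50


def total_minutes(name):
    # Assumes a constant time per epoch
    return epoch_seconds[name] * epochs / 60


for name in epoch_seconds:
    gain = round(test_acc[name] - test_acc["RNN"], 2)
    print(f"{name}: ~{total_minutes(name):.1f} min, {test_acc[name]}% ({gain:+} pp vs RNN)")
```

Roughly: RNN about 42 minutes, LSTM about 75 minutes and GRU about 108 minutes, for a gain of under one percentage point over the plain RNN.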

Testing on unseen data

This is a sports news article picked at random from the web. The original was in Chinese; I had Google Translate render it into English:

On April 17, Beijing time, in the NBA play-offs, the Kings defeated the Warriors 118-94, the Warriors were eliminated from the playoffs, and the Kings will compete with the Pelicans for the last playoff spot. Kings: Keegan Murray 32 points and 9 rebounds, Fox 24 points, 4 rebounds and 6 assists, Barnes 17 points, 4 rebounds and 3 assists, Sabonis 16 points, 12 rebounds and 7 assists, Ellis 15 points, 4 rebounds, 5 assists, 3 steals and 3 blocks. Warriors: Curry 22 points and 4 rebounds, Kuminga 16 points and 7 rebounds, Moody 16 points and 3 rebounds, Wiggins 12 points and 3 rebounds, Dream 12 points, 3 rebounds and 6 assists, Paul 3 points and 2 assists, Klay Thompson did not score a point on 0-of-10 shooting. In the first quarter of the game, the Kings were hot with a three-point hand, leading the Warriors by 9 points to end the first quarter. In the second quarter, the Kings played a climax to pull the score away, and the Warriors continued to chase points at the end of the quarter. At halftime, the Warriors trailed the Kings by four points. In the third quarter, the Warriors once chased the difference to only one point, and the King continued to open the gap again with a three-point hand, and the King led by 15 points into the final quarter. In the final quarter, the Kings continued to widen the difference, and the Warriors collapsed, with a margin of more than 20 points. In the end, the Kings defeated the Warriors 118-94.

I fed this text to each of the three models:

rnn:

lstm:

gru:

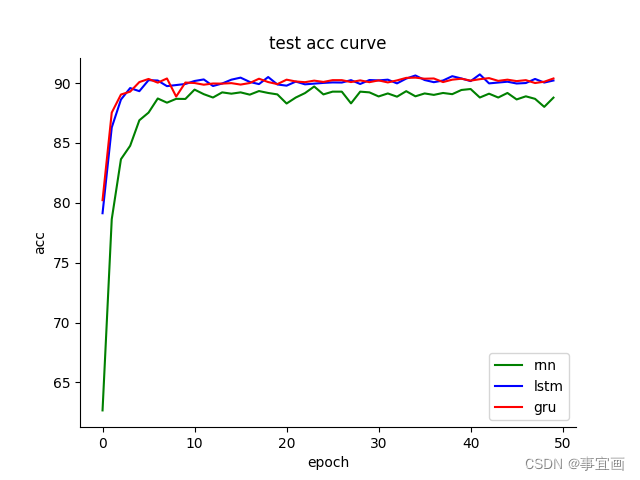

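Comparison of the three models

LSTM (90.67%) and GRU (90.46%) both outperform the plain RNN (89.75%), although all three reach roughly 90% test accuracy, which is a solid result. The test_acc curves also show that GRU is more stable than LSTM from epoch to epoch.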

Reposted on the wpsshop blog; copyright belongs to the original author. Source: https://www.wpsshop.cn/w/知新_RL/article/detail/546586
