Android MediaMuxer + MediaCodec: Encoding YUV Data to MP4

Author: 知新_RL | 2024-06-07 09:19:57


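I. Introduction

MediaCodec encodes the raw YUV data as H.264 (AVC).

MediaMuxer then muxes the video track and the audio track into an MP4 container. Video is typically encoded as H.264 (AVC) and audio as AAC.

II. Workflow

(This is only a brief walkthrough of the flow. Describing every API parameter would take too much space, so look up anything that is unclear.)

- Create and configure the encoder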

MediaFormat mediaFormat = MediaFormat.createVideoFormat(MediaFormat.MIMETYPE_VIDEO_AVC, videoWidth, videoHeight);

// 设置编码的颜色格式,实则为nv12(不同手机可能会不一样)

mediaFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT, MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420Flexible);

// 设置视频的比特率,比特率太小会影响编码的视频质量

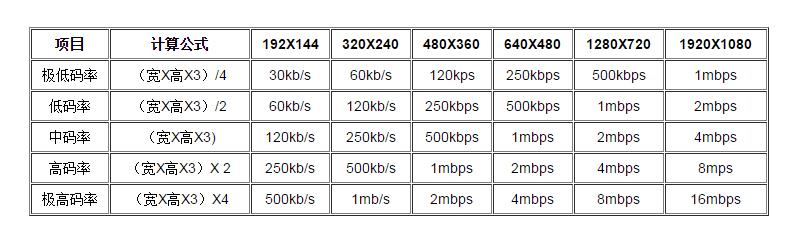

mediaFormat.setInteger(MediaFormat.KEY_BIT_RATE, width * height * 6);

// 设置视频的帧率

mediaFormat.setInteger(MediaFormat.KEY_FRAME_RATE, 30);

// 设置I帧(关键帧)的间隔时间,单位秒

mediaFormat.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, 1);

// 创建编码器、配置和启动

MediaCodec encoder = MediaCodec.createEncoderByType(MediaFormat.MIMETYPE_VIDEO_AVC);

encoder.configure(mediaFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);

encoder.start();

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

关于比特率可以参考:
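To make the `width * height * 6` rule used in the configuration concrete, the following sketch computes the resulting bitrate for a few common resolutions. `BitrateEstimate`, `estimate`, and `toMbps` are hypothetical names chosen for illustration, and the factor 6 is simply the value this article uses, not an official recommendation.

```java
final class BitrateEstimate {
    // Multiplier taken from the article's configuration code (an assumption, not a standard).
    static final int FACTOR = 6;

    /** Returns the bitrate in bits per second for the given frame size. */
    static int estimate(int width, int height) {
        return width * height * FACTOR;
    }

    /** Converts bits per second to megabits per second for display. */
    static double toMbps(int bitsPerSecond) {
        return bitsPerSecond / 1_000_000.0;
    }

    public static void main(String[] args) {
        int[][] sizes = {{640, 480}, {1280, 720}, {1920, 1080}};
        for (int[] s : sizes) {
            int bps = estimate(s[0], s[1]);
            System.out.printf("%dx%d -> %d bps (%.2f Mbps)%n", s[0], s[1], bps, toMbps(bps));
        }
    }
}
```

For 1920x1080 the rule gives 12,441,600 bit/s, roughly 12.4 Mbps; for 1280x720 it gives 5,529,600 bit/s.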

- Encode one frame

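```java
private void encode(byte[] yuv, long presentationTimeUs) {
    // Step 1: feed one frame of input to the encoder.
    // 1. Get a free input buffer, waiting at most DEFAULT_TIMEOUT_US microseconds.
    int inputBufferIndex = mEncoder.dequeueInputBuffer(DEFAULT_TIMEOUT_US);
    if (inputBufferIndex < 0) {
        return; // no input buffer became available in time
    }
    ByteBuffer inputBuffer = mEncoder.getInputBuffer(inputBufferIndex);
    // 2. Copy the input data into the buffer.
    inputBuffer.put(yuv);
    // 3. Queue the buffer on the encoder's input queue; the codec then processes it.
    mEncoder.queueInputBuffer(inputBufferIndex, 0, yuv.length, presentationTimeUs, 0);

    // Step 2: fetch one frame of encoded output from the encoder.
    // 1. Get a filled output buffer, waiting at most DEFAULT_TIMEOUT_US microseconds.
    MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
    int outputBufferIndex = mEncoder.dequeueOutputBuffer(bufferInfo, DEFAULT_TIMEOUT_US);
    if (outputBufferIndex < 0) {
        return; // negative values are status codes, not buffer indices
    }
    ByteBuffer outputBuffer = mEncoder.getOutputBuffer(outputBufferIndex);
    // 2. TODO: have MediaMuxer write the encoded data to the MP4 file.
    // 3. Release the output buffer once it has been consumed.
    mEncoder.releaseOutputBuffer(outputBufferIndex, false);
}
```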
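The `yuv` array passed to `encode` has to match the layout the encoder expects. As a sketch, assuming the encoder really takes NV12 (which, as noted earlier, can vary by device), that the caller starts from planar I420 data, and that width and height are even, the helper below computes the frame size and interleaves the U and V planes. `Nv12Util`, `frameSize`, and `i420ToNv12` are hypothetical names, not part of the Android API.

```java
final class Nv12Util {
    /** Size in bytes of one 4:2:0 frame: a full-resolution Y plane plus quarter-resolution U and V. */
    static int frameSize(int width, int height) {
        return width * height * 3 / 2;
    }

    /** Converts planar I420 (Y, then U, then V) to semi-planar NV12 (Y, then interleaved UV). */
    static byte[] i420ToNv12(byte[] i420, int width, int height) {
        int ySize = width * height;
        int chromaSize = ySize / 4;
        if (i420.length < frameSize(width, height)) {
            throw new IllegalArgumentException("input is smaller than one frame");
        }
        byte[] nv12 = new byte[frameSize(width, height)];
        // The Y plane is identical in both layouts.
        System.arraycopy(i420, 0, nv12, 0, ySize);
        // Interleave the chroma samples as U0 V0 U1 V1 ...
        for (int i = 0; i < chromaSize; i++) {
            nv12[ySize + 2 * i] = i420[ySize + i];
            nv12[ySize + 2 * i + 1] = i420[ySize + chromaSize + i];
        }
        return nv12;
    }
}
```

A 1920x1080 frame in this layout occupies 3,110,400 bytes, so an input array of a different length is a quick sign that the data is not a single 4:2:0 frame of that size.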


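- Write the encoded data with MediaMuxer

Before any samples are written, the muxer must be given the video's header information (the csd parameters); otherwise it reports an error. csd stands for Codec-Specific Data. For H.264, "csd-0" and "csd-1" hold the SPS and PPS respectively; for AAC, "csd-0" holds the decoder-specific information from the ESDS (the AudioSpecificConfig).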

```java
// Write the header information and start MediaMuxer.
private int writeHeadInfo(ByteBuffer outputBuffer, MediaCodec.BufferInfo bufferInfo) {
    // Copy the codec-config payload (SPS followed by PPS) out of the output buffer.
    byte[] csd = new byte[bufferInfo.size];
    outputBuffer.limit(bufferInfo.offset + bufferInfo.size);
    outputBuffer.position(bufferInfo.offset);
    outputBuffer.get(csd);
    ByteBuffer sps = null;
    ByteBuffer pps = null;
    // Scan backwards for the 4-byte start code (00 00 00 01) that separates SPS from PPS.
    // The start code at index 0 is never matched because the loop stops at i > 3.
    for (int i = bufferInfo.size - 1; i > 3; i--) {
        if (csd[i] == 1 && csd[i - 1] == 0 && csd[i - 2] == 0 && csd[i - 3] == 0) {
            sps = ByteBuffer.allocate(i - 3);
            pps = ByteBuffer.allocate(bufferInfo.size - (i - 3));
            sps.put(csd, 0, i - 3).position(0);
            pps.put(csd, i - 3, bufferInfo.size - (i - 3)).position(0);
        }
    }
    MediaFormat outputFormat = mEncoder.getOutputFormat();
    if (sps != null && pps != null) {
        outputFormat.setByteBuffer("csd-0", sps);
        outputFormat.setByteBuffer("csd-1", pps);
    }
    int videoTrackIndex = mMediaMuxer.addTrack(outputFormat);
    Log.d(TAG, "videoTrackIndex: " + videoTrackIndex);
    mMediaMuxer.start();
    return videoTrackIndex;
}

// Write one frame of encoded data.
mMediaMuxer.writeSampleData(mVideoTrackIndex, outputBuffer, bufferInfo);
```
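The start-code scan in `writeHeadInfo` can be tried out off-device. The sketch below is a standalone variant under a few assumptions: the config data is in Annex B form with exactly two NAL units (SPS, then PPS), and a start code may be either 3 or 4 bytes long. `CsdSplitter`, `findStartCode`, `startCodeLength`, and `split` are hypothetical names, and the bytes used in the usage note are made up rather than a real SPS/PPS.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

final class CsdSplitter {
    /** Returns the index of the first start code (00 00 01 or 00 00 00 01) at or after from, or -1. */
    static int findStartCode(byte[] data, int from) {
        for (int i = from; i + 2 < data.length; i++) {
            if (data[i] != 0 || data[i + 1] != 0) {
                continue;
            }
            if (data[i + 2] == 1) {
                return i; // 3-byte start code
            }
            if (i + 3 < data.length && data[i + 2] == 0 && data[i + 3] == 1) {
                return i; // 4-byte start code
            }
        }
        return -1;
    }

    /** Length of the start code that begins at index at (3 or 4 bytes). */
    static int startCodeLength(byte[] data, int at) {
        return data[at + 2] == 1 ? 3 : 4;
    }

    /** Splits csd into {sps, pps}, each keeping its start code; returns null if the layout is unexpected. */
    static ByteBuffer[] split(byte[] csd) {
        if (findStartCode(csd, 0) != 0) {
            return null; // the data must begin with a start code
        }
        int second = findStartCode(csd, startCodeLength(csd, 0));
        if (second < 0) {
            return null; // only one NAL unit present
        }
        ByteBuffer sps = ByteBuffer.wrap(Arrays.copyOfRange(csd, 0, second));
        ByteBuffer pps = ByteBuffer.wrap(Arrays.copyOfRange(csd, second, csd.length));
        return new ByteBuffer[] {sps, pps};
    }
}
```

For the made-up input `00 00 00 01 67 42 1F 00 00 00 01 68 CE`, `split` yields a 7-byte first buffer and a 6-byte second buffer, the same split point that the backward scan in `writeHeadInfo` finds.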


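- Release the objects when finished

```java
mEncoder.stop();
mEncoder.release();
mMediaMuxer.stop(); // finalizes the file; only valid after start() has been called
mMediaMuxer.release();
```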

III. Complete Code

The class below adds some basic return-value checks and a flag that lets encoding be stopped midway.

```java
import android.media.MediaCodec;
import android.media.MediaCodecInfo;
import android.media.MediaFormat;
import android.media.MediaMuxer;
import android.util.Log;

import java.io.IOException;
import java.nio.ByteBuffer;

public class VideoEncoder {
    private static final String TAG = "VideoEncoder";
    private static final String MIME_TYPE = MediaFormat.MIMETYPE_VIDEO_AVC;
    private static final long DEFAULT_TIMEOUT_US = 10000;

    private MediaCodec mEncoder;
    private MediaMuxer mMediaMuxer;
    private int mVideoTrackIndex;
    // volatile because release() may run on a different thread than encode()
    private volatile boolean mStop = false;

    public void init(String outPath, int width, int height) {
        try {
            mStop = false;
            mVideoTrackIndex = -1;
            mMediaMuxer = new MediaMuxer(outPath, MediaMuxer.OutputFormat.MUXER_OUTPUT_MPEG_4);
            mEncoder = MediaCodec.createEncoderByType(MIME_TYPE);
            MediaFormat mediaFormat = MediaFormat.createVideoFormat(MIME_TYPE, width, height);
            // The encoder input is NV12 (may differ between devices).
            mediaFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT, MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420Flexible);
            mediaFormat.setInteger(MediaFormat.KEY_BIT_RATE, width * height * 6);
            mediaFormat.setInteger(MediaFormat.KEY_FRAME_RATE, 30);
            mediaFormat.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, 1);
            mEncoder.configure(mediaFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);
            mEncoder.start();
        } catch (IOException e) {
            Log.e(TAG, "failed to create the muxer or encoder", e);
        }
    }

    public void release() {
        mStop = true;
        if (mEncoder != null) {
            mEncoder.stop();
            mEncoder.release();
            mEncoder = null;
        }
        if (mMediaMuxer != null) {
            // stop() is only legal once start() has run, i.e. after the track was added.
            if (mVideoTrackIndex != -1) {
                mMediaMuxer.stop();
            }
            mMediaMuxer.release();
            mMediaMuxer = null;
        }
    }

    public void encode(byte[] yuv, long presentationTimeUs) {
        if (mEncoder == null || mMediaMuxer == null) {
            Log.e(TAG, "mEncoder or mMediaMuxer is null");
            return;
        }
        if (yuv == null) {
            Log.e(TAG, "input yuv data is null");
            return;
        }
        int inputBufferIndex = mEncoder.dequeueInputBuffer(DEFAULT_TIMEOUT_US);
        Log.d(TAG, "inputBufferIndex: " + inputBufferIndex);
        if (inputBufferIndex < 0) {
            Log.e(TAG, "no valid buffer available");
            return;
        }
        ByteBuffer inputBuffer = mEncoder.getInputBuffer(inputBufferIndex);
        inputBuffer.put(yuv);
        mEncoder.queueInputBuffer(inputBufferIndex, 0, yuv.length, presentationTimeUs, 0);

        // Poll until one output buffer is available or release() sets mStop.
        while (!mStop) {
            MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
            int outputBufferIndex = mEncoder.dequeueOutputBuffer(bufferInfo, DEFAULT_TIMEOUT_US);
            Log.d(TAG, "outputBufferIndex: " + outputBufferIndex);
            if (outputBufferIndex >= 0) {
                ByteBuffer outputBuffer = mEncoder.getOutputBuffer(outputBufferIndex);
                // The first output buffer carries the header info; use it to start the muxer.
                if (mVideoTrackIndex == -1) {
                    Log.d(TAG, "this is first frame, call writeHeadInfo first");
                    mVideoTrackIndex = writeHeadInfo(outputBuffer, bufferInfo);
                }
                // Codec-config buffers are not samples, so they are not written to the file.
                if ((bufferInfo.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) == 0) {
                    Log.d(TAG, "write outputBuffer");
                    mMediaMuxer.writeSampleData(mVideoTrackIndex, outputBuffer, bufferInfo);
                }
                mEncoder.releaseOutputBuffer(outputBufferIndex, false);
                break; // one output buffer handled, leave the loop
            }
        }
    }

    private int writeHeadInfo(ByteBuffer outputBuffer, MediaCodec.BufferInfo bufferInfo) {
        byte[] csd = new byte[bufferInfo.size];
        outputBuffer.limit(bufferInfo.offset + bufferInfo.size);
        outputBuffer.position(bufferInfo.offset);
        outputBuffer.get(csd);
        ByteBuffer sps = null;
        ByteBuffer pps = null;
        // Scan backwards for the 4-byte start code (00 00 00 01) that separates SPS from PPS.
        for (int i = bufferInfo.size - 1; i > 3; i--) {
            if (csd[i] == 1 && csd[i - 1] == 0 && csd[i - 2] == 0 && csd[i - 3] == 0) {
                sps = ByteBuffer.allocate(i - 3);
                pps = ByteBuffer.allocate(bufferInfo.size - (i - 3));
                sps.put(csd, 0, i - 3).position(0);
                pps.put(csd, i - 3, bufferInfo.size - (i - 3)).position(0);
            }
        }
        MediaFormat outputFormat = mEncoder.getOutputFormat();
        if (sps != null && pps != null) {
            outputFormat.setByteBuffer("csd-0", sps);
            outputFormat.setByteBuffer("csd-1", pps);
        }
        int videoTrackIndex = mMediaMuxer.addTrack(outputFormat);
        Log.d(TAG, "videoTrackIndex: " + videoTrackIndex);
        mMediaMuxer.start();
        return videoTrackIndex;
    }
}
```
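The output loop in `encode` only distinguishes a valid buffer index from everything else. As a sketch of how the negative status codes and the buffer flags could be told apart, the plain-Java snippet below mirrors the constant values documented for `MediaCodec` so that it runs without the Android SDK. `OutputStatus`, `describeIndex`, and `shouldWriteSample` are hypothetical helpers; on a device the real `MediaCodec` constants should be used instead of these copies.

```java
final class OutputStatus {
    // Copies of the values documented for android.media.MediaCodec (mirrored here as an assumption
    // so the sketch compiles off-device).
    static final int INFO_TRY_AGAIN_LATER = -1;
    static final int INFO_OUTPUT_FORMAT_CHANGED = -2;
    static final int INFO_OUTPUT_BUFFERS_CHANGED = -3;
    static final int BUFFER_FLAG_KEY_FRAME = 1;
    static final int BUFFER_FLAG_CODEC_CONFIG = 2;
    static final int BUFFER_FLAG_END_OF_STREAM = 4;

    /** Classifies the return value of dequeueOutputBuffer. */
    static String describeIndex(int index) {
        if (index >= 0) {
            return "buffer";
        }
        switch (index) {
            case INFO_TRY_AGAIN_LATER:
                return "try-again";
            case INFO_OUTPUT_FORMAT_CHANGED:
                return "format-changed";
            case INFO_OUTPUT_BUFFERS_CHANGED:
                return "buffers-changed";
            default:
                return "unknown";
        }
    }

    /** True if a buffer with these flags and size is a real sample rather than codec config. */
    static boolean shouldWriteSample(int flags, int size) {
        return (flags & BUFFER_FLAG_CODEC_CONFIG) == 0 && size > 0;
    }

    /** True if the buffer is flagged as a key frame. */
    static boolean isKeyFrame(int flags) {
        return (flags & BUFFER_FLAG_KEY_FRAME) != 0;
    }
}
```

One possible use: treat "try-again" as a reason to feed more input rather than spin, and treat "format-changed" as the moment `getOutputFormat()` describes the encoder's actual output.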


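IV. Usage Example

Combined with the decoder from the previous post, this class can be used for decode-then-re-encode scenarios.

Link: Decoding video with Android MediaExtractor + MediaCodec and returning YUV data through a callback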

```java
VideoDecoder mVideoDecoder = new VideoDecoder();
mVideoDecoder.setOutputFormat(VideoDecoder.COLOR_FORMAT_NV12); // have the decoder output NV12 data
// Declared as a field, since it is assigned inside the callback below.
VideoEncoder mVideoEncoder = null;

// On some worker thread:
mVideoDecoder.decode("/sdcard/test.mp4", new VideoDecoder.DecodeCallback() {
    @Override
    public void onDecode(byte[] yuv, int width, int height, int frameCount, long presentationTimeUs) {
        Log.d(TAG, "frameCount: " + frameCount + ", presentationTimeUs: " + presentationTimeUs);
        if (mVideoEncoder == null) {
            mVideoEncoder = new VideoEncoder();
            mVideoEncoder.init("/sdcard/test_out.mp4", width, height);
        }
        // Process the YUV data here, for example save it or re-encode it.
        mVideoEncoder.encode(yuv, presentationTimeUs);
    }

    @Override
    public void onFinish() {
        Log.d(TAG, "onFinish");
        if (mVideoEncoder != null) mVideoEncoder.release();
    }

    @Override
    public void onStop() {
        Log.d(TAG, "onStop");
        if (mVideoEncoder != null) mVideoEncoder.release();
    }
});
```
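In this example `presentationTimeUs` is supplied by the decoder. If the frames come from a source without timestamps instead (for example a raw YUV file), a timestamp has to be generated for each frame before calling `encode`. A minimal sketch, assuming a constant frame rate; `FrameClock` and `ptsUs` are hypothetical names:

```java
final class FrameClock {
    /** Presentation time in microseconds of the frame at frameIndex (0-based) for a constant frame rate. */
    static long ptsUs(long frameIndex, int frameRate) {
        if (frameRate <= 0) {
            throw new IllegalArgumentException("frameRate must be positive");
        }
        // Multiply before dividing so rounding error does not accumulate across frames.
        return frameIndex * 1_000_000L / frameRate;
    }
}
```

At 30 frames per second, frame 0 maps to 0 µs, frame 1 to 33,333 µs, and frame 30 to exactly 1,000,000 µs. The frame rate passed here would normally be the same value set for `KEY_FRAME_RATE`.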

