
Bank Customer Product Subscription Prediction

Author: 知新_RL | 2024-07-14 08:20:39


# View the mounted dataset directory. Changes under this directory are reverted automatically when the environment is reset.
!ls /home/aistudio/data
!pip install --upgrade pip
!pip install shap
!pip install numba --user --ignore-installed llvmlite
!pip install numba




Preface

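The provided data is of good quality, with no missing or duplicate values, but the positive and negative samples are imbalanced. Three models were trained: XGBoost, LightGBM and CatBoost. They ranked LightGBM > XGBoost > CatBoost. LightGBM with cross-validation reaches a score of about 0.97, XGBoost about 0.965 and CatBoost about 0.96.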

I. Project Background

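Purchase prediction is an important application in digital marketing. Digital marketing is now the new normal, and where it goes next is essentially determined by the main consumer groups and by their consumption habits and preferences. Consumer-goods companies therefore need to take a fresh look at how mainstream consumers behave in the post-pandemic era and how that behavior is changing. This project encourages learners to use marketing-campaign information to give companies sales strategies and to give consumers more suitable product recommendations.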

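This project is set in the context of subscriptions to a specific bank product: the task is to predict whether a client will buy the bank's product. While communicating with clients, the bank recorded three things: the number of contacts, the duration of the last contact, and the time elapsed since the last contact. The bank's system also stores each client's basic information, including age, occupation, marital status, whether the client has previously defaulted, and whether the client has a housing loan. In addition, the dataset includes indicators of current market conditions: employment, consumer information, the interbank lending rate and so on.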

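- age: age

- job: occupation, e.g. admin, unknown, unemployed, management

- marital: marital status: married, divorced, single

- default: whether the client has a credit (card) default: yes or no

- housing: whether the client has a housing loan: yes or no

- contact: contact channel: unknown, telephone, cellular

- month: month of the last contact: jan, feb, mar, …

- day_of_week: weekday of the last contact: mon, tue, wed, thu, fri, …

- duration: duration of the last contact (seconds)

- campaign: number of times the client was contacted during this campaign

- pdays: number of days since the client was last contacted

- previous: number of contacts with the client before this campaign

- poutcome: outcome of the previous marketing campaign: unknown, other, failure, success

- emp_var_rate: employment variation rate (quarterly indicator); column `emp.var.rate` in the data

- cons_price_index: consumer price index (monthly indicator); column `cons.price.idx` in the data

- cons_conf_index: consumer confidence index (monthly indicator); column `cons.conf.idx` in the data

- lending_rate3m: 3-month interbank lending rate (daily indicator); column `euribor3m` in the data

- nr_employed: number of employees (quarterly indicator); column `nr.employed` in the data

- subscribe: whether the client made the purchase: yes or no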

Evaluation metric: Accuracy (the percentage of all samples that are classified correctly)
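For reference, here is a minimal sketch of accuracy computed by hand. It should agree with `sklearn.metrics.accuracy_score`. The labels are made-up toy values, not competition data.

```python
def accuracy(y_true, y_pred):
    """Fraction of positions where the prediction equals the true label."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if len(y_true) == 0:
        raise ValueError("cannot compute accuracy of an empty input")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Toy labels (made up for illustration)
y_true = ["no", "no", "yes", "no", "yes"]
y_pred = ["no", "yes", "yes", "no", "no"]
print(accuracy(y_true, y_pred))  # 0.6 (3 of 5 correct)
```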


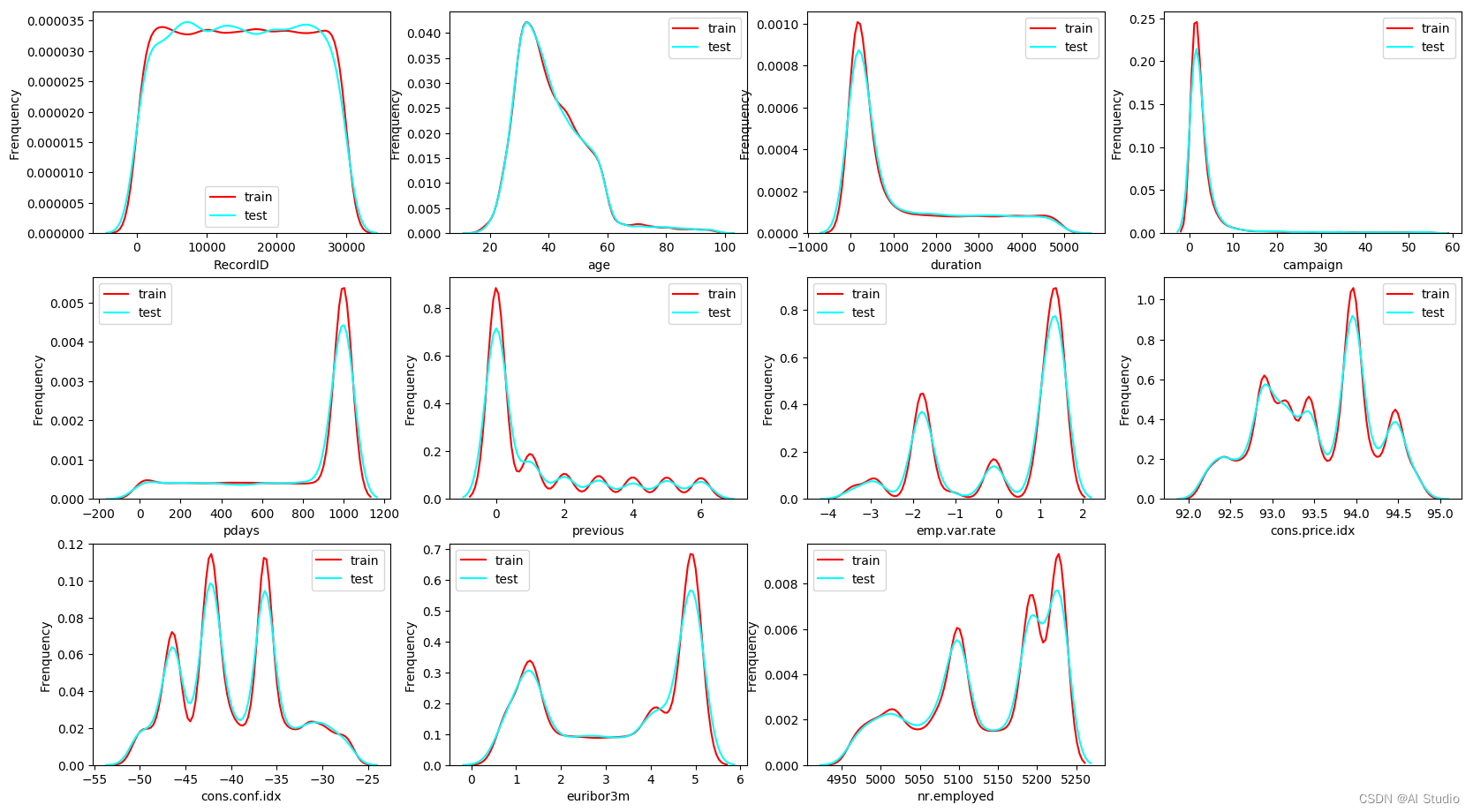

II. Data Exploration

1. Loading the data

import pandas as pd

import numpy as np

df=pd.read_csv("train.csv")

test=pd.read_csv("test.csv")

df['subscribe'].value_counts()


no 19548

yes 2952

Name: subscribe, dtype: int64


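The target classes are imbalanced: there are about 6.6 "no" samples for every "yes" (roughly 13% positives).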
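The metric is accuracy, so the class counts above imply a baseline worth keeping in mind. A model that always predicts "no" would already score 19548 / 22500 = 0.8688. The sketch below only redoes that arithmetic from the printed counts. Feeding the negative-to-positive ratio to LightGBM's `scale_pos_weight` is one common heuristic, not something the original notebook does. Reweighting shifts predictions toward "yes", which does not necessarily improve accuracy.

```python
# Class counts printed by df['subscribe'].value_counts()
n_no, n_yes = 19548, 2952
n_total = n_no + n_yes  # 22500, the row count shown by df.describe()

baseline_accuracy = n_no / n_total  # always predict "no"
positive_rate = n_yes / n_total     # share of "yes"
neg_pos_ratio = n_no / n_yes        # negatives per positive

print(f"baseline accuracy: {baseline_accuracy:.4f}")  # 0.8688
print(f"positive rate:     {positive_rate:.4f}")      # 0.1312
print(f"neg/pos ratio:     {neg_pos_ratio:.2f}")      # 6.62

# Hypothetical: parameters to pass to LGBMClassifier if class reweighting is wanted
lgbm_params = {"objective": "binary", "scale_pos_weight": neg_pos_ratio}
```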

Viewing summary statistics

df.describe().T


| | count | mean | std | min | 25% | 50% | 75% | max |
|---|---|---|---|---|---|---|---|---|
| RecordID | 22500.0 | 15011.876889 | 8679.392766 | 1.000 | 7483.750000 | 15032.500000 | 22514.500000 | 30000.000 |
| age | 22500.0 | 40.904489 | 12.026945 | 17.000 | 32.000000 | 38.000000 | 48.000000 | 98.000 |
| duration | 22500.0 | 1146.554311 | 1430.790448 | 0.000 | 144.000000 | 354.000000 | 1877.000000 | 4918.000 |
| campaign | 22500.0 | 3.857244 | 7.210891 | 1.000 | 1.000000 | 2.000000 | 3.000000 | 56.000 |
| pdays | 22500.0 | 774.562533 | 326.020060 | 0.000 | 558.750000 | 999.000000 | 999.000000 | 999.000 |
| previous | 22500.0 | 1.316444 | 1.918733 | 0.000 | 0.000000 | 0.000000 | 2.000000 | 6.000 |
| emp.var.rate | 22500.0 | 0.078529 | 1.573831 | -3.400 | -1.800000 | 1.100000 | 1.400000 | 1.400 |
| cons.price.idx | 22500.0 | 93.538746 | 0.647698 | 92.201 | 92.969840 | 93.485726 | 93.994000 | 94.767 |
| cons.conf.idx | 22500.0 | -39.872633 | 5.692010 | -50.800 | -43.643788 | -41.522404 | -36.100000 | -26.900 |
| euribor3m | 22500.0 | 3.307811 | 1.608627 | 0.634 | 1.410000 | 3.964364 | 4.864000 | 5.045 |
| nr.employed | 22500.0 | 5138.567351 | 81.748896 | 4963.600 | 5081.293851 | 5165.319989 | 5218.069326 | 5228.100 |
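In the table, the median, 75th percentile and maximum of `pdays` are all 999, so at least half of the clients share that single value. In the public UCI Bank Marketing dataset, 999 is a placeholder for "not previously contacted". The competition does not document this, and this data also contains many intermediate values, so treating 999 as a placeholder here is only an assumption. Under that assumption, a minimal sketch turns the placeholder into an explicit flag and leaves a missing value behind, which LightGBM can handle natively. The helper `add_pdays_flag` and the column `never_contacted` are hypothetical, and the original notebook does no feature engineering.

```python
import pandas as pd


def add_pdays_flag(frame, sentinel=999):
    """Return a copy with a 0/1 `never_contacted` flag; the sentinel in `pdays` becomes NaN.

    ASSUMPTION: `sentinel` encodes "not previously contacted", as in the public
    UCI Bank Marketing data. The competition data does not document this.
    """
    out = frame.copy()
    is_sentinel = out["pdays"] == sentinel
    out["never_contacted"] = is_sentinel.astype(int)
    # Keep genuine day counts; mark the placeholder as missing instead of "999 days"
    out["pdays"] = out["pdays"].where(~is_sentinel)
    return out


# Made-up rows for illustration
toy = pd.DataFrame({"pdays": [999, 3, 999, 0]})
result = add_pdays_flag(toy)
print(result)
```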

# View the personal work directory. Changes under this directory are kept even after the environment is reset.
# Please clean up unnecessary files promptly to keep the environment loading quickly.
!ls /home/aistudio/work


2. Binning demonstration for duration

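import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns
# Bin edges chosen by the author (close to the 25%/50%/75% quantiles of duration).
# The last edge is set just above the maximum so the longest calls are not dropped as NaN
# (an upper edge of 4198 lies below the maximum duration of 4918).
bins = [0, 143, 353, 1873, df['duration'].max() + 1]
df1 = df[df['subscribe'] == 'yes']  # subscribers only
binning = pd.cut(df1['duration'], bins, right=False)
time = binning.value_counts().sort_index()  # number of subscribers per bin
# Visualization
fig = plt.figure(figsize=(6, 2), dpi=120)
x = np.arange(len(time))
y = time.values
sns.barplot(x=time.index.astype(str), y=y, color='royalblue')
for x_loc, count in zip(x, y):
    # Label each bar with its share of all subscribers
    plt.text(x_loc, count + 2, '{:.1f}%'.format(count / y.sum() * 100), ha='center', va='bottom', fontsize=8)
plt.xticks(fontsize=8)
plt.yticks([])
plt.ylabel('')
plt.title('duration_yes', size=8)
sns.despine(left=True)
plt.show()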


# For a persistent installation, install into a persistent path, as in the example below
# (mkdir -p does not fail if the directory already exists):
!mkdir -p /home/aistudio/external-libraries
!pip install beautifulsoup4 -t /home/aistudio/external-libraries
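A fresh kernel does not add a custom `pip install -t` target directory to its import path by default. A minimal sketch is to add the directory to `sys.path` at the start of each session. It assumes the same directory as in the install cell; the path is specific to the AI Studio environment.

```python
import sys

# Same directory as the `pip install -t` target (platform-specific path)
EXTERNAL_LIBS = "/home/aistudio/external-libraries"

# Put it at the front of the import path once per kernel session
if EXTERNAL_LIBS not in sys.path:
    sys.path.insert(0, EXTERNAL_LIBS)

# Afterwards `import bs4` can resolve to the copy installed in EXTERNAL_LIBS
```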




This suggests that call duration has some power to separate the target classes.

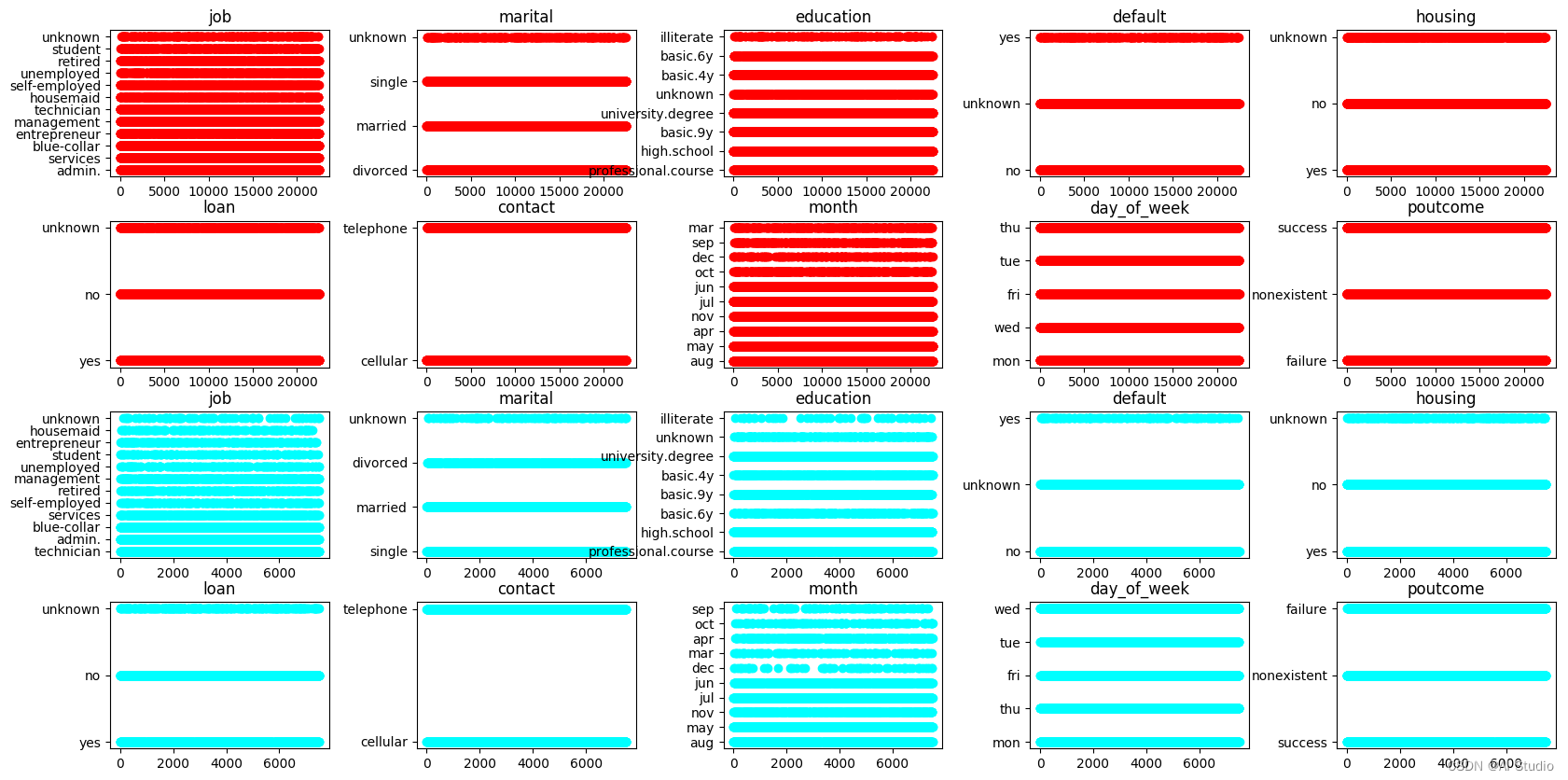
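The plot counts only subscribers, so it shows where the "yes" cases fall but not how the subscription rate changes with duration. A minimal sketch compares the share of "yes" in each duration bin across both classes. It uses `pd.qcut` to place quartile edges automatically. The hand-picked edges 143, 353 and 1873 are close to the 25%/50%/75% values of 144, 354 and 1877 in the summary table. The helper name is hypothetical and the toy data is made up. In the notebook it would be called on `df` before `subscribe` is label-encoded.

```python
import pandas as pd


def subscribe_rate_by_bin(frame, col="duration", q=4):
    """Split `col` into q quantile bins and return the share of 'yes' in each bin."""
    bins = pd.qcut(frame[col], q=q, duplicates="drop")
    is_yes = frame["subscribe"].eq("yes")
    return is_yes.groupby(bins, observed=False).mean()


# Made-up data; in the notebook, call subscribe_rate_by_bin(df) before label encoding
toy = pd.DataFrame({
    "duration": [10, 50, 120, 200, 300, 400, 900, 1500, 2500, 4000, 4500, 4900],
    "subscribe": ["no", "no", "no", "no", "no", "yes",
                  "no", "yes", "yes", "no", "yes", "yes"],
})
rates = subscribe_rate_by_bin(toy)
print(rates)
```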

3. Viewing the data distributions

import matplotlib.pyplot as plt
import seaborn as sns
import warnings
warnings.filterwarnings('ignore')
# Separate numeric and categorical columns
Nu_feature = list(df.select_dtypes(exclude=['object']).columns)
Ca_feature = list(df.select_dtypes(include=['object']).columns)
# Compare the distribution of each numeric column in the training and test sets
plt.figure(figsize=(20, 15))
for i, col in enumerate(Nu_feature, start=1):
    ax = plt.subplot(4, 4, i)
    sns.kdeplot(df[col], color='red', ax=ax)
    sns.kdeplot(test[col], color='cyan', ax=ax)
    ax.set_xlabel(col)
    ax.set_ylabel('Density')
    ax.legend(['train', 'test'])
plt.show()
# Categorical columns: train in the top two rows (red), test in the bottom two rows (cyan);
# the 4x5 layout assumes 10 categorical columns, as in the original code
Ca_feature.remove('subscribe')  # the target exists only in the training set
col1 = Ca_feature
plt.figure(figsize=(20, 10))
for j, col in enumerate(col1, start=1):
    plt.subplot(4, 5, j)
    plt.scatter(x=range(len(df)), y=df[col], color='red')
    plt.title(col)
for k, col in enumerate(col1, start=11):
    plt.subplot(4, 5, k)
    plt.scatter(x=range(len(test)), y=test[col], color='cyan')
    plt.title(col)
plt.subplots_adjust(wspace=0.4, hspace=0.3)
plt.show()


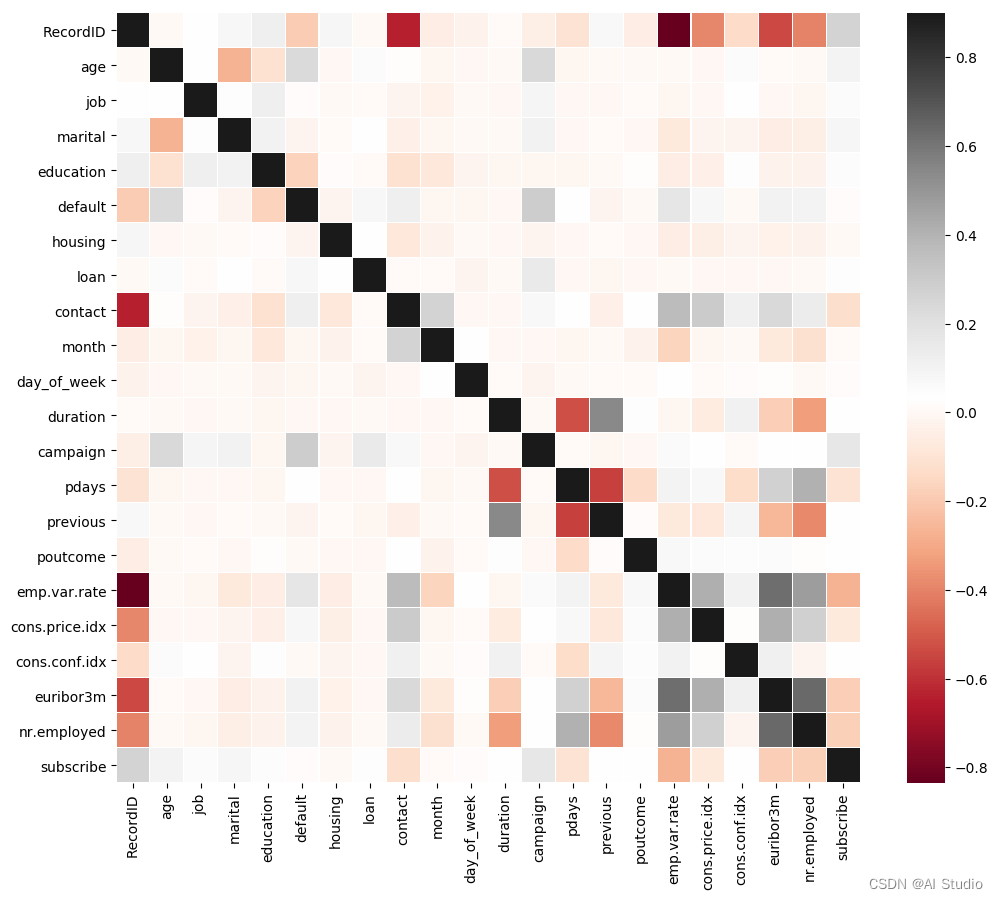
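The KDE plots compare train and test visually. One way to put a number on the difference, which is an addition and not part of the original notebook, is the two-sample Kolmogorov-Smirnov statistic from `scipy.stats.ks_2samp`. It is 0 when the two empirical distributions coincide and grows toward 1 as they diverge. A minimal sketch on synthetic data follows; the helper name is hypothetical.

```python
import numpy as np
import pandas as pd
from scipy.stats import ks_2samp


def drift_report(train, test, columns):
    """Two-sample KS statistic per column: 0 means identical empirical distributions."""
    return {col: ks_2samp(train[col], test[col]).statistic for col in columns}


# Synthetic example: column "x" has the same distribution in both sets, "y" is shifted
rng = np.random.default_rng(0)
train_toy = pd.DataFrame({"x": rng.normal(0, 1, 1000), "y": rng.normal(0, 1, 1000)})
test_toy = pd.DataFrame({"x": rng.normal(0, 1, 1000), "y": rng.normal(1, 1, 1000)})
report = drift_report(train_toy, test_toy, ["x", "y"])
print(report)  # in the notebook: drift_report(df, test, Nu_feature)
```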

4. Correlation heatmap

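import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns
from sklearn.preprocessing import LabelEncoder
# Label-encode the categorical columns. Each encoder is fitted on the union of train and
# test values so a category gets the same integer code in both sets
# (calling fit_transform separately on test can assign different codes).
cols = Ca_feature
for m in cols:
    lb = LabelEncoder()
    lb.fit(pd.concat([df[m], test[m]]))
    df[m] = lb.transform(df[m])
    test[m] = lb.transform(test[m])
# Encode the target: no -> 0, yes -> 1
df['subscribe'] = df['subscribe'].replace(['no', 'yes'], [0, 1])
correlation_matrix = df.corr()
plt.figure(figsize=(12, 10))
sns.heatmap(correlation_matrix, vmax=0.9, linewidths=0.05, cmap='RdGy')
# Features with relatively high correlation also rank fairly high in the model's feature-importance output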
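Here is why the encoder should be shared. `LabelEncoder` assigns codes in sorted order of the categories it was fitted on. Fitting one encoder on train and another on test can therefore give the same category different codes whenever the two sets do not contain exactly the same categories. A small demonstration with made-up values:

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

train_col = pd.Series(["admin", "management", "unemployed"])
test_col = pd.Series(["management", "unemployed"])  # "admin" does not occur in test

# Separate fits: codes depend on which categories each set contains
sep_train = LabelEncoder().fit_transform(train_col)  # admin=0, management=1, unemployed=2
sep_test = LabelEncoder().fit_transform(test_col)    # management=0, unemployed=1
print(sep_train, sep_test)

# One encoder fitted on the union of values gives a single, consistent mapping
enc = LabelEncoder().fit(pd.concat([train_col, test_col]))
shared_train = enc.transform(train_col)
shared_test = enc.transform(test_col)
print(shared_train, shared_test)
```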


III. Modeling

No feature engineering was done this time, so the raw features are used directly.

import numpy as np
import pandas as pd
from lightgbm.sklearn import LGBMClassifier
from sklearn.model_selection import KFold
from sklearn.metrics import roc_auc_score
X = df.drop(columns=['RecordID', 'subscribe'])
Y = df['subscribe']
test = test.drop(columns='RecordID')
# Build the model
gbm = LGBMClassifier(n_estimators=600, learning_rate=0.01, boosting_type='gbdt', objective='binary', max_depth=-1, random_state=2022, metric='auc')
# 5-fold cross-validation; each fold's model also predicts the test set
result1 = []
mean_score1 = 0
n_fold = 5
kf = KFold(n_splits=n_fold, shuffle=True, random_state=2022)
for train_index, test_index in kf.split(X):
    x_train, y_train = X.iloc[train_index], Y.iloc[train_index]
    x_test, y_test = X.iloc[test_index], Y.iloc[test_index]
    gbm.fit(x_train, y_train)
    # No early stopping is used, so prediction uses all boosting rounds
    y_pred1 = gbm.predict_proba(x_test)[:, 1]
    fold_auc = roc_auc_score(y_test, y_pred1)
    print('Validation AUC: {}'.format(fold_auc))
    mean_score1 += fold_auc / n_fold
    result1.append(gbm.predict_proba(test)[:, 1])
# Model evaluation
print('Mean validation AUC: {}'.format(mean_score1))
# Average the test-set probabilities over the folds and threshold at 0.5
cat_pre1 = sum(result1) / n_fold
ret1 = pd.DataFrame(cat_pre1, columns=['subscribe'])
ret1['subscribe'] = np.where(ret1['subscribe'] > 0.5, 'yes', 'no').astype('str')
# Save the predictions ('测试.csv' is Chinese for "test")
ret1.to_csv('测试.csv', index=False)


Validation AUC: 0.8926203815766213

Validation AUC: 0.8896462215236163

Validation AUC: 0.8795877825112728

Validation AUC: 0.9017302955665025

Validation AUC: 0.9012188887720168

Mean validation AUC: 0.892960713990006
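With only about 13% positives, a plain `KFold` can let the positive rate vary from fold to fold. `StratifiedKFold` keeps the class ratio the same in every fold. The sketch below compares both splitters on synthetic labels with 15% positives, an illustrative ratio chosen so that the folds divide evenly. Using it in the notebook would mean replacing `KFold` with `StratifiedKFold` and calling `kf.split(X, Y)`. This is a suggestion, not what the original code does.

```python
import numpy as np
from sklearn.model_selection import KFold, StratifiedKFold

# Synthetic labels with 15% positives (illustrative, close to the ~13% in this data)
y = np.array([1] * 15 + [0] * 85)
X = np.zeros((len(y), 1))  # feature values do not affect how the splitters assign rows


def fold_positive_rates(splitter):
    """Share of positives in each validation fold produced by `splitter`."""
    return [float(y[val_idx].mean()) for _, val_idx in splitter.split(X, y)]


kfold_rates = fold_positive_rates(KFold(n_splits=5, shuffle=True, random_state=2022))
strat_rates = fold_positive_rates(StratifiedKFold(n_splits=5, shuffle=True, random_state=2022))
print("KFold:          ", np.round(kfold_rates, 3))
print("StratifiedKFold:", np.round(strat_rates, 3))
```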


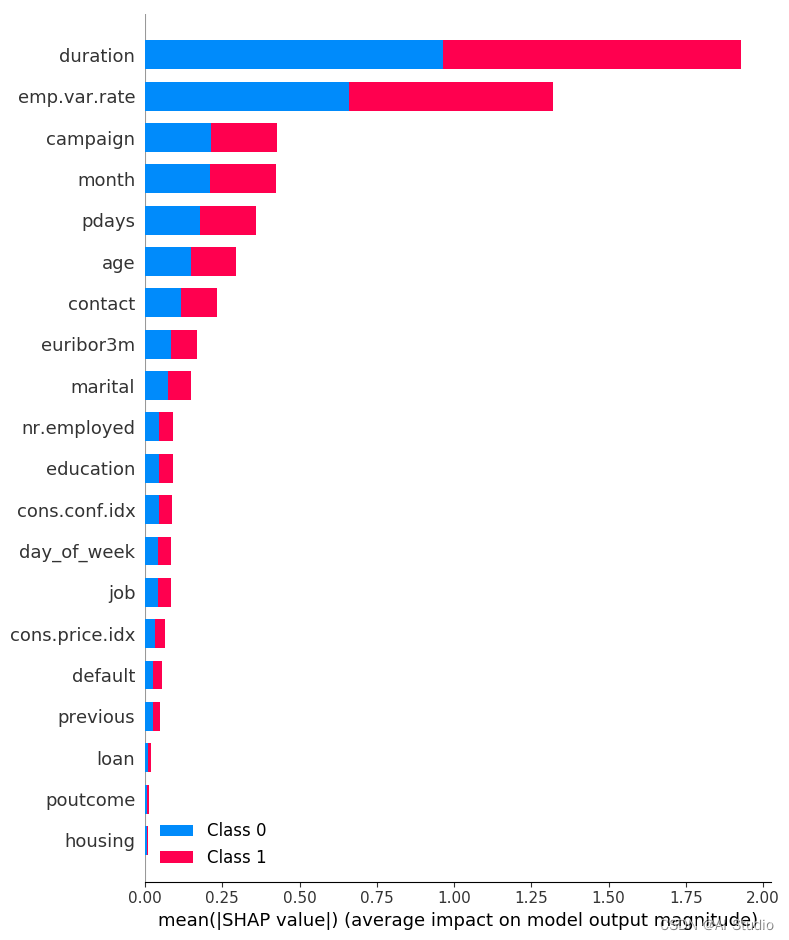
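The submission step averages the fold models' test-set probabilities (`sum(result1) / n_fold`). It then labels a row "yes" when the average exceeds 0.5. A small sketch of that logic on made-up probabilities follows; it shows that a single fold can disagree with the averaged decision. The 0.5 threshold is the notebook's choice. It is not guaranteed to maximize accuracy, and tuning it on validation predictions is a possible variation.

```python
import numpy as np


def average_and_threshold(fold_probs, threshold=0.5):
    """Average per-fold positive-class probabilities, then map them to 'yes' / 'no'.

    Same logic as sum(result1) / n_fold followed by np.where(p > 0.5, 'yes', 'no').
    """
    mean_prob = np.mean(np.vstack(fold_probs), axis=0)
    labels = np.where(mean_prob > threshold, "yes", "no")
    return mean_prob, labels


# Made-up test-set probabilities from three fold models for three rows
fold_probs = [np.array([0.9, 0.4, 0.2]),
              np.array([0.8, 0.7, 0.1]),
              np.array([0.7, 0.6, 0.3])]
mean_prob, labels = average_and_threshold(fold_probs)
print(labels)  # ['yes' 'yes' 'no']: the first fold alone would say "no" for row 2
```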

Feature importance (SHAP values of `gbm`, i.e. the model fitted on the last cross-validation fold)

import shap

explainer=shap.TreeExplainer(gbm)

shap_values=explainer.shap_values(X)

shap.summary_plot(shap_values,X,plot_type='bar',max_display=20)
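The bar-type summary plot ranks features by their mean absolute SHAP value. A minimal numpy sketch of that ranking follows, independent of the `shap` plotting code. It assumes the SHAP values come in one of two forms. The first is a single `(n_samples, n_features)` array. The second is a list with one such array per class, which is what older `shap` versions return for a binary LightGBM model; in that case the positive-class array is used. The helper name, toy values and feature names are made up.

```python
import numpy as np


def mean_abs_shap_ranking(shap_values, feature_names):
    """Rank features by mean |SHAP value|, the quantity a bar summary plot shows.

    ASSUMPTION: `shap_values` is a (n_samples, n_features) array, or a list of such
    arrays (one per class) as older shap versions return for binary LightGBM; in the
    list case the last array (positive class) is used.
    """
    if isinstance(shap_values, list):
        shap_values = shap_values[-1]
    importance = np.abs(np.asarray(shap_values)).mean(axis=0)
    order = np.argsort(importance)[::-1]  # largest mean |SHAP| first
    return [(feature_names[i], float(importance[i])) for i in order]


# Made-up SHAP values for 2 samples x 3 features
toy_shap = np.array([[0.5, -0.1, 0.00],
                     [-0.3, 0.2, 0.05]])
ranking = mean_abs_shap_ranking(toy_shap, ["duration", "age", "month"])
print(ranking)
# In the notebook: mean_abs_shap_ranking(shap_values, list(X.columns))
```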


This article is a repost.

Original project link

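Disclaimer: This article was contributed by a user and does not represent the position of the wpsshop blog. Copyright belongs to the original author, and this site assumes no legal liability for the content. If you find infringing content, please contact us. When reposting, please cite the source: https://www.wpsshop.cn/w/知新_RL/article/detail/823930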
